Real-Time Remote Sensing of the Lobesia botrana Moth Using a Wireless Acoustic Detection Sensor

Abstract

1. Introduction

2. Materials and Methods

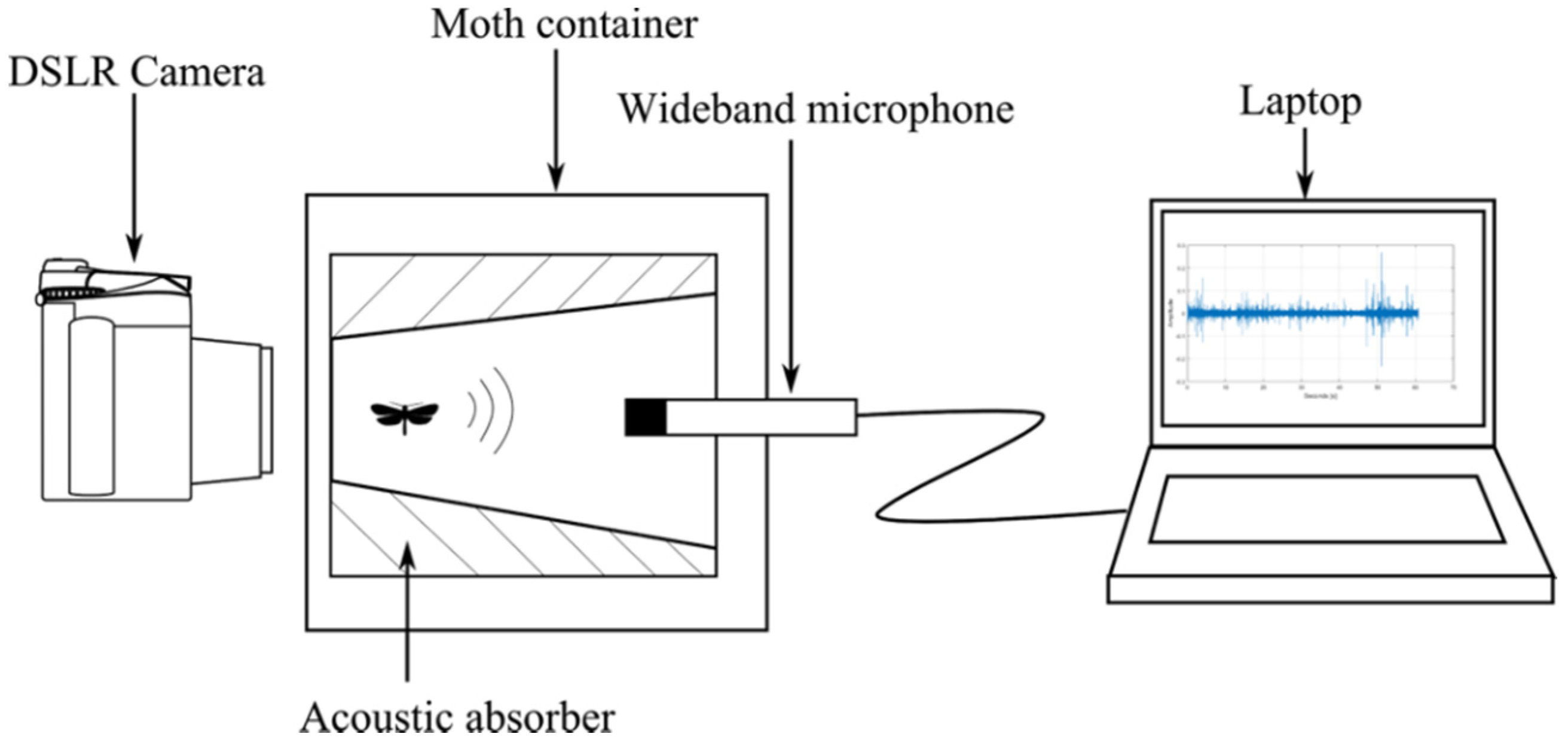

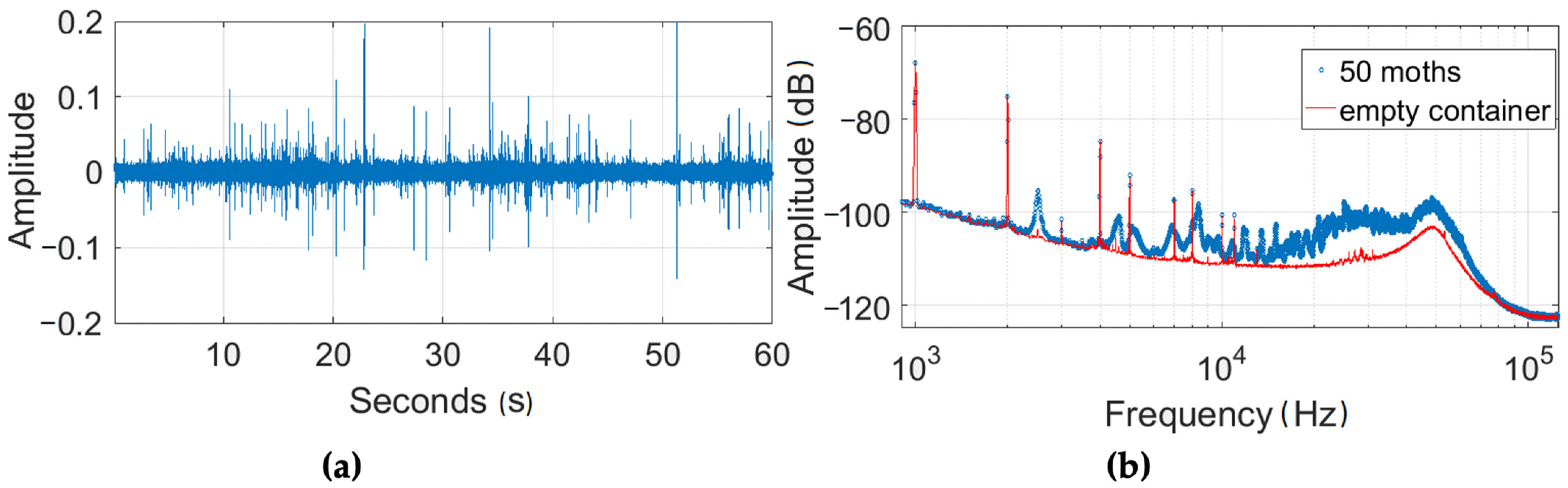

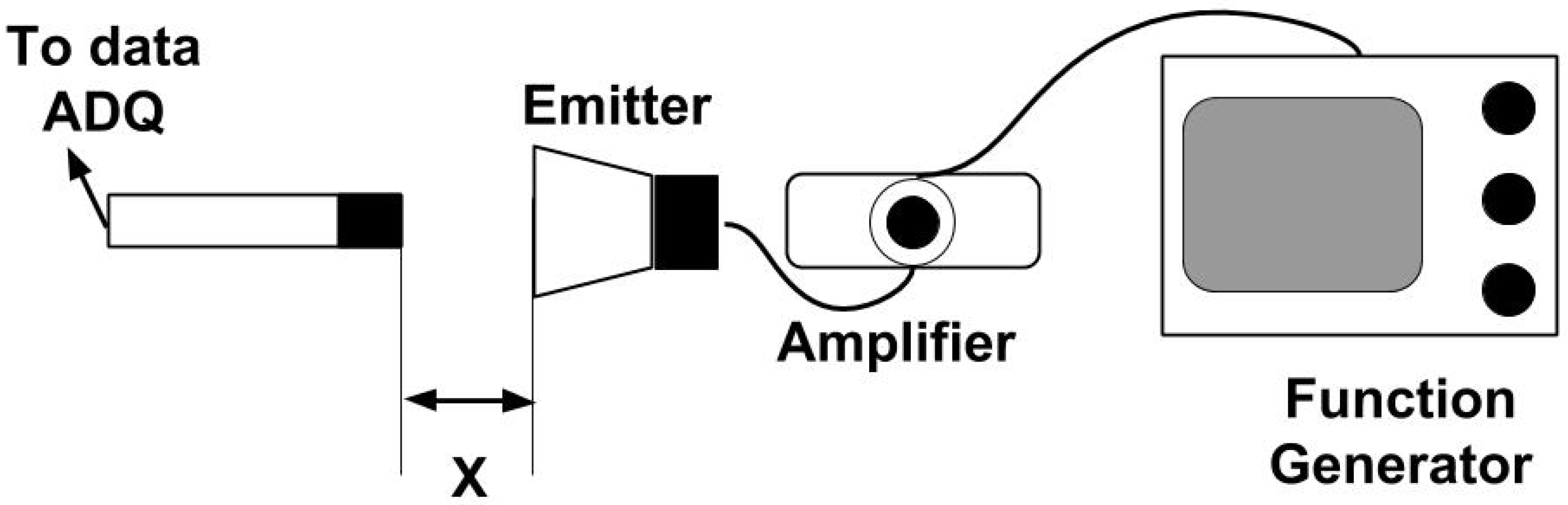

2.1. Experimental Setup and Sound Recordings

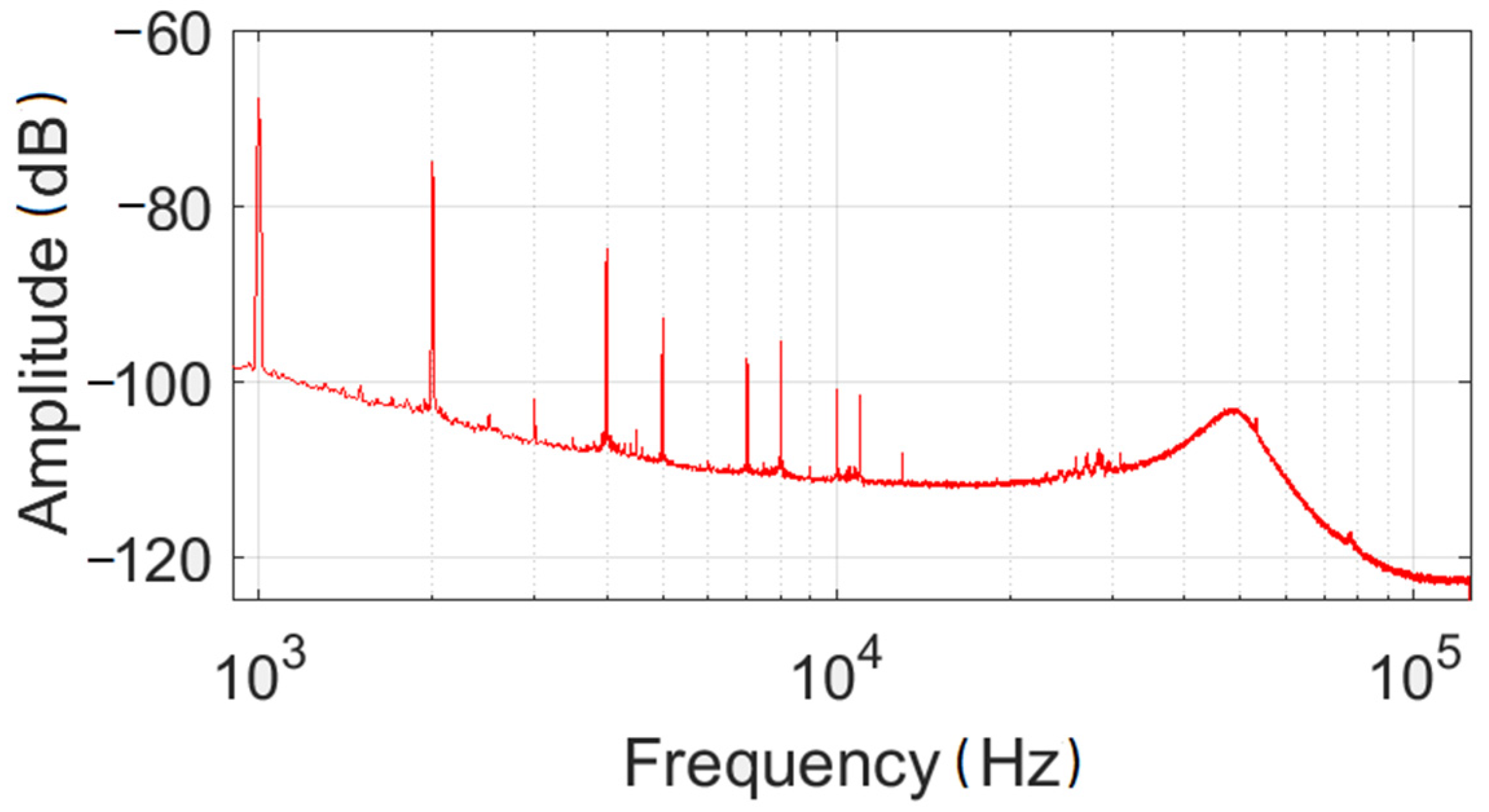

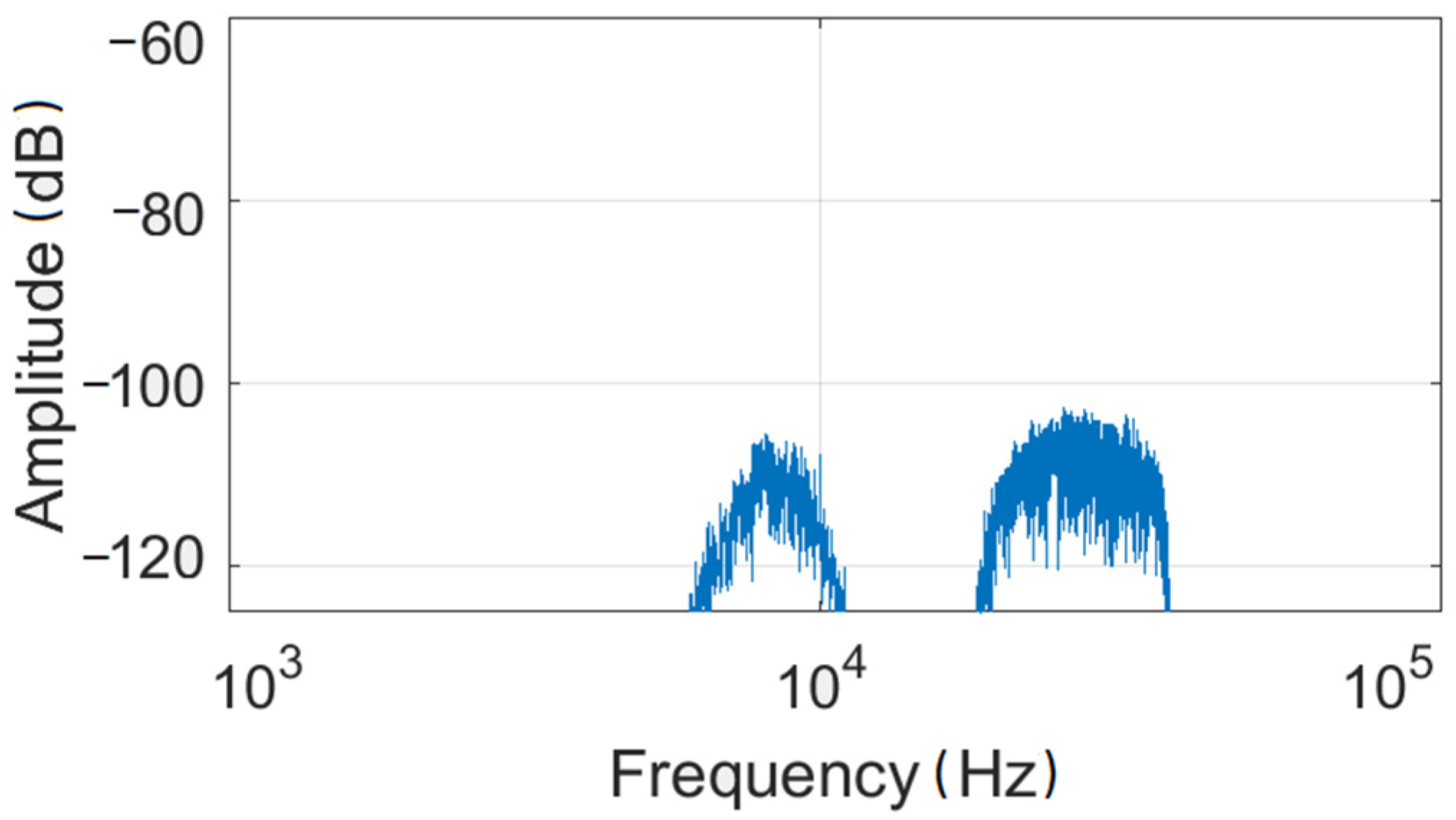

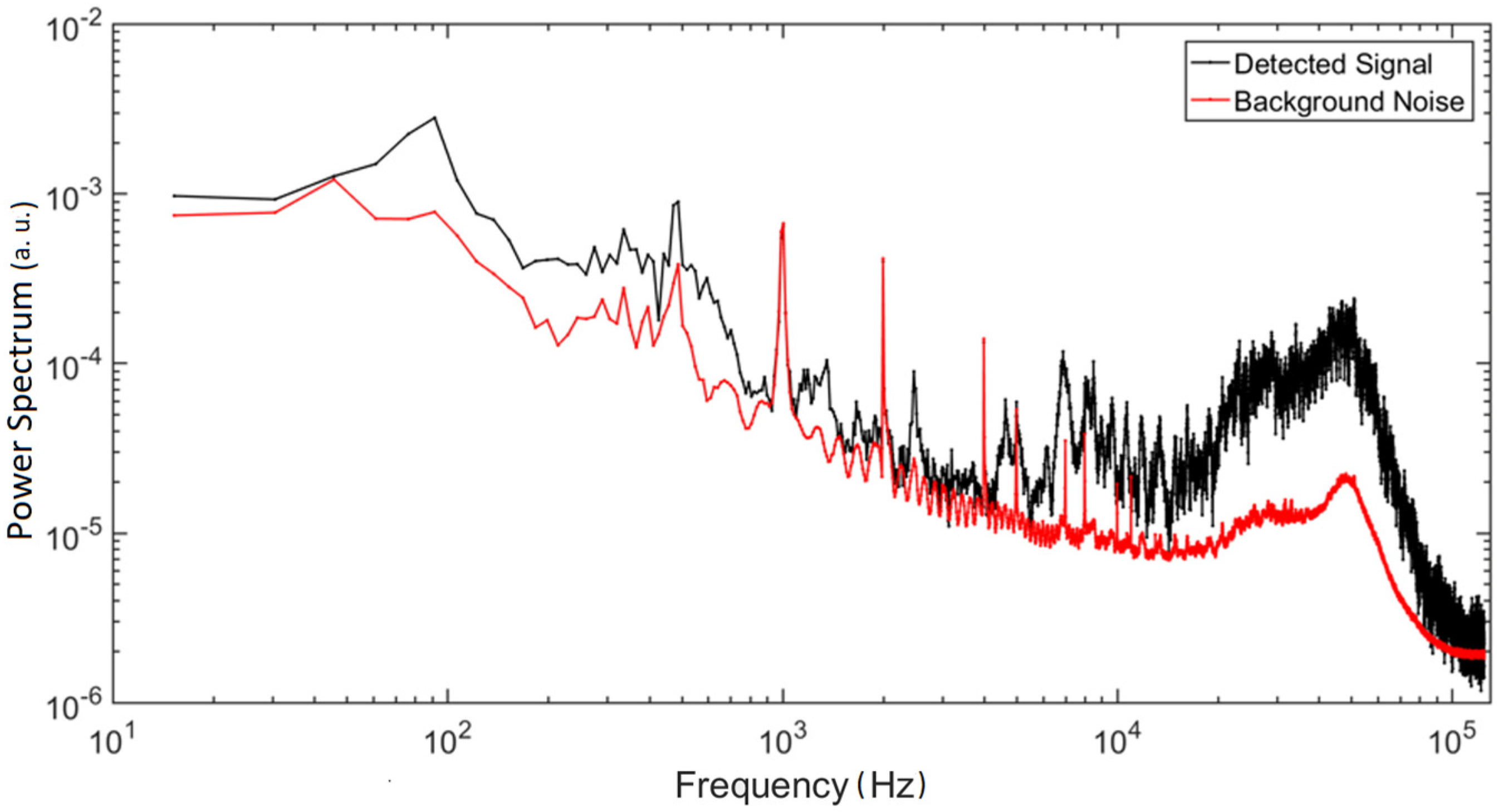

2.2. Filter Design Procedure

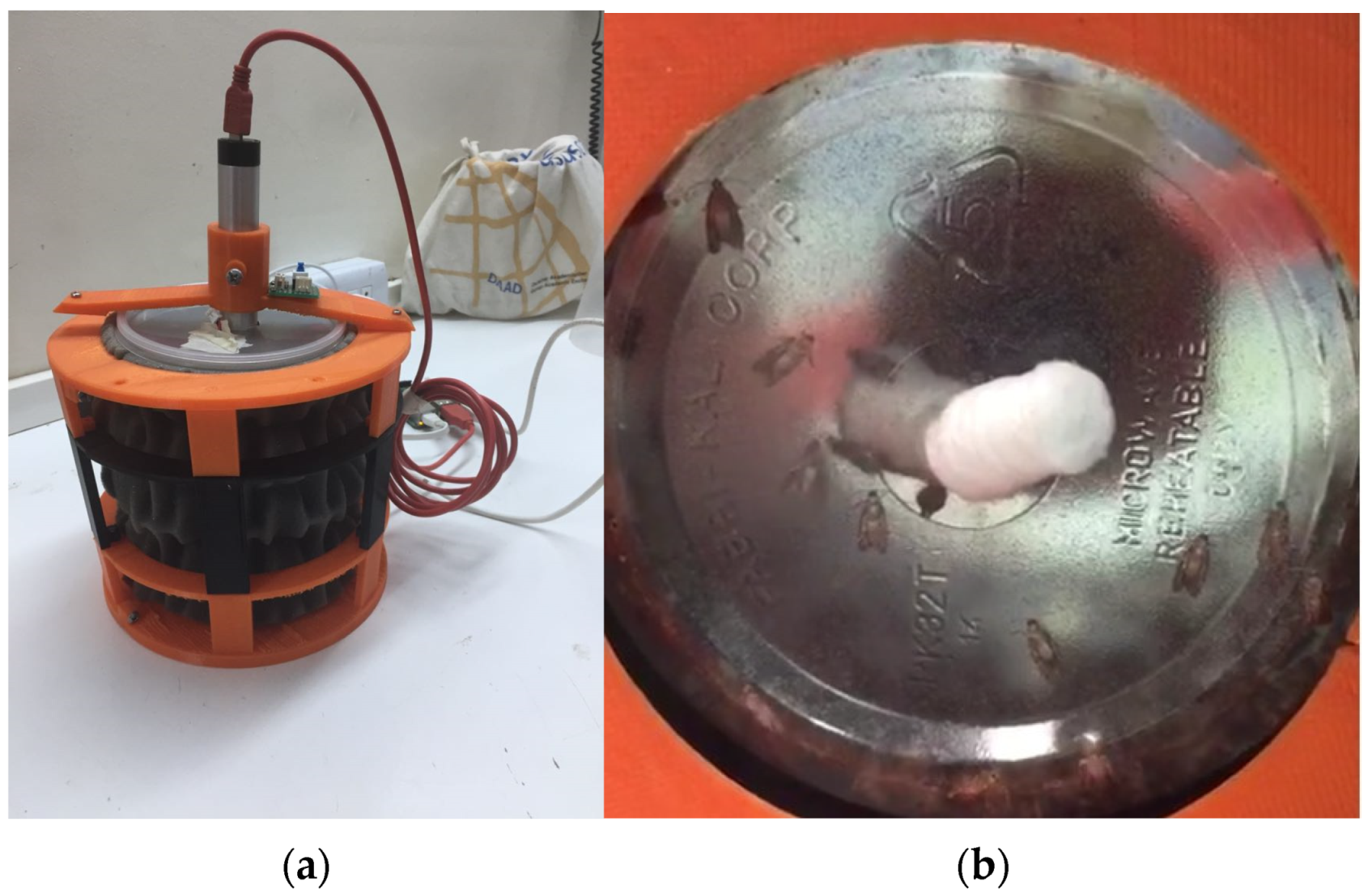

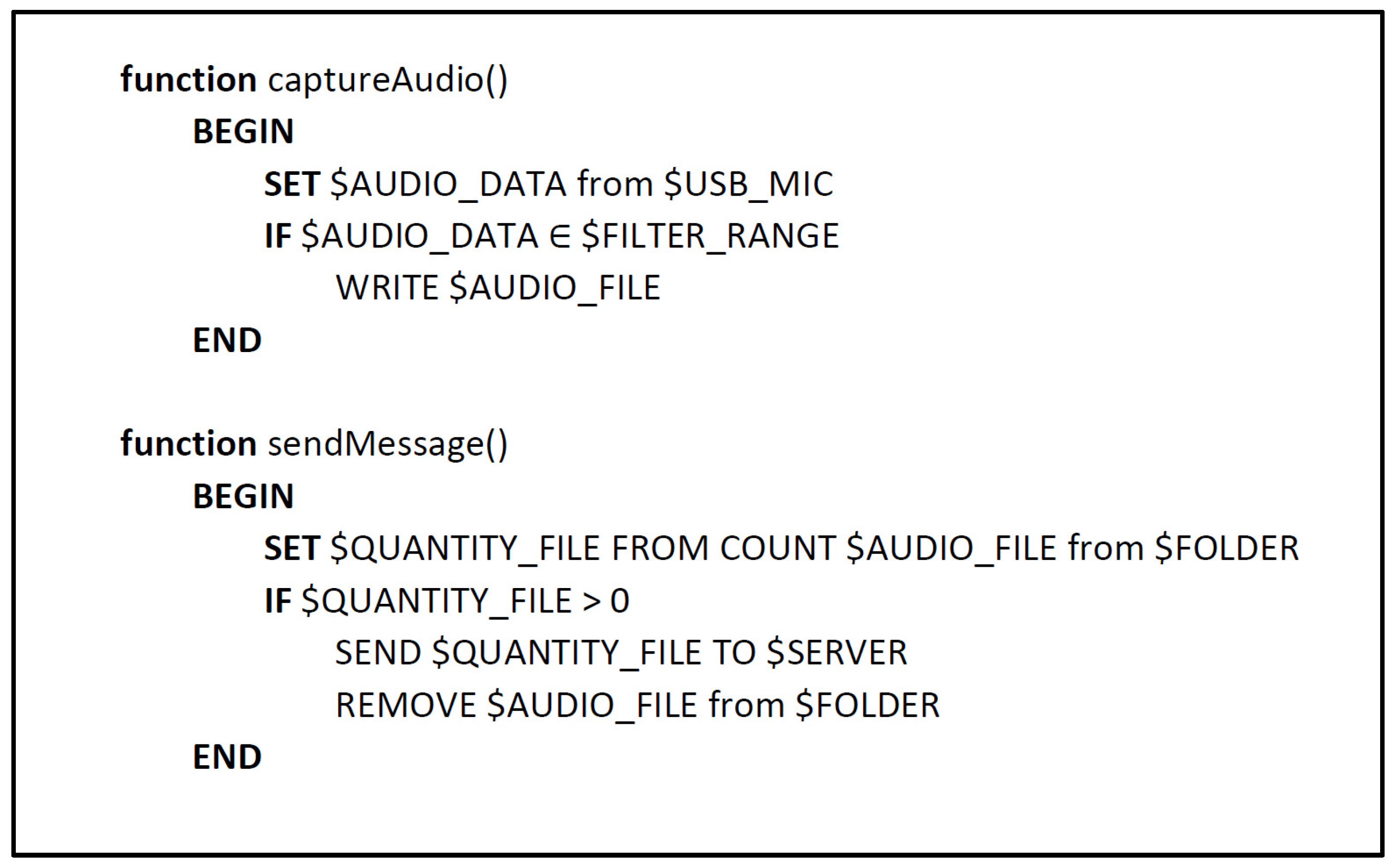

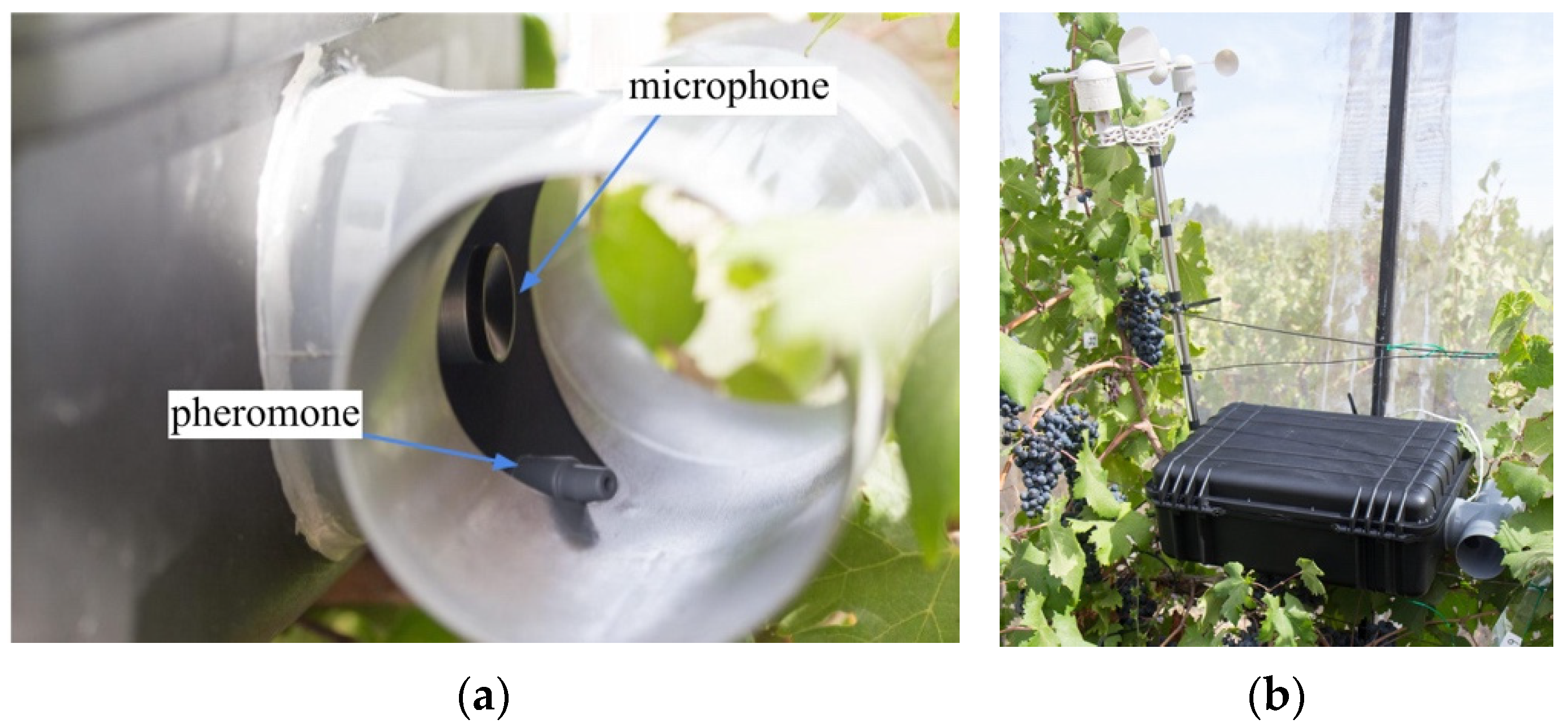

2.3. Sensor

2.3.1. Microphone

2.3.2. Processing Unit

2.3.3. Network Connection

2.3.4. Data Server

3. Results

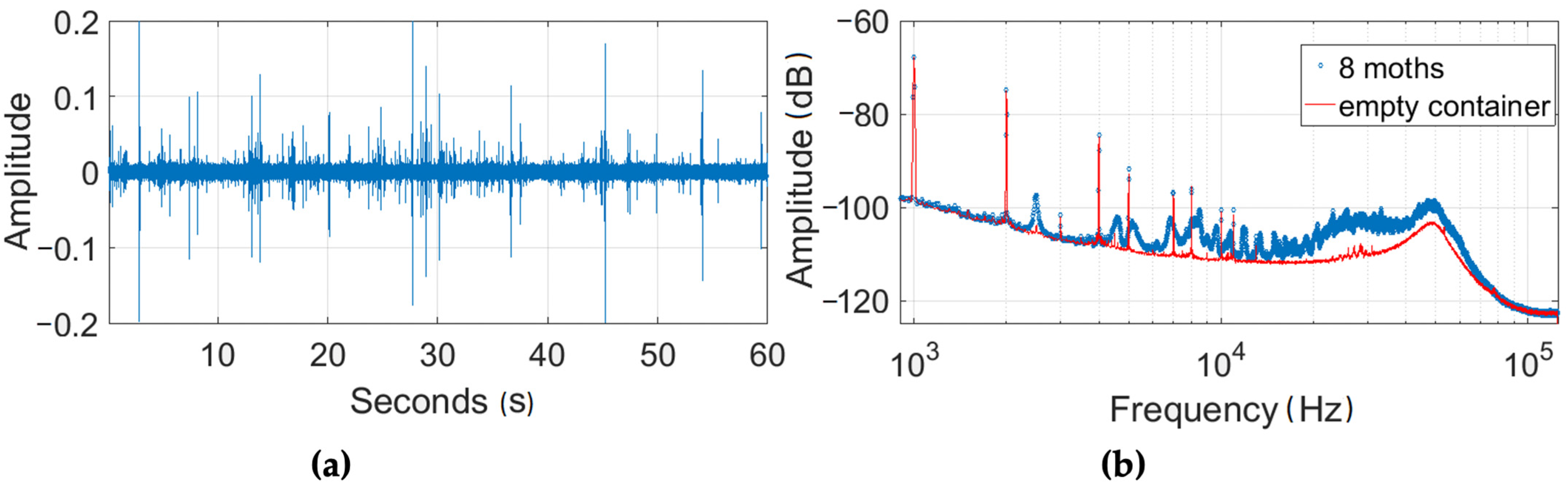

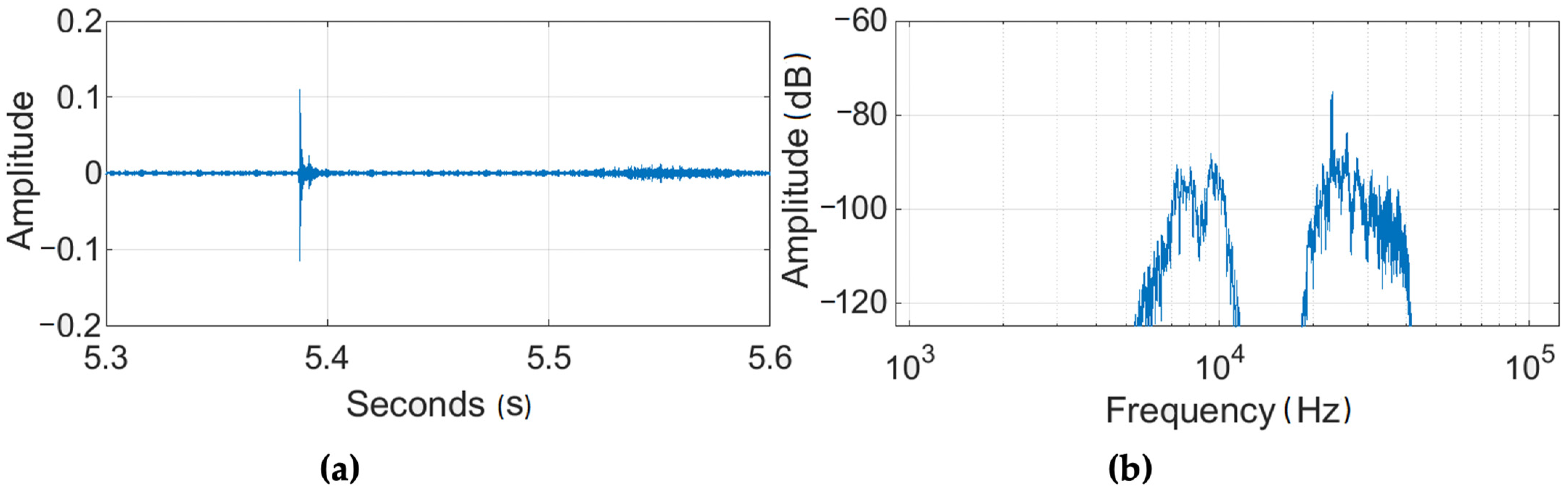

3.1. Laboratory Validation

3.2. Simulated Moth

3.3. Field Test

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Acknowledgments

Conflicts of Interest


| Feature | Description |
|---|---|
| 1 | Signal mean |
| 2 | Root mean square |
| 3 | Amplitude of the central maximum of the autocorrelation function |
| 4 | Position of the first peak in the autocorrelation function |
| 5 | Amplitude of the first peak in the autocorrelation function |
| 6 | Spectral power from 20 kHz to 40 kHz |
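The table lists the six features but not exactly how each is computed. The Python sketch below shows one plausible way to extract them from a single recorded frame. It rests on assumptions that are not taken from the paper: a hypothetical sampling rate `FS_HZ` of 250 kHz, an unnormalized autocorrelation, the "first peak" taken as the first local maximum after lag 0, and the 20 to 40 kHz power taken from a plain rectangular-window periodogram (a Welch-averaged estimate could be substituted). All function names are hypothetical.

```python
import numpy as np

# Hypothetical sampling rate (assumption): 250 kHz, so the 20-40 kHz band is well below Nyquist.
FS_HZ = 250_000


def autocorrelation(x):
    """Unnormalized autocorrelation r[k] = sum_n x[n] * x[n + k] for lags k >= 0."""
    full = np.correlate(x, x, mode="full")
    return full[len(x) - 1:]  # index 0 is the central maximum (lag 0)


def first_peak(r):
    """Return (lag, amplitude) of the first local maximum after lag 0, or (None, 0.0)."""
    for k in range(1, len(r) - 1):
        if r[k] > r[k - 1] and r[k] >= r[k + 1]:
            return k, float(r[k])
    return None, 0.0


def band_power(x, fs, f_lo, f_hi):
    """Power in [f_lo, f_hi] Hz from a one-sided periodogram (rectangular window)."""
    n = len(x)
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * n)
    # Double every bin except DC (and Nyquist for even n) to fold in negative frequencies.
    if n % 2 == 0:
        psd[1:-1] *= 2
    else:
        psd[1:] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    mask = (freqs >= f_lo) & (freqs <= f_hi)
    return float(np.sum(psd[mask]) * fs / n)  # integrate PSD over the band (bin width fs/n)


def extract_features(x, fs=FS_HZ):
    """Compute the six tabulated features for one frame (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    r = autocorrelation(x)
    lag, peak_amp = first_peak(r)
    return {
        "mean": float(np.mean(x)),                            # feature 1
        "rms": float(np.sqrt(np.mean(x ** 2))),               # feature 2
        "acf_central_max": float(r[0]),                       # feature 3
        "acf_first_peak_lag": lag,                            # feature 4, in samples
        "acf_first_peak_amp": peak_amp,                       # feature 5
        "band_power_20_40k": band_power(x, fs, 20e3, 40e3),   # feature 6
    }


if __name__ == "__main__":
    # Synthetic 30 kHz tone (not real moth data): 2500 samples = 10 ms at FS_HZ.
    n = np.arange(2500)
    tone = np.sin(2 * np.pi * 30e3 * n / FS_HZ)
    print(extract_features(tone))
```

For the synthetic unit-amplitude 30 kHz tone, the sketch returns a first-peak lag of 8 samples (250 kHz / 8 = 31.25 kHz, close to the tone frequency) and a 20 to 40 kHz band power of about 0.5, the power of a unit sine. On real, noisy recordings the simple local-maximum rule may lock onto small ripples, so smoothing the autocorrelation or imposing a minimum lag might be needed.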


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hermosilla, G.; Pizarro, F.; Fingerhuth, S.; Lazcano, F.; Santibanez, F.; Baker, N.; Castro, D.; Yáñez, C. Real-Time Remote Sensing of the Lobesia botrana Moth Using a Wireless Acoustic Detection Sensor. Appl. Sci. 2021, 11, 11889. https://doi.org/10.3390/app112411889
