Viewpoint Robustness of Automated Facial Action Unit Detection Systems

Abstract

1. Introduction

2. Materials and Methods

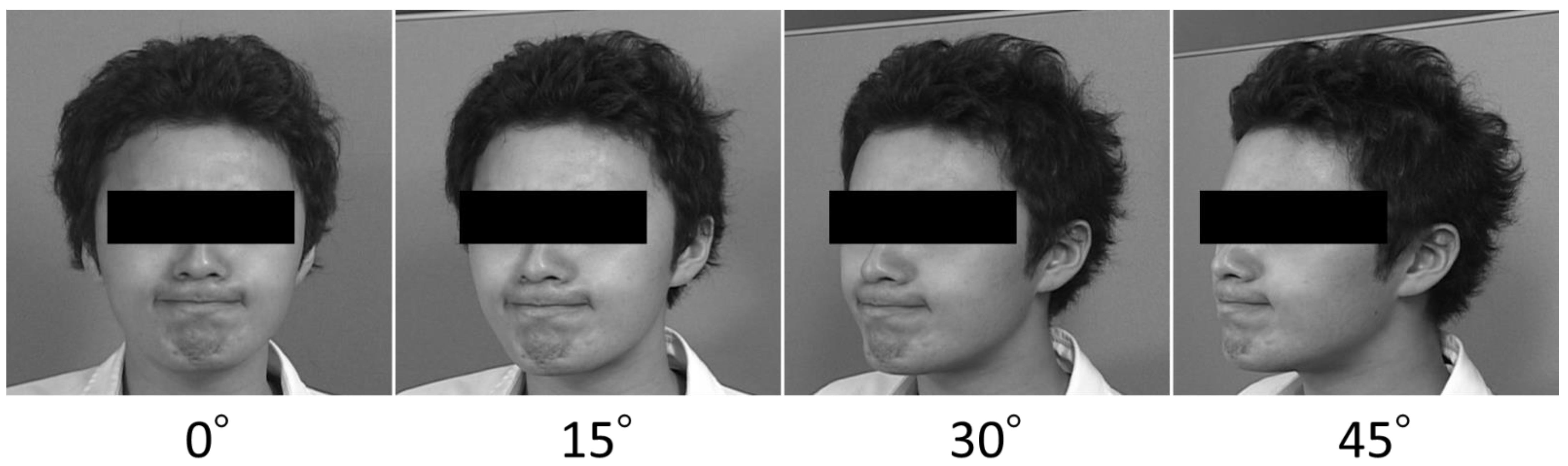

2.1. Dataset

2.2. Automatic Facial Action Detection System

2.2.1. FaceReader

2.2.2. OpenFace

2.2.3. Py-Feat

2.3. Evaluation Metrics

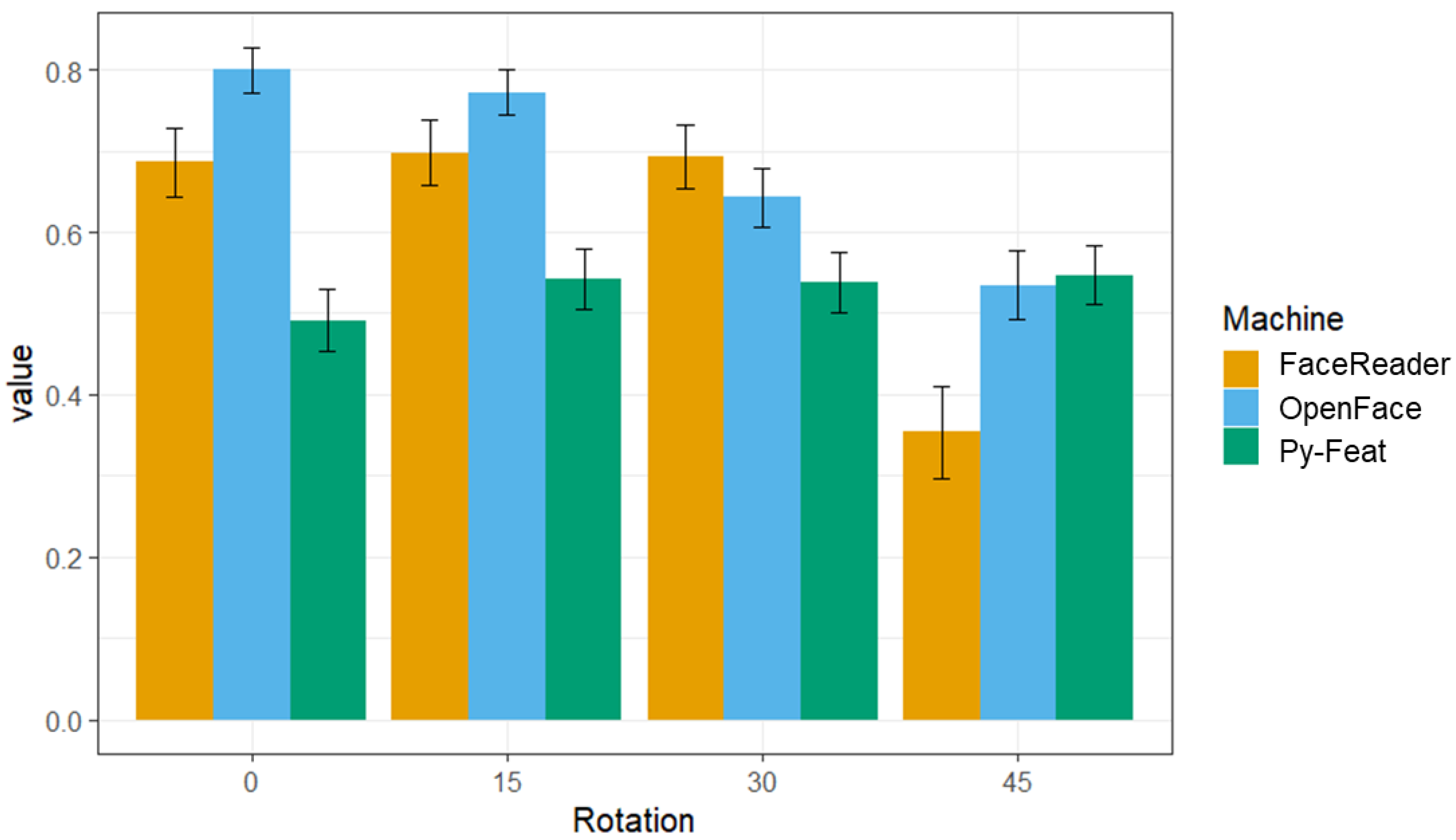

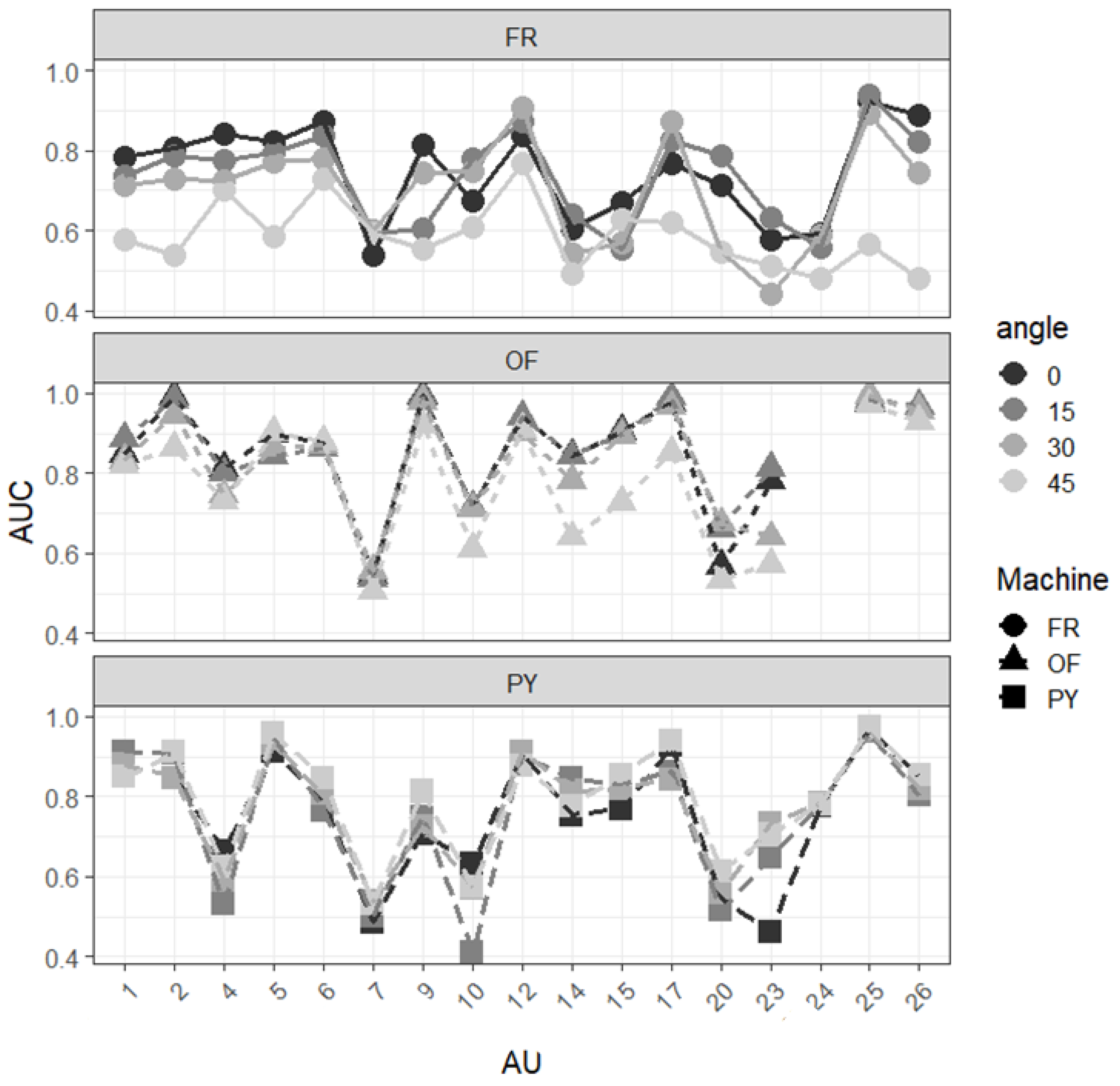

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Namba, S.; Sato, W.; Yoshikawa, S. Viewpoint Robustness of Automated Facial Action Unit Detection Systems. Appl. Sci. 2021, 11, 11171. https://doi.org/10.3390/app112311171