Data Mining of Students’ Consumption Behaviour Pattern Based on Self-Attention Graph Neural Network

Abstract

1. Introduction

2. Related Work

3. Methods

3.1. Data Description

3.2. Data Preprocessing

Algorithm 1. Data pre-processing

- Indicators of regularity

- Amount of consumption

- Behaviour sequence pattern

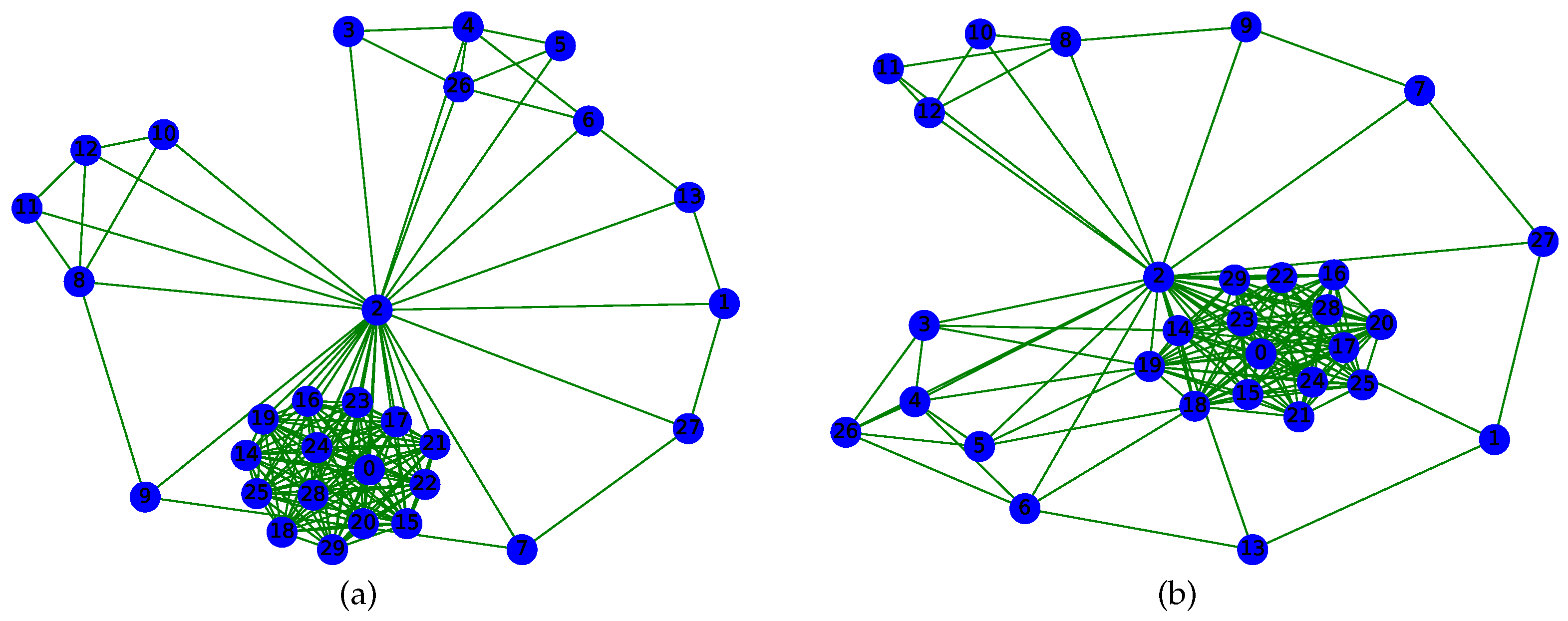

3.3. Graph Construction

3.3.1. p-Clique

3.3.2. Other Criteria

- Necessary connection: An edge always exists between two nodes whose features are inherently related. For example, total-lunch-money is connected to total-month-money, because the cost of lunch is necessarily part of the total monthly cost.

- Unnecessary connection: An edge between two potentially related features exists only when both features are non-zero for the student. For example, if getting-up and breakfast-study are both non-zero, the two features are connected, which suggests that the student tends to get up early for breakfast.
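The two criteria above can be sketched as a small edge-building function. This is a minimal illustration, not the authors' implementation: the pair lists are hypothetical, and only the total-month-money/total-lunch-money and getting-up/breakfast-study pairs come from the text. A full graph would list every pair the authors designate as necessary or potentially related.

```python
# Hypothetical pair lists; a real graph would enumerate all designated pairs.
NECESSARY_EDGES = [
    ("total-month-money", "total-lunch-money"),  # lunch cost is part of monthly cost
]
CANDIDATE_PAIRS = [
    ("getting-up", "breakfast-study"),  # potentially related features
]


def build_edges(features: dict[str, float]) -> set[tuple[str, str]]:
    """Return the undirected edge set of one student's feature graph."""
    edges = set()
    # Necessary connections are always present.
    for u, v in NECESSARY_EDGES:
        edges.add(tuple(sorted((u, v))))
    # Unnecessary connections exist only when both feature values are non-zero.
    for u, v in CANDIDATE_PAIRS:
        if features.get(u, 0) != 0 and features.get(v, 0) != 0:
            edges.add(tuple(sorted((u, v))))
    return edges


# Hypothetical feature values for one student.
student = {"total-month-money": 52000, "total-lunch-money": 18000,
           "getting-up": 12, "breakfast-study": 0}
print(build_edges(student))  # only the necessary edge, since breakfast-study is 0
```

Storing each edge as a sorted tuple keeps the graph undirected, so the same pair is never added twice in opposite orders.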

3.4. Model Description

Algorithm 2. Graph classification based on improved self-attention GNN

4. Results and Analysis

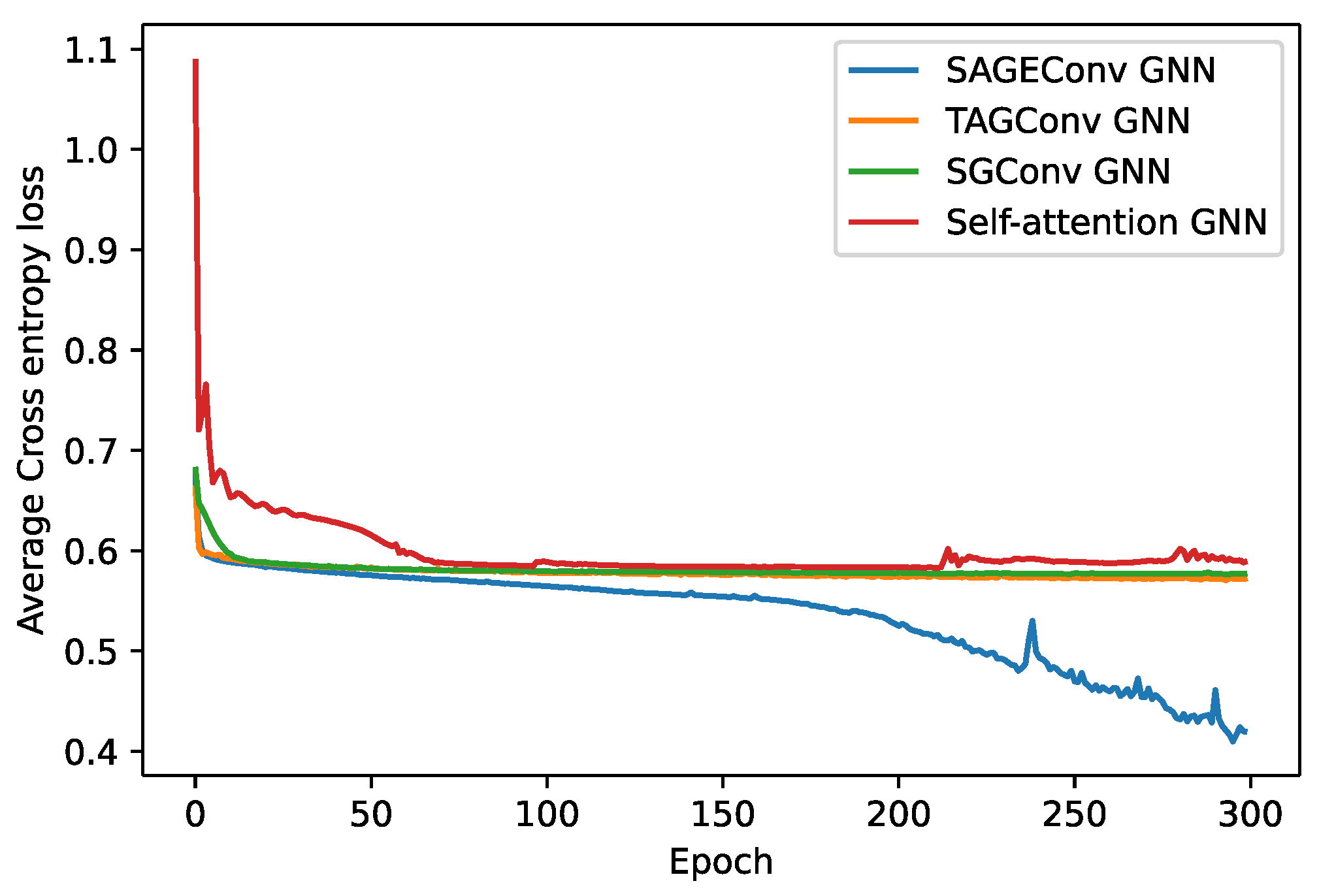

4.1. Experimental Results

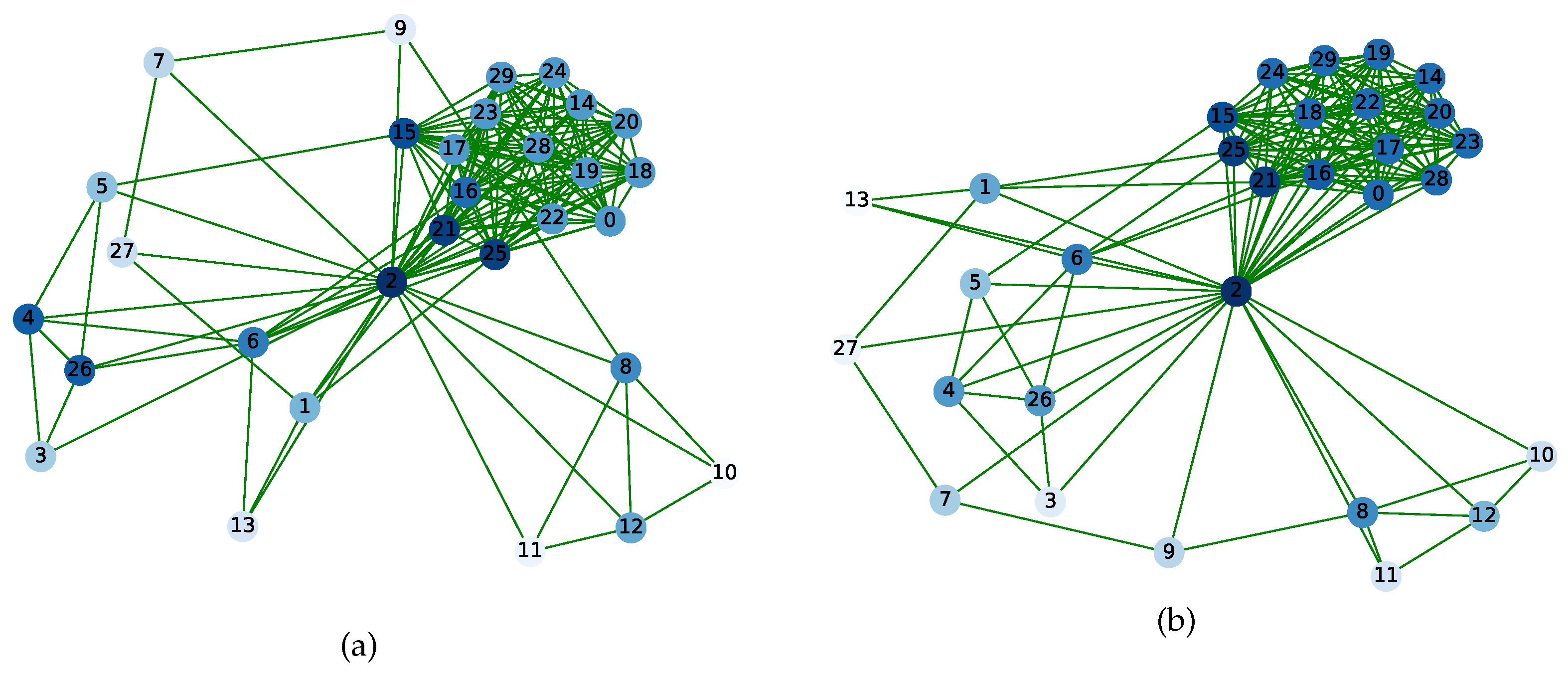

4.2. Result Analysis

5. Open Issues and Conclusions

5.1. Open Issues

- How best to use nodes and edges to represent the relationships between features remains an open question.

- Learning data come in many forms, such as the data generated during MOOC online courses, which motivates us to construct graphs that represent more kinds of data for information mining.

- Because node scores from the self-attention mechanism rank the features represented by different nodes by importance, how to combine traditional feature selection methods with GNNs to identify useful features is also worth exploring.

- By comparing scores, we can infer the student's most likely next behaviour pattern; however, new quantification methods based on the score values obtained from training are still needed.

- Building a behavioural network covering all students, and integrating further information such as curriculum arrangements, would enable more diversified analyses, for example of class skipping and social interactions.
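The node scores mentioned above are not defined in this section. As a point of reference, SAGPool (self-attention graph pooling) scores nodes with one graph convolution followed by tanh, Z = tanh(D^-1/2 (A + I) D^-1/2 X θ), and keeps the top-k nodes. The sketch below assumes that formulation; it is not necessarily the improved model used in this paper. The toy graph, feature matrix and weights θ are hypothetical, and θ is random rather than trained, so the resulting ranking only illustrates the mechanics.

```python
import numpy as np


def self_attention_scores(adj: np.ndarray, x: np.ndarray, theta: np.ndarray) -> np.ndarray:
    """SAGPool-style node scores: tanh(D^-1/2 (A+I) D^-1/2 X theta)."""
    a_hat = adj + np.eye(adj.shape[0])                   # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))        # D^-1/2 as a vector
    a_norm = d_inv_sqrt[:, None] * a_hat * d_inv_sqrt[None, :]
    return np.tanh(a_norm @ x @ theta).ravel()           # one score per node


def rank_nodes(scores: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k highest-scoring nodes (features), best first."""
    return np.argsort(-scores)[:k]


# Hypothetical 4-node feature graph (a path 0-1-2-3) with 3-dim node inputs.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
x = rng.normal(size=(4, 3))
theta = rng.normal(size=(3, 1))   # untrained attention weights

scores = self_attention_scores(adj, x, theta)
top = rank_nodes(scores, k=2)
print("scores:", np.round(scores, 3), "top-2 nodes:", top)
```

In a trained model the same ranking step would order features by learned importance, which is the property the open issue above proposes to combine with traditional feature selection.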

5.2. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jugo, I.; Kovačić, B.; Slavuj, V. Increasing the adaptivity of an intelligent tutoring system with educational data mining: A system overview. Int. J. Emerg. Technol. Learn. 2016, 11, 67–70.

- Grigorova, K.; Malysheva, E.; Bobrovskiy, S. Application of Data Mining and Process Mining approaches for improving e-Learning Processes. In Proceedings of the 3rd International Conference on Information Technology and Nanotechnology, Samara, Russia, 24–27 April 2017; Volume 1903, pp. 115–121.

- Karthikeyan, V.G.; Thangaraj, P.; Karthik, S. Towards developing hybrid educational data mining model (HEDM) for efficient and accurate student performance evaluation. Soft Comput. 2020, 24, 18477–18487.

- Anoopkumar, M.; Md Zubair Rahman, A. A Review on Data Mining techniques and factors used in Educational Data Mining to predict student amelioration. In Proceedings of the 2016 International Conference on Data Mining and Advanced Computing (SAPIENCE), Ernakulam, India, 16–18 March 2016; pp. 122–133.

- Fernandes, E.; Carvalho, R.; Holanda, M.; Van Erven, G. Educational data mining: Discovery standards of academic performance by students in public high schools in the federal district of Brazil. In World Conference on Information Systems and Technologies; Springer: Cham, Switzerland, 2017; Volume 569, pp. 287–296.

- Nuankaew, W.; Nuankaew, P.; Teeraputon, D.; Phanniphong, K.; Bussaman, S. Perception and attitude toward self-regulated learning of Thailand’s students in educational data mining perspective. Int. J. Emerg. Technol. Learn. 2019, 14, 34–49.

- Sabourin, J.; McQuiggan, S.; de Waal, A. SAS Tools for educational data mining. In Proceedings of the EDM 2016, Raleigh, NC, USA, 29 June–2 July 2016; pp. 632–633.

- Xu, S.; Wang, J. Dynamic extreme learning machine for data stream classification. Neurocomputing 2017, 238, 433–449.

- Costa, E.B.; Fonseca, B.; Santana, M.A.; de Araujo, F.F.; Rego, J. Evaluating the effectiveness of educational data mining techniques for early prediction of students academic failure in introductory programming courses. Comput. Hum. Behav. 2017, 73, 247–256.

- Ducange, P.; Pecori, R.; Sarti, L.; Vecchio, M. Educational big data mining: How to enhance virtual learning environments. In International Conference on EUropean Transnational Education; Springer: Berlin/Heidelberg, Germany, 2017; Volume 527, pp. 681–690.

- Chen, J.; Zhao, J. An educational data mining model for supervision of network learning process. Int. J. Emerg. Technol. Learn. 2018, 13, 67–77.

- de J. Costa, J.; Bernardini, F.; Artigas, D.; Viterbo, J. Mining direct acyclic graphs to find frequent substructures—An experimental analysis on educational data. Inf. Sci. 2019, 482, 266–278. Available online: https://www.sciencedirect.com/science/article/pii/S0020025519300398 (accessed on 11 January 2019).

- Malkiewich, L.; Baker, R.S.; Shute, V.; Kai, S.; Paquette, L. Classifying behaviour to elucidate elegant problem solving in an educational game. In Proceedings of the Ninth International Conference on Educational Data Mining, Raleigh, NC, USA, 29 June–2 July 2016; pp. 448–453.

- Li, Y.; Li, D. University students’ behaviour characteristics analysis and prediction method based on combined data mining model. In Proceedings of the 2020 3rd International Conference on Computers in Management and Business, Tokyo, Japan, 31 January–2 February 2020; pp. 9–13.

- Zheng, L.; Xia, D.; Zhao, X.; Tan, L.; Li, H.; Chen, L.; Liu, W. Spatial–temporal travel pattern mining using massive taxi trajectory data. Phys. A Stat. Mech. Its Appl. 2018, 501, 24–41.

- Altaf, S.; Soomro, W.; Rawi, M.I.M. Student Performance Prediction using Multi-Layers Artificial Neural Networks: A case study on educational data mining. In Proceedings of the 2019 3rd International Conference on Information System and Data Mining, Houston, TX, USA, 6–8 April 2019; pp. 59–64.

- Nakagawa, H.; Iwasawa, Y.; Matsuo, Y. End-to-end deep knowledge tracing by learning binary question-embedding. In Proceedings of the 2018 IEEE International Conference on Data Mining Workshops (ICDMW), Singapore, 17–20 November 2018; pp. 334–342.

- Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 17–19 June 2013; Number PART 3. pp. 2347–2355.

- Tseng, C.W.; Chou, J.J.; Tsai, Y.C. Text mining analysis of teaching evaluation questionnaires for the selection of outstanding teaching faculty members. IEEE Access 2018, 6, 72870–72879.

- Morsy, S.; Karypis, G. A study on curriculum planning and its relationship with graduation GPA and time to degree. In Proceedings of the 9th International Conference on Learning Analytics & Knowledge, Tempe, AZ, USA, 4–8 March 2019; pp. 26–35.

- Hu, Q.; Polyzou, A.; Karypis, G.; Rangwala, H. Enriching course-Specific regression models with content features for grade prediction. In Proceedings of the 2017 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Tokyo, Japan, 19–21 October 2017; Volume 2018, pp. 504–513.

- Yang, Y.; Liu, H.; Carbonell, J.; Ma, W. Concept graph learning from educational data. In Proceedings of the Eighth ACM International Conference on Web Search and Data Mining, Shanghai, China, 2–6 February 2015; pp. 159–168.

- Aldowah, H.; Al-Samarraie, H.; Fauzy, W.M. Educational data mining and learning analytics for 21st century higher education: A review and synthesis. Telemat. Inform. 2019, 37, 13–49.

- Jones, K.M.; Rubel, A.; LeClere, E. A matter of trust: Higher education institutions as information fiduciaries in an age of educational data mining and learning analytics. J. Assoc. Inf. Sci. Technol. 2020, 71, 1227–1241.

- Amrieh, E.A.; Hamtini, T.; Aljarah, I. Mining educational data to predict student’s academic performance using ensemble methods. Int. J. Database Theory Appl. 2016, 9, 119–136.

- Bhagavan, K.S.; Thangakumar, J.; Subramanian, D.V. Predictive analysis of student academic performance and employability chances using HLVQ algorithm. J. Ambient Intell. Humaniz. Comput. 2021, 12, 3789–3797.

- Gao, H.; Qi, G.; Ji, Q. Schema induction from incomplete semantic data. Intell. Data Anal. 2018, 22, 1337–1353.

- Wang, X.; Yu, X.; Guo, L.; Liu, F.; Xu, L. Student performance prediction with short-term sequential campus behaviours. Information 2020, 11, 201.

- Wu, Z.; He, T.; Mao, C.; Huang, C. Exam paper generation based on performance prediction of student group. Inf. Sci. 2020, 532, 72–90. Available online: https://www.sciencedirect.com/science/article/pii/S0020025520303716 (accessed on 4 May 2020).

- Sun, Y.; Chai, R. An early-warning model for online learners based on user portrait. Ing. Des Syst. D’Inf. 2020, 25, 535–541.

- Onan, A. Sentiment analysis on massive open online course evaluations: A text mining and deep learning approach. Comput. Appl. Eng. Educ. 2021, 29, 572–589.

- Zhang, H.; Huang, T.; Lv, Z.; Liu, S.; Zhou, Z. MCRS: A course recommendation system for MOOCs. Multimed. Tools Appl. 2018, 77, 7051–7069.

- Kardan, A.A.; Ebrahimi, M. A novel approach to hybrid recommendation systems based on association rules mining for content recommendation in asynchronous discussion groups. Inf. Sci. 2013, 219, 93–110. Available online: https://www.sciencedirect.com/science/article/pii/S0020025512004756 (accessed on 24 July 2012).

- Xie, T.; Zheng, Q.; Zhang, W. Mining temporal characteristics of behaviours from interval events in e-learning. Inf. Sci. 2018, 447, 169–185. Available online: https://www.sciencedirect.com/science/article/pii/S0020025518301993 (accessed on 1 June 2018).

- Dalvi-Esfahani, M.; Alaedini, Z.; Nilashi, M.; Samad, S.; Asadi, S.; Mohammadi, M. Students green information technology behaviour: Beliefs and personality traits. J. Clean. Prod. 2020, 257, 120406.

- Islam, M.T.; Dias, P.; Huda, N. Young consumers e-waste awareness, consumption, disposal, and recycling behaviour: A case study of university students in Sydney, Australia. J. Clean. Prod. 2021, 282, 124490.

- Mei, G.; Hou, Y.; Zhang, T.; Xu, W. Behaviour Represents Achievement: Academic Performance Analytics of Engineering Students via Campus Data. In 2020 Chinese Automation Congress (CAC); IEEE: Piscataway, NJ, USA, 2020; pp. 4348–4353.

- Cao, Y.; Gao, J.; Lian, D.; Rong, Z.; Shi, J.; Wang, Q.; Wu, Y.; Yao, H.; Zhou, T. Orderliness predicts academic performance: Behavioural analysis on campus lifestyle. J. R. Soc. Interface 2018, 15, 20180210.

- Vijayalakshmi, M.; Salimath, S.; Shettar, A.S.; Bhadri, G. A study of team formation strategies and their impact on individual student learning using educational data mining (EDM). In Proceedings of the 2018 IEEE Tenth International Conference on Technology for Education (T4E), Chennai, India, 10–13 December 2018; pp. 182–185.

- Hao, J.; Liu, L.; von Davier, A.A.; Kyllonen, P.; Kitchen, C. Collaborative Problem Solving Skills versus Collaboration Outcomes: Findings from Statistical Analysis and Data Mining; International Educational Data Mining Society: Raleigh, NC, USA, 2016; pp. 382–387.

- Gowri, G.; Thulasiram, R.; Baburao, M.A. Educational Data Mining Application for Estimating Students Performance in Weka Environment. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2017; Volume 263.

- Jovanovic, M.; Vukicevic, M.; Milovanovic, M.; Minovic, M. Using data mining on student behaviour and cognitive style data for improving e-learning systems: A case study. Int. J. Comput. Intell. Syst. 2012, 5, 597–610.

- Viloria, A.; Garcia Guliany, J.; Niebles Núñez, W.; Hernandez Palma, H.; Niebles Núñez, L. Data Mining Applied in School Dropout Prediction. J. Phys. Conf. Ser. 2020, 1432.

- Injadat, M.; Moubayed, A.; Nassif, A.B.; Shami, A. Multi-split optimized bagging ensemble model selection for multi-class educational data mining. Appl. Intell. 2020, 50, 4506–4528.

- Matayoshi, J.; Cosyn, E.; Uzun, H. Are We There Yet? Evaluating the Effectiveness of a Recurrent Neural Network-Based Stopping Algorithm for an Adaptive Assessment. Int. J. Artif. Intell. Educ. 2021, 31, 304–336.

- Issa, S.; Adekunle, O.; Hamdi, F.; Cherfi, S.S.S.; Dumontier, M.; Zaveri, A. Knowledge Graph Completeness: A Systematic Literature Review. IEEE Access 2021, 9, 31322–31339.

- Vashishth, S.; Yadati, N.; Talukdar, P. Graph-based deep learning in natural language processing. In Proceedings of the 7th ACM IKDD CoDS and 25th COMAD, Hyderabad, India, 5–7 January 2020; pp. 371–372.

- Osman, A.H.; Barukub, O.M. Graph-Based Text Representation and Matching: A Review of the State of the Art and Future Challenges. IEEE Access 2020, 8, 87562–87583.

- Chen, Y.; Wu, Y.; Ma, S.; King, I. A Literature Review of Recent Graph Embedding Techniques for Biomedical Data. In International Conference on Neural Information Processing 2020; Springer: Cham, Switzerland, 2020; Volume 1333, pp. 21–29.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Kherad, M.; Bidgoly, A.J. Recommendation system using a deep learning and graph analysis approach. arXiv 2020, arXiv:2004.08100.

- Wang, R.; Ma, X.; Jiang, C.; Ye, Y.; Zhang, Y. Heterogeneous information network-based music recommendation system in mobile networks. Comput. Commun. 2020, 150, 429–437.

- Durand, G.; Belacel, N.; LaPlante, F. Graph theory based model for learning path recommendation. Inf. Sci. 2013, 251, 10–21. Available online: https://www.sciencedirect.com/science/article/pii/S0020025513003149 (accessed on 30 April 2013).

- Zhang, R.; Zettsu, K.; Kidawara, Y.; Kiyoki, Y.; Zhou, A. Context-sensitive Web service discovery over the bipartite graph model. Front. Comput. Sci. 2013, 7, 875–893.

- Zhao, X.; Liang, J.; Wang, J. A community detection algorithm based on graph compression for large-scale social networks. Inf. Sci. 2021, 551, 358–372.

- Chen, J.; Li, R.; Zhao, S.; Zhang, Y.P. A New Clustering Cover Algorithm Based on Graph Representation for Community Detection. Tien Tzu Hsueh Pao/Acta Electron. Sin. 2020, 48, 1680–1687.

- Du, J.; Zhang, S.; Wu, G.; Moura, J.M.; Kar, S. Topology adaptive graph convolutional networks. arXiv 2017, arXiv:1710.10370.

- Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive representation learning on large graphs. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 1025–1035.

- Wu, F.; Souza, A.; Zhang, T.; Fifty, C.; Yu, T.; Weinberger, K. Simplifying graph convolutional networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6861–6871.

- Cuji Chacha, B.R.; Gavilanes Lopez, W.L.; Vicente Guerrero, V.X.; Villacis Villacis, W.G. Student Dropout Model Based on Logistic Regression. In International Conference on Applied Technologies 2020; Springer: Cham, Switzerland, 2020; Volume 1194, pp. 321–333.

- Dervisevic, O.; Zunic, E.; Donko, D.; Buza, E. Application of KNN and Decision Tree Classification Algorithms in the Prediction of Education Success from the Edu720 Platform. In Proceedings of the 2019 4th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 18–21 June 2019.

- Mkwazu, H.R.; Yan, C. Grade Prediction Method for University Course Selection Based on Decision Tree. In Proceedings of the 2020 International Conference on Aviation Safety and Information Technology, Weihai, China, 14–16 October 2020; pp. 593–599.

| ID Number | Consumption Money (RMB cents) | Time | Action |
|---|---|---|---|
| 36984 | 200 | 2018/5/2 9:18 | Breakfast |
| 36984 | 400 | 2018/5/2 11:47 | Lunch |
| 36984 | 1500 | 2018/5/2 16:52 | Dinner |
| 17347 | 300 | 2018/5/31 11:24 | Lunch |
| 17347 | 250 | 2018/5/31 11:25 | Lunch |
| 17347 | 38 | 2018/5/31 11:26 | Lunch |
| 17347 | 150 | 2018/5/31 11:27 | Lunch |
| 10075 | 180 | 2018/5/20 11:16 | Lunch |
| 10075 | 200 | 2018/5/20 11:39 | Lunch |
| 10075 | 1500 | 2018/5/20 17:50 | Dinner |
| 10075 | 300 | 2018/5/21 07:56 | Breakfast |
| 10075 | 50 | 2018/5/21 07:56 | Breakfast |
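The sample records show that one meal can be split across several swipes: student 17347 paid for lunch four times between 11:24 and 11:27. One plausible cleaning step, sketched here under assumptions, merges consecutive swipes by the same student with the same action when each falls within a short window of the previous one. The 5-minute window and the `Event` structure are hypothetical; the section does not specify how Algorithm 1 handles split payments.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Rows copied from the sample table: (ID, amount in RMB cents, time, action).
ROWS = [
    (36984, 200, "2018/5/2 9:18", "Breakfast"),
    (36984, 400, "2018/5/2 11:47", "Lunch"),
    (36984, 1500, "2018/5/2 16:52", "Dinner"),
    (17347, 300, "2018/5/31 11:24", "Lunch"),
    (17347, 250, "2018/5/31 11:25", "Lunch"),
    (17347, 38, "2018/5/31 11:26", "Lunch"),
    (17347, 150, "2018/5/31 11:27", "Lunch"),
    (10075, 180, "2018/5/20 11:16", "Lunch"),
    (10075, 200, "2018/5/20 11:39", "Lunch"),
    (10075, 1500, "2018/5/20 17:50", "Dinner"),
    (10075, 300, "2018/5/21 07:56", "Breakfast"),
    (10075, 50, "2018/5/21 07:56", "Breakfast"),
]


@dataclass
class Event:  # hypothetical merged-consumption record
    student_id: int
    action: str
    start: datetime
    end: datetime
    amount_cents: int


def merge_split_payments(rows, window=timedelta(minutes=5)):
    """Merge consecutive same-action swipes of one student that occur within
    `window` of the previous swipe (an assumed rule, not the paper's)."""
    swipes = sorted(
        (sid, datetime.strptime(ts, "%Y/%m/%d %H:%M"), amount, action)
        for sid, amount, ts, action in rows
    )
    events: list[Event] = []
    for sid, t, amount, action in swipes:
        last = events[-1] if events else None
        if (last is not None and last.student_id == sid
                and last.action == action and t - last.end <= window):
            last.end = t                      # extend the current event
            last.amount_cents += amount
        else:
            events.append(Event(sid, action, t, t, amount))
    return events


for e in merge_split_payments(ROWS):
    print(e.student_id, e.action, e.start, e.amount_cents)
```

With this window, the four lunch swipes of student 17347 become one 738-cent event, while the two lunch swipes of student 10075, 23 minutes apart, stay separate; the window size directly controls that trade-off.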

| Behaviour | Action Explanation |
|---|---|
| Dinner | Consumption in the cafeteria after 4:00 p.m. |
| Lunch | Consumption in the cafeteria between 10:00 a.m. and 4:00 p.m. |
| Breakfast | Consumption in the cafeteria before 10:00 a.m. |
| Supermarket | Consumption in the supermarket. |
| Library | Consumption in the library. |
| Dormitory bathroom | Consumption in the dormitory bathroom. |
| Dormitory boiled water | Consumption of boiled water in the dormitory. |
| Gym | Consumption in the school gym. |
| School bus | Consumption from taking the school bus between campuses. |
| Management office | Consumption in the school management office. |
| Classroom boiled water | Consumption of boiled water in the classroom. |
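The time rules for cafeteria consumption can be expressed as a small labelling function. This is a sketch: the table does not say whether 10:00 a.m. and 4:00 p.m. themselves belong to the earlier or the later meal, so the boundary handling below (10:00 counts as lunch, 16:00 as dinner) is an assumption, as is the hypothetical `location` string used to tell cafeteria swipes from others.

```python
from datetime import time

BREAKFAST_END = time(10, 0)  # cafeteria swipes before 10:00 are breakfast
DINNER_START = time(16, 0)   # cafeteria swipes from 16:00 on are dinner


def meal_label(t: time) -> str:
    """Label a cafeteria swipe by time of day (boundary handling assumed)."""
    if t < BREAKFAST_END:
        return "Breakfast"
    if t < DINNER_START:
        return "Lunch"
    return "Dinner"


def behaviour_label(location: str, t: time) -> str:
    """`location` values are hypothetical; non-cafeteria swipes keep their location label."""
    return meal_label(t) if location == "cafeteria" else location


print(behaviour_label("cafeteria", time(9, 18)))   # Breakfast
print(behaviour_label("cafeteria", time(16, 52)))  # Dinner
print(behaviour_label("Library", time(14, 0)))     # Library
```

The labels agree with the sample records above, for example 9:18 is breakfast and 16:52 is dinner.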

| Type | Feature Name | Feature Explanation | Intermediate | Node ID |
|---|---|---|---|---|
| Indicators of regularity | Study-actual | Actual number of visits to the library or classroom | No | 0 |
| | Paid-days | Number of days with consumption records | No | 2 |
| | Getting-up-num | Number of wake-ups | No | 3 |
| Amount of consumption | Total-meals | Total number of meals | Yes | 26 |
| | Total-supermarket-num | Total number of supermarket visits | Yes | 7 |
| | Total-month-money | Total monthly cost | Yes | 8 |
| Behaviour sequence pattern | Dinner-dormitory-num | Number of times returning to the dormitory after dinner | No | 13 |
| | Lunch-study-num | Number of times studying after lunch | No | 15 |
| | Dinner-study-num | Number of times studying after dinner | No | 16 |
| | Dinner-study-dorm-bathroom-num | Number of times studying after dinner and then going back to the dormitory | No | 21 |
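Several features in the table are simple aggregates over a student's monthly records. The sketch below computes a few of them under stated assumptions: library and classroom boiled-water swipes are treated as study visits, and a sequence such as Lunch-study-num is counted when a lunch record is immediately followed by a study record on the same day. Both rules, the record format and the sample records are hypothetical interpretations of the feature explanations, not the authors' exact definitions.

```python
from datetime import datetime

MEALS = {"Breakfast", "Lunch", "Dinner"}
STUDY_ACTIONS = {"Library", "Classroom boiled water"}  # assumed study locations


def extract_features(records):
    """Compute a few monthly features for ONE student.

    records: list of (datetime, action, amount_cents) tuples (hypothetical format).
    """
    records = sorted(records)
    features = {
        "paid-days": len({t.date() for t, _, _ in records}),
        "total-month-money": sum(amount for _, _, amount in records),  # RMB cents
        "total-meals": sum(1 for _, action, _ in records if action in MEALS),
        "study-actual": sum(1 for _, action, _ in records if action in STUDY_ACTIONS),
        "lunch-study-num": 0,
        "dinner-study-num": 0,
    }
    # Sequence patterns: a meal immediately followed by a study record on the same day.
    for (t1, a1, _), (t2, a2, _) in zip(records, records[1:]):
        if t1.date() == t2.date() and a2 in STUDY_ACTIONS:
            if a1 == "Lunch":
                features["lunch-study-num"] += 1
            elif a1 == "Dinner":
                features["dinner-study-num"] += 1
    return features


# Hypothetical records for one student over two days.
records = [
    (datetime(2018, 5, 2, 9, 18), "Breakfast", 200),
    (datetime(2018, 5, 2, 11, 47), "Lunch", 400),
    (datetime(2018, 5, 2, 13, 5), "Library", 100),
    (datetime(2018, 5, 2, 16, 52), "Dinner", 1500),
    (datetime(2018, 5, 3, 12, 0), "Lunch", 300),
    (datetime(2018, 5, 3, 18, 0), "Dinner", 500),
    (datetime(2018, 5, 3, 19, 30), "Classroom boiled water", 20),
]
print(extract_features(records))
```

Requiring both records to fall on the same day keeps a late dinner from being paired with the next morning's study visit.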

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Improved self-attention graph neural network | 84.86% | 94.84% | 84.86% | 91.81% |
| Topology adaptive graph convolutional network (TAGConv GNN) [57] | 68.57% | 62.60% | 68.57% | 64.88% |
| GraphSAGE convolutional network (SAGEConv GNN) [58] | 65.76% | 65.29% | 70.29% | 67.04% |
| Simplified graph convolutional network (SGConv GNN) [59] | 70.43% | 66.19% | 70.43% | 67.71% |
| Logistic regression [60] | 61.14% | 81.39% | 61.14% | 66.64% |
| KNN [61] | 84.00% | 76.57% | 84.00% | 78.44% |
| Decision tree [62] | 76.00% | 77.00% | 76.00% | 77.00% |

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Improved self-attention graph neural network | 79.43% | 97.20% | 79.43% | 87.28% |
| Topology adaptive graph convolutional network (TAGConv GNN) [57] | 64.71% | 62.44% | 64.71% | 62.99% |
| GraphSAGE convolutional network (SAGEConv GNN) [58] | 66.06% | 65.91% | 65.86% | 65.43% |
| Simplified graph convolutional network (SGConv GNN) [59] | 67.00% | 63.51% | 67.00% | 64.56% |
| Logistic regression [60] | 48.29% | 72.80% | 48.29% | 53.49% |
| KNN [61] | 79.14% | 68.93% | 79.14% | 68.00% |
| Decision tree [62] | 68.05% | 67.16% | 68.09% | 68.17% |
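In both results tables, recall equals accuracy for most models. That is what a support-weighted average of per-class recall yields, because the weights cancel the per-class denominators: Σ_c (n_c / n)(TP_c / n_c) = Σ_c TP_c / n, which is accuracy. The section does not state which averaging was used, so weighted averaging is an assumption here. Under it, the weighted F1 score is an average of per-class F1 values and need not equal the harmonic mean of the weighted precision and recall. The following sketch computes these metrics from scratch on toy labels (hypothetical data):

```python
def weighted_metrics(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall and F1."""
    n = len(y_true)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    precision = recall = f1 = 0.0
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        n_pred = sum(p == c for p in y_pred)
        n_true = sum(t == c for t in y_true)          # class support
        p_c = tp / n_pred if n_pred else 0.0
        r_c = tp / n_true if n_true else 0.0
        f_c = 2 * p_c * r_c / (p_c + r_c) if p_c + r_c else 0.0
        weight = n_true / n                           # support weight
        precision += weight * p_c
        recall += weight * r_c
        f1 += weight * f_c
    return accuracy, precision, recall, f1


# Toy binary labels (hypothetical).
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1]
acc, prec, rec, f1 = weighted_metrics(y_true, y_pred)
print(f"accuracy={acc:.4f} precision={prec:.4f} recall={rec:.4f} f1={f1:.4f}")
# accuracy=0.8333 precision=0.8667 recall=0.8333 f1=0.8148
```

Here recall equals accuracy (0.8333), while the weighted F1 (0.8148) differs from the harmonic mean of the weighted precision and recall (about 0.8497). For larger experiments, scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average="weighted")` computes the same weighted quantities.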

| Behavioural Pattern Sequence | Average Times of Outstanding Students | Average Times of Lagging Students | Ratio (Outstanding/Lagging) | Node ID |
|---|---|---|---|---|
| Study-actual | 5.3516 | 2.2573 | 2.3708 | 0 |
| Paid-days | 29.5463 | 27.5556 | 1.0722 | 2 |
| Getting-up-num | 10.7108 | 4.9357 | 2.1701 | 3 |
| Dinner-dormitory-num | 8.3119 | 6.0819 | 1.3667 | 13 |
| Breakfast-study-num | 1.2968 | 0.4269 | 3.0377 | 14 |
| Lunch-study-num | 1.0605 | 0.3275 | 3.2383 | 15 |
| Dinner-study-num | 0.7278 | 0.2982 | 2.4402 | 16 |
| Classroom-lunch-or-dinner-num | 1.8204 | 0.8655 | 2.1033 | 18 |
| Breakfast-study-lunch-num | 0.7675 | 0.2690 | 2.8530 | 19 |
| Dinner-study-dorm-bathroom-num | 0.2287 | 0.0526 | 4.4359 | 21 |
| Total-meals | 56.3516 | 42.7252 | 1.3189 | 26 |
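The ratio column is the average count for outstanding students divided by that for lagging students, so sorting by it ranks behaviours by how strongly they separate the two groups. A minimal sketch using a few rows copied from the table (the `rank_by_ratio` helper is hypothetical):

```python
# (feature, node ID, average for outstanding students, average for lagging students)
ROWS = [
    ("Study-actual", 0, 5.3516, 2.2573),
    ("Getting-up-num", 3, 10.7108, 4.9357),
    ("Breakfast-study-num", 14, 1.2968, 0.4269),
    ("Lunch-study-num", 15, 1.0605, 0.3275),
    ("Total-meals", 26, 56.3516, 42.7252),
]


def rank_by_ratio(rows):
    """Return (feature, node_id, ratio) sorted by descending outstanding/lagging ratio."""
    scored = [(name, node, outstanding / lagging)
              for name, node, outstanding, lagging in rows]
    return sorted(scored, key=lambda row: row[2], reverse=True)


for name, node, ratio in rank_by_ratio(ROWS):
    print(f"{name:<22} node {node:>2}  ratio {ratio:.4f}")
```

Recomputed ratios may differ from the table in the last digit, because the published averages are themselves rounded.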


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, F.; Qu, S. Data Mining of Students’ Consumption Behaviour Pattern Based on Self-Attention Graph Neural Network. Appl. Sci. 2021, 11, 10784. https://doi.org/10.3390/app112210784
