VRChem: A Virtual Reality Molecular Builder

Abstract

1. Introduction

1.1. Immersive Virtual Reality

1.2. Virtual Reality as an Educational Tool

1.3. Existing Molecular Modeling VR Applications

2. Implementation

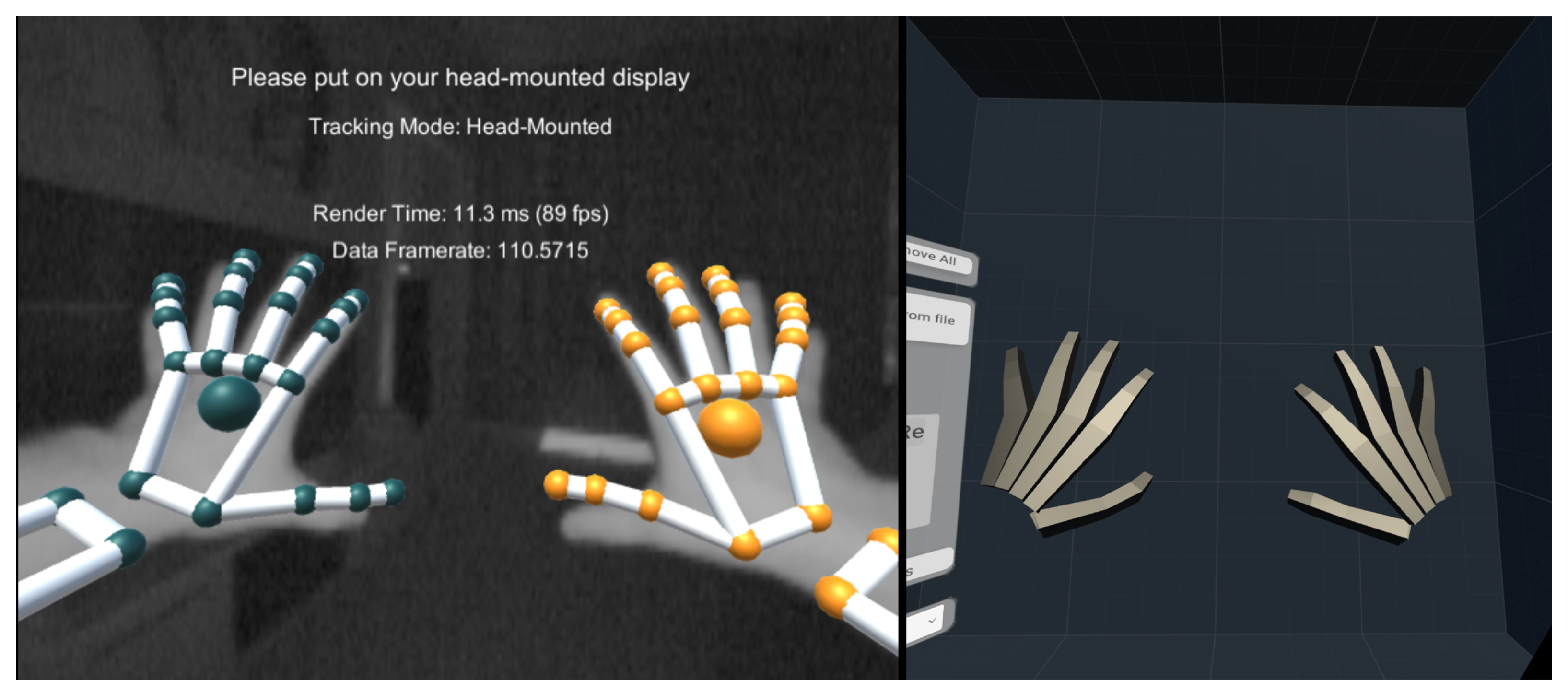

2.1. Hardware

2.2. Design

- A quick and simple system for building organic molecules. The modeling mechanics should conform to those of common modeling software so that users can learn them quickly.

- A simple system for molecular editing: move atoms, and adjust bond lengths, rotational angles and dihedral angles.

- A mode for measuring bond lengths, rotational angles and dihedral angles.

- Automatic saturation of new atoms following VSEPR theory.

- Edit history with undo and redo.

- The ability to save and load molecular models in common file formats.

- Coarse real-time structure optimization using classical force field-based methods.

- Animations and clean visual appearance for enjoyable VR use.
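The measuring mode in the list above reduces to three geometric quantities computed from atomic coordinates. The following is a minimal Python sketch of those calculations, not code taken from VRChem: the function names are hypothetical, atoms are assumed to be plain (x, y, z) tuples, and "rotational angle" is assumed here to mean the angle between two bonds that share an atom.

```python
import math


def _sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def bond_length(p1, p2):
    """Distance between two atoms (same unit as the input coordinates)."""
    return math.dist(p1, p2)


def bond_angle(p1, p2, p3):
    """Angle p1-p2-p3 in degrees, measured at the central atom p2."""
    u = _sub(p1, p2)
    v = _sub(p3, p2)
    cos_t = _dot(u, v) / (math.sqrt(_dot(u, u)) * math.sqrt(_dot(v, v)))
    # Clamp to [-1, 1] to guard against floating-point round-off.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))


def dihedral_angle(p1, p2, p3, p4):
    """Signed dihedral angle p1-p2-p3-p4 in degrees, in (-180, 180]."""
    b1 = _sub(p2, p1)
    b2 = _sub(p3, p2)
    b3 = _sub(p4, p3)
    n1 = _cross(b1, b2)  # normal of the plane through p1, p2, p3
    n2 = _cross(b2, b3)  # normal of the plane through p2, p3, p4
    x = _dot(n1, n2)
    y = math.sqrt(_dot(b2, b2)) * _dot(b1, n2)
    return math.degrees(math.atan2(y, x))


if __name__ == "__main__":
    # Four hypothetical atom positions, arbitrary length unit.
    a, b, c, d = (1, 0, 0), (0, 0, 0), (0, 0, 1), (0, 1, 1)
    print(bond_length(a, b), bond_angle(a, b, c), dihedral_angle(a, b, c, d))
```

Using `atan2` for the dihedral keeps the sign of the torsion, which an `acos`-based formula would lose. Collinear atoms make the plane normals vanish, so a real measuring tool would need to handle that case separately.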

2.3. Software Structure

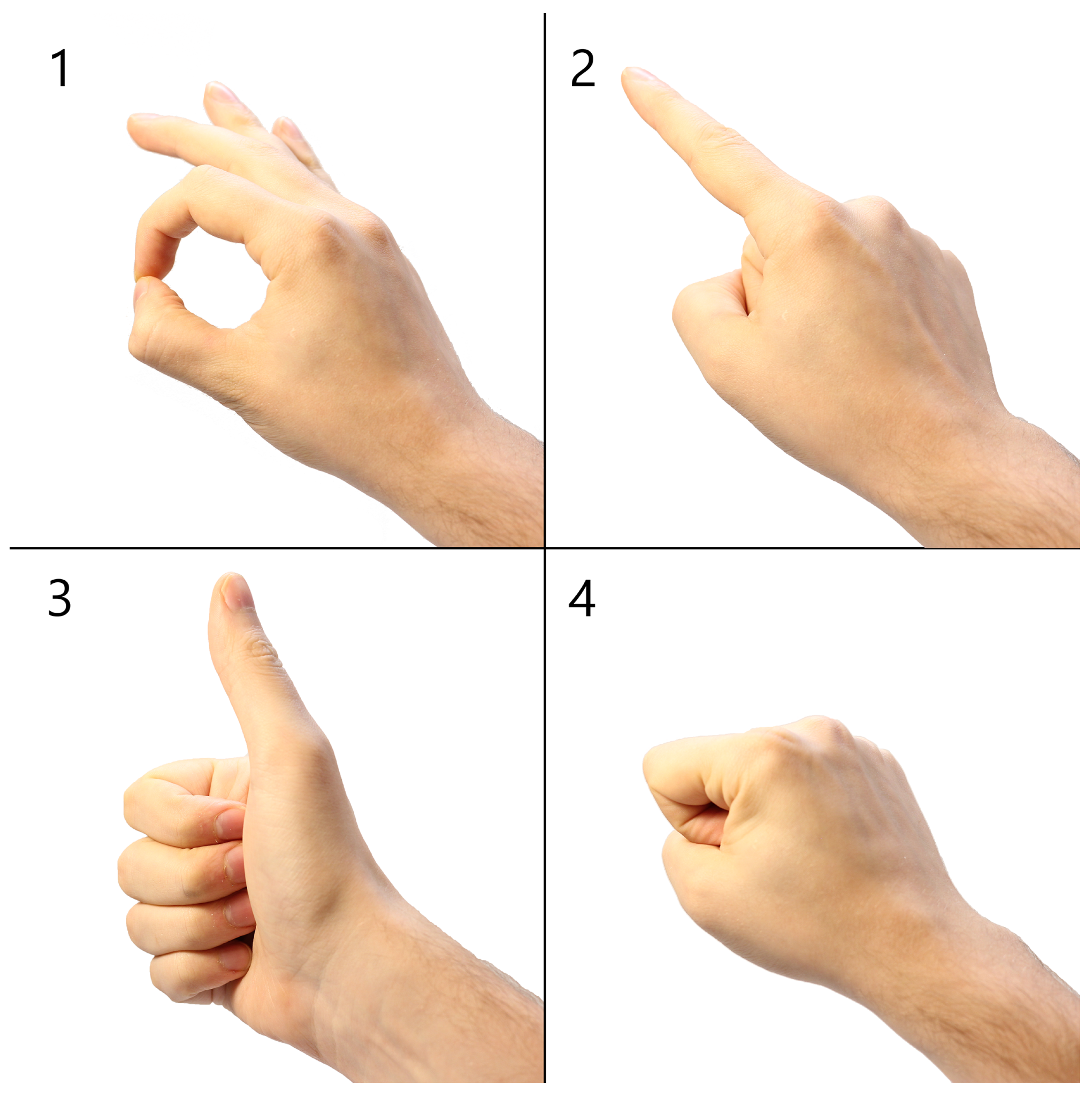

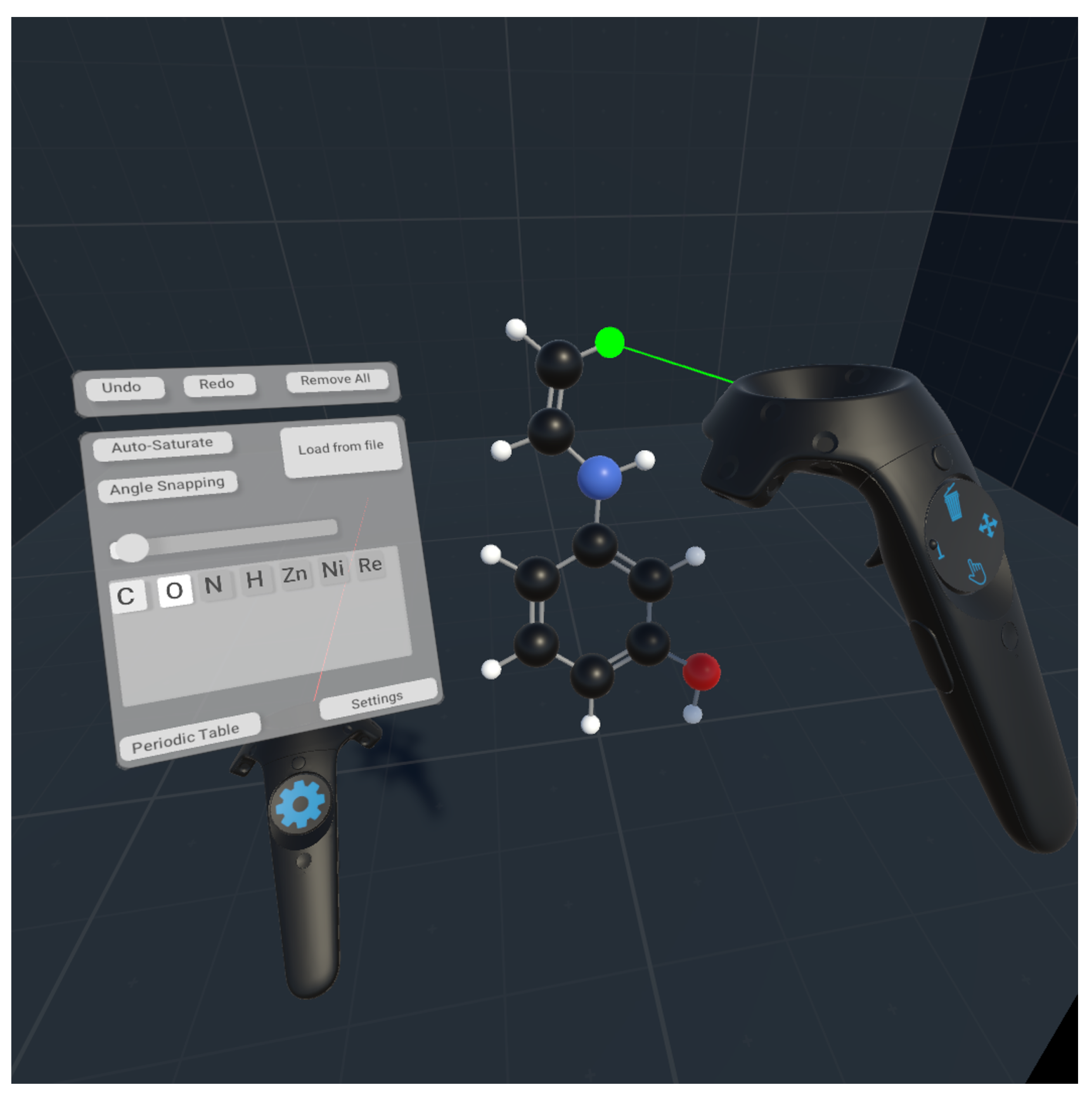

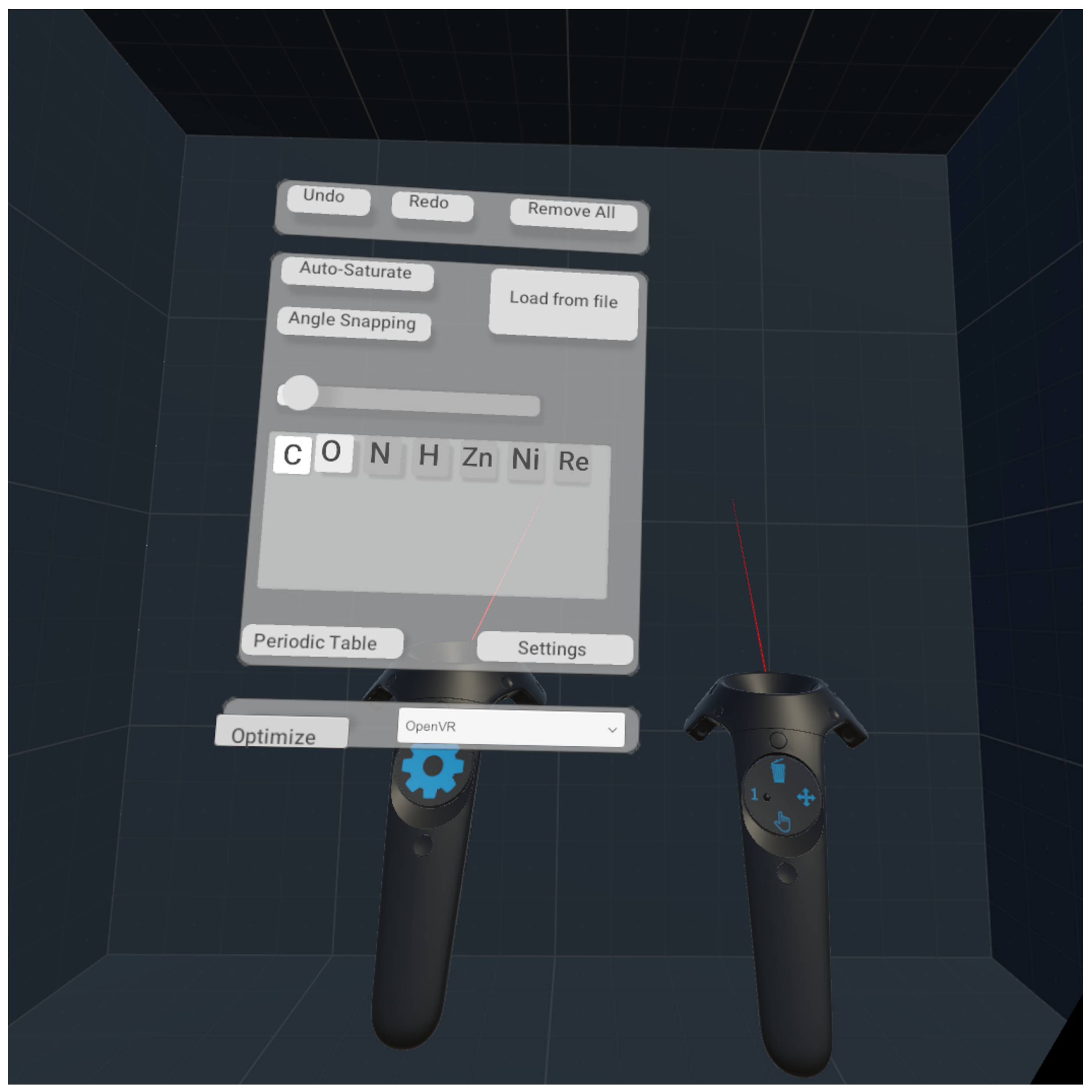

2.4. User Experience

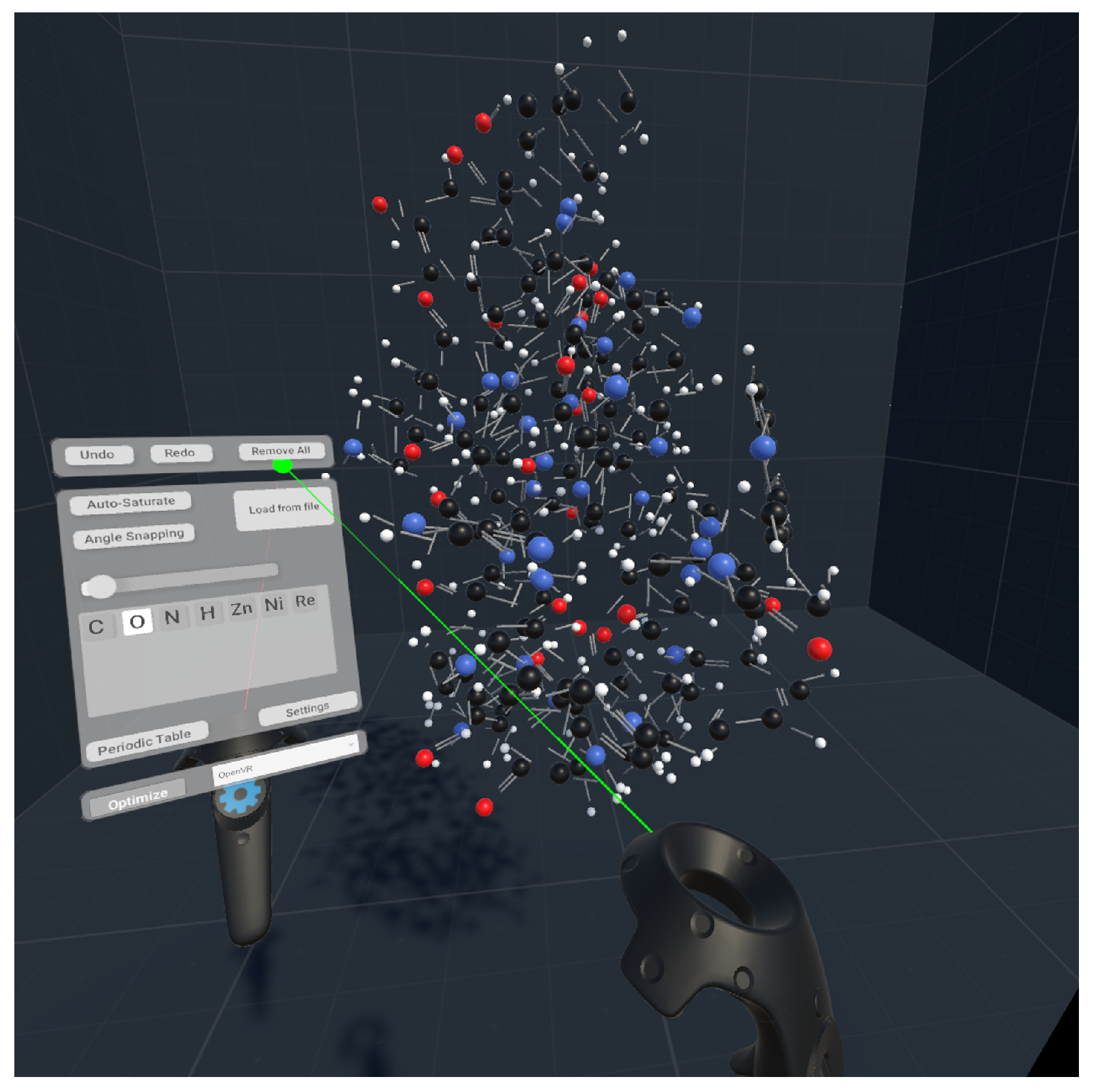

2.4.1. Application Menu

2.4.2. Functions and Usage

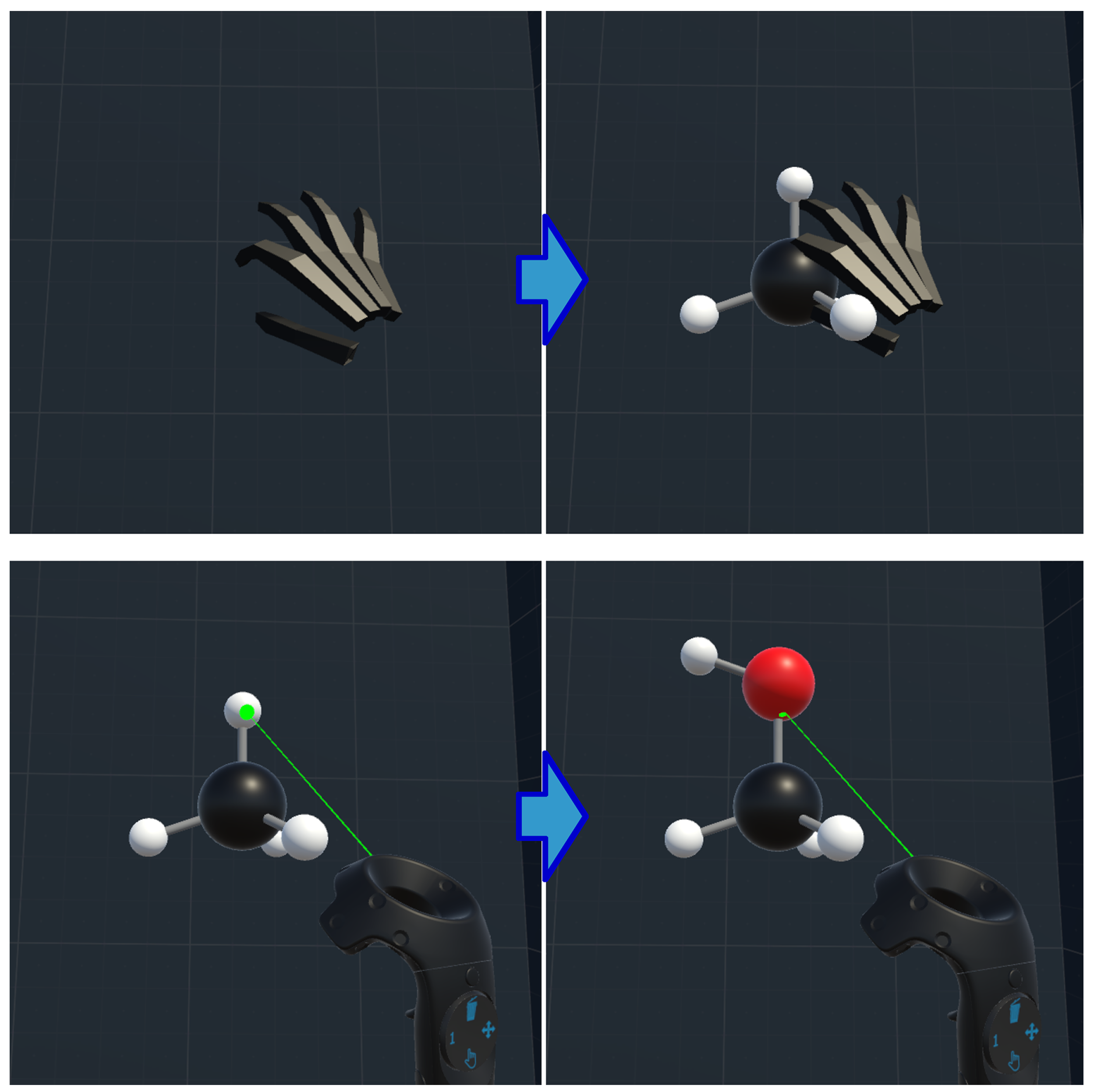

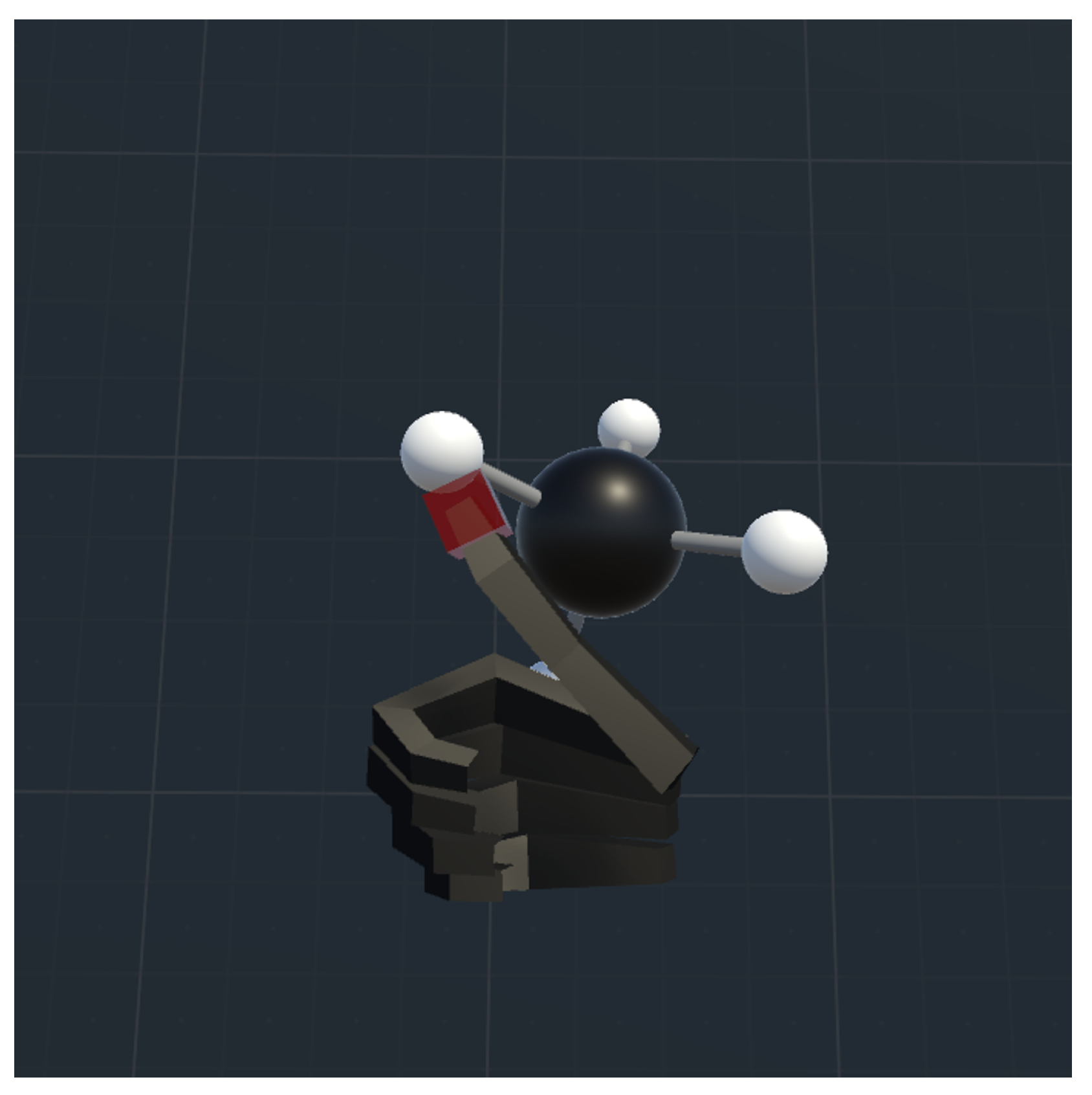

Atom and Bond Creation and Removal

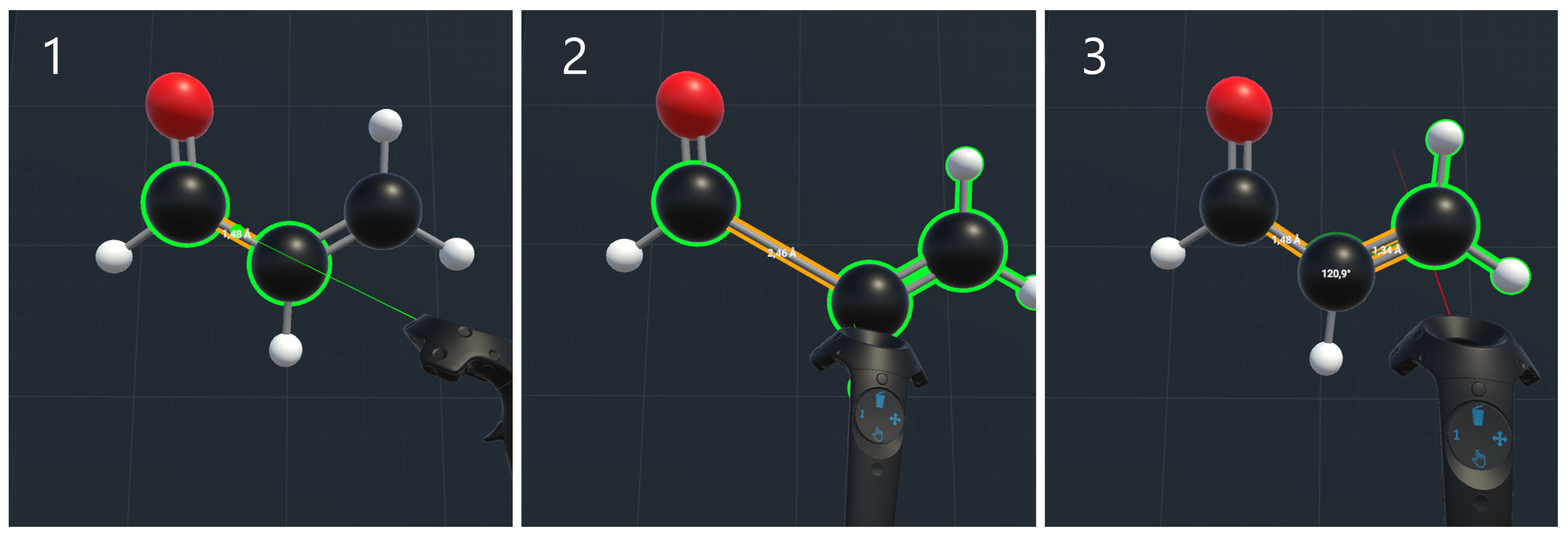

Editing and Measuring the Molecule Structure

Scene Rotation

2.4.3. OpenBabel, Energy Minimization and File Handling

3. Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pietikäinen, O.; Hämäläinen, P.; Lehtinen, J.; Karttunen, A.J. VRChem: A Virtual Reality Molecular Builder. Appl. Sci. 2021, 11, 10767. https://doi.org/10.3390/app112210767