The Usefulness of Video Learning Analytics in Small Scale E-Learning Scenarios

Abstract

1. Introduction

2. Literature Review

2.1. Educational Video

2.2. Learning Analytics and Educational Data Mining to Enhance the Teaching/Learning Process

2.3. Clickstream Data Visualization

3. Methods and Materials

3.1. Research Questions and Research Design

- RQ1. Are data generated by students watching educational videos perceived as useful by teachers in small scale learning scenarios?

- RQ2. Is there a minimum number of video playbacks that must be recorded for the presented data to be perceived as useful by teachers to enhance the learning process? If so, what is that minimum?

3.2. Context

3.3. Participants, Study Procedure and Instruments

3.4. Data Analysis

- Have you found the graphs corresponding to the five single playbacks with the most events useful?

- Would you be interested in having those graphs for videos you use in your teaching activity?

- Is there any information in those graphs that would lead you to take any action in your teaching activity related to those videos? If so, please state which information and which actions.

- On a scale from 1 (very hard to read) to 5 (very easy to read), how easy to read are these graphs?

- Can you think of any improvements we could make to the graphs you have been shown? If so, which?

4. Results

4.1. Summary of the Obtained Results

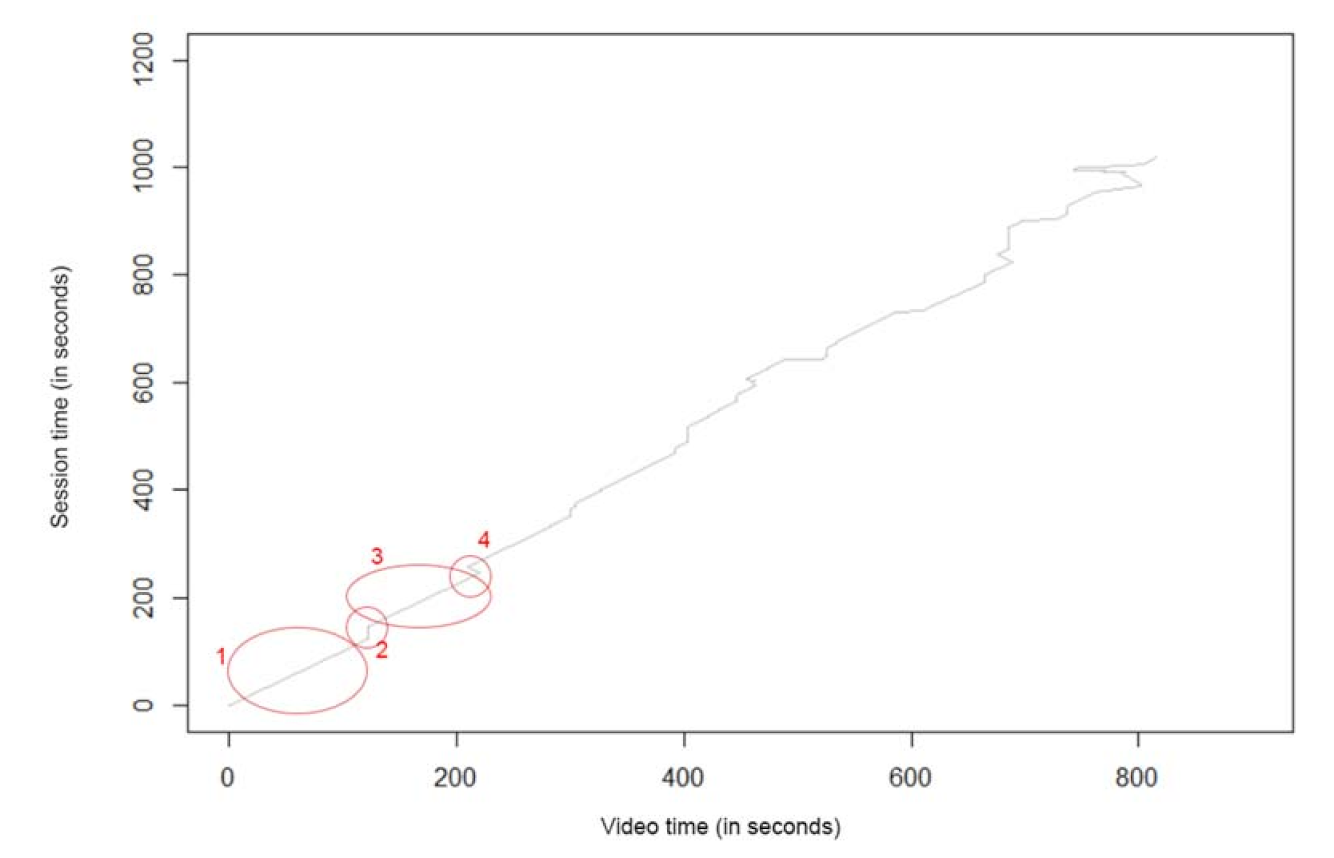

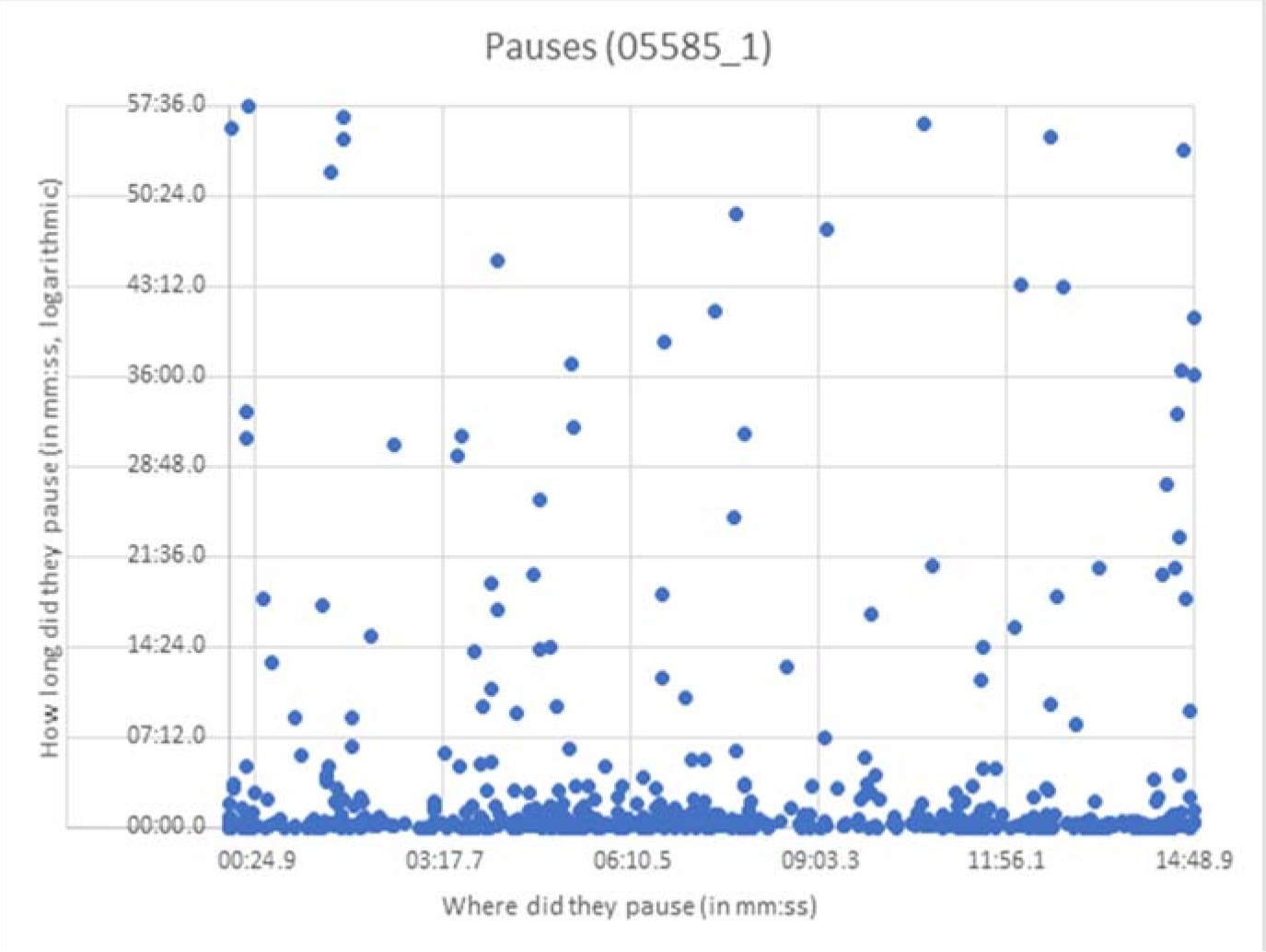

- “I don’t think the video should be changed for this, but I interpret these two pause patterns (one towards the beginning and one towards the end) as indicating that the students have stopped in these two areas to reproduce with their practice kits what is being shown in the video (the cluster of pauses at the beginning: testing the tracks of the breadboard; the cluster of pauses at the end: assembly of the circuit). The absence of pauses in the central area can be interpreted as that this part of the video hardly provides any information and, perhaps, could be shortened to obtain a shorter but just as informative video.”

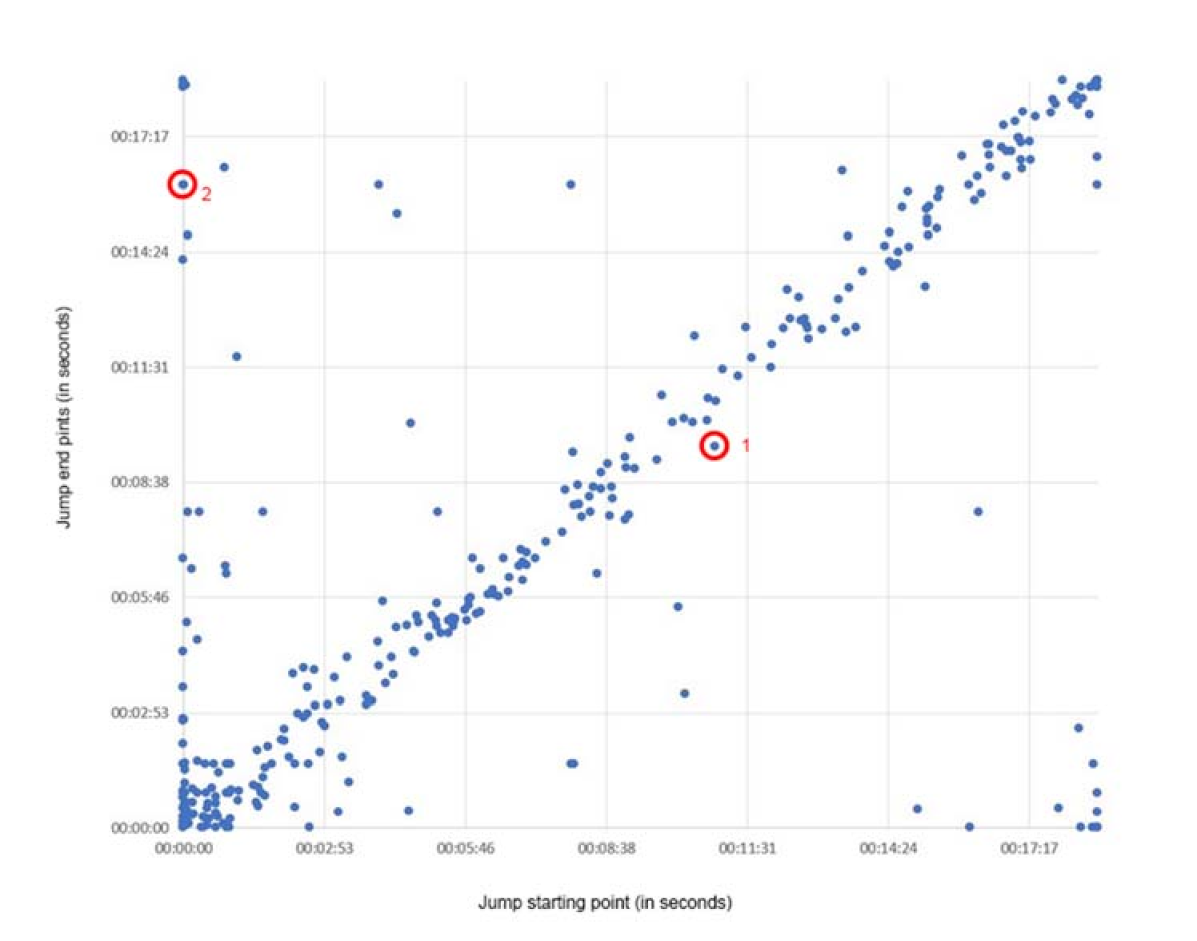

- “I see a lot more jumping forward than backward. This may be indicative that the video is too long, or perhaps that it has parts of little interest or that provide redundant information (e.g., once you have shown how to connect one or two components on the breadboard, the connection procedures of the rest of the components are exactly the same, and maybe they could be elided and directly show the result of the connection) and that they last too long.”

- A lecturer in the non-traditional database course (in which an index was provided allowing students to jump to sections in the video) spotted a number of students jumping to points in the video corresponding to certain slides that had not been in the index, and suggested that those slides may be included in the index for future students. Additionally, she suggested that aggregations of jumps may change from first views to second views of a video, and that if this is the case, the provided index could change dynamically to better help students navigate the video.
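The pause clusters that lecturers describe in the comments above could be surfaced numerically by binning pause positions along the video timeline. The following is a minimal sketch, not the authors' tooling: the function name `pause_histogram`, the 30-second bin width, and the sample positions are all illustrative assumptions, and the sample values are made up rather than taken from the study.

```python
from collections import Counter


def pause_histogram(pause_positions, bin_seconds=30):
    """Count pauses per time bin; keys are bin start times in seconds."""
    counts = Counter(int(p // bin_seconds) * bin_seconds for p in pause_positions)
    return dict(sorted(counts.items()))


# Made-up pause positions (seconds into the video), for illustration only.
sample_pauses = [12.0, 18.5, 25.1, 40.2, 291.0, 305.4, 310.9, 322.3]

histogram = pause_histogram(sample_pauses)
for start, n in histogram.items():
    # One '#' per pause gives a rough text rendering of the clusters.
    print(f"{start:>4}-{start + 30:<4} s: {'#' * n}")
```

With data shaped like this, bins at the start and end of the video stand out while the middle stays empty, which is the kind of pattern the first lecturer interpreted as students stopping to reproduce the steps with their practice kits.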

4.2. Answers to the Research Questions

5. Discussion

6. Conclusions and Future Lines of Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Course | Number of Videos | Video Durations | Accesses | Sessions Longer than 10 s | Recorded Events |
|---|---|---|---|---|---|
| Database design | 4 (2 + 2) | 8:34–15:10 | 1208 | 572 (47.4%) | 17,181 |
| Introductory statistics | 4 | 7:11–12:07 | 408 | 320 (78.4%) | 6239 |
| Electronics | 8 (4 + 4) | 4:01–7:04 | 334 | 243 (72.8%) | 2592 |
| Non-traditional databases | 20 | 6:36–1:00:30 | 6741 | 2205 (32.7%) | 44,987 |
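The percentages in the table above appear to be the share of accesses that turned into sessions longer than 10 seconds. A short sketch that recomputes them from the table's own figures follows; the dictionary layout and the helper name `share_of_long_sessions` are assumptions made for illustration.

```python
# Accesses and sessions longer than 10 s, copied from the table above.
# The data layout is an illustrative assumption.
courses = {
    "Database design": (1208, 572),
    "Introductory statistics": (408, 320),
    "Electronics": (334, 243),
    "Non-traditional databases": (6741, 2205),
}


def share_of_long_sessions(accesses: int, long_sessions: int) -> float:
    """Percentage of accesses that became sessions longer than 10 seconds."""
    return round(100 * long_sessions / accesses, 1)


shares = {name: share_of_long_sessions(a, s) for name, (a, s) in courses.items()}
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```

The recomputed values agree with the percentages reported in the table to one decimal place.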

| Page Accessed | Video | Timestamp | Action | Parameters |
|---|---|---|---|---|
| Tue, 10 March 2019 11:27:44 GMT | 05585_01 | 11:27:44 GMT | page loaded | |
| | 05585_01 | 11:27:49 GMT | play | 0 |
| | 05585_01 | 11:27:50 GMT | seek | 77.867 |
| | 05585_01 | 11:27:51 GMT | seek | 196.011 |
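To illustrate how rows like the ones in the event table above might be processed, the sketch below parses them into records and labels each seek as a forward or backward jump. It is a hedged example, not the authors' implementation: the `Event` class and the `parse_row` and `classify_seeks` helpers are hypothetical, and the classification rests on the stated assumption that the video plays at normal speed between events, so the position just before a seek is estimated from elapsed wall-clock time.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Event:
    video: str
    timestamp: datetime          # wall-clock time of the event
    action: str                  # "page loaded", "play" or "seek"
    parameter: Optional[float]   # video position in seconds, when present


def parse_row(video, clock, action, parameter=""):
    """Turn one table row into an Event (hypothetical helper)."""
    ts = datetime.strptime(clock, "%H:%M:%S GMT")
    return Event(video, ts, action, float(parameter) if parameter else None)


def classify_seeks(events):
    """Label each seek 'forward' or 'backward'.

    Assumption: playback runs at normal speed between events, so the position
    just before a seek is the last known position plus elapsed wall-clock time.
    Pauses are not handled because the sample rows contain none.
    """
    labels = []
    position = None
    last_time = None
    for ev in events:
        if ev.action == "play":
            position, last_time = ev.parameter, ev.timestamp
        elif ev.action == "seek" and position is not None:
            estimated = position + (ev.timestamp - last_time).total_seconds()
            labels.append("forward" if ev.parameter > estimated else "backward")
            position, last_time = ev.parameter, ev.timestamp
    return labels


# Rows copied from the event table above.
rows = [
    ("05585_01", "11:27:44 GMT", "page loaded"),
    ("05585_01", "11:27:49 GMT", "play", "0"),
    ("05585_01", "11:27:50 GMT", "seek", "77.867"),
    ("05585_01", "11:27:51 GMT", "seek", "196.011"),
]
events = [parse_row(*r) for r in rows]
labels = classify_seeks(events)
print(labels)
```

Under that assumption, both seeks in the sample land well beyond the estimated playback position, so both are labelled as forward jumps.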

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Córcoles, C.; Cobo, G.; Guerrero-Roldán, A.-E. The Usefulness of Video Learning Analytics in Small Scale E-Learning Scenarios. Appl. Sci. 2021, 11, 10366. https://doi.org/10.3390/app112110366