Deep Learning-Based Methods for Prostate Segmentation in Magnetic Resonance Imaging

Abstract

Featured Application

1. Introduction

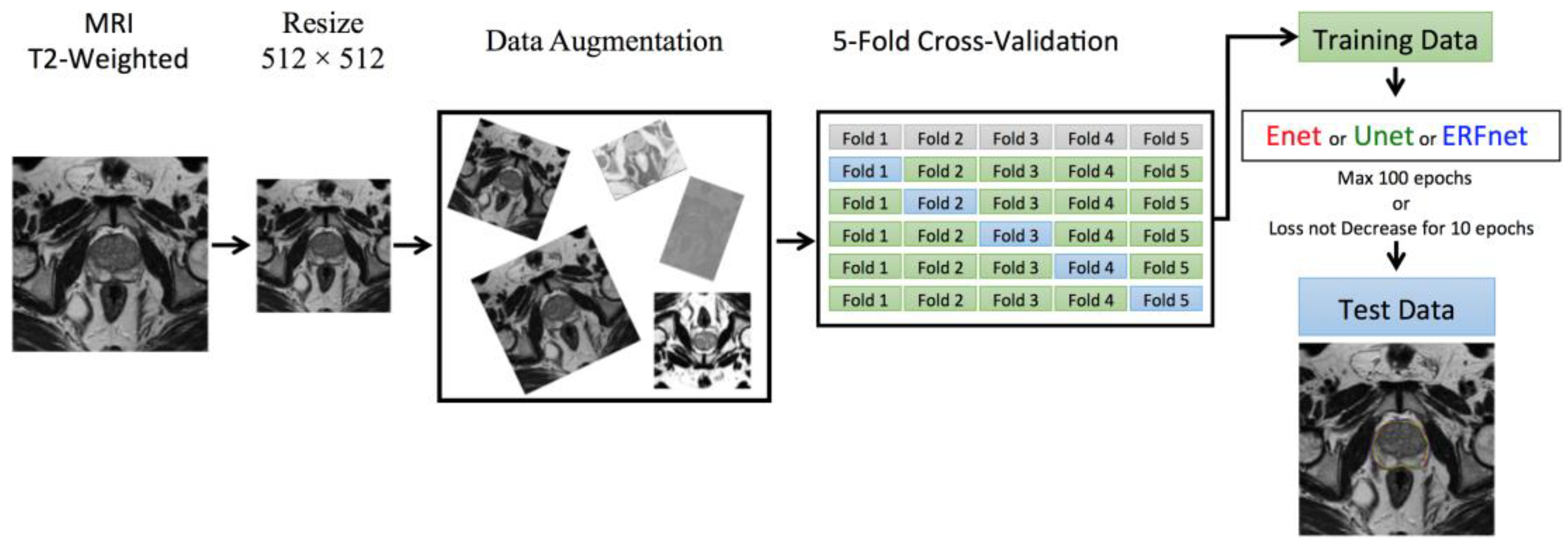

2. Materials and Methods

2.1. Experimental Setup

2.2. Deep Learning Models

2.3. Training Methodology

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Repetition Time (ms) | Echo Time (ms) | Flip Angle (Degrees) | Signal Averages | Signal-to-Noise Ratio |
|---|---|---|---|---|---|
| T2w TSE | 3091 | 100 | 90 | 3 | 1 |

| Layer (Type) | Output Shape | Number of Parameters |
|---|---|---|
| input_1 (InputLayer) | (None, 512, 512, 1) | 0 |
| conv2d_1 (Conv2D) | (None, 256, 256, 15) | 150 |
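The parameter count and output shape of `conv2d_1` can be checked with the usual convolution formulas. The sketch below is only an illustration: the 3×3 kernel, stride of 2, padding of 1, and bias term are assumptions that happen to reproduce the 150 parameters and the 256 × 256 output in the table; the table itself does not state them, and the helper names are hypothetical.

```python
def conv2d_param_count(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Trainable parameters of a standard 2D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * filters
    biases = filters if use_bias else 0
    return weights + biases


def conv_output_size(input_size, kernel, stride, padding):
    """Spatial output size of a convolution along one dimension."""
    return (input_size + 2 * padding - kernel) // stride + 1


# Assumed hyperparameters (not given in the table): 3x3 kernel, stride 2, padding 1.
params = conv2d_param_count(kernel_h=3, kernel_w=3, in_channels=1, filters=15)
out_size = conv_output_size(input_size=512, kernel=3, stride=2, padding=1)

print(params)    # 150, matching the conv2d_1 row
print(out_size)  # 256, matching the (None, 256, 256, 15) output shape
```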

| Model | Statistic | Sensitivity | PPV | DSC | VOE | VD |
|---|---|---|---|---|---|---|
| ENet | Mean | 93.06% | 89.25% | 90.89% | 16.50% | 4.53% |
| ENet | ±std | 6.37% | 3.94% | 3.87% | 5.86% | 9.43% |
| ENet | ±CI (95%) | 1.36% | 0.84% | 0.82% | 1.24% | 2.00% |
| UNet | Mean | 88.89% | 91.89% | 90.14% | 17.66% | 3.16% |
| UNet | ±std | 7.61% | 3.31% | 4.69% | 6.91% | 9.36% |
| UNet | ±CI (95%) | 1.62% | 0.70% | 1.00% | 1.47% | 1.99% |
| ERFNet | Mean | 89.93% | 85.44% | 87.18% | 22.18% | 5.70% |
| ERFNet | ±std | 10.92% | 5.43% | 6.44% | 9.61% | 14.72% |
| ERFNet | ±CI (95%) | 2.32% | 1.16% | 1.37% | 2.04% | 3.13% |
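For readers unfamiliar with the column headings, the following sketch computes the five metrics for a single predicted mask against a reference mask. It uses the standard overlap definitions (sensitivity, positive predictive value, Dice similarity coefficient, volume overlap error, and a signed volumetric difference); whether the study used exactly these forms, in particular the sign convention for VD, is an assumption, and `overlap_metrics` is a hypothetical helper name.

```python
def overlap_metrics(pred, truth):
    """Overlap metrics for two flat sequences of 0/1 voxel labels."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)      # true positives
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)  # false positives
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)  # false negatives
    if tp + fn == 0 or tp + fp == 0:
        raise ValueError("both masks must contain at least one foreground voxel")
    return {
        "sensitivity": tp / (tp + fn),
        "ppv": tp / (tp + fp),
        "dsc": 2 * tp / (2 * tp + fp + fn),
        # VOE is one minus the Jaccard index (intersection over union).
        "voe": 1 - tp / (tp + fp + fn),
        # Signed relative difference between predicted and reference volumes.
        "vd": ((tp + fp) - (tp + fn)) / (tp + fn),
    }


# Toy example: 8 voxels, one missed and one spurious foreground voxel.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0]
metrics = overlap_metrics(pred, truth)
print(metrics)
```

In this toy case the predicted and reference volumes are equal, so VD is zero even though the overlap is imperfect, which is why VD is usually read alongside DSC and VOE rather than on its own.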

| ANOVA | F Value | F Critical Value | p-Value |
|---|---|---|---|
| ENet vs. ERFNet | 20.70407668 | 3.897407169 | 0.000010236 |
| ERFNet vs. UNet | 11.69135829 | 3.897407169 | 0.000788084 |
| ENet vs. UNet | 1.301554482 | 3.897407169 | 0.255553164 |
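The table is read by comparing each F value with the critical value: a comparison is significant at the chosen level when F exceeds it. As a minimal sketch, the one-way ANOVA F statistic can be computed from per-case scores as below. The function name and the toy scores are hypothetical, and the per-case data behind the table are not reproduced here; only the F and critical values in the final loop come from the table.

```python
from statistics import fmean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the samples around their own group mean.
    ss_within = sum(sum((x - fmean(g)) ** 2 for x in g) for g in groups)
    df_between, df_within = k - 1, n_total - k
    if ss_within == 0:
        raise ValueError("within-group variance is zero; F is undefined")
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Toy scores for two hypothetical models (not data from the study).
f_value, df_b, df_w = one_way_anova_f([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
print(f_value, df_b, df_w)

# Decision rule applied to the F values reported in the table.
f_critical = 3.897407169
reported = {
    "ENet vs. ERFNet": 20.70407668,
    "ERFNet vs. UNet": 11.69135829,
    "ENet vs. UNet": 1.301554482,
}
significant = {name: f > f_critical for name, f in reported.items()}
print(significant)
```

Under this rule, ERFNet differs significantly from both ENet and UNet, while the difference between ENet and UNet is not significant, in agreement with the reported p-values.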

| Model Name | Trainable Parameters | Non-Trainable Parameters | Size on Disk | CPU Inference Time/Dataset | GPU Inference Time/Dataset |
|---|---|---|---|---|---|
| ENet | 362,992 | 8,352 | 5.8 MB | 6.17 s | 1.07 s |
| ERFNet | 2,056,440 | 0 | 25.3 MB | 8.59 s | 1.03 s |
| UNet | 5,403,874 | 0 | 65.0 MB | 42.02 s | 1.57 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Comelli, A.; Dahiya, N.; Stefano, A.; Vernuccio, F.; Portoghese, M.; Cutaia, G.; Bruno, A.; Salvaggio, G.; Yezzi, A. Deep Learning-Based Methods for Prostate Segmentation in Magnetic Resonance Imaging. Appl. Sci. 2021, 11, 782. https://doi.org/10.3390/app11020782
