1. Introduction

For a long time, engineers have been using different techniques to control systems without directly supervising them. Various types of mechanical control systems were perfected by their creators, reaching their most advanced forms in windmills, steam engines, locomotives, cars and entire factories. The development of science, however, has opened up new technical possibilities, enabling the creation of decision-making systems based on, e.g., biological, chemical, quantum and electronic systems. Thanks to advanced information processing capabilities, we are no longer dealing only with simple control systems but with very complex systems able to acquire information, collect it, transform it into knowledge and even process that knowledge. These capabilities allow us to create increasingly advanced systems, such as banking advisory systems, autonomous cars, meal-cooking robots and robotic vacuum cleaners that clean our apartments automatically. Systems developed and manufactured today are designed to support very specific tasks with a relatively narrow spectrum of applications. They often use deep learning techniques, which achieve very good results, e.g., in image recognition and in generating artificial images or text.

The challenge now is to create general-purpose systems that can both learn about their environment autonomously and perform assigned tasks in diverse environments. General-purpose systems could not be implemented without systems that perform specific tasks, since the latter are the basis for correctly interpreting the data flowing from the sensors, and they make it possible to perform the activities that make up the entire large task.

However, we believe that technological progress has reached a level at which it will be possible to develop an intelligent general-purpose autonomous system and implement it in practical solutions. Such systems would have a wide range of uses: from home applications, in which a robot would perform simple operations such as cooking and cleaning, through applications in industry and agriculture, to applications in the space industry.

Work on general-purpose artificial intelligence has been going on for over 20 years. However, although new proposals and solutions continue to emerge in this area, no significant breakthrough has yet been made that would enable widespread implementation of the proposed technologies. The vast majority of the AI field today is concerned with what might be called “narrow AI”, that is, with creating programs that demonstrate intelligence in one specialized area [1]. There is still a need for general solutions that would constitute a framework enabling, on the one hand, the integration of already developed “narrow AI” solutions and, on the other hand, general problem-solving.

In this article, a new proposal in the field of general-purpose artificial intelligence is discussed. We present a new language that can be used to describe almost any environment in which robots or agents can potentially work. In addition, the language makes it possible to give meaning to objects observed in the environment and to plan tasks using these objects.

1.1. Literature Review

At first, artificial intelligence was closely identified with the intelligence present in humans or animals. It was associated with self-awareness, i.e., having thoughts, feelings and worries, and understanding the situation. However, such a definition makes artificial intelligence immeasurable (e.g., some systems can imitate human emotions very well through their behavior). Definitions of artificial intelligence evolve as new systems emerge. To assess whether a new system should be considered intelligent, the Universal Evaluation of Behavioral Features can be used [2]. Another approach, which assumes that the definition should be simple and lead to fruitful research, defines intelligence as “adaptation with insufficient knowledge and resources” [3]. Other publications distinguish intelligent systems according to specific criteria: general and narrow, individual and collective, biological and artificial, new and old, distributed and centralized [4]. In yet another approach, artificial intelligence should work with human intelligence in order to complement it [5].

Many artificial intelligence solutions focus on solving one type of task (e.g., image recognition, creating music or driving a vehicle). In addition to such partially intelligent systems, there are attempts to develop general-purpose artificial intelligence. The challenge in this approach is integrating many simpler solutions within one Artificial General Intelligence (AGI) tool [1]. Sometimes, however, solving a complex problem requires integrating many smaller systems; one can then speak of General Collective Intelligence [6,7,8,9]. AGI should be able to anticipate the behaviors or actions that need to be taken to achieve the expected result [10]. Another essential feature of AGI is adapting to changing conditions, i.e., exploiting new features of the environment without forgetting what was learned in previous situations (cumulative learning) [11]. Understanding natural language (drawing conclusions, learning) is also one of the directions of AGI research [12].

Creating an intelligent system that can solve any problem is difficult, which is why systems are designed to address a particular group of tasks, e.g., playing games [13]. A dedicated framework has been created for testing agents (game players), in which new game rules can be described using the Game Description Language (GDL). Tournaments are played between agents in this environment to determine which algorithm best solves problems with changing rules [14].

Another area in which AGI is used is automated planning, which has grown from toy problems to real applications. Automated planning is difficult because it requires knowledge of the structure of the problem, and automated planners do not provide good results in many areas. An overview of research on automated planning can be found in the literature [15,16]. Planning in business is a specific subgroup of planning problems: the objective is to find a procedural way of working for a declaratively described system while optimizing performance measures [17,18]. This can be achieved using Formal State Transition Systems [19]. Automated planning is used in robotics, production, logistics, transport and spaceflight. In intelligent planning, attention needs to be paid to the analysis phase, in which the system can identify and redefine variables and thus increase the accuracy of the model generated by automated planners [20].

The Non-Axiomatic Reasoning System (NARS) is an interesting proposal in the field of AGI. It assumes that the system should be finite and open, work in real time and adapt under insufficient knowledge and resources. NARS introduces a language with experience-grounded semantics: the truth value of a judgment is determined on the basis of previous experience, and the meaning of a term depends on its relationships with other terms [21,22,23].

Systems are sometimes limited by the way they record knowledge about the surrounding environment: the representation is either too restrictive or too complicated. A recording method that is not general enough confines the system to a single task. An important part of such systems is therefore the way they record knowledge about the environment in which the task must be solved. The environment can be a world discovered by a robot using sensors, the policy environment of a strategy game or the operation of a production facility. Many publications have introduced languages for describing the specific contexts in which AGI applications will run.

The Game Description Language is used to describe the rules of a game [24]. It consists of concepts such as term, atomic sentence, literal, datalog rule, dependency graph, model and satisfaction, and the rules of a game are set by defining statements with these concepts. An agent (player) operating in this environment must follow these rules and demonstrate better adaptation to new conditions than the other agents.

Many agent description languages have been created in recent years, e.g., Q [25], JADL [26], ADL [27] and JADEL [28]. Q describes interaction scenarios between agents and users based on external policies. External rules describe the environment in which agents move; these rules can be changed, e.g., in the event of an emergency and evacuation. JADL (JIAC Agent Description Language) describes the agent environment by means of a goal to be achieved and rules [26]. Rules are implemented quite simply: each consists of a condition and two actions, one of which is executed when the condition becomes true and the other when the condition becomes false. In the Architectural Description Language (ADL), the behavioral model consists of eight main project units: agent, knowledge base purpose, ability, beliefs, plan, events, activities and services [27]. An agent needs knowledge of its environment to make good decisions. This knowledge is stored in the agent in the form of one or more knowledge bases that make up its information state. A knowledge base consists of a set of beliefs that the agent has about its environment, and a belief represents the agent’s view of its current environment. JADEL (JADE Language) was created to reduce the complexity of building systems based on the Java Agent DEvelopment Framework (JADE), providing support for implementing agents, behaviors and ontologies. JADEL is intended to enable agents to be used as components [28].
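The JADL rule form described above (a condition plus one action fired when the condition becomes true and another fired when it becomes false) is essentially an edge-triggered rule. The following minimal Python sketch illustrates that semantics only; the class name, the temperature example and the assumption that the condition starts out false are hypothetical and do not reproduce JADL syntax.

```python
from typing import Callable, Dict
from dataclasses import dataclass

State = Dict[str, float]

@dataclass
class EdgeRule:
    """Hypothetical JADL-like rule: one condition, two transition actions."""
    condition: Callable[[State], bool]
    on_true: Callable[[State], None]    # run when the condition becomes true
    on_false: Callable[[State], None]   # run when the condition becomes false
    last: bool = False                  # assumption: the condition starts as false

    def evaluate(self, state: State) -> None:
        current = self.condition(state)
        if current and not self.last:
            self.on_true(state)
        elif not current and self.last:
            self.on_false(state)
        self.last = current

log = []
rule = EdgeRule(
    condition=lambda s: s["temperature"] > 30,
    on_true=lambda s: log.append("fan on"),
    on_false=lambda s: log.append("fan off"),
)
for t in [25, 31, 35, 28, 27]:
    rule.evaluate({"temperature": t})
print(log)  # ['fan on', 'fan off']
```

Because only transitions trigger actions, the repeated readings above 30 and below 30 do not fire the actions again.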

Among the languages that use non-axiomatic knowledge, we can distinguish NARSESE [29], NARS [30] and ALAS [31,32]. NARSESE and NARS are used to build systems with learning ability. Such a system can acquire problem-solving skills from experience, is adaptive and can distinguish between the external environment and internal knowledge. The following elements are defined in these languages: a judgment, i.e., an expression with the value true at the input, which represents a piece of knowledge that the system learns or checks; a question, which the system answers in accordance with its beliefs; and a goal, i.e., an expression to be realized by performing certain operations in accordance with the system’s beliefs. The ALAS language uses non-axiomatic logic for distributed inference among agents.

Planning is a branch of artificial intelligence (AI) that attempts to automate reasoning about plans, above all the reasoning that serves to formulate a plan needed to achieve a specific goal in a given situation. Planning in artificial intelligence is model-based: the planning system takes as input a description of the initial situation, the actions available for changing it and the goal condition, and it creates a plan consisting of those actions that achieve the goal when executed from the initial situation. Planning languages include the Planning Domain Definition Language (PDDL) [33,34] and the Stanford Research Institute Problem Solver (STRIPS) formalism, which has also been applied to multi-agent environments [35,36]. PDDL aims to express the “physics” of the domain, that is, what the predicates are, what actions are possible, what the structure of complex operations is and what the effects of actions are. Most planners also require some kind of “advice” or annotation indicating which actions should be used to achieve a given goal, or in which complex actions and under which circumstances. PDDL does not provide such advice, which makes it a neutral tool that can be used with various planners. As a result of this neutrality, almost all planners need to extend the notation, and they do so in different ways. In the multi-agent setting of STRIPS, each agent tries to achieve its own goals, which usually leads to conflicting objectives. However, there is a group of problems with conflicting goals that can nevertheless be satisfied at the same time, and such problems can be modeled as a STRIPS system. If the STRIPS planning problem is reversible, planning-under-uncertainty methods can be used to solve the inverted problem and then find a plan that solves the multi-agent problem.

Language grounding is another important issue in creating languages for intelligent systems. Grounding means connecting linguistic symbols to perceptual experiences and actions [37]. It can be particularly useful, e.g., in communication between people and robots [38]. Robots can learn to correlate natural language with the physical world that they sense and manipulate [39,40]. Other publications are concerned with teaching robots to recognize objects by their names and attributes and to demonstrate the actions they have learned [41,42].

1.2. Motivation and Goals

The main objective of this work is to present a new General Environment Description Language (GEDL) that can describe almost any environment in which robots or programs can potentially operate independently.

The proposed language differs from the languages and solutions described in Section 1.1. GEDL can be treated more as a framework that determines how reality is perceived and how data and knowledge are organized. We believe that GEDL should make use of the AI techniques developed so far, including those presented in Section 1.1. For example, individuals using GEDL could potentially use the PDDL or STRIPS languages to solve problems. Likewise, other artificial intelligence techniques and tools, such as the deep learning libraries TensorFlow [43], Keras [44] and PyTorch [45], or fuzzy logic [46], can be part of a GEDL-based autonomous system for building intelligent general-purpose individuals.

It is assumed that individuals using our language will have unlimited resources, including memory and computing power. This assumption is the opposite of that commonly used in the agent systems discussed above. We believe that individuals (robots, programs) using the language will have access to cloud computing or will use future technologies.

The use of concepts that give meaning to the observed elements is a novelty that significantly differentiates the proposed language from similar languages previously described in the literature. The way of describing the actions that individuals can take is also of great importance: we do not define how actions are implemented, only the state of the system before and after their execution.

1.3. Paper Organization

The paper is structured as follows. Section 2 introduces the overall concept of the proposed GEDL language. Sections 3, 4, 5, 6 and 7 contain the notation, definitions and examples of the elements of this language. Section 8 presents an example of individual knowledge together with a problem solution. The conclusions are presented in Section 10.

2. Introduction to GEDL Language

In this section, the general assumptions of the GEDL language are described. We present how elements are represented in GEDL and how they are interpreted, which is necessary for defining the individual elements of the language later.

Humans are intelligent beings who are able to build conceptual systems in their minds, learn from mistakes, store knowledge and transfer it to other individuals. When trying to develop a general language for describing the environment that could be used in autonomous general-purpose robots, we can attempt to model it on ourselves by observing how we understand the environment and behave in it. In some ways, we are also bound to recreate our own understanding in these considerations, because in most cases we are not able to sufficiently understand other ways of perceiving the world, e.g., those of animals.

The presented General Environment Description Language helps to describe the environment and thus also to store knowledge about it for the needs of autonomous systems that make decisions and perform tasks in the environment. The language makes it possible to systematize uncertain, vague and sometimes not fully defined knowledge.

The environment can be a fragment of the physical world in which we live or another reality in which information is processed, for example, a computer game.

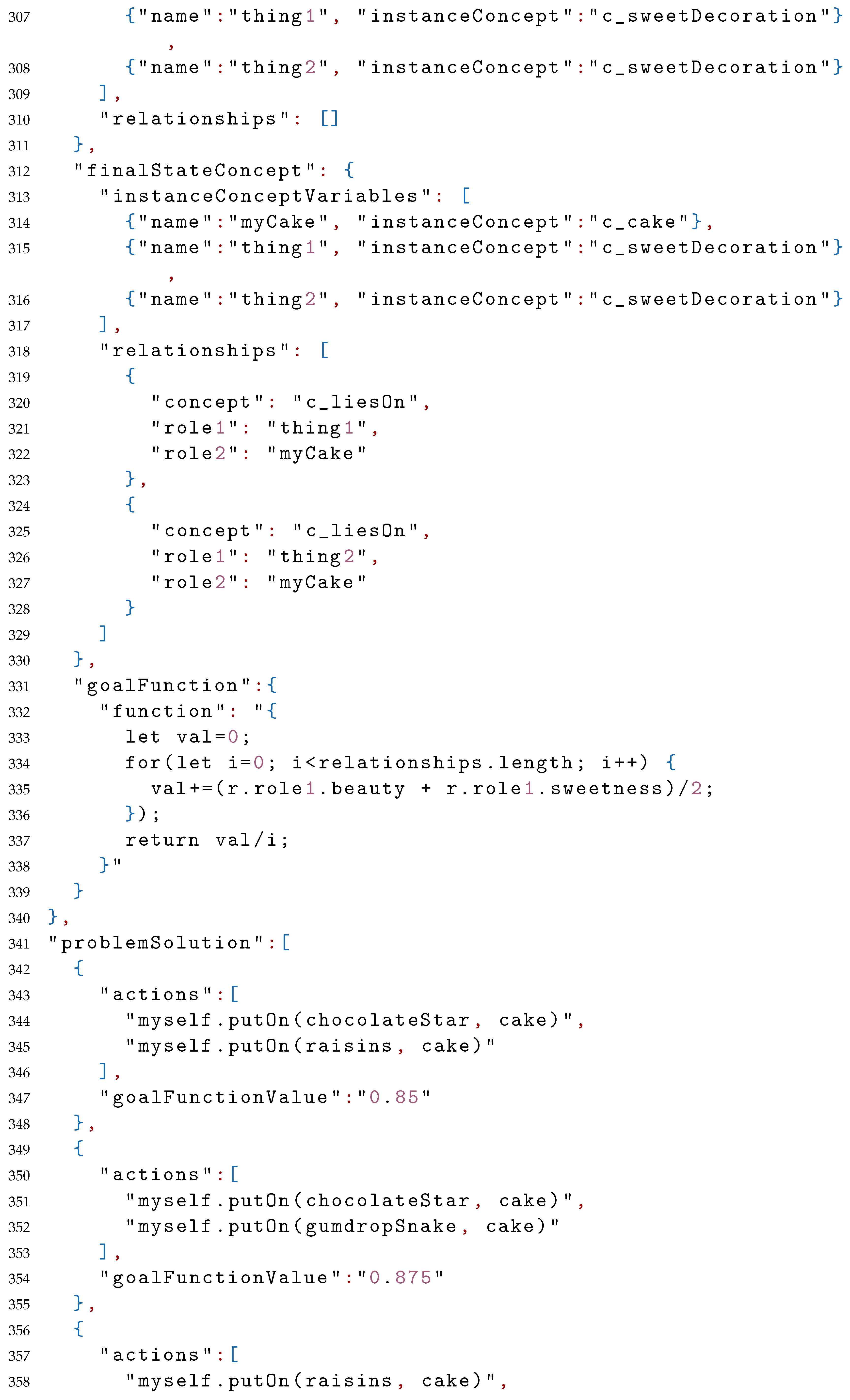

There are individuals in the environment, i.e., individuals who possess and collect individual knowledge. Individuals acquire knowledge using a cognitive mechanism. The mechanism may not provide full knowledge, or this knowledge may not be accurate. Therefore, it should be noted that in the described approach, the environment with its objects is distinguished from the knowledge of the environment possessed by the individual. Each individual may have utterly different knowledge of the environment. In extreme cases, when individual knowledge will be empty, the individual will find that the environment does not exist. The way the individual perceives the environment is presented in

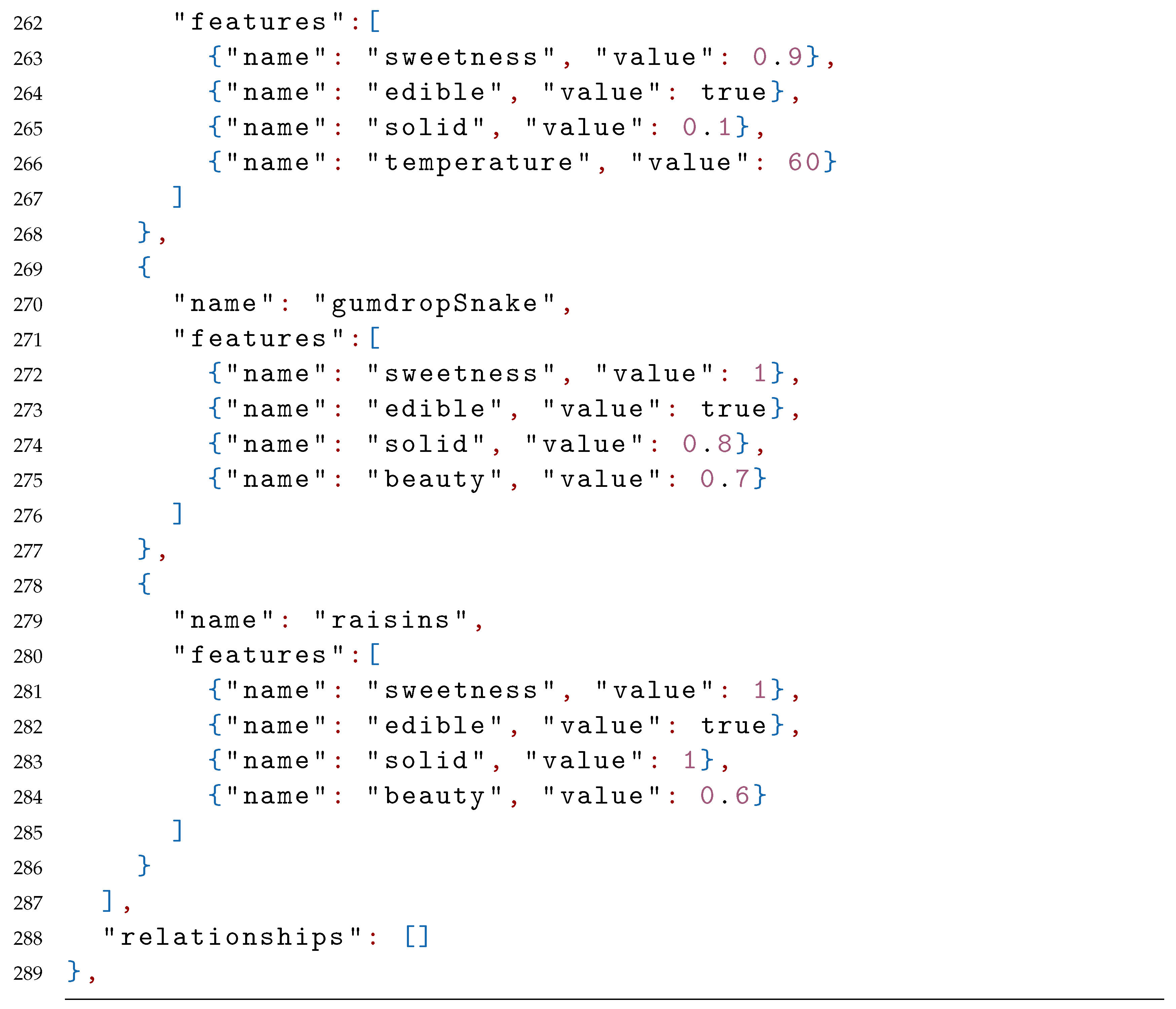

Figure 1.

An individual in the environment can distinguish an object that is an element of the environment. It is the individual that decides whether to distinguish (perceive) an element: to do so, the individual must need it, and its cognitive mechanism must be capable of it. The counterpart of an environment object in the individual knowledge is called an instance. An instance is the knowledge about an object, and it contains features subjectively distinguished by the individual. Features can have values, e.g., color can be green and tastiness can be unpalatable. Instances may also be in relationships with other instances, and they may perform actions. It should be noted that an individual is also an instance with its own features, relationships and actions.

An instance can change over time by changing the configuration of its features, relationships and actions. The values of an instance’s features and relationships at a particular moment are called its state.

Two objects with the same characteristics should be represented in the individual knowledge as two independent instances, whose attributes are assigned independently. Such an approach can, however, be problematic for autonomous decision-making, because the individual knowledge would then contain a large number of ungrouped instances. We therefore introduce a new approach in the field of environment description languages, called the instance concept. An instance concept is the set of all instances whose features have values from specified ranges. For example, the knife concept is the set of all instances in which a blade with a length of 1 to 25 cm can be distinguished. At the same time, some instances belonging to the knife concept may also belong to the cutlery concept, and others to the hunting knife concept.
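Membership in an instance concept, as described above, is a range test over feature values. The sketch below assumes that an instance is a plain dictionary of numeric feature values and that a concept maps feature names to inclusive ranges. These representations, the feature names and the hunting knife ranges are hypothetical; only the 1 to 25 cm blade range comes from the text, and the JSON form of GEDL concepts is defined later in the paper.

```python
from typing import Dict, Tuple

Instance = Dict[str, float]
Concept = Dict[str, Tuple[float, float]]   # feature name -> (min, max), inclusive

def belongs(instance: Instance, concept: Concept) -> bool:
    """True if the instance has every feature of the concept with a value in range."""
    return all(
        name in instance and lo <= instance[name] <= hi
        for name, (lo, hi) in concept.items()
    )

knife: Concept = {"bladeLength": (1.0, 25.0)}              # cm, as in the text
hunting_knife: Concept = {"bladeLength": (10.0, 25.0),      # hypothetical ranges
                          "bladeThickness": (0.3, 0.8)}

instances = {
    "table_knife": {"bladeLength": 11.0, "bladeThickness": 0.2},
    "machete": {"bladeLength": 45.0, "bladeThickness": 0.4},
    "bowie": {"bladeLength": 20.0, "bladeThickness": 0.6},
}
for name, inst in instances.items():
    print(name, belongs(inst, knife), belongs(inst, hunting_knife))
```

One instance can satisfy several concepts at once (the bowie knife is both a knife and a hunting knife), which matches the overlapping concepts mentioned in the text.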

The instance concept can also help to formulate problems to be solved by autonomous systems and to improve the communication of these systems with people. Consider the following example: a robot is to make pancakes from edible ingredients, including flour and eggs. Edible ingredients, flour and eggs are instance concepts. To perform the task, the robot has wheat flour and lime flour (used in construction) available. Both belong to the flour concept, whose instances combine well with water, form a sticky mass when mixed with water, are white, form dust, etc. However, the concept of edible ingredients requires an instance to have the eatable feature. In this way, the robot can easily classify the instances and choose wheat flour, which, unlike lime flour, has the eatable feature. A properly developed decision-making mechanism could even make it unnecessary to state that the pancake ingredients must be edible: since the pancake itself is edible, the mechanism could “guess” that an inedible ingredient cannot be used.
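The pancake example amounts to intersecting two instance concepts. As a simplification of the range-based definition, this sketch models each concept only by the boolean features its instances must have; the feature and instance names are hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical boolean features observed for each instance.
instances: Dict[str, Set[str]] = {
    "wheat_flour": {"combinesWithWater", "sticky", "white", "dusty", "eatable"},
    "lime_flour": {"combinesWithWater", "sticky", "white", "dusty"},
    "eggs": {"eatable", "perishable"},
}

# Each concept is represented only by the features its instances must have.
flour_concept = {"combinesWithWater", "sticky", "white", "dusty"}
edible_concept = {"eatable"}

def members(required: Set[str]) -> List[str]:
    """Names of the instances that have all required features."""
    return sorted(n for n, feats in instances.items() if required <= feats)

usable_flour = sorted(set(members(flour_concept)) & set(members(edible_concept)))
print(members(flour_concept))  # ['lime_flour', 'wheat_flour']
print(usable_flour)            # ['wheat_flour']
```

Both flours satisfy the flour concept, and only the intersection with the edible-ingredient concept singles out wheat flour.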

As mentioned earlier, we can also distinguish relationships between instances, e.g., instance 1 is above instance 2, or instance 1 is the mother of instance 2. For a relationship between instances to be created, the individual must notice the relationship between the corresponding objects in the environment, or the relationship must follow from logical premises in the individual knowledge. We also distinguish relationship concepts, which give meaning to relationships.

Instances can perform actions that change a fragment of the environment or, more precisely, change instance states, e.g., by modifying existing objects. Actions are performed by instances, and other instances may also be used in performing them. For example, a robot can do laundry at home using a washing machine. Actions are attributed only to those instances that are able to carry out a specific action autonomously (i.e., that make decisions on their own). For example, a kitchen knife is used for chopping, but it cannot chop on its own. This can be done by a human or possibly by an autonomous robot, for which the knife is only a tool used when performing the action. Performing actions does not always mean that their goal will be achieved in the environment; e.g., making pancakes may fail for several reasons, often regardless of the quality of the decisions made.

Action concepts can be distinguished for sets of similar actions, regardless of the instances that perform them. An action concept is the set of all actions that transform instances in such a way that they finally reach a similar state. Action concepts make it possible to find all instances able to perform similar actions. For example, a robot given the task of doing laundry will search its home, or a wider accessible environment, for all instances that can wash clothes.
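Because an action concept groups actions by the state they produce rather than by who performs them, finding every instance able to do laundry reduces to filtering the actions attributed to instances. In this sketch, which is an assumption and not GEDL’s JSON form, each action records the feature values of its target after execution, and the hypothetical “washed clothes” concept is described only by that end state.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Action:
    performer: str              # instance able to carry out the action autonomously
    name: str
    result: Dict[str, bool]     # features of the target instance after execution

def in_concept(action: Action, target_state: Dict[str, bool]) -> bool:
    """The action belongs to the concept if its result includes the target state."""
    return all(action.result.get(k) == v for k, v in target_state.items())

actions: List[Action] = [
    Action("housekeeping_robot", "handWash", {"clothesClean": True}),
    Action("laundry_robot", "washAndDry", {"clothesClean": True, "clothesDry": True}),
    Action("vacuum_robot", "vacuum", {"floorClean": True}),
]

# Hypothetical "washed clothes" action concept, described only by its end state.
washed_clothes = {"clothesClean": True}
capable = sorted({a.performer for a in actions if in_concept(a, washed_clothes)})
print(capable)  # ['housekeeping_robot', 'laundry_robot']
```

The two washing actions differ in name and in extra effects, yet both fall into the same concept because they reach the required end state.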

Actions are atomic: no smaller intermediate activities are distinguished within them, although they may in fact consist of such activities. Actions can be implemented using advanced subsystems, such as artificial neural networks. In this case, we act similarly to biological systems: when we have to squeeze an object by hand, for example, we do not think about which muscles should be used; we just do it.

Actions are the building blocks of problem solutions. A solution may consist of a group of actions carried out in a specific order, in parallel or concurrently. An individual can solve a problem by developing several solutions, choosing the best one and implementing it. Since developing solutions is time-consuming, they should be stored in the individual knowledge and reused when a similar problem appears.
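Storing developed solutions and reusing them for a similar problem can be sketched as follows. The problem signature (initial facts plus goal facts), the Jaccard similarity measure and the 0.7 threshold are assumptions chosen for illustration; the paper does not prescribe how similarity between problems is measured.

```python
from typing import FrozenSet, List, Optional, Tuple

Problem = Tuple[FrozenSet[str], FrozenSet[str]]   # (initial facts, goal facts)

def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

class Experience:
    """Hypothetical store of solved problems and their action sequences."""

    def __init__(self) -> None:
        self._solved: List[Tuple[Problem, List[str]]] = []

    def store(self, problem: Problem, solution: List[str]) -> None:
        self._solved.append((problem, solution))

    def recall(self, problem: Problem, threshold: float = 0.7) -> Optional[List[str]]:
        """Return the solution of the most similar stored problem, if similar enough."""
        best, best_score = None, threshold
        for (init, goal), solution in self._solved:
            score = (jaccard(init, problem[0]) + jaccard(goal, problem[1])) / 2
            if score >= best_score:
                best, best_score = solution, score
        return best

exp = Experience()
exp.store((frozenset({"flour", "eggs", "milk", "pan"}), frozenset({"pancakes"})), ["mix", "fry"])
pancakes = exp.recall((frozenset({"flour", "eggs", "milk", "pan", "sugar"}), frozenset({"pancakes"})))
laundry = exp.recall((frozenset({"clothes", "detergent"}), frozenset({"cleanClothes"})))
print(pancakes, laundry)  # ['mix', 'fry'] None
```

The extra ingredient lowers the similarity only slightly, so the stored pancake solution is reused; an unrelated problem falls below the threshold and has to be solved from scratch.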

As mentioned earlier, each individual perceives and learns about the environment on its own, possibly without even being aware that there are other individuals in that environment. However, since there may be many individuals in the environment with similar cognitive capabilities, e.g., several robots of the same type, we should consider sharing the individual knowledge or fragments of it. This would significantly accelerate knowledge growth and make it possible to share previously found solutions. In the human world, such knowledge sharing is called education.

Precise definitions of the ideas and concepts outlined above are given in the following sections.

3. Notation Used in GEDL Language

The GEDL language allows an individual to describe the environment in which it acts. The JSON notation [47] was chosen for storing knowledge according to GEDL. JSON has many advantages, including broad applicability, numerous implementations and ease of storing data in databases. It is easy for humans to read and write, and easy for machines to parse, generate and interpret. JSON uses universal data structures and is supported by most modern programming languages. This would potentially allow individuals built with different technologies to exchange or even share knowledge. However, other notations, such as XML, could also be used to store data according to GEDL.

The definitions presented in the following sections indicate the meanings of the terms used and show how individual knowledge is stored using JSON notation. JSON objects are surrounded by curly braces “{}” and are written as key/value pairs. Arrays are surrounded by square brackets “[]”. Keys must be strings (text), and values must be valid JSON data types: string, number, another JSON object, array, boolean or null, as defined in [47]. In addition, in GEDL some JSON strings contain source code written in a programming language. This approach is sometimes used in JSON [48,49], and in our case it makes the language more flexible in terms of expression.
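The following sketch shows how a GEDL knowledge fragment stored as JSON can carry executable source code in a string, using Python’s standard `json` module. The key names mirror the symbols of the feature definition in Section 5.1 (oFName, oFDomain, oFInitFunction) and may differ from the keys GEDL actually uses; the choice of Python as the embedded language and the function name `init` are assumptions. Running code from shared knowledge with `exec` is unsafe unless the source is trusted or sandboxed.

```python
import json

# Hypothetical GEDL-style feature; "\\n" in this Python literal becomes the
# JSON escape \n, which json.loads turns into a real newline.
document = """
{
  "oFName": "temperatureC",
  "oFDomain": {"oFDSet": "real", "oFDMin": -50, "oFDMax": 150},
  "oFUnit": "C",
  "oFInitFunction": "def init(signal):\\n    return (signal - 32) * 5 / 9"
}
"""

feature = json.loads(document)

# Compile the embedded source into a private namespace (trusted input only!).
namespace: dict = {}
exec(feature["oFInitFunction"], namespace)
init = namespace["init"]

# A sensor reporting degrees Fahrenheit is converted to the feature's unit.
print(feature["oFName"], init(212.0), feature["oFUnit"])  # temperatureC 100.0 C
```

Keeping the function as text means the whole feature, code included, can be stored in a database or sent to another individual like any other JSON value.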

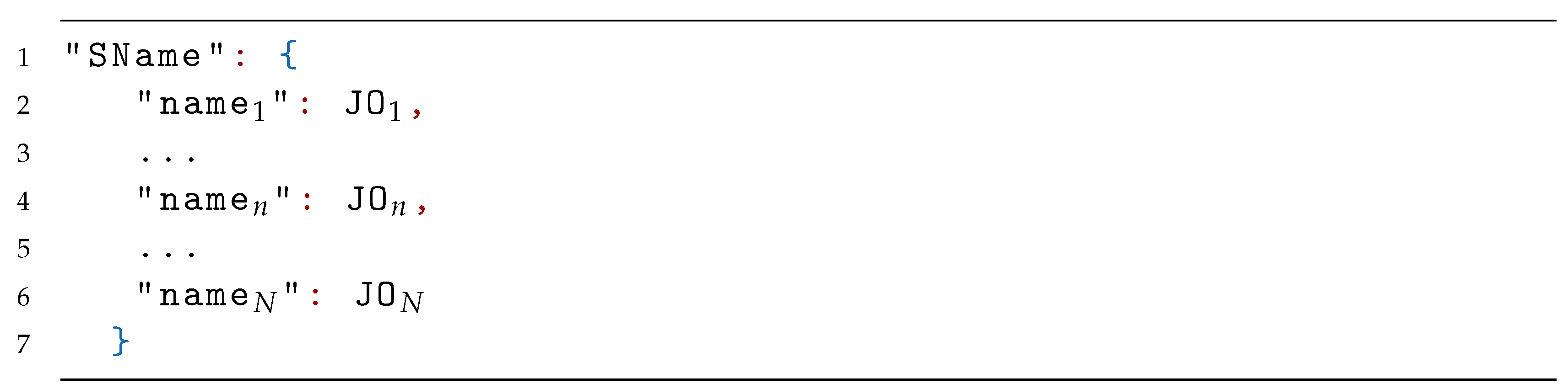

Further, the JSON objects are defined according to the following example:

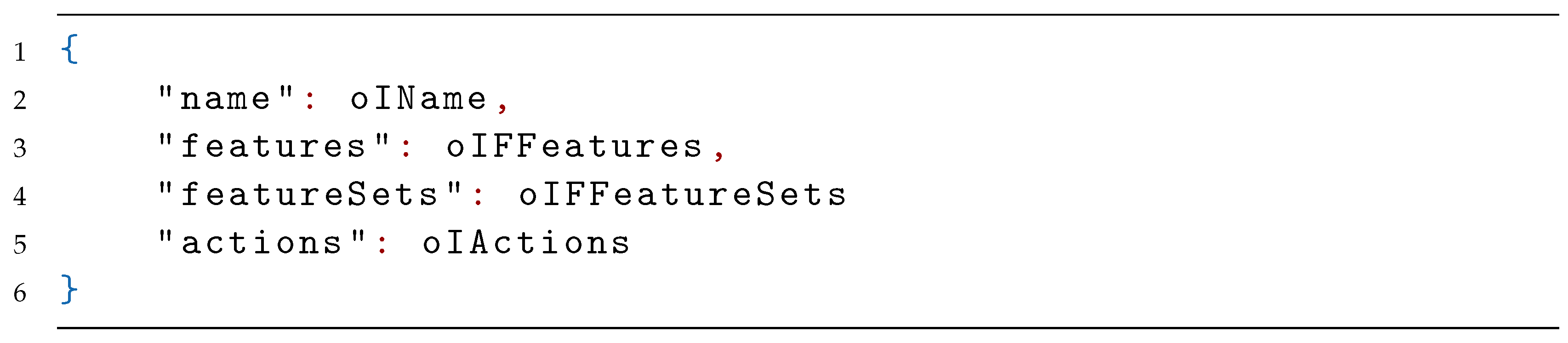

An element S is a JSON object constructed in the following way:

In the example, the JSON punctuation (braces, brackets, colons and commas) denotes the corresponding structures of JSON notation, while the element names indicate their place in the structure of the object and the order of the individual elements; they are also human-readable.

As part of the description of the elements of the GEDL language, mathematical notation is introduced to clarify the presented definitions. According to this notation, S is a Cartesian product $S = S_1 \times S_2 \times \dots \times S_N$, where $N \in \mathbb{N}$, and $S_1, \dots, S_N$ are sets constructed in accordance with their definitions, and $s = (s_1, s_2, \dots, s_N) \in S$, where $s_n \in S_n$ and $s_n$ is an element (a JSON object or another JSON structure). A subset of occurrences of JSON objects (a relation) is denoted as $S^o = \{s^o_1, s^o_2, \dots, s^o_K\} \subseteq S$, where K is the number of existing JSON objects. The object $s^o_k$ is defined as a series $s^o_k = (s^o_{k,1}, s^o_{k,2}, \dots, s^o_{k,N})$. The presented approach is similar to the one used in database theory [50].

The upper index o indicates an occurrence of an element built according to the presented definitions of JSON objects, or of another element constructed according to JSON notation (e.g., $s^o$). The lower index is added when referring to specific elements of sets or series (e.g., $s_n$ is the n-th element of $s$). The lack of a lower index indicates any element of the set or series (e.g., $s$ is any element of the set $S$).

We denote a JSON array in mathematical notation as a series $a = (a_1, a_2, \dots, a_N)$, where $a_n$, $n = 1, \dots, N$, is a JSON object or another JSON structure.

We use the following definitions:

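Let $R$, where $R \subseteq S_1 \times S_2 \times \dots \times S_N$, be any relation. A projection of $R$ on the set $S_n$ is denoted as $\pi_{S_n}(R)$ and defined in the following way:

$$\pi_{S_n}(R) = \{\, s_n : (s_1, s_2, \dots, s_N) \in R \,\}$$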

Let

, be a relation and

, where

. The function

is defined as follows:

where

.

For example:

Let

be a series

, where

. The function

is defined as follows:

where

.

For example: , .

Let $a$ be a series $a = (a_1, a_2, \dots, a_N)$, where $N \in \mathbb{N}$. The set of values of the series $a$ is defined as follows:

$$\{\, a_n : n = 1, 2, \dots, N \,\}$$

Because of the large number of definitions describing the language, they are divided into four sections presenting:

environment and the individual building the knowledge of the environment,

a conceptual system storing concepts of elements which can potentially occur in the environment,

occurrences of observed elements of the environment,

experience of the individual containing problems to solve and the solutions.

The elements of the GEDL language are complex, and there are many connections and dependencies between them, which makes it impossible to arrange the definitions from the simplest to the most complex. The definitions are therefore ordered so as to facilitate reading and understanding of the text.
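The relational notation of this section follows database theory. As a rough illustration, a relation can be stored as a Python set of equal-length tuples and a series as a tuple. Under those assumptions, the standard relational projection and the set of values of a series can be computed as shown below; the function names `project` and `values` and the sample data are hypothetical.

```python
from typing import Iterable, Set, Tuple

def project(relation: Iterable[Tuple], n: int) -> Set:
    """Relational projection on the n-th component (1-based, as in the text)."""
    return {row[n - 1] for row in relation}

def values(series: Tuple) -> Set:
    """Set of values of a series: order and repetitions are discarded."""
    return set(series)

relation = {("knife", "steel", 20), ("spoon", "steel", 15), ("cup", "ceramic", 9)}
print(sorted(project(relation, 2)))   # ['ceramic', 'steel']
print(sorted(values((3, 1, 3, 2))))   # [1, 2, 3]
```

Like a relational projection, the result is a set, so duplicate component values collapse into one.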

4. Environment and Individual

This section defines the basic elements used in the GEDL language: the environment, the individual and the individual knowledge.

Definition 1. An environment is a set containing objects. The environment can be a fragment of the physical world, an information space (e.g., a computer game, a computer network or a stock exchange) or a mixed physical and information space.

Definition 2. An individual is an entity that builds knowledge about the environment, or has such knowledge about a fragment of the environment within its sphere of interest, and/or learns about it, and/or performs tasks in the environment. The individual can be an object in the environment or can only observe it.

Definition 3. Individual knowledge is the systematized knowledge about the environment possessed by an individual. Individual knowledge can be built using various techniques:

an observation with the usage of sensors,

an adaptation that is the improvement of knowledge about the environment which is the effect of subsequent observations or activities that bring new information,

obtaining information stored outside the individual, e.g., previously collected by other individuals,

a deduction or inference (knowledge, in this case, can be uncertain),

other.

The individual knowledge contains the following elements:

oCS—is a JSON object containing a conceptual system defined in Definition 4,

oOC—is a JSON object containing occurrences of instances and relationships, defined in Definition 12,

aE—is a JSON array containing a finite set of solved problems oE, defined in Definition 22,

.

Individual knowledge can change over time. We distinguish the moments of time at which the knowledge is updated, and in this way we obtain a series of subsequent versions of the knowledge. In the definitions presented further in the article, we consider only one version of the knowledge at a given moment.
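The three top-level parts of individual knowledge and its versioning over time can be sketched as follows. The dictionary layout mirrors oCS, oOC and aE from Definition 3 (with the five arrays of Definition 4 inside oCS), and the inner contents are left empty. The version store, which maps each update time to a deep copy of the knowledge, is an assumption made for illustration.

```python
import copy
from bisect import bisect_right
from typing import Any, Dict, List

def empty_knowledge() -> Dict[str, Any]:
    """Top-level structure of individual knowledge (contents omitted)."""
    return {
        "oCS": {"aF": [], "aFS": [], "aIC": [], "aRC": [], "aAC": []},  # conceptual system
        "oOC": {},   # occurrences of instances and relationships
        "aE": [],    # experience: solved problems
    }

class KnowledgeHistory:
    """Hypothetical series of knowledge versions indexed by update time."""

    def __init__(self) -> None:
        self.times: List[float] = []
        self.versions: List[Dict[str, Any]] = []

    def commit(self, t: float, knowledge: Dict[str, Any]) -> None:
        if self.times and t <= self.times[-1]:
            raise ValueError("update times must increase")
        self.times.append(t)
        self.versions.append(copy.deepcopy(knowledge))   # freeze this version

    def at(self, t: float) -> Dict[str, Any]:
        """Version valid at time t: the latest one committed at or before t."""
        i = bisect_right(self.times, t)
        if i == 0:
            raise LookupError("no knowledge before this time")
        return self.versions[i - 1]

k = empty_knowledge()
history = KnowledgeHistory()
history.commit(0.0, k)
k["oCS"]["aF"].append({"oFName": "tastiness"})
history.commit(5.0, k)
print(len(history.at(3.0)["oCS"]["aF"]), len(history.at(7.0)["oCS"]["aF"]))  # 0 1
```

Deep copies keep earlier versions unchanged when the working knowledge is modified, so each query sees exactly one version, as assumed in the definitions.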

5. Conceptual System and Its Elements

This section presents the conceptual system of the GEDL language and then describes all of its elements. First, features and feature sets are introduced. Then, the instance, relationship, state and action concepts are defined. Additionally, the definition of the instance concept variable is presented.

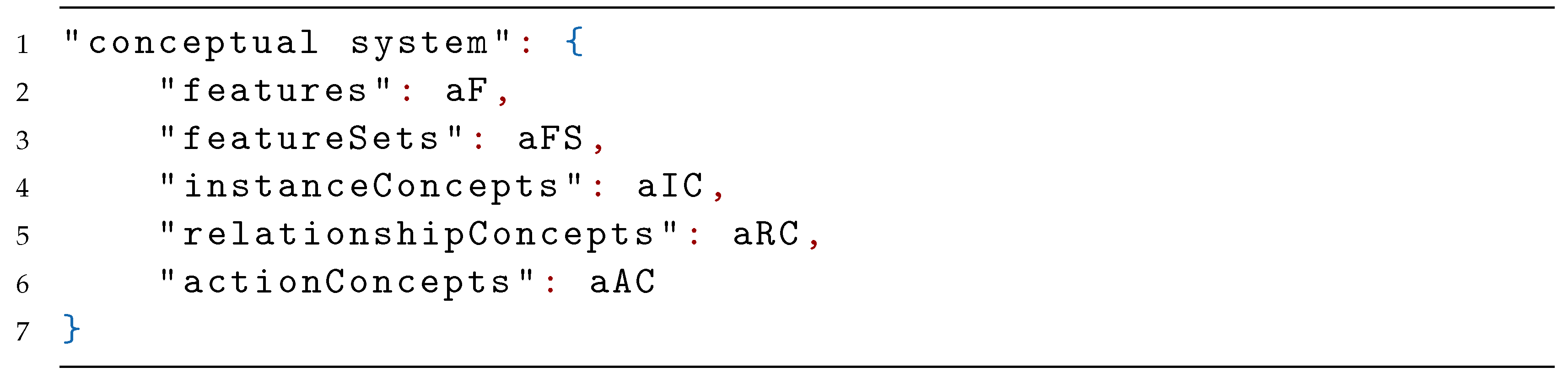

Definition 4. A conceptual system oCS is a JSON object containing concepts of entities, relationships and activities. The conceptual system consists of:

aF is a JSON array containing a finite set of features oF,

aFS is a JSON array containing a finite set of feature sets oFS,

aIC is a JSON array containing a finite set of instance concepts oIC,

aRC is a JSON array containing a finite set of relationship concepts oRC,

aAC is a JSON array containing a finite set of action concepts oAC,

where oF is defined in Definition 5, oFS in Definition 6, oIC in Definition 7, oRC in Definition 8 and oAC in Definition 11.
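A minimal consistency check for a conceptual system stored as a Python dictionary is sketched below. It verifies that the five arrays of Definition 4 are present, that feature names are unique (Definition 5) and that feature sets refer only to defined features (Definition 6). The element keys oFName, oFSName and oFNames mirror the symbols in those definitions and are assumptions about the stored form; the sample data are hypothetical.

```python
from typing import Any, Dict, List

REQUIRED_ARRAYS = ("aF", "aFS", "aIC", "aRC", "aAC")

def check_conceptual_system(cs: Dict[str, Any]) -> List[str]:
    """Return the problems found in a conceptual system (empty list if none)."""
    problems = [f"missing array {k}" for k in REQUIRED_ARRAYS if not isinstance(cs.get(k), list)]
    features = cs["aF"] if isinstance(cs.get("aF"), list) else []
    names = [f.get("oFName") for f in features]
    if None in names:
        problems.append("feature without oFName")
    named = [n for n in names if n is not None]
    problems += [f"duplicate feature {n}" for n in sorted({n for n in named if named.count(n) > 1})]
    feature_sets = cs["aFS"] if isinstance(cs.get("aFS"), list) else []
    for fs in feature_sets:
        for n in fs.get("oFNames", []):
            if n not in named:
                problems.append(f"feature set {fs.get('oFSName')} uses undefined feature {n}")
    return problems

cs = {
    "aF": [{"oFName": "tastiness"}, {"oFName": "bloodGroup"}, {"oFName": "tastiness"}],
    "aFS": [{"oFSName": "food", "oFNames": ["tastiness", "colour"]}],
    "aIC": [], "aRC": [],
}
report = check_conceptual_system(cs)
print(report)
```

For the sample data, the check reports the missing aAC array, the duplicated tastiness feature and the undefined colour feature.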

5.1. Features

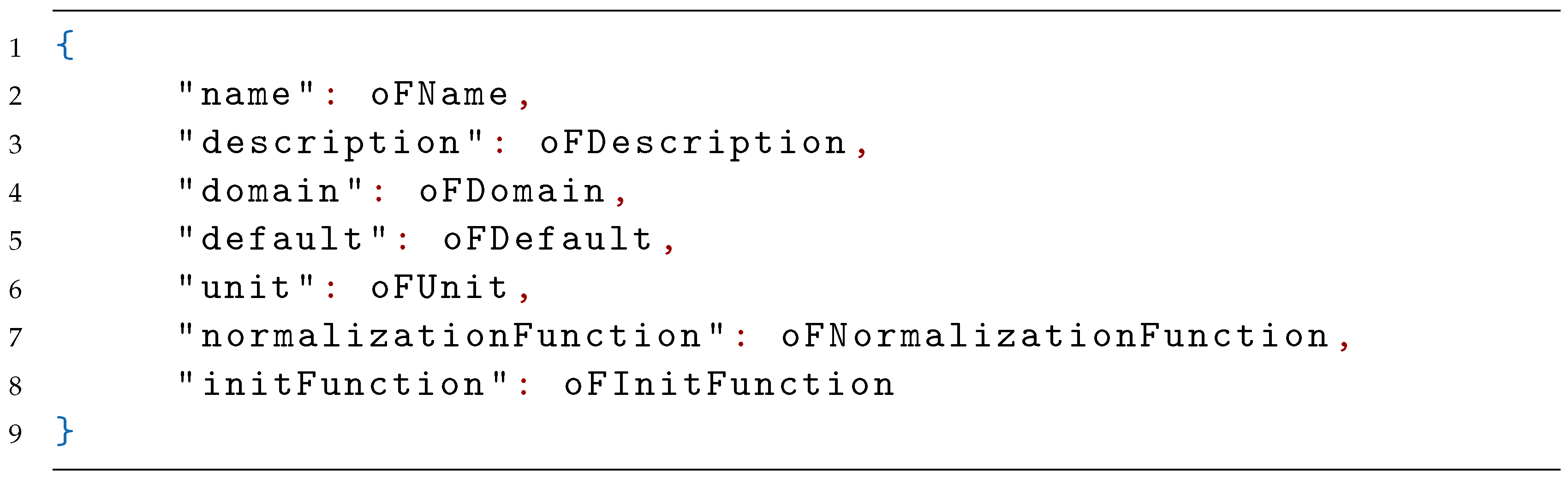

Definition 5. A feature is a JSON object representing a distinguished property of an object in the environment. The feature is:

![Applsci 11 00740 i004]()

where:

oFName is a JSON string containing the name of the feature, which uniquely identifies it,

oFDescription—a JSON string containing the description defining the meaning of the feature (optional),

oFDomain—a JSON object describing a set of values that the feature can take. The domain contains:

![Applsci 11 00740 i005]()

where:

- oFDSet—a set of values:
  - for measurable (quantitative) values, it can be a JSON string containing the name of a previously defined set, for example real, integer or float numbers,
  - for non-measurable values, it can be a JSON array aFDSet containing the set of acceptable values of the feature; aFDSet is a finite set of values used as a dictionary, for example, the possible values of a blood type,
- oFDMin—a JSON string or number containing the minimal value of the feature (optional),
- oFDMax—a JSON string or number containing the maximal value of the feature (optional).

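Let $m = \text{oFDMin}$ and $M = \text{oFDMax}$. The set of values of the feature is defined by $D = \{x \in \text{oFDSet} : m \le x \le M\}$. If $m$ and $M$ are not fixed (defined), then $D = \text{oFDSet}$.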

oFDefault—a JSON string or number containing a default value of the feature if it is not specified (optional),

oFUnit—a JSON string containing a unit of the value (optional),

oFNormalizationFunction—a JSON string containing source code, written in a programming language, of a normalization function that returns the normalized value of the feature; it can be useful when choosing the optimal solution (optional),

oFInitFunction—a JSON string containing source code, written in a programming language, of a function that calculates the value of the feature from the signals of an individual sensor or from the values of other features (optional).
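The domain rule above can be illustrated with a short Python sketch. It assumes, purely for illustration, that a feature is held as a Python dict whose keys mirror the element names of Definition 5 (oFDomain, oFDSet, oFDMin, oFDMax, oFDefault); the paper's JSON listings may spell the keys differently. The mapping of set names such as "integer" to type checks, and the two example features with their values, are hypothetical.

```python
from numbers import Real

# Hypothetical mapping from named sets (oFDSet given as a JSON string) to type checks.
NAMED_SETS = {
    "real": lambda v: isinstance(v, Real) and not isinstance(v, bool),
    "float": lambda v: isinstance(v, Real) and not isinstance(v, bool),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
}


def in_domain(feature: dict, value) -> bool:
    """Return True if value lies in {x in oFDSet : oFDMin <= x <= oFDMax}."""
    domain = feature["oFDomain"]
    dset = domain["oFDSet"]
    if isinstance(dset, list):
        # Non-measurable feature: the array acts as a dictionary of allowed values.
        if value not in dset:
            return False
    else:
        # Measurable feature: oFDSet names a previously defined set.
        check = NAMED_SETS.get(dset)
        if check is None or not check(value):
            return False
    # Each bound is optional; a missing bound imposes no restriction.
    lower, upper = domain.get("oFDMin"), domain.get("oFDMax")
    if lower is not None and value < lower:
        return False
    if upper is not None and value > upper:
        return False
    return True


def feature_value(feature: dict, value=None):
    """Use oFDefault when no value is specified."""
    return feature.get("oFDefault") if value is None else value


# Illustrative features; the ranges and values are made up.
tastiness = {
    "oFName": "tastiness",
    "oFDomain": {"oFDSet": "integer", "oFDMin": 0, "oFDMax": 10},
    "oFDefault": 5,
}
blood_group = {
    "oFName": "bloodGroup",
    "oFDomain": {"oFDSet": ["A", "B", "AB", "0"]},
}

print(in_domain(tastiness, 7), in_domain(tastiness, 11), in_domain(blood_group, "AB"))
# prints: True False True
```

Treating each bound independently is a small generalization of the rule above, which only states the case where both bounds are missing.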

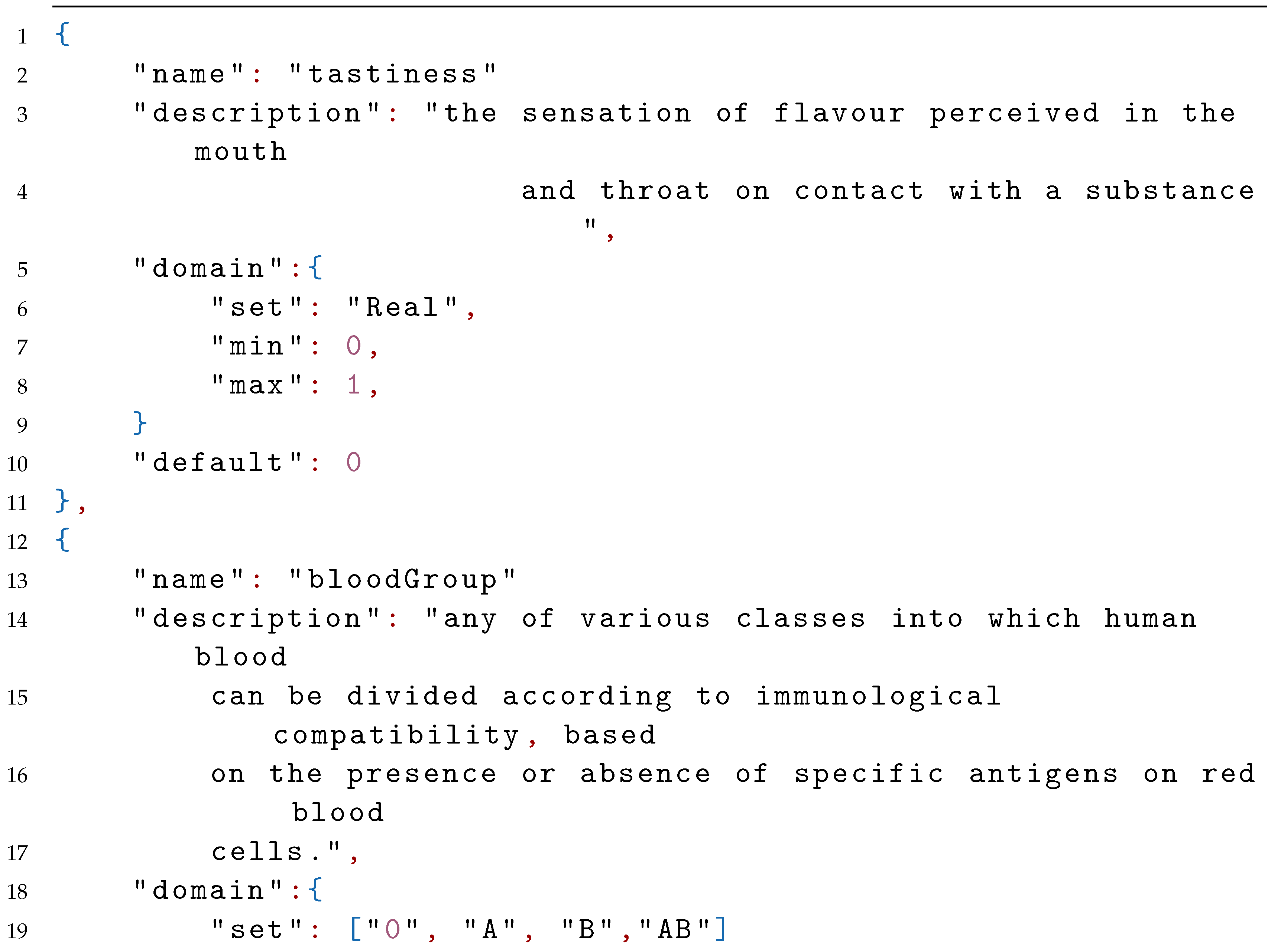

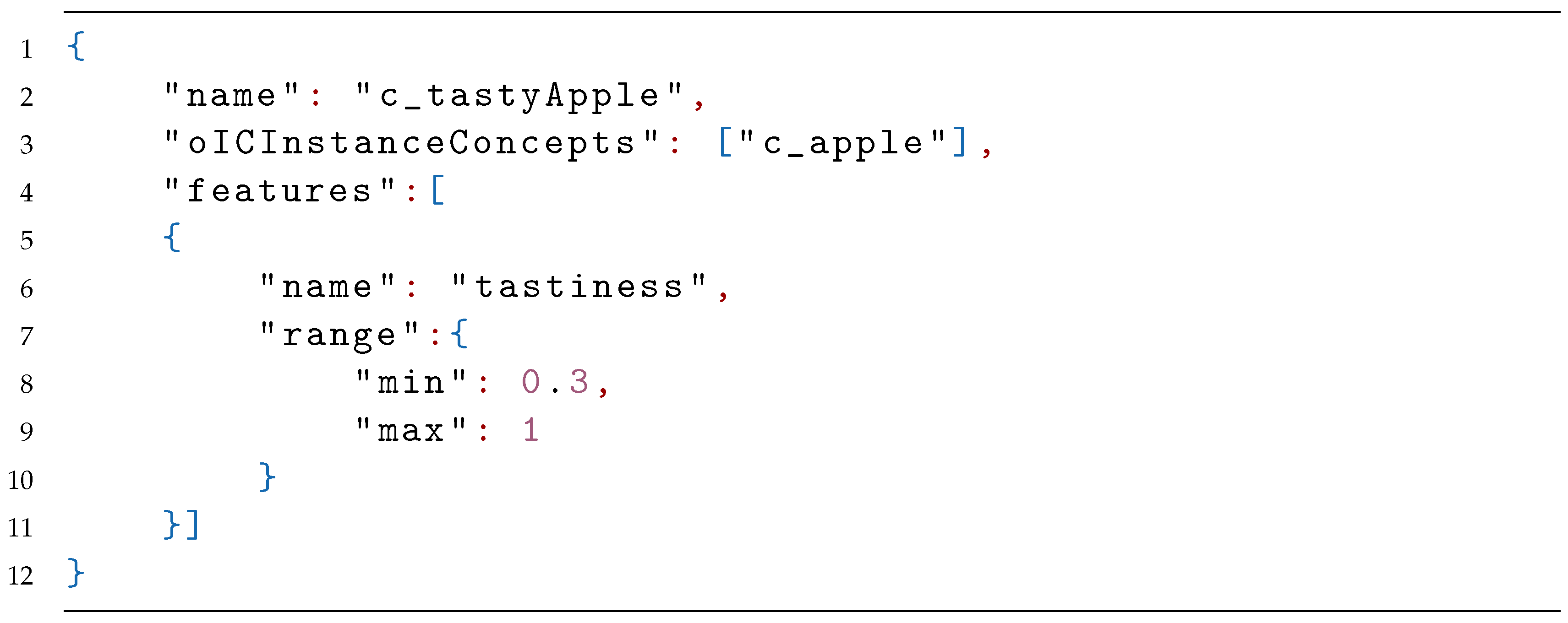

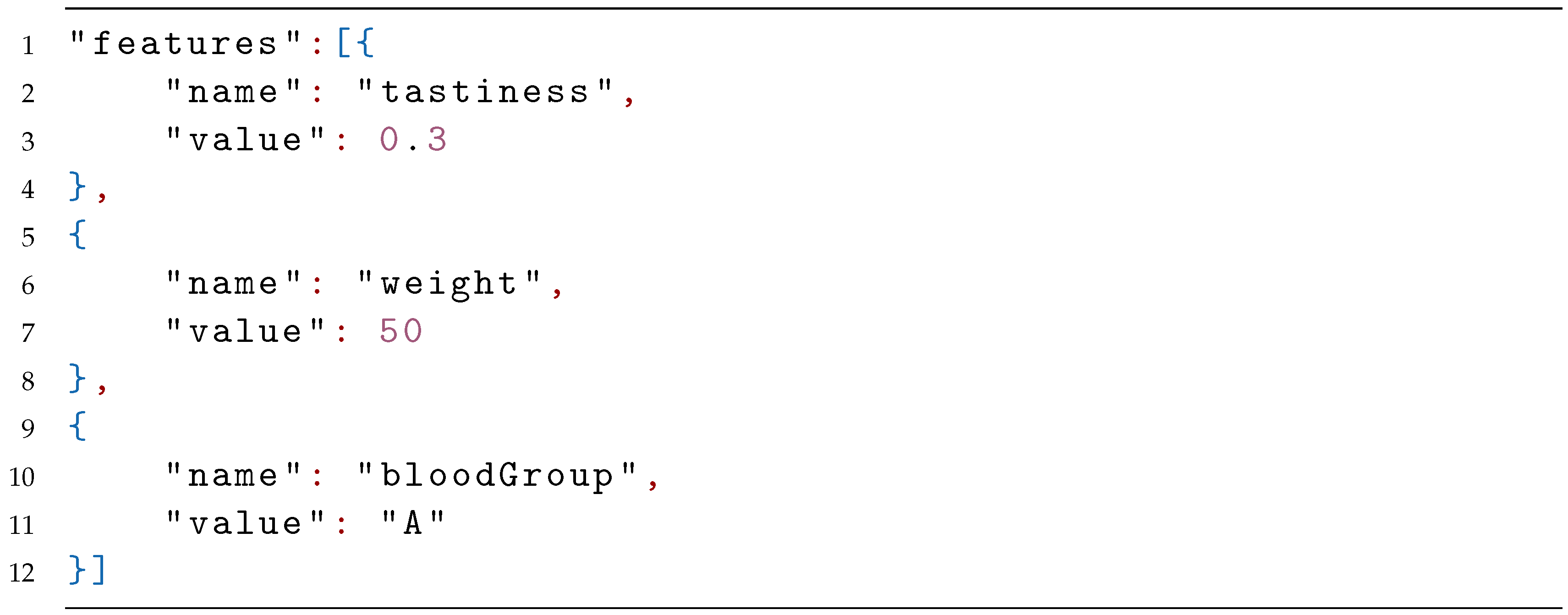

Examples of two features—tastiness and bloodGroup:

5.2. Feature Sets

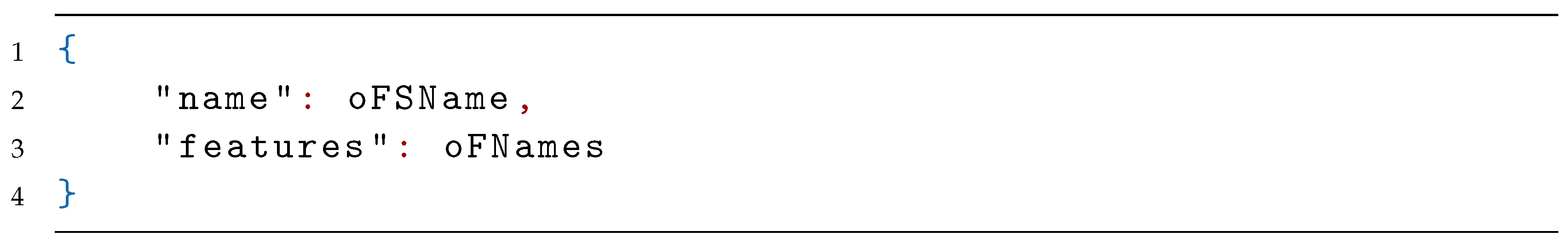

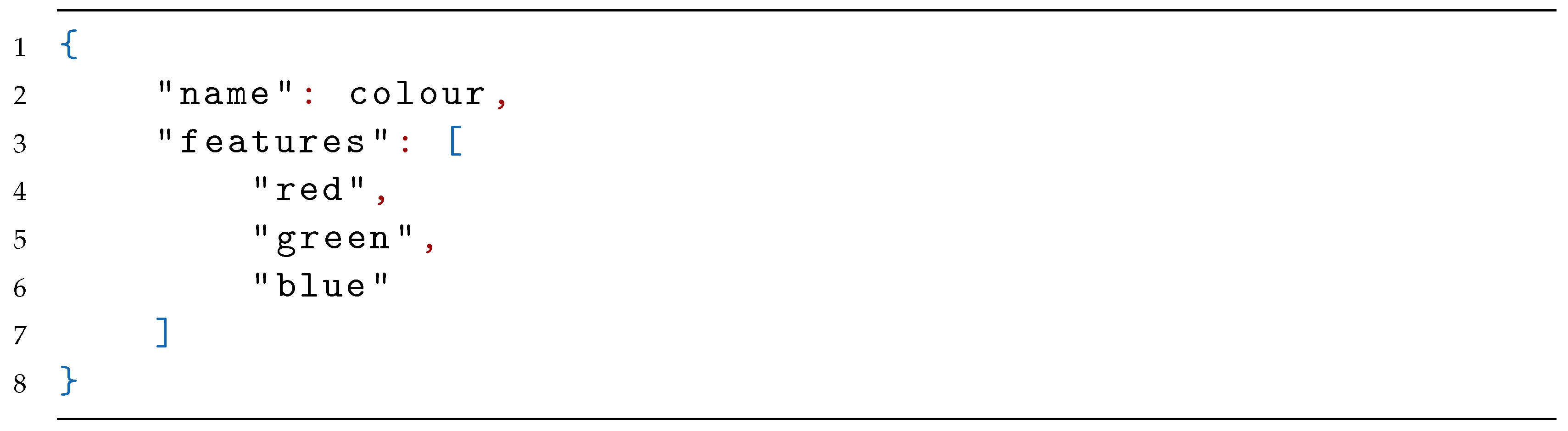

Definition 6. A feature set oFS is a JSON object grouping a collection of features under one name. It can be written as:

![Applsci 11 00740 i007]()

where:

oFSName—a JSON string containing the name that uniquely identifies the feature set,

oFNames—is a JSON array containing a finite ordered set of feature names oFName; every feature named in it has to be defined in the conceptual system oCS.

For example:

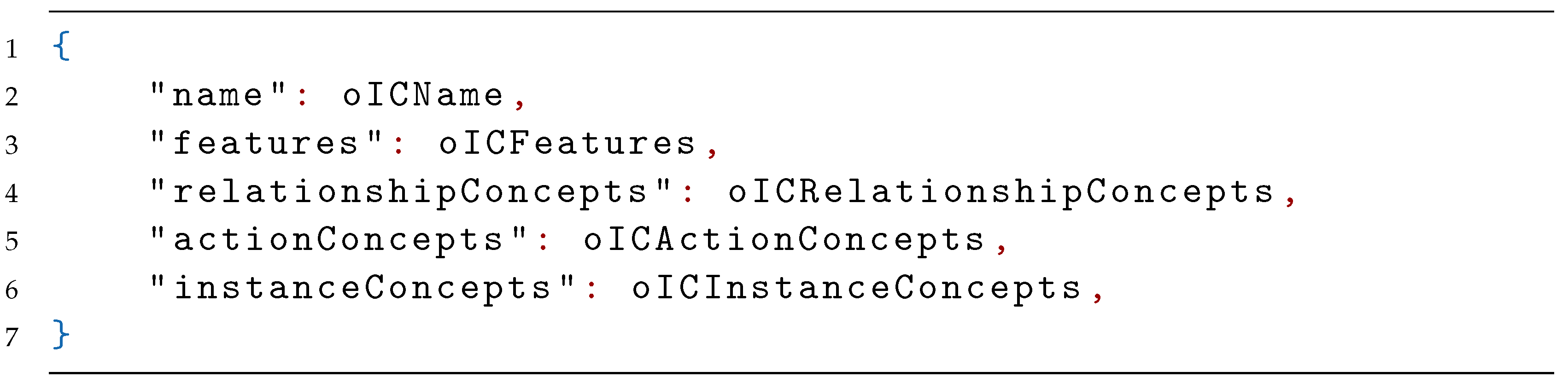

5.3. Instance Concepts

Definition 7. An instance concept oIC is a JSON object defining a set of all instances that are similar in some aspects. The instance concept is defined as follows:

![Applsci 11 00740 i009]()

where:

oICName—a JSON string containing the name that uniquely identifies the instance concept,

oICFeatures—a JSON array containing a set of instance concept feature elements (oICFeatures is optional).

Each instance concept feature element is a JSON object defining a narrowed set of values for an existing feature oF. The object is constructed as follows:

![Applsci 11 00740 i010]()

where:

- oFName—is a JSON string containing the name of an existing feature oF,
- oICFRange—is a JSON object defining the range of values, taken from the domain of the feature, to which the value of the instance's feature has to belong for the instance to be in the set of the instance concept:
  - oICFRSet—is a JSON string or an array defining a set of values from the domain of the feature (optional),
  - oICFRMin—is a JSON string or decimal containing the minimal value of the range (optional),
  - oICFRMax—is a JSON string or decimal containing the maximal value of the range (optional).

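Let $R = \text{oICFRSet}$, $m = \text{oICFRMin}$ and $M = \text{oICFRMax}$; moreover, $R$ is a subset of the domain of the feature. The set of values is defined as $D_{IC} = \{x \in R : m \le x \le M\}$. If $m$ and $M$ are not fixed, then $D_{IC} = R$.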

oICRelationshipConcepts—is a JSON array containing a set of elements, each of which is a JSON object indicating a relationship concept. An instance belonging to the instance concept should take part in a relationship belonging to that relationship concept. Each element is constructed as follows:

![Applsci 11 00740 i012]()

where:

oICRName—is a JSON string containing the name of an existing relationship concept (defined in Definition 8) to which a relationship involving the instance should belong,

oICRRole—is a JSON string containing the role that the instance should play in the relationship belonging to the relationship concept. Possible values: role1, role2.

oICRelationshipConcepts is optional.

oICActionConcepts—is a JSON array containing a finite set of oACNames identifying action concepts (defined in Definition 11). An instance belonging to a given instance concept must have actions belonging to all action concepts whose identifiers are contained in oICActionConcepts.

oICActionConcepts is optional.

oICInstanceConcepts—is a JSON array containing a finite set of oICNames identifying other instance concepts to which an instance has to belong in order to be in the given instance concept. The instance concepts indicated in this way narrow down the set of instances belonging to the given instance concept.

Instance concepts group instances that are similar in some way or belong to the same kind. An instance is in an instance concept when it is in the set ICM defined in Definition 18, which precisely determines whether an instance belongs to an instance concept. To distinguish concept names from instance names, all concept names start with the prefix c_.

For example:

The concept can also be narrowed down to another concept, for example:

5.4. Relationship Concepts

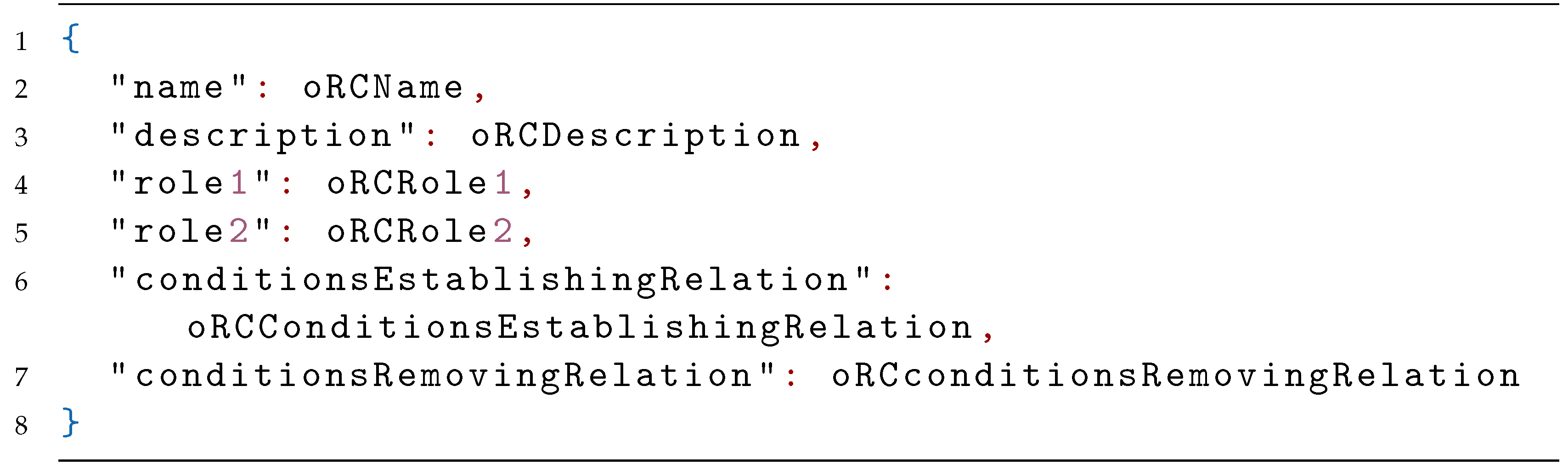

Definition 8. A relationship concept oRC is a JSON object defining a set of all relationships that have the same meaning. The relationship concept represents relationships between instances belonging to two instance concepts. The concept gives meaning to the relationship. It can be defined as follows:

![Applsci 11 00740 i015]()

The concept of a relationship includes:

oRCName—is a JSON string containing a name uniquely identifying the relationship concept,

oRCDescription—is a JSON string containing a description of the meaning of the relationship (optional),

oRCRole1—is an oICName identifying the first instance concept, to which the instance playing the first role in the relationship belongs,

oRCRole2—is an oICName identifying the second instance concept, to which the instance playing the second role in the relationship belongs,

oRCconditionsEstablishingRelation—is a JSON string containing a set of conditions that have to be fulfilled in order to build a relationship according to the relationship concept. The conditions should be written as source code in a programming language (optional),

oRCconditionsRemovingRelation—is a JSON string containing a set of conditions that have to be fulfilled to remove a relationship built according to the relationship concept. The conditions should be written as source code in a programming language (optional).

The relationship concept describes a one-directional relation (but it is not the relationship itself) between the first element (oRCRole1) and the second one (oRCRole2). The order of these two parameters of the relationship matters.

Examples of two relationship concepts c_liesOn and c_isMother:

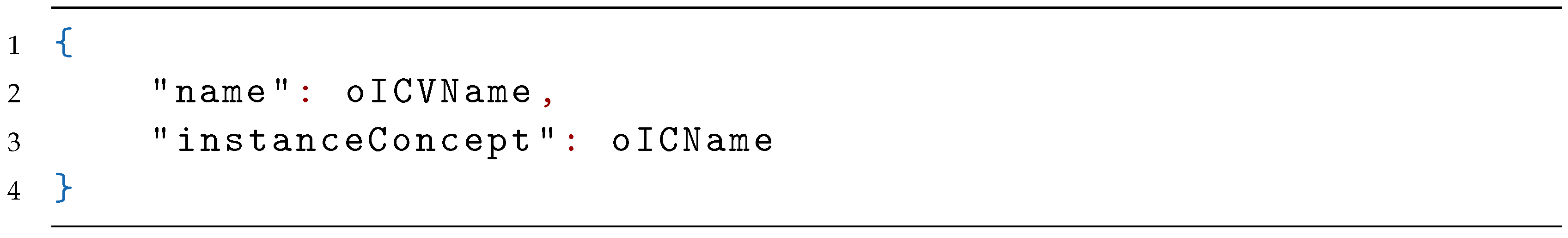

Definition 9. An instance concept variable oICV is a JSON object representing an exemplary instance (one that does not exist in the occurrences of the IK) about which nothing is known except its membership in an instance concept. It consists of the following elements:

oICVName—is a JSON string containing a name uniquely identifying the instance concept variable,

oICName—is the oICName identifying an existing instance concept.

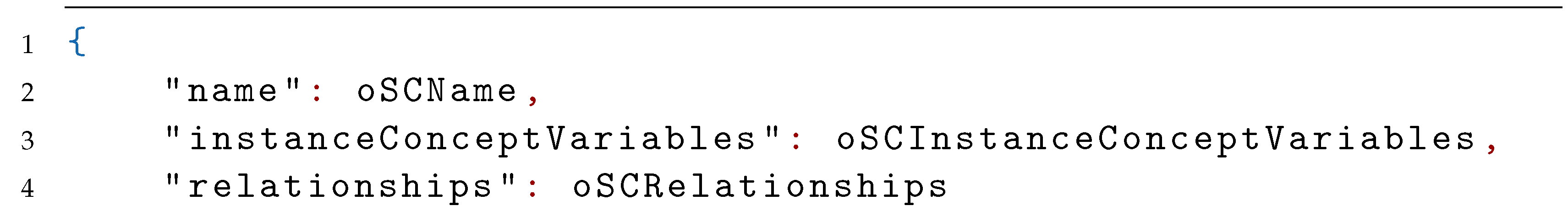

Definition 10. A state concept oSC is a JSON object describing a possible state of a fragment of the IK. It consists of the following elements:

oSCName—is a JSON string containing a name uniquely identifying the state concept (optional),

oSCInstanceConceptVariables—is a JSON array containing a finite set of instance concept variables oICV (optional),

oSCRelationships—is a JSON array containing a finite set of relationships oR (Definition 17) occurring between the instance concept variables indicated in oSCInstanceConceptVariables (optional).

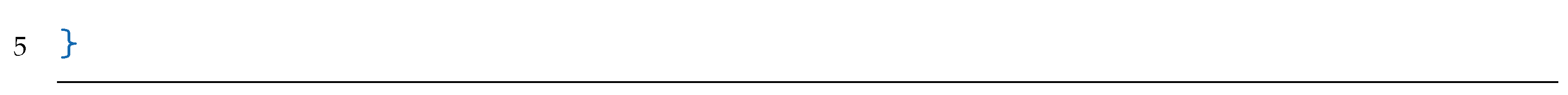

Example of a state concept:

5.5. Action Concepts

Definition 11. An action concept oAC is a JSON object defining a set of actions, each of which can transform a specified state of a fragment of the IK into another specified state of a fragment of the IK.

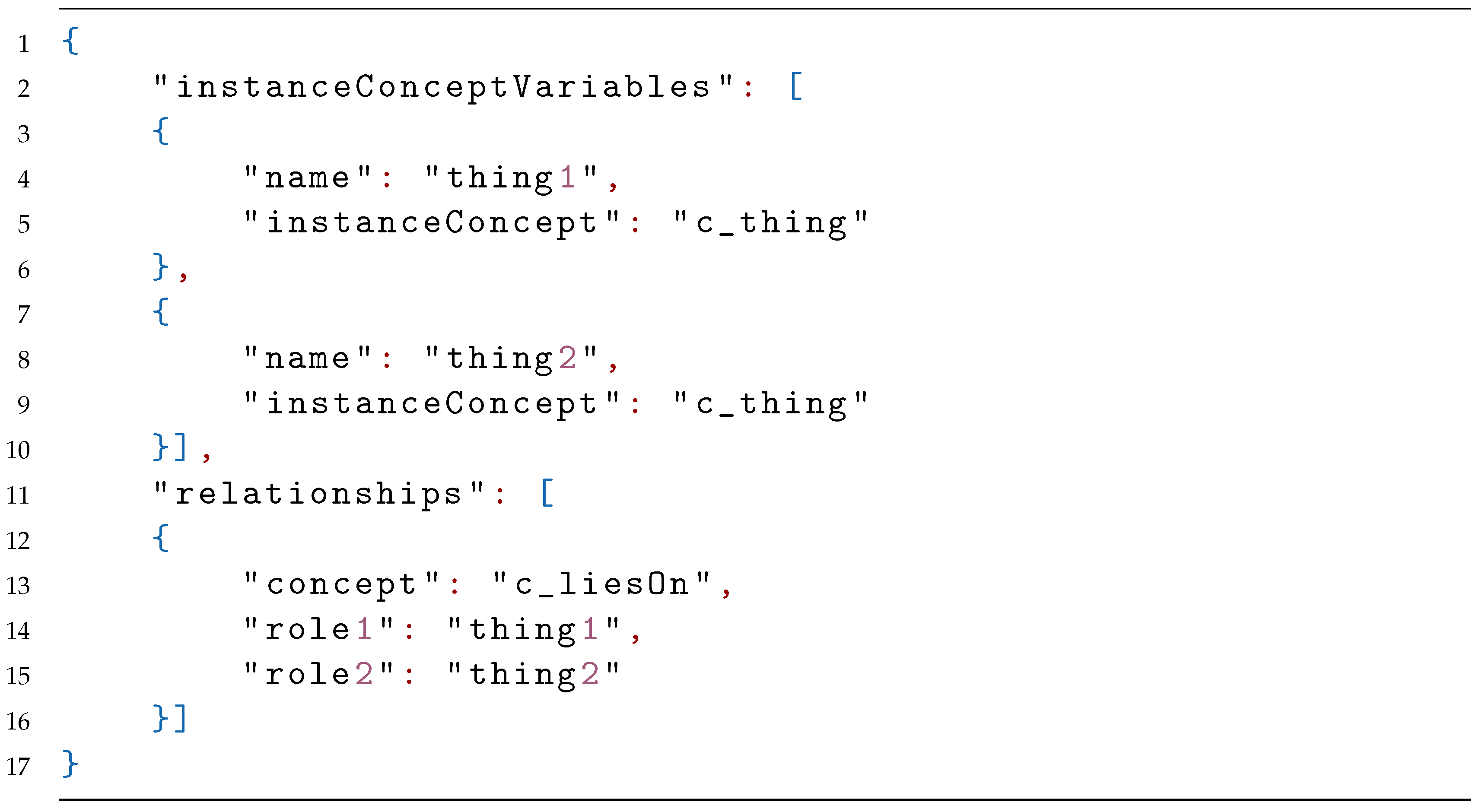

The action concept consists of:

oACName—is a JSON string containing a name that uniquely identifies the action concept,

oACinputStateConcept—the state concept oSC that holds before the action starts, describing the instances to be transformed by the action,

oACoutputStateConcept—the state concept oSC expected after the action is completed, describing the instances transformed by the action.

An action concept can be compared to an abstract method or an interface in programming languages such as C# or Java.
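The analogy with abstract methods can be made concrete with a Python sketch based on the standard abc module. The class names, the world dictionary and the tuple encoding of relationships are hypothetical; the sketch only shows that the action concept fixes the input and output states while each action supplies its own way of reaching them.

```python
from abc import ABC, abstractmethod


class ActionConcept(ABC):
    """Analogue of oAC: states what must hold before and after, not how."""

    @abstractmethod
    def input_state_holds(self, world: dict, *params: str) -> bool:
        """Counterpart of oACinputStateConcept."""

    @abstractmethod
    def output_state_holds(self, world: dict, *params: str) -> bool:
        """Counterpart of oACoutputStateConcept."""


class Action(ABC):
    """Analogue of oA: one concrete realization of an action concept."""

    def __init__(self, concept: ActionConcept):
        self.concept = concept

    @abstractmethod
    def perform(self, world: dict, *params: str) -> None:
        """How the instance carries out the action; the concept leaves this open."""

    def run(self, world: dict, *params: str) -> bool:
        """Check the input state, perform, then report whether the output state holds."""
        if not self.concept.input_state_holds(world, *params):
            raise ValueError("input state concept not satisfied")
        self.perform(world, *params)
        return self.concept.output_state_holds(world, *params)


class PutOn(ActionConcept):
    """Hypothetical c_putOn: two things in, the same two things in c_liesOn out."""

    def input_state_holds(self, world, top, bottom):
        return top in world["things"] and bottom in world["things"]

    def output_state_holds(self, world, top, bottom):
        return ("c_liesOn", top, bottom) in world["relationships"]


class MyselfPutOn(Action):
    """Hypothetical putOn action owned by the myself instance."""

    def perform(self, world, top, bottom):
        world["relationships"].add(("c_liesOn", top, bottom))


world = {"things": {"cake", "chocolateStar"}, "relationships": set()}
print(MyselfPutOn(PutOn()).run(world, "chocolateStar", "cake"))  # True
```

Because the output state is part of the concept rather than of the action, the same check can verify any other action that claims to realize c_putOn.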

Example of the action concept:

6. Occurrences in GEDL Language

In this section, the following elements of the GEDL language are defined: occurrences, the feature usage, the feature set usage, the action, the instance, the relationship, and the instance concept membership.

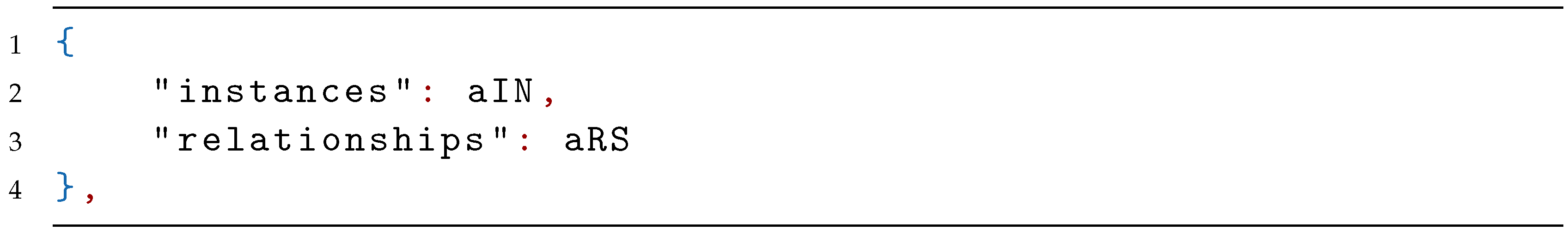

Definition 12. Occurrences oOC is a JSON object containing the finite sets of instances and relationships observed by the individual. Occurrences include:

aIN—is a JSON array containing a finite set of instances oI,

aRS—is a JSON array containing a finite set of relationships oR,

where oI is defined in Definition 16 and oR is defined in Definition 17.

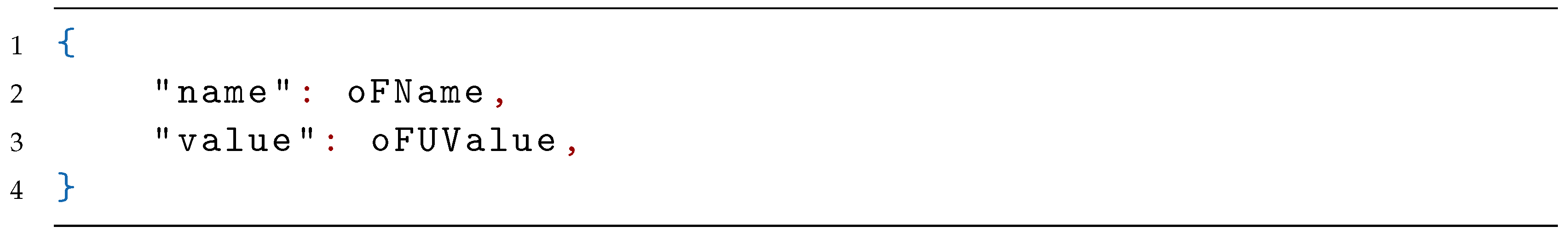

Definition 13. A feature usage oFU is a JSON object representing a value assigned to an existing feature of an instance oI. The feature usage is composed of the following elements:

![Applsci 11 00740 i023]()

where:

oFName—is a JSON string representing the name of a feature existing in the conceptual system oCS,

oFUValue—is a JSON value assigned to the feature, taken from the domain of that feature.

An example of the usage of three features is presented below:

![Applsci 11 00740 i024]()

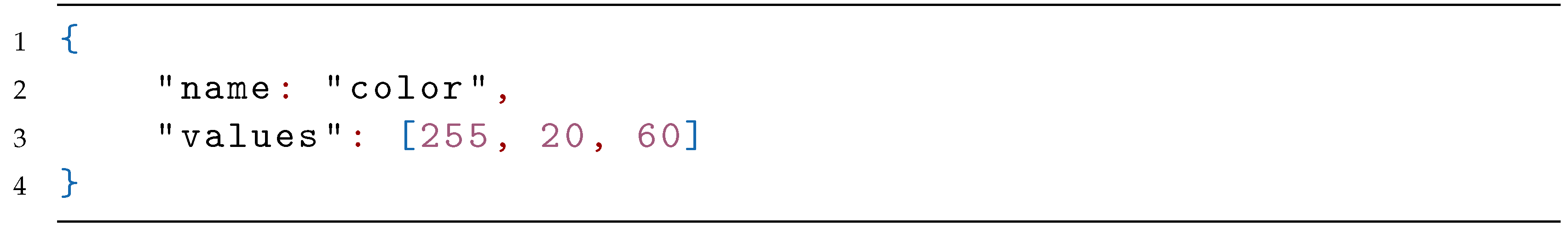

Definition 14. A feature set usage oFSU is a JSON object representing values assigned to the features of an existing feature set oFS. It is:

![Applsci 11 00740 i025]()

where:

oFSName—is a JSON string containing the name of a feature set existing in the conceptual system oCS,

aFSUvalues—is a JSON array containing an ordered set of values assigned to the features in the feature set.

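All of the values should belong to the domains of the corresponding features, and the number of values must equal the number of features in the feature set, $|\text{aFSUvalues}| = |\text{oFNames}|$, where the function $|\cdot|$ returns the number of elements of a set.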
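The two conditions of Definition 14 (matching cardinality and values in the feature domains) can be checked as follows. In this Python sketch, domain membership is abstracted as one predicate per feature name, and the feature set "size" with the features width and height is a hypothetical example, not part of the paper.

```python
from typing import Callable, Dict, List


def valid_feature_set_usage(
    usage: dict,
    feature_sets: Dict[str, List[str]],
    domains: Dict[str, Callable[[object], bool]],
) -> bool:
    """Check an oFSU against its oFS: same number of values, each value in its domain."""
    names = feature_sets.get(usage["oFSName"])
    if names is None:
        return False  # the feature set has to exist in the conceptual system
    values = usage["aFSUvalues"]
    if len(values) != len(names):
        return False  # |aFSUvalues| must equal |oFNames|
    # Both collections are ordered, so values are matched to features by position.
    return all(domains[name](value) for name, value in zip(names, values))


def non_negative(value) -> bool:
    """Hypothetical domain predicate for a non-negative numeric feature."""
    return isinstance(value, (int, float)) and value >= 0


# Hypothetical feature set "size" with two features.
feature_sets = {"size": ["width", "height"]}
domains = {"width": non_negative, "height": non_negative}

print(valid_feature_set_usage({"oFSName": "size", "aFSUvalues": [3, 4]}, feature_sets, domains))
# prints: True
```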

An example of the feature set usage is presented below:

![Applsci 11 00740 i026]()

Definition 15. An action oA is a JSON object containing a description of an action that can potentially be performed by the instance to which it is assigned and that results in a change of the state of a fragment of the IK. An instance uses its own capabilities to carry out an action; it can also use other instances and their actions. The action consists of:

![Applsci 11 00740 i027]()

oAName—is a JSON string containing a name uniquely identifying the action,

oACName—the name of an existing action concept that precisely defines the transformation of state concepts performed by the action,

oAParameters—is a JSON array containing instances, values, relationships and any information necessary for the action accomplishment. The parameters can contain, for example, instances that are necessary tools or instances changed while taking the action (optional).

oAInitialConditions—is a JSON array of strings containing source code, written in a programming language, that expresses the initial conditions which must be fulfilled to perform the action (optional). The conditions must relate to the instances and to the environment in which the action operates. The conditions include:

- a state concept of a fragment of the IK that has to be attained to start the action,
- ranges of the feature values for the instances (other than those already indicated in the instance concepts),
- relationships to which the instances must or must not belong.

oASuccessProbability—is a JSON number containing the probability of success. It determines how likely it is that the action will be carried out correctly in the environment (optional),

$\text{oASuccessProbability} \in \mathbb{R}$, $0 \le \text{oASuccessProbability} \le 1$.

Actions are assigned only to those instances that are able to perform actions autonomously. For example, a chopping knife will not have a slice action because it cannot carry out operations autonomously. However, a bread-slicing machine that cuts bread automatically will have a slicing action, provided that, once started, it can decide on its own when to end the action.

Actions are atomic, and no sub-actions are specified for them. Complex actions are represented as problems together with their solutions.

Example of an action:

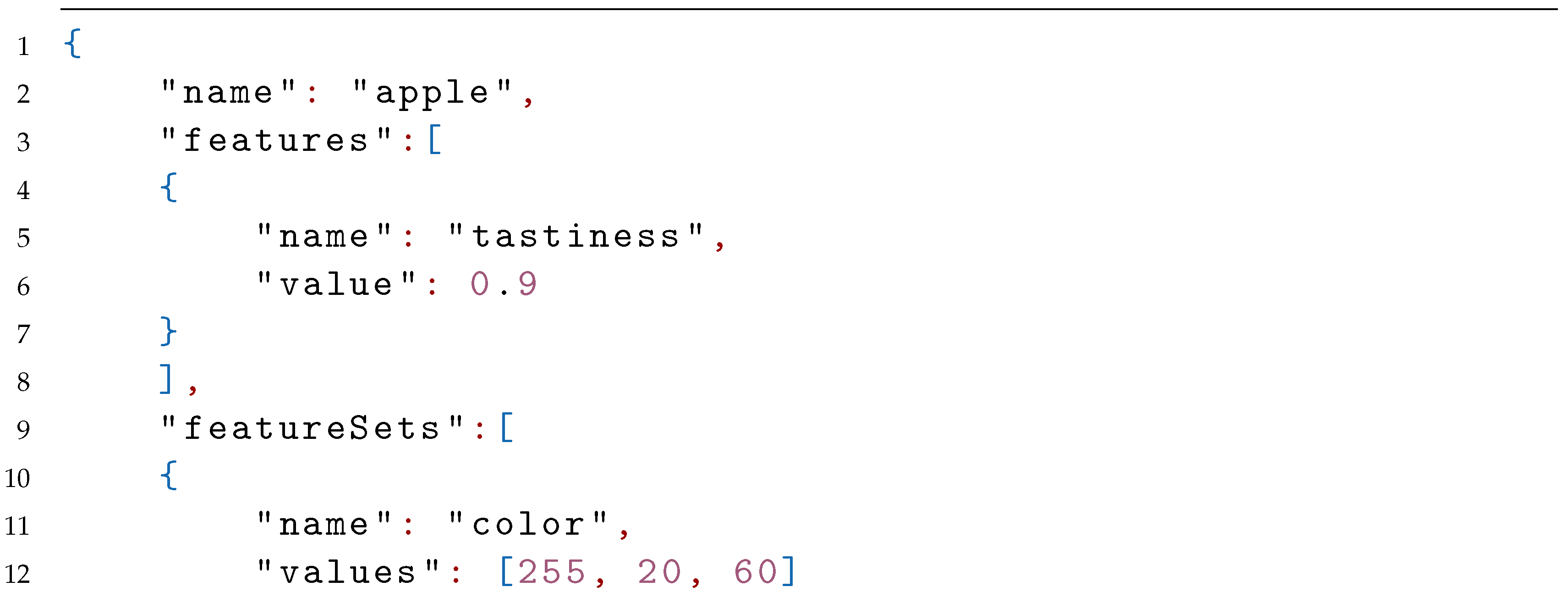

Definition 16. An instance oI is the knowledge about an object observed and distinguished in the environment by the individual. The instance consists of the following elements:

oIName—a JSON string containing the name that uniquely identifies the instance oI (optional),

oIFFeatures—is a JSON array containing a finite set of feature usages oFU (optional),

oIFFeatureSets—is a JSON array containing a finite set of feature set usages oFSU (optional),

oIActions—is a JSON array containing a finite set of actions oA distinguished for the instance by the individual (optional).

Example of an instance apple:

As mentioned in Definition 2, the individual is also an instance and can have all of the elements of an instance. The instance representing the individual is named “myself”.

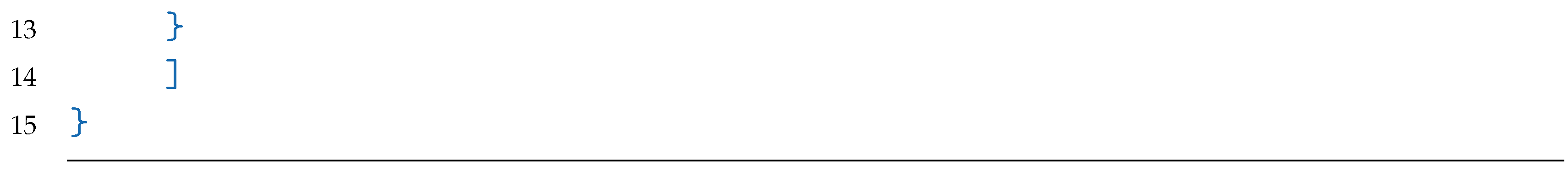

Definition 17. A relationship oR is a JSON object representing a connection distinguished by the individual between two instances oI. The relationship includes:

![Applsci 11 00740 i031]()

where:

oRCName—is a JSON string containing the name of an existing relationship concept oRC, which defines the relationship,

oRRole1—is a JSON string containing the oIName of the instance that is the first parameter of this relationship. The instance has to belong to the first instance concept, whose name is given by oRCRole1 in the relationship concept, i.e., it has to be in the membership set ICM of that concept, defined in Definition 18,

oRRole2—is a JSON string containing the oIName of the instance that is the second parameter of this relationship. The instance has to belong to the second instance concept, whose name is given by oRCRole2 in the relationship concept.
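The role constraints of Definition 17 can be sketched in Python as below. The membership sets ICM of Definition 18 are assumed to be precomputed and passed in as a dict from concept name to instance names, and the role concepts assigned to c_liesOn here are hypothetical. Swapping the two instances makes the check fail, which reflects that the order of the parameters matters.

```python
from typing import Dict, Set


def valid_relationship(rel: dict, relationship_concepts: Dict[str, dict],
                       icm: Dict[str, Set[str]]) -> bool:
    """Check oRRole1/oRRole2 against the instance concepts named in the relationship concept."""
    concept = relationship_concepts.get(rel["oRCName"])
    if concept is None:
        return False  # the relationship concept has to exist
    first_ok = rel["oRRole1"] in icm.get(concept["oRCRole1"], set())
    second_ok = rel["oRRole2"] in icm.get(concept["oRCRole2"], set())
    return first_ok and second_ok


# Hypothetical role concepts for c_liesOn and precomputed membership sets.
relationship_concepts = {"c_liesOn": {"oRCRole1": "c_sweetDecoration", "oRCRole2": "c_cake"}}
icm = {"c_sweetDecoration": {"chocolateStar", "gumdropSnake", "raisins"}, "c_cake": {"cake"}}

star_on_cake = {"oRCName": "c_liesOn", "oRRole1": "chocolateStar", "oRRole2": "cake"}
cake_on_star = {"oRCName": "c_liesOn", "oRRole1": "cake", "oRRole2": "chocolateStar"}
print(valid_relationship(star_on_cake, relationship_concepts, icm))  # True
print(valid_relationship(cake_on_star, relationship_concepts, icm))  # False
```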

For example:

![Applsci 11 00740 i032]()

Definition 18. Let oIC be an instance concept from aIC. The instance concept membership set ICM of the instance concept oIC is defined as follows:

The defined set makes it possible to determine whether a particular instance belongs to the instance concept. The definition checks several conditions that an instance oI has to fulfil to belong to the set ICM. First, the values of the features and feature sets of oI are checked to determine whether they lie within the ranges of the features and feature sets of the instance concept. Then, it is checked whether oI has relationships belonging to the appropriate relationship concepts. Next, the actions of oI are tested. Finally, we check whether oI is in the sets of the other listed instance concepts.
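The four membership checks just described can be sketched in Python. This is only an approximation of the membership set under several assumptions: dict keys mirror the element names of Definitions 7, 13 and 16, only an array-valued oICFRSet is handled, feature sets are ignored, and references between concepts are assumed to be acyclic. The concept and feature names in the example (sweetness, weight, state, c_agent, c_onTop) are hypothetical.

```python
def in_range(value, rng: dict) -> bool:
    """Check a value against an oICFRange; every part of the range is optional."""
    rset = rng.get("oICFRSet")
    if isinstance(rset, list) and value not in rset:
        return False
    lower, upper = rng.get("oICFRMin"), rng.get("oICFRMax")
    if lower is not None and value < lower:
        return False
    if upper is not None and value > upper:
        return False
    return True


def is_member(instance: dict, concept_name: str, concepts: dict, relationships: list) -> bool:
    """Approximate whether an instance is in the set ICM of an instance concept."""
    concept = concepts[concept_name]
    values = {fu["oFName"]: fu["oFUValue"] for fu in instance.get("oIFFeatures", [])}
    # 1. Every narrowed feature must be present and lie within its range.
    for icf in concept.get("oICFeatures", []):
        name = icf["oFName"]
        if name not in values or not in_range(values[name], icf.get("oICFRange", {})):
            return False
    # 2. The instance must play the given role in a relationship of each listed concept.
    for icr in concept.get("oICRelationshipConcepts", []):
        role_key = "oRRole1" if icr["oICRRole"] == "role1" else "oRRole2"
        if not any(r["oRCName"] == icr["oICRName"] and r[role_key] == instance.get("oIName")
                   for r in relationships):
            return False
    # 3. The instance must have an action of every listed action concept.
    own_concepts = {a["oACName"] for a in instance.get("oIActions", [])}
    if not set(concept.get("oICActionConcepts", [])) <= own_concepts:
        return False
    # 4. The instance must also be in every listed narrowing concept.
    return all(is_member(instance, other, concepts, relationships)
               for other in concept.get("oICInstanceConcepts", []))


# Hypothetical concepts and instances; feature names and values are made up.
concepts = {
    "c_sweets": {"oICFeatures": [{"oFName": "sweetness", "oICFRange": {"oICFRMin": 5}}]},
    "c_thing": {"oICFeatures": [{"oFName": "weight", "oICFRange": {"oICFRMin": 0}}]},
    "c_solidSweets": {
        "oICInstanceConcepts": ["c_sweets", "c_thing"],
        "oICFeatures": [{"oFName": "state", "oICFRange": {"oICFRSet": ["solid"]}}],
    },
    "c_agent": {"oICActionConcepts": ["c_putOn"]},
    "c_onTop": {"oICRelationshipConcepts": [{"oICRName": "c_liesOn", "oICRRole": "role1"}]},
}
star = {"oIName": "chocolateStar", "oIFFeatures": [
    {"oFName": "sweetness", "oFUValue": 8},
    {"oFName": "weight", "oFUValue": 10},
    {"oFName": "state", "oFUValue": "solid"},
]}
hot_chocolate = {"oIName": "hotChocolate", "oIFFeatures": [
    {"oFName": "sweetness", "oFUValue": 7},
    {"oFName": "weight", "oFUValue": 250},
    {"oFName": "state", "oFUValue": "liquid"},
]}
myself = {"oIName": "myself", "oIActions": [{"oAName": "putOn", "oACName": "c_putOn"}]}

print(is_member(star, "c_solidSweets", concepts, []))           # True
print(is_member(hot_chocolate, "c_solidSweets", concepts, []))  # False
```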

7. Experience in GEDL Language

The experiences in the IK contain problems and their solutions. A solution to a problem is composed of actions performed by the individual or by other instances used as tools.

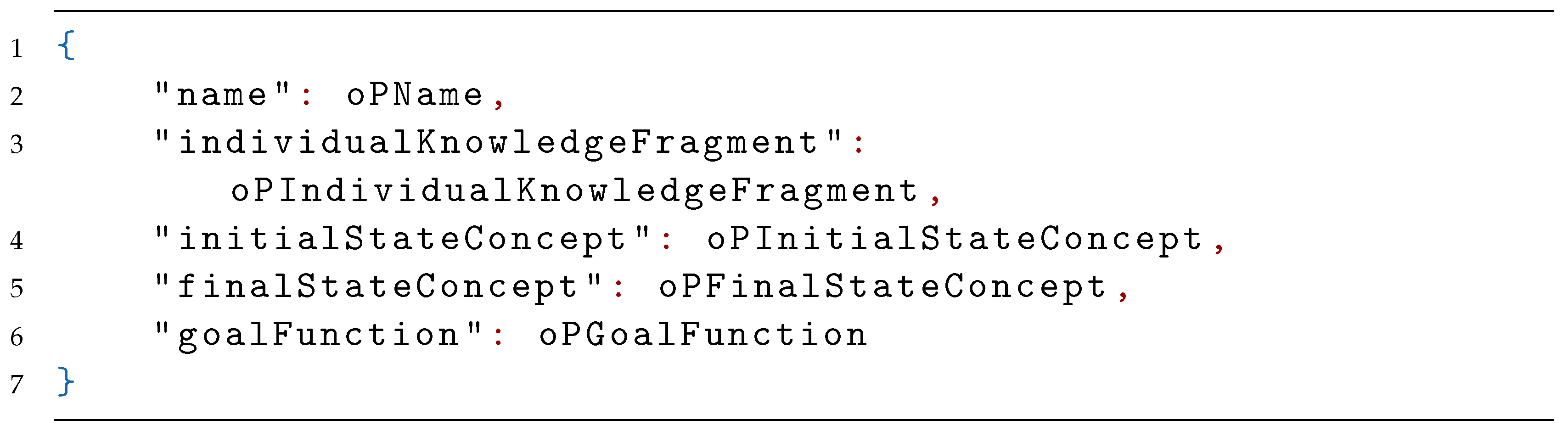

Definition 19. A problem oP is a JSON object containing a description of a complex task that the individual should perform. The problem may be commissioned by an entity that is not represented in the IK. The problem must be formulated using terms understandable to the individual, i.e., terms belonging to its conceptual system oCS. The problem consists of:

![Applsci 11 00740 i033]()

oPName—is a JSON string containing a name uniquely identifying the problem (optional),

oPIndividualKnowledgeFragment—is a JSON array containing the names of the instances from the occurrences oOC that can be used to solve the problem,

oPInitialStateConcept—is a state concept oSC before solving the problem,

oPFinalStateConcept—is a state concept oSC after solving the problem,

oPGoalFunction—a goal function oGF (optional).


Section 8 contains an example of a problem and a solution to the problem.

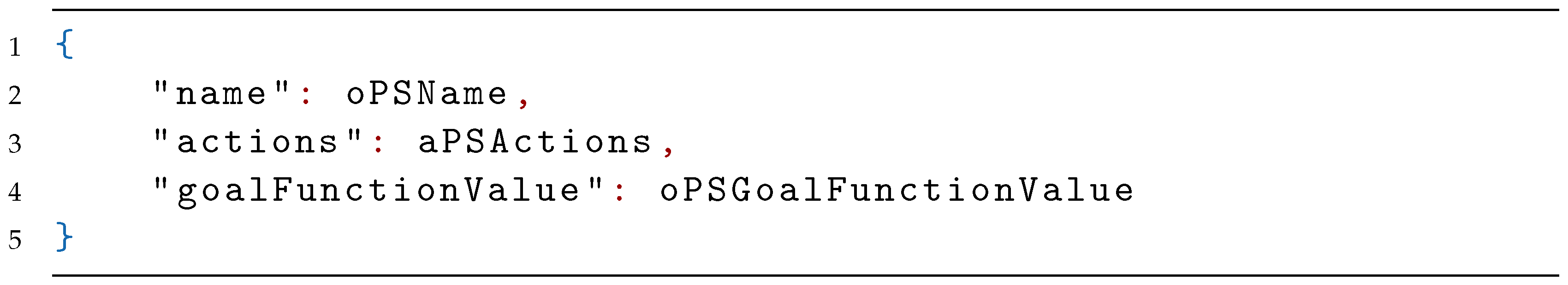

Definition 20. A problem solution oPS is a JSON object containing a solution to a given problem. The solution contains a list of actions to be performed in a specific order or concurrently, together with the instances used to enable the transition from the initial state concept to the final state concept.

The problem solution oPS is constructed in the following way:

oPSName—is a JSON string containing a name uniquely identifying the problem solution,

oPSActions—is a JSON array of strings containing source code, written in a programming language, that invokes the actions oA of the individual or of other instances indicated in oPIndividualKnowledgeFragment. The values of parameters, or the objects passed as parameters, should be supplied with the listed actions. Exemplary code written in an object-oriented language may look as follows:

goalFunctionValue—is a JSON number containing the value of the goal function calculated for the given solution.

A problem can have many solutions. The implementation of a solution can be described in a specific programming language; it may consist of actions of the myself instance or of actions of other instances used in the solution.

Definition 21. A goal function oGF takes the form of a JSON string containing source code, written in a programming language, of a method that calculates a value used to evaluate solutions to the problem. The larger this value is, the better the solution is rated.

Definition 22. An experience oE is a JSON object containing problems and solutions developed by the individual. The experience is defined as follows:

![Applsci 11 00740 i036]()

where:

oEProblem—a problem oP,

oEProblemSolutions—is a JSON array containing a finite set of different problem solutions oPS,

oEBestSolution—is the oPSName of the best solution to the problem, i.e., of the solution for which the goal function attains the highest value, $\arg\max_{oPS \in \text{oEProblemSolutions}} \text{goalFunctionValue}(oPS)$.
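Selecting oEBestSolution amounts to an argmax over the goal function values stored in the solutions. A minimal Python sketch, assuming dict keys named after the elements of Definitions 20 and 22; the solution names and values are made up. When several solutions tie, max returns the first of them, and the consistency check accepts any of the tied names.

```python
def best_solution(experience: dict) -> str:
    """Return the oPSName of the solution with the highest goalFunctionValue."""
    solutions = experience["oEProblemSolutions"]
    if not solutions:
        raise ValueError("the experience contains no solutions")
    return max(solutions, key=lambda ps: ps["goalFunctionValue"])["oPSName"]


def best_solution_is_consistent(experience: dict) -> bool:
    """True if oEBestSolution names a solution that attains the maximal value."""
    solutions = experience["oEProblemSolutions"]
    top = max(ps["goalFunctionValue"] for ps in solutions)
    return any(ps["oPSName"] == experience["oEBestSolution"] and ps["goalFunctionValue"] == top
               for ps in solutions)


# Made-up solutions and values, for illustration only.
experience = {
    "oEProblemSolutions": [
        {"oPSName": "s1", "goalFunctionValue": 0.7},
        {"oPSName": "s2", "goalFunctionValue": 0.9},
        {"oPSName": "s3", "goalFunctionValue": 0.4},
    ],
    "oEBestSolution": "s2",
}
print(best_solution(experience), best_solution_is_consistent(experience))  # s2 True
```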

8. Exemplary Individual Knowledge

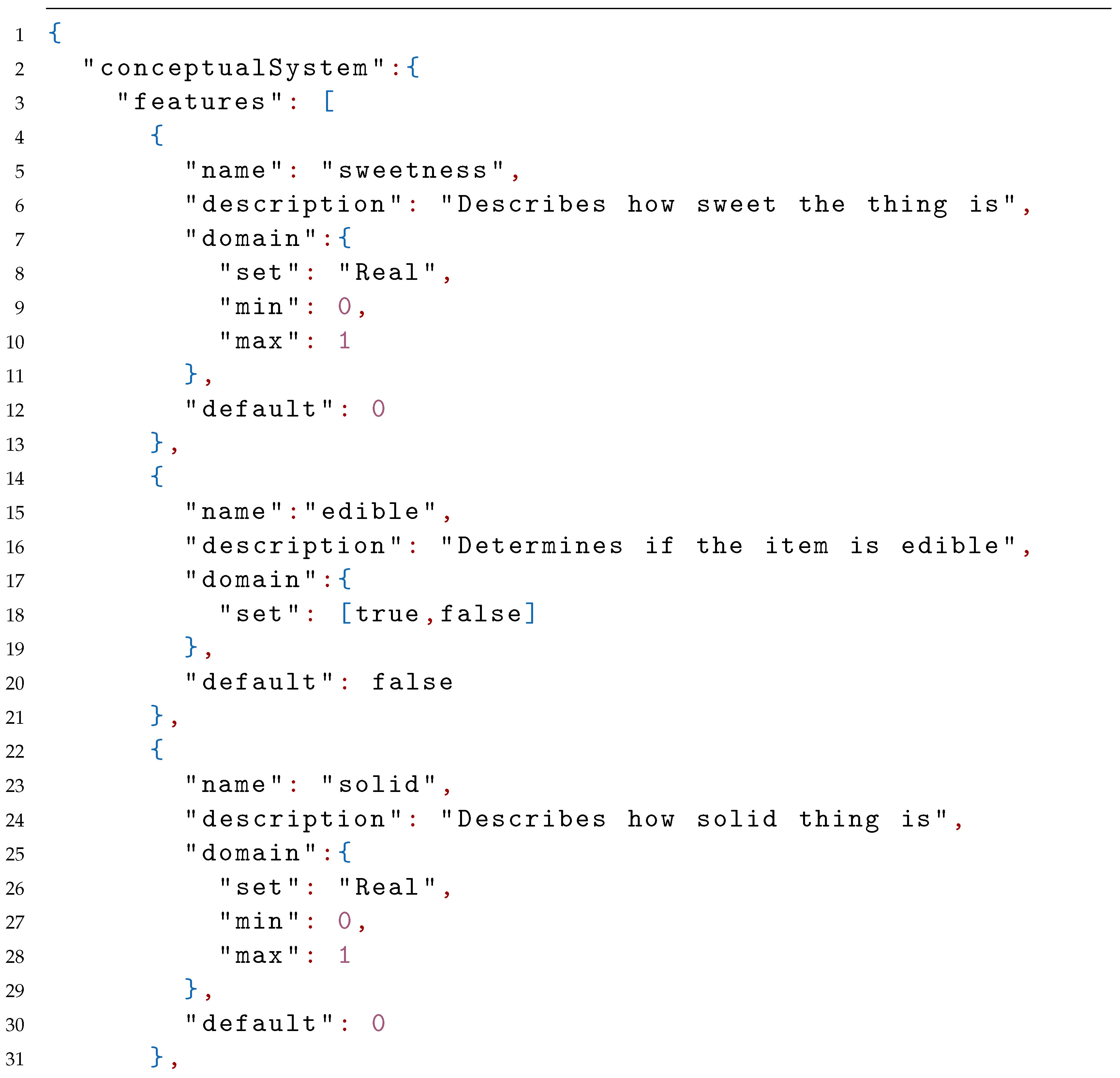

This section presents exemplary content of the IK. It is divided into three parts: conceptualSystem (oCS), occurrences (oOC) and experience (aE).

8.1. The Conceptual System

The conceptual system oCS is the first part of the IK. The listing below presents the part of the IK containing the oCS. First, feature definitions (their possible ranges and default values) and feature sets are introduced. Next, the definitions of concepts (instance concepts, relationship concepts and action concepts) are presented, based on the previously defined features and feature sets. These concepts form the part of the IK that helps the individual understand the surrounding environment and how to influence it.

![Applsci 11 00740 i037a]()

![Applsci 11 00740 i037b]()

![Applsci 11 00740 i037c]()

![Applsci 11 00740 i037d]()

![Applsci 11 00740 i037e]()

The conceptual system oCS contains instance concepts (c_sweets, c_thing, c_solidSweets, and c_sweetDecoration). These concepts have features with restrictions on the range of their values. The individual classifies the observed instances based on their features: if a given instance has the required features and their values lie within the specified ranges, then, according to the individual knowledge, the instance belongs to the concept. The c_solidSweets concept contains the c_sweets and c_thing concepts and therefore contains all their features with the appropriate ranges of values. If a concept contains features from two other concepts that restrict the same feature, the restriction of that feature in the resulting concept is the intersection of the restrictions of the two concepts.
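The intersection rule can be sketched for the range objects of Definition 7: the stricter minimum and maximum are kept, and two dictionary sets are intersected. This Python sketch handles only comparable bounds and an array-valued oICFRSet, and the example ranges are hypothetical. An empty result signals that the two concepts cannot be satisfied together; the emptiness test is deliberately simple and does not compare set values with the bounds.

```python
def intersect_ranges(a: dict, b: dict) -> dict:
    """Combine two oICFRange objects that restrict the same feature."""
    result = {}
    mins = [r["oICFRMin"] for r in (a, b) if r.get("oICFRMin") is not None]
    if mins:
        result["oICFRMin"] = max(mins)  # the stricter lower bound wins
    maxs = [r["oICFRMax"] for r in (a, b) if r.get("oICFRMax") is not None]
    if maxs:
        result["oICFRMax"] = min(maxs)  # the stricter upper bound wins
    sets = [r["oICFRSet"] for r in (a, b) if isinstance(r.get("oICFRSet"), list)]
    if len(sets) == 2:
        result["oICFRSet"] = [v for v in sets[0] if v in sets[1]]  # order of the first set
    elif sets:
        result["oICFRSet"] = list(sets[0])
    return result


def is_empty(rng: dict) -> bool:
    """True if no value can satisfy the range."""
    lower, upper = rng.get("oICFRMin"), rng.get("oICFRMax")
    if lower is not None and upper is not None and lower > upper:
        return True
    return rng.get("oICFRSet") == []


print(intersect_ranges({"oICFRMin": 2, "oICFRMax": 8}, {"oICFRMin": 5}))
# prints: {'oICFRMin': 5, 'oICFRMax': 8}
```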

The next part of the listing describes the relationship concept c_liesOn. This concept defines the relationship between two instances. It begins with a JSON string describing the relationship in natural language; then, the two instance concepts to which the instances must belong are given.

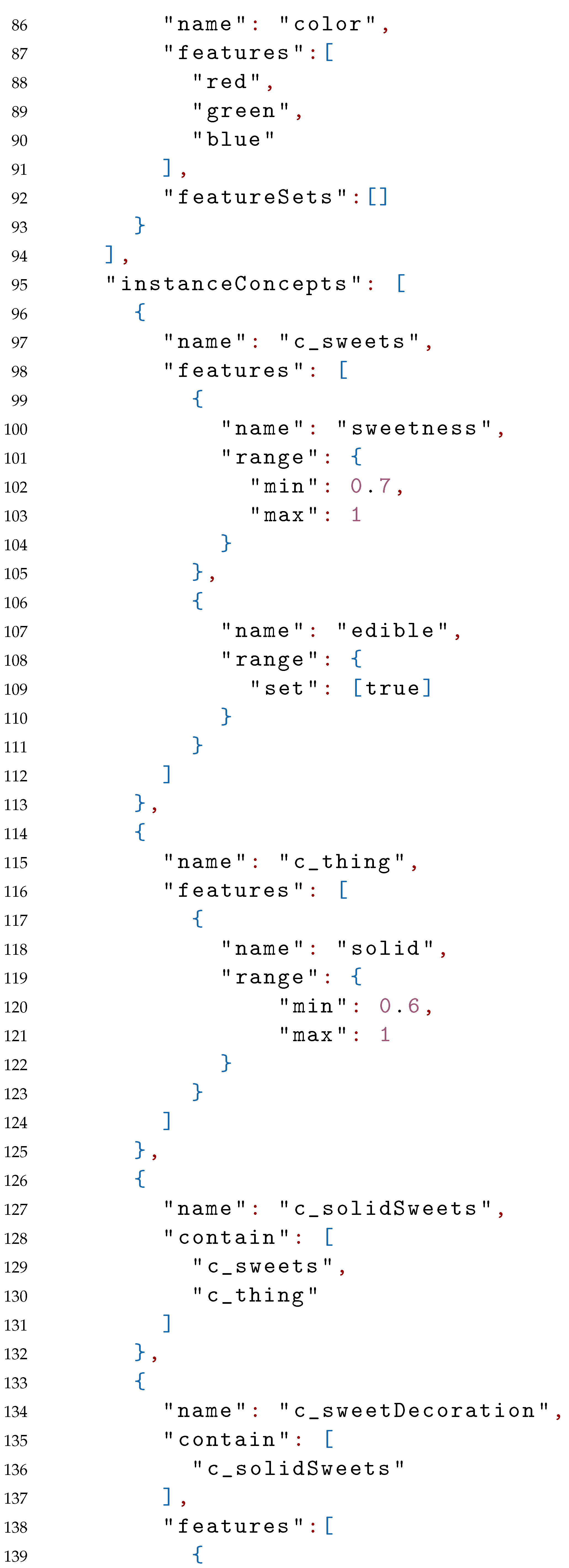

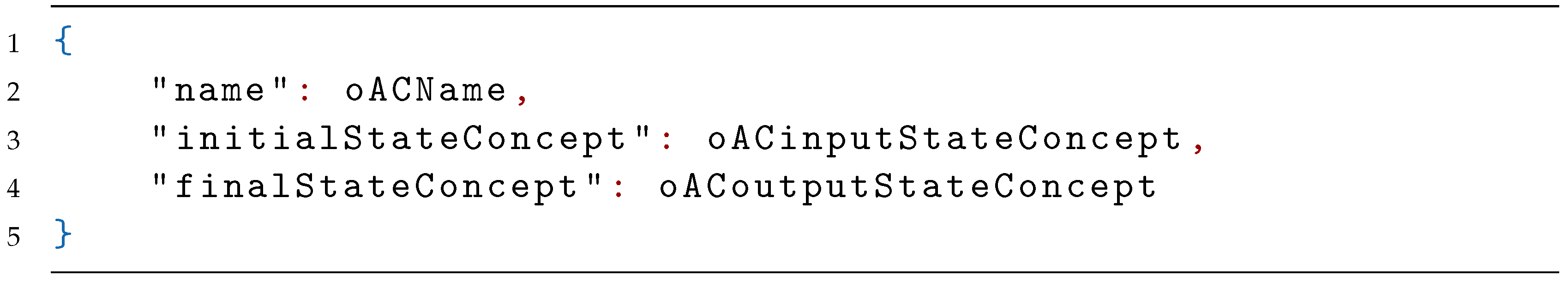

The action concepts (c_putOn and c_boilWater) are placed further in the individual's conceptual system oCS. Note that their structure contains no description of how they work. At the input (initialStateConcept), there is a description of the part of the environment found before the action is carried out. At the output (finalStateConcept), there is a description of the same part of the environment with all the changes made by the action. Knowing the result that should be obtained allows the individual to verify whether the action has been carried out correctly and to take appropriate steps in case of failure. The initialStateConcept part lists the instances (and the instance concepts to which they belong) and their relationships. The finalStateConcept part consists of those concepts (instance concepts or relationship concepts) listed in initialStateConcept that are not removed by the action, together with the concepts that arise as a result of the action. For example, the action concept c_putOn needs two instances belonging to the c_thing concept as input. After the action is completed, these two instances should still exist but should be in the c_liesOn relationship.
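Comparing the input and output state concepts is enough to see what an action concept creates or removes. The Python sketch below assumes that relationships in a state concept are dicts with the keys oRCName, oRRole1 and oRRole2 (holding instance concept variable names) and that variables carry an oICVName; the c_putOn state concepts and the variable names are hypothetical reconstructions, not the paper's listing.

```python
def state_changes(input_sc: dict, output_sc: dict) -> dict:
    """Relationships and variables that an action concept creates or removes."""

    def relationships(sc: dict) -> set:
        return {(r["oRCName"], r["oRRole1"], r["oRRole2"]) for r in sc.get("oSCRelationships", [])}

    def variables(sc: dict) -> set:
        return {v["oICVName"] for v in sc.get("oSCInstanceConceptVariables", [])}

    return {
        "created_relationships": relationships(output_sc) - relationships(input_sc),
        "removed_relationships": relationships(input_sc) - relationships(output_sc),
        "created_variables": variables(output_sc) - variables(input_sc),
        "removed_variables": variables(input_sc) - variables(output_sc),
    }


# Hypothetical state concepts for c_putOn: two things before, the same two in c_liesOn after.
things = [
    {"oICVName": "thing1", "oICName": "c_thing"},
    {"oICVName": "thing2", "oICName": "c_thing"},
]
put_on_input = {"oSCInstanceConceptVariables": things, "oSCRelationships": []}
put_on_output = {
    "oSCInstanceConceptVariables": things,
    "oSCRelationships": [{"oRCName": "c_liesOn", "oRRole1": "thing1", "oRRole2": "thing2"}],
}
print(state_changes(put_on_input, put_on_output)["created_relationships"])
# prints: {('c_liesOn', 'thing1', 'thing2')}
```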

8.2. The Occurrences

The next part of the IK includes occurrences oOC of instances (chocolateStar, fish, cake, hotChocolate, gumdropSnake, raisins) and their relationships (currently an empty set). These instances represent objects recognized by the individual in the surrounding environment. Instances have specific features with specific values and may have actions that can be performed by these instances. Many actions may implement the same action concept from the conceptual system oCS, but depending on the instance that implements it, additional assumptions (initialConditions) or parameters may be needed. One of the instances is myself, which represents the individual in which the current IK is located. The putOn action described in the myself instance can be used by the individual to perform the action by itself.

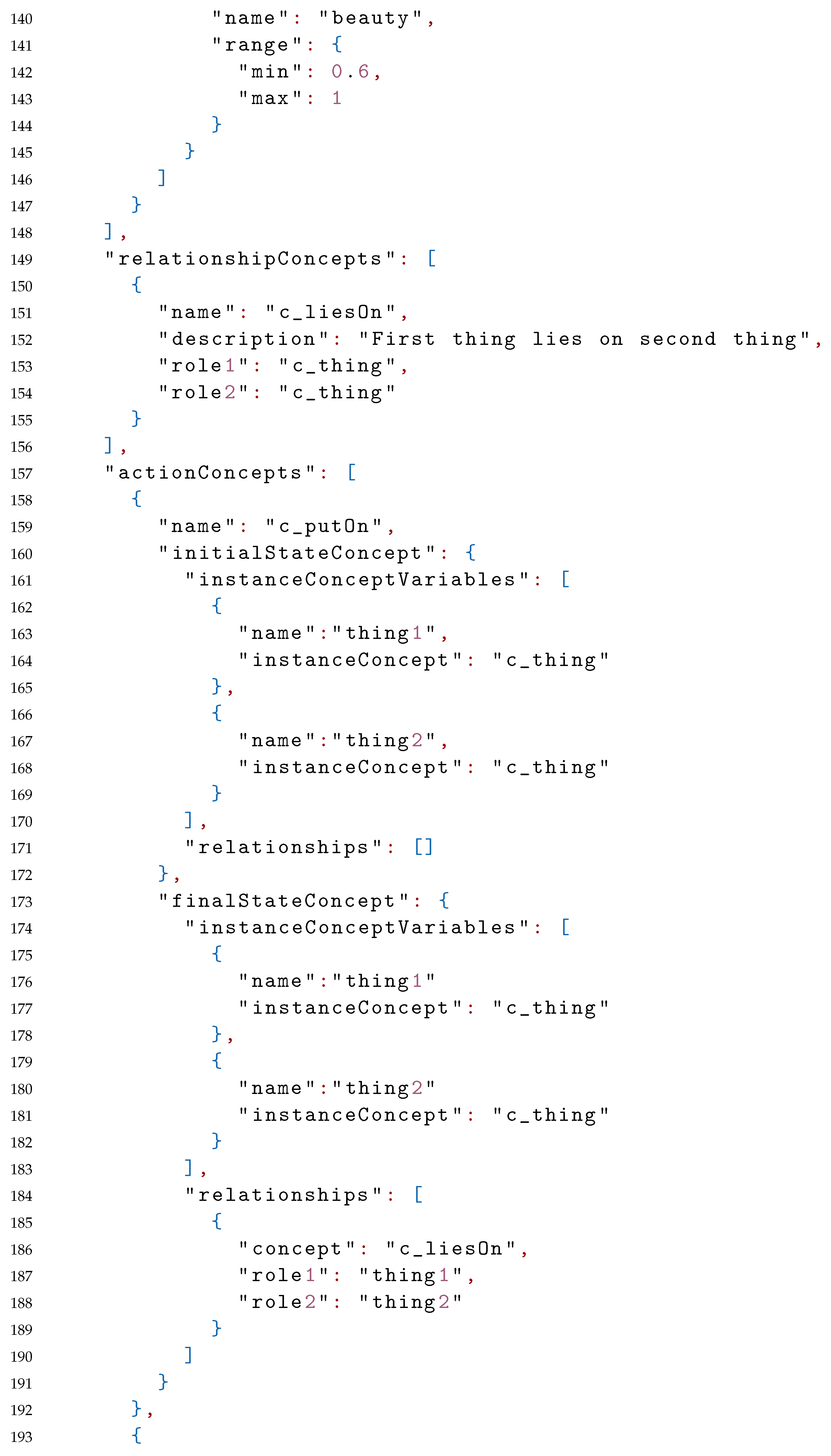

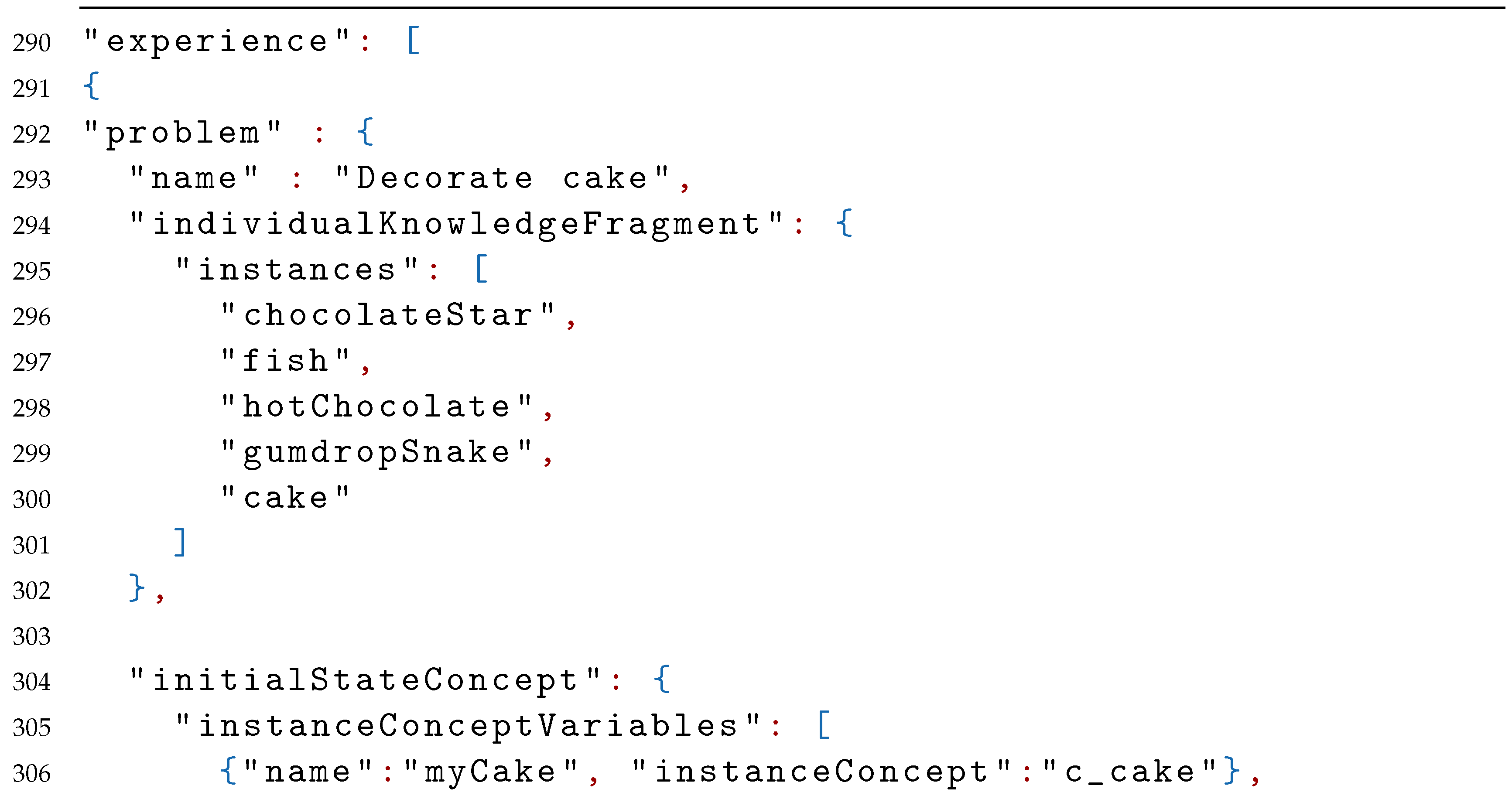

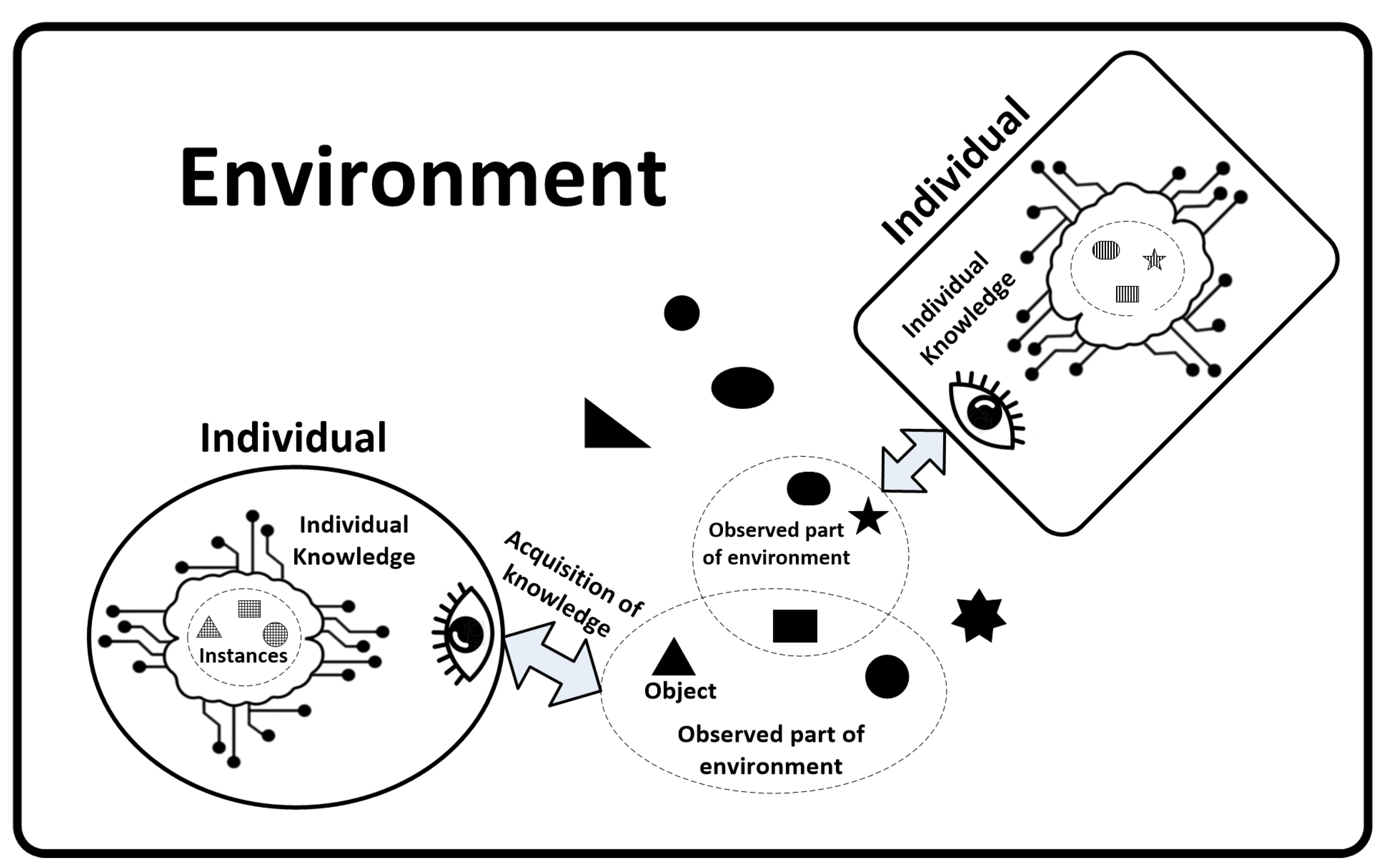

8.3. The Experience

The listing below presents the part of the IK representing the experience aE. It consists of problems to be solved by the individual and their solutions. To initiate the search for solutions, the problem has to be defined: the part of the environment that can be used (individualKnowledgeFragment) and the transformation to be achieved (from initialStateConcept to finalStateConcept) must be indicated. To obtain the optimal solution, the goal function should also be provided.

![Applsci 11 00740 i039a]()

![Applsci 11 00740 i039b]()

![Applsci 11 00740 i039c]()

Our objective is to have two sweet decorations on the cake; in other words, two sweet decorations should be in the “lies on” relationship with the cake. Therefore, the individual analyses all action concepts that can lead to the creation of such relationships; in this case, c_putOn is the only one. Then, the individual checks all actions in the system that belong to this action concept. The only action that fulfils this condition belongs to the myself instance, so the individual must carry out this action itself. Next, it analyses the prerequisites needed to perform this action. In this case, the condition is the existence of three instances: one belonging to the c_cake concept and two belonging to the c_sweetDecoration concept. In the IK, three instances belong to the c_sweetDecoration concept: gumdropSnake, chocolateStar, and raisins, and they can be used in potential solutions. The problem has a goal function (goalFunction) that helps to determine which of the solutions accomplishes the given task most effectively. Although JSON does not allow a string to span multiple lines, the goalFunction is split over several lines in the listing for readability. In the presented example, the goal function rates solutions based on the beauty and sweetness of the chosen decorations. The algorithm returns three solutions with their assessments. As can be seen, the best solution is to decorate the cake with the chocolate star and the gumdrop snake by performing the putOn action (of the c_putOn concept) twice.
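The search described above can be sketched as a small brute-force planner in Python. It is not the authors' algorithm: it assumes that the membership set of c_sweetDecoration is already known, ignores initial conditions, and encodes each step as a call string. The decoration scores and the goal function are made up for illustration, so the ranking they produce says nothing about the values in the paper's listing.

```python
from itertools import combinations
from typing import Callable, Dict, List, Sequence


def creating_concepts(action_concepts: Dict[str, dict], rel_concept: str) -> List[str]:
    """Action concepts whose output state has more rel_concept relationships than the input."""

    def count(sc: dict) -> int:
        return sum(r["oRCName"] == rel_concept for r in sc.get("oSCRelationships", []))

    return [name for name, ac in action_concepts.items()
            if count(ac["oACoutputStateConcept"]) > count(ac["oACinputStateConcept"])]


def plan(action_concepts: Dict[str, dict], instances: List[dict], decorations: Sequence[str],
         base: str, k: int, goal: Callable[[tuple], float],
         rel_concept: str = "c_liesOn") -> List[dict]:
    """Enumerate candidate solutions and rank them by the goal function, best first."""
    useful = set(creating_concepts(action_concepts, rel_concept))
    # Instances that own an action of a useful action concept.
    performers = [(inst["oIName"], act["oAName"])
                  for inst in instances
                  for act in inst.get("oIActions", [])
                  if act["oACName"] in useful]
    solutions = []
    for performer, action in performers:
        for chosen in combinations(decorations, k):
            steps = [f"{performer}.{action}({d}, {base})" for d in chosen]
            solutions.append({"oPSActions": steps, "goalFunctionValue": goal(chosen)})
    return sorted(solutions, key=lambda s: s["goalFunctionValue"], reverse=True)


# Hypothetical inputs.
action_concepts = {
    "c_putOn": {
        "oACinputStateConcept": {"oSCRelationships": []},
        "oACoutputStateConcept": {"oSCRelationships": [
            {"oRCName": "c_liesOn", "oRRole1": "thing1", "oRRole2": "thing2"}]},
    },
    "c_boilWater": {"oACinputStateConcept": {}, "oACoutputStateConcept": {}},
}
instances = [
    {"oIName": "myself", "oIActions": [{"oAName": "putOn", "oACName": "c_putOn"}]},
    {"oIName": "cake"},
]
decorations = ["gumdropSnake", "chocolateStar", "raisins"]  # assumed members of c_sweetDecoration
scores = {"gumdropSnake": 3, "chocolateStar": 5, "raisins": 1}  # made-up scores


def goal(chosen: tuple) -> float:
    """Hypothetical goal function: the sum of made-up decoration scores."""
    return sum(scores[d] for d in chosen)


ranked = plan(action_concepts, instances, decorations, "cake", 2, goal)
print(ranked[0]["oPSActions"])
# prints: ['myself.putOn(gumdropSnake, cake)', 'myself.putOn(chocolateStar, cake)']
```

Brute-force enumeration is only practical for small examples like this one; with k decorations chosen from n candidates it produces n-choose-k solutions per performer.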

8.4. The More Complex Example

The presented example has a straightforward solution because all the required instances were available and only the putOn action was needed. However, we can imagine a situation in which no instance belonging to the c_sweetDecoration concept is available, but there is a sugar instance belonging to the c_sweets concept, a cocoaLion instance belonging to the c_edibleDecoration concept, and a sprinkle action that combines these instances into one that meets the requirements of the c_sweetDecoration concept. In this way, a newly created instance (corresponding to a physical object made in the environment) can be used together with the other cake decorations.

8.5. Summary of the Example

When solving tasks, the individual may decide to perform actions that change the properties of instances, add or remove relationships between them, or create a non-existent instance (e.g., making pancake dough or cooking an egg for a sandwich). There can be many ways to solve a problem.

Many factors can be used to assess a given solution. These may be: expected results, costs incurred, time of implementation, the uncertainty of receiving the intended solution, assessment of previous similar actions, or subjective assessment made with the help of another individual (e.g., a human being).

After the physical execution of the action in the surrounding environment, an attempt may be made to undertake the final assessment of the task. The assessment can be made using available sensors. The solution with input and output parameters and the final grade can be saved in the IK and become the main solution in future tasks. The problem of evaluation of the adopted solution will be the topic of discussion in future articles.

9. Applications of GEDL Language and Directions of Future Work

The language presented in the article allows an individual possessing a cognitive mechanism to describe almost any environment. The way the individual will describe the environment is not imposed in advance, and two individuals of a similar type (e.g., two identical robots) can build very different IK for the same environment. These differences may be caused by different initial conditions (different time or place to start work), the stochastic nature of the environment, or a difference in tasks performed in the environment.

The GEDL language will help to combine various data and knowledge processing technologies, including artificial intelligence techniques. For example, apart from sensors, the cognitive mechanism will require techniques for interpreting data so that objects in the environment can be distinguished and characterized. In this case, cameras and appropriate image recognition techniques could be used to distinguish objects and assign them features such as color and shape.

In addition, instances carry out atomic actions described in the GEDL language. The way an instance performs an action is not precisely defined, so various complex artificial intelligence techniques may be used to carry it out. Since actions are described in the new language only by the state before and after the action, their execution is not restricted to any strictly defined implementation technique.

So far, the article has presented the definitions forming the basis of the new language and shown how it may work on a simple example of an IK together with a problem and its solution. Further articles will describe the basic operation of the product and sum up our research into concepts and concept states. It will be shown how to store historical states of instances (denoted as memory aM) in the IK. In the future, an attempt will be made to deal with inference based on historical data. An effective way of storing the IK in currently used databases will also be presented.

Subsequent publications will also deal with the issue of building a conceptual system that defines the understanding and perception of the environment by an individual. It is going to be determined how a conceptual system should be constructed in an individual that starts their activities in the environment, and how such a system can be developed automatically. Building an IK shared by a larger number of individuals, so that the conceptual system remains coherent, is also a separate research problem for the future.

10. Summary

In this article, a new language called the General Environment Description Language (GEDL) was presented. The language is dedicated to being used in autonomous systems (robots, programs) working in a diverse environment. It enables the description of the environment in which the system acts and helps to make autonomous decisions.

According to the adopted conception, the autonomous system, called an Individual, collects its knowledge in three sections: Conceptual System, Occurrences and Experience. The Conceptual System contains concepts which, in a way, group occurrences observed in the environment. The Occurrences section presents knowledge about objects observed in the environment; these spotted objects are called instances. The Experience section contains problems to be solved and their solutions. The Individual Knowledge in the GEDL language is described using the JSON notation.

In this article, an example was provided showing how to store the knowledge, define the problem and find the problem solutions.

In further articles, an attempt will be made to deal with the problem of describing and solving many issues that were only mentioned. Among other things, methods will be proposed for optimal problem solving, language grounding, automatic development of the conceptual system, and others.
