Abstract

Absolute moment block truncation coding (AMBTC) is a lossy image compression technique aiming at low computational cost, and has been widely studied. Previous studies have investigated the performance improvement of AMBTC; however, they often over-describe the details of image blocks during encoding, causing an increase in bitrate. In this paper, we propose an efficient method to improve the compression performance by classifying image blocks into flat, smooth, and complex blocks according to their complexity. Flat blocks are encoded by their block means, while smooth blocks are encoded by a pair of adjusted quantized values and an index pointing to one of the k representative bitmaps. Complex blocks are encoded by three quantized values and a ternary map obtained by a clustering algorithm. Ternary indicators are used to specify the encoding cases. In our method, the details of most blocks can be retained without significantly increasing the bitrate. Experimental results show that, compared with prior works, the proposed method achieves higher image quality at a better compression ratio for all of the test images.

1. Introduction

With the rapid development of imaging technology, digital images are perhaps the most widely used media on the Internet. Because digital images themselves contain significant amounts of spatial redundancy, an efficient lossy image compression technique is required for lower storage requirements and faster transmission. The Joint Photographic Experts Group (JPEG) [1,2], vector quantization (VQ) [3,4], and block truncation coding (BTC) [5,6] are well-known lossy compression methods and have been extensively investigated in the literature. Among these techniques, BTC requires significantly less computation than the others while offering acceptable image quality. BTC has been widely investigated in the disciplines of remote sensing and portable devices, in which computational resources are limited. BTC was first proposed by Delp and Mitchell [7]. This method partitions an image into blocks, and each block is represented by two quantized values and a bitmap. Inspired by [7], Lema and Mitchell [8] proposed a variant called absolute moment block truncation coding (AMBTC), which offers simpler computation than BTC.

The applications of AMBTC have been studied in video compression [9], image authentication [10,11,12], and image steganography [13,14]. Moreover, some recoverable authentication methods adopt AMBTC codes as the recovery information to recover the tampered regions. Because the recovery codes have to be embedded into the host image, a more efficient AMBTC coding is always desirable: it reduces the embedding burden and enhances the quality of the recovered regions. To improve the compression efficiency of the AMBTC method, several approaches, including bitmap omission [15], block classification [16,17], and quantized value adjustment [18], have been adopted to lower the bitrate while maintaining the image quality. For example, Hu [15] recognizes that if the difference between two quantized values is smaller than a predefined threshold, the bitmap plays an insignificant role in reconstructed image quality. Therefore, Hu employs the bitmap omission approach by neglecting the recording of a bitmap if a block is considered to be flat, and only uses block means to represent the flat block. Chen et al. [17] adopt quadtree partitioning and propose a variable-rate AMBTC compression method for color images. The basic idea of [17] is to partition the image into blocks of various sizes according to their complexities. The AMBTC and bitmap omission techniques are then employed to encode the image blocks. In some applications, such as data hiding or image authentication, bitmaps have to be altered to carry some required information, causing a degradation in image quality. Hong [18] optimizes the quantized values so that the impact of bitmap alteration can be reduced. Mathews and Nair [19] propose an adaptive AMBTC method based on edge quantization by considering human visual characteristics. This method separates image blocks into edge and non-edge blocks, and quantized values are calculated based on the edge information. Because the edge characteristics are considered, their method provides better image quality than other AMBTC variants.

Xiang et al. [16] in 2019 proposed a dynamic multi-grouping scheme for AMBTC focusing on improving the reconstructed image quality and reducing the bitrate. Their method partitions an image into non-overlapping blocks. According to the block complexity, varied grouping techniques are designed. An indicator is employed to distinguish the grouping types. In addition, instead of recording the quantized values, the differences between them are recorded so as to reduce the bitrate. Xiang et al.’s method provides better compression performance than those of prior works.

In Xiang et al.’s method, the number of pixel groups of an image block directly affects the reconstructed image quality and bitrate. Their method divides pixels of complex blocks into three or four groups during encoding, which may improve the image quality insignificantly but requires more bits for encoding. In this paper, we propose a ternary representation technique, which uses two thresholds to classify image blocks into three types, namely flat, smooth, and complex. We use the bitmap omission technique [15] to code flat blocks. The adjusted quantized values and an index pointing to one of the representative bitmaps are used to encode the smooth blocks. The complex blocks are encoded using three quantized values and a ternary bitmap. Compared with the AMBTC and Xiang et al.’s work, the proposed method achieves a higher reconstructed image quality with a smaller bitrate.

2. Related Works

In this section, we briefly introduce AMBTC and Xiang et al.’s methods, which are compared with the proposed method for evaluating the encoding performance.

2.1. The AMBTC Method

The AMBTC method [8] compresses image blocks into two quantized values and a bitmap. The detailed approaches are as follows. Let be the original image of size and partition into non-overlapping blocks of size , where is the total number of blocks. Let be the pixel of block. Therefore, . For block , the averaged value can be calculated by:

The bit of bitmap , indicated by , is used to indicate the relationship between and . can be obtained by:

The lower quantized value and higher quantized value are obtained by averaging the pixels in with values smaller than and larger than or equal to , respectively. This can be implemented by sequentially visiting pixels in . The lower quantized value is obtained by calculating the averaged value of visited pixels with values smaller than . Similarly, the higher quantized value is the averaged values of the other pixels. Therefore, the compressed code of is . Each block is processed using the same manner, and the AMBTC compressed codes of image are then obtained.

To decode , blocks of size are prepared, where . The pixel of can be decoded by:

After all of the image blocks are reconstructed, the image can then be obtained.
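As an illustration of the procedure above, the following Python sketch encodes and decodes a single block given as a flat list of pixel values. The function names and the rounding of the two quantized values to integers are assumptions made for this example; the description above only fixes the block mean, the bitmap rule, and the two averages.

```python
from typing import List, Tuple


def _round_half_up(value: float) -> int:
    """Round a non-negative value to the nearest integer (ties go up)."""
    return int(value + 0.5)


def ambtc_encode(block: List[int]) -> Tuple[int, int, List[int]]:
    """Encode one block into (lower value, higher value, bitmap)."""
    mean = sum(block) / len(block)
    # A bit is 1 when the pixel is larger than or equal to the block mean.
    bitmap = [1 if pixel >= mean else 0 for pixel in block]
    low_pixels = [p for p, bit in zip(block, bitmap) if bit == 0]
    high_pixels = [p for p, bit in zip(block, bitmap) if bit == 1]
    # At least one pixel is >= the mean, so high_pixels is never empty.
    high = _round_half_up(sum(high_pixels) / len(high_pixels))
    # A uniform block has no pixel below the mean; reuse `high` (assumption).
    low = _round_half_up(sum(low_pixels) / len(low_pixels)) if low_pixels else high
    return low, high, bitmap


def ambtc_decode(low: int, high: int, bitmap: List[int]) -> List[int]:
    """Rebuild the block: bit 0 maps to the lower value, bit 1 to the higher."""
    return [high if bit else low for bit in bitmap]
```

For example, the four-pixel block `[10, 12, 200, 202]` has mean 106, so its bitmap is `[0, 0, 1, 1]` and its quantized values are 11 and 201.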

2.2. Xiang et al.’s Method

AMBTC uses the same approach to compress all image blocks. However, the same approach may not be suitable for both flat and complex blocks. As a result, Xiang et al. proposed an improved scheme that encodes blocks according to their complexity and achieves a better image quality than AMBTC at a satisfactory bitrate.

Let be the AMBTC compressed code of the original image . To determine the complexity of block , a threshold is set. If , the variations of pixel values in block are relatively small. Therefore, all the pixels in this block are categorized as one group. In this case, the block mean is calculated, and this block is encoded by , which is the 8-bit binary representation of .

If , the variations of pixels in block are large and these pixels need to be regrouped to achieve a better reconstructed image quality. Let and be the group of pixels with and , respectively. Apply the AMBTC method to and to obtain codes and . According to a given threshold , this method uses the following rules to determine whether and should be regrouped:

Rule 1: If and the total number of pixels in is greater than .

Rule 2: If and the total number of pixels in is greater than .

If neither rule is met, block does not need to be further divided. Otherwise, block will be sub-divided into three or four groups using the following rules:

- (1) If only one of Rules 1 and 2 is met, group or needs to be subdivided. The number of pixels needing to be subdivided is denoted by , and bitmap or has to be used to record the bitmap of or . In this case, block is eventually divided into three groups.

- (2) If both Rules 1 and 2 are met, both and need to be subdivided, and block is eventually divided into four groups. Bitmaps and have to be recorded to maintain the grouping information.

Xiang et al.’s method uses a 2-bit indicator to record grouping information of . When block is divided into one to four groups, the indicator is set to be , , , and , respectively. Moreover, if needs to be divided into three groups, an extra indicator is required to show which group is subdivided. Specifically, if is sub-divided, then . On the contrary, if is sub-divided, then .

To record the quantized values, Xiang et al.’s method records the smallest quantized value of a block using 8 bits, and utilizes a difference encoding scheme (DES) to encode the difference between two quantized values. In DES, if , where is a predefined threshold, is recorded using bits. Otherwise, is recorded using bits, where is the maximum difference between quantized values in all blocks. An extra indicator is used to distinguish these two methods. That is, if , is set. Otherwise, . The number of bits used to record the difference can be expressed as:

We use the symbol to represent the R-bit encoded result of the difference between and using DES. For example, if , , and , then . Therefore, and the encoding result is , where is the concatenation operator.
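The idea behind the difference encoding can be sketched in Python as follows. This is only an illustration: the threshold `thr`, the two bit widths `short_bits` and `long_bits`, and the use of `0` as the indicator for the short form are all hypothetical parameters chosen for the example, not values taken from Xiang et al.'s scheme.

```python
from typing import Tuple


def des_encode(diff: int, thr: int, short_bits: int, long_bits: int) -> str:
    """Return an indicator bit followed by the difference in binary.

    Assumption: indicator '0' marks a small difference stored in
    `short_bits` bits, indicator '1' a large one stored in `long_bits` bits.
    """
    if diff < 0:
        raise ValueError("difference must be non-negative")
    width = short_bits if diff <= thr else long_bits
    if diff >= (1 << width):
        raise ValueError("difference does not fit in the chosen width")
    indicator = "0" if diff <= thr else "1"
    return indicator + format(diff, f"0{width}b")


def des_decode(bits: str, pos: int, short_bits: int, long_bits: int) -> Tuple[int, int]:
    """Read one encoded difference starting at `pos`; return (diff, next position)."""
    width = short_bits if bits[pos] == "0" else long_bits
    start = pos + 1
    return int(bits[start:start + width], 2), start + width
```

With `thr = 7`, `short_bits = 3`, and `long_bits = 8`, a difference of 5 costs four bits in total, whereas a difference of 100 costs nine. The saving comes from small differences being far more common than large ones in natural images.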

The compressed code and the number of bits required to record blocks of different grouping cases are summarized in Table 1. Each block is compressed using the same procedures and the final compressed code stream of image is obtained.

Table 1. Number of bits required to record a compressed block.

To decode , the 2-bit indicator is read. According to the read bits, one of the four possible compressed codes shown in Table 1, each with a different length, can be extracted. The image blocks can be reconstructed from the compressed codes, and the decompressed image can be obtained. For the detailed decoding procedures, see [16].

3. Proposed Method

The traditional AMBTC compression method uses the same number of bits to compress each block. However, coding in this way requires more bits than necessary for flat blocks and neglects too much image detail for complex blocks. Xiang et al.’s method improves AMBTC, resulting in better compression for both flat and complex blocks. However, when processing complex blocks, Xiang et al.’s method reconstructs the gray values of the image block by four quantized values. Although the quality of the reconstructed block is improved, more quantized values and larger bitmaps have to be recorded. In addition, Xiang et al. adopt the traditional AMBTC method to compress the smooth blocks, which may increase the cost of recording bitmaps and quantized values.

In this paper, we propose a more effective solution by classifying image blocks into flat, smooth, and complex blocks based on thresholds and (). Let be the AMBTC codes of . If , is classified as a flat block. Because pixel variations in a flat block are small, all pixels in a flat block can be simply reconstructed by their mean to a satisfactory visual quality. If , is classified as a smooth block. For the smooth block, we use a clustering algorithm to obtain representative bitmaps, and the original bitmaps are replaced by the indices pointing to the obtained bitmap. The two quantized values are also adjusted to reduce the error caused by the bitmap replacement. If , is classified as a complex block. We use three quantized values and a ternary map to represent the complex block to maintain better texture details. The encoding algorithms of these three types of blocks will be presented in the following sections.
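A minimal sketch of this three-way classification is given here. It assumes that block complexity is measured by the difference between the higher and lower AMBTC quantized values, in line with the bitmap omission criterion of [15], and that both comparisons are inclusive. The names `t1` and `t2` stand for the two thresholds and are chosen for this example.

```python
def classify_block(low: int, high: int, t1: int, t2: int) -> str:
    """Classify a block from its AMBTC quantized values.

    Assumption: complexity is the spread `high - low`, compared inclusively
    against the two thresholds t1 < t2.
    """
    if not t1 < t2:
        raise ValueError("t1 must be smaller than t2")
    spread = high - low
    if spread <= t1:
        return "flat"
    if spread <= t2:
        return "smooth"
    return "complex"
```

Raising `t2` moves blocks from the complex class to the smooth class, which is the trade-off between bitrate and quality examined in the experiments.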

3.1. Encoding of Flat Blocks

The pixel values of a flat block (i.e., ) are relatively close, and thus the bitmap plays an insignificant role in reconstructing the image block. Therefore, we omit the recording of the quantization value in addition to the bitmap, and use an 8-bit mean value to represent the flat block, where:

and is the function rounding to the nearest integer.

3.2. Encoding of Smooth Blocks

If , the fluctuation of pixel values of block is more than that of a flat block. Therefore, we refer to as a smooth block. To reduce the bitrate, a codebook consisting of the most representative bitmaps (codewords) is found, and the bitmap of the smooth block will be replaced by an index pointing to one of the codewords in the codebook. We use the k-means algorithm [20] to obtain the most representative bitmaps. Let be the set of AMBTC codes satisfying for , where is the number of smooth blocks. Firstly, an initial codebook is constructed by randomly selecting bitmaps from , where is much less than . Secondly, the bitmaps are classified into clusters according to the similarities between and . That is, if has more bits identical to than other codewords, then is classified into group , where . Thirdly, of the same group are averaged and rounded to obtain the updated codebook . Repeat the classification process times and the final representative bitmaps are obtained. Normally, setting can already obtain a satisfactory result. We denote the final representative bitmaps as . Once the classification process is completed, the classification results of bitmaps are also obtained. Note that the codeword with index has the nearest distance to , that is:

where and represent the element of and , respectively. Instead of recording , the proposed method uses the binary representation of as the required bitmap information. Therefore, the bits required to record the bitmap are reduced from bits to bits. To successfully decode the bitmap, we must have cluster centers and cluster indices . Therefore, must be included as part of the compressed codes.
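The codebook construction described above can be sketched in Python as follows. The function names, the fixed random seed, the rounding of ties towards 1, and the handling of empty clusters (the old codeword is kept) are assumptions made for this example.

```python
import random
from typing import List, Tuple

Bitmap = Tuple[int, ...]


def hamming(a: Bitmap, b: Bitmap) -> int:
    """Number of positions where two bitmaps differ."""
    return sum(x != y for x, y in zip(a, b))


def nearest(bitmap: Bitmap, codebook: List[Bitmap]) -> int:
    """Index of the codeword sharing the most bits with `bitmap`."""
    return min(range(len(codebook)), key=lambda c: hamming(bitmap, codebook[c]))


def cluster_bitmaps(bitmaps: List[Bitmap], k: int, iterations: int = 10,
                    seed: int = 0) -> Tuple[List[Bitmap], List[int]]:
    """k-means on binary bitmaps: returns (codebook, index per bitmap)."""
    rng = random.Random(seed)
    codebook = rng.sample(bitmaps, k)  # random initial codewords
    for _ in range(iterations):
        indices = [nearest(bm, codebook) for bm in bitmaps]
        for c in range(k):
            members = [bm for bm, idx in zip(bitmaps, indices) if idx == c]
            if not members:
                continue  # keep the old codeword for an empty cluster
            # Average each bit position and round (a tie rounds to 1).
            codebook[c] = tuple(
                1 if 2 * sum(column) >= len(members) else 0
                for column in zip(*members)
            )
    # Final assignment against the final codebook.
    return codebook, [nearest(bm, codebook) for bm in bitmaps]


def index_bits(k: int) -> int:
    """Bits needed to address one of k codewords."""
    return (k - 1).bit_length()
```

With 256 codewords, for instance, each bitmap is replaced by an 8-bit index, at the cost of storing the codebook once in the code stream.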

When decoding a smooth block, because we use cluster center to replace the original bitmap , the quality of the reconstructed image block will be reduced. To limit this loss, a quantized value adjustment (QA) technique [18] is employed. QA was originally used in data hiding to reduce the distortion of the reconstructed AMBTC block when the original bitmap is replaced by secret data. Because bits in the bitmap are altered, distortions of the reconstructed block are inevitable. QA adjusts the quantized values by counting the bit differences between the original bitmap and the secret data. In the proposed method, the original bitmap is replaced by a cluster center, which resembles the situation in which the bitmap is replaced by secret data. Therefore, the QA technique can be applied in the proposed method. To find the minimum distortion, the QA technique adjusts and to and by calculating:

and:

respectively, where is the number of bits with and , . For example, indicates the number of bits with and . After adjustment of quantized values, the distortion due to the bitmap replacement will be smaller than that without adjustment.
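One plausible form of such an adjustment is sketched here in Python. It assumes that each adjusted value is the count-weighted average of the two original quantized values over the positions that the replacement bitmap assigns to it, which minimizes the squared error against the original AMBTC reconstruction. This form, the function name, and the fallback for an empty group are assumptions for illustration and may differ in detail from the adjustment in [18].

```python
from typing import Sequence, Tuple


def adjust_quantized_values(low: int, high: int,
                            original: Sequence[int],
                            replacement: Sequence[int]) -> Tuple[int, int]:
    """Adjust (low, high) after the bitmap is replaced.

    counts[(x, y)] is the number of positions whose original bit is x
    and whose replacement bit is y.
    """
    counts = {(x, y): 0 for x in (0, 1) for y in (0, 1)}
    for x, y in zip(original, replacement):
        counts[(x, y)] += 1
    zeros = counts[(0, 0)] + counts[(1, 0)]  # positions decoded with the low value
    ones = counts[(0, 1)] + counts[(1, 1)]   # positions decoded with the high value
    new_low, new_high = low, high            # fallback when a group is empty
    if zeros:
        new_low = int((counts[(0, 0)] * low + counts[(1, 0)] * high) / zeros + 0.5)
    if ones:
        new_high = int((counts[(0, 1)] * low + counts[(1, 1)] * high) / ones + 0.5)
    return new_low, new_high
```

For instance, with quantized values 10 and 20, original bitmap `[0, 0, 1, 1]`, and replacement `[0, 1, 1, 1]`, one low-valued pixel is now decoded with the high value, so the high value is pulled down to 17 while the low value stays at 10. When the two bitmaps are identical, the values are unchanged.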

3.3. Encoding of Complex Blocks

Blocks with are classified as complex blocks. Let be the set of complex blocks in . For a given complex block , the proposed method uses the k-means clustering algorithm to obtain three most representative quantized values and a ternary map , where is a ternary digit ranging from 0 to 2 used to indicate which quantized value should be used to reconstruct the pixel of . Because the value of is equally distributed over 0 to 2, we can simply encode the ternary digits , , and by , , and , respectively. We assume the encoded result of is of L-bit. Once the decoder has and , blocks can be reconstructed.
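The clustering of a complex block can be sketched as a one-dimensional k-means with three centers. The initialization with evenly spaced centers between the block minimum and maximum, the fixed iteration count, and the function name are assumptions for this example; the text above does not specify them.

```python
from typing import List, Tuple


def ternary_kmeans(pixels: List[int], iterations: int = 10) -> Tuple[List[int], List[int]]:
    """Return three quantized values and a ternary map for one block."""
    lowest, highest = min(pixels), max(pixels)
    # Assumed initialization: three centers evenly spaced over the pixel range.
    centers = [lowest + (highest - lowest) * i / 2 for i in range(3)]
    for _ in range(iterations):
        labels = [min(range(3), key=lambda c: abs(p - centers[c])) for p in pixels]
        for c in range(3):
            members = [p for p, label in zip(pixels, labels) if label == c]
            if members:  # keep the previous center if a cluster is empty
                centers[c] = sum(members) / len(members)
    quantized = [int(center + 0.5) for center in centers]
    # Ternary map: index (0, 1, or 2) of the nearest quantized value.
    ternary_map = [min(range(3), key=lambda c: abs(p - quantized[c])) for p in pixels]
    return quantized, ternary_map
```

Each pixel is then reconstructed as `quantized[ternary_map[j]]`, so a block with three distinct intensity levels keeps all three instead of being forced onto two.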

When encoding a ternary map, the average number of bits required in the proposed method is:

Theoretically, recording 16 ternary digits requires bits, which is almost the same as in the proposed method. Therefore, the encoding of the ternary map used in the proposed method is effective.
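The cost comparison can be checked with a short Python sketch. It assumes the prefix code `0`, `10`, `11` for the ternary digits 0, 1, and 2; the decoding procedure only fixes that one digit takes a single bit and the other two take two bits, so this particular bit assignment is an assumption.

```python
import math
from typing import List, Tuple

# Assumed prefix code: one 1-bit codeword and two 2-bit codewords.
CODE = {0: "0", 1: "10", 2: "11"}


def encode_ternary_map(ternary_map: List[int]) -> str:
    """Concatenate the codewords of all ternary digits."""
    return "".join(CODE[digit] for digit in ternary_map)


def decode_ternary_map(bits: str, count: int, pos: int = 0) -> Tuple[List[int], int]:
    """Read `count` ternary digits starting at `pos`; return (digits, next position)."""
    digits = []
    for _ in range(count):
        if bits[pos] == "0":
            digits.append(0)
            pos += 1
        else:
            digits.append(1 if bits[pos + 1] == "0" else 2)
            pos += 2
    return digits, pos


# Expected cost for 16 equally likely digits versus the entropy bound.
average_bits = 16 * (1 + 2 + 2) / 3   # about 26.67 bits
entropy_bound = 16 * math.log2(3)     # about 25.36 bits
```

Under the equal-distribution assumption, the prefix code spends about 1.3 bits more per 16-digit map than the entropy bound, while being decodable one bit at a time.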

3.4. Encoding Procedures

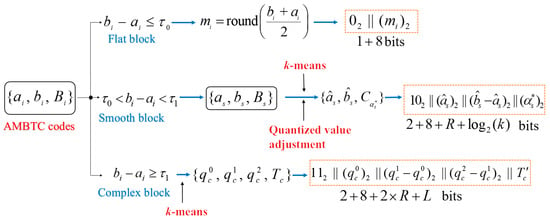

This section describes the procedures of the proposed method. To distinguish the encoding methods of the three types of image blocks, an indicator is prepended to the code stream of each encoded block. The indicators , , and indicate that a flat, smooth, or complex block is encoded, respectively. The detailed encoding procedures are as follows:

- Input: Original image , block size , thresholds and , parameter , and cluster size .

- Output: Code stream .

- Step 1: Partition the original image into blocks of size . Encode using the AMBTC encoder and obtain codes , as described in Section 2.1.

- Step 2: Scan codes . Let be the bitmap of smooth blocks. Clustering into groups using the k-means clustering algorithm, we obtain cluster centers and cluster indices . Concatenate the binary representation of and obtain the concatenated code stream . The pairs of adjusted quantized values of smooth blocks are also obtained, as described in Section 3.2. Similarly, quantized values and ternary maps of complex blocks are also obtained, as described in Section 3.3.

- Step 3: Scan codes again and perform the encoding according to the cases listed below:

  - Case 1: If , a flat block is visited and the code stream of block is .

  - Case 2: If , a smooth block is visited. Extract , , and from obtained in Step 2, and block is encoded by . Note that is encoded using the DES, as described in Section 2.2.

  - Case 3: If , block is a complex one. Extract , , , and from obtained in Step 2, and block is encoded by . Note that and are encoded using the DES (see Section 2.2).

- Step 4: Repeat Step 3 until the code streams of blocks are obtained. Concatenating , we obtain the concatenated code stream .

- Step 5: Concatenate and ; we obtain the final code stream of image , i.e., .

The encoding of a given image block and the number of bits required for each block type are shown in Figure 1.

Figure 1. Illustration of image block encoding.

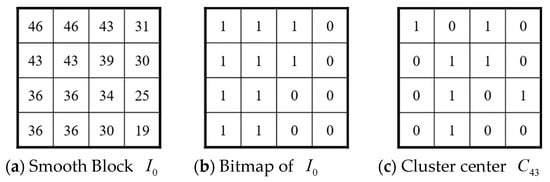

We take a simple example to illustrate the encoding of smooth and complex blocks. Let be a block to be encoded, as shown in Figure 2a. Suppose , , , , and are used in this example. The AMBTC compressed code of is , and is depicted in Figure 2b. Because , is a smooth block. Assume and (see Figure 2c). By comparing and , we have , , , and . Using Equations (7) and (8), we have and . Because , we have . Because , , and , the code stream of should be .

Figure 2. An example of image encoding.

Figure 2d shows another block to be encoded. For this block, quantized values and of the AMBTC code are calculated. Because , is regarded as a complex block. Suppose after applying the k-means clustering algorithm to , we obtain three quantized values and the ternary cluster indices of pixels , as shown in Figure 2e. The difference between the first two quantized values is . Therefore, indicator should be placed in front of the binary representation of 66 (i.e., ). Similarly, because , indicator should be placed in front of the binary representation of 48 (i.e., ). Finally, the ternary cluster indices are encoded by , which is illustrated in Figure 2f. Therefore, according to Step 3 of Case 3 in Section 3.4, the code stream of block should be .

3.5. Decoding Procedures

In decoding, data bits are sequentially read and decoded, and image blocks are reconstructed by decoding the read data bits. The detailed steps of decoding are listed as follows:

- Input: Code stream , block size , parameter , , and cluster size .

- Output: Decompressed image .

- Step 1: Extract from and reconstruct cluster centers .

- Step 2: Extract one bit from . According to the extracted bit, one of the following decoding cases is then performed:

  - Case 1: If , the block to be reconstructed is a flat block. All the pixel values of block are the decimal value of the next 8 bits extracted from .

  - Case 2: If and the next extracted bit is , the block to be reconstructed is a smooth block. Extract the next 8 bits and convert them to a decimal value to obtain the quantized value . Read the next bit from . If the read bit is , is reconstructed by the decimal value of the next bits plus . Otherwise, is reconstructed by the decimal value of the next bits plus . The clustering index is the decimal value of the next bits, and the bitmap can be obtained from . Using the AMBTC decoder to decode , the image block can be reconstructed.

  - Case 3: If and the next extracted bit is , the block to be reconstructed is a complex block. Extract the next 8 bits and convert them to a decimal value to obtain the quantized value . Read the next bit from . If the read bit is , is reconstructed by plus the decimal value of the next bits; otherwise, is reconstructed by the decimal value of the next bits plus . In a similar manner, is reconstructed. To reconstruct the ternary map , we start from to and repeat the following process: Read a bit from . If , we have . Otherwise, read the next bit from . If , . If , . Once we have and , the j-th pixel of the image block is reconstructed by , , or if , 1, or 2, respectively.

- Step 3: Repeat Step 2 until all image blocks are reconstructed, and the final decompressed image is obtained.

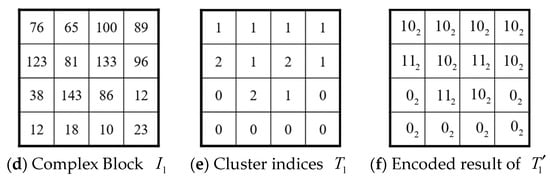

We continue the example given in Section 3.4 to illustrate the decoding process. The detailed process and the decoded result are depicted in Figure 3. To decode the code stream , because the first bit is and the second bit is , the to-be-reconstructed block is a smooth block. Extract the next 8 bits from and convert them into decimal representation; we obtain . The next extracted bit is 0. Therefore, the difference is the decimal value of the next bits, and we have . Finally, extract bits and convert them to a decimal value; we have and the bitmap is obtained. The image block can then be constructed by decoding using the AMBTC decompression technique.

Figure 3. Illustration of the decoding procedures of image blocks.

To decode , because the first two extracted bits are , the block to be decompressed is a complex block. Extract 8 bits; is the decimal value of these 8 bits. The next bit is ; therefore, is the decimal value of the next bits and can be obtained. Similarly, the next extracted bit is ; therefore, is obtained by converting the next bits to their decimal value, and can be obtained. Finally, we have to reconstruct from the remaining bits. Because the next extracted bit is , we extract one more bit, which is . Therefore, is obtained. The remaining 15 ternary digits can be decoded in a similar manner. Once we have and , block can be reconstructed. Figure 3b illustrates the decoding process of .

4. Experimental Results

In this section, we conduct several experiments to show the effectiveness and applicability of the proposed scheme. We take eight grayscale images of size , namely, Lena, Jet, Baboon, Tiffany, Boat, Stream, Peppers and House, as the test images, as shown in Figure 4. These images can be obtained from the USC-SIPI image database [21]. We use the peak signal-to-noise ratio (PSNR) and bitrate to measure the performance. The PSNR is calculated by:

where and represent the pixel values of the original and decompressed images, respectively. The bitrate is measured by the number of bits required to record each pixel (i.e., bits per pixel, bpp).
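Both metrics can be computed with a few lines of Python. The sketch assumes the standard PSNR definition for 8-bit grayscale images, with a peak value of 255 and the mean squared error taken over all pixels; the function names and the flat-list image representation are choices made for this example.

```python
import math
from typing import Sequence


def psnr(original: Sequence[int], decoded: Sequence[int], peak: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    mse = sum((x - y) ** 2 for x, y in zip(original, decoded)) / len(original)
    # Identical images have zero error; report infinity in that case.
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


def bitrate_bpp(total_bits: int, width: int, height: int) -> float:
    """Bits per pixel of a code stream for a width-by-height image."""
    return total_bits / (width * height)
```

As a sanity check on the bitrate, plain AMBTC stores two 8-bit quantized values and one bitmap bit per pixel, which gives (8 + 8 + 16) / 16 = 2.0 bpp for 4 × 4 blocks and (8 + 8 + 64) / 64 = 1.25 bpp for 8 × 8 blocks.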

Figure 4. Eight test images.

In all of the experiments, we set because the flat blocks under this setting show no apparent block boundary artifacts.

4.1. The Performance of the Proposed Method

Because the number of cluster centers k and threshold greatly affect the coding efficiency in the application of the quantized value adjustment (QA) technique [18], we evaluate how the QA technique and these parameters influence the bitrate and image quality in this section.

4.1.1. Coding Efficiency Comparisons

In the coding of smooth blocks, the original bitmaps of smooth blocks are used to obtain the cluster centers, and the quantized values are adjusted using the QA technique to lower the distortions. Table 2 and Table 3 show how the QA technique improves the image quality when the block size is set to and , respectively. In this experiment, and are set. As seen from the tables, the QA technique effectively enhances the quality of reconstructed images for every . For example, in Table 2 and , the averaged quality of the reconstructed images with and without the QA technique is 34.63 and 34.52 dB, respectively. The averaged quality has improved by dB. Similarly, in Table 3 when , the PSNR improvement is dB. Therefore, the QA technique indeed reduces the distortion caused by replacing the original bitmap with a cluster center.

Table 2. Peak signal-to-noise ratio (PSNR) and bitrate of compressed images with block size.

Table 3. PSNR and bitrate of compressed images with block size.

Table 2 and Table 3 also reveal that the increase in cluster size also enhances the image quality. For example, in Table 3 when , the averaged PSNR of eight test images is 31.86 dB. When and 512, the PSNR increases dB and dB, respectively. The reason is that a larger cluster size provides a greater chance to reduce the difference between the cluster centers and original bitmaps.

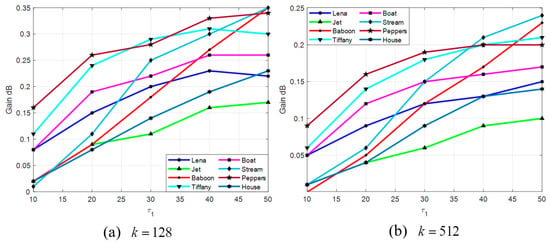

To evaluate how threshold affects the performance of the QA, we plot the gain of PSNR when using the QA for various with and 512. The results are shown in Figure 5. Note that, in this experiment, a block size of is set.

Figure 5. The gain of PSNR when the quantized value adjustment (QA) technique is applied.

Figure 5a,b shows that the gain in PSNR increases as increases, and this is mainly because the number of smooth blocks also increases as increases. Because more blocks are classified as smooth for larger , more blocks will be processed using the QA technique. As a result, the gain in PSNR is higher when is larger. It also can be observed that for each test image, the gain in PSNR is larger when than that when . The reason is that a smaller implies larger differences between the original bitmaps and cluster centers. Because the QA technique is capable of reducing the distortion caused by the differences, a larger PSNR improvement can be achieved for smaller .

It is interesting to note that the gain in PSNR of the Stream and Baboon images increases more than that of other test images when varying to for both and . Because these two images are more complex than the others, their bitmaps of smooth blocks are expected to be more different from the selected cluster centers used to replace the bitmaps. As previously mentioned, the QA technique is effective in reducing the distortion caused by the differences, and the bitmaps of Stream and Baboon images are more different from the cluster centers than the other images. Therefore, the improvement in PSNR after applying the QA technique is more significant than for the others.

4.1.2. Performance Comparison of Various

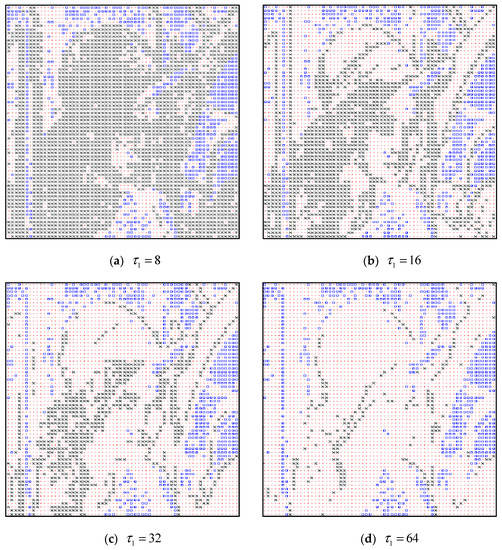

The parameter controls the number of smooth and complex blocks. The number of complex blocks decreases as increases. To see the distribution of flat, smooth, and complex blocks of a test image, we take the Lena image as an example to illustrate their distribution by varying . Figure 6a–d shows the distributions of blocks when , 16, 32, and 64 are set. The block sizes in these figures are and . In this figure, the blue squares, red dots, and black cross marks represent flat, smooth, and complex blocks, respectively.

Figure 6. Distribution of flat, smooth and complex blocks.

Because the same is applied, it can be seen that the number of blue squares (flat blocks) is the same in Figure 6a–d. However, as increases, the red dots increase and black cross marks decrease. The reason is that an increase in leads more blocks to be categorized as smooth. It can also be inferred that a better image quality can be achieved at a smaller but the bitrate will be higher because more blocks are deemed to be complex. Note that in the proposed method, more bits are required to represent a complex block than a smooth block.

Table 4 and Table 5 show the PSNR and bitrate for all of the test images under various with block size and , respectively. In this experiment, , , and are set. We also list the PSNR and bitrate of the standard AMBTC method as a comparison. Note that the bitrates of the AMBTC with block size and are 2.0 and 1.25 bpp, respectively.

Table 4. PSNR and bitrate of the proposed method for various (block size ).

Table 5. PSNR and bitrate of the proposed method for various (block size ).

As seen in Table 4 and Table 5, the PSNR of block size is lower than that of block size . For example, when , the PSNR values of the Lena image for block sizes and are 34.73 and 31.91 dB, respectively. However, the former requires more bits per pixel than the latter. In addition, the experiments also reveal that a large effectively reduces the bitrate at the expense of image quality. Conversely, a small provides better image quality but requires a higher bitrate. This result is expected because a small increases the number of complex blocks, which raises both the bitrate and the image quality.

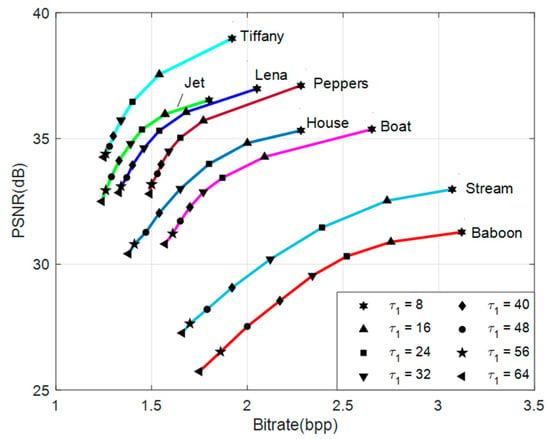

Figure 7 shows the bitrate–PSNR curves of each of the eight test images by varying the threshold from 8 to 64. The figure shows that for all of the test images, the PSNR increases as the bitrate increases. Moreover, the figure also reveals that smooth images, such as Tiffany or Jet, have a better compression efficiency than complex images, such as Stream or Baboon. The reason is that a smooth block not only requires fewer bits to record its compressed code but also provides better reconstructed quality. Because the smooth images naturally possess more smooth blocks than complex blocks, their bitrate–PSNR curves are higher than those of complex ones.

Figure 7. Bitrate–PSNR curves of each of the eight test images.

It can also be seen from Figure 7 that the PSNR and bitrate vary as the threshold changes. A larger gives a lower bitrate with lower PSNR. In contrast, a smaller offers a higher image quality, but the bitrate is also higher. Therefore, the selection of threshold depends on the application. For example, if an application requires higher image quality, a smaller is required.

It is worth noting that, for most of the test images, the proposed method provides better performance than AMBTC, particularly for smooth images. For example, Lena, Jet, Tiffany, Boat, and Peppers are considered to be smooth. For these smooth images, regardless of the value of , the PSNR is always higher and the bitrate is always lower than those of the AMBTC method. In contrast, for the complex images such as Baboon or Stream, few blocks are classified as flat, which require only 8 bits to record them. Therefore, the reduction in bitrate is limited. Nevertheless, the proposed method either provides a better image quality or lower bitrate than those of AMBTC.

4.2. Comparisons with Xiang et al.’s Work

Xiang et al.’s method [16] also improves the AMBTC method by dynamically splitting images into multiple groups and achieves a good performance. In this section, we compare the proposed method with that of Xiang et al. in terms of PSNR and bitrate. To make a fair comparison, threshold and are set in both methods. The proposed method uses to control the number of smooth and complex blocks, whereas Xiang et al.’s method uses to control the number of pixel groups. We select , 16, and 24 in the proposed method and compare the results with those of Xiang et al. by setting , 7, and 8 for block size . The results are shown in Table 6. Table 7 shows the same experimental results, except block size is and , 32, and 36. The settings of in Xiang et al.’s method ensure that best performance can be achieved.

Table 6.

Comparisons of PSNR and bitrate with block size .

Table 7.

Comparisons of PSNR and bitrate with block size.

Table 6 shows that in Xiang et al.’s method, as increases, the image quality and bitrate decrease. The reason is that a large prevents more blocks from being split, leading to a decrease in bitrate and PSNR. Note that for most of the test images, the proposed method performs better than that of Xiang et al. We take the Lena image as an example: when and are set, the proposed and Xiang et al.’s methods achieve 36.05 dB at 1.68 bpp and 35.12 dB at 2.20 bpp, respectively. The PSNR of the proposed method is 0.93 dB higher and the bitrate is 0.52 bpp lower than those of Xiang et al.’s method. Comparisons with other images and another set of parameters also reveal similar results, with the exception of the Baboon image. When and are set, the PSNR of Xiang et al.’s method is dB higher than that of the proposed method. The reason is that under these settings, more blocks are divided into four groups and thus a better image quality is achieved. However, their method requires bpp more than the proposed method.

In the performance comparisons with block size , the proposed method shows better results for all test images. For example, as shown in Table 7 when and , the PSNR of the Baboon image of the proposed method is 28.84 dB at 1.88 bpp. The PSNR is dB higher and the bitrate is bpp lower than those of Xiang et al.’s method.

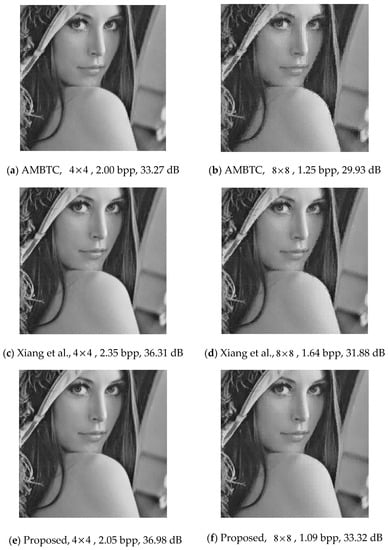

Figure 8a–f shows the visual quality comparisons of the AMBTC, Xiang et al.’s, and the proposed methods. As seen from Figure 8a when the block size is and the AMBTC method is applied, apparent distortions can be seen in the image edges, and noticeable boundary artifacts are observed. Note that the PSNR of the AMBTC is 33.27 dB with 2.0 bpp. Xiang et al. improve the AMBTC method by adding more details to complex blocks. As a result, the PSNR (36.31 dB) is significantly higher and blocks at the edges look more natural than those of AMBTC (Figure 8c, ). However, their method requires bpp more to achieve this effect. In contrast, the visual quality of the proposed method (Figure 8e, ) is comparable with that of Xiang et al.’s method, but the bitrate is bpp lower with a slightly higher PSNR.

Figure 8.

Visual quality comparisons of the proposed method and that of Xiang et al.

When the block size is , the distortion of AMBTC is more apparent (Figure 8b) than that of , but the bitrate reduces from 2.0 to 1.25 bpp. The visual quality of Xiang et al.’s method (Figure 8d, ) is significantly better than that of AMBTC, and has no noticeable block boundary artifacts. However, their method requires bpp more to improve the image quality. In addition, some edges in Lena’s face, eyes, and shoulder exhibit apparent distortions because the pixel splitting operation may not be triggered due to the setting of . In contrast, the edges of the proposed method exhibit no apparent distortion (see Figure 8f, ). Moreover, the bitrate required in the proposed method is even lower than that of AMBTC by bpp.
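The fixed AMBTC bitrates quoted above can be checked with a short calculation. The sketch below assumes the usual AMBTC code layout of two 8-bit quantized values plus a one-bit-per-pixel bitmap for each square block; under that assumption, 2.0 bpp and 1.25 bpp correspond to blocks of side 4 and side 8, respectively. The helper names are illustrative, and the flat-block figure excludes the block-type indicator bits.

```python
def ambtc_bpp(block_side, level_bits=8):
    """Bitrate of plain AMBTC: two quantized values plus a 1-bit-per-pixel bitmap."""
    pixels = block_side * block_side
    code_bits = 2 * level_bits + pixels
    return code_bits / pixels


def flat_block_bpp(block_side, mean_bits=8):
    """Bitrate of a block recorded by its mean only (indicator bits not counted)."""
    return mean_bits / (block_side * block_side)


if __name__ == "__main__":
    for side in (4, 8):
        print(side, ambtc_bpp(side), flat_block_bpp(side))
```

Because the AMBTC rate is fixed per block, any block that can be recorded with fewer bits than this baseline lowers the overall bitrate, which is why images with many flat or smooth blocks benefit most.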

5. Conclusions

In this paper, we propose a hybrid encoding scheme for AMBTC compressed images using a ternary representation technique. Considering that the number of quantized values greatly affects the quality of the reconstructed image, the proposed method classifies image blocks into flat, smooth, and complex blocks, which are encoded using one, two, and three quantized values, respectively. Flat blocks require no bitmap, whereas smooth and complex blocks require binary and ternary maps, respectively, to record which quantized value is used to reconstruct each pixel. A carefully designed indicator is prepended to the code stream of each block to signify the block type. The experimental results show that the proposed method achieves better image quality than prior works at a lower bitrate. Note that although the k-means algorithm used in the proposed method may require slightly higher computational cost than discrete cosine transform (DCT) based methods, it is applied only to smooth blocks in the encoding stage to obtain the representative bitmaps, rather than to the whole image. Furthermore, the k-means algorithm does not need to be applied again during decoding. Therefore, the overall computational cost of the proposed method is smaller than that of DCT-based compression methods.

Author Contributions

W.H., J.W., and K.S.C. contributed to the conceptualization, methodology, and writing of this paper. J.Y. and T.-S.C. conceived the simulation setup, performed the formal analysis, and conducted the investigation. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Liu, J.; Tian, Y.-G.; Han, T.; Yang, C.-F.; Liu, W.-B. LSB steganographic payload location for JPEG-decompressed images. Digit. Signal Process. 2015, 38, 66–76.

2. Liu, J.; Tian, Y.; Han, T.; Wang, J.; Luo, X. Stego key searching for LSB steganography on JPEG decompressed image. Sci. China Inf. Sci. 2016, 59, 1–15.

3. Qin, C.; Chang, C.-C.; Chiu, Y.-P. A Novel Joint Data-Hiding and Compression Scheme Based on SMVQ and Image Inpainting. IEEE Trans. Image Process. 2014, 23, 969–978.

4. Qin, C.; Hu, Y.-C. Reversible data hiding in VQ index table with lossless coding and adaptive switching mechanism. Signal Process. 2016, 129, 48–55.

5. Tsou, C.-C.; Hu, Y.-C.; Chang, C.-C. Efficient optimal pixel grouping schemes for AMBTC. Imaging Sci. J. 2008, 56, 217–231.

6. Hu, Y.C.; Su, B.H.; Tsai, P.Y. Color image coding scheme using absolute moment block and prediction technique. Imaging Sci. J. 2008, 56, 254–270.

7. Delp, E.J.; Mitchell, O.R. Image coding using block truncation coding. IEEE Trans. Commun. 1979, 27, 1335–1342.

8. Lema, M.; Mitchell, O. Absolute Moment Block Truncation Coding and Its Application to Color Images. IEEE Trans. Commun. 1984, 32, 1148–1157.

9. Kumaravadivelan, A.; Nagaraja, P.; Sudhanesh, R. Video compression technique through block truncation coding. Int. J. Res. Anal. Rev. 2019, 6, 236–242.

10. Hemida, O.; He, H. A self-recovery watermarking scheme based on block truncation coding and quantum chaos map. Multimed. Tools Appl. 2020, 79, 18695–18725.

11. Qin, C.; Ji, P.; Zhang, X.; Dong, J.; Wang, J. Fragile image watermarking with pixel-wise recovery based on overlapping embedding strategy. Signal Process. 2017, 138, 280–293.

12. Qin, C.; Ji, P.; Chang, C.-C.; Dong, J.; Sun, X. Non-uniform Watermark Sharing Based on Optimal Iterative BTC for Image Tampering Recovery. IEEE MultiMed. 2018, 25, 36–48.

13. Ma, Y.Y.; Luo, X.Y.; Li, X.L.; Bao, Z.; Zhang, Y. Selection of rich model steganalysis features based on decision rough set α-positive region reduction. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 336–350.

14. Zhang, Y.; Qin, C.; Zhang, W.M.; Liu, F.L.; Luo, X.Y. On the fault-tolerant performance for a class of robust image steganography. Signal Process. 2018, 146, 99–111.

15. Hu, Y.-C. Low-complexity and low-bit-rate image compression scheme based on absolute moment block truncation coding. Opt. Eng. 2003, 42, 1964–1975.

16. Xiang, Z.; Hu, Y.-C.; Yao, H.; Qin, C. Adaptive and dynamic multi-grouping scheme for absolute moment block truncation coding. Multimed. Tools Appl. 2018, 78, 7895–7909.

17. Chen, W.-L.; Hu, Y.-C.; Liu, K.-Y.; Lo, C.-C.; Wen, C.-H. Variable-Rate Quadtree-segmented Block Truncation Coding for Color Image Compression. Int. J. Signal Process. Image Process. Pattern Recognit. 2014, 7, 65–76.

18. Hong, W. Efficient Data Hiding Based on Block Truncation Coding Using Pixel Pair Matching Technique. Symmetry 2018, 10, 36.

19. Mathews, J.; Nair, M.S. Adaptive block truncation coding technique using edge-based quantization approach. Comput. Electr. Eng. 2015, 43, 169–179.

20. Hartigan, J.A.; Wong, M.A. A K-means clustering algorithm. Appl. Stat. 1979, 28, 100–108.

21. The USC-SIPI Image Database. Available online: http://sipi.usc.edu/database/ (accessed on 1 November 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).