Automatic Outdoor Patrol Robot Based on Sensor Fusion and Face Recognition Methods

Abstract

1. Introduction

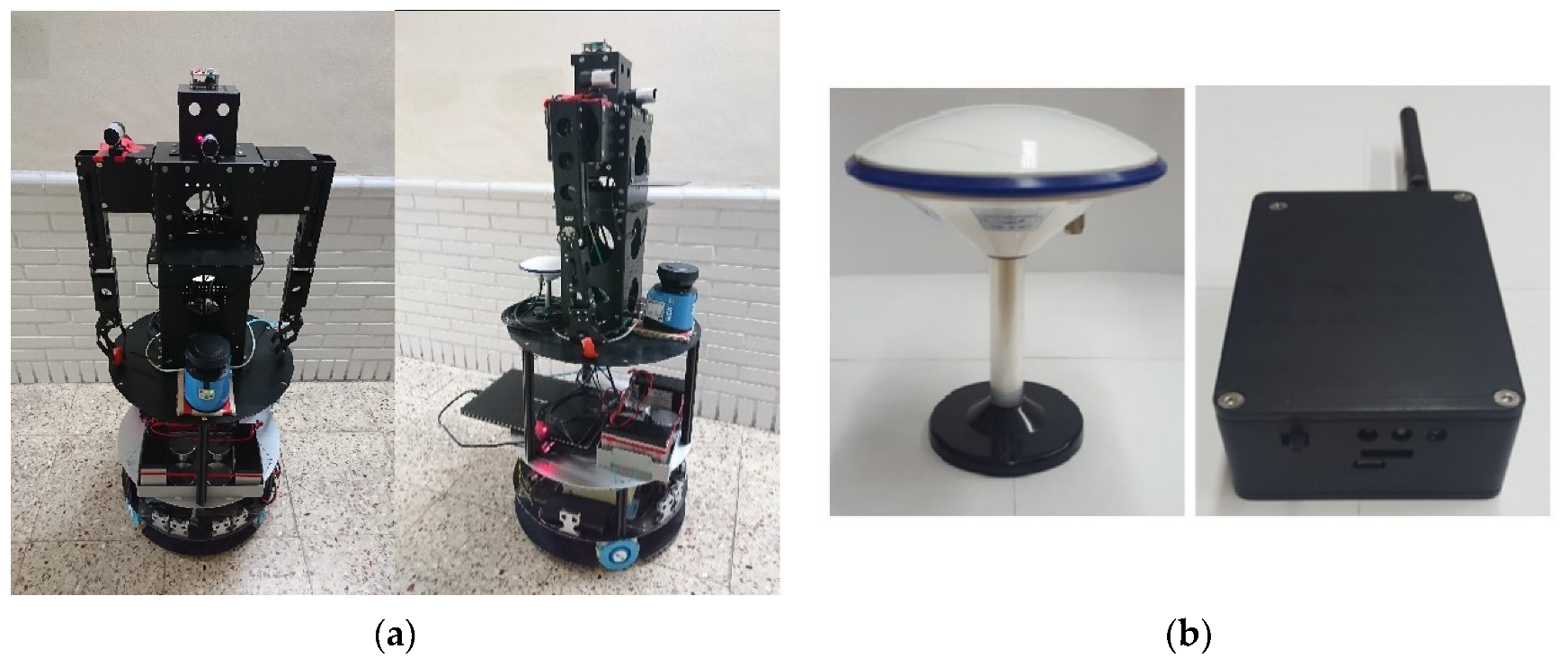

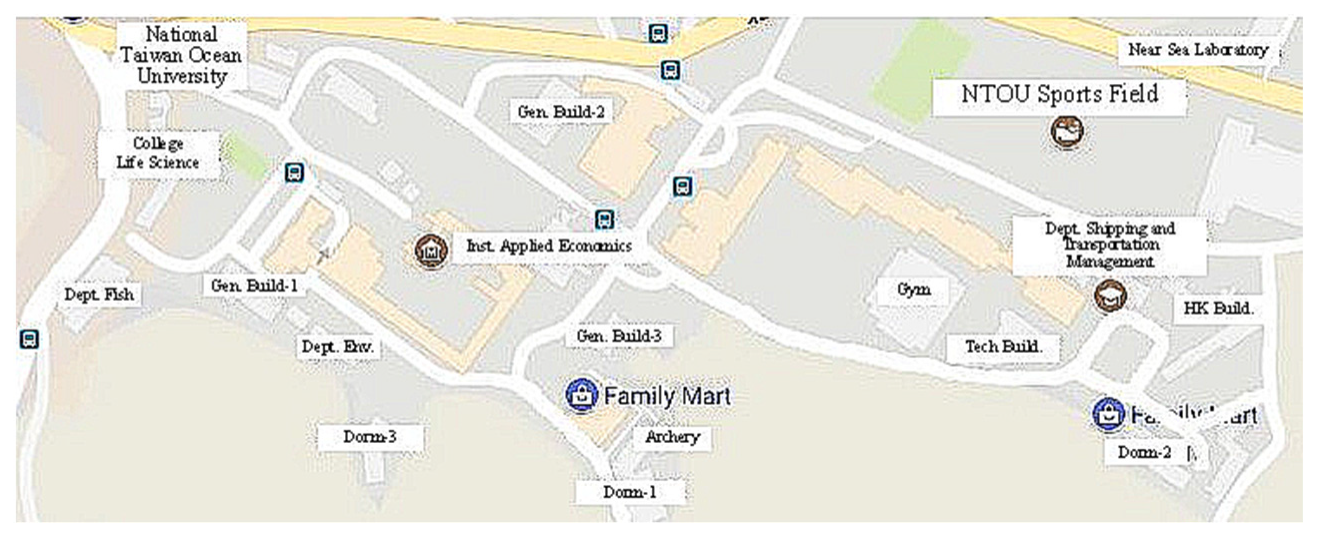

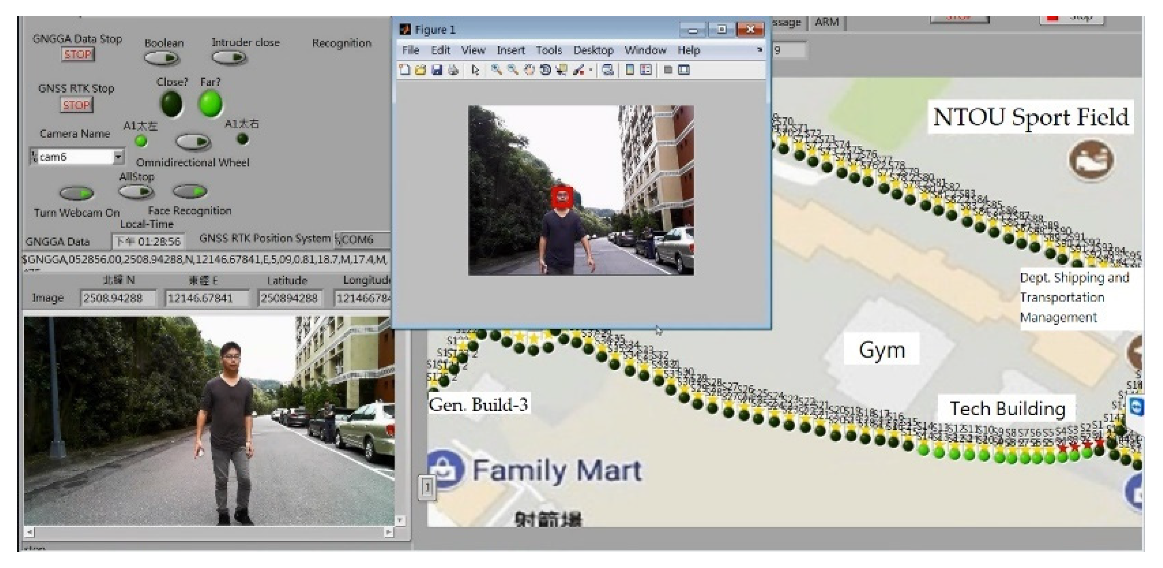

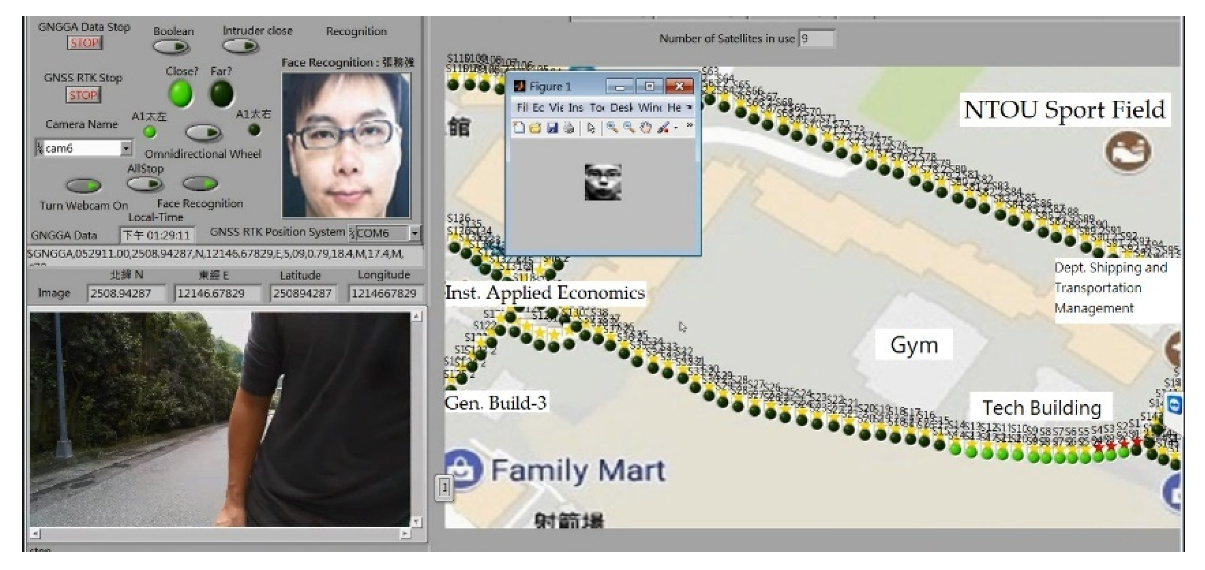

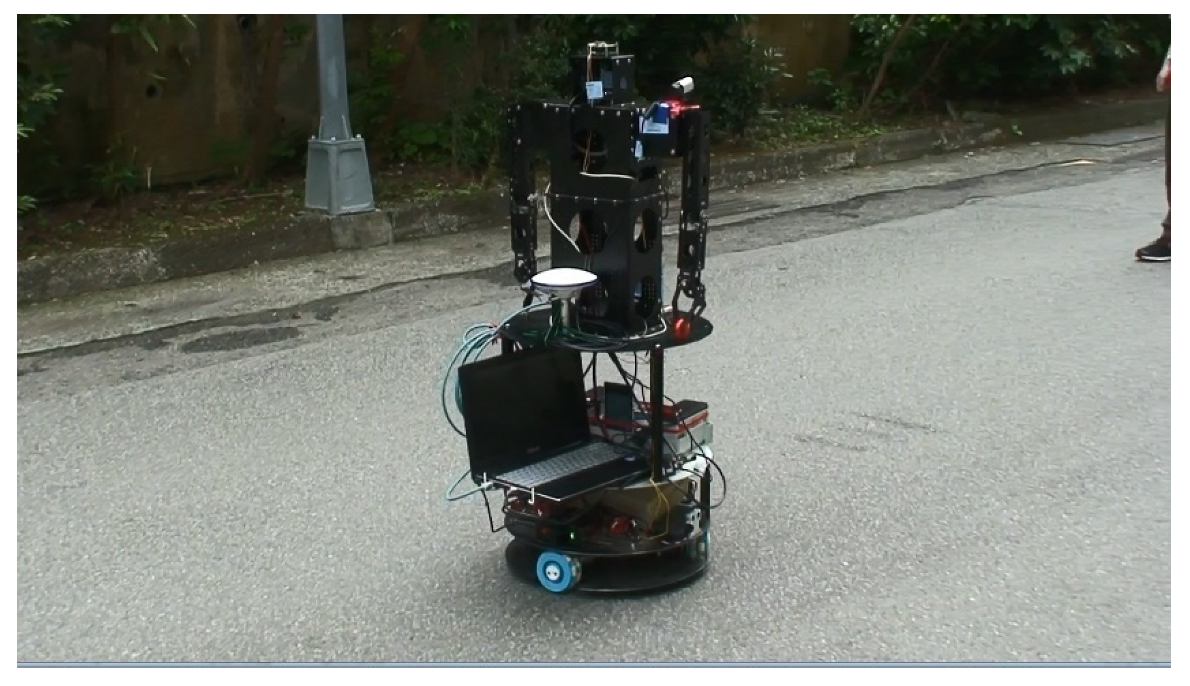

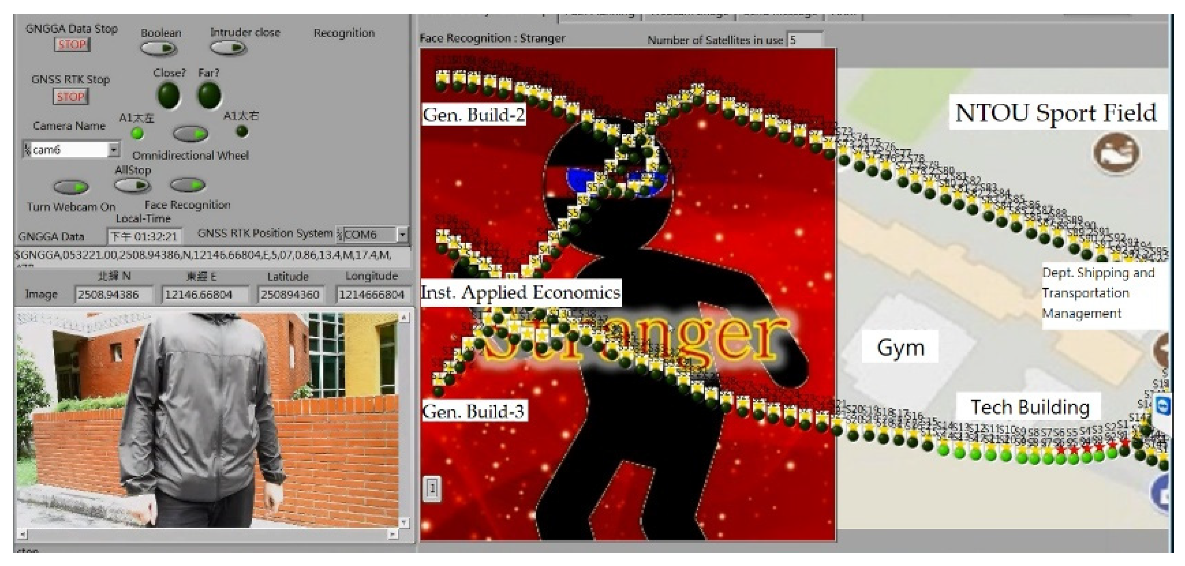

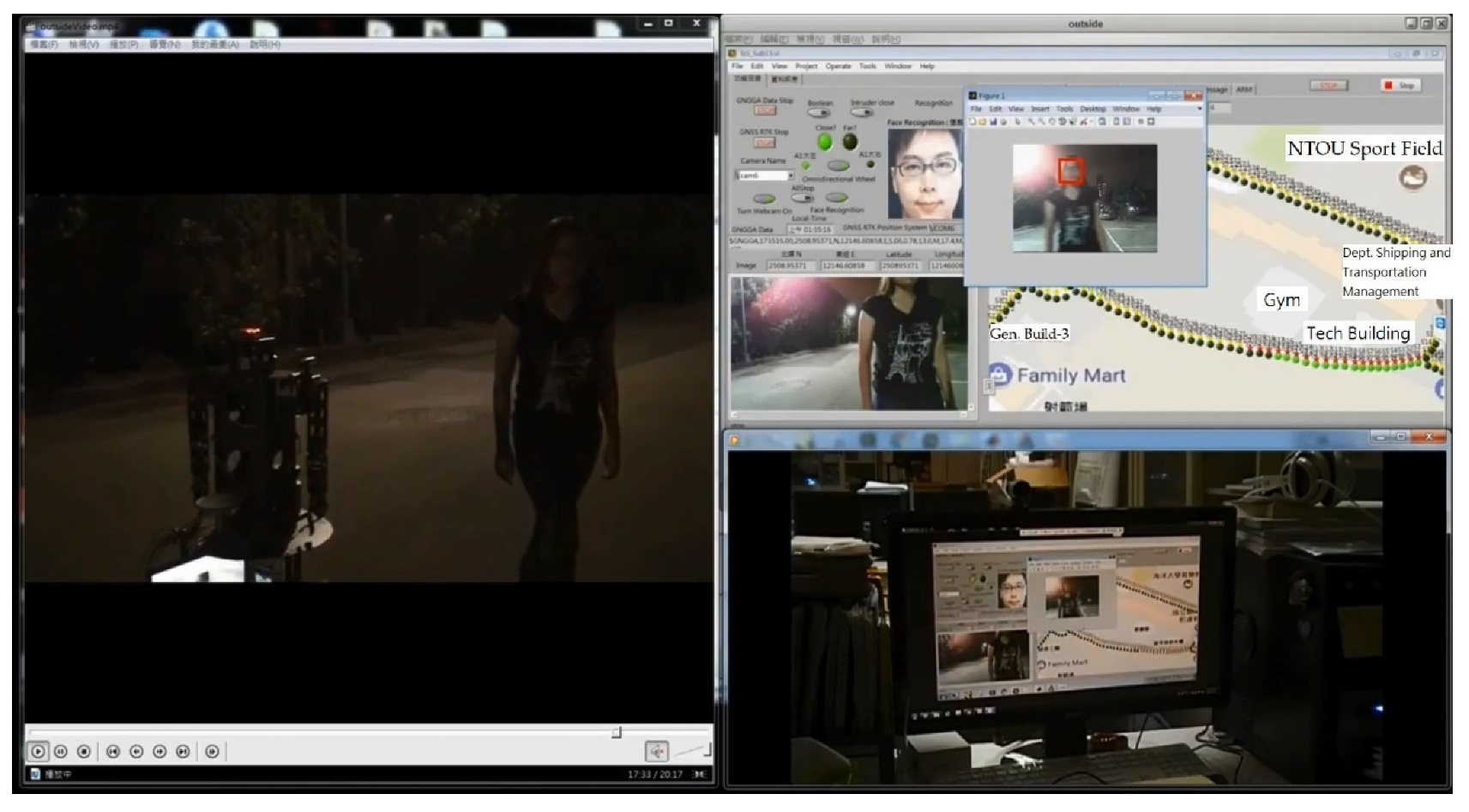

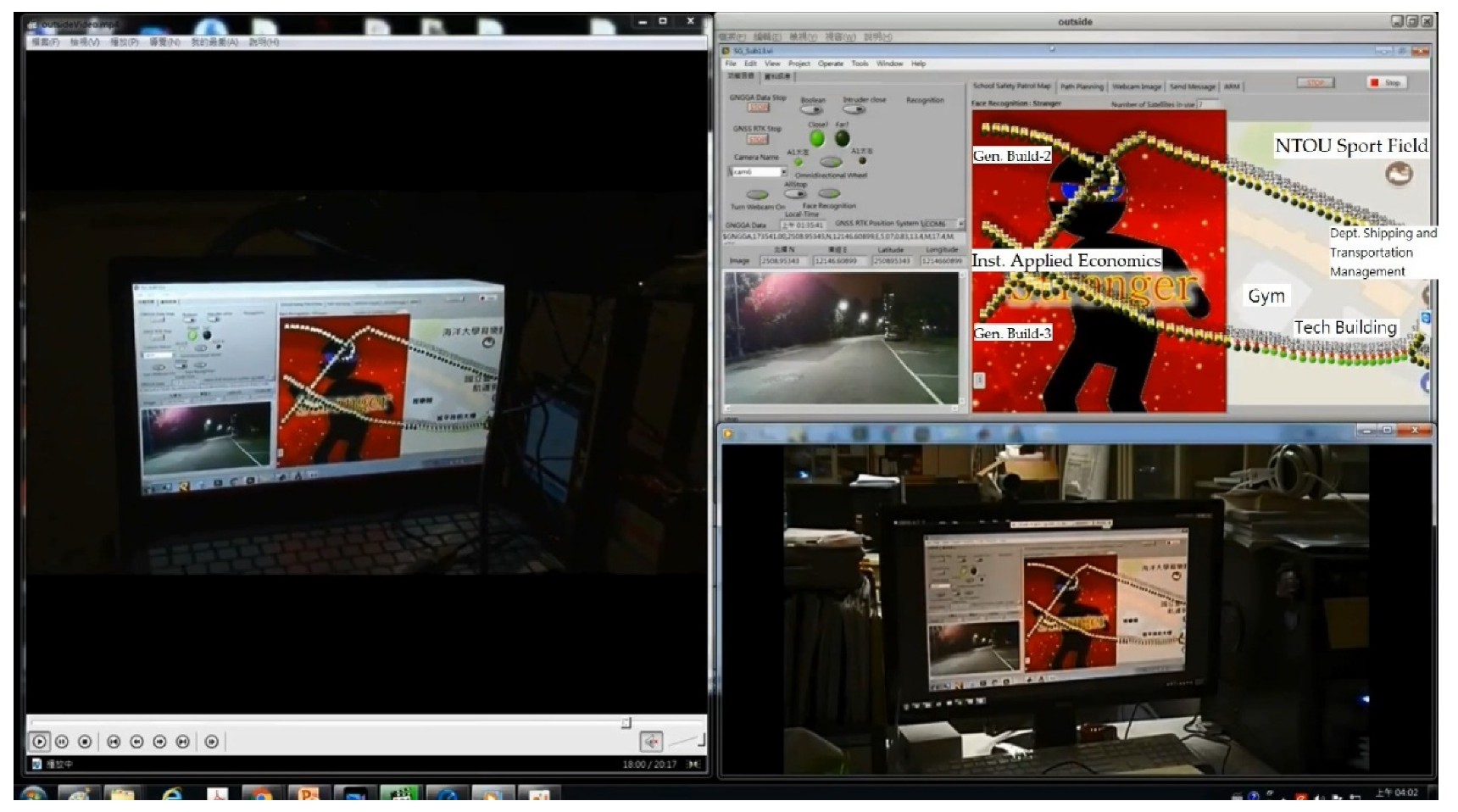

2. Materials and Methods

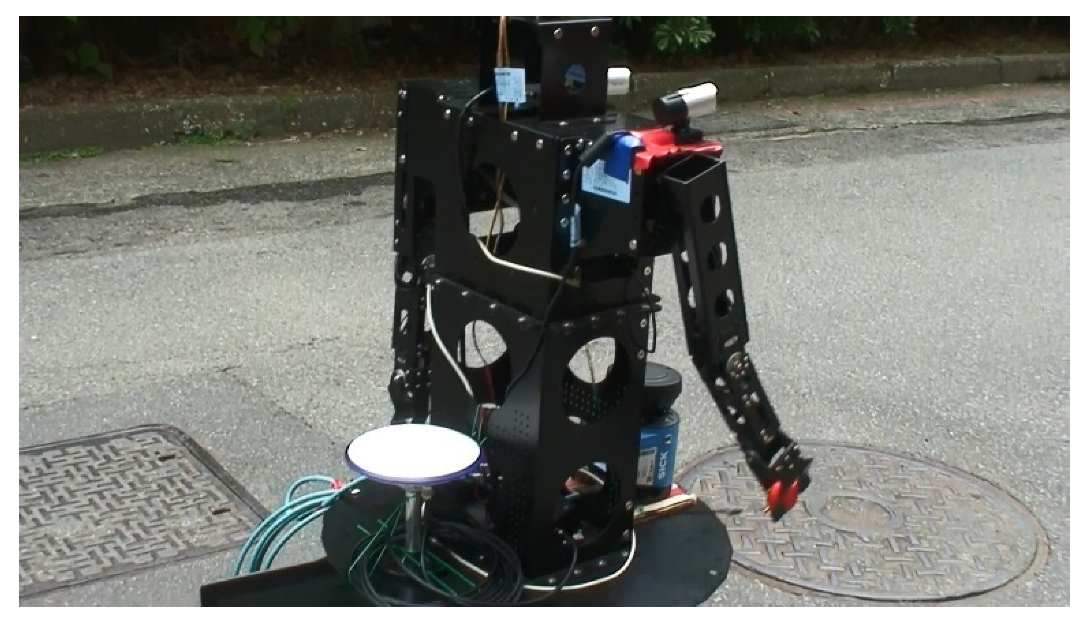

2.1. Composition and Structure of the Robot

2.2. GNSS RTK Positioning System and Differential GPS

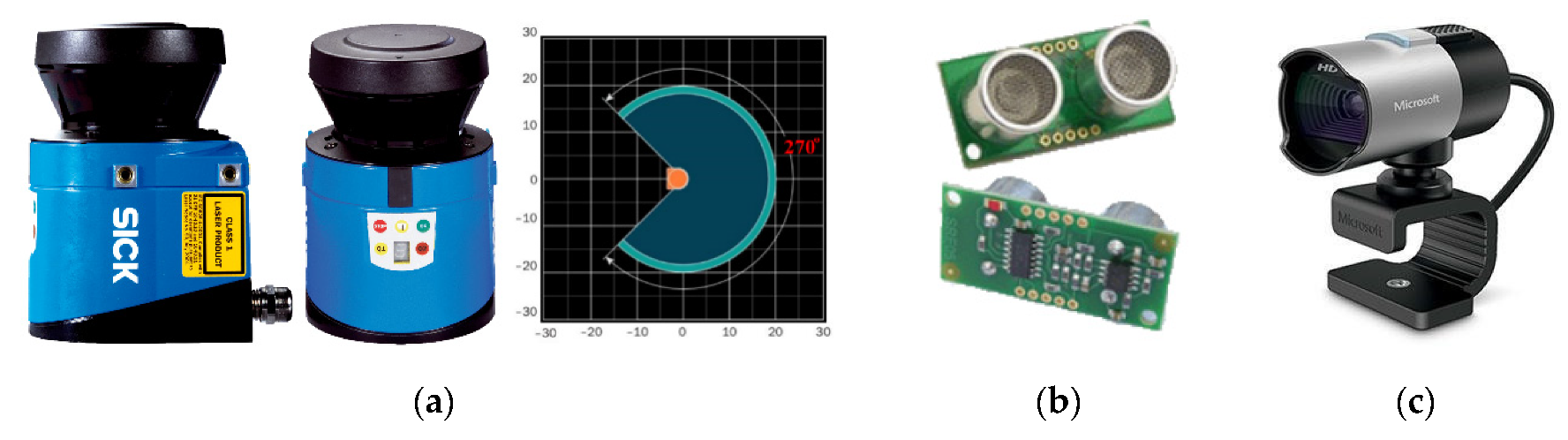

2.3. Environmental Sensors of the Robot

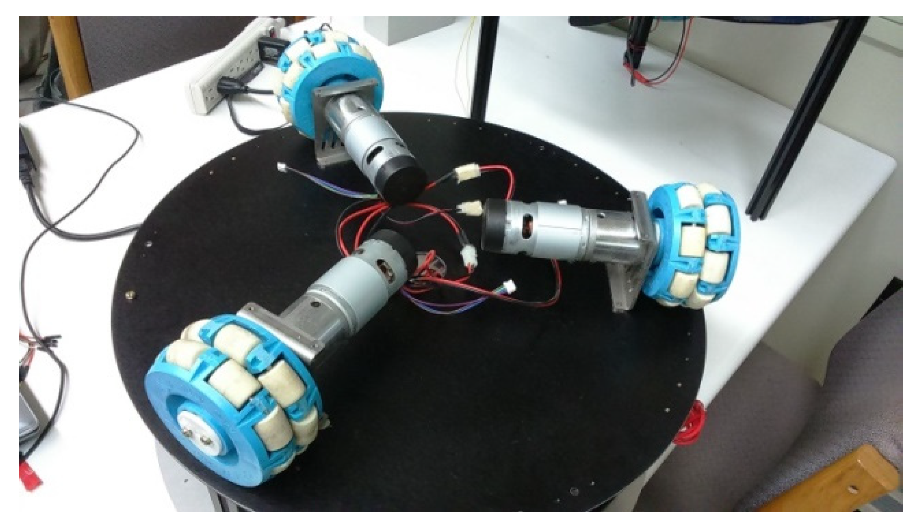

2.4. Composition of the Motion System

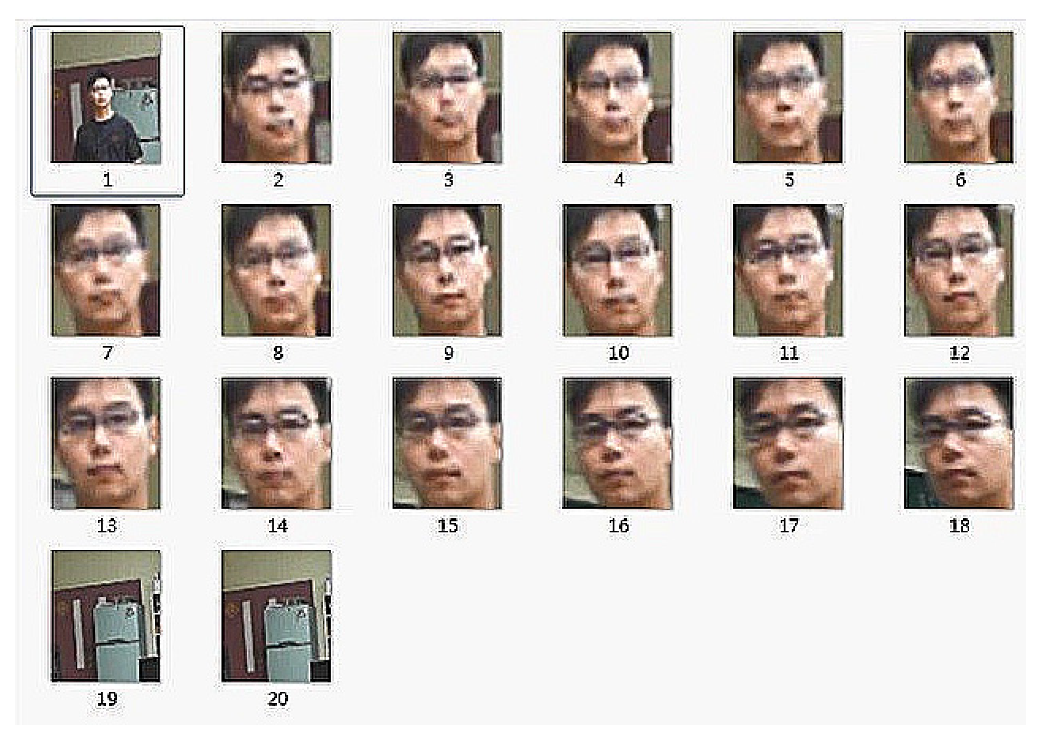

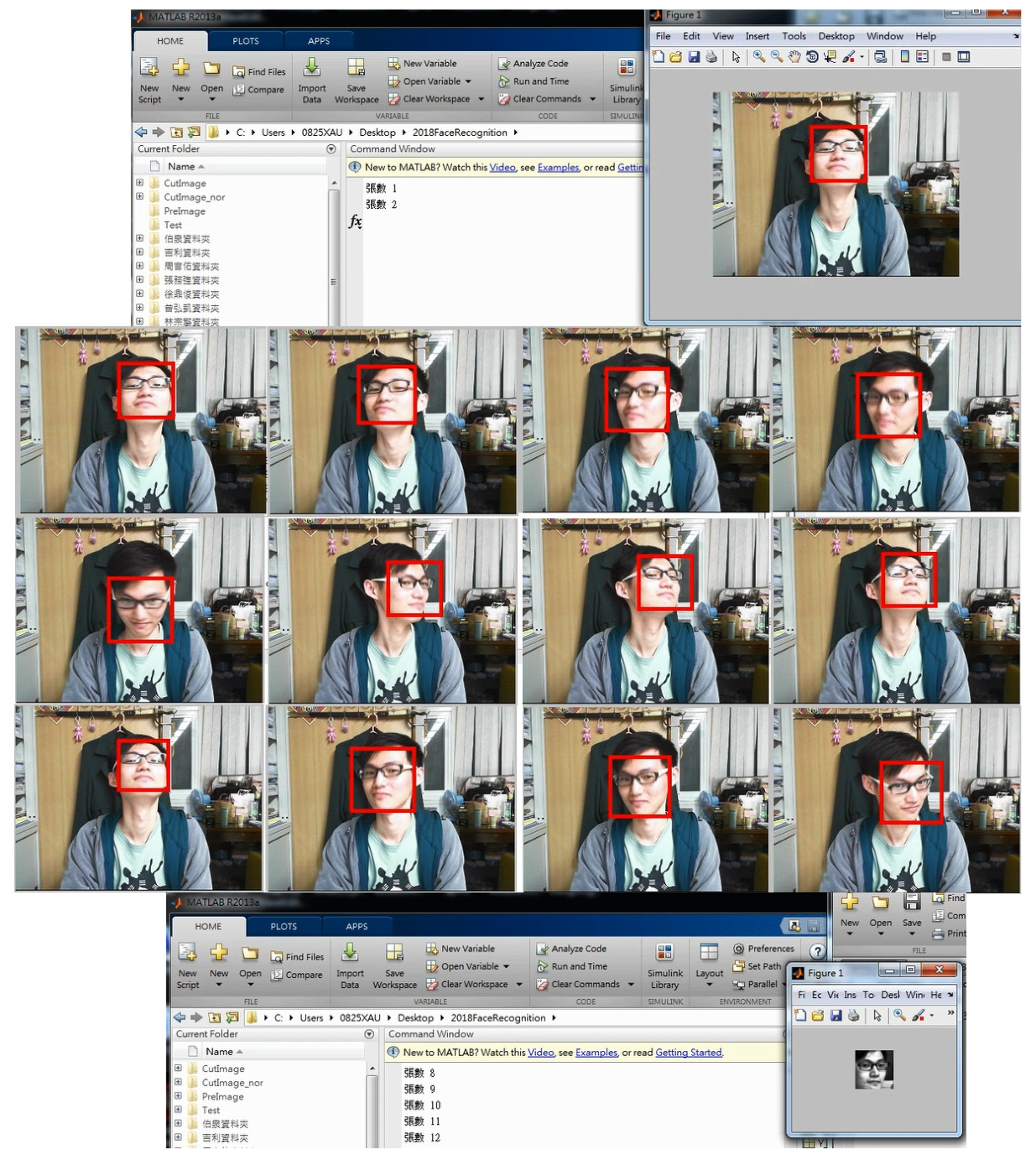

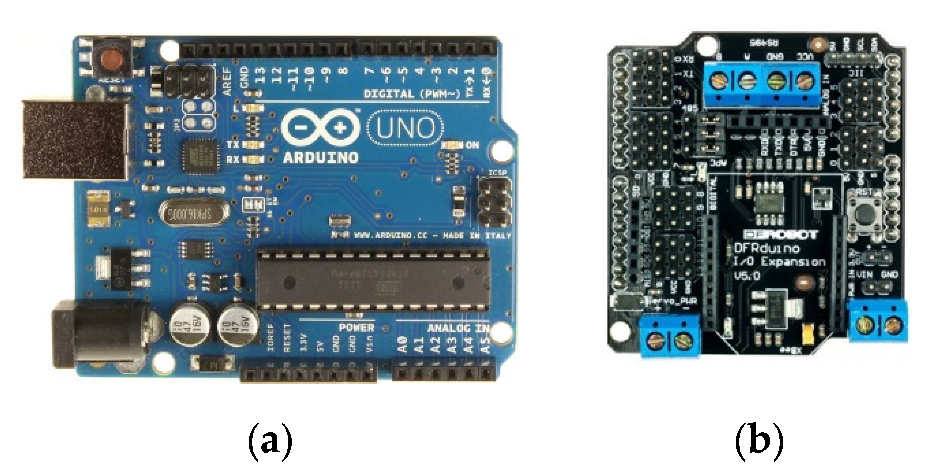

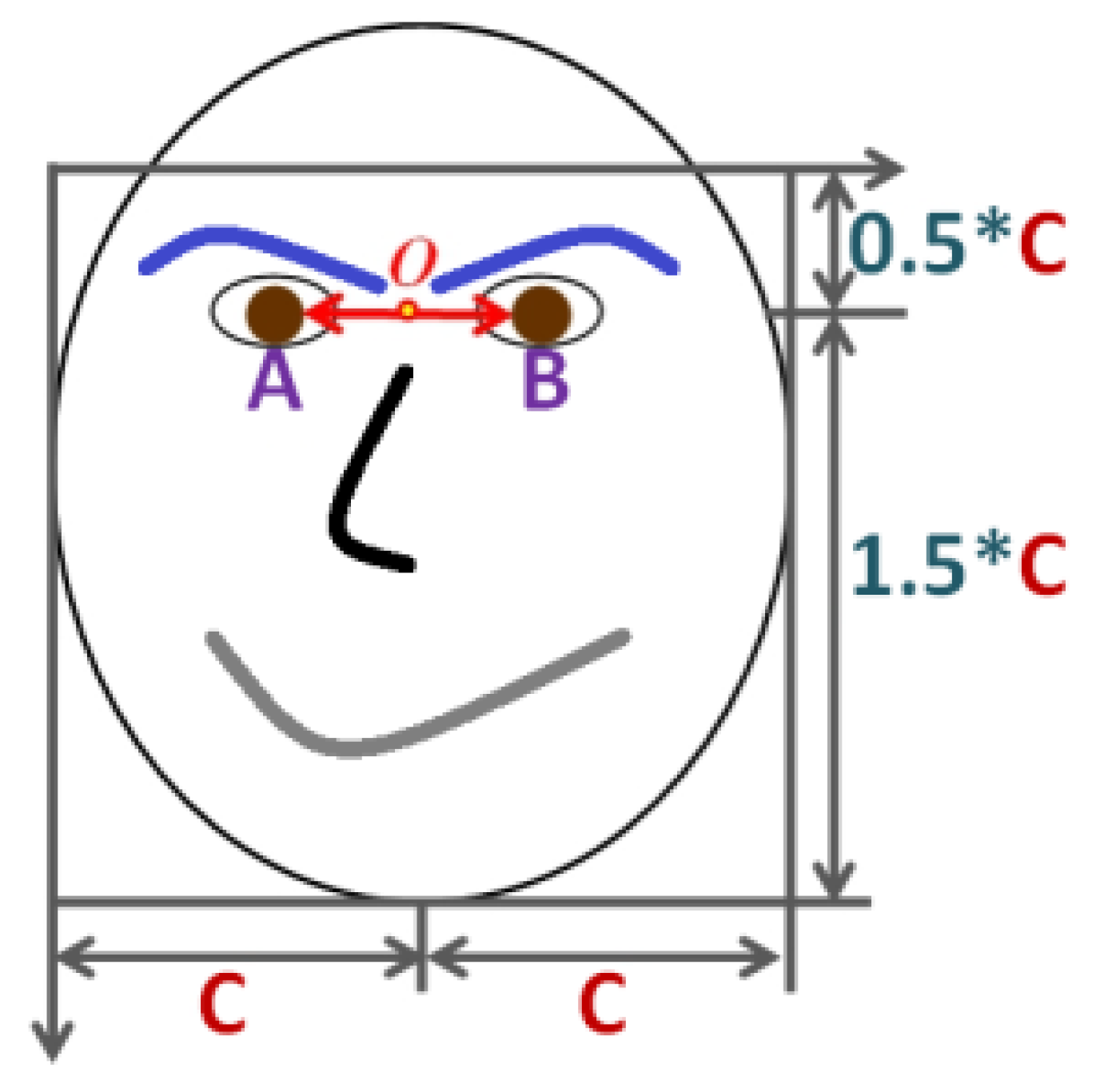

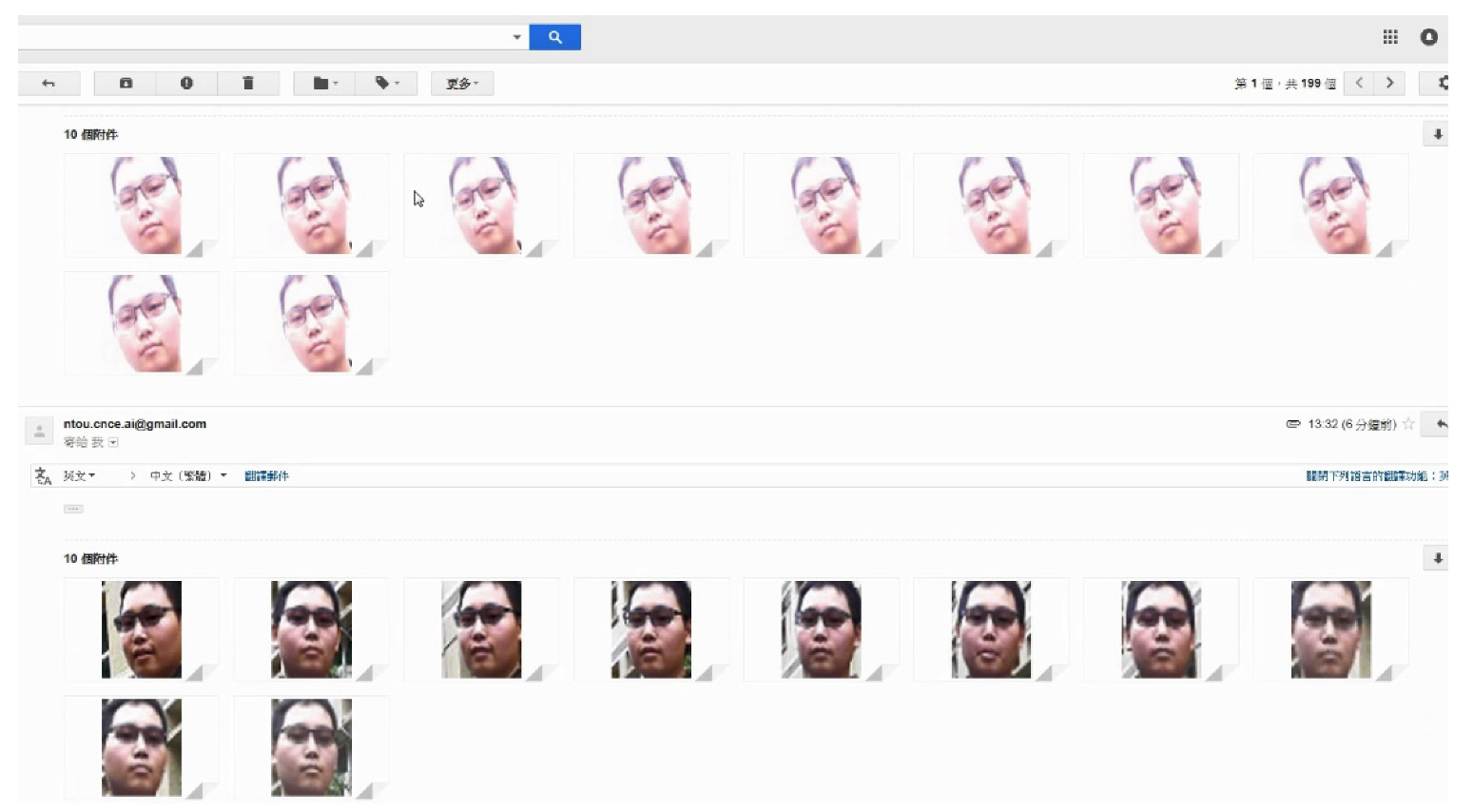

2.5. Face Recognition

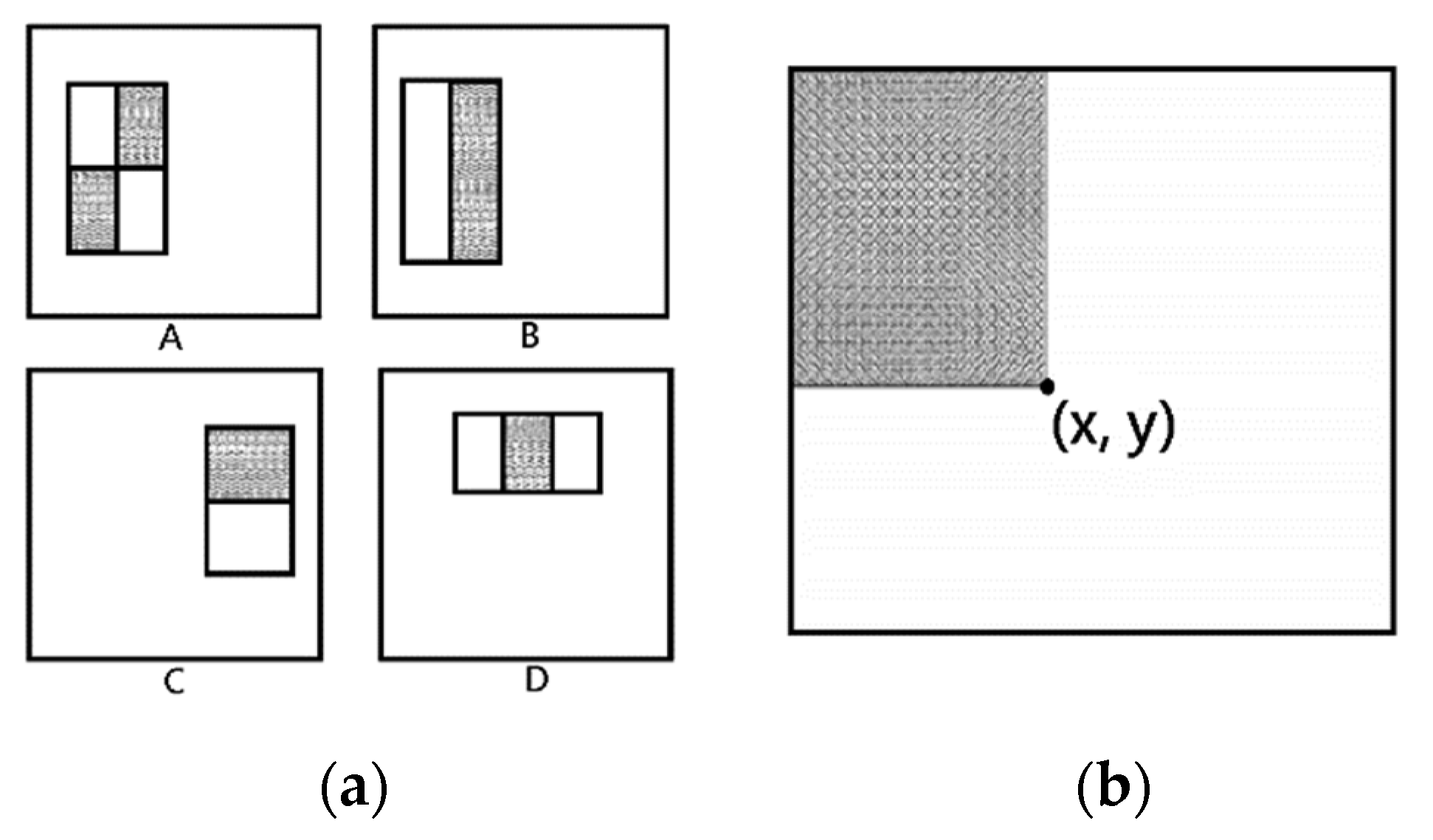

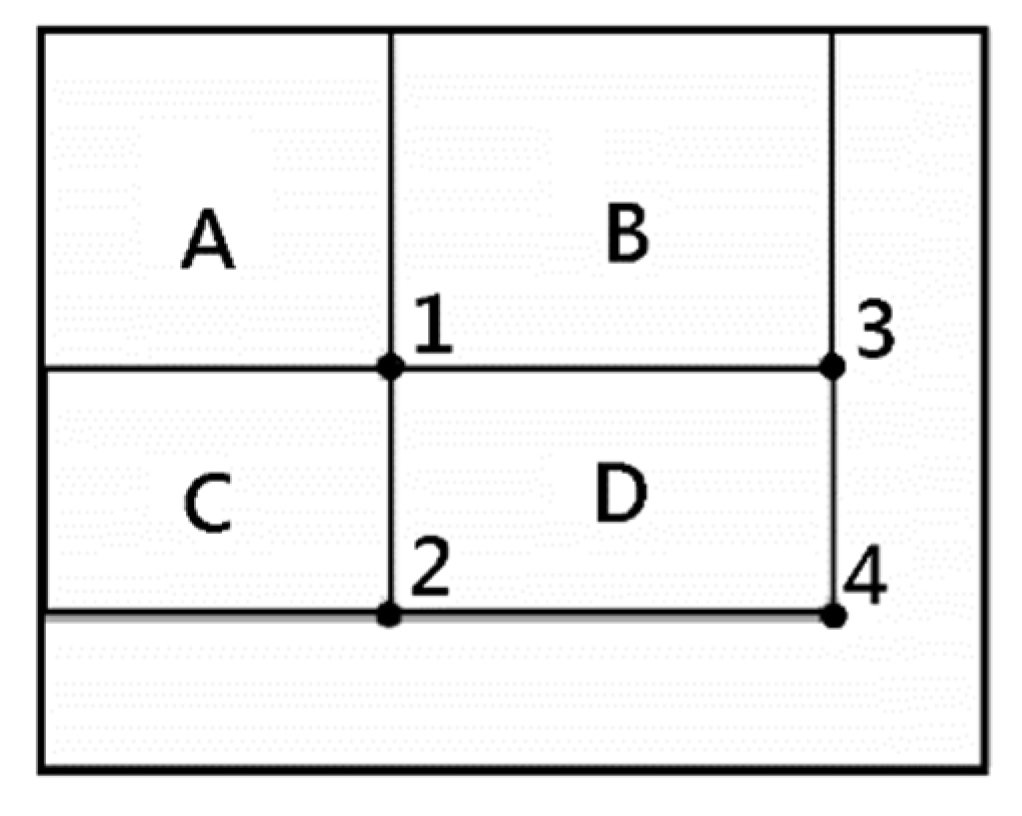

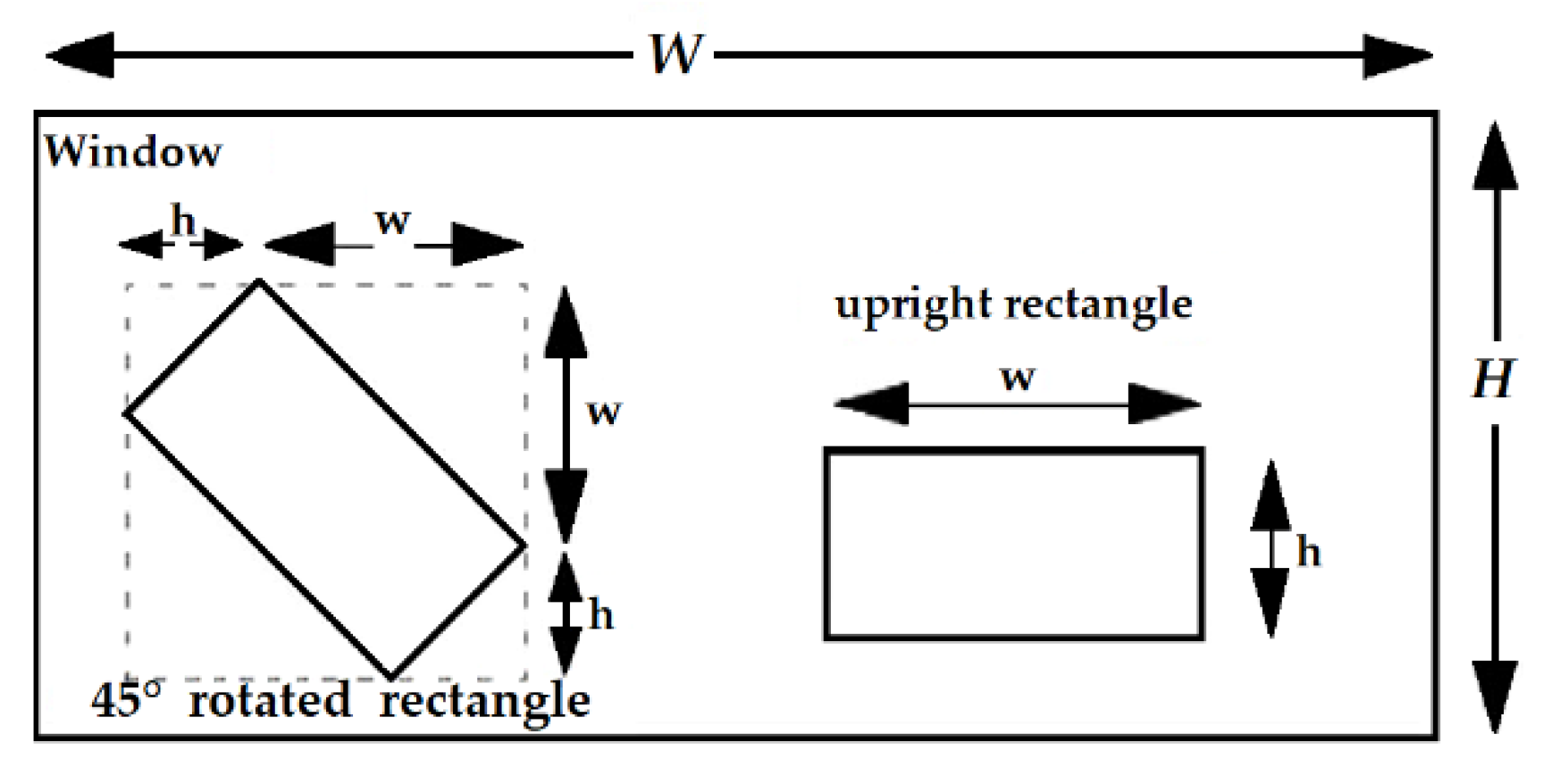

2.5.1. Integral Image

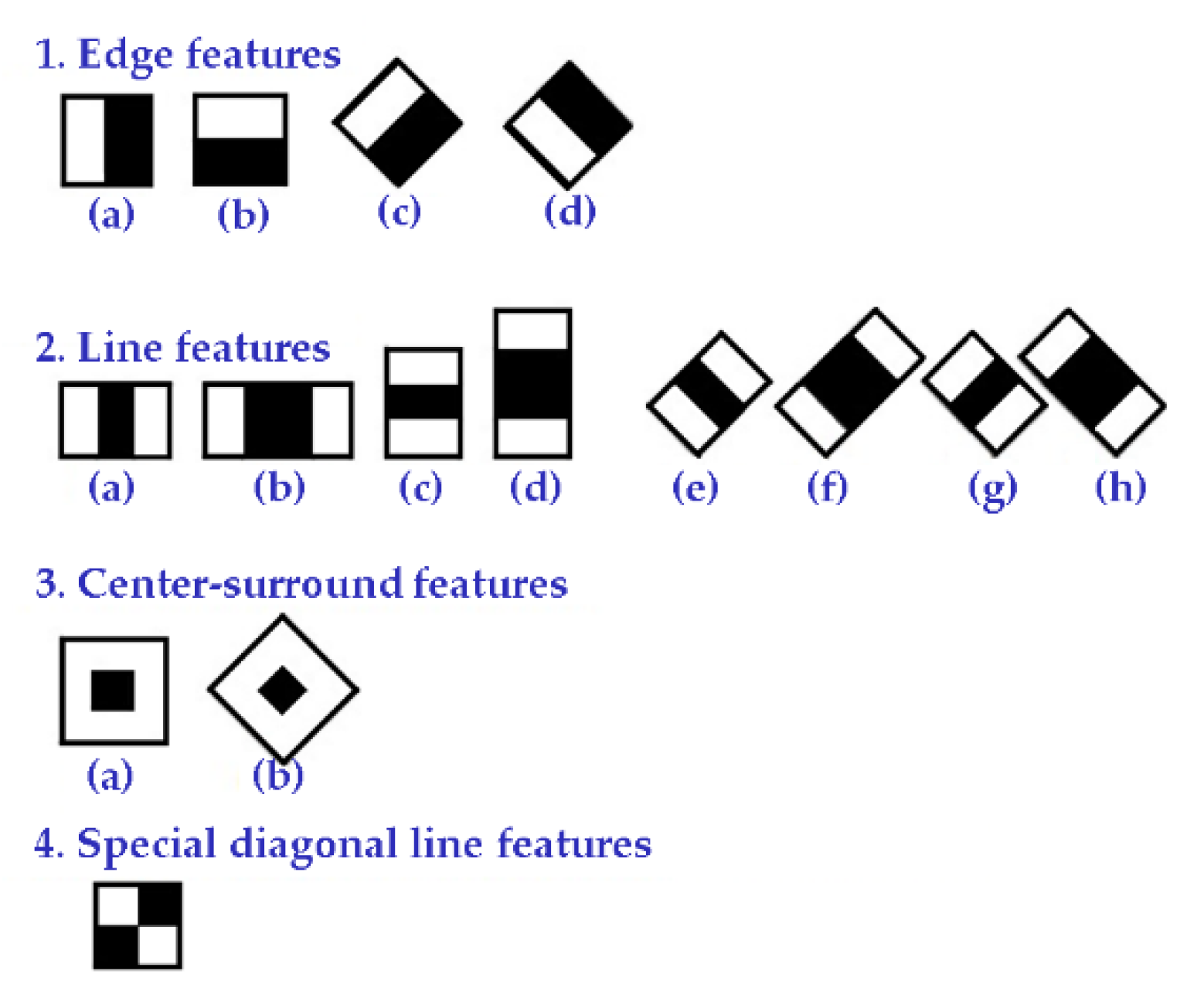

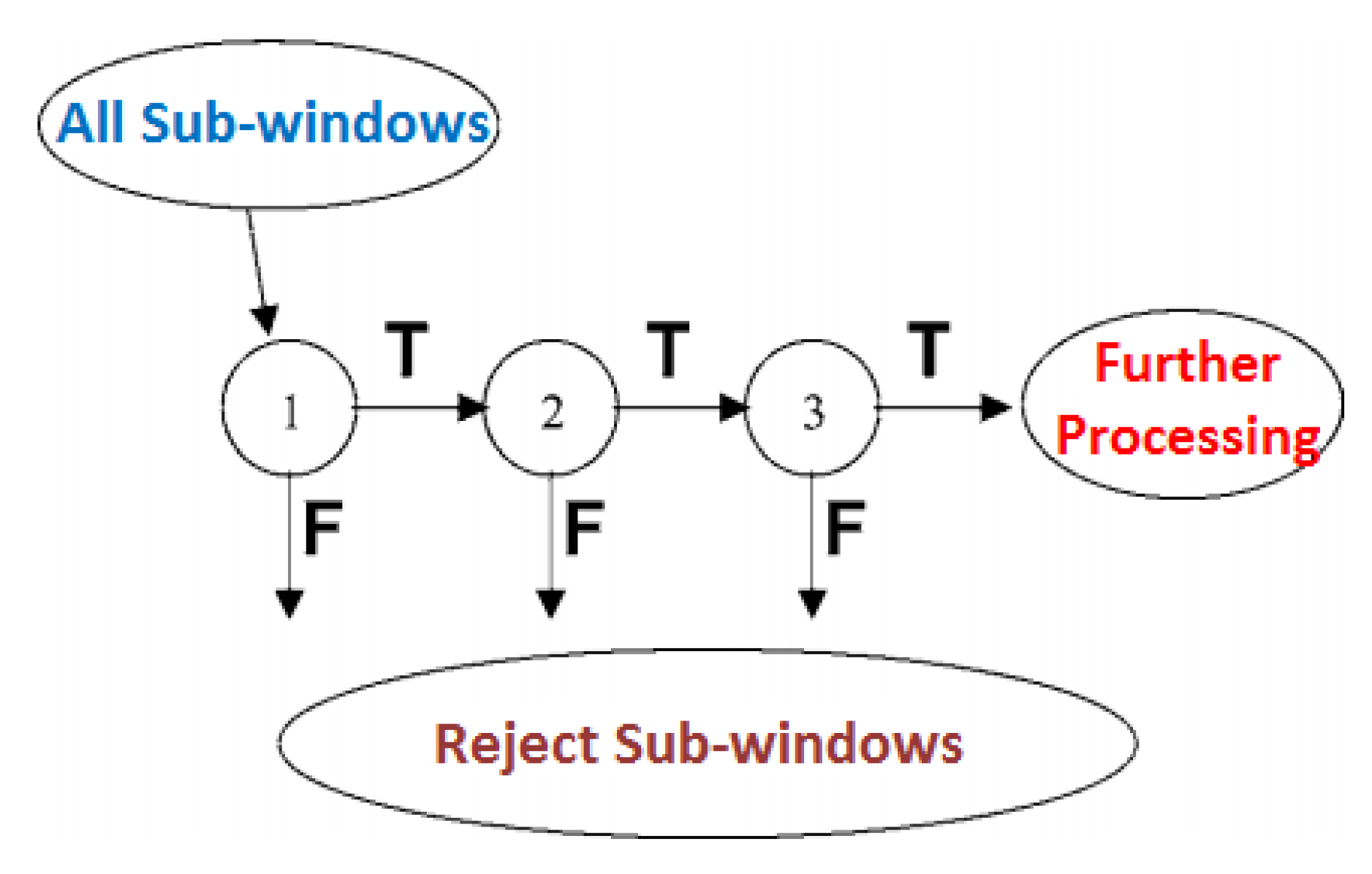

2.5.2. Adaptive Boosting and Preprocessing

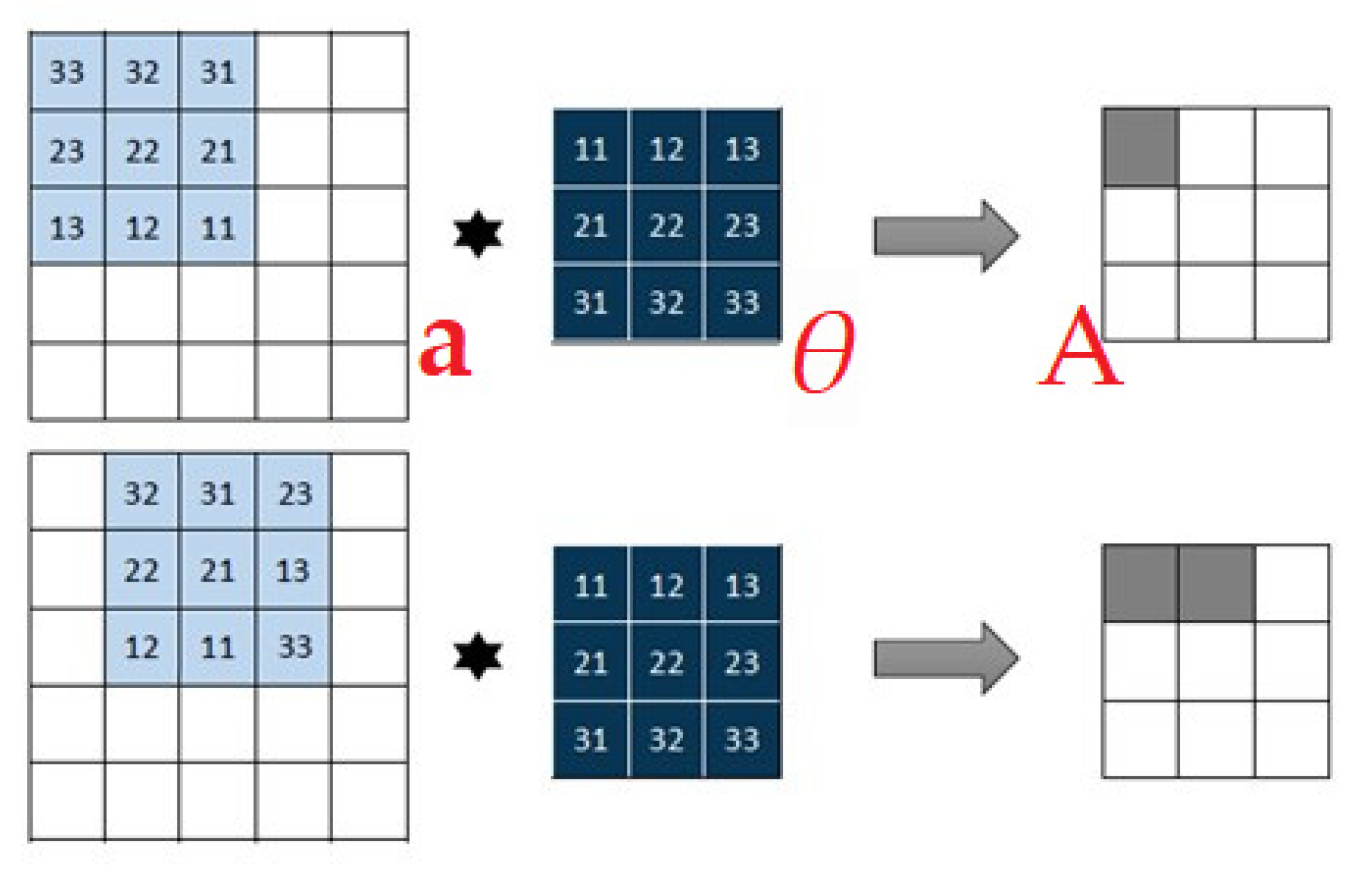

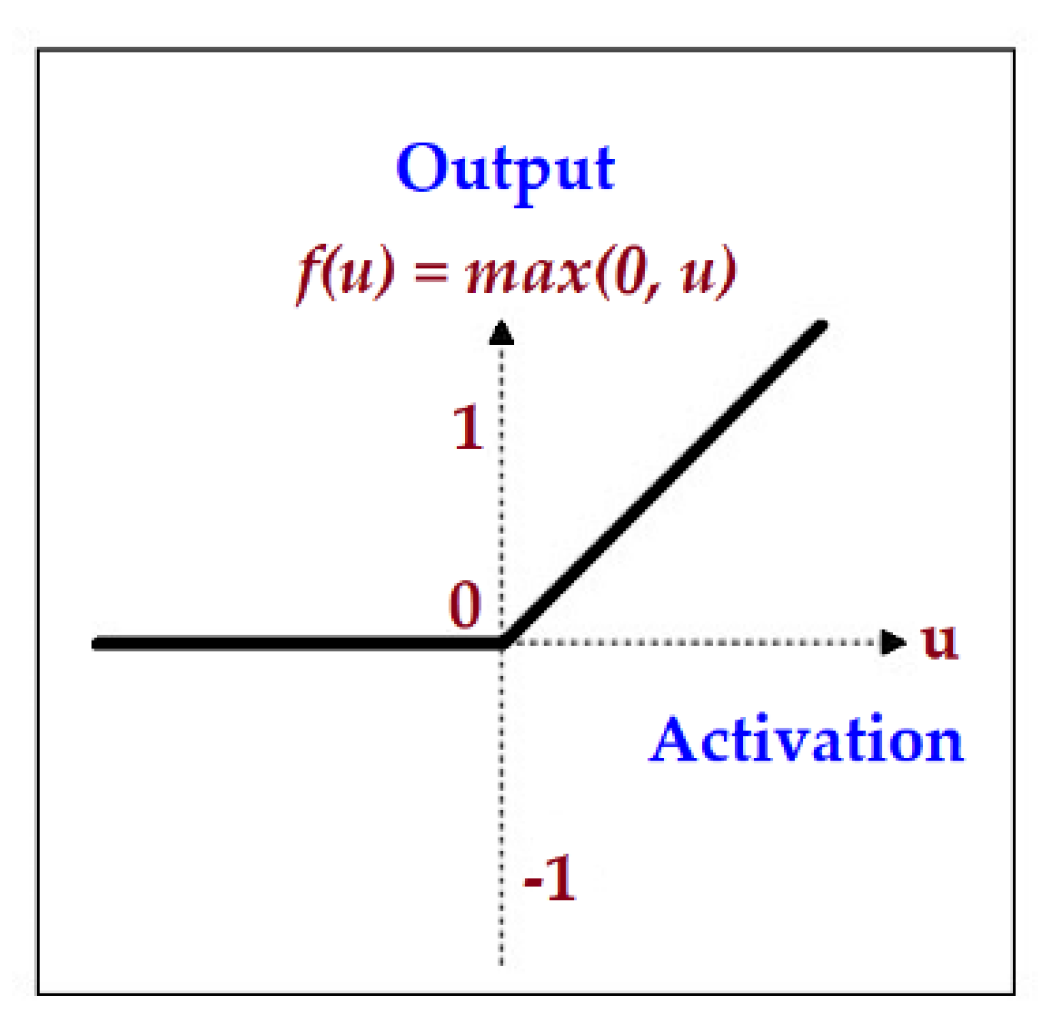

2.5.3. Convolutional Neural Network

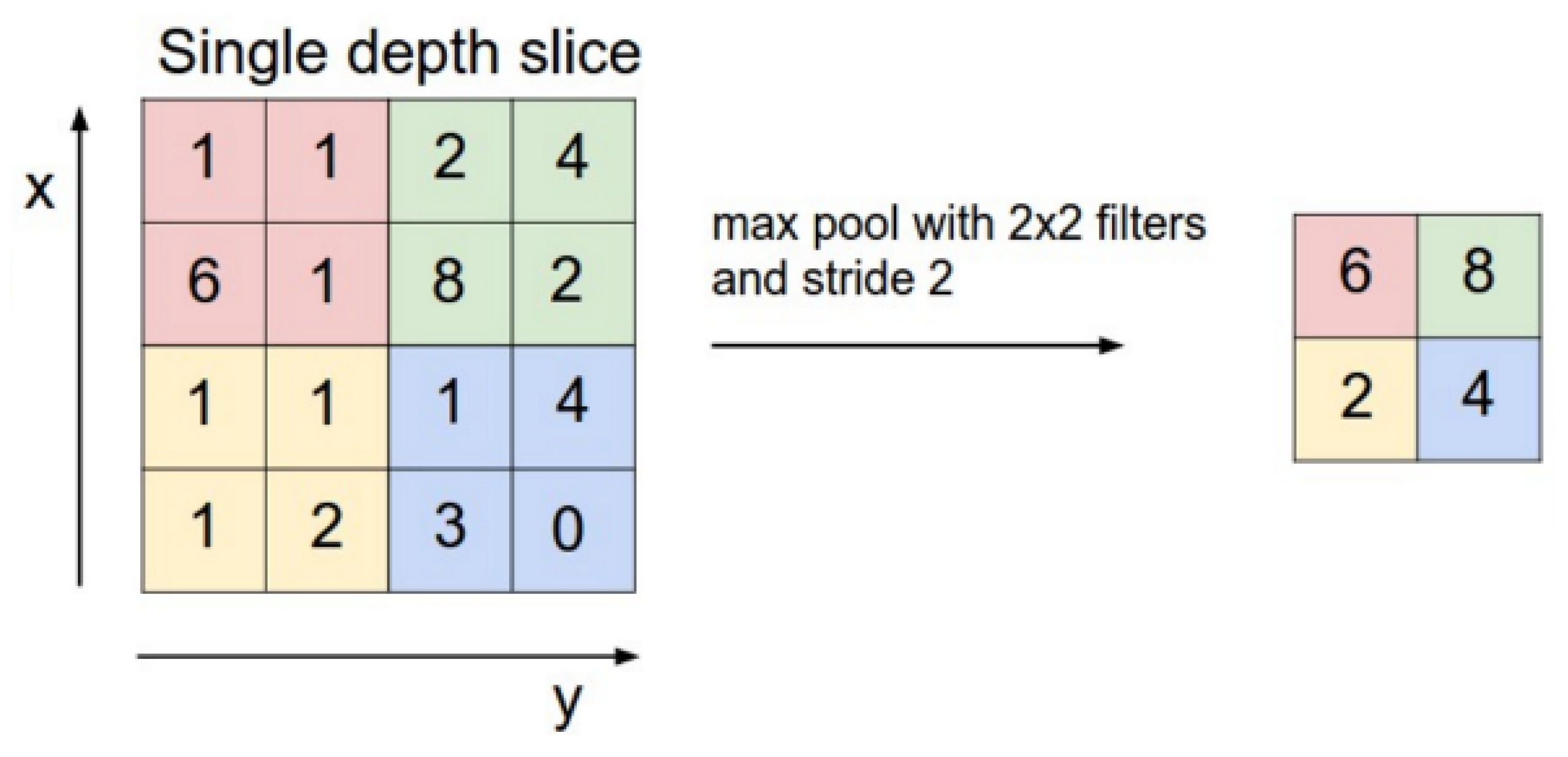

- Max pooling: take the maximum of the four values in each 2 × 2 window. This is the pooling method used in this study, as shown in Figure 15.

- Mean pooling: take the average of the four values.

- Gaussian pooling: borrows from Gaussian blurring; rarely used.

- Trainable pooling: a trained function f takes the four values as input and produces one output; rarely used.

Because the side length of a feature map is not necessarily a multiple of 2, there are also two schemes for handling the edges:

- Ignore edges: the leftover edge rows and columns are discarded.

- Keep edges: the feature map is zero-padded until its side length is a multiple of 2, and then pooled. This is the edge-handling method used in this study.
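The pooling and edge-handling options above can be sketched in plain Python. This is a minimal illustration, not the authors' code: it assumes 2 × 2 windows with stride 2 (implied by the "four values" and "multiple of 2" wording), and the names `pad_to_even` and `pool2x2` are hypothetical. Zero-padding before max pooling is only neutral when activations are non-negative (for example, after a ReLU); with negative values the padded zeros could win the maximum.

```python
from typing import List

Matrix = List[List[float]]


def pad_to_even(fmap: Matrix) -> Matrix:
    """'Keep edges': zero-pad the right column and bottom row so both sides are even."""
    rows = len(fmap)
    cols = len(fmap[0]) if rows else 0
    padded_cols = cols + (cols % 2)
    padded = [list(row) + [0.0] * (padded_cols - cols) for row in fmap]
    if rows % 2:
        padded.append([0.0] * padded_cols)
    return padded


def pool2x2(fmap: Matrix, mode: str = "max", keep_edges: bool = True) -> Matrix:
    """2 x 2 pooling with stride 2; 'ignore edges' simply skips an unpaired last row/column."""
    if keep_edges:
        fmap = pad_to_even(fmap)
    rows = len(fmap)
    cols = len(fmap[0]) if rows else 0
    out: Matrix = []
    # Stopping at rows - 1 / cols - 1 drops a leftover odd edge when keep_edges is False.
    for i in range(0, rows - 1, 2):
        out_row = []
        for j in range(0, cols - 1, 2):
            window = [fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1]]
            if mode == "max":
                out_row.append(max(window))
            elif mode == "mean":
                out_row.append(sum(window) / 4.0)
            else:
                raise ValueError(f"unsupported pooling mode: {mode}")
        out.append(out_row)
    return out


if __name__ == "__main__":
    fmap = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    print(pool2x2(fmap))                    # keep edges, max pooling
    print(pool2x2(fmap, keep_edges=False))  # ignore edges, max pooling
```

With a 3 × 3 map, keeping edges gives a 2 × 2 output (`[[5, 6], [8, 9]]` for max pooling), while ignoring edges gives a 1 × 1 output (`[[5]]`), because the third row and column are discarded.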

2.5.4. Real-Time Face Detection in Dynamic Background

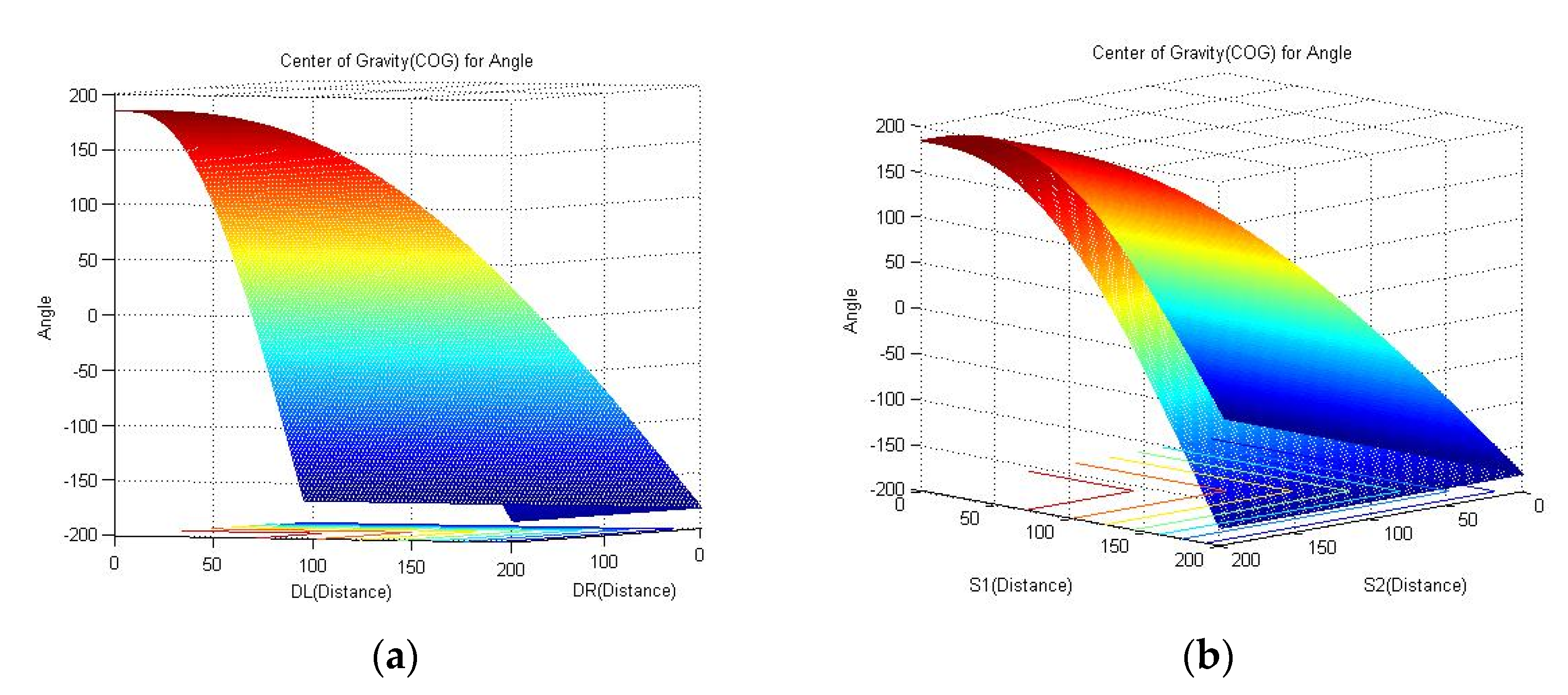

2.6. Fuzzy Control

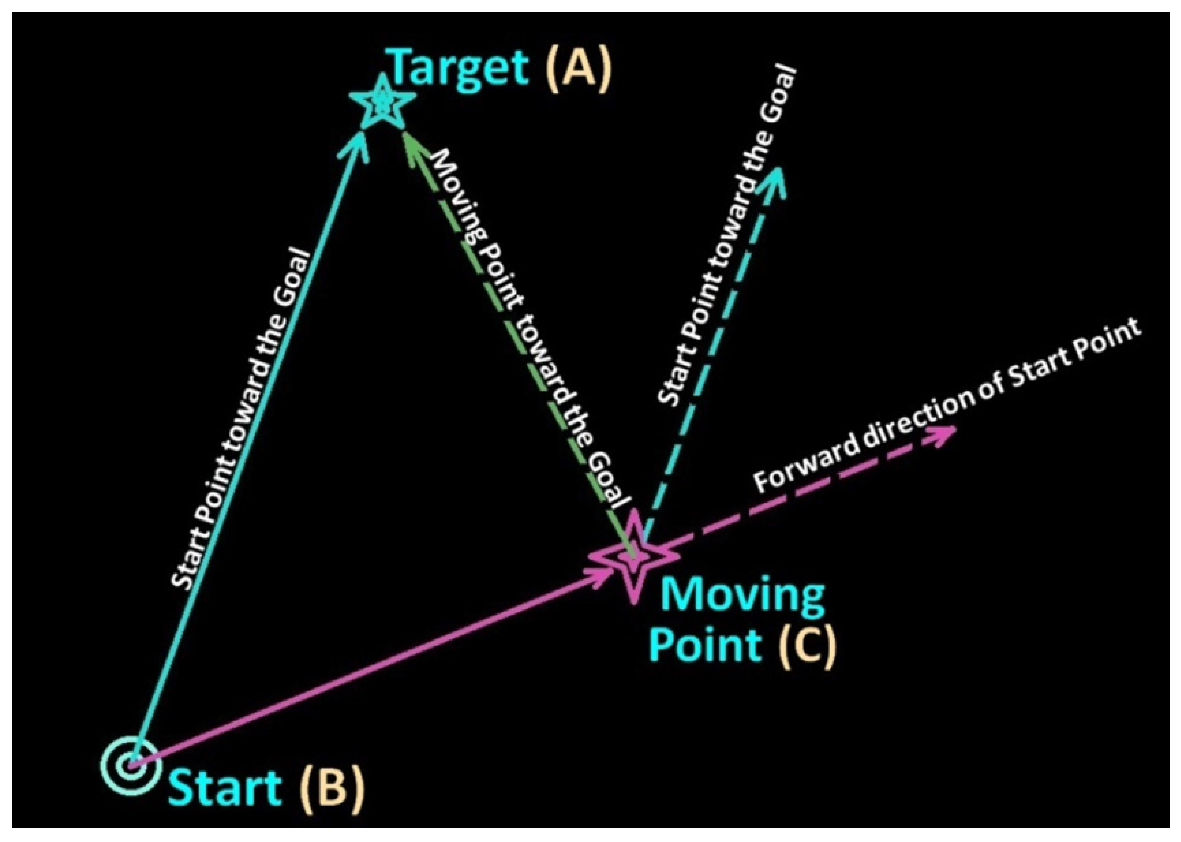

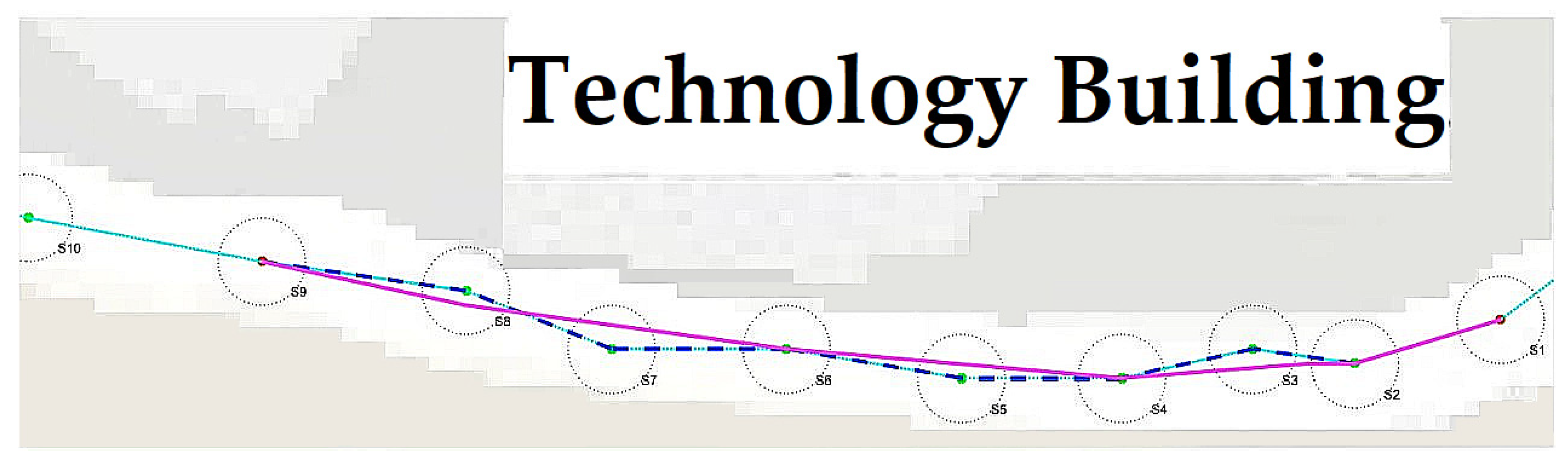

2.7. Path Planning

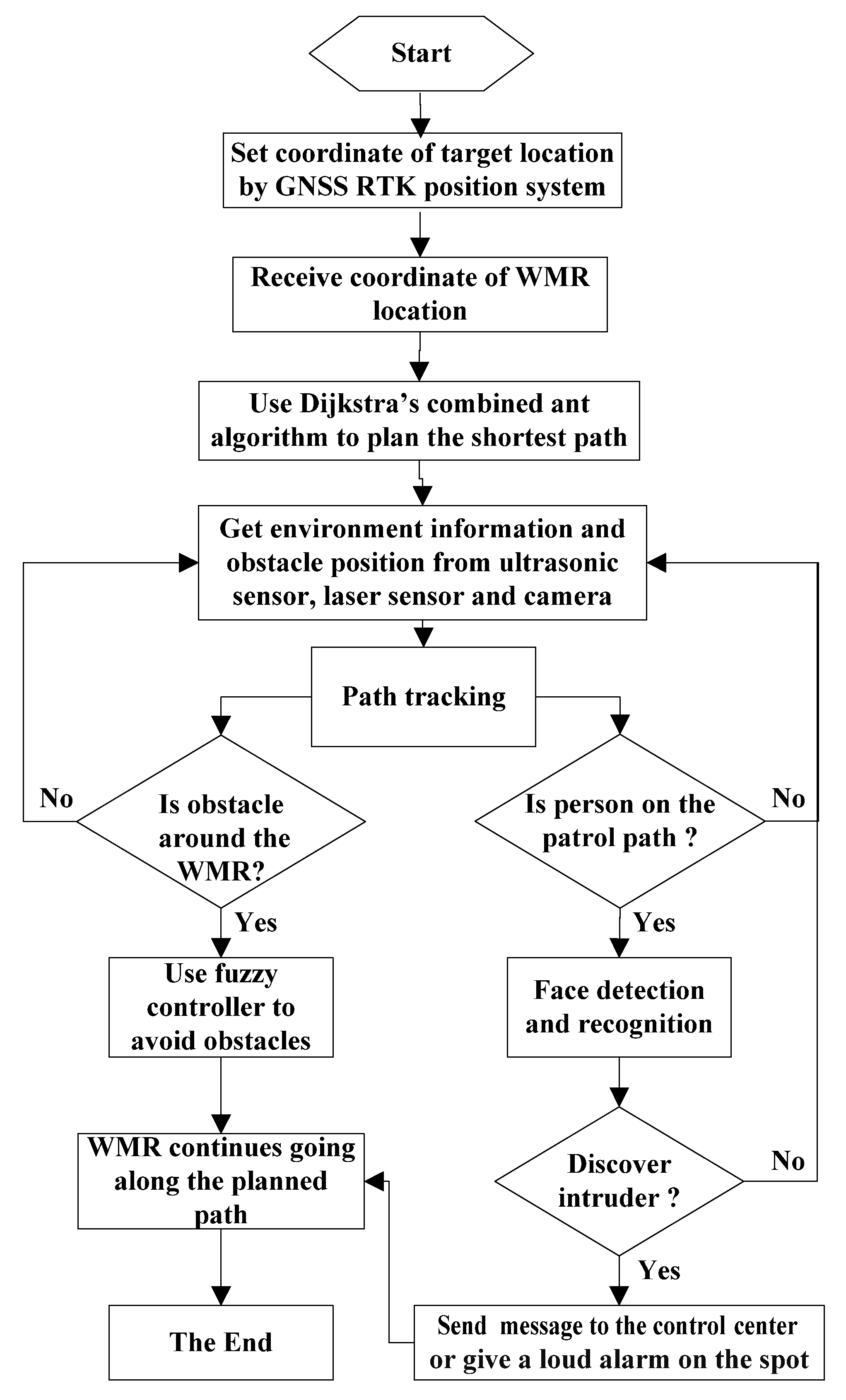

2.8. The Control Scheme

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

| Classification | Misclassifications | Test Samples | False Rate |
|---|---|---|---|
| Class 1 | 1 | 15 | 0.067 |
| Class 2 | 0 | 15 | 0.000 |
| Class 3 | 0 | 15 | 0.000 |
| Class 4 | 0 | 15 | 0.000 |
| Class 5 | 1 | 15 | 0.067 |
| Class 6 | 1 | 15 | 0.067 |
| Class 7 | 2 | 15 | 0.133 |
| Class 8 | 0 | 15 | 0.000 |
| Class 9 | 0 | 15 | 0.000 |
| Class 10 | 0 | 15 | 0.000 |
| Class 11 | 2 | 15 | 0.133 |
| Class 12 | 0 | 15 | 0.000 |
| Total | 7 | 180 | 0.039 |

| Classification | Misclassifications | Test Samples | False Rate |
|---|---|---|---|
| Class 1 | 2 | 10 | 0.200 |
| Class 2 | 1 | 10 | 0.100 |
| Class 3 | 1 | 10 | 0.100 |
| Class 4 | 2 | 10 | 0.200 |
| Class 5 | 1 | 10 | 0.100 |
| Class 6 | 3 | 10 | 0.300 |
| Class 7 | 4 | 10 | 0.400 |
| Class 8 | 2 | 10 | 0.200 |
| Class 9 | 1 | 10 | 0.100 |
| Class 10 | 1 | 10 | 0.100 |
| Class 11 | 5 | 10 | 0.500 |
| Class 12 | 0 | 10 | 0.000 |
| Total | 23 | 120 | 0.192 |
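In both tables the false rate is the number of misclassifications divided by the number of test samples, rounded to three decimals. The short check below recomputes the per-class and total rates from the counts listed in the two tables; the helper name `false_rates` is hypothetical and only serves to make the arithmetic explicit.

```python
from typing import List, Tuple

# Misclassification counts per class (Classes 1-12), copied from the two tables above.
TABLE_15_SAMPLES = [1, 0, 0, 0, 1, 1, 2, 0, 0, 0, 2, 0]  # 15 test samples per class
TABLE_10_SAMPLES = [2, 1, 1, 2, 1, 3, 4, 2, 1, 1, 5, 0]  # 10 test samples per class


def false_rates(errors: List[int], samples_per_class: int) -> Tuple[List[float], int, int, float]:
    """Return per-class rates, total errors, total samples, and the overall rate."""
    per_class = [round(e / samples_per_class, 3) for e in errors]
    total_errors = sum(errors)
    total_samples = samples_per_class * len(errors)
    return per_class, total_errors, total_samples, round(total_errors / total_samples, 3)


if __name__ == "__main__":
    for name, errors, n in [("15 per class", TABLE_15_SAMPLES, 15),
                            ("10 per class", TABLE_10_SAMPLES, 10)]:
        per_class, total_errors, total_samples, overall = false_rates(errors, n)
        print(name, per_class, f"total {total_errors}/{total_samples} = {overall}")
```

Running it reproduces the Total rows: 7/180 ≈ 0.039 for the first table and 23/120 ≈ 0.192 for the second.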

Appendix C

| Fuzzy Rules | Input of DL | Input of DF | Input of DR | Output of Fuzzy Control | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Near | Medium | Far | Near | Medium | Far | Near | Medium | Far | VLL | VL | S | VR | VRR | |

| 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

| 2 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |

| 3 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 |

| 4 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

| 5 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

| 6 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 7 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

| 8 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

| 9 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 10 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 11 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |

| 12 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 |

| 13 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

| 14 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0 |

| 15 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 16 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

| 17 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 |

| 18 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 19 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 20 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 |

| 21 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 1 |

| 22 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 23 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 |

| 24 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 25 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 26 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 |

| 27 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 |
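The 27-rule table can be encoded directly as a lookup from the (DL, DF, DR) levels to an output label. The sketch below does that and, as an assumption not stated in this appendix, evaluates the rules Mamdani-style: min for the AND of the three antecedents and max to aggregate rules that share an output. The membership functions and the defuzzification step are not given here, so the function takes membership degrees as input and stops at the per-output firing strengths. The names `RULES` and `fire_rules` are hypothetical, and DL, DF, DR and the output labels are treated as opaque labels.

```python
from itertools import product
from typing import Dict, Tuple

LEVELS = ("Near", "Medium", "Far")
OUTPUTS = ("VLL", "VL", "S", "VR", "VRR")

# Consequents of rules 1-27 in table order. The table enumerates DL (outermost),
# then DF, then DR over Near/Medium/Far, which matches itertools.product order.
_CONSEQUENTS = [
    "VRR", "VRR", "VRR", "S", "VR", "VR", "S", "VR", "VR",     # DL = Near (rules 1-9)
    "VLL", "VRR", "VRR", "VL", "VR", "VR", "VL", "S", "VR",    # DL = Medium (rules 10-18)
    "VLL", "VLL", "VRR", "VLL", "VL", "VR", "VLL", "VL", "S",  # DL = Far (rules 19-27)
]
RULES: Dict[Tuple[str, str, str], str] = dict(zip(product(LEVELS, repeat=3), _CONSEQUENTS))

Membership = Dict[str, float]  # linguistic level -> membership degree in [0, 1]


def fire_rules(dl: Membership, df: Membership, dr: Membership) -> Dict[str, float]:
    """Firing strength per output label: min over antecedents, max over rules (assumed)."""
    strengths = {label: 0.0 for label in OUTPUTS}
    for (l_level, f_level, r_level), label in RULES.items():
        w = min(dl.get(l_level, 0.0), df.get(f_level, 0.0), dr.get(r_level, 0.0))
        strengths[label] = max(strengths[label], w)
    return strengths


if __name__ == "__main__":
    # DL fully Far, DF partly Near / partly Medium, DR fully Near.
    print(fire_rules({"Far": 1.0}, {"Near": 0.7, "Medium": 0.3}, {"Near": 1.0}))
```

In this example rules 19 and 22 both fire and both map to VLL, so max aggregation keeps the stronger strength of 0.7 for VLL while every other output stays at 0.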

Appendix D. The Use of GNSS

References

- Juang, J.G.; Chen, H.S.; Lin, C.C. Intelligent path tracking and motion control for wheeled mobile robot. GESTS Int. Trans. Comput. Sci. Eng. 2010, 61, 57–68.

- Ohya, A.; Kosaka, A.; Kak, A. Vision-based navigation by a mobile robot with obstacle avoidance using single-camera vision and ultrasonic sensing. IEEE Trans. Robot. Autom. 1998, 14, 969–978.

- Juang, J.-G.; Tsai, Y.-J.; Fan, Y.-W. Visual recognition and its application to robot arm control. Appl. Sci. 2015, 5, 851–880.

- Coelho, J.; Ribeiro, F.; Dias, B.; Lopes, G.; Flores, P. Trends in the Control of Hexapod Robots: A survey. Robotics 2021, 10, 100.

- Azizi, M.R.; Rastegarpanah, A.; Stolkin, R. Motion planning and control of an omnidirectional mobile robot in dynamic environments. Robotics 2021, 10, 48.

- Qian, J.; Zi, B.; Wang, D.; Ma, Y.; Zhang, D. The design and development of an omni-directional mobile robot oriented to an intelligent manufacturing system. Sensors 2017, 17, 2073.

- Kanjanawanishkul, K. Omnidirectional wheeled mobile robots: Wheel types and practical applications. Int. J. Adv. Mechatron. Syst. 2015, 6, 289–302.

- Juang, J.G.; Hsu, K.J.; Lin, C.M. A wheeled mobile robot path-tracking system based on image processing and adaptive CMAC. J. Mar. Sci. Technol. 2014, 22, 331–340.

- Pastore, T.H.; Everett, H.R.; Bonner, K. Mobile Robots for Outdoor Security Applications. 1999. Available online: https://www.semanticscholar.org (accessed on 21 March 2018).

- Ohno, K.; Tsubouchi, T.; Shigematsu, B.; Yuta, S. Differential GPS and odometry-based outdoor navigation of a mobile robot. Adv. Robot. 2004, 18, 611–635.

- Maram, S.S.; Vishnoi, T.; Pandey, S. Neural Network and ROS based threat detection and patrolling assistance. In Proceedings of the International Conference on Advanced Computational and Communication Paradigms, Gangtok, India, 25–28 February 2019.

- Fava, A.D.; Satler, M.; Tripicchio, P. Visual navigation of mobile robots for autonomous patrolling of indoor and outdoor areas. In Proceedings of the Mediterranean Conference on Control and Automation, Torremolinos, Spain, 16 July 2015.

- Shin, Y.; Jung, C.; Chung, W. Drivable road region detection using a single laser range finder for outdoor patrol robots. In Proceedings of the IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010.

- Kartikey, A.D.; Srivastava, U.; Srivastava, V.; Rajesh, S. Nonholonomic shortest robot path planning in a dynamic environment using polygonal obstacles. In Proceedings of the IEEE International Conference on Industrial Technology, Viña del Mar, Chile, 14–17 March 2010.

- Lin, R.; Li, M.; Sun, L. Real-time objects recognition and obstacles avoidance for mobile robot. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Shenzhen, China, 12–14 December 2013.

- Sun, T.; Wang, Y.; Yang, J.; Hu, X. Convolution neural networks with two pathways for image style recognition. IEEE Trans. Image Process. 2017, 26, 4102–4113.

- Huang, G.S.; Tung, C.K.; Lin, H.C.; Hsiao, S.H. Inverse kinematics analysis trajectory planning for a robot arm. In Proceedings of the 8th Asian Control Conference, Kaohsiung, Taiwan, 15–18 May 2011.

- Lian, S.H. Fuzzy logic control of an obstacle avoidance robot. In Proceedings of the 5th IEEE International Conference on Fuzzy Systems, New Orleans, LA, USA, 11 September 1996.

- Chung, J.H.; Yi, B.J.; Kim, W.K.; Lee, H. The dynamic modeling and analysis for an omnidirectional mobile robot with three caster wheels. In Proceedings of the 2003 IEEE International Conference on Robotics & Automation, Taipei, Taiwan, 14–19 September 2003.

- Aman, M.S.; Mahmud, M.A.; Jiang, H.; Abdelgawad, A.; Yelamarthi, K. A sensor fusion methodology for obstacle avoidance robot. In Proceedings of the IEEE International Conference on Electro Information Technology, Grand Forks, ND, USA, 19–21 May 2016.

- Fukai, H.; Mitsukura, Y.; Xu, G. The calibration between range sensor and mobile robot, and construction of an obstacle avoidance robot. In Proceedings of the 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012.

- Liu, Y.; Wu, X.; Zhu, J.; Lew, J. Omni-directional mobile robot controller design by trajectory linearization. In Proceedings of the American Control Conference, Denver, CO, USA, 4–6 June 2003.

- Lin, K.H.; Lee, H.S.; Chen, W.-T. Implementation of obstacle avoidance and ZigBee control functions for omni directional mobile robot. In Proceedings of the 2008 IEEE International Conference on Advanced Robotics and Its Social Impacts, Taipei, Taiwan, 23–25 August 2008.

- Zhang, L.; Min, H.; Wei, H.; Huang, H. Global path planning for mobile robot based on A* algorithm and genetic algorithm. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Guangzhou, China, 11–14 December 2012.

- Fadzli, S.A.; Abdulkadir, S.I.; Makhtar, M.; Jamal, A.A. Robotic indoor path planning using Dijkstra’s algorithm with multi-layer dictionaries. In Proceedings of the 2nd International Conference on Information Science and Security, Seoul, Korea, 14–16 December 2015.

- Kho, T.; Salih, M.H.; Woo, Y.S.; Ng, Z.; Min, J.J.; Yee, F. Enhance implementation of embedded robot auto-navigation system using FPGA for better performance. In Proceedings of the 3rd International Conference on Electronic Design, Phuket, Thailand, 11–12 August 2016.

- North, E.; Georgy, J.; Tarbouchi, M.; Iqbal, U.; Noureldin, A. Enhanced mobile robot outdoor localization using INS/GPS integration. In Proceedings of the International Conference on Computer Engineering & Systems, Cairo, Egypt, 14–16 December 2009.

- Mahendran, A.; Vedaldi, A. Understanding deep image representations by inverting them. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Shuyun, H.; Shoufeng, T.; Bin, S. Robot path planning based on improved ant colony optimization. In Proceedings of the International Conference on Robots & Intelligent System (ICRIS), Changsha, China, 26–27 May 2018.

- Xiu, C.; Lai, T.; Chai, Z. Design of automatic handling robot control system. In Proceedings of the Chinese Control and Decision Conference (CCDC), Shenyang, China, 9–11 June 2018.

- Parada-Salado, J.G.; Ortega-Garcia, L.E.; Ayala-Ramirez, L.F.; Perez-Pinal, F.J.; Herrera-Ramirez, C.A.; Padilla-Medina, J.A. A low-cost land wheeled autonomous mini-robot for in-door surveillance. IEEE Lat. Am. Trans. 2018, 16, 1298.

- Isakhani, H.; Aouf, N.; Kechagias-Stamatis, O.; Whidborne, J.F. A furcated visual collision avoidance system for an autonomous micro robot. IEEE Trans. Cogn. Dev. Syst. 2018, 12, 1.

- Luo, L.; Li, X. A method to search for color segmentation threshold in traffic sign detection. In Proceedings of the International Conference on Image and Graphics, Xi’an, China, 20–23 September 2009.

- Shih, C.-H.; Juang, J.-G. Moving object tracking and its application to indoor dual-robot patrol. Appl. Sci. 2016, 6, 349.

- Lienhart, R.; Maydt, J. An extended set of Haar-like features for rapid object detection. In Proceedings of the International Conference on Image Processing, Rochester, NY, USA, 22–25 September 2002.

- Lienhart, R.; Kuranov, A.; Pisarevsky, V. Empirical analysis of detection cascades of boosted classifiers for rapid object detection. In Proceedings of the 25th DAGM Symposium on Pattern Recognition, Magdeburg, Germany, 10–12 September 2003.

- Viola, P.; Jones, M. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Qi, X.; Liu, C.; Schuckers, S. CNN based key frame extraction for face in video recognition. In Proceedings of the IEEE 4th International Conference on Identity, Security, and Behavior Analysis, Potsdam, NY, USA, 11–12 January 2018.

- Wikipedia, Summed Area Table. Available online: https://en.wikipedia.org/wiki/Summed_area_table (accessed on 12 December 2017).

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

- Kruppa, H.; Castrillon-Santana, M.; Schiele, B. Fast and robust face finding via local context. In Proceedings of the Joint IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 12–13 October 2003.

- Chen, C.-L.; Wang, P.-B.; Juang, J.-G. Application of real-time positioning system with visual and range sensors to security robot. Sens. Mater. 2019, 31, 543.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504.

- Saito, K. Deep Learning; Gotop Information Inc.: Taipei, Taiwan, 2017.

- Wikipedia (Chinese), Rectified Linear Unit. Available online: https://zh.wikipedia.org/wiki/%E7%BA%BF%E6%80%A7%E6%95%B4%E6%B5%81%E5%87%BD%E6%95%B0 (accessed on 18 August 2021).

- UFLDL Wiki, Softmax Regression. Available online: http://ufldl.stanford.edu/wiki/index.php/Softmax%E5%9B%9E%E5%BD%92 (accessed on 18 August 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chang, W.-C.; Juang, J.-G. Automatic Outdoor Patrol Robot Based on Sensor Fusion and Face Recognition Methods. Appl. Sci. 2021, 11, 8857. https://doi.org/10.3390/app11198857
