A Waste Classification Method Based on a Multilayer Hybrid Convolution Neural Network

Abstract

1. Introduction

2. Related Work

2.1. Based on Traditional Methods

2.2. Based on Neural Network Methods

- (1) We analyze the characteristics of the TrashNet dataset and explain why classical convolutional neural networks fine-tuned from pretrained models are not well suited to waste image classification;

- (2) We propose a multilayer hybrid convolutional neural network (MLH-CNN) whose number of modules and channels is tuned to give the best classification performance. We also analyze the influence of the optimizer on waste image classification and select the best-performing optimizer;

- (3) Compared with several state-of-the-art methods, the proposed MLH-CNN has a simpler structure and fewer parameters, and it achieves better classification performance on waste images.
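
Contribution (2) ties MLH-CNN's performance to the number of modules and channels, and contribution (3) to its small parameter count. As a rough illustration of why those two knobs dominate model size, the sketch below counts parameters for a plain stack of 3×3 convolution + batch-normalization blocks. The layer layout and channel widths are hypothetical and are not the MLH-CNN architecture; only the standard per-layer formulas are assumed (k·k·C_in·C_out weights plus C_out biases per convolution, and four stored values per channel for Keras-style batch normalization).

```python
# Parameter-count sketch for a stack of conv + batch-norm blocks.
# The layer layout and channel widths are HYPOTHETICAL examples,
# not the MLH-CNN architecture described in the paper.


def conv2d_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Weights of a k x k convolution from c_in to c_out channels, plus biases."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def batchnorm_params(channels: int) -> int:
    """Keras-style batch norm stores gamma, beta, moving mean and moving variance."""
    return 4 * channels


def stack_params(widths: list[int], in_channels: int = 3, k: int = 3) -> int:
    """Total parameters of consecutive conv(k x k) + batch-norm blocks."""
    total, c_in = 0, in_channels
    for c_out in widths:
        total += conv2d_params(k, c_in, c_out) + batchnorm_params(c_out)
        c_in = c_out
    return total


if __name__ == "__main__":
    # Adding a block adds its conv + BN parameters; doubling every width
    # roughly quadruples the weights of all but the first convolution.
    for widths in ([32, 64], [32, 64, 128], [64, 128]):
        print(widths, stack_params(widths))
```

Because convolution weights grow with the product of input and output channels, widening the network inflates the count much faster than adding a narrow module does.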

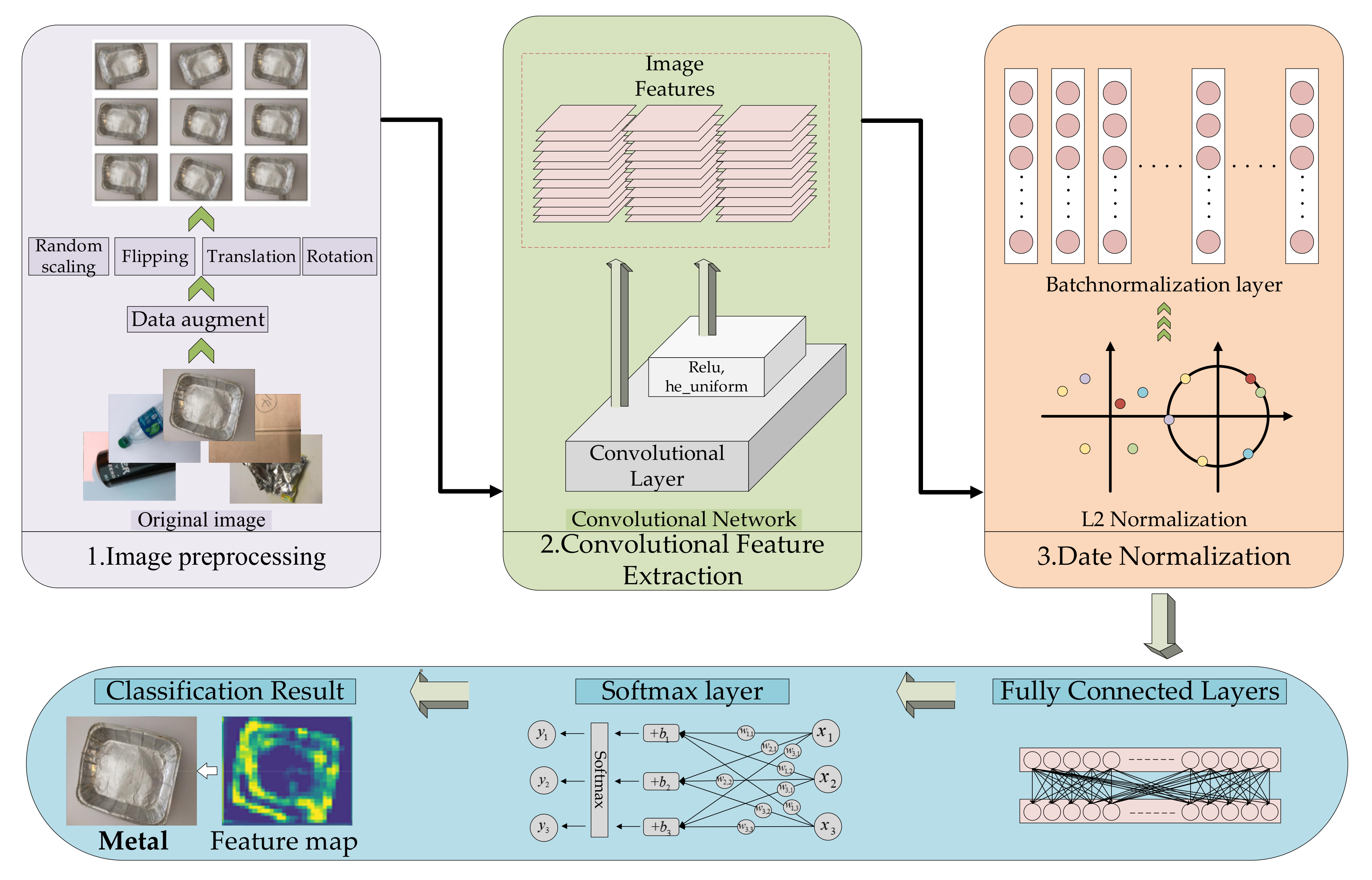

3. Methodology

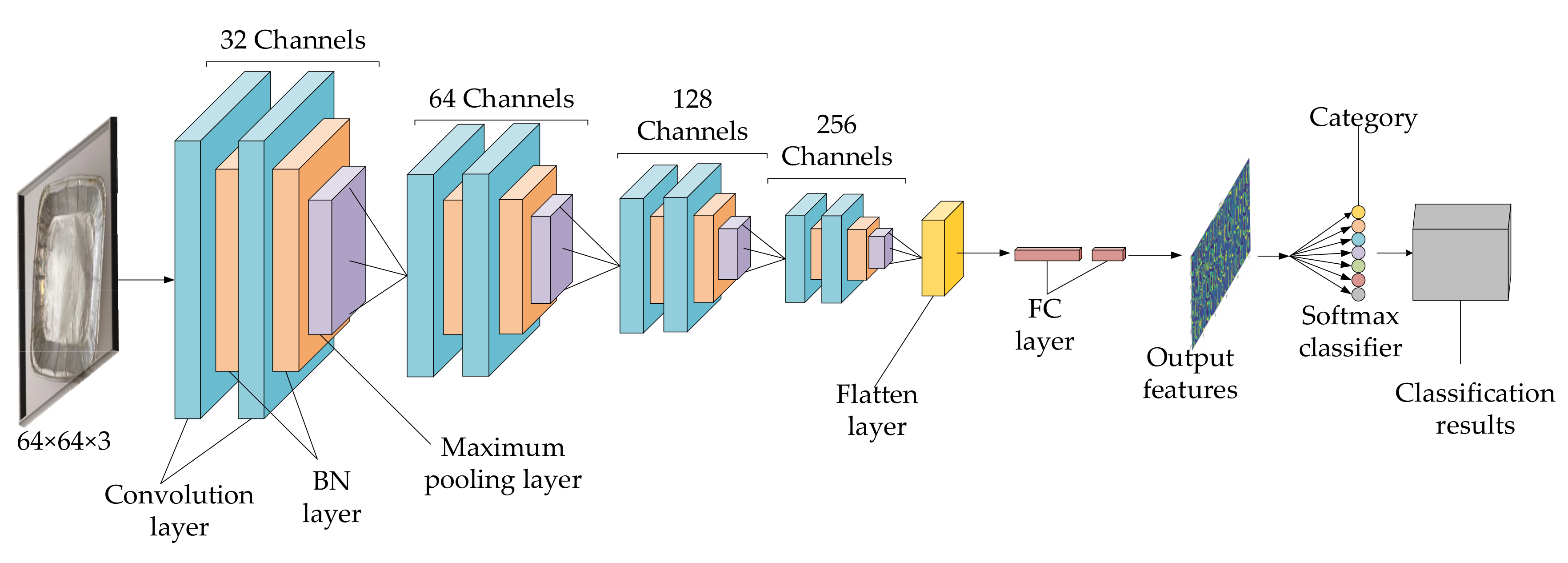

3.1. The Initial Network Modules

3.2. Methods and Improvements

3.3. Selection of Optimizer

4. Experiments and Results Analysis

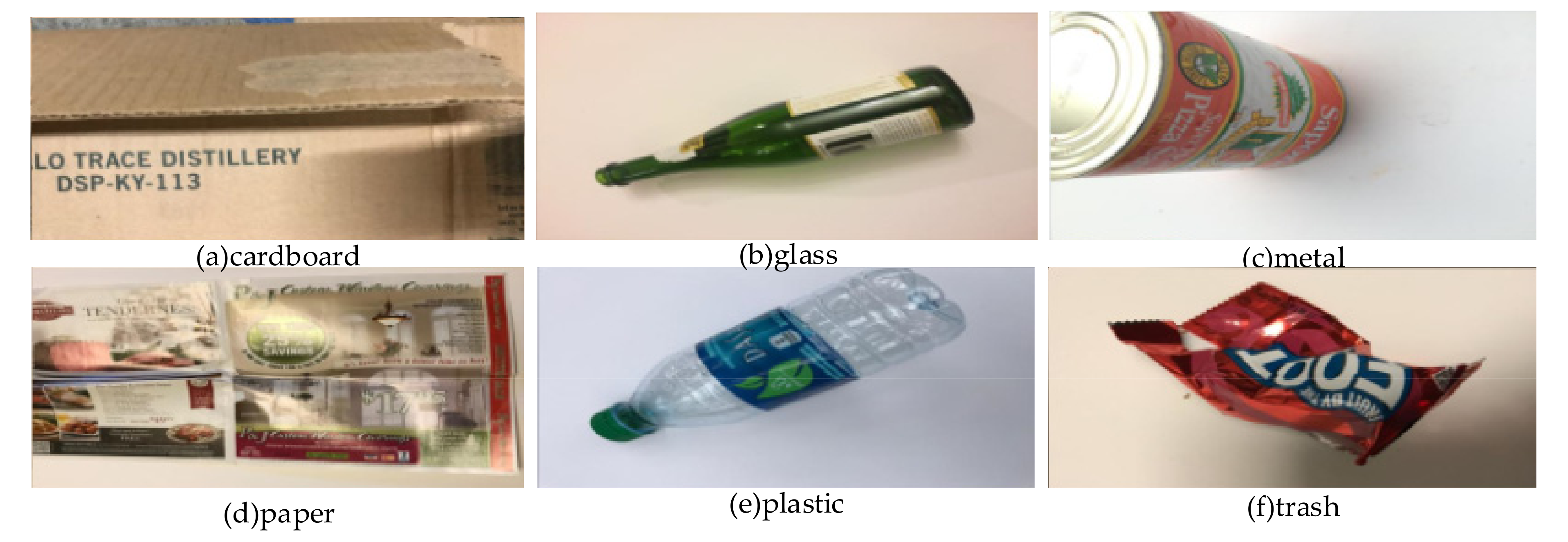

4.1. Dataset Processing

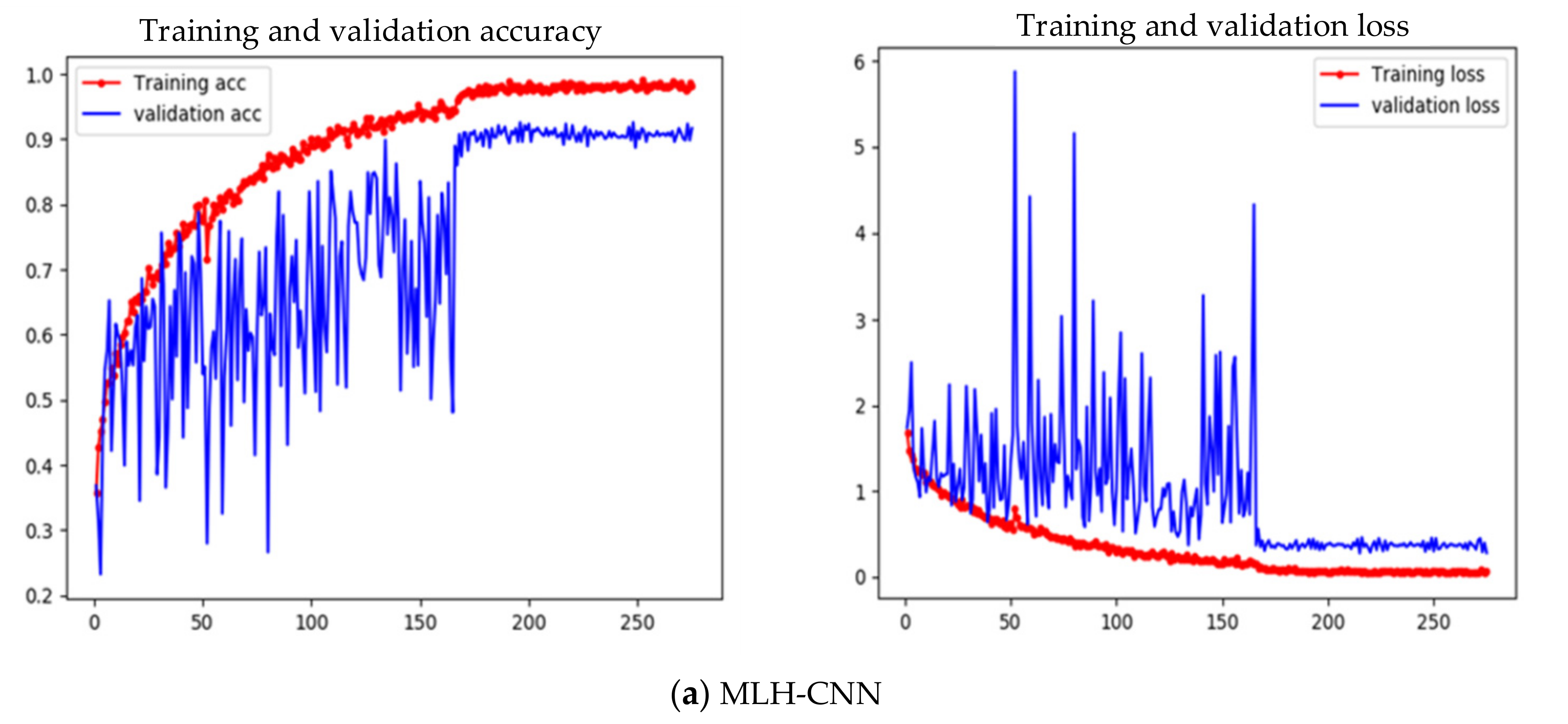

4.2. Training Curve Analysis

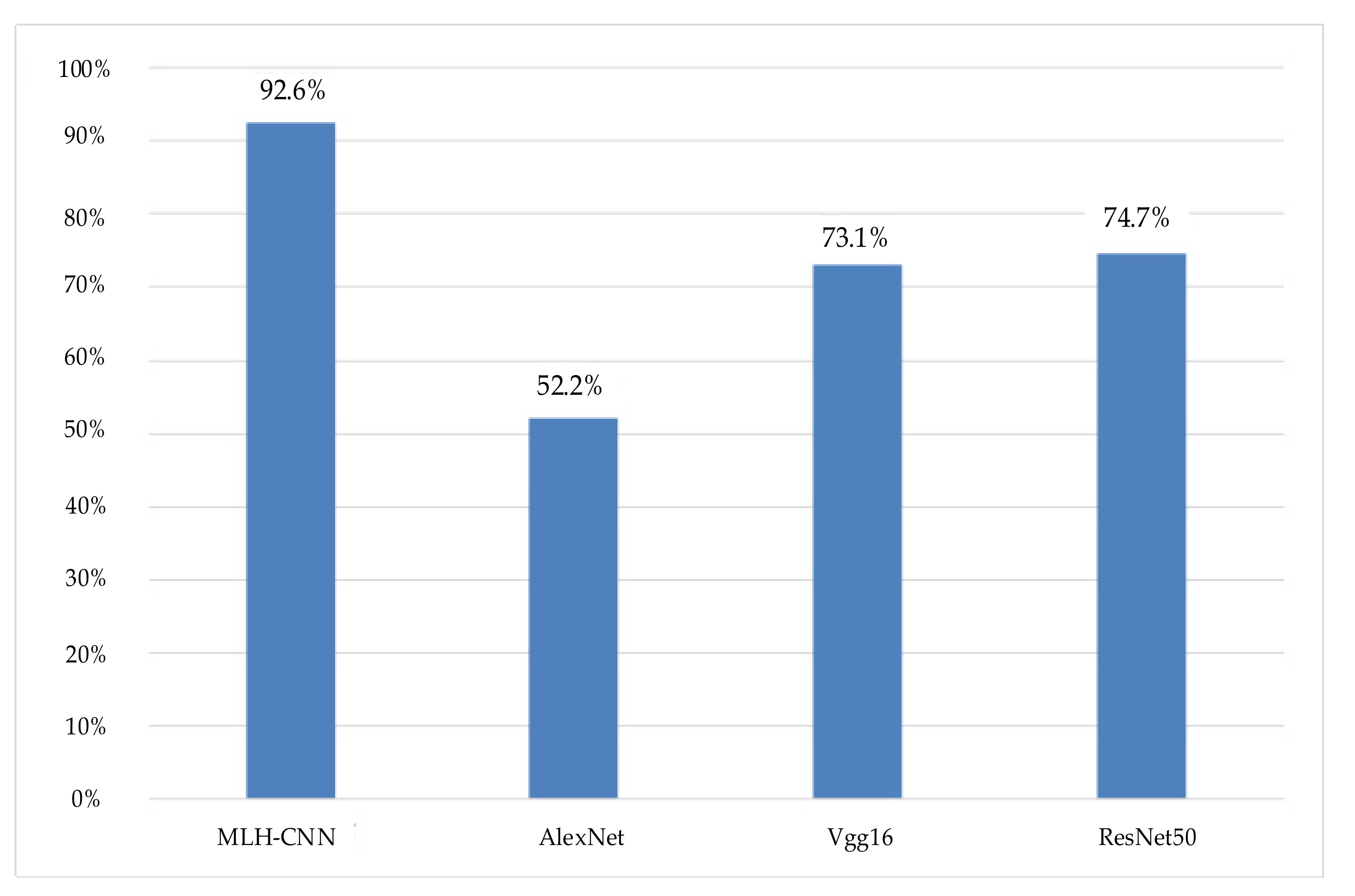

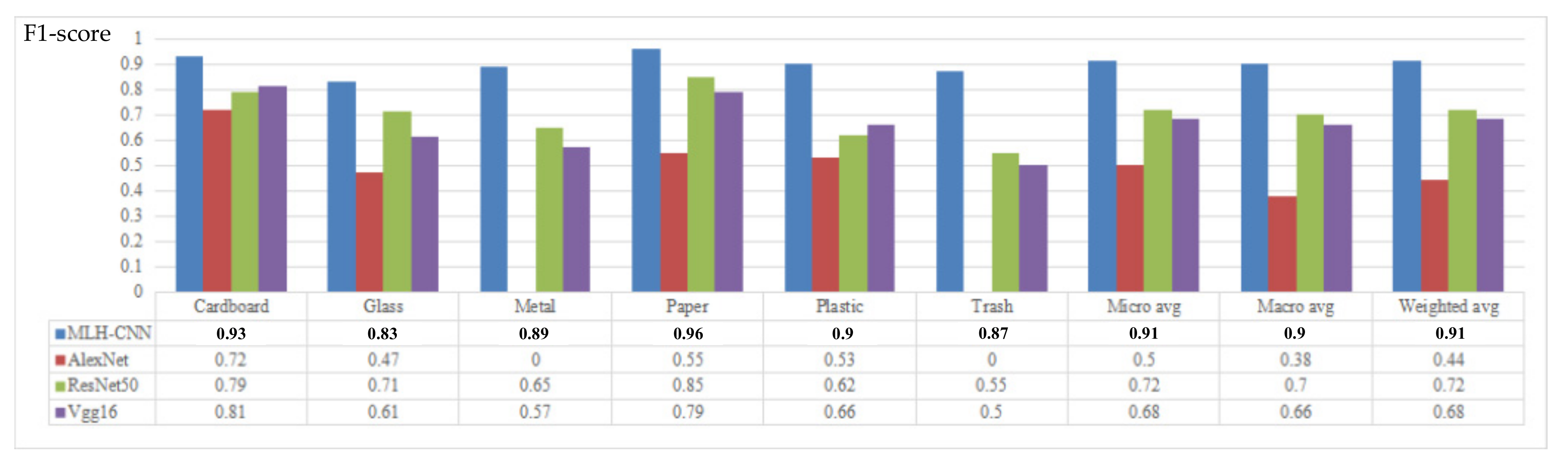

4.3. Classification Index Analysis

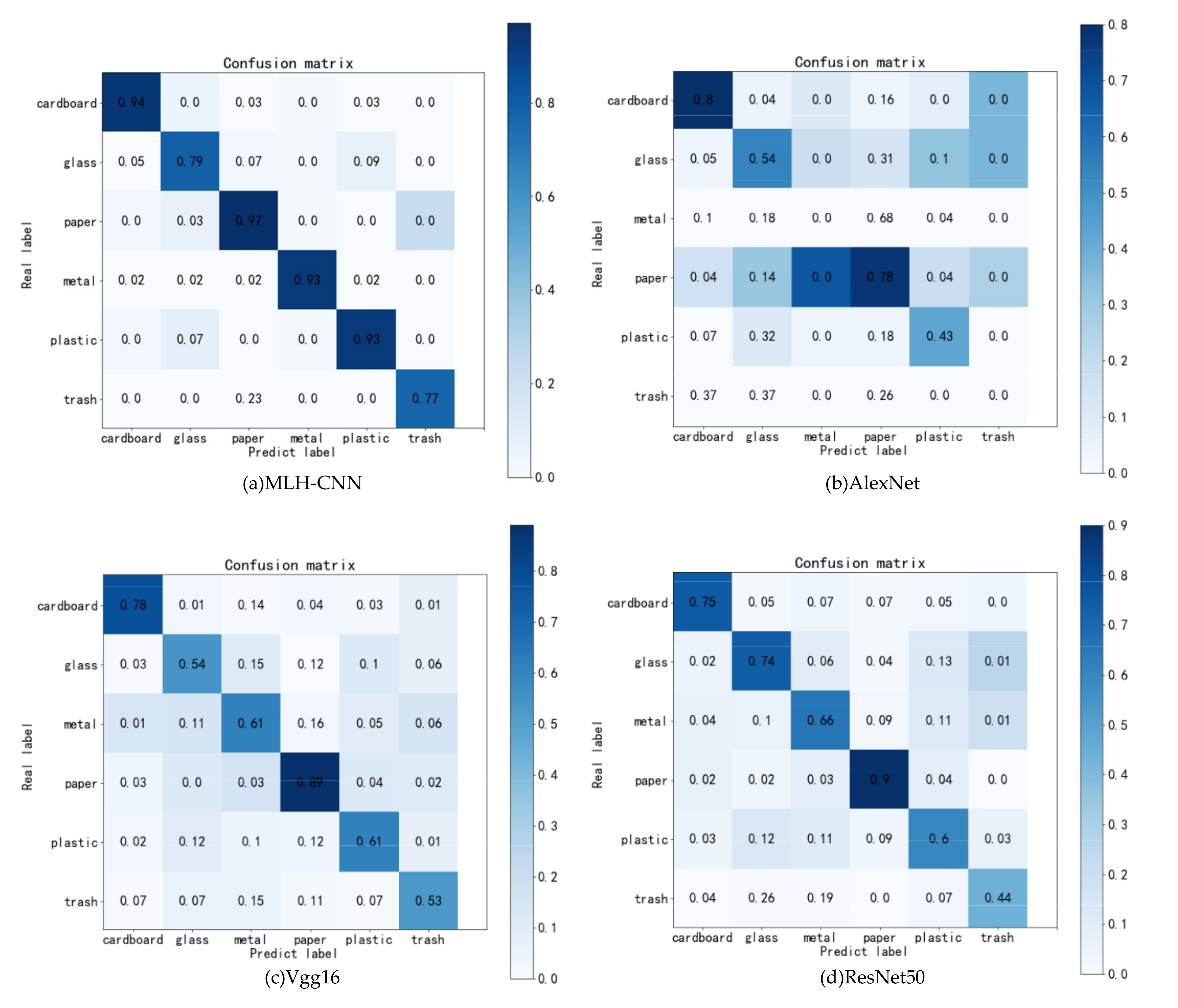

4.4. Confusion Matrix Analysis

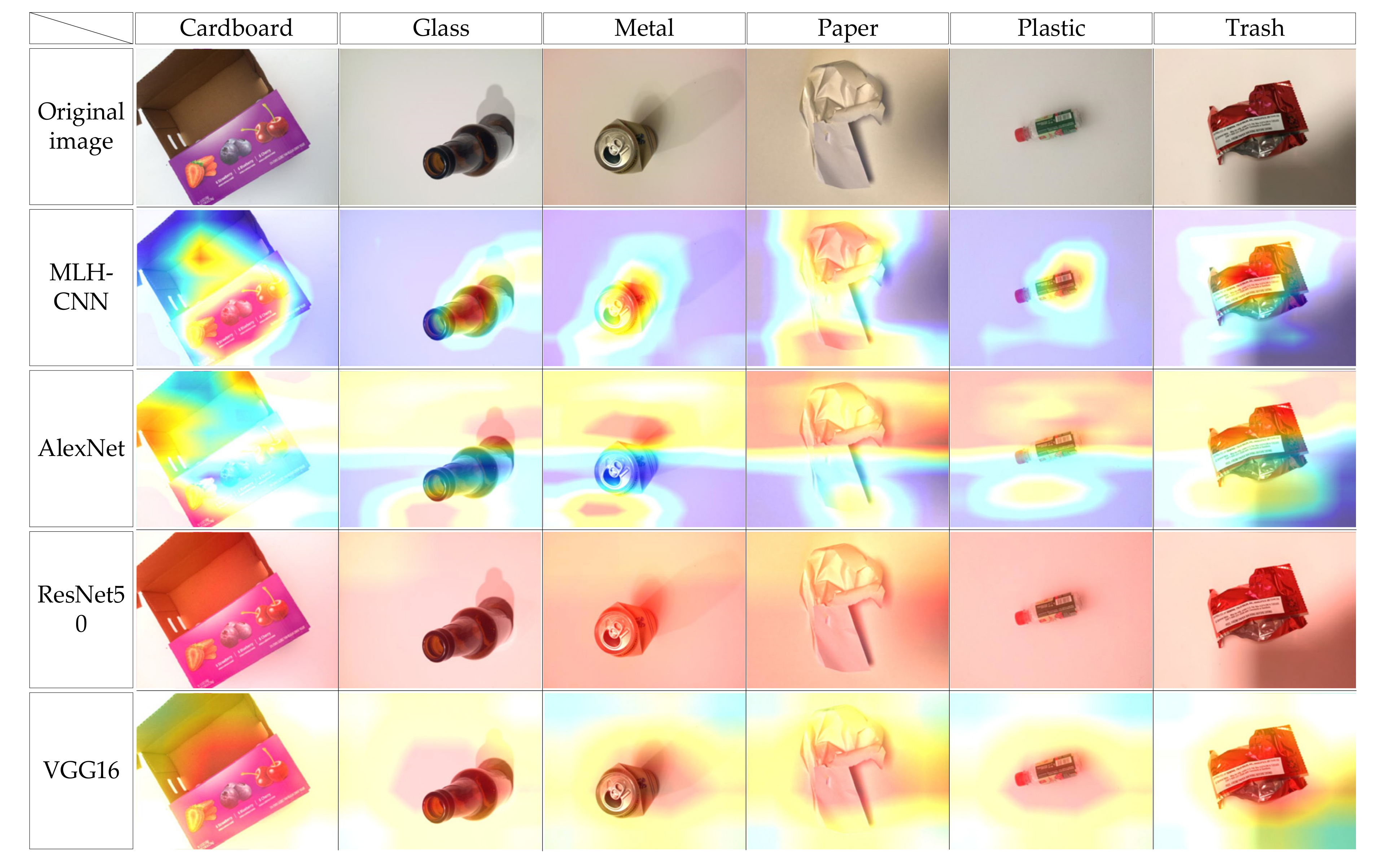

4.5. Heat Map Analysis

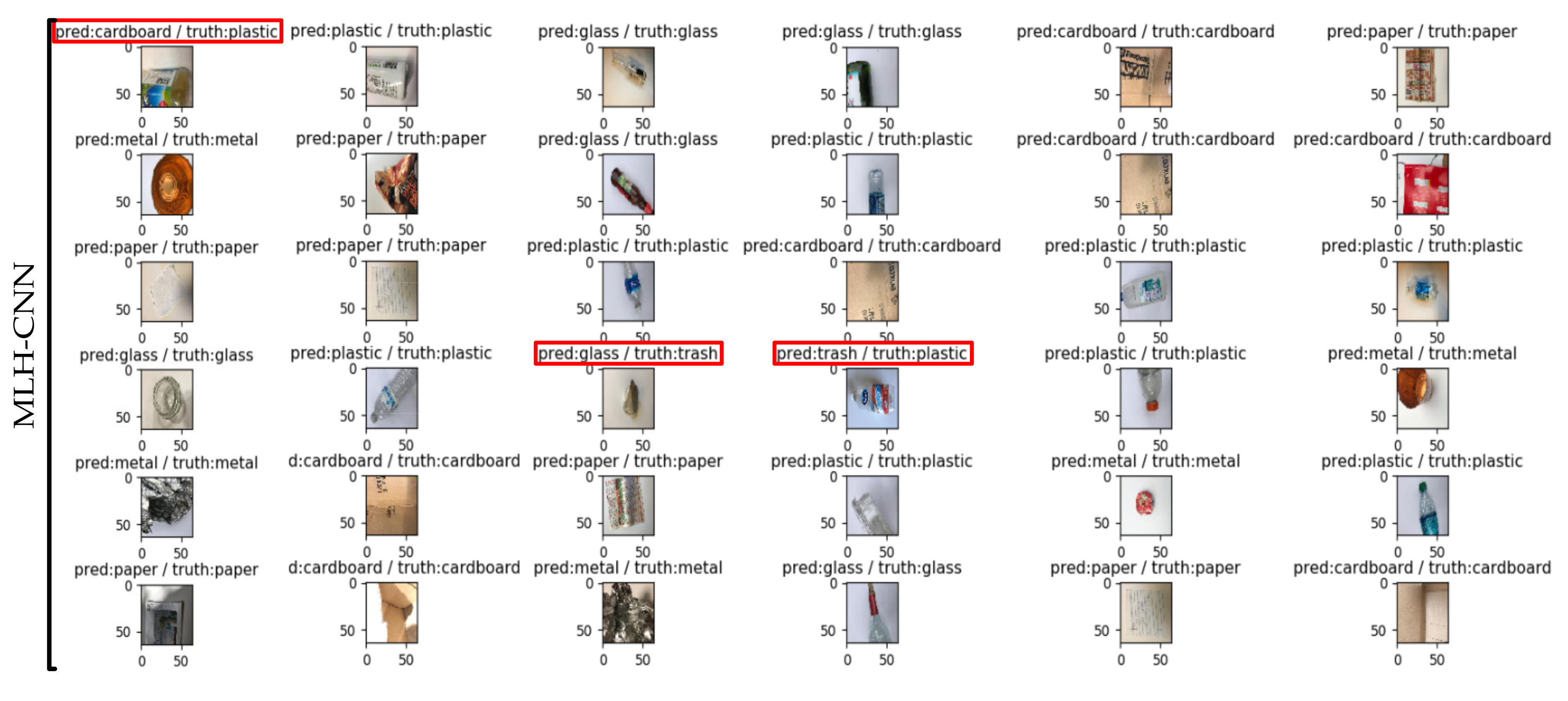

4.6. Analysis of Classification Results

4.7. Partial Occlusion Test Experiment

4.8. Comparison with Related Literature

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhang, S.; Forssberg, E. Intelligent liberation and classification of electronic scrap. Powder Technol. 1999, 105, 295–301.

- Liu, C.; Sharan, L.; Adelson, E.H.; Rosenholtz, R. Exploring features in a Bayesian framework for material recognition. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 239–246.

- Bai, J.; Lian, S.; Liu, Z.; Wang, K.; Liu, D. Deep learning based robot for automatically picking up garbage on the grass. IEEE Trans. Consum. Electron. 2018, 64, 382–389.

- Lin, I.; Loyola-González, O.; Monroy, R.; Medina-Pérez, M.A. A review of fuzzy and pattern-based approaches for class imbalance problems. Appl. Sci. 2021, 11, 6310.

- Shangitova, Z.; Orazbayev, B.; Kurmangaziyeva, L.; Ospanova, T.; Tuleuova, R. Research and modeling of the process of sulfur production in the Claus reactor using the method of artificial neural networks. J. Theor. Appl. Inf. Technol. 2021, 99, 2333–2343.

- Picón, A.; Ghita, O.; Whelan, P.F.; Iriondo, P.M. Fuzzy spectral and spatial feature integration for classification of nonferrous materials in hyperspectral data. IEEE Trans. Ind. Inform. 2009, 5, 483–494.

- Shylo, S.; Harmer, S.W. Millimeter-wave imaging for recycled paper classification. IEEE Sens. J. 2016, 16, 2361–2366.

- Rutqvist, D.; Kleyko, D.; Blomstedt, F. An automated machine learning approach for smart waste management systems. IEEE Trans. Ind. Inform. 2020, 16, 384–392.

- Zheng, J.; Xu, M.; Cai, M.; Wang, Z.; Yang, M. Modeling group behavior to study innovation diffusion based on cognition and network: An analysis for garbage classification system in Shanghai, China. Int. J. Environ. Res. Public Health 2019, 16, 3349.

- Chu, Y.; Huang, C.; Xie, X.; Tan, B.; Kamal, S.; Xiong, X. Multilayer hybrid deep-learning method for waste classification and recycling. Comput. Intell. Neurosci. 2018, 1–9.

- Donovan, J. Auto-Trash Sorts Waste Automatically at the TechCrunch Disrupt Hackathon; TechCrunch Disrupt Hackathon: San Francisco, CA, USA, 2018.

- Batinić, B.; Vukmirović, S.; Vujić, G.; Stanisavljević, N.; Ubavin, D.; Vukmirović, G. Using ANN model to determine future waste characteristics in order to achieve specific waste management targets-case study of Serbia. J. Sci. Ind. Res. 2011, 70, 513–518.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- O’Toole, M.D.; Karimian, N.; Peyton, A.J. Classification of nonferrous metals using magnetic induction spectroscopy. IEEE Trans. Ind. Inform. 2018, 14, 3477–3485.

- Zhao, D.-E.; Wu, R.; Zhao, B.-G.; Chen, Y.-Y. Research on waste classification and recognition based on hyperspectral imaging technology. Spectrosc. Spectr. Anal. 2019, 39, 917–922.

- Yusoff, S.H.; Mahat, S.; Midi, N.S.; Mohamad, S.Y.; Zaini, S.A. Classification of different types of metal from recyclable household waste for automatic waste separation system. Bull. Electr. Eng. Inform. 2019, 8, 488–498.

- Zeng, D.; Zhang, S.; Chen, F.; Wang, Y. Multi-scale CNN based garbage detection of airborne hyperspectral data. IEEE Access 2019, 7, 104514–104527.

- Roh, S.B.; Oh, S.K.; Pedrycz, W. Identification of black plastics based on fuzzy RBF neural networks: Focused on data preprocessing techniques through Fourier transform infrared radiation. IEEE Trans. Ind. Inform. 2018, 14, 1802–1813.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Kennedy, T. OscarNet: Using transfer learning to classify disposable waste. In CS230 Report: Deep Learning; Stanford University: Stanford, CA, USA, 2018.

- Veit, A.; Wilber, M.J.; Belongie, S. Residual networks behave like ensembles of relatively shallow networks. Adv. Neural Inf. Process. Syst. 2016, 29, 550–558.

- Kadyrova, N.O.; Pavlova, L.V. Comparative efficiency of algorithms based on support vector machines for binary classification. Biophysics 2015, 60, 13–24.

- Adedeji, O.; Wang, Z. Intelligent waste classification system using deep learning convolutional neural network. Procedia Manuf. 2019, 35, 607–612.

- Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4277–4286.

- Zhihong, C.; Hebin, Z.; Yanbo, W.; Binyan, L.; Yu, L. A vision-based robotic grasping system using deep learning for garbage sorting. In Proceedings of the 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Rabano, S.L.; Cabatuan, M.K.; Sybingco, E.; Dadios, E.P.; Calilung, E.J. Common garbage classification using MobileNet. In Proceedings of the 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM), Baguio City, Philippines, 29 November–2 December 2018; pp. 1–4.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Ruiz, V.; Sánchez, Á.; Vélez, J.F.; Raducanu, B. Automatic image-based waste classification. In International Work-Conference on the Interplay between Natural and Artificial Computation; Springer: Cham, Switzerland, 2019; Volume 11487, pp. 422–431.

- Abeywickrama, T.; Cheema, M.A.; Taniar, D. K-Nearest neighbors on road networks: A journey in experimentation and in-memory implementation. Proc. VLDB Endow. 2016, 9, 492–503.

- Costa, B.S.; Bernardes, A.C.; Pereira, J.V.; Zampa, V.H.; Pereira, V.A.; Matos, G.F.; Silva, A.F. Artificial intelligence in automated sorting in trash recycling. In Anais do XV Encontro Nacional de Inteligência Artificial e Computacional; SBC: Brasilia, Brazil, 2018; pp. 198–205.

- Miao, N.; Song, Y.; Zhou, H.; Li, L. Do you have the right scissors? Tailoring pre-trained language models via Monte Carlo methods. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Washington, DC, USA, 13 July 2020; pp. 3436–3441.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Robbins, H.; Monro, S. A stochastic approximation method. Ann. Math. Stat. 1951, 22, 400–407.

- Yang, M.; Thung, G. Classification of trash for recyclability status. In CS229 Project Report; Stanford University: Stanford, CA, USA, 2016.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Awe, O.; Mengistu, R.; Sreedhar, V. Smart trash net: Waste localization and classification. arXiv 2017, preprint.

- Satvilkar, M. Image Based Trash Classification using Machine Learning Algorithms for Recyclability Status. Master’s Thesis, National College of Ireland, Dublin, Ireland, 2018.

- Melinte, D.O.; Dumitriu, D.; Mărgăritescu, M.; Ancuţa, P.N. Deep Learning Computer Vision for Sorting and Size Determination of Municipal Waste; Springer: Cham, Switzerland, 2020; Volume 85, pp. 142–152.

- Endah, S.N.; Shiddiq, I.N. Xception architecture transfer learning for waste classification. In Proceedings of the 2020 4th International Conference on Informatics and Computational Sciences (ICICoS), Semarang, Indonesia, 10–11 November 2020; pp. 1–4.

| Model | Structure | Accuracy | Time per Iteration |
|---|---|---|---|
| Initial model | A module built from the basic modules shown in Figure 2 | 86.20% | 114 ms/step |
| First improved model | Hybrid of three modules | 87.20% | 189 ms/step |
| Second improved model | Hybrid of three modules plus one basic module | 89.70% | 201 ms/step |
| Third improved model | Hybrid of four modules | 92.60% | 223 ms/step |
| Fourth improved model | Hybrid of five modules | 88.50% | 240 ms/step |

| Optimizer | Accuracy | Time per Iteration |
|---|---|---|
| Adam | 90.2% | 235 ms/step |
| SGD | 89.7% | 225 ms/step |
| SGDM + Nesterov | 92.6% | 223 ms/step |
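
SGDM + Nesterov gave the highest accuracy (92.6%) in the optimizer comparison. A minimal sketch of the Nesterov momentum update is shown below, written in the form given in the Keras `SGD` optimizer documentation and applied to a toy one-dimensional quadratic. The learning rate 0.1 and momentum 0.9 match the training settings listed for this optimizer; the loss function is purely illustrative, and this is not the authors' training code.

```python
from typing import Callable

# Sketch of SGD with Nesterov momentum ("SGDM + Nesterov"), following the
# update rule described in the Keras SGD documentation. The quadratic loss
# used below is a toy stand-in, NOT the waste-classification objective.


def sgd_nesterov(grad: Callable[[float], float], w0: float, lr: float = 0.1,
                 momentum: float = 0.9, steps: int = 300) -> float:
    """Minimize a 1-D function given its gradient `grad`."""
    w, velocity = w0, 0.0
    for _ in range(steps):
        g = grad(w)
        velocity = momentum * velocity - lr * g
        # Nesterov look-ahead: step along the updated velocity plus the
        # current gradient step, instead of the velocity alone.
        w = w + momentum * velocity - lr * g
    return w


if __name__ == "__main__":
    # f(w) = 0.5 * (w - 3)^2 has gradient (w - 3) and its minimum at w = 3.
    w_final = sgd_nesterov(lambda w: w - 3.0, w0=0.0)
    print(round(w_final, 6))
```

Plain momentum would use `w = w + velocity`; the extra look-ahead term is what distinguishes the Nesterov variant.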

| Hyperparameter | Value |
|---|---|
| Optimizer (momentum) | SGDM + Nesterov (0.9) |
| Learning rate | 0.1 |
| Patience | 30 |
| Batch size | 32 |
| Batch normalization | momentum = 0.99, epsilon = 0.001 |
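
The batch-normalization settings (momentum 0.99, epsilon 0.001) control how the statistics used at test time are accumulated and how the division is stabilized. The sketch below shows that bookkeeping for a single channel, assuming the Keras convention in which the momentum weights the old moving average; the batch values are made up for illustration, and gamma and beta are left at their initial values.

```python
import math

# Sketch of Keras-style BatchNormalization bookkeeping for ONE channel,
# using momentum 0.99 and epsilon 0.001. Batch values are toy numbers.

MOMENTUM, EPSILON = 0.99, 1e-3


def update_moving_stats(moving_mean, moving_var, batch):
    """Exponential moving average of the per-batch mean and variance."""
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    moving_mean = MOMENTUM * moving_mean + (1.0 - MOMENTUM) * mean
    moving_var = MOMENTUM * moving_var + (1.0 - MOMENTUM) * var
    return moving_mean, moving_var


def bn_inference(x, moving_mean, moving_var, gamma=1.0, beta=0.0):
    """Normalize with the stored statistics, as done at test time."""
    return gamma * (x - moving_mean) / math.sqrt(moving_var + EPSILON) + beta


if __name__ == "__main__":
    mean, var = 0.0, 1.0  # moving statistics start at zeros and ones
    for _ in range(1000):
        # Every toy batch has mean 5 and variance 1.
        mean, var = update_moving_stats(mean, var, [4.0, 6.0])
    print(round(mean, 3), round(var, 3), round(bn_inference(6.0, mean, var), 3))
```

With momentum 0.99 each batch contributes only 1% to the stored statistics, so they need on the order of hundreds of steps to settle.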

| Split | Cardboard | Glass | Paper | Metal | Plastic | Trash |
|---|---|---|---|---|---|---|
| Training images | 323 | 401 | 476 | 328 | 386 | 110 |
| Test images | 80 | 100 | 118 | 82 | 96 | 27 |
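
The per-class totals implied by this table (e.g., 323 + 80 = 403 cardboard images) sum to 2527, the size of TrashNet, and each test count equals 20% of its class total rounded down. A stratified split that shuffles each class separately and holds out that fraction reproduces the counts exactly, as sketched below. The seed, the shuffling procedure and the file names are assumptions; the exact split procedure is not stated here.

```python
import random

# Sketch of a per-class (stratified) train/test split. Class totals are the
# sums of the train and test counts in the table. Holding out floor(20%) of
# each class reproduces those counts; the seed and file names are HYPOTHETICAL.

CLASS_TOTALS = {"cardboard": 403, "glass": 501, "paper": 594,
                "metal": 410, "plastic": 482, "trash": 137}


def stratified_split(files_by_class, test_percent=20, seed=0):
    """Shuffle each class independently and hold out test_percent of it."""
    rng = random.Random(seed)
    train, test = {}, {}
    for label, files in files_by_class.items():
        files = list(files)  # copy so the caller's list is not shuffled
        rng.shuffle(files)
        n_test = len(files) * test_percent // 100  # integer floor
        test[label], train[label] = files[:n_test], files[n_test:]
    return train, test


if __name__ == "__main__":
    # Hypothetical file names standing in for the TrashNet images.
    files = {c: [f"{c}{i}.jpg" for i in range(1, n + 1)]
             for c, n in CLASS_TOTALS.items()}
    train, test = stratified_split(files)
    for c in CLASS_TOTALS:
        print(c, len(train[c]), len(test[c]))
```

Splitting per class keeps the strong imbalance (137 trash images versus 594 paper images) identical in the training and test sets.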

| Occluded Region | MLH-CNN | ResNet50 | VGG16 | AlexNet |
|---|---|---|---|---|
| Lower right corner | 83.44% | 67.59% | 65.01% | 45.92% |
| Lower left corner | 84.75% | 71.37% | 69.38% | 46.52% |
| Top left corner | 83.01% | 71.77% | 62.82% | 46.92% |
| Top right corner | 79.96% | 71.77% | 70.58% | 46.9% |
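
The occlusion experiment masks one corner of each test image and measures how much accuracy each network retains. A minimal sketch of that procedure follows. The patch size (half the height by half the width), the fill value 0, the list-of-rows image format and the `predict` callable are assumptions for illustration; they are not specified in this section.

```python
# Sketch of a corner-occlusion test. Patch size (frac of height/width),
# fill value and the `predict` callable are HYPOTHETICAL choices.

CORNERS = ("top_left", "top_right", "bottom_left", "bottom_right")


def occlude_corner(image, corner, frac=0.5, fill=0):
    """Return a copy of `image` (a list of pixel rows) with one corner masked."""
    if corner not in CORNERS:
        raise ValueError(f"unknown corner: {corner}")
    h, w = len(image), len(image[0])
    ph, pw = int(h * frac), int(w * frac)
    rows = range(0, ph) if corner.startswith("top") else range(h - ph, h)
    cols = range(0, pw) if corner.endswith("left") else range(w - pw, w)
    out = [list(row) for row in image]
    for r in rows:
        for c in cols:
            out[r][c] = fill
    return out


def occluded_accuracy(predict, images, labels, corner):
    """Fraction of occluded images that `predict` still labels correctly."""
    hits = sum(predict(occlude_corner(img, corner)) == y
               for img, y in zip(images, labels))
    return hits / len(images)


if __name__ == "__main__":
    img = [[1] * 4 for _ in range(4)]
    for row in occlude_corner(img, "bottom_right"):
        print(row)
```

Running `occluded_accuracy` once per corner with the same trained classifier yields one row of the table per occluded region.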

| Dataset | Method | Year | Parameters | Accuracy | Gain of MLH-CNN |
|---|---|---|---|---|---|
| TrashNet | OscarNet (pretrained VGG19) [20] | 2018 | 139,570,240 | 88.42% | 4.18% |
| TrashNet | R-CNN trained on augmented data [39] | 2017 | -- | 68.30% | 24.3% |
| TrashNet | Ref. [23] | 2019 | 22,515,078 | 87.00% | 5.6% |
| TrashNet | Ref. [30] with Inception-ResNet | 2019 | 29,042,344 | 88.66% | 3.94% |
| TrashNet | Ref. [33] with KNN | 2018 | -- | 88.00% | 4.6% |
| TrashNet | Ref. [33] with SVM | 2018 | -- | 80.00% | 12.6% |
| TrashNet | Ref. [33] with RF | 2018 | -- | 85.00% | 7.6% |
| TrashNet | Ref. [27] with MobileNet | 2018 | 42,000,000 | 89.34% | 3.26% |
| TrashNet | Ref. [40] with CNN | 2018 | -- | 89.81% | 2.79% |
| TrashNet | Ref. [41] | 2020 | 29,000,000 | 88.42% | 4.18% |
| TrashNet | Ref. [42] | 2020 | 20,875,247 | 88% | 4.6% |
| TrashNet | Ours (MLH-CNN) | -- | 1,709,926 | 92.60% | -- |
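
The Gain column is the difference, in percentage points, between MLH-CNN's 92.60% and each method's accuracy; for example, 92.60 − 88.42 = 4.18 for OscarNet. The short script below recomputes that column from the tabulated accuracies and, as one example, the parameter ratio against Ref. [42] (about 12 times as many parameters as MLH-CNN). All values are transcribed from the comparison table; nothing new is measured.

```python
# Recompute the "Gain" column: MLH-CNN accuracy minus each method's accuracy,
# in percentage points. Values are transcribed from the comparison table.

OURS_ACC, OURS_PARAMS = 92.60, 1_709_926

RESULTS = {
    "OscarNet [20]": 88.42,
    "R-CNN, augmented data [39]": 68.30,
    "Ref. [23]": 87.00,
    "Ref. [30], Inception-ResNet": 88.66,
    "Ref. [33], KNN": 88.00,
    "Ref. [33], SVM": 80.00,
    "Ref. [33], RF": 85.00,
    "Ref. [27], MobileNet": 89.34,
    "Ref. [40], CNN": 89.81,
    "Ref. [41]": 88.42,
    "Ref. [42]": 88.00,
}


def gain(acc: float) -> float:
    """Accuracy gain of MLH-CNN over a method, in percentage points."""
    return round(OURS_ACC - acc, 2)


def param_ratio(params: int) -> float:
    """How many times more parameters a model has than MLH-CNN."""
    return params / OURS_PARAMS


if __name__ == "__main__":
    for name, acc in RESULTS.items():
        print(f"{name:30s} {gain(acc):6.2f} pp")
    # Example: Ref. [42] reports 20,875,247 parameters.
    print(f"Ref. [42]: {param_ratio(20_875_247):.1f}x the parameters of MLH-CNN")
```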

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shi, C.; Tan, C.; Wang, T.; Wang, L. A Waste Classification Method Based on a Multilayer Hybrid Convolution Neural Network. Appl. Sci. 2021, 11, 8572. https://doi.org/10.3390/app11188572
