Abstract

In the context of Industry 4.0, flexible manufacturing systems play an important role. They are designed to adapt the production process by reacting to changes and to enable customer-specific products. The versatility of such manufacturing systems, however, also needs to be exploited by advanced control strategies. To this end, we present a novel scheduling scheme that is able to flexibly react to changes in the manufacturing system by means of Model Predictive Control (MPC). To introduce flexibility from the start, the initial scheduling problem, which is very general and covers a variety of special cases, is formulated in a modular way. This modularity is then preserved during an automatic transformation into a Petri Net formulation, which constitutes the basis for the two presented MPC schemes. We prove that both schemes are guaranteed to complete the production problem in closed loop when reasonable assumptions are fulfilled. The advantages of the presented control framework for flexible manufacturing systems are that it covers a wide variety of scheduling problems, that it is able to exploit the available flexibility of the manufacturing system, and that it makes it possible to prove the completion of the production problem.

1. Introduction

In the prospect of smart factories, industrial manufacturing is in the middle of an evolution that heads towards increasingly interconnected and digitized production systems. New paradigms evolve which make use of novel technologies from the field of information and communication technology, and their potential is exploited in an effort which is recognized as the fourth industrial revolution (Industry 4.0) [1]. This trend is fostered by increased computational power, ongoing miniaturization of computational units, as well as faster and more reliable communication systems. Previously purely mechanical machines are equipped with sensors, computational units, and communication interfaces, which enables decentralized reasoning, decision-making, and integration into an interconnected production system, turning the machines into cyber-physical production systems (CPPSs) that can interact with their environment physically as well as via digital communication [2]. This provides instantaneous feedback from the production process to a digital decision-making and scheduling system. Such information can be exploited to react to problems in the production process, perform real-time optimization, and even predict imminent failures and act before they occur [3].

The development on the digital side goes hand in hand with a change from rigid production lines to flexible manufacturing systems (FMSs). This development is driven by a more diverse customer demand ranging towards individualized products and has the goal of enabling the manufacturing of products in lot size one at the cost level of mass production [1,4]. The focus of industrial production changes from highly automated mass production to more adaptable manufacturing processes. This requires truly flexible manufacturing systems that are capable of changing according to the present needs. On the one hand, the physical manufacturing units need to be flexible, and on the other hand, their control and their integration into the overall process also require the capability to adapt in order to handle manufacturing orders of different types.

To manage the flexible manufacturing units, the concept of the digital twin is introduced. It describes the notion of having a real-time synchronized virtual equivalent of the real-world objects in a digital environment that can be used in the decision-making process [3,5]. The organization of the production, the manufacturing units, and the parts being produced is coordinated digitally by means of their digital twins, which comprise their current status, their abilities, and a digital documentation of their past. The up-to-date status information and the knowledge about the capabilities of the physical system represented in its digital twin allow for digital planning and control and are the prerequisite for formulating the online optimization problem introduced in Section 4.1.

A very promising approach to exploit this information is the concept of skill-based programming, where commands to a robot or machine are abstracted to a higher level [6,7]. It became popular in the field of robot programming, where the high level of abstraction is provided to the user in order to simplify the usage of versatile robots [8,9,10,11,12,13]. On the high abstraction level, the operator can access the skills of the machines and give the desired instructions, which are passed to the machines that interpret and execute the incoming commands according to their capabilities by means of a subordinate logic. This approach enables the flexible use of versatile manufacturing units in complex production scenarios. Although it was initially conceived for easy human-machine interaction, it also facilitates the automated interconnection of manufacturing units on the higher abstraction level.

The goal of a factory designed according to Industry 4.0 principles is to achieve a real-time optimal execution of the production process respecting the varying demand for diverse products. The optimization of the production could be done with regard to different criteria, for example, work in progress or production time, but the economic goal of generating the maximum monetary profit remains the objective of every commercial production system. In order to achieve this goal, we aim to contribute to this development with control-theoretic methods, building on existing notions and results. In particular, we focus on the dynamic scheduling of customer-specific orders in an FMS.

We take the concept of the digital twin as proposed by Grieves [5] as the basis for our work and assume that the information on every physical system is always available in the corresponding digital twin and can therefore be used for the digital control of the manufacturing system. For a manufacturing unit, the digital twin particularly holds the manufacturing skills it can perform. Furthermore, a digital twin is initialized for every order that is placed at the manufacturing system. In this case, the production plan holding the necessary production steps for the requested product is of particular interest for our approach. The assignment between orders and manufacturing units is done based on their digital twins. On the one hand, the digital twin of a production order requires skills of the manufacturing system for the order to be produced, and on the other hand, the manufacturing units offer those necessary skills. We formulate this assignment problem between the skills required to produce the orders and the skills available in the FMS as a scheduling problem.

The proposed scheduling problem is solved on the basis of a Petri Net (PN) model, which is generated automatically from the initial description of the scheduling problem. The generation algorithms exploit the modular formulation of the initial scheduling problem, where the manufacturing units and the production orders can be considered as separate modules, and transform it into a well-structured PN. The PN offers an algebraic representation that is used to determine the schedule for the FMS.

The results of the scheduling scheme are manufacturing decisions that are intended to be applied directly to the system in real time. In order to exploit the flexibility of the FMS, it is important to keep the scheduling scheme flexible as well. Therefore, it is formulated as a feedback control law, more precisely in the form of Model Predictive Control (MPC) [14]. MPC has the advantage that an optimization problem, in which the economic objective of the FMS can be considered, is solved while computing the scheduling inputs. The feedback mechanism of the MPC makes it possible to react immediately to changes in the manufacturing system and to adjust its behavior accordingly.

The remainder of the paper is structured as follows. The scheduling problem considered in this paper is described in Section 2. It is kept rather general such that it can be used for various specific cases. It is a generalization of the widely discussed job shop problem (JS) [15] and allows more general production plans than the JS. This general form of a scheduling problem has, for example, practical applications in printing shops [16], and it can also be used to describe several special cases and practical applications thereof. A detailed description of the presented scheduling problem together with a classification with respect to a common classification scheme and a comparison with more common scheduling problems in literature can be found in Section 2. In this regard, we especially set the focus on the flexibility of the employed problem description.

In Section 3, we describe the automatic generation of a PN for the considered class of FMSs, which is used as mathematical basis to solve the scheduling problem. In this context, we slightly extend the classical notion of a PN in order to distinguish between autonomously running production processes and conscious production decisions. The generation process is discussed and the resulting PN is analyzed with respect to its relevant properties for the scheduling of the FMS.

Finally, two MPC schemes are introduced in Section 4, which determine the optimal scheduling decisions at the current time step with respect to a given cost function. We relate the presented approach to common control strategies for manufacturing and for PNs from literature. Most importantly, we prove that the MPC schemes are guaranteed to complete the scheduling problem under a few natural assumptions.

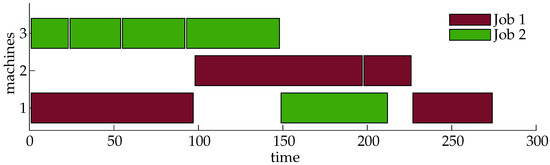

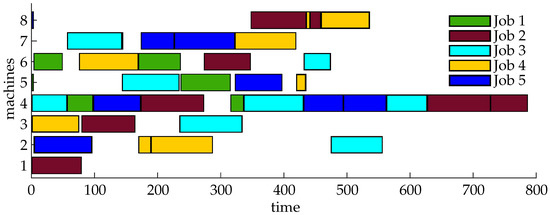

In Section 5, we apply one of the presented MPC schemes in the simulation of two scheduling problems from literature. The results show that decent closed loop performance is achieved.

Section 6 summarizes and discusses the results with respect to the initially formulated objectives. It is explained how the results from Section 4 can be used in order to implement a suitable MPC formulation for a given manufacturing problem. The flexibility of the proposed solution is laid out and possible changes in the problem description are analyzed with respect to their effects on the MPC schemes. Further research opportunities are identified that enhance the presented methods. In Section 7, we draw a brief conclusion of our work.

The paper at hand is an extension of our conference papers [17,18] and repeats previous results in some indicated paragraphs. Besides many additional explanations and placing those two related works in their mutual context, our previous work is extended in various ways. On the level of the scheduling problem, this paper adds a more profound classification of the considered problem and describes its properties in a clearer form. For the automatically generated PN description, proofs of some important properties are provided. On the level of the MPC, the previously rather short proof of the main theorem is extended and now provides detailed descriptions of parts that were initially kept short due to page limitations. Additionally, a new MPC formulation is provided that significantly reduces the complexity of the employed optimization problem. Two simulation examples are added to show the applicability of our results with problem setups from literature.

2. Problem Description

The scheduling problem considered in this paper was originally proposed in our previous work [17] and describes an FMS with a given set of manufacturing units in which customer specific orders are fulfilled. It is a general case of the flexible job shop (FJS), which is further discussed in Section 2.3. Every customer specific order constitutes a specific and possibly unique job in the manufacturing system.

In order to arrive at a versatile modeling and control framework, we start from a very abstract description of the manufacturing problem which can be refined to describe several more specific scenarios. We want to exploit the flexibility to combine the skills of the available manufacturing units in the sense of skill-based programming [6] in order to create and describe production plans. Therefore, our modeling approach has the skills as a central element. The manufacturing units have the skills to execute tasks, which are the basic building blocks for the jobs that have to be fulfilled by the manufacturing system. A job is completed once all its tasks have been completed. More formally, the basic elements of the manufacturing problem are

- a set $\mathcal{A}$ of tasks that can be executed in the manufacturing system,

- a set $\mathcal{M}$ of manufacturing units (robots, machines, automated guided vehicles, etc.), which are called machines for brevity in the rest of this paper; every machine $M \in \mathcal{M}$ can only execute a subset of tasks, which is specified as $\mathcal{A}_M \subseteq \mathcal{A}$,

- a set $\mathcal{J}$ of jobs that need to be fulfilled by the manufacturing system; every job $J \in \mathcal{J}$ consists of a set of tasks $\mathcal{A}_J \subseteq \mathcal{A}$.

The purpose of the manufacturing system is to complete the jobs $\mathcal{J}$ with the available machine pool $\mathcal{M}$. The assignment between jobs and machines is based on the tasks, which are the linking element between them. As different jobs $J$ and $J'$, which represent the instructions for the production of different goods, may share the same production steps, their task sets $\mathcal{A}_J$ and $\mathcal{A}_{J'}$ may contain the same elements. If we refer to a task $a \in \mathcal{A}_J$ of a specific job $J$, the pair of task and job is called operation $O = (a, J)$. With this, the set of operations is defined as $\mathcal{O} = \{(a, J) \mid J \in \mathcal{J},\ a \in \mathcal{A}_J\}$. The term operation is mainly used to abbreviate certain statements, but in many cases we rather refer to the pair of task and job for the sake of clarity.
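To make the notion of an operation concrete, the following minimal Python sketch builds the set of operations, i.e., all task-job pairs, for a small made-up instance. The task and job names and the dictionary layout (job name mapped to its set of tasks) are illustrative assumptions, not part of the original formulation. The example shows that a task shared by two jobs yields two distinct operations.

```python
# Hypothetical toy instance: each job is mapped to the set of tasks it consists of.
jobs = {
    "J1": {"drill", "mill", "paint"},
    "J2": {"drill", "assemble"},
}


def operations(jobs):
    """Return the set of operations, i.e., all pairs (task, job) with task in job."""
    return {(task, job) for job, tasks in jobs.items() for task in tasks}


ops = operations(jobs)
print(sorted(ops))
# [('assemble', 'J2'), ('drill', 'J1'), ('drill', 'J2'), ('mill', 'J1'), ('paint', 'J1')]
```

The shared task "drill" appears twice, once per job, which is exactly why the pair of task and job rather than the task alone is the unit that gets scheduled.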

In the same way as different jobs might share the same tasks, different machines $M$ and $M'$ might also be able to execute the same tasks, and hence the corresponding sets of executable tasks $\mathcal{A}_M$ and $\mathcal{A}_{M'}$ may have elements in common. In this case, and when considering the framework of skill-based programming [6], the fact that both machines can execute the same task does not mean that they do it in the same way. The machines might be of different kinds, and it is not even necessarily required that the machines have some specific tool, as the introduction of multipurpose machines by Brucker [15] suggests. In the framework of skill-based programming [6], different production times for the same task might even result from different implementations of the same task or skill on different machines. For example, a screw can be tightened with a tool that can rotate infinitely, or with a robotic arm that can only rotate by a limited angle and therefore needs to turn back and forth several times, which takes more time for a task that requires tightening a screw. The skill to execute a task is understood in the most abstract and general sense possible. As the way in which a task is executed may vary from machine to machine, the production time $t_{M,a}$, describing the amount of time that machine $M$ needs to execute task $a$, depends not only on the task but also on the machine. The production times are assumed to be known and fixed for every valid combination of machine and task.
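A possible way to store machine-dependent production times is a table keyed by (machine, task) pairs in which only valid combinations appear. The sketch below assumes this layout and derives from it each machine's set of executable tasks and, for a given task, the capable machines ordered by production time. The names `production_time`, `executable_tasks`, and `eligible_machines` are hypothetical, and the numbers are made up to mirror the screw-tightening example.

```python
# Hypothetical production times: only valid (machine, task) combinations are listed.
production_time = {
    ("M1", "screw"): 2,  # e.g., a tool that can rotate infinitely
    ("M2", "screw"): 5,  # e.g., an arm that has to turn back and forth
    ("M2", "weld"): 3,
}


def executable_tasks(production_time):
    """Derive the set of executable tasks of every machine from the table keys."""
    sets = {}
    for machine, task in production_time:
        sets.setdefault(machine, set()).add(task)
    return sets


def eligible_machines(task, production_time):
    """Machines able to execute `task`, sorted by increasing production time."""
    candidates = [(t, m) for (m, a), t in production_time.items() if a == task]
    return [m for _, m in sorted(candidates)]


print(eligible_machines("screw", production_time))  # ['M1', 'M2']
```

Keying the table by both machine and task reflects the point made above: the same task can take different amounts of time depending on which machine executes it.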

The general setup described above already contains several restrictions by itself and offers a variety of different flexibilities. A more detailed and structured description thereof is provided in the next section.

2.1. Restrictions and Flexibility in the Scheduling Problem

In the considered scheduling problem, there are restrictions concerning the three basic elements, i.e., tasks, machines, and jobs. To begin with, the sequence of tasks in a job is generally free. However, as there might be dependencies between the different steps in the production process of a product, these dependencies need to be considered in the manufacturing problem such that only production sequences are scheduled which can be executed in the real production process. This is achieved through the following four restrictions in the scheduling problem related to the tasks:

- (R1)

- A task $a$ might not be independent, but require all tasks in the set $\mathcal{R}_a$ of its required tasks to be finished before it can be started.

- (R2)

- A task in a job $J$ can only depend on tasks in the same job $J$, i.e., $\mathcal{R}_a \subseteq \mathcal{A}_J$ for every task $a \in \mathcal{A}_J$. If a task $a$ depends on another task $a'$, i.e., $a' \in \mathcal{R}_a$, then the operations $O = (a, J)$ and $O' = (a', J)$ exist and it is said that $O$ depends on $O'$. Operations $(a, J)$ and $(a', J')$ in different jobs $J$ and $J'$ are independent from one another.

- (R3)

- Two tasks $a$ and $a'$ must not be mutually dependent.

- (R4)

- Preemption of tasks is not allowed, i.e., once a task has been started it must be completed without interruption.

The restriction to a non-preemptive setup is the general case in literature and is therefore adopted in the considered problem [19,20,21,22,23]. In the description of the dependencies of a task on other tasks (R1), only direct dependencies are provided in the sets $\mathcal{R}_a$. Indirect dependencies with one or more other tasks in between can be determined recursively with an algorithm presented in our previous work [17]. The set of all tasks on which a task $a$ depends directly or indirectly is denoted by $\mathcal{R}^*_a$. From the sets $\mathcal{R}_a$ of the tasks $a$ of a job $J$, a precedence graph representing the relations between the tasks of the job can be generated by considering the tasks as nodes and constructing the directed edges according to the precedence relations in the sets $\mathcal{R}_a$. Due to Restriction (R3), this graph is acyclic, which is important, as otherwise none of the tasks in a cycle could ever start due to a circular wait condition. To summarize, all precedence relations that can be represented by an arbitrary acyclic graph can be considered in the scheduling problem, as is the case for some closely related scheduling problems [24,25].
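The recursive determination of indirect dependencies can be sketched as a depth-first search over the direct dependencies. This is a generic transitive-closure routine assumed for illustration, not necessarily the algorithm from [17]. As a by-product, it detects cycles in the precedence graph, i.e., violations of Restriction (R3). The input layout (task mapped to its set of directly required tasks) and the function name are hypothetical.

```python
def indirect_dependencies(required):
    """Compute, for every task, the set of all tasks it depends on directly or indirectly.

    `required` maps each task to the set of tasks it directly requires.
    Raises ValueError if the precedence graph contains a cycle (violating R3).
    """
    closure = {}       # finished results per task
    visiting = set()   # tasks on the current DFS path, used for cycle detection

    def visit(task):
        if task in closure:
            return closure[task]
        if task in visiting:
            raise ValueError(f"cyclic dependency involving task {task!r}")
        visiting.add(task)
        result = set()
        for pred in required.get(task, set()):
            result.add(pred)            # direct dependency
            result |= visit(pred)       # plus everything the predecessor depends on
        visiting.discard(task)
        closure[task] = result
        return result

    for task in required:
        visit(task)
    return closure


# Hypothetical chain: paint needs mill, mill needs drill.
closure = indirect_dependencies({"paint": {"mill"}, "mill": {"drill"}, "drill": set()})
```

Here, `closure["paint"]` contains both "mill" and "drill", while an input such as `{"a": {"b"}, "b": {"a"}}` raises a `ValueError` because the two tasks are mutually dependent.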

Furthermore, the machines and jobs are restricted in the real-world production process. Every machine has a limited production capacity, which we assume to be one for simplicity, and we assume that every machine has an infinite output buffer. For the jobs, we assume that they represent products that can only be at one place, and thus they can only be handled by a single machine at a time. Together with the limited capabilities of the machines, the following restrictions are present, which are all the general case in the literature on scheduling of FJSs, although they are not always stated explicitly [19,20,21,23]:

- (R5)

- Every machine $M$ can only execute a subset of tasks, which is specified as $\mathcal{A}_M \subseteq \mathcal{A}$.

- (R6)

- A machine can only execute one task at a time.

- (R7)

- Only one task per job can be executed at a time.

Despite these restrictions, which are rather few compared with other scheduling problems in manufacturing, the production problem still has a lot of flexibility. As in most scheduling problems, the production of different products, i.e., different jobs, is considered to be independent. This is the case as the tasks in different jobs are independent from one another, as specified in Restriction (R2). In line with Nasiri and Kianfar [24], we consider interdependence between different jobs to be rare in practice. In our setup, such interdependencies only arise because the jobs are manufactured in the same production facility and share the same machine pool. In general, we consider as flexibility of a manufacturing system its ability to adapt, or to be adapted, as a response to changing external conditions [26]. An operator or operating system needs to have the possibility to exploit the flexibility in order to improve the system behavior with respect to some desired criteria, for example, the efficiency and profitability of the manufacturing system. The most significant types of flexibility of the considered description of an FMS are

- (F1)

- the possibility to execute the same task on different machines,

- (F2)

- the ability of one machine to execute different tasks,

- (F3)

- the possibility to change the sequence in which the tasks in one job are executed, only restricted by (R1),

- (F4)

- the possibility to change the sequence in which different operations are executed on a machine,

- (F5)

- the possibility to execute different types of jobs in the same manufacturing system.

More in-depth analyses of different types of flexibility in manufacturing were carried out by, among others, Beach et al. [26], Sethi and Sethi [27], and Jain et al. [28]. In the search for a unified taxonomy, they identified more than 50 different terms for various types of flexibility, with different terms for the same type of flexibility as well as different meanings of the same term [27]. This is why we do not assign specific terms to the types of flexibility but refer to them by their labels (F1)–(F5). With respect to the taxonomy of Sethi and Sethi [27], who distinguish eleven types of flexibility, the manufacturing system described above offers routing flexibility (F1), machine flexibility (F2), operation flexibility (F3) and (F4), and process, product, and production flexibility (F5). Implicitly, material handling flexibility is also assumed, as the jobs can be transferred from one machine to another. The setup also allows for expansion flexibility, i.e., the introduction of new machines into the system, due to the automatic model generation described in Section 3.3.

The benefit of the different types of flexibility is that they offer the potential to find an improved schedule with respect to a nominal one. On the other hand, the complexity of the scheduling problem increases and it is harder to find an optimal solution [20,29]. In fact, the considered problem is NP-hard, as it is a generalization of the flexible job shop (FJS), which we will discuss in Section 2.3, and the FJS is NP-hard itself [19,20,25]. Increasing the flexibility of the FJS makes a hard-to-solve problem even harder.

2.2. Scheduling Objective

The goal of the proposed method is to optimize the throughput of the manufacturing system in order to maximize its profitability. This means that the best possible assignment between operations and machines needs to be found. Moreover, as the system evolves with time as the production proceeds, the timing of the assignment also needs to be considered, so the goal is to find the best possible schedule of jobs in the manufacturing system with a given set of machines. In line with the notion of Birgin et al. [25], a schedule is the assignment of the operations to the machines and a starting time. A schedule is considered to be feasible if the restrictions (R1) and (R4)–(R7) are met. Restrictions (R2) and (R3) refer to the formulation of the jobs and therefore do not need to be explicitly considered during scheduling.
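The feasibility conditions above can be checked mechanically. The following sketch assumes a hypothetical representation in which a schedule maps every task-job pair to a machine and an integer start time; the function name `is_feasible` and the toy data are illustrative only. Non-preemption (R4) is enforced implicitly, because every operation is stored as one uninterrupted interval of its machine-dependent production time, and the input is assumed to already satisfy (R2) and (R3).

```python
from itertools import combinations


def is_feasible(schedule, jobs, required, production_time):
    """Check R1 and R4-R7 for a schedule {(task, job): (machine, start)}.

    R4 holds by construction: each operation occupies [start, start + duration).
    """
    expected = {(a, J) for J, tasks in jobs.items() for a in tasks}
    if set(schedule) != expected:                 # every operation scheduled once
        return False
    intervals = {}
    for (a, J), (M, start) in schedule.items():
        if (M, a) not in production_time:         # R5: machine must offer the task
            return False
        intervals[(a, J)] = (start, start + production_time[(M, a)], M)
    for (o1, (s1, e1, m1)), (o2, (s2, e2, m2)) in combinations(intervals.items(), 2):
        overlap = s1 < e2 and s2 < e1
        if overlap and m1 == m2:                  # R6: one task per machine at a time
            return False
        if overlap and o1[1] == o2[1]:            # R7: one task per job at a time
            return False
    for (a, J), (start, _, _) in intervals.items():
        for pred in required.get(a, set()):       # R1: required tasks finished first
            if intervals[(pred, J)][1] > start:   # (pred, J) exists because of R2
                return False
    return True


# Hypothetical instance: painting requires drilling; M2 can drill and paint.
jobs = {"J1": {"drill", "paint"}, "J2": {"drill"}}
required = {"paint": {"drill"}}
production_time = {("M1", "drill"): 2, ("M2", "drill"): 3, ("M2", "paint"): 1}
good = {("drill", "J1"): ("M1", 0), ("paint", "J1"): ("M2", 2), ("drill", "J2"): ("M2", 3)}
bad = {**good, ("drill", "J2"): ("M2", 0)}        # overlaps with painting on M2
```

With this data, `is_feasible(good, ...)` returns `True`, whereas `bad` violates (R6) because two operations occupy machine M2 at the same time.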

As in real production scenarios, where not all future jobs are known and new jobs may arise at arbitrary points in time, we also consider that jobs may arrive during runtime. Therefore, the goal of the proposed scheme is not necessarily to find the best schedule but to provide a scheduling policy, as, for example, introduced by Pinedo [30], which is able to generate feasible schedules in different states of the manufacturing system that are as good as possible under the given circumstances.

In order to arrive at a schedule that is desirable from a manufacturing point of view, the profit needs to be quantified with a cost function and at least one feasible solution must exist. In general, a cost function has to take into account the criteria that matter in the specific production scenario, which might, for example, be the usage or waste of material or energy, or storage cost. The design of the cost function offers the possibility to set priorities according to quantifiable criteria. Single objectives such as the minimization of the makespan, the total flow time, and many more are frequently considered in academic scenarios. As usually multiple criteria need to be considered to capture the true manufacturing cost and reward, multi-objective scheduling problems address real-world scenarios in a better way [23]. As our focus is not to design meaningful scheduling problems but to develop a general scheme to solve them, we assume a meaningful cost function to be given and a feasible solution to exist.
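For illustration only, two of the single objectives mentioned above can be evaluated for a given schedule. The sketch assumes a hypothetical representation (task-job pair mapped to machine and start time, production times keyed by machine and task) and release times of zero unless stated otherwise. The approach itself leaves the cost function open, so these helpers are merely examples of possible criteria.

```python
# Hypothetical data: (task, job) -> (machine, start) and (machine, task) -> duration.
production_time = {("M1", "drill"): 2, ("M2", "drill"): 3, ("M2", "paint"): 1}
schedule = {("drill", "J1"): ("M1", 0), ("paint", "J1"): ("M2", 2),
            ("drill", "J2"): ("M2", 3)}


def completion_times(schedule, production_time):
    """Completion time of every job, i.e., the end of its last operation."""
    done = {}
    for (task, job), (machine, start) in schedule.items():
        end = start + production_time[(machine, task)]
        done[job] = max(done.get(job, end), end)
    return done


def makespan(schedule, production_time):
    """Completion time of the last job."""
    return max(completion_times(schedule, production_time).values())


def total_flow_time(schedule, production_time, release=None):
    """Sum of (completion time - release time) over all jobs; releases default to 0."""
    release = release or {}
    done = completion_times(schedule, production_time)
    return sum(c - release.get(job, 0) for job, c in done.items())


print(makespan(schedule, production_time))         # 6
print(total_flow_time(schedule, production_time))  # 9
```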

In scheduling literature, the assignment between tasks and the machines that are able to process them is viewed from the perspective of the task [15]. To each task, a set of machines that are able to process it is assigned. In an Industry 4.0 scenario that is considered over a possibly infinite time horizon, this notion does not seem suitable. When jobs are allowed to enter the manufacturing system and are assumed to be completed and removed, the implications of adding new jobs or removing old ones need to be considered. Even if a new job requires a novel task that has never been executed in the manufacturing system before, it is reasonable to check which machines $M$ are able to execute it and add the novel task to their sets $\mathcal{A}_M$ of executable tasks. The task can then be remembered in case a future job also requires it in its production process. The part of the production problem that can be considered to be persistent over time is the set of machines in the manufacturing system. Thus, an assignment between machines and their production capabilities (the skills to execute tasks) will not be subject to many changes. In contrast to that, the assignment between jobs (or their tasks) and the machines that can execute them needs to be reintroduced with every new job.
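The machine-centric view described above can be sketched with a small registry that persistently stores each machine's executable tasks and derives the task-to-machine assignment only when a job arrives. The class `SkillRegistry` and its methods are hypothetical names chosen for this illustration; how a machine is found to be capable of a novel task is outside the scope of the sketch.

```python
class SkillRegistry:
    """Hypothetical machine-centric store: machine -> set of executable tasks."""

    def __init__(self):
        self.skills = {}

    def add_machine(self, machine, tasks=()):
        """Register a machine together with the tasks it can execute."""
        self.skills[machine] = set(tasks)

    def teach(self, machine, task):
        """Remember that `machine` can execute a (possibly novel) task."""
        self.skills.setdefault(machine, set()).add(task)

    def assignment_for_job(self, job_tasks):
        """Derive the task -> capable machines view for a newly arriving job."""
        return {task: sorted(m for m, tasks in self.skills.items() if task in tasks)
                for task in job_tasks}


registry = SkillRegistry()
registry.add_machine("M1", {"drill"})
registry.add_machine("M2", {"drill", "mill"})
before = registry.assignment_for_job({"drill", "glue"})  # "glue" has no machine yet
registry.teach("M2", "glue")                             # M2 turns out to offer it
after = registry.assignment_for_job({"drill", "glue"})
```

The persistent state lives on the machine side, while the per-job view is recomputed on demand, mirroring the argument that machine capabilities change rarely whereas jobs come and go.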

2.3. Classification of the Scheduling Problem

A common classification of scheduling problems was introduced by Graham et al. [31] and has been further refined since then [15,30,32]. It systematically categorizes various scheduling problems by their machine environment, their job characteristics, and their optimality criterion. For the classification of the problem at hand, we mainly focus on the machine environment, since the job characteristics in the sense of the classification scheme are not completely specified and the optimality criterion can be arbitrary, except for the properties required for the proofs in Section 4.

According to this classification scheme, the machine environment of the scheduling problem described above can be classified as a generalization of the most common specification of the flexible job shop (FJS) [21] and at the same time as a generalization of the partial job shop (PJS) [24]. The FJS as well as the PJS generalize the ordinary job shop (JS) [15], which restricts the problem formulation above in two ways. First, every task can only be executed by one distinct machine, i.e., it does not offer machine flexibility (F1). Secondly, every job has a predefined process plan in the form of a fixed sequence in which the tasks of the job have to be executed, i.e., it does not offer sequence flexibility (F3).

There is a large amount of literature on the FJS, and it is not always defined in exactly the same way [20,21,23]. The most common specification introduces machine flexibility (F1) with respect to the ordinary JS by allowing every task to be executed by multiple machines and is also called job shop with multipurpose machines (JMPM) [15]. In a more restrictive definition by Pinedo [30], the FJS consists of distinct work centers with identical parallel machines. The sequence flexibility (F3) is not introduced, and the tasks of every job have a predetermined sequential order in which they have to be executed [21,33].

In the PJS, which was introduced by Nasiri and Kianfar [24] and is rarely considered in literature [34], sequence flexibility (F3) was introduced with respect to the ordinary, non-flexible JS. The fixed sequence of tasks in a job is relaxed such that arbitrary precedence relations in the form of an acyclic graph are allowed. The machine flexibility (F1) to choose on which machine a certain operation will be executed is not available in the PJS.

In order to arrive at an understandable denomination that speaks for itself, we follow the definition of the JMPM and categorize the machine environment in our scheduling problem as job shop with multipurpose machines and sequence flexibility (JMPMSF). It combines the two types of flexibility (F1) and (F3) that were introduced in the JMPM and the PJS, respectively, with respect to the ordinary JS. In the literature, no unique name has been given to the JMPMSF. Among other names, it is called extended FJS [25] or FJS with process plan flexibility [35]. Birgin et al. [25] and Özgüven et al. [35] present two different mixed integer linear programming (MILP) models to find a schedule that minimizes the makespan of a JMPMSF. Due to its complexity, however, it is mostly solved by means of heuristic approaches [16,29,36]. Its practical relevance was shown by Lunardi et al. [37], who consider a JMPMSF from the online printing industry that is subject to additional challenges. They present a MILP and a constraint programming model for it, after providing a concise literature review on the JMPMSF.

3. Model Generation

For the problem described in Section 2, we generate a mixed integer programming (MIP) model, which does not necessarily need to have a linear cost function, by means of an automated procedure described in Section 3.3. In contrast to existing MIP models for the JMPMSF [25,35,37], we introduce an intermediate step to tackle the complexity of the problem with a modular approach in terms of a Petri Net (PN). The model we use was first described in our previous work [17] and is based on the fundamental form of a PN introduced by Carl Adam Petri in his dissertation [38]. This model represents a discrete-time description of the production process. Alternative Petri Net descriptions for the scheduling problem can either directly use the real-valued production time in a Timed Petri Net (TdPN) [39], or ignore the time information and describe the production process as a Discrete-Event System (DES), which again results in a non-timed PN as introduced by Petri [38]. The discussion of these alternatives is beyond the scope of this paper.

3.1. Introduction to Petri Nets

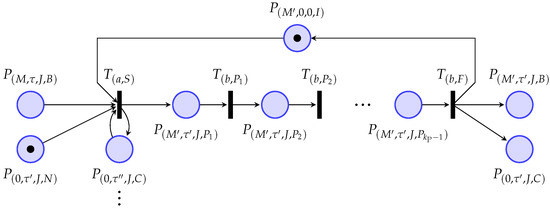

Before describing the automatic generation of the PN model of the JMPMSF, we formally introduce Petri Nets analogous to [17].

Definition 1 (Petri Net).

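A Petri Net is a tuple $\mathcal{N} = (\mathcal{P}, \mathcal{T}, \mathcal{F}, W, x_0)$, where

- $\mathcal{P} = \{P_1, \dots, P_n\}$ is a finite set of places, $n \in \mathbb{N}$, graphically represented as circles,

- $\mathcal{T} = \{T_1, \dots, T_m\}$ is a finite set of transitions, $m \in \mathbb{N}$, graphically represented as bars,

- $\mathcal{F} \subseteq (\mathcal{P} \times \mathcal{T}) \cup (\mathcal{T} \times \mathcal{P})$ is a set of arcs from places to transitions and from transitions to places, graphically represented as arrows,

- $W\colon \mathcal{F} \to \mathbb{N}$ is an arc weight function, graphically represented as numbers labeling the arcs (if an arc has the weight 1, it is not labeled), and

- $x_0 \in \mathbb{N}_0^{n}$ is the initial marking of the Petri Net, from now on called initial state; the initial state $x_{0,i}$ of a place $P_i$ is graphically indicated by dots ("tokens") in the circle corresponding to $P_i$.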

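The dynamics of a PN is driven by the firing of the transitions and captured by the evolution of the state vector $x(k) \in \mathbb{N}_0^{n}$ at the time instants $k \in \mathbb{N}_0$, initialized with $x(0) = x_0$. The firing of a transition $T$ removes tokens from its input places according to the weights $W(P, T)$ of the arcs $(P, T) \in \mathcal{F}$ and adds tokens to its output places according to the weights $W(T, P)$ of the arcs $(T, P) \in \mathcal{F}$. The number of times each transition fires at instant $k$ is expressed by the firing count vector $u(k) \in \mathbb{N}_0^{m}$. We introduce the incidence matrix $C = C^+ - C^- \in \mathbb{Z}^{n \times m}$, which is composed of the two matrices $C^-, C^+ \in \mathbb{N}_0^{n \times m}$ that are defined as

$$
C^-_{ij} = \begin{cases} W(P_i, T_j) & \text{if } (P_i, T_j) \in \mathcal{F},\\ 0 & \text{otherwise,} \end{cases}
\qquad
C^+_{ij} = \begin{cases} W(T_j, P_i) & \text{if } (T_j, P_i) \in \mathcal{F},\\ 0 & \text{otherwise.} \end{cases}
\tag{1}
$$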

We can now compactly describe the Petri Net dynamics by

$$
x(k+1) = x(k) + C\,u(k). \tag{2}
$$
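A minimal, dependency-free sketch of the incidence matrices and of one step of the dynamics (2): the arc weight function is stored as a dictionary over arcs, from which the pre-incidence matrix (weights of place-to-transition arcs) and the post-incidence matrix (weights of transition-to-place arcs) are assembled. Plain nested lists are used instead of a matrix library, and all function names are hypothetical; the sketch also assumes that place and transition names are distinct.

```python
def incidence_matrices(places, transitions, weights):
    """Build the pre- and post-incidence matrices (rows: places, columns: transitions).

    `weights` maps arcs (place, transition) or (transition, place) to positive
    integer weights; arcs that are not listed do not exist and yield zero entries.
    """
    p_idx = {p: i for i, p in enumerate(places)}
    t_idx = {t: j for j, t in enumerate(transitions)}
    pre = [[0] * len(transitions) for _ in places]
    post = [[0] * len(transitions) for _ in places]
    for (src, dst), w in weights.items():
        if src in p_idx:                          # arc place -> transition
            pre[p_idx[src]][t_idx[dst]] = w
        else:                                     # arc transition -> place
            post[p_idx[dst]][t_idx[src]] = w
    return pre, post


def step(x, u, pre, post):
    """One step x(k+1) = x(k) + C u(k), with C = post - pre."""
    return [xi + sum((post[i][j] - pre[i][j]) * u[j] for j in range(len(u)))
            for i, xi in enumerate(x)]


# Hypothetical net: P1 --(2)--> T1 --(1)--> P2, three tokens in P1.
pre, post = incidence_matrices(["P1", "P2"], ["T1"], {("P1", "T1"): 2, ("T1", "P2"): 1})
x1 = step([3, 0], [1], pre, post)  # firing T1 once consumes two tokens, produces one
```

After one firing of T1, the state is `[1, 1]`: two tokens were removed from P1 according to the arc weight and one token was added to P2.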

As the places are not allowed to hold a negative number of tokens, the Petri Net dynamics needs to be further restricted by only allowing transitions to fire if they do not generate negative tokens in any place. We say a transition $T$ is enabled at a state $x(k)$ if each of its input places $P_i$, connected through an arc $(P_i, T) \in \mathcal{F}$, holds at least as many tokens as the arc weight $W(P_i, T)$, i.e., $x_i(k) \ge W(P_i, T)$. Two transitions $T_j$ and $T_l$ might be concurrently enabled at state $x(k)$, but if they both fire simultaneously, they would consume the same token, resulting in a negative entry in the state vector $x(k+1)$. This situation is called a conflict between the transitions $T_j$ and $T_l$ [39]. To prevent negative tokens in this case, a non-negativity condition on the basis of the firing count vector $u(k)$ is introduced. With the matrix $C^-$ and the state vector $x(k)$, all allowed firing count vectors must satisfy the non-negativity constraint

$$
C^- u(k) \le x(k). \tag{3}
$$
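The difference between enabling and the non-negativity constraint (3) can be illustrated with a conflict: both transitions are enabled individually, but firing them together would need more tokens than available. The sketch below uses a pre-incidence matrix stored as nested lists (rows: places, columns: transitions); the function names are hypothetical.

```python
def enabled(x, pre, j):
    """Transition j is enabled if each input place i holds at least pre[i][j] tokens."""
    return all(x[i] >= pre[i][j] for i in range(len(x)))


def admissible(x, u, pre):
    """Non-negativity constraint (3): the tokens consumed by the firing count
    vector u must not exceed the tokens available in any place."""
    return all(sum(pre[i][j] * u[j] for j in range(len(u))) <= x[i]
               for i in range(len(x)))


# Conflict example: a single place holding one token is the input of two transitions.
pre = [[1, 1]]   # one row (place), two columns (transitions), arc weights 1
x = [1]
assert enabled(x, pre, 0) and enabled(x, pre, 1)          # both are enabled ...
assert admissible(x, [1, 0], pre) and admissible(x, [0, 1], pre)
assert not admissible(x, [1, 1], pre)                     # ... but not jointly
```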

3.2. Modified Petri Net Dynamics

So far, we have only introduced common notions for Petri Nets that can be found in the literature, for example, in [39,40]. However, in order to describe the scheduling problem introduced in Section 2, we slightly adapt the role of the transitions as proposed in our previous work [17]. In most of the literature on Petri Nets, the firing of transitions is initiated by predefined rules or considered to be stochastic, or the dynamics is even seen as the set of all possible sequences in which the transitions can fire [39,41]. In contrast, we use one subset of the transitions as controlled inputs to actively influence the dynamics of the Petri Net, which we call the controlled part of the PN, while the transitions of another subset fire as soon as they are enabled, which we call the independent part of the PN.

As discussed by Giua and Seatzu [42], the PN dynamics (2) is a special case of the dynamics of a linear discrete time system $x_{k+1} = A x_k + B u_k$ with $A = I$, where I is the identity matrix of appropriate dimension. We now exploit the possibility to change the matrix A such that it captures the influence of the so-called independent transitions. The matrix B then only considers the actively controlled transitions, and the control decisions are captured in the firing count vector u. Whether the firing of a transition “as soon as it is enabled” can be represented by multiplying the PN’s state x with a matrix A, without violating the constraint (3), depends on its interconnection with the rest of the PN. Therefore, we introduce three restrictions on the independent transitions. A transition T can only be considered to be independent if

- (I1) it only has one input place $P_i$,

- (I2) its only input place $P_i$ has no second output transition, and

- (I3) the weight of the arc connecting the place $P_i$ to its output transition T is $W(P_i, T) = 1$.
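The criteria (I1)–(I3) can be checked mechanically from the consumption matrix $B^-$ (rows are places, columns are transitions). The helper `can_be_independent` below is a hypothetical sketch, not part of the authors' algorithms, and it reads (I1) as "exactly one input place".

```python
from typing import List

Matrix = List[List[int]]  # rows = places, columns = transitions


def can_be_independent(t: int, b_minus: Matrix) -> bool:
    """Check criteria (I1)-(I3) for transition t, given the consumption matrix B^-."""
    inputs = [i for i, row in enumerate(b_minus) if row[t] > 0]
    if len(inputs) != 1:                      # (I1): exactly one input place
        return False
    (i,) = inputs
    outputs_of_place = [j for j, w in enumerate(b_minus[i]) if w > 0]
    if outputs_of_place != [t]:               # (I2): no second output transition
        return False
    return b_minus[i][t] == 1                 # (I3): arc weight equals one


sequence = [[1], [0]]          # P1 -> T1 -> P2
conflict = [[1, 1], [0, 0]]    # P1 feeds both T1 and T2
synchronization = [[1], [1]]   # T1 needs tokens from P1 and P2
weighted = [[2], [0]]          # arc weight 2 violates (I3)
print([can_be_independent(0, m) for m in (sequence, conflict, synchronization, weighted)])
# [True, False, False, False]
```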

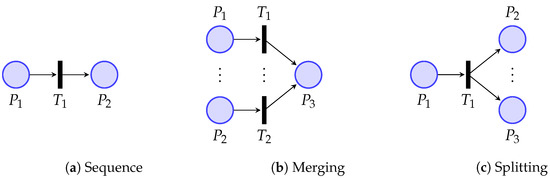

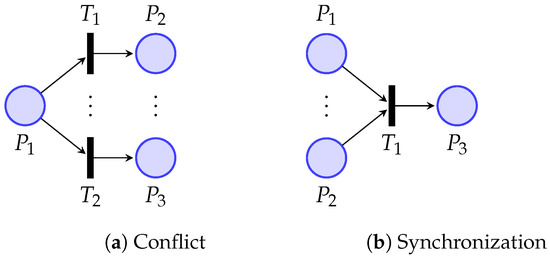

With these restrictions, only the basic Petri Net elements depicted in Figure 1, i.e., sequence, merging, and splitting, can be assigned to the independent part of the Petri Net, i.e., to the set of independent transitions. In contrast, the transitions in the conflict and synchronization structures depicted in Figure 2 must be controlled actively and always belong to the set of controlled transitions. This also holds for the combination of synchronization and conflict in a so-called non-free-choice conflict [41].

Figure 1.

Elementary Petri Net structures in which the transitions can be assigned to the set of independent transitions.

Figure 2.

Elementary Petri Net structures in which the transitions must be actively controlled and always belong to the set of controlled transitions.

With the distinction between independent transitions and controlled transitions, the Petri Net dynamics is described as

$$x_{k+1} = A x_k + B u_k. \qquad (4)$$

As the places must not hold a negative number of tokens, i.e., constraint (3) must still be satisfied, and as the number of tokens must always be an integer, the newly introduced dynamics matrix A must only have non-negative integer entries. The dynamics (4) together with the non-negativity constraint (3) is called the state space description or state space form of the PN.

If the output transition $T_j$ of the place $P_i$ is handled as an independent transition, the i-th column of the matrix A is changed, starting from the identity matrix I of a completely controlled PN. The input arc of transition $T_j$ sets the diagonal entry to zero, i.e., $a_{ii} = 0$, and each output arc of the transition to a place $P_l$ introduces its weight in the corresponding entry, i.e., $a_{li} = W(T_j, P_l)$. If a place has no independent output transition, a one in the corresponding diagonal entry of A keeps its number of tokens constant when no controlled transition fires.
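The column-wise construction of A described above can be sketched as follows, assuming every listed independent transition satisfies (I1)–(I3). The helper names and the example layout of a splitting structure (one input place, two output places) are assumptions for illustration.

```python
from typing import List

Matrix = List[List[int]]  # rows = places, columns = transitions


def build_a(b_minus: Matrix, b_plus: Matrix, independent: List[int]) -> Matrix:
    """Dynamics matrix A for independent transitions satisfying (I1)-(I3).

    Starts from the identity; for each independent transition T_j with input place P_i,
    the diagonal entry a_ii becomes 0 and a_li becomes the output arc weight W(T_j, P_l).
    """
    n = len(b_minus)
    a = [[1 if r == c else 0 for c in range(n)] for r in range(n)]
    for j in independent:
        (i,) = [r for r in range(n) if b_minus[r][j] > 0]  # unique input place by (I1)
        a[i][i] = 0
        for out in range(n):
            if b_plus[out][j] > 0:
                a[out][i] = b_plus[out][j]
    return a


def mat_vec(mat: Matrix, vec: List[int]) -> List[int]:
    return [sum(x * v for x, v in zip(row, vec)) for row in mat]


# Splitting with an assumed layout: P1 -> T1 -> {P2, P3}
b_minus = [[1], [0], [0]]
b_plus = [[0], [1], [1]]
a = build_a(b_minus, b_plus, independent=[0])
print(a)                       # [[0, 0, 0], [1, 1, 0], [1, 0, 1]]
print(mat_vec(a, [2, 0, 0]))   # [0, 2, 2]
```

Because the input arc has weight one, two tokens in the input place yield two tokens in each output place within one step, i.e., the transition fires as often as it is enabled, which is why (I3) keeps this linear representation exact.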

Example 1.

The matrices corresponding to the elementary PN structures in Figure 1 are

The firing of the independent transitions at the state $x_k$ removes all tokens from their input places through the zero in the corresponding diagonal entry of the matrix A. In the state $x_{k+1}$, tokens are produced in the output places of the independent transitions through the non-zero off-diagonal elements of A.

Note that the criteria (I1)–(I3) are necessary conditions for a transition to be assigned to the set of independent transitions. If one of them is not fulfilled for a specific transition, it must be assigned to the set of controlled transitions. However, transitions that fulfill all three criteria (I1)–(I3) may still be assigned to the set of controlled transitions. For example, one of the transitions in Figure 1b can be set as a controlled transition. In this case, the dynamics of the Petri Net in Figure 1b is

We exploit the independent transitions to let the production process run autonomously, while the scheduling decisions are handled by means of the controlled transitions. How the problem formulation in Section 2 can be automatically transformed into a Petri Net is discussed in the next section.

3.3. Generation of the Discrete Time Petri Net Model

From the description of the JMPMSF in Section 2, we automatically generate a Petri Net model with the algorithms presented in our previous work [17]. The generated PN model provides all possible manufacturing decisions in the form of controlled transitions. Their implications are captured in the matrix B of the algebraic description (4), and the decisions are taken by means of the firing count vector u. The automatic production process is represented through the independent transitions and handled in the matrix A of the algebraic representation (4), as presented in Section 3.2.

The state x of the Petri Net represents the current status of the manufacturing system. In order to preserve the production related meaning of the PN components, we introduce distinct identifiers as labels for the places and transitions. By introducing corresponding vectors of place identifiers and transition identifiers, the entries of the state x and the input vector u become intuitively understandable and can be related to the elements of the production system. The identifiers hold information on the machine, the task, and the job to which the places and transitions refer. Additionally, we introduce a classifying character to indicate the meaning of the places and transitions in the production process. The classifier S relates to “start”, F to “finish”, B to “buffer”, I to “idle”, N to “necessary”, and C to “completed”. This set of classifiers was specifically selected for the given production problem, the desired model of it, and the algorithms that translate between them, which are presented in the sequel. It is certainly not the only possible set to implement a similar model generation. If further characteristics of the production problem are investigated or other restrictions are present, the set of classifiers can be changed and further Petri Net structures can be introduced.

The identifier of a place P combines its classifier with the machine, the task, and the job it relates to, as a place can relate to one machine M, one task, and one job J and has the production related meaning that corresponds to its classifier. With this notation, it is obvious to which machine, task, and job a place relates and how its marking can be interpreted. If a place does not relate to a specific machine, task, or job, its identifier has a zero at the respective position. For example, the marking of the idle place of machine M, whose task and job positions are zero, indicates whether the machine M is idle, which is independent of any specific task or job.

A transition connects at least two places, and its production related role can be derived from the places connected to it. Its identifier has an analogous form and indicates which places, i.e., which machines and tasks of a job J, the transition connects. The identifiers of transitions only refer to one job J, since we assume dependencies between different jobs to arise only from the fact that they are produced in the same manufacturing system, as discussed in Section 2 and formulated in Restriction (R2). As a consequence, all places connected to the same transition either relate to the same job or do not relate to any job at all.

Analogous to the algorithms presented in our previous work [17], the PN model of the manufacturing system is created with Algorithms 1 and 2. The generated model is a discrete time description of the manufacturing system, and the production processes are represented by chains of production places. The number of production steps

$$n = \left\lceil \frac{\tau}{\Delta t} \right\rceil \qquad (5)$$

for the execution of a task with processing time $\tau$ on the machine M is calculated with respect to the sampling time $\Delta t$, which is also called sampling period. The ratio is rounded up to the next integer, which means that for a large sampling time, the resulting production time $n \Delta t$ of the discrete time model might be larger than the true production time $\tau$, i.e., $n \Delta t \geq \tau$.
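Equation (5) amounts to rounding the ratio of processing time and sampling time up to the next integer. The sketch below assumes both times are given in the same unit as decimal strings; the symbols $\tau$ and $\Delta t$ and the function name are illustrative. It uses exact fractions, since a floating-point quotient can land slightly above an integer and would then be rounded up one step too far.

```python
import math
from fractions import Fraction


def production_steps(tau: str, dt: str) -> tuple:
    """Number of production places for processing time tau and sampling time dt.

    Rounds tau / dt up to the next integer; exact Fraction arithmetic avoids
    floating-point quotients that differ slightly from the exact value.
    """
    steps = math.ceil(Fraction(tau) / Fraction(dt))
    return steps, steps * Fraction(dt)  # discrete-time production time >= tau


for dt in ["0.5", "1", "2", "5"]:
    steps, modeled = production_steps("7", dt)
    print(dt, steps, float(modeled))
# 0.5 14 7.0
# 1 7 7.0
# 2 4 8.0
# 5 2 10.0
```

The printed values illustrate the trade-off discussed in Section 3.4: halving the sampling time doubles the number of production places, while a coarse sampling time of 5 overestimates the processing time of 7 by 3 time units.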

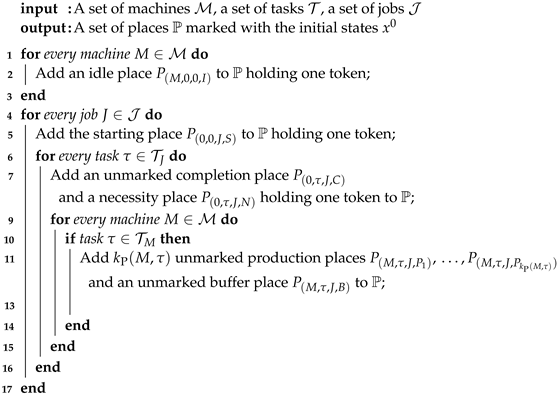

Algorithm 1: Create Places [17].

Algorithm 1 generates the set of places of the Petri Net. First, in lines 1–3, the idle places, which indicate whether a machine is working or not, are created for all machines. As all machines are assumed to be idle at initialization, the idle places are marked with one token. This token is consumed by every transition starting the execution of a task on the respective machine and returned once the production is finished. This mechanism guarantees that every machine executes only one task at a time and therefore respects Restriction (R6).

In lines 4–14, the places for the statuses of the jobs are created. Every job J has a starting place, initialized in line 5 with one token, indicating that the production of the respective job has not yet started. Once the production of a job starts, its token is moved according to the production process. Although the token is not labeled and cannot be said to remain the “same” token, the machine at which the job is being processed or was processed last can be deduced from the identifiers and the markings of the production and buffer places related to it. Those places are created in line 10. For every machine M that is able to execute a task, the production places are created. They indicate at which machine M the task of job J is executed and how far its execution has already proceeded. At the end of line 10, the buffer places are created. A marked buffer place shows that the corresponding task was the last task of job J to be processed, that it was processed on machine M, and that the semi-finished product of job J is currently in the output buffer of machine M. By generating the production and buffer places for all machines that can execute each task, the machine that eventually executes the task is not predefined a priori and can be chosen at runtime. This structure enables Flexibility (F1). In line 7, necessity places and completion places are created for all tasks, which indicate the production progress of the job J. Besides that, the completion places are used to ensure that the precedence relations between the tasks are met and Restriction (R1) is respected. As no task in any job is assumed to be completed at initialization, the completion places are initialized unmarked, whereas the necessity places hold one token, indicating that the respective task needs to be executed once.
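The place generation described above can be sketched in Python as follows. Algorithm 1 itself is only shown as a figure, so the identifier layout (classifier, machine, task, job, step), the label "P" for production places, and the nested-dictionary input format are all hypothetical; the sketch only mirrors the initial markings stated in the text.

```python
from typing import Dict, List, Tuple

# Hypothetical identifier layout: (classifier, machine, task, job, step); 0 = "not related".
PlaceId = Tuple[str, int, int, int, int]


def create_places(machines: List[int],
                  jobs: Dict[int, Dict[int, Dict[int, int]]]) -> Dict[PlaceId, int]:
    """Map every place identifier to its initial marking.

    jobs[J][O][M] is the number of production steps of task O of job J on machine M;
    only machines that can execute the task are listed. Indices start at 1.
    """
    x0: Dict[PlaceId, int] = {}
    for m in machines:
        x0[("I", m, 0, 0, 0)] = 1          # idle place: machine idle at initialization
    for j, tasks in jobs.items():
        x0[("S", 0, 0, j, 0)] = 1          # starting place: job not yet started
        for o, capable in tasks.items():
            x0[("N", 0, o, j, 0)] = 1      # necessity place: task must be executed once
            x0[("C", 0, o, j, 0)] = 0      # completion place: task not completed yet
            for m, steps in capable.items():
                for s in range(1, steps + 1):
                    x0[("P", m, o, j, s)] = 0  # production chain ("P" is a made-up label)
                x0[("B", m, o, j, 0)] = 0      # output buffer of machine m after task o
    return x0


# Two machines; job 1 has task 1 (machine 1: 2 steps, machine 2: 3 steps)
# and task 2 (machine 2 only: 1 step).
x0 = create_places([1, 2], {1: {1: {1: 2, 2: 3}, 2: {2: 1}}})
print(len(x0), sum(x0.values()))  # 16 5
```

Listing both machines for task 1 produces two parallel production chains with their own buffer places, which is how the machine choice of Flexibility (F1) stays open until runtime.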

Algorithm 2: Create Transitions and Arcs [17].

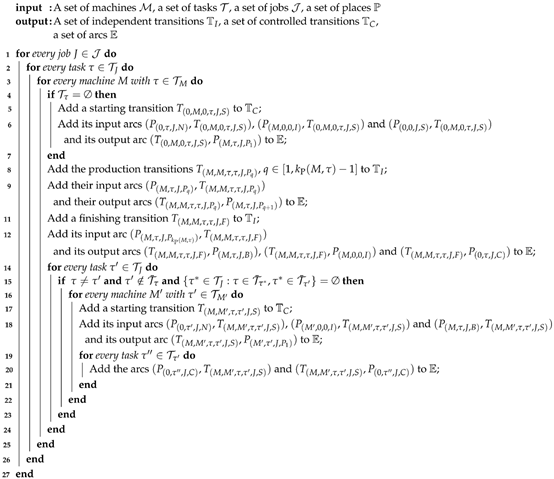

Algorithm 2 generates the transitions and arcs that allow the possible evolutions of the system and implement the required constraints. First, in lines 5 and 6, the starting transitions that start the jobs are created for every possible initial task of the jobs.

The other starting transitions, which allow starting all further tasks in an arbitrary order restricted only by the precedence between the tasks, i.e., Restriction (R1), are generated in lines 15–18. Every starting transition is a synchronization of an idle place, a necessity place, and a starting or buffer place, as illustrated in Figure 3. All three input places need to be marked before a starting transition is enabled and allowed to fire, which is why it has to be implemented as a controlled transition. This is not a restriction, however, as actively choosing the sequence in which the different tasks of the different jobs are started on the available machines, by deciding which starting transitions fire at a given time, exactly implements the desired Flexibilities (F3) and (F4). Starting a task reserves the machine M used for its execution by consuming the token from its idle place. This guarantees that every machine is used for only one task at a time, as required by Restriction (R6). Consuming the token from the necessity place indicates that the task no longer needs to be executed. Consuming the token from the starting place or the buffer place reserves the semi-finished product of the job J and guarantees that only one task of every job is executed at a time, as required by Restriction (R7). Each starting transition that starts the execution of a task consumes the tokens from the completion places of all tasks that need to be finished before the task can be started. This guarantees that the task can only be started once its precedence constraints according to Restriction (R1) are fulfilled. The tokens are immediately returned to the completion places such that their production status is preserved. Starting transitions create a token in the first production place, indicating that the production starts.

Figure 3.

Illustration of the production sequence for the execution of a task of job J on a machine, as generated by Algorithms 1 and 2. The tokens in the sequence are depicted as initialized in Algorithm 1. As no task is completed at initialization, the completion places of the preceding tasks are not marked and the corresponding starting transition is not enabled.

The production transitions, which are created in lines 8 and 9, implement the production process as a sequence of production steps, similar to Figure 1a, as illustrated in Figure 3. They move tokens from one production place to the next and are implemented as independent transitions.

The finishing transitions are created in lines 10 and 11 and have the form of a splitting, similar to Figure 1c. They are considered to be independent as well. They consume the token from the last production place, return a token to the idle place of the machine M that finishes the production, and store the semi-finished product in its output buffer place. Finally, they mark the task as completed by creating a token in its completion place.

The state space description of the Petri Net in the form of Equations (3) and (4) is created as introduced in Section 3.2. First, the initial state $x_0$ is obtained by assigning the initial markings of all places to it. The effects of the production and finishing transitions are represented in the matrix A. Starting from an identity matrix I, for every production transition, the diagonal entry corresponding to its input place is set to zero and a one is introduced in the same column in the row corresponding to its output place. The result is similar to the matrix of the sequence in Figure 1a in Example 1.

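For every finishing transition, the diagonal entry corresponding to its input place, i.e., the last production place, is set to zero, and ones are introduced in the same column in the rows corresponding to its output places, i.e., the idle place of the machine M, the buffer place, and the completion place of the task. The result is similar to the matrix of the splitting in Figure 1c in Example 1.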
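For a single chain as in Figure 3, the construction of A for production and finishing transitions can be sketched as below. The place ordering and the helper `chain_matrix` are assumptions; the simulation shows that after as many steps as there are production places, the token leaves the chain and marks the idle, buffer, and completion places, after which the state no longer changes.

```python
from typing import List

Matrix = List[List[int]]


def chain_matrix(n_steps: int) -> Matrix:
    """A for one production chain followed by a finishing transition.

    Assumed place order: production places 0..n_steps-1, then the idle place,
    the buffer place, and the completion place of the executing machine/task.
    """
    size = n_steps + 3
    idle, buffer, completion = n_steps, n_steps + 1, n_steps + 2
    a = [[1 if r == c else 0 for c in range(size)] for r in range(size)]
    for s in range(n_steps - 1):       # production transitions: sequence
        a[s][s], a[s + 1][s] = 0, 1
    last = n_steps - 1                 # finishing transition: splitting
    a[last][last] = 0
    for out in (idle, buffer, completion):
        a[out][last] = 1
    return a


def mat_vec(mat: Matrix, vec: List[int]) -> List[int]:
    return [sum(x * v for x, v in zip(row, vec)) for row in mat]


a = chain_matrix(3)
x = [1, 0, 0, 0, 0, 0]  # token just placed in the first production place by a starting transition
for k in range(4):
    x = mat_vec(a, x)
    print(k + 1, x)
# 1 [0, 1, 0, 0, 0, 0]
# 2 [0, 0, 1, 0, 0, 0]
# 3 [0, 0, 0, 1, 1, 1]
# 4 [0, 0, 0, 1, 1, 1]
```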

For every starting transition, a column is added to the matrix B through the matrices $B^+$ and $B^-$ as defined in Equation (1). The new columns in $B^+$ and $B^-$ are initialized with zeros. In $B^-$, a one is introduced in the rows corresponding to the input places, i.e., the idle place, the necessity place, and the starting or buffer place. The precedence relations among different tasks, which are considered through arcs from the completion places of other tasks, lead to further ones in the rows corresponding to possibly multiple completion places. In $B^+$, a one is introduced in the row corresponding to the first production place, and further ones are introduced in the rows corresponding to the completion places of the precedence relation in order to keep their marking constant. Note that directly creating the matrix B and omitting the matrices $B^+$ and $B^-$ would cancel out the effect of the completion places and make them obsolete. The matrix $B^-$ is additionally required to formulate the non-negativity constraint (3). The input vector u has one entry for every starting transition.
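The columns of one starting transition can be sketched as follows, using hypothetical place names. The example shows why $B^-$ must be kept separately: the arc to and from the completion place cancels out in $B$, so only $B^-$ in constraint (3) enforces the precedence relation.

```python
from typing import Dict, List, Tuple


def starting_columns(index: Dict[str, int], inputs: List[str], first_prod: str,
                     predecessors: List[str]) -> Tuple[List[int], List[int], List[int]]:
    """Columns of B^-, B^+ and B = B^+ - B^- for one starting transition.

    inputs: idle, necessity and starting-or-buffer place; predecessors: completion
    places of preceding tasks, which are consumed and immediately returned.
    """
    n = len(index)
    minus, plus = [0] * n, [0] * n
    for p in inputs + predecessors:
        minus[index[p]] = 1
    for p in [first_prod] + predecessors:
        plus[index[p]] = 1
    return minus, plus, [p - m for p, m in zip(plus, minus)]


def admissible(x: List[int], minus_col: List[int]) -> bool:
    """Constraint (3) for a single firing of this transition."""
    return all(xi >= mi for xi, mi in zip(x, minus_col))


# Hypothetical place names for starting task O2 of job J on machine M after task O1.
index = {"idle_M": 0, "nec_O2": 1, "buf_M_O1": 2, "prod1_M_O2": 3, "compl_O1": 4}
minus, plus, b = starting_columns(index, ["idle_M", "nec_O2", "buf_M_O1"],
                                  "prod1_M_O2", ["compl_O1"])
print(b)                                   # [-1, -1, -1, 1, 0]: completion place cancels out in B
print(admissible([1, 1, 1, 0, 0], minus))  # False: O1 not completed yet
print(admissible([1, 1, 1, 0, 1], minus))  # True
```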

The result of Algorithms 1 and 2 is the Petri Net and its state space description, given by the matrices A, B, and $B^-$ and the initial state $x_0$. The state space description is used for the scheduling scheme described in Section 4. As it was created by specific algorithms, it has some properties that will be exploited to prove important properties of the scheduling scheme. These properties are discussed in the next section.

3.4. Analysis and Discussion of the Discrete Time Petri Net Model

Before using the Petri Net model generated in Section 3.3 for the scheduling of the JMPMSF in Section 4, we will analyze its state space description (4) together with the non-negativity constraint (3). Some specific properties that will be exploited to prove important properties of the proposed scheduling scheme in Section 4 are given in the following lemma from our previous work [18].

Lemma 1 (Properties of the automatically generated Petri Net).

For the Petri Net generated with Algorithms 1 and 2, the following properties hold:

- (P1) A steady state is a pair $(x_s, u_s)$ of state and input with $x_s = A x_s + B u_s$. As there is no steady state with $u_s \neq 0$, we denote $x_s$ the steady state and omit the input u. The fact that there is no steady state with $u_s \neq 0$ means that the Petri Net has no T-invariant ([39] Definition 11.4).

- (P2) The following statements are equivalent: in the state x no production is taking place, i.e., no production place is marked ⇔ x is a steady state.

- (P3) The initial state $x_0$ generated in Algorithm 1 is a steady state and .

- (P4) All parts of a steady state are themselves steady states, i.e., if $x_s = x_s' + x_s''$ is a steady state with non-negative integer vectors $x_s'$ and $x_s''$, then $x_s'$ and $x_s''$ are steady states.

- (P5) The precondition $B^- u$ of every firing, which needs to be satisfied according to the non-negativity condition (3) in order to start a production process, forms a steady state for all inputs u, i.e., $A B^- u = B^- u$.

- (P6) A task which has been completed stays completed, i.e., once the completion places are marked, they stay marked.

- (P7) The independent evolution represented through the matrix A does not violate the non-negativity constraint (3).

- (P8) Every state x eventually enters a steady state, i.e., there is a $\bar{k} \in \mathbb{N}_0$ for which $A^{\bar{k}+1} x = A^{\bar{k}} x$.

- (P9) If an operation, i.e., a specific task of a job J, can be started at a steady state $x_s$, it can also be started in all steady states reachable from $x_s$ through a legal firing sequence respecting (3), or it has already been completed in the respective reachable steady state.
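Since steady states are characterized by $u = 0$ according to (P1), a state x is steady if and only if $x = A x$. The sketch below, with hypothetical helper names and a hand-written matrix A for two production places followed by a finishing transition into an idle place, checks this condition and counts the steps until a steady state is reached, in the spirit of (P2) and (P8).

```python
from typing import List, Optional

Matrix = List[List[int]]


def mat_vec(mat: Matrix, vec: List[int]) -> List[int]:
    return [sum(a * v for a, v in zip(row, vec)) for row in mat]


def is_steady(a: Matrix, x: List[int]) -> bool:
    """With u = 0 (cf. (P1)), x is a steady state iff x = A x."""
    return mat_vec(a, x) == x


def steps_until_steady(a: Matrix, x: List[int], max_steps: int = 1000) -> Optional[int]:
    """Smallest k_bar such that A^{k_bar} x is steady, cf. (P8); None if not found."""
    for k in range(max_steps + 1):
        if is_steady(a, x):
            return k
        x = mat_vec(a, x)
    return None


# Assumed places: [production step 1, production step 2, idle place of the machine]
a = [[0, 0, 0],
     [1, 0, 0],
     [0, 1, 1]]
print(steps_until_steady(a, [1, 0, 0]))  # 2
print(is_steady(a, [0, 0, 1]))           # True: no production place marked
print(is_steady(a, [0, 1, 1]))           # False: a production place is marked
```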

A proof of the Lemma 1 can be found in Appendix A.

Properties (P1)–(P6) define important features of steady states, which are not only relevant for the theoretical analysis but also required for a reasonable representation of a production system. If, in contrast to Property (P1), an action were necessary to keep the production system at rest, the system would tend to be unstable. If, in contrast to Property (P2), production were going on while the system is at rest, either the model would be flawed or the production would not yield any products, which would not be favorable. Initializing the system at rest, as stated in Property (P3), is a common way of modeling. If Property (P4) were not fulfilled, a steady state could exist only because two or more ongoing processes cancel each other out. This can be reasonable for some models, for example, if the states describe inventory levels. In our case, however, as the individual products are tracked, this would imply that one process is progressing on a product while another one is reversing its effect, which is not desired. Without Property (P5), starting a new production process could be possible only temporarily and become impossible after some time without any external cause. This might be caused by decaying products or tools, which are not considered in the presented setup. Property (P6) describes a production process without disintegrating products. As Property (P7) states, the created PN does not violate the non-negativity constraint (3) through the independent evolution represented by the matrix A. This means that the model by itself does not lead to any states without a reasonable real-world representation. In the described production system, no endless production processes can be started, which is expressed through Property (P8), and Property (P9) shows that there are no restrictions on the sequence flexibility (F3) of the described JMPMSF except the ones explicitly modeled through Restriction (R1).

The discrete time model introduced in Section 3.3 describes the evolution of the production process with respect to the sampling time. A smaller sampling time leads to an increased state dimension, which results from Equation (5) and the creation of the production places in line 10 of Algorithm 1. On the other hand, a larger sampling time might lead to a more significant overapproximation of the true production time by the discrete time model through the rounding in Equation (5). As a consequence, the sampling time should be chosen in accordance with the dynamics of the represented processes. If the manufacturing system has processes with vastly different time scales, it might be hard to find a good compromise. However, despite the increasing state dimension, the number of input variables, which has an even greater influence on the runtime of the optimization problem presented in Section 4, is independent of the sampling time. This alleviates the drawbacks of a small sampling time and a large state dimension.

In the creation of the discrete time Petri Net model, several structures and mechanisms are created to represent the characteristics of the job shop with multipurpose machines and sequence flexibility. In the sequel, we briefly highlight the most significant ones and discuss simplifications of the PN model for special cases of the JMPMSF, such as the ordinary job shop problem.

The possibility to execute the same task on different machines, i.e., Flexibility (F1), is introduced through the different production places and buffer places for the same task created in line 10 of Algorithm 1. For an ordinary job shop, the if-statement in line 9 is only true for a single machine, leading to a single chain of production places and a single buffer place for every task of every job.

The assumption of multipurpose machines in the JMPMSF, i.e., Flexibility (F2), is considered in the for-loop in line 8 of Algorithm 1 together with the if-statement in line 9, which allows restricting the capabilities of the machines. For single-purpose machines, these conditions amount to a search for the single machine that is able to execute the currently investigated task and could be implemented more efficiently.

The sequence flexibility in the JMPMSF, i.e., Flexibility (F3), is respected through the multitude of starting transitions created in the for-loop starting in line 12 of Algorithm 2. They allow changing the sequence in which the tasks of a job J are executed. If no precedence relation between two tasks of a job J is present, the PN contains both a starting transition that starts the second task after the first one and a starting transition that starts the first task after the second one. It is guaranteed that only one of them can fire, since each of them requires a token in the buffer place that is only marked after the execution of the respective other task. Without sequence flexibility, the if-statement in line 13 of Algorithm 2 would only be true for the single task that follows the currently considered task, leading to a single starting transition for each task and recovering the given production sequence. Furthermore, the necessity places and completion places created in line 7 of Algorithm 1 would be obsolete in this special case.

Remark 1.

The presented mechanism of creating a Petri Net model for a scheduling problem is not unique. With similar algorithms, further mechanisms and properties of a manufacturing system can be represented, as, for example, a limited buffer at every machine, production capacities of the machines larger than one, or maintenance tasks that are represented by transitions that are not related to any job.

Remark 2.

The automatically created Petri Net and the resulting mixed integer programming model are not the smallest ones possible for the given JMPMSF. For example, the size of the state vector could be reduced by merging all buffer places for the different tasks of the same job J that can be executed on the same machine M into one buffer place. By that, however, the information which task of job J was executed last is removed from the state of the PN.

4. Model Predictive Control

On the basis of the state space description of the automatically generated Petri Net, we now develop two similar scheduling schemes for the job shop with multipurpose machines and sequence flexibility (JMPMSF) based on Model Predictive Control (MPC). One of them was first described and analyzed in our previous work [18], and the other one is a more efficient adaptation thereof. Although they are specifically designed for JMPMSFs as defined in Section 2, they are exemplary for arbitrary scheduling problems formulated as a PN of the form introduced in Section 3.2.

Using systems and control theory to analyze and influence PNs is not uncommon in the literature [41,42], and MPC techniques are used as well [43,44,45]. In the context of MPC for PNs, usually either continuous or hybrid PNs are considered from the start, or fluidification of the Petri Net is used, meaning that the integer constraint is relaxed and the tokens are considered to be a fluid. Instead of an integer-valued state and firing count vector, a real-valued state and a flow vector are considered. This relaxation is used to avoid a high dimensional state space and to simplify the problem [43,45]. It is known that this adaptation changes the properties of the PN and that it is mostly suited for models with a large number of tokens [46,47]. In our particular case, it violates the notion of the production problem defined in Section 2. To illustrate this, consider a starting transition that fires by a non-integer amount smaller than one at some instant k. This would mean that a task is only partially started and therefore also only partially executed through the independent part of the PN dynamics (4). The result is a partially completed task. Although it is not completely equivalent, this is comparable to a violation of Restriction (R4), i.e., that a task must not be interrupted, as an interruption would also lead to a partially completed task. Whatever reason prohibits interrupting a task usually also prohibits starting it only partially.

An MPC formulation for timed discrete PNs was presented by Lefebvre [48,49]. It is tailored to a setup in which a PN needs to be transferred from an initial marking to a desired final marking in minimum time while avoiding critical markings in which uncontrollable transitions could fire. Due to this specific setup, it is not applicable to our case, and especially not to the more general type of scheduling objectives described in Section 2.2.

In the context of discrete manufacturing, MPC was applied by Zhang et al. [50] for the dynamic capacity adjustment of a production system with reconfigurable machine tools and job shop characteristics. They consider a continuous optimization problem, which relaxes the integer constraint in the sense of fluidification of the problem. The question of how the underlying integer assignment problem can be solved is left as an outlook. An MPC approach for the scheduling of reentrant manufacturing lines, motivated by semiconductor manufacturing, was considered by Vargas-Villamil and Rivera [51], who optimize the long-term behavior of a reentrant production system, modeled as a discrete-time flow model, with respect to a multi-objective cost function by means of linear programming. The result of the MPC is fed to a subordinate controller, which coordinates the short-term decisions and applies the integer valued control input to the plant. Cataldo et al. [52] use MPC for scheduling in a machine environment with parallel identical machines that are fed through transportation lines of different lengths. The goal is to maximize the production output while limiting the energy consumption. The resulting optimization problem is a MIP assigning speeds to the machines and binary inputs to the transportation system. For general job shop problems, no alternative MPC approach was found in the literature.

In contrast to scheduling and discrete manufacturing, where MPC is rarely used [52], it has a variety of applications in supply chain management and especially in the process industry, where continuous models naturally arise [53,54,55,56]. Supported by a large body of knowledge on various properties and different implementations of MPC schemes, it has become a well-established control method [57].

In the sequel, we start by formulating the first MPC problem according to [18], before we show that the problem is feasible in Section 4.2 and determine a criterion that guarantees the completion of a single operation of the production problem in Section 4.3. The insights from these discussions motivate the second MPC formulation in Section 4.4. The guarantees for the completion of the production problem for both MPC formulations are given in Section 4.5.

4.1. Formulation of the MPC Problem

As discussed in Section 2.2, the goal of the proposed production scheduling scheme is to optimize the profitability of the production system. This is done by assigning the different tasks to the most efficient machines and by choosing the production sequence that yields the highest possible reward. In order to quantify the profitability of the production system represented as a PN, we introduce a cost function c, called stage cost, which assigns a cost value $c(x, u)$ to every pair of state x and input u. It quantifies the cost caused by the current status of the production system, represented through the state x, and by the decisions taken through the input u. In general, the cost may depend arbitrarily on x and u and can also contain couplings between them. As the state of the system changes over time and new decisions are taken at every time step, the cost only represents the cost in the current time interval of one sampling period. In order to achieve a good performance with respect to a given cost function c, the system needs to be influenced such that the resulting cost summed up over time is minimal. In the sense of MPC, we formulate this goal as a finite horizon optimal control problem over the prediction horizon N, which is repeatedly solved in a rolling horizon fashion [14]. It is initialized at each time instant k with the most accurate knowledge about the system, which is the state $x_k$ that we assume to be known or measured. This assumption is justified by the concept of the digital twin introduced in Section 1. The optimization problem to determine the MPC control law at time instant k is formulated as

$$\begin{aligned}
\min_{u_{0|k}, \dots, u_{N-1|k}} \quad & \sum_{i=0}^{N-1} c(x_{i|k}, u_{i|k}) && \text{(6a)} \\
\text{s.t.} \quad & x_{0|k} = x_k, \\
& x_{i+1|k} = A x_{i|k} + B u_{i|k}, \quad i = 0, \dots, N-1, && \text{(6b)} \\
& x_{i|k} - B^- u_{i|k} \geq 0, \quad i = 0, \dots, N-1, && \text{(6c)} \\
& u_{i|k} \geq 0 \text{ integer}, \quad i = 0, \dots, N-1. && \text{(6d)}
\end{aligned}$$

During the prediction, the PN dynamics (4) and the non-negativity constraint (3) need to be respected, which is ensured by the constraints (6b) and (6c). The planned input vectors are only allowed to take non-negative integer values (6d), whereby the predicted states also remain vectors of non-negative integers and the tokens in the PN are moved in the intended direction. The optimization variables of the MPC problem are the inputs of the planned input trajectory. They allow choosing a new firing count vector at every future time instant and represent potential decisions for the production process. The minimizer of (6a) is the optimal input trajectory $u^*_{0|k}, \dots, u^*_{N-1|k}$, and the optimal predicted state trajectory $x^*_{0|k}, \dots, x^*_{N|k}$ results from applying these inputs starting from the initial state $x_k$. In Equation (6a), the sum of the predicted cost is only minimized over a finite prediction horizon. This is not only the common case in MPC but also reasonable under the assumption that the production system changes over time due to unknown or uncertain external influences. Due to such disturbances, predictions for the distant future are unlikely to be accurate. From the optimal input trajectory, only the first input $u^*_{0|k}$ is applied to the system before the optimization is repeated with the newly measured state at the next time instant. The resulting nominal closed loop dynamics can be expressed as

$$x_{k+1} = A x_k + B u^*_{0|k}, \qquad (7)$$

where a feedback mechanism is introduced through the repeated solution of the optimization problem (6). This inherent feedback mechanism is one of the key features of the presented approach compared with scheduling techniques from literature [22,23]. It is necessary as, due to external influences and non-modeled effects, the state , which was predicted for time starting from and applying , might be different from the true state . Therefore, for the real closed loop it might be .
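To make the receding horizon mechanism concrete, the following Python sketch solves a brute-force version of problem (6) on a hypothetical toy Petri Net with places s (waiting jobs), m (idle machine), p (production) and c (completed). As stand-ins for (4) and (3), it assumes the standard state equation x(k+1) = x(k) + (Post - Pre) u(k) and the enabling rule x(k) - Pre u(k) >= 0. The net, the stage cost, and the bound of one firing per transition and step are assumptions made for enumeration, not the model generated by Algorithms 1 and 2.

```python
from itertools import product

import numpy as np

# Hypothetical toy net (not the paper's model). Places (rows): s, m, p, c
# s: waiting jobs, m: idle machine, p: operation in production, c: completed
# Transitions (columns): t_start, t_finish
PRE = np.array([[1, 0],    # t_start consumes a waiting job
                [1, 0],    # t_start occupies the machine
                [0, 1],    # t_finish empties the production place
                [0, 0]])
POST = np.array([[0, 0],
                 [0, 1],   # t_finish releases the machine
                 [1, 0],   # t_start marks the production place
                 [0, 1]])  # t_finish marks the completion place
B = POST - PRE             # incidence matrix in x(k+1) = x(k) + B u(k)

# Assumed bound: each transition fires at most once per step (for enumeration)
INPUTS = [np.array(u) for u in product(range(2), repeat=2)]


def stage_cost(x, u):
    # Assumed stage cost: a waiting job costs 2, a running one 1, a finished one 0
    return 2 * x[0] + x[2]


def solve_mpc(x0, N):
    """Brute-force minimizer of the summed stage cost over horizon N."""
    best_cost, best_traj = None, None
    for traj in product(INPUTS, repeat=N):
        x, cost = x0, 0
        for u in traj:
            if np.any(x - PRE @ u < 0):  # firing would need missing tokens
                break
            cost += stage_cost(x, u)
            x = x + B @ u
        else:  # all inputs feasible; ties keep the earliest enumerated candidate
            if best_cost is None or cost < best_cost:
                best_cost, best_traj = cost, traj
    return best_traj


def closed_loop(x0, N, steps):
    x = np.array(x0)
    for _ in range(steps):
        u0 = solve_mpc(x, N)[0]  # apply only the first planned input
        x = x + B @ u0           # nominal case: plant equals prediction model
    return x
```

With `closed_loop([2, 1, 0, 0], N=1, steps=10)` the state stays at [2, 1, 0, 0]: the only cost term inside a horizon of one step is evaluated at the current state and does not depend on the decision, so the zero input (enumerated first) wins the tie. With N=2, both jobs are processed and the state ends at [0, 1, 0, 2].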

In order to prove that the proposed MPC scheme leads to a desirable solution of the scheduling problem defined in Section 2, we first need to establish some important properties. Repeatedly applying the optimal input determined by a finite horizon optimal control problem such as (6) does not necessarily lead to optimal closed loop performance [58], which in our case means completing all jobs of the production problem in the best possible way. In fact, quite the opposite can occur: if the MPC problem (6) is not formulated carefully and does not fulfill certain conditions, the MPC might not even start a single task. Therefore, the MPC problem is analyzed in the following sections, and we show that the desired properties, i.e., always having a feasible solution and reaching a state in which the production problem is completed, are guaranteed if specific conditions are satisfied. In the following section, we first show that a solution to the optimization problem (6) always exists, before we provide conditions on the cost function and the prediction horizon N that guarantee that applying the MPC to the manufacturing system leads to the completion of every task in every job and therefore to the completion of the scheduling problem.

4.2. Feasibility of the MPC Problem

As a first important property of the MPC problem formulated in Section 4.1, it needs to be guaranteed that the optimization problem (6) always has a feasible solution when it is applied in closed loop (7), which is called recursive feasibility in the context of MPC [14]. Proving recursive feasibility of an MPC problem requires prior assumptions. In our case, the standing assumption is that the PN was generated with Algorithms 1 and 2 for a manufacturing system as described in Section 2. Under this assumption, we first show that the MPC optimization problem (6) is feasible for every possible state of the PN, before analyzing the closed loop system (7) specifically.

Lemma 2 (Feasibility).

Proof of Lemma 2.

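The zero input trajectory, which does not fire any controlled transition, satisfies (6d), and it satisfies constraint (6c) at every prediction step due to Property (P7). Constraint (6b) is satisfied by the state trajectory obtained from the open loop system dynamics (4). Therefore, the zero input trajectory is a feasible candidate solution of the MPC problem (6) for every possible state of the PN. For a PN generated with Algorithms 1 and 2, this holds in particular for the initial state of the production system due to Property (P3). □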

Lemma 3 (Recursive feasibility).

Proof of Lemma 3.

From Lemma 2 and Property (P3), it follows that the zero input trajectory is a feasible candidate solution at the initial time instant.

That the nominal closed loop system (7), with the first optimal input from problem (6), satisfies condition (3) follows directly from the fact that, in the nominal case, the true successor state coincides with the predicted one, and since the predicted state satisfies (6c).

For every feasible input trajectory at time instant k, the shifted input trajectory, obtained by dropping the first input and appending a zero input as the final predicted input, is a feasible input trajectory of problem (6) at time instant k+1 with the initial state x(k+1) from the nominal closed loop system (7). The feasibility of the remaining inputs follows directly from the feasibility of the original trajectory at time instant k. The appended final input is also feasible for the following reasons. First, it naturally satisfies constraint (6d). Second, the predicted state at the end of the shifted horizon is a vector of natural numbers due to the feasibility of the original trajectory, and it satisfies constraint (6b) according to the open loop system dynamics (4). Finally, for the appended zero input, constraint (6c) is satisfied due to Property (P7). □
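The candidate trajectories used in the proofs of Lemmas 2 and 3 can be written down explicitly. The following sketch uses a hypothetical four-place net whose Pre/Post matrices stand in for the dynamics (4) and whose enabling rule stands in for (3). It checks feasibility of an input trajectory, builds the shifted candidate by dropping the applied input and appending a zero input, and confirms that the all-zero trajectory is feasible. In this toy net the zero input is trivially admissible, whereas in the paper this relies on Property (P7).

```python
import numpy as np

# Hypothetical toy net. Places (rows): s, m, p, c ; transitions (columns): t_start, t_finish
PRE = np.array([[1, 0], [1, 0], [0, 1], [0, 0]])
POST = np.array([[0, 0], [0, 1], [1, 0], [0, 1]])
B = POST - PRE


def feasible(x0, traj):
    """Check integer, nonnegative inputs whose firings only use available tokens."""
    x = np.asarray(x0)
    for u in traj:
        u = np.asarray(u)
        if not np.issubdtype(u.dtype, np.integer) or np.any(u < 0):
            return False  # analogue of (6d)
        if np.any(x - PRE @ u < 0):
            return False  # analogue of the enabling / non-negativity condition
        x = x + B @ u     # analogue of the dynamics
    return True


def shifted_candidate(traj):
    """Drop the applied first input and append a zero input that starts nothing."""
    tail = [np.asarray(u) for u in traj[1:]]
    return tail + [np.zeros_like(np.asarray(traj[0]))]
```

For example, the trajectory [(1, 0), (0, 1)] is feasible from [2, 1, 0, 0]; applying its first input leads to [1, 0, 1, 0], from which the shifted candidate [(0, 1), (0, 0)] is again feasible.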

The input trajectory used in the proof of Lemma 2 simply does not start any task of the scheduling problem. This is certainly not desired, but in the problem described in Section 2 there is no external driver prohibiting this solution. Likewise, the candidate trajectory for the next time step used in the proof of Lemma 3 extends an existing feasible input trajectory by a new predicted input that does not start any new task in the last step of the prediction.

As explained in Section 2.2, there is a production-related objective that requires taking action due to its economic motivation. This objective needs to be formulated as a cost function depending on the state x and the input u. In the sequel, we investigate properties of such a cost function, which we assume to be known and given, that allow the prediction horizon N to be chosen such that the production problem controlled by the MPC is guaranteed to be completed. In doing so, we exploit the hierarchical nature of the production problem, which is composed of jobs that are themselves composed of tasks. We first provide a sufficient condition for the completion of a single operation, i.e., a single task of a single job. The arguments in the proof of the completion of an operation motivate the formulation of a more efficient MPC scheme. Finally, we provide conditions for the completion of a job and of the whole production problem for both MPC schemes.

4.3. Completion of an Operation

Our goal is to achieve optimal performance of the system in closed loop by applying MPC. For a flexible manufacturing system (FMS), the first and most important goal is to produce the requested products. In the formulation of the production problem in Section 2, this means completing all tasks of all jobs. In the model generated in Section 3, this corresponds to convergence to a state in which all completion places are marked. In this section, we first guarantee the completion of a single operation if clearly specified conditions are fulfilled.

A second goal in an FMS is to minimize production cost or to maximize profit, which can be conveniently formulated with a cost function. In the MPC, this cost function is used directly as the objective function (6a) of the optimization problem. As the cost function is only optimized over a limited prediction horizon N, the reward of performing actions in the manufacturing system must become visible when the cost function is evaluated within this limited time frame. This results in a condition on the cost function and, subsequently, in a condition on the prediction horizon N. In the sequel, we show how the prediction horizon N must be chosen, based on the given manufacturing system as described in Section 2 and a given cost function, in order to guarantee completion of a specific operation in the production problem with the MPC. To this end, we first give a general condition under which the MPC completes any operation.

Lemma 4 (Starting an operation).

Let a Petri Net of a flexible manufacturing system in its state space form (3), (4) be generated with Algorithms 1 and 2. If, for a steady state x(k), all optimal input trajectories of the MPC problem (6) initialized at x(k) have a nonzero first input, then at least one operation O will be started at time instant k in closed loop (7); this operation O will eventually be completed, and it will remain completed.

Proof of Lemma 4.

In Algorithm 2, the only transitions that are added to the set of controlled transitions are starting transitions. Therefore, every nonzero optimal first input fires at least one starting transition and thereby initiates the execution of at least one operation by marking at least one production place. The first input is applied to the closed loop system (7) at time instant k, so the production is started not only in the prediction but also in closed loop. According to Property (P2), the resulting state is not a steady state, as a production place is marked. Due to Property (P8), another steady state is eventually reached. According to Property (P2), a steady state is only reached when no production place is marked anymore. As the only independent transitions that do not mark another production place are finishing transitions, a finishing transition eventually fires. As every finishing transition created in lines 10 and 11 of Algorithm 2 marks a completion place, a completion place is eventually marked. Due to Property (P6), once completion places are marked, they stay marked, and hence the completed operations stay completed. □
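The structural facts used in this proof can be checked mechanically on an incidence description of a net. The sketch below uses a hypothetical four-place net (waiting job s, idle machine m, production place p, completion place c) given by Pre and Post matrices; the function names are ours, and the checks only mirror, rather than reproduce, Properties (P2) and (P6) and the construction in lines 10 and 11 of Algorithm 2.

```python
import numpy as np

# Hypothetical toy net. Places (rows): s, m, p, c ; transitions (columns): t_start, t_finish
PRE = np.array([[1, 0], [1, 0], [0, 1], [0, 0]])
POST = np.array([[0, 0], [0, 1], [1, 0], [0, 1]])
PRODUCTION, COMPLETION = [2], [3]   # place indices
STARTING, FINISHING = [0], [1]      # transition indices


def starting_marks_production(post, starting, production):
    """Each starting transition puts a token on some production place."""
    return all(post[production, j].any() for j in starting)


def finishing_marks_completion(post, finishing, completion):
    """Each finishing transition puts a token on some completion place."""
    return all(post[completion, j].any() for j in finishing)


def completion_places_persist(pre, completion):
    """No transition consumes from a completion place, so its marking never decreases."""
    return all(not pre[i].any() for i in completion)
```

If the last check failed, a completed operation could be "un-completed" by a later firing, and the final step of the argument above would no longer apply.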

The condition in Lemma 4 can be exploited to guarantee the completion of a given production problem as long as it is finite and feasible, which we state in the following assumptions. As we generally allow the production problem to change, for example, through new jobs, we assume that it is finite and feasible at the time we investigate it. For a production problem that can always be extended, trying to argue that it will be completed at a certain point in time is futile. Therefore, we only analyze the properties of the production problem as it is at a given point in time.

Assumption 1 (Finite production system).

The given production problem formulated as in Section 2 is finite, meaning that it has a finite number of possible tasks, a finite number of jobs, and a finite number of machines at a given time.

We state the central assumption that the production problem is feasible, meaning that all operations can be executed by the given machine pool, on the level of the initial production problem formulated in Section 2 as well as on the level of the Petri Net and its state space description described in Section 3.

Assumption 2 (Feasibility of the production problem [18]).

All jobs can be fulfilled by the given production system. This means that for every job and every task there exists at least one machine that is able to execute this task.

In terms of the Petri Net, there exists at least one state in which every completion place is marked and which is reachable from the initial state.

In the state space, there exists at least one state in which the entries corresponding to all completion places are positive and which is reachable from the initial state, i.e., there exists a legal input trajectory leading from the initial state to this state along which the non-negativity constraint (3) is respected at every time step.
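Both levels of Assumption 2 lend themselves to a direct check on small instances. In the sketch below, the dictionary encoding of jobs and machine capabilities is hypothetical, and the Petri Net is a toy net given by Pre and Post matrices. Reachability is tested by breadth-first search over markings, firing one transition at a time; under the standard enabling rule x >= Pre u, this reaches the same markings as firing count vectors. The search is bounded by `max_states`, since reachability sets may be large or infinite.

```python
from collections import deque

import numpy as np

# Hypothetical toy net. Places (rows): s, m, p, c ; transitions (columns): t_start, t_finish
TOY_PRE = np.array([[1, 0], [1, 0], [0, 1], [0, 0]])
TOY_POST = np.array([[0, 0], [0, 1], [1, 0], [0, 1]])


def capability_feasible(jobs, machines):
    """Every task of every job has at least one capable machine.

    jobs: {job_name: [task, ...]}, machines: {machine_name: {task, ...}}
    """
    return all(
        any(task in skills for skills in machines.values())
        for tasks in jobs.values()
        for task in tasks
    )


def completion_reachable(x0, pre, post, completion, max_states=10_000):
    """BFS for a reachable marking in which all completion places are marked."""
    incidence = post - pre
    start = tuple(int(v) for v in x0)
    seen, queue = {start}, deque([start])
    while queue and len(seen) <= max_states:
        x = np.array(queue.popleft())
        if all(x[i] >= 1 for i in completion):
            return True
        for j in range(pre.shape[1]):
            if np.all(x >= pre[:, j]):  # transition j is enabled
                nxt = tuple(int(v) for v in x + incidence[:, j])
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
    return False  # not found, or search budget exhausted
```

With one machine, `completion_reachable([2, 1, 0, 0], TOY_PRE, TOY_POST, [3])` succeeds, whereas removing the machine token (`[2, 0, 0, 0]`) makes the toy problem infeasible.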

Lemma 5 (Completion of the production problem).

Let a Petri Net of a flexible manufacturing system in its state space form (3), (4) be generated with Algorithms 1 and 2 and fulfill Assumptions 1 and 2. If there is only one steady state for which not all optimal input trajectories of the MPC problem (6) have a nonzero first input, then the production problem will be completed by the MPC, and this steady state is the final state of the production system.

Proof of Lemma 5.

This steady state is the only steady state for which the preconditions of Lemma 4 are not fulfilled. For all other steady states, all optimal input trajectories of the MPC problem (6) have a nonzero first input. Therefore, by Lemma 4, at least one operation O is started in every steady state except this one. As the production problem is feasible due to Assumption 2, all operations can be executed, and since it is finite due to Assumption 1, only a finite number of operations can be started, so eventually all operations have been started. Due to Property (P8), another steady state will then be reached. As the final state is the only steady state for which the preconditions of Lemma 4 are not fulfilled, it is the only steady state in which the system can remain. □

The condition in Lemma 5 that only a single final state exists is more restrictive than necessary. In general, even for a fixed production problem, there is a set of final states in which no further production is possible and all jobs are completed. Convergence to this set can be shown with similar arguments.

Having stated very general conditions under which an MPC executes operations and ultimately completes the production problem, we now formulate more specific conditions on the prediction horizon N and the cost function that guarantee the completion of an operation O. As proposed in [18], we use the cost function in the MPC problem (6) to determine a sufficiently long prediction horizon that guarantees the completion of the operation O for all prediction horizons that are at least as long. In order to prove the completion of every operation, we first define two sets to classify the steady states in which an operation O can be started and the steady states in which it has just been finished, respectively.

Definition 2 ([18]).

For every operation O of the production problem, the corresponding set is defined as the set of steady states in which the operation O can be started directly and which are reachable from the initial state of the production system through a legal input trajectory.

Definition 3 ([18]).

For every operation O of the production problem, the corresponding set is defined as the set of steady states reached right after the operation O was completed, starting from a state in the set of Definition 2 without executing any further task.
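For small nets, the two sets of Definitions 2 and 3 can be enumerated by exhaustive search. The following sketch uses a hypothetical net with two operations O1 and O2 that share a single machine and, as an assumption, treats a marking as steady exactly when no production place is marked (our reading of Property (P2)). The net, the names, and the single-transition firing semantics are illustrative and are not the construction of Algorithms 1 and 2.

```python
from collections import deque

import numpy as np

# Hypothetical net: places s1, s2, m, p1, p2, c1, c2 (rows)
# transitions start1, fin1, start2, fin2 (columns)
PRE = np.array([
    [1, 0, 0, 0],  # s1
    [0, 0, 1, 0],  # s2
    [1, 0, 1, 0],  # m: both starting transitions need the machine
    [0, 1, 0, 0],  # p1
    [0, 0, 0, 1],  # p2
    [0, 0, 0, 0],  # c1
    [0, 0, 0, 0],  # c2
])
POST = np.array([
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 1, 0, 1],  # finishing transitions release the machine
    [1, 0, 0, 0],
    [0, 0, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
])
B = POST - PRE
PRODUCTION = [3, 4]
OPS = {"O1": (0, 1), "O2": (2, 3)}  # operation -> (start, finish) transition index


def is_steady(x):
    # Assumed reading of Property (P2): steady iff no production place is marked
    return all(x[i] == 0 for i in PRODUCTION)


def enabled(x, j):
    return all(x[i] >= PRE[i, j] for i in range(len(x)))


def fire(x, j):
    return tuple(int(v) for v in np.array(x) + B[:, j])


def reachable(x0):
    seen, queue = {x0}, deque([x0])
    while queue:
        x = queue.popleft()
        for j in range(PRE.shape[1]):
            if enabled(x, j):
                nxt = fire(x, j)
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
    return seen


def start_set(x0, op):
    """Analogue of Definition 2: reachable steady states where op can start directly."""
    start, _ = OPS[op]
    return {x for x in reachable(x0) if is_steady(x) and enabled(x, start)}


def finish_set(x0, op):
    """Analogue of Definition 3: steady states right after op, no other task executed."""
    start, finish = OPS[op]
    return {fire(fire(x, start), finish) for x in start_set(x0, op)}
```

From the initial marking (1, 1, 1, 0, 0, 0, 0), O1 can start either immediately or after O2 has been completed, so both sets contain two states each.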

Theorem 1 (Completion of an operation [18]).

Let a Petri Net of a flexible manufacturing system in its state space form (3), (4) be generated with Algorithms 1 and 2 and fulfill Assumptions 1 and 2. If, for an operation O and a cost function c, it holds that for every state in which O can be started there exists a state reachable from it with

then there exists a shortest sufficient prediction horizon such that, for every prediction horizon N at least as long, the operation O will eventually be executed when starting from any state in which O can be started and applying the optimal solution of the MPC problem (6) in closed loop (7).
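The existence of a shortest sufficient prediction horizon can be illustrated numerically on a toy example. The sketch below assumes a hypothetical single-operation net with places s (waiting), p (production) and c (completed), an assumed stage cost that charges 1 for a waiting or running job and 0 for a completed one, and a brute-force solution of problem (6) in which ties are resolved in favor of doing nothing. The tie rule mimics the requirement of Lemma 4 that all optimal trajectories must start an operation. Such a search over N is a check for a single instance, not a substitute for the condition of Theorem 1.

```python
from itertools import product

import numpy as np

# Hypothetical single-operation net. Places (rows): s, p, c ; transitions: start, finish
PRE = np.array([[1, 0], [0, 1], [0, 0]])
POST = np.array([[0, 0], [1, 0], [0, 1]])
B = POST - PRE
INPUTS = [np.array(u) for u in product(range(2), repeat=2)]  # zero input listed first


def stage_cost(x, u):
    # Assumed cost: waiting and running both cost 1, reward only on completion
    return x[0] + x[1]


def mpc_first_input(x0, N):
    best = None
    for traj in product(INPUTS, repeat=N):
        x, cost = x0, 0
        for u in traj:
            if np.any(x - PRE @ u < 0):  # not enabled
                break
            cost += stage_cost(x, u)
            x = x + B @ u
        else:
            # strict "<" keeps the earliest candidate, so doing nothing wins ties
            if best is None or cost < best[0]:
                best = (cost, traj[0])
    return best[1]


def completes(N, steps=20):
    x = np.array([1, 0, 0])
    for _ in range(steps):
        x = x + B @ mpc_first_input(x, N)
    return bool(x[2] == 1)


def shortest_horizon(n_max=5):
    """Smallest N such that every horizon from N up to n_max completes the operation."""
    return next(
        (N for N in range(1, n_max + 1)
         if all(completes(M) for M in range(N, n_max + 1))),
        None,
    )
```

Because the reward only appears once the operation is finished, which takes one step to start and one to finish, horizons N = 1 and N = 2 leave the operation untouched, while every N from 3 to 5 completes it; `shortest_horizon()` therefore returns 3.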

Proof of Theorem 1.

As shown in Lemma 4, an optimal input trajectory with a nonzero first predicted input is required for some operation to be started. To prove that the investigated operation O is started, we need to show that the closed loop system always enters a state in which the optimal input trajectory has, as its first planned input, an element of the set of all input vectors u that start the execution of operation O.

Part I: