Short-Term Load Forecasting Based on Deep Learning Bidirectional LSTM Neural Network

Abstract

1. Introduction

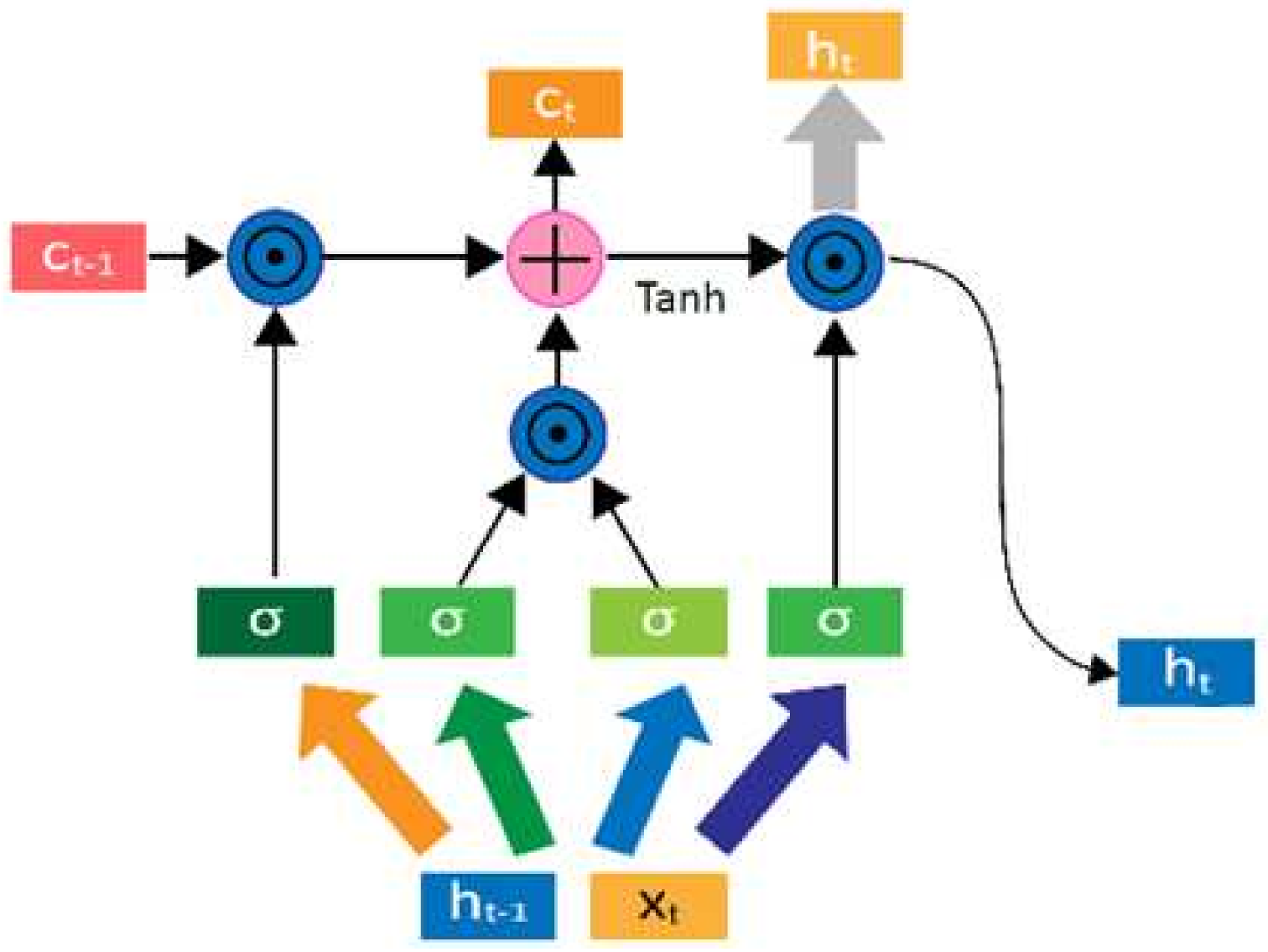

2. LSTM Neural Network

2.1. LSTM Neural Network

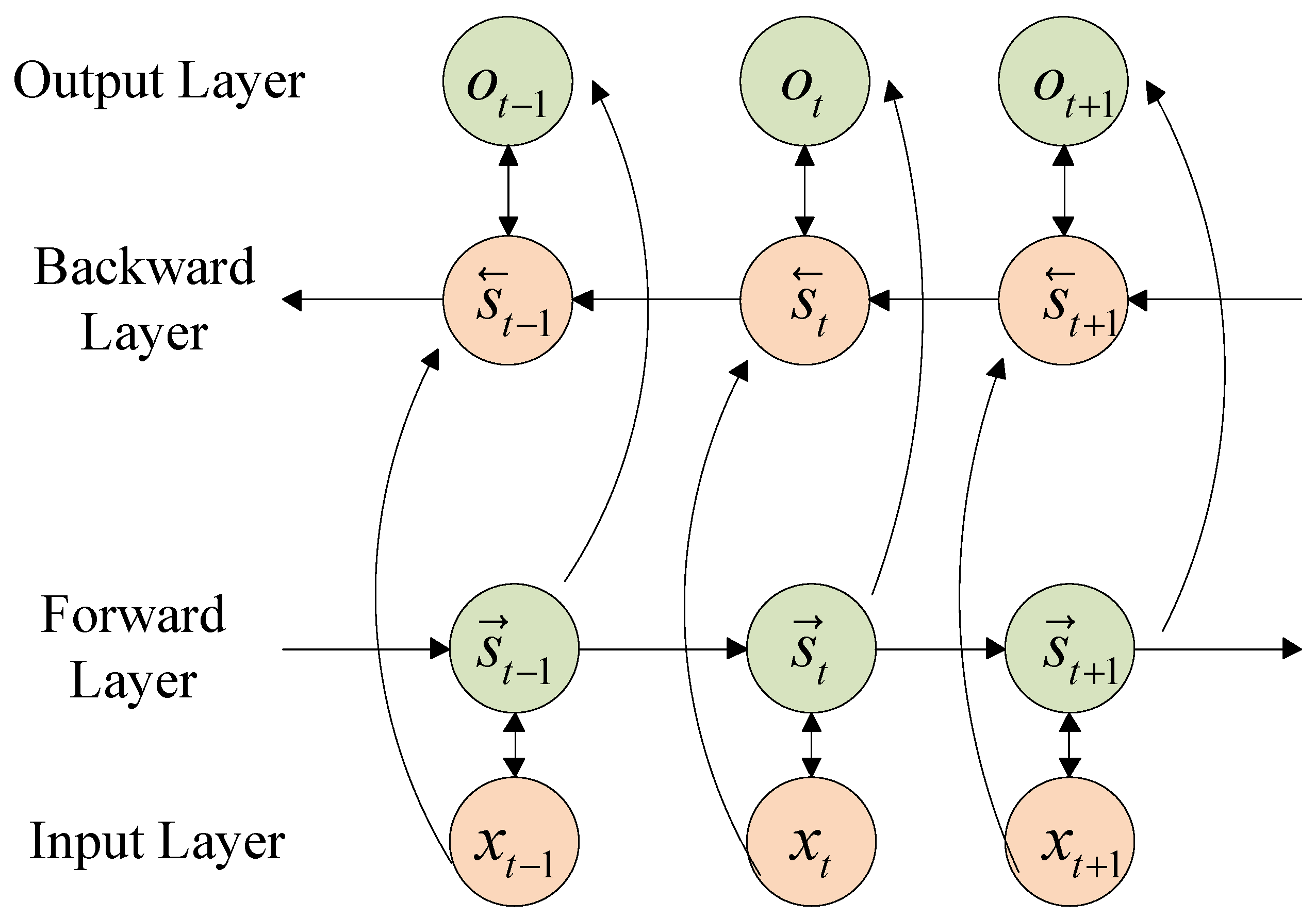
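
The gate computations of an LSTM cell can be sketched in NumPy as below. This is the common textbook formulation (input, forget and output gates plus a tanh candidate state) and is assumed here rather than copied from the paper. The names `lstm_step` and `lstm_last_state`, and the order in which the four gate blocks are stacked in `W`, `U` and `b`, are conventions chosen only for this sketch.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One forward step of a standard LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked (by assumption) as input gate, forget gate, candidate, output gate.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b      # pre-activations of all four blocks
    i = sigmoid(z[0:H])               # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])           # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])       # candidate cell content
    o = sigmoid(z[3 * H:4 * H])       # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g          # updated cell state
    h_t = o * np.tanh(c_t)            # updated hidden state
    return h_t, c_t

def lstm_last_state(window, W, U, b):
    """Feed a (T, D) window step by step and return the final hidden state."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    for x_t in window:
        h, c = lstm_step(x_t, h, c, W, U, b)
    return h
```

Running the cell over a window of past loads and keeping the last `h_t` gives a fixed-size summary of that window, which a dense layer can then map to a forecast.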

2.2. Bidirectional LSTM Neural Network

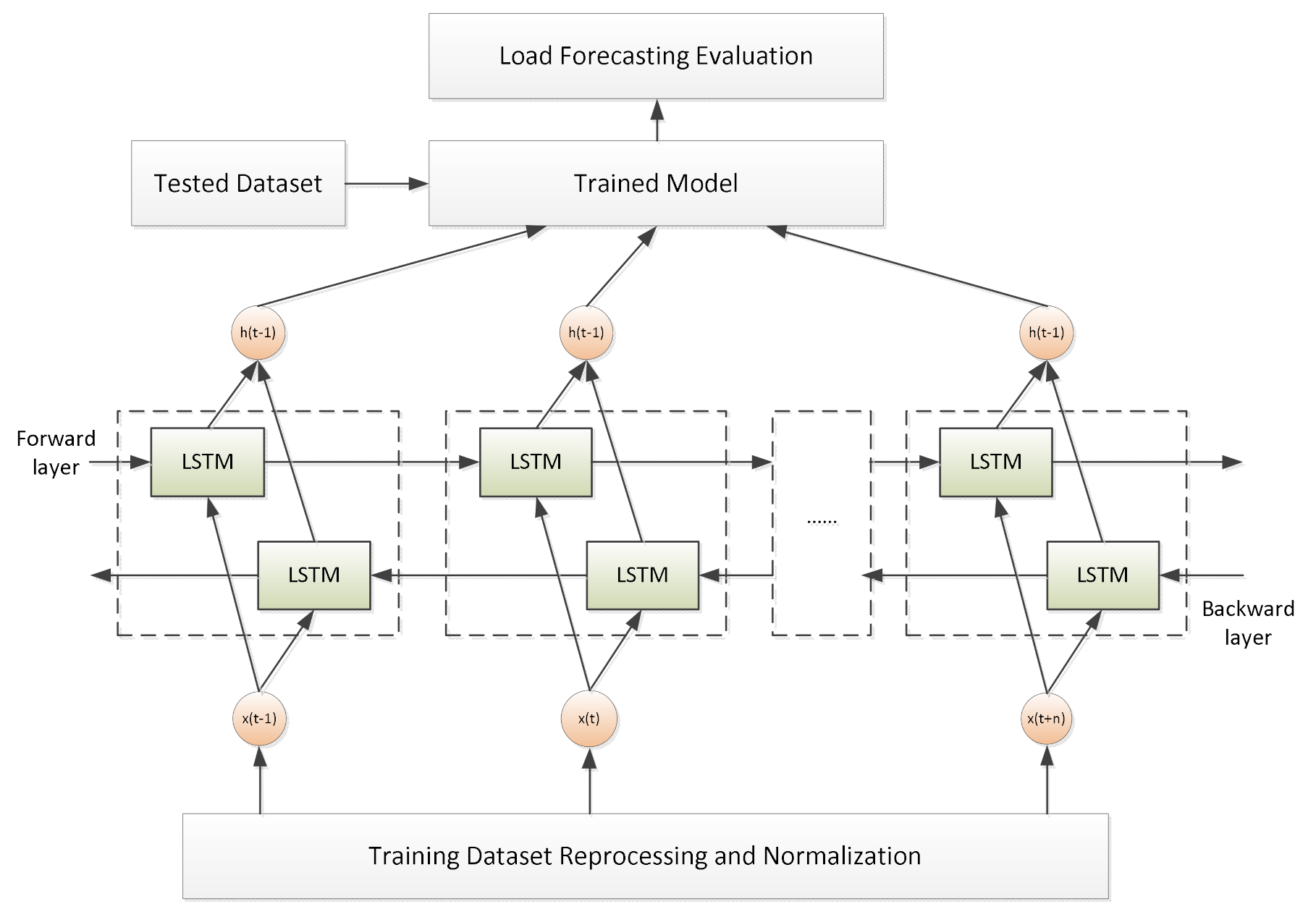
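
A bidirectional LSTM runs one LSTM over the input window from first to last step and a second, independently parameterised LSTM from last to first, then aligns and merges their hidden states per time step. The sketch below merges by concatenation, which is one common choice (summation or averaging are alternatives); which merge the authors use is not stated in this section. `lstm_sequence` and `bilstm_sequence` are hypothetical helper names.

```python
import numpy as np

def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_sequence(xs, W, U, b):
    """Run a standard LSTM over xs of shape (T, D); return hidden states (T, H).
    Gate blocks are assumed stacked as input, forget, candidate, output."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    out = []
    for x_t in xs:
        z = W @ x_t + U @ h + b
        i, f, o = _sigmoid(z[:H]), _sigmoid(z[H:2 * H]), _sigmoid(z[3 * H:])
        c = f * c + i * np.tanh(z[2 * H:3 * H])
        h = o * np.tanh(c)
        out.append(h)
    return np.stack(out)

def bilstm_sequence(xs, fwd_params, bwd_params):
    """Two LSTMs read the window in opposite directions; their hidden
    states are concatenated at every time step."""
    h_fwd = lstm_sequence(xs, *fwd_params)              # reads x_1 ... x_T
    h_bwd = lstm_sequence(xs[::-1], *bwd_params)[::-1]  # reads x_T ... x_1, re-aligned to t
    return np.concatenate([h_fwd, h_bwd], axis=1)       # shape (T, 2H)
```

At each step t the output therefore combines information from readings before t (forward half) and after t (backward half) within the input window.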

3. Multi-Layer Stacked Bidirectional LSTM Neural Network for Short-Term Load Forecasting

3.1. Multi-Layer Stacked Bidirectional LSTM Neural Network
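
Stacking means that each Bi-LSTM layer passes its full (T, 2H) output sequence to the next layer, and only the top layer feeds the output head. The NumPy sketch below assumes a 24-step input window, hidden size 8, three layers, a single dense output unit and small random initial weights; all of these, and the helper names, are illustrative assumptions, and training (for example backpropagation through time with an optimiser) is omitted.

```python
import numpy as np

def _sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_sequence(xs, W, U, b):
    """Standard LSTM over xs of shape (T, D); returns hidden states (T, H).
    Gate blocks are assumed stacked as input, forget, candidate, output."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    out = []
    for x_t in xs:
        z = W @ x_t + U @ h + b
        i, f, o = _sigmoid(z[:H]), _sigmoid(z[H:2 * H]), _sigmoid(z[3 * H:])
        c = f * c + i * np.tanh(z[2 * H:3 * H])
        h = o * np.tanh(c)
        out.append(h)
    return np.stack(out)

def init_lstm(rng, in_dim, H, scale=0.1):
    """Small random weights and zero biases for one LSTM direction (illustrative)."""
    return (rng.normal(0.0, scale, (4 * H, in_dim)),
            rng.normal(0.0, scale, (4 * H, H)),
            np.zeros(4 * H))

def build_stack(rng, D, H, n_layers):
    """Layer 1 reads the D input features; later layers read the 2H-wide output below."""
    layers, in_dim = [], D
    for _ in range(n_layers):
        layers.append((init_lstm(rng, in_dim, H), init_lstm(rng, in_dim, H)))
        in_dim = 2 * H
    return layers

def stacked_bilstm(xs, layers):
    """Feed the full (T, 2H) sequence of each Bi-LSTM layer into the next one."""
    h = np.asarray(xs, float)
    for fwd, bwd in layers:
        h_f = lstm_sequence(h, *fwd)
        h_b = lstm_sequence(h[::-1], *bwd)[::-1]  # backward pass, re-aligned to time order
        h = np.concatenate([h_f, h_b], axis=1)
    return h

def forecast(xs, layers, w_out, b_out):
    """Dense head: forward state after the last step plus backward state after
    the first step (each direction has then read the whole window)."""
    top = stacked_bilstm(xs, layers)
    H = top.shape[1] // 2
    summary = np.concatenate([top[-1, :H], top[0, H:]])
    return float(summary @ w_out + b_out)
```

The number of layers is a free parameter here (`n_layers`); the layer-count experiment in Section 4.2 is what fixes it for the proposed model.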

3.2. Multi-Layer Stacked Bidirectional LSTM Based Load Forecasting
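
To train such a network, the load series must be cut into supervised samples. A common approach, assumed here because the exact input construction is not given in this excerpt, is a sliding window: each sample holds the previous `n_in` readings and its target is the reading `horizon` steps after the last input. `make_windows`, `n_in` and `horizon` are hypothetical names.

```python
import numpy as np

def make_windows(series, n_in, horizon=1):
    """Slice a 1-D load series into supervised (X, y) pairs.

    X[k] = series[k : k + n_in]            (past n_in readings)
    y[k] = series[k + n_in + horizon - 1]  (reading `horizon` steps ahead)
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - n_in - horizon + 1
    if n <= 0:
        raise ValueError("series too short for the chosen window")
    X = np.stack([series[k:k + n_in] for k in range(n)])
    y = series[n_in + horizon - 1 : n_in + horizon - 1 + n]
    return X[..., None], y  # add a feature axis: (samples, n_in, 1)
```

The trailing feature axis matches the (time steps, features) input shape that recurrent layers expect; extra inputs such as temperature would simply widen that axis.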

3.3. Evaluation Index

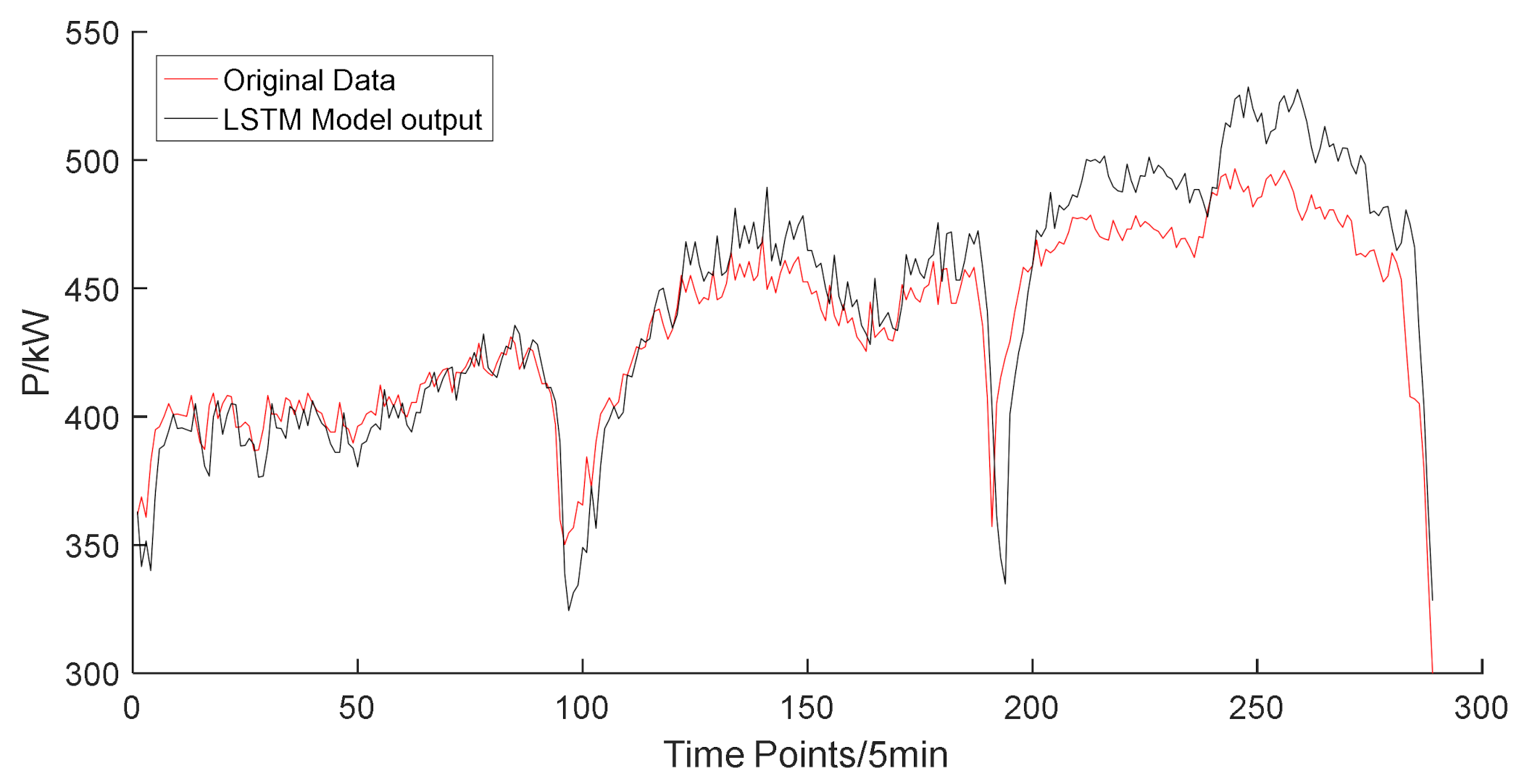
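
The result tables report MAPE, RMSE and MAE. Assuming the usual definitions of these three indices (the paper's own formulas may differ in detail), they can be computed as follows, with `y_true` holding measured loads and `y_pred` the forecasts.

```python
import numpy as np

def mape(y_true, y_pred):
    """Mean absolute percentage error in percent; assumes no zero measured loads."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(100.0 * np.mean(np.abs((y_true - y_pred) / y_true)))

def rmse(y_true, y_pred):
    """Root mean squared error, in the units of the load."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def mae(y_true, y_pred):
    """Mean absolute error, in the units of the load."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))

measured = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 400.0]
# mape ≈ 5.0 %, rmse ≈ 8.16, mae ≈ 6.67 for this toy example
```

MAPE is scale-free and suits comparisons across load levels, while RMSE penalises large individual misses more heavily than MAE does.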

4. Simulation and Experimental Analysis

4.1. Dataset for Load Forecasting

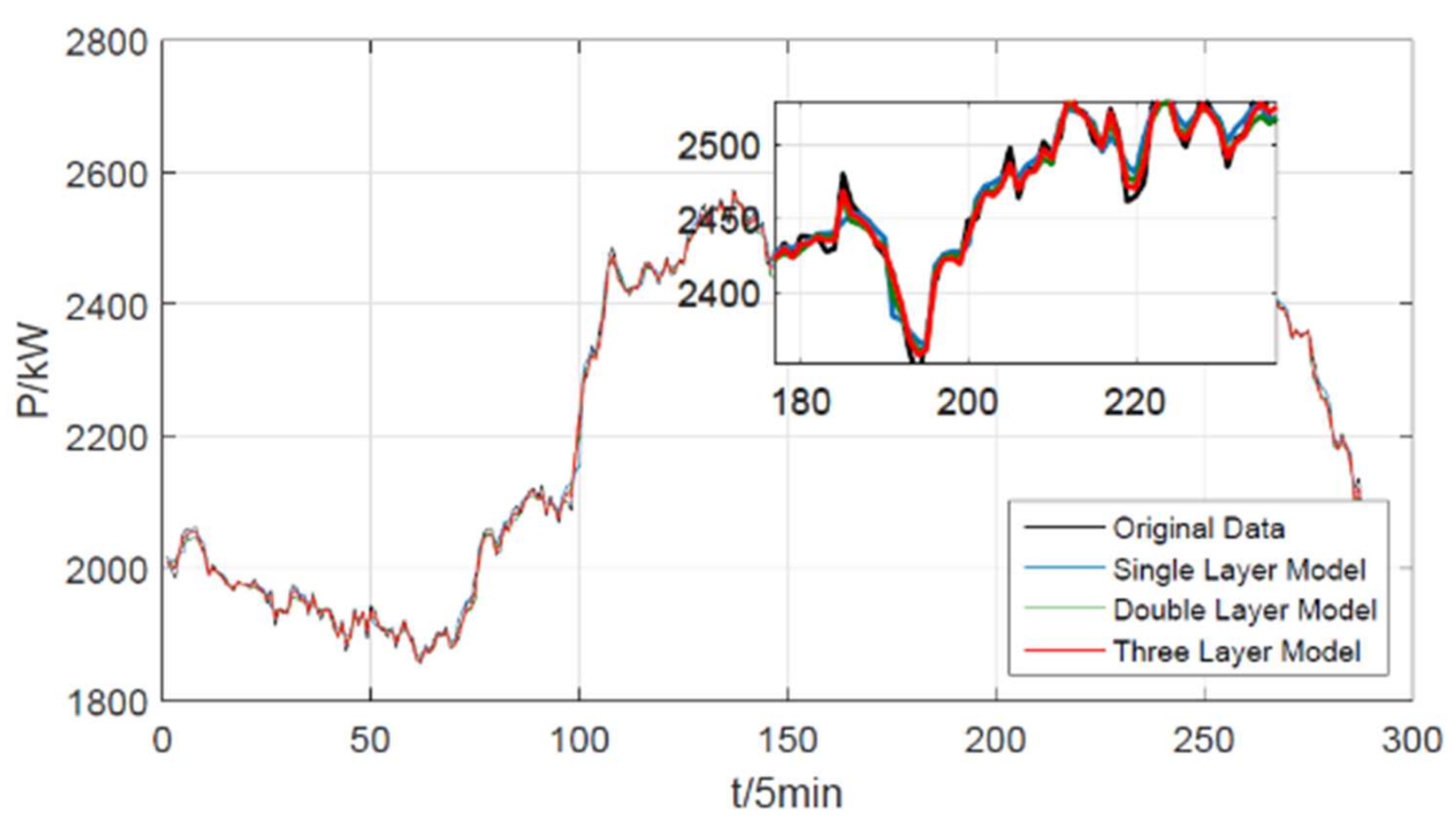
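
Before windowing, load data are commonly split chronologically and min-max scaled to [0, 1], with the scaling statistics taken from the training part only so that no test information leaks into training. This preprocessing, the 80/20 default split and the class name `MinMaxScaler1D` are assumptions of this sketch, not details stated in this section; scikit-learn's `MinMaxScaler` offers the same transform for 2-D arrays.

```python
import numpy as np

class MinMaxScaler1D:
    """Scale a load series to [0, 1] using statistics from the training split only."""

    def fit(self, train):
        train = np.asarray(train, float)
        self.lo, self.hi = train.min(), train.max()
        if self.hi == self.lo:
            raise ValueError("constant series cannot be min-max scaled")
        return self

    def transform(self, x):
        return (np.asarray(x, float) - self.lo) / (self.hi - self.lo)

    def inverse_transform(self, z):
        # Map network outputs back to load units before computing MAPE/RMSE/MAE
        return np.asarray(z, float) * (self.hi - self.lo) + self.lo

def chronological_split(series, train_frac=0.8):
    """Split without shuffling, so the test period lies after the training period."""
    cut = int(len(series) * train_frac)
    return series[:cut], series[cut:]
```

Forecasts should be passed through `inverse_transform` before evaluation, so that RMSE and MAE are reported in load units rather than scaled units.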

4.2. Determination of the Neural Network Structure

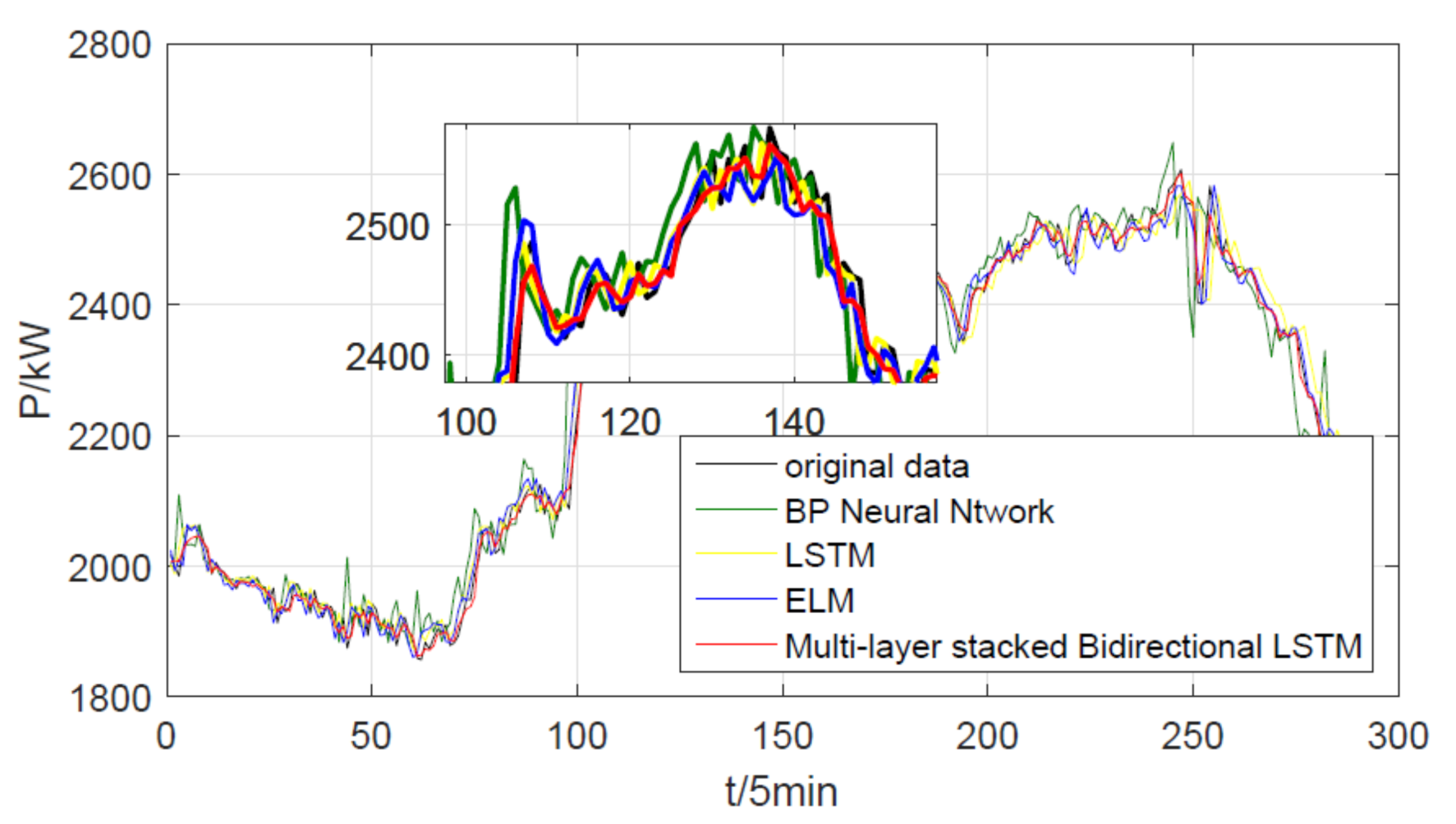

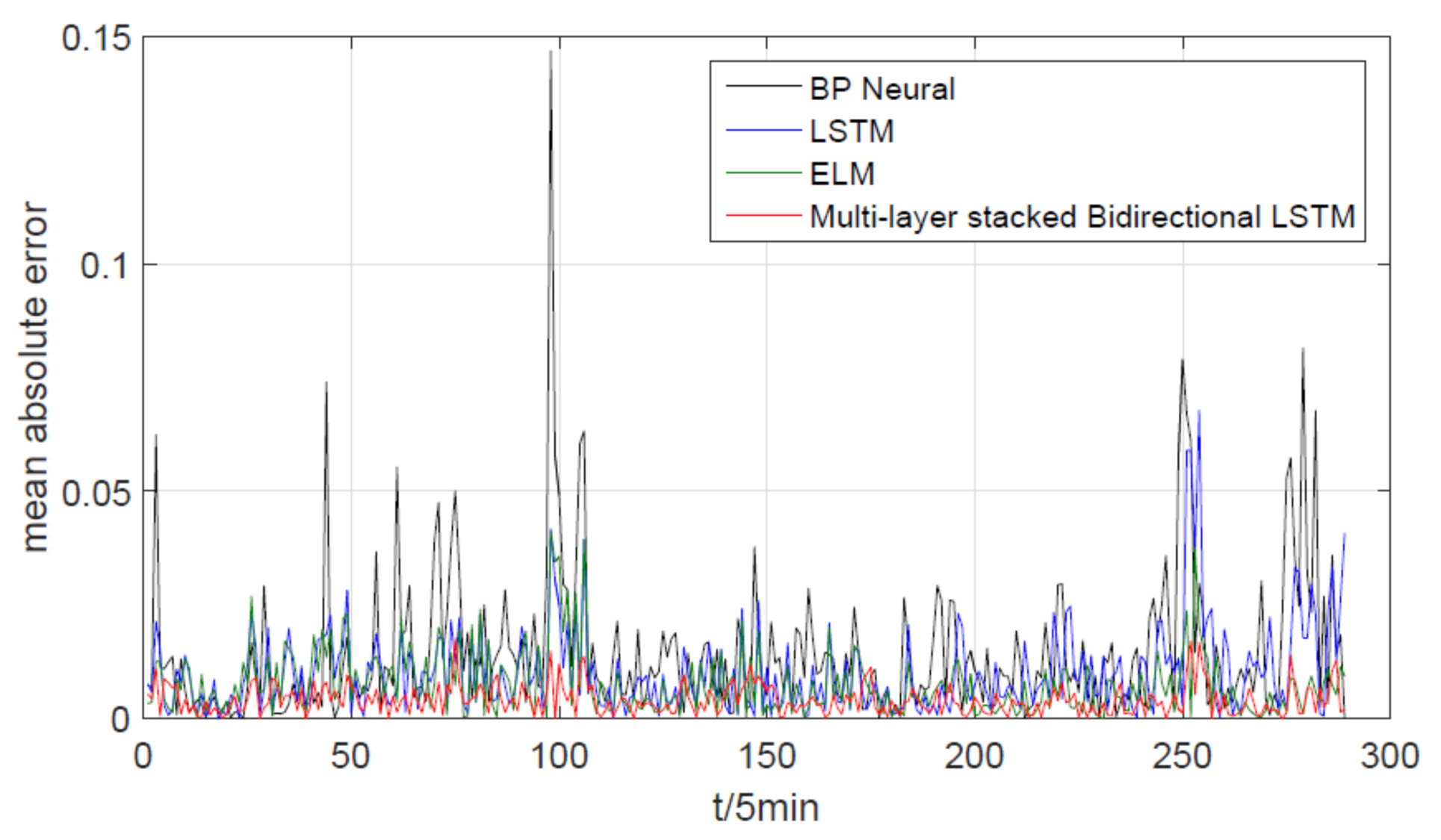

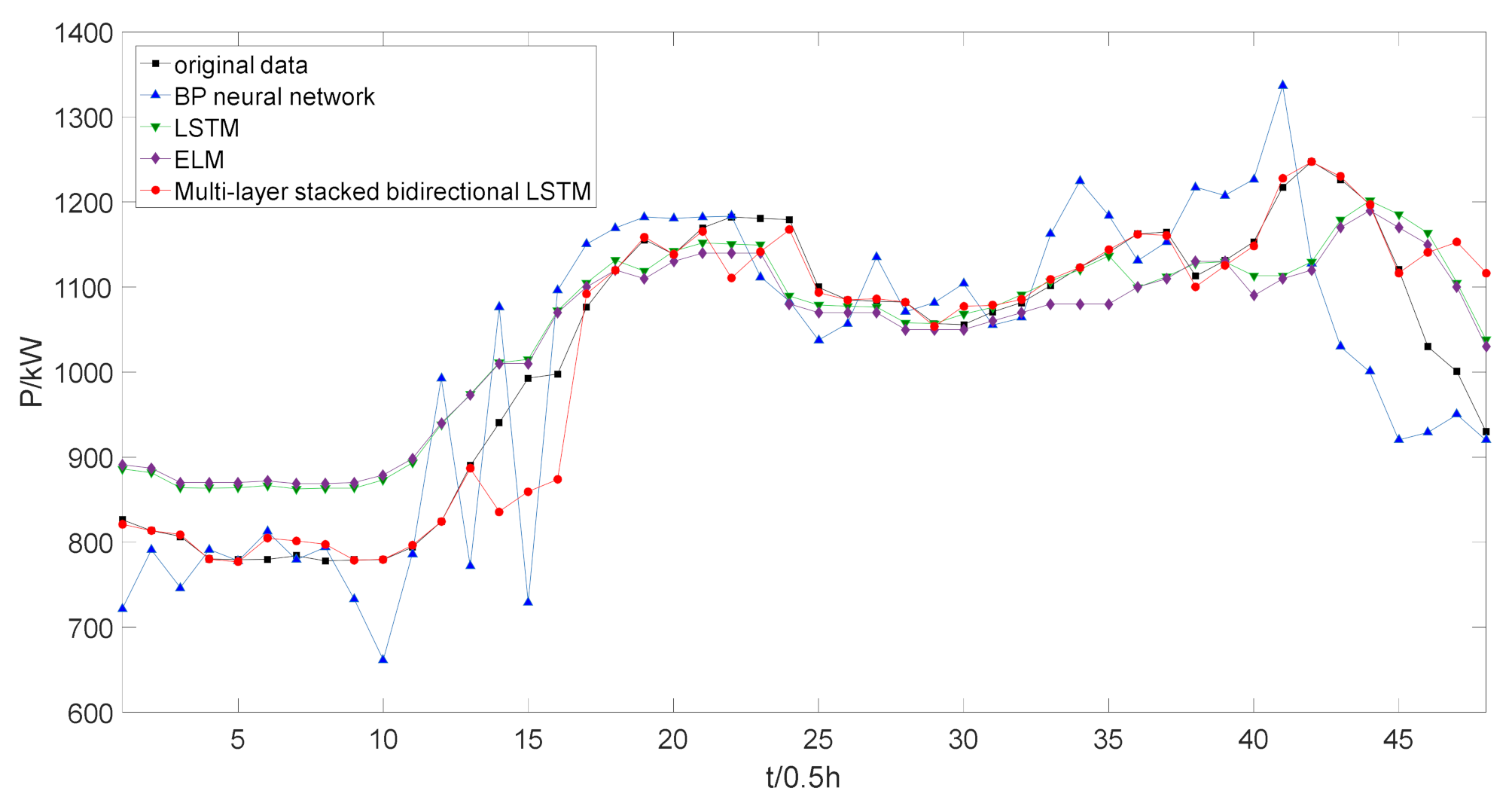

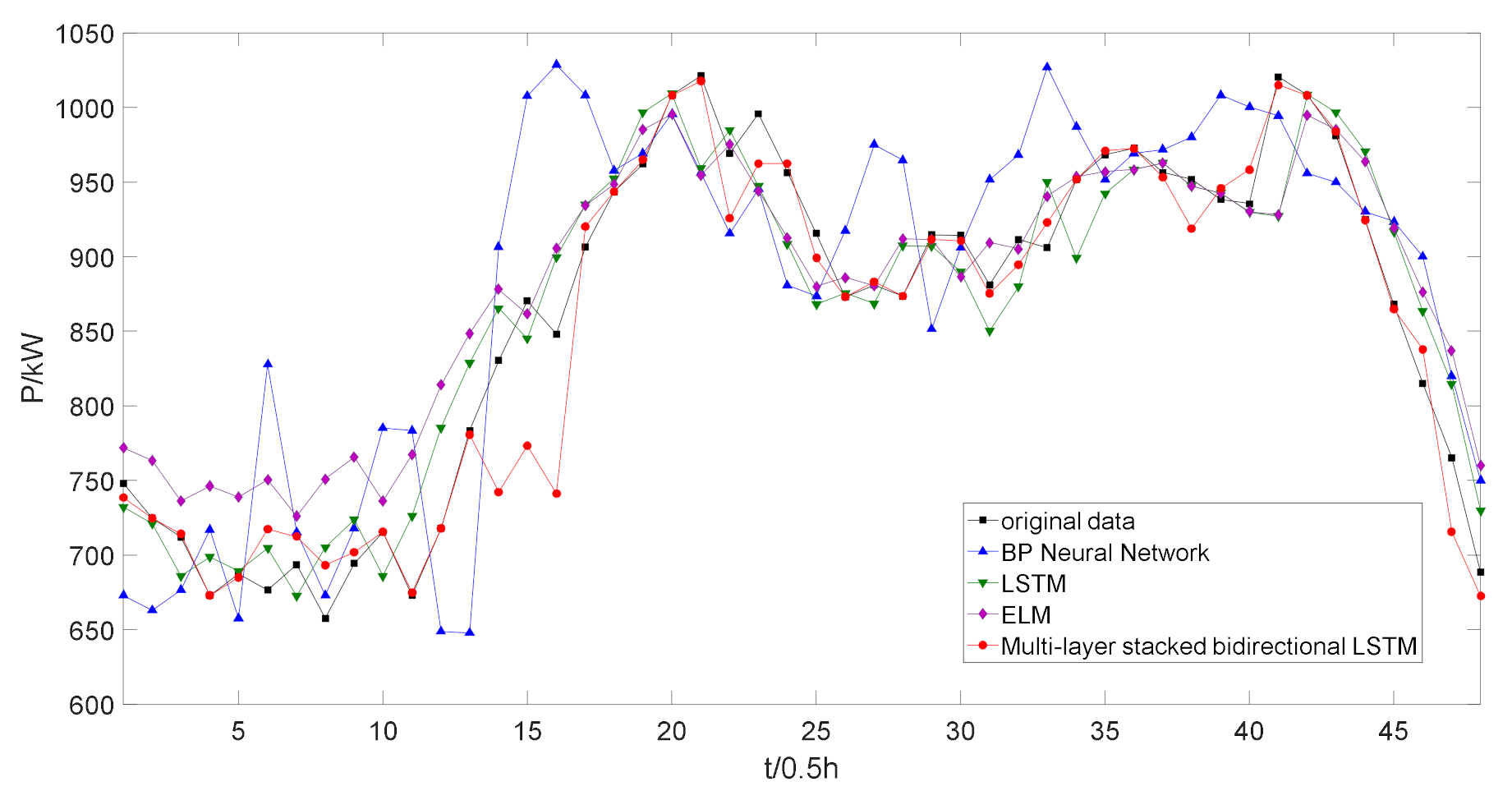

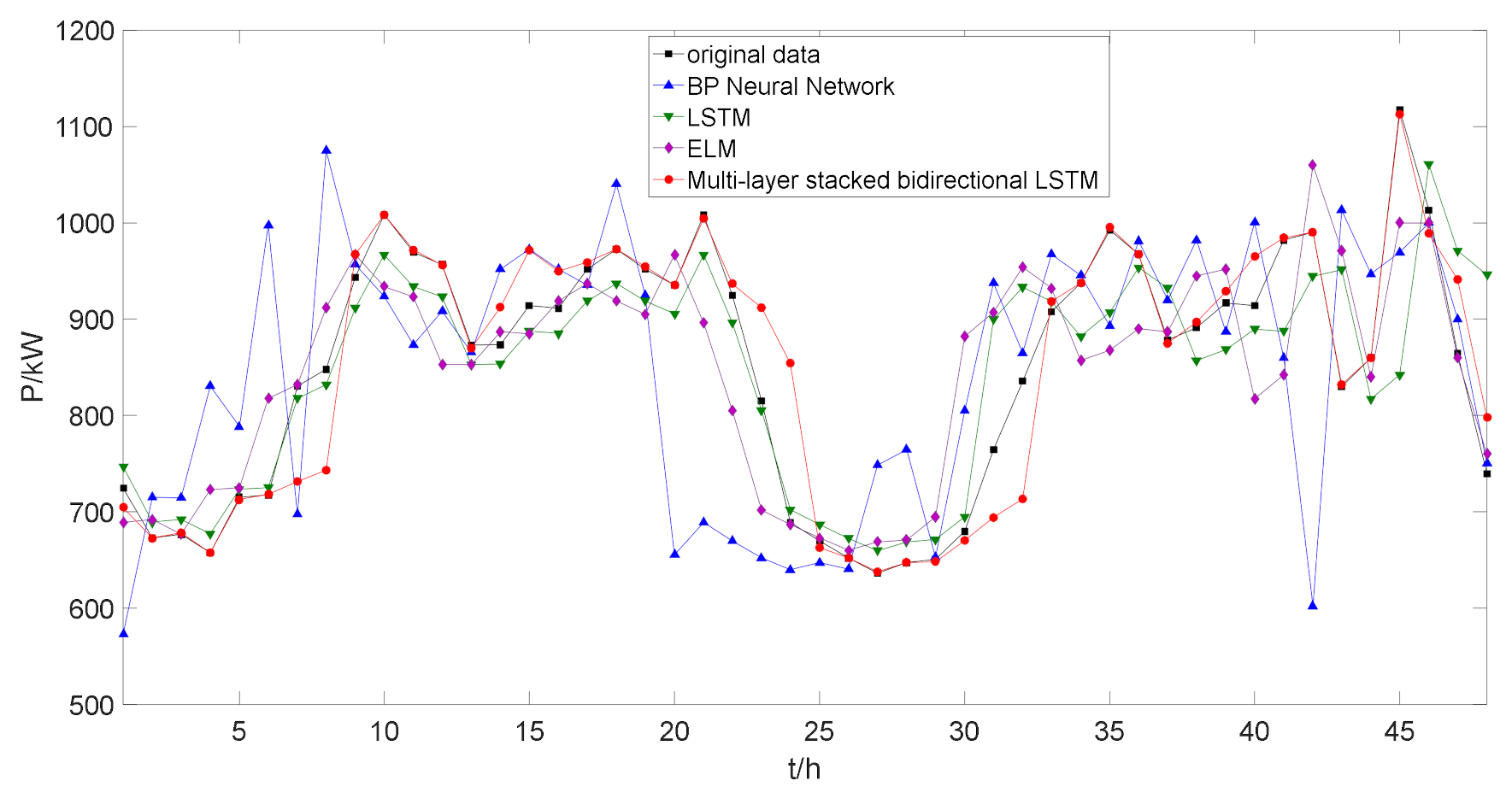

4.3. Method Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Quilumba, F.L.; Lee, W.J.; Huang, H.; Wang, D.Y.; Szabados, R.L. Using smart meter data to improve the accuracy of intraday load forecasting considering customer behavior similarities. IEEE Trans. Smart Grid 2014, 6, 911–918.

- Xu, D.; Wu, Q.; Zhou, B.; Li, C.; Bai, L.; Huang, S. Distributed multi-energy operation of coupled electricity, heating and natural gas networks. IEEE Trans. Sustain. Energy 2019, 11, 2457–2469.

- Al-Hamadi, H.M.; Soliman, S.A. Short-term electric load forecasting based on Kalman filtering algorithm with moving window weather and load model. Electr. Power Syst. Res. 2004, 68, 47–59.

- Ceperic, E.; Ceperic, V.; Baric, A. A strategy for short-term load forecasting by support vector regression machines. IEEE Trans. Power Syst. 2013, 28, 4356–4364.

- Kaur, A.; Nonnenmacher, L.; Coimbra, C.F. Net load forecasting for high renewable energy penetration grids. Energy 2016, 114, 1073–1084.

- Cecati, C.; Kolbusz, J.; Rozycki, P.; Siano, P.; Wilamowski, B.M. A novel RBF training algorithm for short-term electric load forecasting and comparative studies. IEEE Trans. Ind. Electron. 2015, 62, 6519–6529.

- Teo, T.T.; Logenthiran, T.; Woo, W.L. Forecasting of photovoltaic power using extreme learning machine. In Proceedings of the IEEE Innovative Smart Grid Technologies-Asia (ISGT ASIA), Bangkok, Thailand, 3–6 November 2015; pp. 1–6.

- Teo, T.T.; Logenthiran, T.; Woo, W.L.; Abidi, K. Forecasting of photovoltaic power using regularized ensemble extreme learning machine. In Proceedings of the IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 455–458.

- Huang, C.J.; Kuo, P.H. Multiple-input deep convolutional neural network model for short-term photovoltaic power forecasting. IEEE Access 2019, 7, 74822–74834.

- Zang, H.; Cheng, L.; Ding, T.; Cheung, K.W.; Liang, Z.; Wei, Z.; Sun, G. Hybrid method for short-term photovoltaic power forecasting based on deep convolutional neural network. IET Gener. Transm. Distrib. 2018, 12, 4557–4567.

- Neo, Y.Q.; Teo, T.T.; Woo, W.L.; Logenthiran, T.; Sharma, A. Forecasting of photovoltaic power using deep belief network. In Proceedings of the 2017 IEEE Region 10 Conference (TENCON), Penang, Malaysia, 5–8 November 2017.

- Kong, X.; Li, C.; Zheng, F.; Wang, C. Improved deep belief network for short-term load forecasting considering demand-side management. IEEE Trans. Power Syst. 2019, 35, 1531–1538.

- Dedinec, A.; Filiposka, S.; Kocarev, L. Deep belief network based electricity load forecasting: An analysis of Macedonian case. Energy 2016, 115, 1688–1700.

- Chen, K.J.; Chen, K.L.; Wang, Q.; He, Z.; Hu, J.; He, J. Short-term load forecasting with deep residual network. IEEE Trans. Smart Grid 2018, 10, 3943–3953.

- Ergen, T.; Kozat, S.S. Efficient online learning algorithms based on LSTM neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 3772–3783.

- Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A search space odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232.

- Feng, Y.; Zhang, T.; Sah, A.P.; Han, L.; Zhang, Z. Using appearance to predict pedestrian trajectories through disparity-guided attention and convolutional LSTM. IEEE Trans. Veh. Technol. 2021, 70, 7480–7494.

- Ergen, T.; Kozat, S.S. Online training of LSTM networks in distributed systems for variable length data sequences. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 5159–5165.

- Yang, Y.; Zhou, J.; Ai, J.; Bin, Y.; Hanjalic, A.; Shen, H.T.; Ji, Y. Video captioning by adversarial LSTM. IEEE Trans. Image Process. 2018, 27, 5600–5612.

- Gao, L.; Guo, Z.; Zhang, H.; Xu, X.; Shen, H.T. Video captioning with attention-based LSTM and semantic consistency. IEEE Trans. Multimedia 2017, 19, 2045–2055.

- Sano, A.; Chen, W.; Martinez, D.L.; Taylor, S.; Picard, R.W. Multimodal ambulatory sleep detection using LSTM recurrent neural networks. IEEE J. Biomed. Health Inform. 2018, 23, 1607–1617.

- Sahin, S.O.; Kozat, S.S. Nonuniformly sampled data processing using LSTM networks. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 1452–1462.

- Mohan, N.; Soman, K.; Kumar, S.S. A data-driven strategy for short-term electric load forecasting using dynamic mode decomposition model. Appl. Energy 2018, 232, 229–244.

- Tang, X.; Dai, Y.; Wang, T.; Chen, Y. Short-term power load forecasting based on multi-layer bidirectional recurrent neural network. IET Gener. Transm. Distrib. 2019, 13, 3847–3854.

- Wang, Y.; Shen, Y.; Mao, S.; Chen, X.; Zou, H. LASSO and LSTM Integrated Temporal Model for Short-Term Solar Intensity Forecasting. IEEE Internet Things J. 2018, 6, 2933–2944.

- Ospina, J.; Newaz, A.; Faruque, M.O. Forecasting of PV plant output using hybrid wavelet-based LSTM-DNN structure model. IET Renew. Power Gener. 2019, 13, 1087–1095.

- Yu, Y.; Cao, J.; Zhu, J. An LSTM short-term solar irradiance forecasting under complicated weather conditions. IEEE Access 2019, 7, 145651–145666.

- Hong, Y.Y.; Martinez, J.J.F.; Fajardo, A.C. Day-ahead solar irradiation forecasting utilizing gramian angular field and convolutional long short-term memory. IEEE Access 2020, 8, 18741–18753.

- Zhou, B.; Ma, X.; Luo, Y.; Yang, D. Wind power prediction based on LSTM networks and nonparametric kernel density estimation. IEEE Access 2019, 7, 165279–165292.

- Abdel-Nasser, M.; Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput. Appl. 2019, 31, 2727–2740.

- Quek, Y.T.; Woo, W.L.; Logenthiran, T. Load disaggregation using one-directional convolutional stacked long short-term memory recurrent neural network. IEEE Syst. J. 2019, 14, 1395–1404.

| Gate | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Input Gate | 0.023 | 0.020 | 0.120 | 0.127 | 0.033 | 0.975 | 0.044 | 0.037 | 0.579 | 0.035 |
| | 0.044 | 0.049 | 0.017 | 0.012 | 0.034 | 0.025 | 0.041 | 0.001 | 0.043 | 0.037 |
| | 0.027 | 0.025 | 0.135 | 0.128 | 0.070 | 0.975 | 0.049 | 0.043 | 0.540 | 0.007 |
| | 0.025 | 0.047 | 0.042 | 0.029 | 0.034 | 0.025 | 0.048 | 0.043 | 0.005 | 0.040 |
| Forget Gate | 0.024 | 0.006 | 0.030 | 0.008 | 0.032 | 0.015 | 0.050 | 0.016 | 0.024 | 0.005 |
| | 0.012 | 0.015 | 0.013 | 0.046 | 0.013 | 0.045 | 0.041 | 0.048 | 0.050 | 0.019 |
| | 0.049 | 0.044 | 0.046 | 0.005 | 0.000 | 0.023 | 0.015 | 0.046 | 0.019 | 0.037 |
| | 0.007 | 0.014 | 0.030 | 0.007 | 0.043 | 0.016 | 0.044 | 0.025 | 0.047 | 0.037 |
| Output Gate | 0.030 | 0.002 | 0.043 | 0.038 | 0.005 | −0.033 | 0.049 | 0.001 | 0.012 | 0.014 |
| | 0.006 | 0.024 | 0.012 | 0.001 | 0.046 | 0.043 | 0.049 | 0.036 | 0.039 | 0.014 |
| | 0.008 | 0.048 | 0.029 | 0.037 | 0.038 | −0.044 | 0.023 | 0.012 | 0.002 | 0.035 |
| | 0.021 | 0.045 | 0.002 | 0.017 | 0.006 | 0.048 | 0.019 | 0.020 | 0.023 | 0.012 |

| Bi-LSTM Layers | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| MAPE (%) | 0.51 | 0.465 | 0.405 | 0.41 | 0.41 |
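
Reading the depth off the table above amounts to taking the layer count with the lowest reported MAPE. The snippet below does this with the tabulated values and also lists the change in MAPE contributed by each additional layer; the variable names are illustrative.

```python
# MAPE (%) for each number of stacked Bi-LSTM layers, from the table above
mape_by_layers = {1: 0.51, 2: 0.465, 3: 0.405, 4: 0.41, 5: 0.41}

# Depth with the lowest reported MAPE
best_layers = min(mape_by_layers, key=mape_by_layers.get)
print(best_layers)  # 3

# Reduction in MAPE (percentage points) obtained by adding the n-th layer
gains = {n: round(mape_by_layers[n - 1] - mape_by_layers[n], 3) for n in range(2, 6)}
print(gains)  # {2: 0.045, 3: 0.06, 4: -0.005, 5: 0.0}
```

The second and third layers each lower the error, while a fourth or fifth layer adds parameters without further improvement, which is consistent with the proposed method's overall MAPE of 0.405% in the comparison below.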

| Prediction Model | BP | LSTM | ELM | Proposed Method |
|---|---|---|---|---|
| MAPE (%) | 1.485 | 1.03 | 0.77 | 0.405 |
| RMSE | 2.95 | 1.921 | 1.369 | 0.706 |
| MAE | 33.564 | 23.236 | 17.07 | 9.341 |
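
The relative improvement of the proposed method over each baseline follows directly from the table above as (baseline − proposed) / baseline. The short calculation below applies that formula to all three indices; it uses only the tabulated values.

```python
# Overall errors from the comparison table above
baselines = {
    "BP":   {"MAPE": 1.485, "RMSE": 2.95,  "MAE": 33.564},
    "LSTM": {"MAPE": 1.03,  "RMSE": 1.921, "MAE": 23.236},
    "ELM":  {"MAPE": 0.77,  "RMSE": 1.369, "MAE": 17.07},
}
proposed = {"MAPE": 0.405, "RMSE": 0.706, "MAE": 9.341}

def relative_reduction(baseline: float, ours: float) -> float:
    """Percentage by which `ours` lowers the baseline error."""
    return 100.0 * (baseline - ours) / baseline

reductions = {
    metric: {name: round(relative_reduction(errs[metric], proposed[metric]), 1)
             for name, errs in baselines.items()}
    for metric in ("MAPE", "RMSE", "MAE")
}
print(reductions["MAPE"])  # {'BP': 72.7, 'LSTM': 60.7, 'ELM': 47.4}
```

By MAPE, the proposed method lowers the error by roughly 73%, 61% and 47% relative to BP, LSTM and ELM respectively.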

| Forecast Time Interval | BP [6] MAPE (%) | BP [6] RMSE | BP [6] MAE | LSTM [21] MAPE (%) | LSTM [21] RMSE | LSTM [21] MAE | ELM [8] MAPE (%) | ELM [8] RMSE | ELM [8] MAE | Proposed Method MAPE (%) | Proposed Method RMSE | Proposed Method MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0–2 h | 0.73 | 28.53 | 14.76 | 0.59 | 15.94 | 11.79 | 0.58 | 14.21 | 11.58 | 0.35 | 9.36 | 7.13 |
| 2–4 h | 0.92 | 33.16 | 17.71 | 1.18 | 26.11 | 22.66 | 1.11 | 25.33 | 21.56 | 0.49 | 10.8 | 9.39 |
| 4–6 h | 1.62 | 40.76 | 30.47 | 1.01 | 23.14 | 19.18 | 1.08 | 23.17 | 20.49 | 0.41 | 9.09 | 7.7 |
| 6–8 h | 1.71 | 40.38 | 35.08 | 1.03 | 25.62 | 21.21 | 0.92 | 23.4 | 18.96 | 0.52 | 12.96 | 10.66 |
| 8–10 h | 2.67 | 90.05 | 60.54 | 1.22 | 38.12 | 28.11 | 1.26 | 41.67 | 28.95 | 0.49 | 14.95 | 11.43 |
| 10–12 h | 1.1 | 30.61 | 27.58 | 0.79 | 24.86 | 19.93 | 0.65 | 21.26 | 16.38 | 0.39 | 11.89 | 9.96 |
| 12–14 h | 1.27 | 36.6 | 30.36 | 0.66 | 22.32 | 15.65 | 0.57 | 18.78 | 13.51 | 0.43 | 12.9 | 10.41 |
| 14–16 h | 1.05 | 32.59 | 25.15 | 0.56 | 18.35 | 13.4 | 0.61 | 17.76 | 14.67 | 0.39 | 11.73 | 9.51 |
| 16–18 h | 0.95 | 28.58 | 23.3 | 0.78 | 23.36 | 19.12 | 0.43 | 13.44 | 10.37 | 0.27 | 7.96 | 6.54 |
| 18–20 h | 1.09 | 33.3 | 27.2 | 1.0 | 30.51 | 25.11 | 0.56 | 17.02 | 14.01 | 0.32 | 9.82 | 8.03 |
| 20–22 h | 2.27 | 78.7 | 57.12 | 1.85 | 65.6 | 46.68 | 0.93 | 31.81 | 23.52 | 0.48 | 16.9 | 11.99 |
| 22–24 h | 2.42 | 73.32 | 54.88 | 1.57 | 41.71 | 35.33 | 0.48 | 13.01 | 10.8 | 0.42 | 12.76 | 9.56 |
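
The per-interval MAPE columns of the table above can be summarised by counting the intervals in which each method is best, averaging over the twelve intervals, and locating each method's hardest interval. The calculation below uses only the tabulated MAPE values; the variable names are illustrative.

```python
import statistics

# MAPE (%) per 2-hour forecast interval, copied from the table above (0-2 h ... 22-24 h)
mape = {
    "BP":       [0.73, 0.92, 1.62, 1.71, 2.67, 1.1, 1.27, 1.05, 0.95, 1.09, 2.27, 2.42],
    "LSTM":     [0.59, 1.18, 1.01, 1.03, 1.22, 0.79, 0.66, 0.56, 0.78, 1.0, 1.85, 1.57],
    "ELM":      [0.58, 1.11, 1.08, 0.92, 1.26, 0.65, 0.57, 0.61, 0.43, 0.56, 0.93, 0.48],
    "Proposed": [0.35, 0.49, 0.41, 0.52, 0.49, 0.39, 0.43, 0.39, 0.27, 0.32, 0.48, 0.42],
}
labels = [f"{2 * k}-{2 * k + 2} h" for k in range(12)]

# Method with the lowest MAPE in each interval
best = [min(mape, key=lambda m: mape[m][k]) for k in range(12)]
wins = best.count("Proposed")

# Mean over the 12 intervals, and the interval with the largest MAPE, per method
means = {m: round(statistics.mean(v), 3) for m, v in mape.items()}
worst = {m: labels[v.index(max(v))] for m, v in mape.items()}
```

The proposed method has the lowest MAPE in all twelve intervals, and its twelve-interval mean (about 0.41%) is close to its overall MAPE of 0.405%. BP and ELM both have their largest error in the 8–10 h interval.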

| Prediction Model | BP | LSTM | ELM | Proposed Method |
|---|---|---|---|---|
| MAPE (%) | 6.77 | 5.44 | 5.61 | 2.39 |
| RMSE | 91.9627 | 64.244 | 67.237 | 50.827 |
| MAE | 69.535 | 51.158 | 56.03 | 23.763 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cai, C.; Tao, Y.; Zhu, T.; Deng, Z. Short-Term Load Forecasting Based on Deep Learning Bidirectional LSTM Neural Network. Appl. Sci. 2021, 11, 8129. https://doi.org/10.3390/app11178129
