Abstract

Efficiently solving a system identification problem is an important step in numerous applications. In this framework, some of the most popular solutions rely on the Wiener filter, which is widely used in practice and also serves as a benchmark for related optimization problems. This paper provides new insights into the regularization of the Wiener filter, which is indispensable in real-world scenarios. A proper regularization technique is of great importance, especially in challenging conditions, e.g., when operating in noisy environments and/or when only a small amount of data is available for the estimation of the statistics. Different regularization methods are investigated, including several new solutions that are well suited to the identification of sparse and low-rank systems. Experimental results support the theoretical developments and indicate the efficiency of the proposed techniques.

1. Introduction

The Wiener filter has been an extremely useful tool since its invention by Norbert Wiener [1]. It represents one of the most popular solutions for system identification problems and finds important applications within a wide range of domains [2,3,4,5]. Basically, the Wiener filter is able to estimate a reference (or desired) random process by filtering an observed noisy process, which is somehow related (or correlated) to the reference signal. In this framework, the filtering process is linear and time invariant, all the signals (input, reference, and noise) are considered stationary processes, and the external (additive) noise that usually corrupts the reference signal is uncorrelated with the input signal. Overall, the Wiener filter is a statistical-based approach, where the solution results following an optimization criterion that relies on the minimization of the mean-squared error. In this context, the error is defined as the difference between the reference signal and the output of the filter.

The optimal solution (i.e., the optimal coefficients) of the Wiener filter is found by solving a linear system of L equations, where L denotes the length of the impulse response. In other words, the vector (of length L) containing the optimal coefficients is obtained by multiplying the inverse of the covariance matrix of the input signal (of size $L \times L$) with the cross-correlation vector (of length L) between the input and reference signals.

Despite this straightforward mathematical formulation, there are several major challenges related to the use of the Wiener filter in real-world applications. First, the accuracy of the conventional Wiener solution relies on the accuracy of the statistical estimates—namely, the covariance matrix and the cross-correlation vector mentioned previously. In order to reliably estimate these statistics, a large number of data samples (i.e., much larger than L) should be available. However, this is not always the case in practice, since in many applications, a low quantity of data is available, or there is incomplete information about the system. This challenge becomes even more apparent when facing the identification of long-length impulse responses. Second, the matrix inversion operation is also a critical step that should be carefully handled [6]. The basic idea is to avoid an ill-conditioning or a rank-deficient problem, which could result in a bad matrix inversion. For this purpose, the covariance matrix of the input signal needs to be regularized before inversion, usually by adding a positive constant to its main diagonal. It is known that the value of this regularization parameter becomes important in noisy conditions [7]. In other words, the additive noise that usually corrupts the reference signal could significantly influence the overall performance of the Wiener filter, especially in heavy noise environments.

The accuracy of the statistical estimates depends on the constraints of each application, in particular on the amount of data available. Nevertheless, reformulating a conventional system identification problem using smaller data structures is a potential way to improve the behavior of the Wiener filter when only a small amount of data is available [8]. In addition, the matrix inversion operation could be avoided by using alternative coordinate descent methods [9]. However, handling an ill-conditioned matrix remains a critical issue, which can be addressed by using a proper regularization technique, as mentioned before.

The previously mentioned challenges related to the Wiener filter have been addressed in the context of different applications. Recently, in [10], the Kronecker product decomposition of the impulse response was exploited in order to improve the accuracy of the Wiener filter for the identification of long impulse responses. Due to this feature, the resulting solution is well suited to echo cancellation, for example. In addition, several regularization methods were developed for denoising applications and image restoration, e.g., [11,12,13] and the references therein. Nevertheless, there is still room for performance improvement, especially when using the Wiener filter in challenging conditions, as explained above.

The goal of this paper is to provide new insights into the Wiener filter for system identification problems. The main focus concerns the important practical aspects related to the regularization of this optimal filter in difficult conditions, e.g., working in noisy environments and/or handling a limited set of available data/information. Following this introduction, Section 2 and Section 3 briefly present the system model behind the conventional Wiener filter and some useful background, respectively. Next, Section 4 details different regularization methods of the Wiener filter, also showing how the sparse character of the system can be exploited in this context. Furthermore, a solution obtained using the Kronecker product decomposition of the impulse response is developed in Section 5, which is suitable for low-rank systems identification. In Section 6, the quadratic eigenvalue problem is used for the regularization of the Wiener filter, which can be successfully applied when some a priori knowledge on the impulse response is available. All these techniques and theoretical findings are supported by the simulation results provided in Section 7. Finally, the main conclusions are summarized in Section 8.

2. System Model and the Conventional Wiener Filter

Let us consider a linear single-input/single-output (SISO) system [14], whose output at the discrete-time index $k$ is given by

$$
d(k) = \mathbf{h}^T \mathbf{x}(k) + w(k), \tag{1}
$$

where $d(k)$ is also known as the reference or desired signal,

$$
\mathbf{x}(k) = \begin{bmatrix} x(k) & x(k-1) & \cdots & x(k-L+1) \end{bmatrix}^T
$$

is a vector containing the most recent $L$ time samples of the zero-mean input signal $x(k)$, the superscript $^T$ denotes the transpose operator, $\mathbf{h}$ is the unknown system impulse response of length $L$, and $w(k)$ is the zero-mean additive noise. It is assumed that the signals take real values and that $x(k)$ and $w(k)$ are uncorrelated. Then, the objective of the so-called SISO system identification problem is to identify or estimate $\mathbf{h}$, in the best possible way, given the input signal vector, $\mathbf{x}(k)$, and the output signal, $d(k)$. For this purpose, the celebrated Wiener technique is the most appropriate [15,16,17].

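From (1), it is clear that the variance of $d(k)$ is

$$
\sigma_d^2 = \mathbf{h}^T \mathbf{R}_{\mathbf{x}} \mathbf{h} + \sigma_w^2, \tag{2}
$$

where $\mathbf{R}_{\mathbf{x}} = E\left[\mathbf{x}(k)\mathbf{x}^T(k)\right]$ is the covariance matrix of $\mathbf{x}(k)$, with $E[\cdot]$ denoting mathematical expectation, and $\sigma_w^2 = E\left[w^2(k)\right]$ is the variance of $w(k)$. It can be deduced from (2) that the signal-to-noise ratio (SNR) of the studied SISO system as presented in (1) is

$$
\mathrm{SNR} = \frac{\mathbf{h}^T \mathbf{R}_{\mathbf{x}} \mathbf{h}}{\sigma_w^2}. \tag{3}
$$

Even though this SNR depends explicitly on the impulse response of the system, in many practical identification problems it is roughly known even if $\mathbf{h}$ is not available [18].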
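As a small numerical illustration of (2) and (3), the following sketch computes the SNR for a given impulse response and input covariance matrix, and inverts the relation to obtain the noise variance that produces a target SNR. The impulse response values, the AR(1) covariance model, and all function names are assumptions made for illustration only.

```python
import numpy as np

def ar1_covariance(L, pole, var_x=1.0):
    """Covariance matrix of a stationary AR(1) process: R[i, j] = var_x * pole**|i - j|."""
    lags = np.abs(np.subtract.outer(np.arange(L), np.arange(L)))
    return var_x * pole ** lags

def snr_db(h, R_x, var_w):
    """SNR of the SISO model, (h^T R_x h) / var_w, expressed in dB."""
    return 10.0 * np.log10(h @ R_x @ h / var_w)

def noise_variance_for_snr(h, R_x, target_snr_db):
    """Noise variance that yields the requested SNR for a given h and R_x."""
    return (h @ R_x @ h) / 10.0 ** (target_snr_db / 10.0)

h = np.array([0.0, 1.0, -0.5, 0.0, 0.2])   # hypothetical impulse response
R_x = ar1_covariance(len(h), pole=0.9)     # assumed input statistics
var_w = noise_variance_for_snr(h, R_x, target_snr_db=20.0)
var_d = h @ R_x @ h + var_w                # variance of the reference signal
```

This is how a simulation would typically scale the additive noise to reach a prescribed SNR when the true impulse response is known.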

Let be a real-valued linear filter of length L, which is also an estimate of , so that is an estimate of . Then, from the minimization of the popular mean-squared error (MSE) criterion

the conventional Wiener filter [3,5] is found

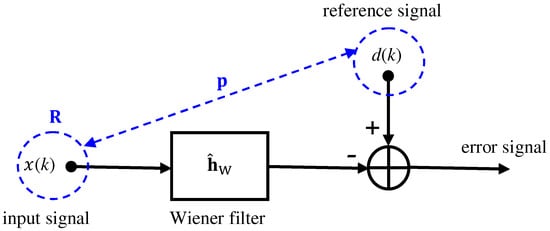

where represents the cross-correlation vector (of length L) between and . The block diagram of the conventional Wiener filter is depicted in Figure 1. While leads to satisfactory results in many important applications [18], there is still room for improvement.

Figure 1.

General block diagram of the conventional Wiener filter.

It should be outlined that in different real-world applications, the presence of outliers (in the input signal and/or external noises) could bias the MSE criterion and, consequently, the solution from (5). This is due to the fact that the basic theory behind the conventional Wiener filter relies on the assumption of zero-mean and stationary signals. In order to cope with different outliers, the conventional approach should further involve additional control and robustness mechanisms, e.g., see [19] and the references therein. The investigation of such particular cases is beyond the scope of this paper.

3. Useful Definitions

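In this section, several definitions that will be useful in the rest of this paper are presented.

Let

$$
\mathbf{a} = \begin{bmatrix} a_1 & a_2 & \cdots & a_L \end{bmatrix}^T
$$

be a real-valued vector of length $L$. The $\ell_2$ (or Euclidean) norm can be defined as

$$
\left\|\mathbf{a}\right\|_2 = \sqrt{\sum_{l=1}^{L} a_l^2} = \sqrt{\mathbf{a}^T \mathbf{a}}. \tag{6}
$$

The $\ell_1$ norm is given by

$$
\left\|\mathbf{a}\right\|_1 = \sum_{l=1}^{L} \left|a_l\right|. \tag{7}
$$

Another very useful vector norm is the $\ell_0$ norm:

$$
\left\|\mathbf{a}\right\|_0 = \mathrm{card}\left\{l : a_l \neq 0\right\}, \tag{8}
$$

where $\mathrm{card}\{\cdot\}$ stands for cardinality. In other words, the $\ell_0$ norm of $\mathbf{a}$ is simply the number of nonzero elements of $\mathbf{a}$.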

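Let $\mathbf{A}$ be a rectangular matrix of size $L_1 \times L_2$ containing real values, where without loss of generality, it is always assumed that $L_1 \geq L_2$. The Frobenius norm of $\mathbf{A}$ is defined as

$$
\left\|\mathbf{A}\right\|_{\mathrm{F}} = \sqrt{\mathrm{tr}\left(\mathbf{A}^T \mathbf{A}\right)}, \tag{9}
$$

where $\mathrm{tr}(\cdot)$ denotes the trace of a square matrix.

The singular value decomposition (SVD) technique [20] is a very powerful tool that can decompose any matrix $\mathbf{A}$ as

$$
\mathbf{A} = \mathbf{U} \boldsymbol{\Sigma} \mathbf{V}^T, \tag{10}
$$

where $\mathbf{U}$ and $\mathbf{V}$ are two orthogonal matrices of sizes $L_1 \times L_1$ and $L_2 \times L_2$, respectively, and $\boldsymbol{\Sigma}$ is an $L_1 \times L_2$ rectangular diagonal matrix having on its main diagonal nonnegative real numbers. The columns of $\mathbf{U}$ (resp. $\mathbf{V}$), denoted $\mathbf{u}_l$ (resp. $\mathbf{v}_l$), are known as the left-singular (resp. right-singular) vectors of $\mathbf{A}$, whereas the diagonal entries $\sigma_l$, $l = 1, 2, \ldots, L_2$, of $\boldsymbol{\Sigma}$ are called the singular values of $\mathbf{A}$, with $\sigma_1 \geq \sigma_2 \geq \cdots \geq \sigma_{L_2} \geq 0$. In the case when the rank of $\mathbf{A}$ is equal to $P$, i.e., $\mathrm{rank}(\mathbf{A}) = P \leq L_2$, then this matrix may be decomposed as

$$
\mathbf{A} = \sum_{p=1}^{P} \sigma_p \mathbf{u}_p \mathbf{v}_p^T. \tag{11}
$$

From (10), it is deduced that the nuclear norm of $\mathbf{A}$ is

$$
\left\|\mathbf{A}\right\|_{*} = \sum_{l=1}^{L_2} \sigma_l. \tag{12}
$$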
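Let $L = L_1 L_2$. The vector $\mathbf{a}$ can be decomposed as

$$
\mathbf{a} = \begin{bmatrix} \mathbf{a}_1^T & \mathbf{a}_2^T & \cdots & \mathbf{a}_{L_2}^T \end{bmatrix}^T, \tag{13}
$$

where $\mathbf{a}_l$, $l = 1, 2, \ldots, L_2$, are short vectors of length $L_1$ each. As a result, the vector $\mathbf{a}$ of length $L_1 L_2$ can be transformed into a rectangular matrix of size $L_1 \times L_2$:

$$
\mathbf{A} = \begin{bmatrix} \mathbf{a}_1 & \mathbf{a}_2 & \cdots & \mathbf{a}_{L_2} \end{bmatrix}. \tag{14}
$$

Therefore, $\mathbf{a} = \mathrm{vec}(\mathbf{A})$, where $\mathrm{vec}(\cdot)$ denotes the vectorization operation, consisting of converting a matrix into a vector. Furthermore, $\mathbf{A} = \mathrm{vec}^{-1}(\mathbf{a})$, where $\mathrm{vec}^{-1}(\cdot)$ is the inverse of the vectorization operation.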
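The matrix definitions above can be checked numerically. The following sketch, with arbitrary example values, builds a rank-1 matrix, applies the column-stacking vectorization and its inverse, and evaluates the Frobenius norm, the nuclear norm, and the rank from the singular values. The variable names are illustrative.

```python
import numpy as np

L1, L2 = 4, 3
u = np.arange(1.0, L1 + 1)               # hypothetical vector of length L1
v = np.array([1.0, -2.0, 0.5])           # hypothetical vector of length L2
A = np.outer(u, v)                       # rank-1 matrix of size L1 x L2

a = A.reshape(-1, order="F")             # vec(A): stack the columns of A
A_back = a.reshape((L1, L2), order="F")  # inverse vectorization

sing = np.linalg.svd(A, compute_uv=False)   # singular values, in decreasing order
frobenius = np.sqrt(np.trace(A.T @ A))
nuclear = sing.sum()
rank = np.linalg.matrix_rank(A)
```

For a rank-1 matrix there is a single nonzero singular value, so the nuclear and Frobenius norms coincide, and the vectorized matrix equals the Kronecker product of `v` and `u`.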

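Let $\mathbf{h}$ be the system impulse response of length $L$. A good measure of the sparseness of $\mathbf{h}$ is [21,22]

$$
\xi\left(\mathbf{h}\right) = \frac{L}{L - \sqrt{L}} \left(1 - \frac{\left\|\mathbf{h}\right\|_1}{\sqrt{L} \left\|\mathbf{h}\right\|_2}\right). \tag{15}
$$

The closer $\xi(\mathbf{h})$ is to 1, the sparser is $\mathbf{h}$.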

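Let $\mathbf{h}$ be the system impulse response and $\hat{\mathbf{h}}$ its estimate. A good way to evaluate how close $\hat{\mathbf{h}}$ is to $\mathbf{h}$ is via the normalized misalignment,

$$
\mathcal{M}\left(\hat{\mathbf{h}}\right) = \frac{\left\|\mathbf{h} - \hat{\mathbf{h}}\right\|_2^2}{\left\|\mathbf{h}\right\|_2^2}, \tag{16}
$$

or, equivalently, in decibels,

$$
\mathcal{M}_{\mathrm{dB}}\left(\hat{\mathbf{h}}\right) = 20 \log_{10} \frac{\left\|\mathbf{h} - \hat{\mathbf{h}}\right\|_2}{\left\|\mathbf{h}\right\|_2}. \tag{17}
$$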
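Let $\mathcal{C}$ be a convex set. The convex envelope of a function $f : \mathcal{C} \rightarrow \mathbb{R}$ is defined as the largest convex function $g$ such that $g(\mathbf{a}) \leq f(\mathbf{a})$ for all $\mathbf{a} \in \mathcal{C}$ [23].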
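The sparseness measure and the normalized misalignment defined earlier in this section are straightforward to evaluate. The sketch below assumes the sparseness measure of [21,22] in its usual form, based on the ratio of the $\ell_1$ and $\ell_2$ norms, and expresses the misalignment in dB. The function names and test vectors are illustrative.

```python
import numpy as np

def sparseness(h):
    """Sparseness measure based on the l1/l2 norm ratio: 0 for a flat vector, 1 for a single spike."""
    h = np.asarray(h, dtype=float)
    L = h.size
    l1 = np.sum(np.abs(h))
    l2 = np.sqrt(np.sum(h ** 2))
    return L / (L - np.sqrt(L)) * (1.0 - l1 / (np.sqrt(L) * l2))

def misalignment_db(h, h_hat):
    """Normalized misalignment between the true response h and its estimate, in dB."""
    h = np.asarray(h, dtype=float)
    h_hat = np.asarray(h_hat, dtype=float)
    return 20.0 * np.log10(np.linalg.norm(h - h_hat) / np.linalg.norm(h))

spike = np.zeros(64)
spike[10] = 1.0                 # maximally sparse example
flat = np.ones(64)              # maximally dispersive example
xi_spike = sparseness(spike)
xi_flat = sparseness(flat)
mis = misalignment_db([1.0, 0.0, 0.0], [0.9, 0.0, 0.0])
```

The two extreme vectors give the end points of the sparseness scale, and a 10% error on the only nonzero coefficient corresponds to a misalignment of about -20 dB.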

4. Wiener Filter with Different Kinds of Regularization

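The Wiener filter can be improved with an appropriate regularization, which should depend on the properties of the system to be identified. In this section, the most useful regularization techniques are presented.

The most well-known regularization approach is the so-called Tikhonov regularization, which is based on the $\ell_2$ norm. The Wiener problem is now

$$
\min_{\hat{\mathbf{h}}} \; J\left(\hat{\mathbf{h}}\right) + \delta \left\|\hat{\mathbf{h}}\right\|_2^2, \tag{18}
$$

where $J(\hat{\mathbf{h}})$ is the MSE criterion in (4) and $\delta \geq 0$ is the regularization parameter, which controls the tradeoff between fidelity to the data and large values of the filter coefficients. The optimization problem in (18) has a closed-form solution, which is

$$
\hat{\mathbf{h}}_{\ell_2} = \left(\mathbf{R}_{\mathbf{x}} + \delta \mathbf{I}_L\right)^{-1} \mathbf{p}_{\mathbf{x}d}, \tag{19}
$$

where $\mathbf{I}_L$ is the $L \times L$ identity matrix. The filter $\hat{\mathbf{h}}_{\ell_2}$ is much more reliable than the conventional Wiener filter in (5), especially when the covariance matrix $\mathbf{R}_{\mathbf{x}}$ is ill conditioned and the SNR is low.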
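A minimal sketch of the Tikhonov-regularized solution in (19) is given below. It uses exact statistics for an assumed AR(1) input and a hypothetical sparse impulse response, so that the cross-correlation vector is simply the covariance matrix times the true response. The function name and all numerical values are illustrative.

```python
import numpy as np

def tikhonov_wiener(R_x, p_xd, delta):
    """Wiener filter with l2-norm regularization: solve (R_x + delta * I) h = p_xd."""
    return np.linalg.solve(R_x + delta * np.eye(R_x.shape[0]), p_xd)

L = 8
idx = np.arange(L)
R_x = 0.9 ** np.abs(idx[:, None] - idx[None, :])   # assumed AR(1) covariance matrix
h = np.zeros(L)
h[2], h[3] = 1.0, -0.5                             # hypothetical impulse response
p_xd = R_x @ h            # exact cross-correlation when the noise is uncorrelated with the input

h_w = tikhonov_wiener(R_x, p_xd, delta=0.0)        # conventional Wiener solution
h_reg = tikhonov_wiener(R_x, p_xd, delta=0.5)      # regularized solution
```

With exact statistics, the unregularized solution recovers the true response, while a positive regularization parameter shrinks the coefficients. This illustrates the bias that a large parameter can introduce when the statistics are already reliable.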

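If the system is sparse, the following optimization problem is more appropriate [24]:

$$
\min_{\hat{\mathbf{h}}} \; J\left(\hat{\mathbf{h}}\right) + \delta \left\|\hat{\mathbf{h}}\right\|_0, \tag{20}
$$

where $J(\hat{\mathbf{h}})$ is the MSE criterion. This problem finds the sparsest solution, but unfortunately, it is NP-hard [24]. Clearly, a large value of $\delta$ leads to a sparse filter. Using the fact that the convex envelope of $\|\hat{\mathbf{h}}\|_0$ is $\|\hat{\mathbf{h}}\|_1$, $\|\hat{\mathbf{h}}\|_0$ can be replaced by $\|\hat{\mathbf{h}}\|_1$ in (20) to obtain

$$
\min_{\hat{\mathbf{h}}} \; J\left(\hat{\mathbf{h}}\right) + \delta \left\|\hat{\mathbf{h}}\right\|_1, \tag{21}
$$

which is now a convex problem [25] and is roughly equivalent to (20). The optimization problem in (21) does not have a closed-form solution, but many iterative/adaptive algorithms exist in the literature that converge to the optimal solution, including proportionate-type adaptive filters [22]. The main advantage of (21) is that even if the covariance matrix of the input signal is very ill conditioned, or its estimate is computed from a limited number of observations, a better solution than the conventional Wiener filter can be expected if the system is sparse.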

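Taking the gradient of $J(\hat{\mathbf{h}}) + \delta \|\hat{\mathbf{h}}\|_1$ with respect to $\hat{\mathbf{h}}$ and equating the result to zero, it can be found that

$$
\mathbf{R}_{\mathbf{x}} \hat{\mathbf{h}} + \frac{\delta}{2} \mathbf{Q} \hat{\mathbf{h}} = \mathbf{p}_{\mathbf{x}d}, \tag{22}
$$

where

$$
\mathbf{Q} = \mathrm{diag}\left(\frac{1}{\left|\hat{h}_1\right|}, \frac{1}{\left|\hat{h}_2\right|}, \ldots, \frac{1}{\left|\hat{h}_L\right|}\right) \tag{23}
$$

is a diagonal matrix and $\hat{h}_l$, $l = 1, 2, \ldots, L$, are the elements of $\hat{\mathbf{h}}$. As a result, (22) can be rewritten as

$$
\hat{\mathbf{h}} = \left(\mathbf{R}_{\mathbf{x}} + \frac{\delta}{2} \mathbf{Q}\right)^{-1} \mathbf{p}_{\mathbf{x}d}. \tag{24}
$$

From the previous expression, it is possible to derive a simple iterative algorithm. At iteration 0, one may take

$$
\hat{\mathbf{h}}^{(0)} = \left(\mathbf{R}_{\mathbf{x}} + \delta \mathbf{I}_L\right)^{-1} \mathbf{p}_{\mathbf{x}d}. \tag{25}
$$

Then, at iteration $i$, it can be written

$$
\hat{\mathbf{h}}^{(i)} = \left(\mathbf{R}_{\mathbf{x}} + \delta' \mathbf{Q}^{(i-1)}\right)^{-1} \mathbf{p}_{\mathbf{x}d}, \tag{26}
$$

where $\mathbf{Q}^{(i-1)}$ is built from the elements of $\hat{\mathbf{h}}^{(i-1)}$ and $\delta'$ is a regularization parameter that is not necessarily equal to $\delta$. The iterations continue until convergence.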
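The iterative procedure can be sketched as follows, under the assumption that the update in (26) takes the reweighted form in which the identity matrix of the Tikhonov solution is replaced by a diagonal matrix holding the inverse magnitudes of the previous coefficients. The small constant `eps`, which guards against division by zero, is an addition of this sketch and not part of the text. The names `delta_l1`, `n_iter`, and the test signal are hypothetical.

```python
import numpy as np

def iterative_l1_wiener(R_x, p_xd, delta, delta_l1, n_iter=20, eps=1e-8):
    """Reweighted iteration for the l1-regularized Wiener problem (a sketch)."""
    L = R_x.shape[0]
    # Iteration 0: Tikhonov-regularized Wiener solution.
    h_hat = np.linalg.solve(R_x + delta * np.eye(L), p_xd)
    for _ in range(n_iter):
        # Diagonal of Q built from the previous estimate; eps avoids division by zero.
        q = 1.0 / (np.abs(h_hat) + eps)
        h_hat = np.linalg.solve(R_x + delta_l1 * np.diag(q), p_xd)
    return h_hat

L = 16
idx = np.arange(L)
R_x = 0.9 ** np.abs(idx[:, None] - idx[None, :])   # assumed AR(1) covariance matrix
h = np.zeros(L)
h[3], h[7] = 1.0, -0.5                             # hypothetical sparse system
p_xd = R_x @ h                                     # exact cross-correlation vector
h_l1 = iterative_l1_wiener(R_x, p_xd, delta=1e-6, delta_l1=1e-6)
```

Coefficients that are close to zero receive a large diagonal loading at the next iteration and are pushed further toward zero, whereas large coefficients are almost unaffected. This is how the iteration exploits sparsity.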

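Let $L = L_1 L_2$ with $L_1 \geq L_2$, so that $\mathbf{H} = \mathrm{vec}^{-1}(\mathbf{h})$. In the same way, $\hat{\mathbf{H}} = \mathrm{vec}^{-1}(\hat{\mathbf{h}})$. In many applications, $\mathbf{H}$ is far from being a full-column rank matrix, and this important information should be exploited. In other words, $\mathbf{H}$ is a low-rank system. Therefore, in the MSE criterion, it makes more sense to apply a regularization based on the rank of $\hat{\mathbf{H}}$, i.e., [26]

$$
\min_{\hat{\mathbf{h}}} \; J\left(\hat{\mathbf{h}}\right) + \delta \, \mathrm{rank}\left(\hat{\mathbf{H}}\right). \tag{27}
$$

Unfortunately, the matrix rank minimization in (27) is NP-hard, in general, due to the combinatorial nature of the $\mathrm{rank}(\cdot)$ function [26]. Interestingly, when the matrix $\hat{\mathbf{H}}$ is diagonal, the previous problem reduces to the optimization problem in (20). Consequently, the sparse problem is taken into account in (27). Using the fact that the convex envelope [27] of $\mathrm{rank}(\hat{\mathbf{H}})$ is $\|\hat{\mathbf{H}}\|_{*}$, (27) is roughly equivalent to the optimization problem [26]:

$$
\min_{\hat{\mathbf{h}}} \; J\left(\hat{\mathbf{h}}\right) + \delta \left\|\hat{\mathbf{H}}\right\|_{*}. \tag{28}
$$

Some iterative algorithms exist to solve this problem, but they do not seem to work well for long impulse responses; furthermore, the complexity may be prohibitive since an approximate SVD needs to be computed at each iteration. Recent insights can be found in [28] and the references therein.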

5. Wiener Filter via Kronecker Product Decomposition

This section further investigates the identification of low-rank systems with the Wiener filter. Minimizing the nuclear norm of the system in the regularization term was discussed in the previous section, but this approach does not seem very practical, and its complexity may be extremely high, especially for large matrices.

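Here, instead, it is assumed that the rank $P$ of the matrix $\mathbf{H}$ is known and is much smaller than $L_2$, i.e., $P \ll L_2$. In this case, $\mathbf{H}$ can also be expressed as

$$
\mathbf{H} = \sum_{p=1}^{P} \mathbf{h}_{1,p} \mathbf{h}_{2,p}^T, \tag{29}
$$

where $\mathbf{h}_{1,p}$ and $\mathbf{h}_{2,p}$, of lengths $L_1$ and $L_2$, respectively, are impulse responses. This decomposition of $\mathbf{H}$ can be explicitly used in the MSE criterion as explained below. As a result,

$$
\mathbf{h} = \mathrm{vec}\left(\mathbf{H}\right) = \sum_{p=1}^{P} \mathbf{h}_{2,p} \otimes \mathbf{h}_{1,p}, \tag{30}
$$

where ⊗ is the Kronecker product. Furthermore, the filter $\hat{\mathbf{h}}$ can also be decomposed as

$$
\hat{\mathbf{h}} = \sum_{p=1}^{P} \hat{\mathbf{h}}_{2,p} \otimes \hat{\mathbf{h}}_{1,p}, \tag{31}
$$

where $\hat{\mathbf{h}}_{1,p}$ and $\hat{\mathbf{h}}_{2,p}$ are filters having lengths $L_1$ and $L_2$, respectively. By using the relationships

$$
\hat{\mathbf{h}}_{2,p} \otimes \hat{\mathbf{h}}_{1,p} = \left(\hat{\mathbf{h}}_{2,p} \otimes \mathbf{I}_{L_1}\right) \hat{\mathbf{h}}_{1,p} = \left(\mathbf{I}_{L_2} \otimes \hat{\mathbf{h}}_{1,p}\right) \hat{\mathbf{h}}_{2,p}, \tag{32}
$$

where $\mathbf{I}_{L_1}$ and $\mathbf{I}_{L_2}$ denote the identity matrices of sizes $L_1 \times L_1$ and $L_2 \times L_2$, respectively, in (31), it is obtained that

$$
\hat{\mathbf{h}} = \sum_{p=1}^{P} \hat{\mathbf{H}}_{2,p} \hat{\mathbf{h}}_{1,p} = \sum_{p=1}^{P} \hat{\mathbf{H}}_{1,p} \hat{\mathbf{h}}_{2,p}, \tag{33}
$$

where $\hat{\mathbf{H}}_{2,p} = \hat{\mathbf{h}}_{2,p} \otimes \mathbf{I}_{L_1}$ and $\hat{\mathbf{H}}_{1,p} = \mathbf{I}_{L_2} \otimes \hat{\mathbf{h}}_{1,p}$ are matrices of sizes $L \times L_1$ and $L \times L_2$, respectively. The two expressions from (33) may be rewritten as

$$
\hat{\mathbf{h}} = \underline{\hat{\mathbf{H}}}_2 \underline{\hat{\mathbf{h}}}_1 = \underline{\hat{\mathbf{H}}}_1 \underline{\hat{\mathbf{h}}}_2, \tag{34}
$$

where

$$
\underline{\hat{\mathbf{H}}}_2 = \begin{bmatrix} \hat{\mathbf{H}}_{2,1} & \hat{\mathbf{H}}_{2,2} & \cdots & \hat{\mathbf{H}}_{2,P} \end{bmatrix}, \quad \underline{\hat{\mathbf{H}}}_1 = \begin{bmatrix} \hat{\mathbf{H}}_{1,1} & \hat{\mathbf{H}}_{1,2} & \cdots & \hat{\mathbf{H}}_{1,P} \end{bmatrix}
$$

are matrices of sizes $L \times P L_1$ and $L \times P L_2$, respectively, and

$$
\underline{\hat{\mathbf{h}}}_1 = \begin{bmatrix} \hat{\mathbf{h}}_{1,1}^T & \hat{\mathbf{h}}_{1,2}^T & \cdots & \hat{\mathbf{h}}_{1,P}^T \end{bmatrix}^T, \quad \underline{\hat{\mathbf{h}}}_2 = \begin{bmatrix} \hat{\mathbf{h}}_{2,1}^T & \hat{\mathbf{h}}_{2,2}^T & \cdots & \hat{\mathbf{h}}_{2,P}^T \end{bmatrix}^T
$$

are vectors of lengths $P L_1$ and $P L_2$, respectively. With this Kronecker product decomposition, it can be seen that $\underline{\hat{\mathbf{h}}}_1$ and $\underline{\hat{\mathbf{h}}}_2$ need to be identified instead of $\hat{\mathbf{h}}$, i.e., $P L_1 + P L_2$ components instead of $L_1 L_2$.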
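The Kronecker product relationships used in this derivation can be verified numerically. In the sketch below, `h1` and `h2` are random stand-ins for one pair of component filters of lengths $L_1$ and $L_2$. The chosen sizes are arbitrary, and the last lines merely count parameters for an assumed decomposition order `P`.

```python
import numpy as np

rng = np.random.default_rng(0)
L1, L2 = 4, 3
h1 = rng.standard_normal(L1)              # hypothetical component of length L1
h2 = rng.standard_normal(L2)              # hypothetical component of length L2

kron = np.kron(h2, h1)                    # h2 (x) h1, of length L1 * L2
H2 = np.kron(h2[:, None], np.eye(L1))     # (h2 (x) I_L1), of size (L1 * L2) x L1
H1 = np.kron(np.eye(L2), h1[:, None])     # (I_L2 (x) h1), of size (L1 * L2) x L2

vec_outer = np.outer(h1, h2).reshape(-1, order="F")   # vec(h1 h2^T)

P = 2
n_kpd = P * (L1 + L2)                     # parameters of the decomposed filter
n_full = L1 * L2                          # parameters of the full-length filter
```

Both matrix-vector products reproduce the Kronecker product, which is what allows the cost function to be written as a quadratic form in either set of short filters while the other set is held fixed.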

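Assume that $\underline{\hat{\mathbf{h}}}_2$ (or, equivalently, $\underline{\hat{\mathbf{H}}}_2$) is fixed. Then, the MSE criterion is

$$
J\left(\underline{\hat{\mathbf{h}}}_1 \,\middle|\, \underline{\hat{\mathbf{h}}}_2\right) = \sigma_d^2 - 2 \underline{\hat{\mathbf{h}}}_1^T \mathbf{p}_1 + \underline{\hat{\mathbf{h}}}_1^T \mathbf{R}_1 \underline{\hat{\mathbf{h}}}_1,
$$

where

$$
\mathbf{p}_1 = \underline{\hat{\mathbf{H}}}_2^T \mathbf{p}_{\mathbf{x}d}, \quad \mathbf{R}_1 = \underline{\hat{\mathbf{H}}}_2^T \mathbf{R}_{\mathbf{x}} \underline{\hat{\mathbf{H}}}_2.
$$

The minimization of $J(\underline{\hat{\mathbf{h}}}_1 | \underline{\hat{\mathbf{h}}}_2)$ with respect to $\underline{\hat{\mathbf{h}}}_1$ leads to

$$
\underline{\hat{\mathbf{h}}}_1 = \mathbf{R}_1^{-1} \mathbf{p}_1.
$$

In the same way, assume that $\underline{\hat{\mathbf{h}}}_1$ (or, equivalently, $\underline{\hat{\mathbf{H}}}_1$) is fixed. Then, the MSE criterion is

$$
J\left(\underline{\hat{\mathbf{h}}}_2 \,\middle|\, \underline{\hat{\mathbf{h}}}_1\right) = \sigma_d^2 - 2 \underline{\hat{\mathbf{h}}}_2^T \mathbf{p}_2 + \underline{\hat{\mathbf{h}}}_2^T \mathbf{R}_2 \underline{\hat{\mathbf{h}}}_2,
$$

where

$$
\mathbf{p}_2 = \underline{\hat{\mathbf{H}}}_1^T \mathbf{p}_{\mathbf{x}d}, \quad \mathbf{R}_2 = \underline{\hat{\mathbf{H}}}_1^T \mathbf{R}_{\mathbf{x}} \underline{\hat{\mathbf{H}}}_1.
$$

The minimization of $J(\underline{\hat{\mathbf{h}}}_2 | \underline{\hat{\mathbf{h}}}_1)$ with respect to $\underline{\hat{\mathbf{h}}}_2$ leads to

$$
\underline{\hat{\mathbf{h}}}_2 = \mathbf{R}_2^{-1} \mathbf{p}_2.
$$

By alternately iterating between $\underline{\hat{\mathbf{h}}}_1$ and $\underline{\hat{\mathbf{h}}}_2$, an iterative Wiener filter is obtained that converges to the true solution [10]. This approach, as shown in [10], performs better than the conventional Wiener filter in various contexts of noise and available data for the estimation of the involved second-order statistics.

A more robust iterative Wiener filter must include regularization based on the $\ell_2$ norm, i.e.,

$$
J\left(\underline{\hat{\mathbf{h}}}_1 \,\middle|\, \underline{\hat{\mathbf{h}}}_2\right) + \delta \left\|\underline{\hat{\mathbf{H}}}_2 \underline{\hat{\mathbf{h}}}_1\right\|_2^2, \quad J\left(\underline{\hat{\mathbf{h}}}_2 \,\middle|\, \underline{\hat{\mathbf{h}}}_1\right) + \delta \left\|\underline{\hat{\mathbf{H}}}_1 \underline{\hat{\mathbf{h}}}_2\right\|_2^2,
$$

from which the following optimal solutions are found:

$$
\underline{\hat{\mathbf{h}}}_1 = \left[\underline{\hat{\mathbf{H}}}_2^T \left(\mathbf{R}_{\mathbf{x}} + \delta \mathbf{I}_L\right) \underline{\hat{\mathbf{H}}}_2\right]^{-1} \mathbf{p}_1, \tag{41}
$$

$$
\underline{\hat{\mathbf{h}}}_2 = \left[\underline{\hat{\mathbf{H}}}_1^T \left(\mathbf{R}_{\mathbf{x}} + \delta \mathbf{I}_L\right) \underline{\hat{\mathbf{H}}}_1\right]^{-1} \mathbf{p}_2, \tag{42}
$$

which is equivalent to regularizing the covariance matrix $\mathbf{R}_{\mathbf{x}}$. Again, by alternately iterating between $\underline{\hat{\mathbf{h}}}_1$ and $\underline{\hat{\mathbf{h}}}_2$, a robust iterative Wiener filter is obtained that converges to the true solution. In order to avoid any potential numerical issues, a very small positive constant should be added to the diagonal of the matrices in (41) and (42) before inversion.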
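The alternating procedure can be sketched as follows, assuming that each half-step solves the regularized normal equations obtained by projecting the regularized covariance matrix onto the fixed set of component filters. The initialization with unit vectors, the default parameter values, and the function name `kpd_wiener` are choices of this sketch and are not taken from [10].

```python
import numpy as np

def kpd_wiener(R_x, p_xd, L1, L2, P, delta=1e-6, n_iter=10, eps=1e-10):
    """Alternating, regularized Wiener filter based on a Kronecker product decomposition."""
    L = L1 * L2
    I1, I2 = np.eye(L1), np.eye(L2)
    R_reg = R_x + delta * np.eye(L)            # regularized covariance matrix
    h2 = np.eye(L2)[:, :P]                     # hypothetical initialization (requires P <= L2)
    for _ in range(n_iter):
        # Fix the h2 components and solve for the stacked h1 components.
        H2 = np.hstack([np.kron(h2[:, [p]], I1) for p in range(P)])   # L x (P * L1)
        A1 = H2.T @ R_reg @ H2 + eps * np.eye(P * L1)                 # small diagonal loading
        h1 = np.linalg.solve(A1, H2.T @ p_xd).reshape(P, L1).T        # L1 x P
        # Fix the h1 components and solve for the stacked h2 components.
        H1 = np.hstack([np.kron(I2, h1[:, [p]]) for p in range(P)])   # L x (P * L2)
        A2 = H1.T @ R_reg @ H1 + eps * np.eye(P * L2)
        h2_vec = np.linalg.solve(A2, H1.T @ p_xd)
        h2 = h2_vec.reshape(P, L2).T                                  # L2 x P
    return H1 @ h2_vec                         # full-length filter of length L1 * L2

L1, L2 = 5, 4
h1_true = np.array([1.0, 0.5, -0.3, 0.1, 0.0])     # hypothetical rank-1 system
h2_true = np.array([1.0, -0.4, 0.2, 0.0])
h = np.kron(h2_true, h1_true)
idx = np.arange(L1 * L2)
R_x = 0.9 ** np.abs(idx[:, None] - idx[None, :])   # assumed AR(1) covariance matrix
h_hat = kpd_wiener(R_x, R_x @ h, L1, L2, P=1)
```

Each half-step only inverts a matrix of size $P L_1 \times P L_1$ or $P L_2 \times P L_2$ instead of $L \times L$, which is where the smaller data structures of the decomposition come from.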

6. Wiener Filter with the Quadratic Eigenvalue Problem

Assume that some knowledge is available on the impulse response, , i.e., with . This information can be used to exactly regularize (with the Tikhonov technique) the Wiener filter with the help of the so-called quadratic eigenvalue problem (QEP) [29,30]. This approach is explained in this section.

The criterion is

From the above criterion, the Lagrange function can be written:

where is a Lagrange multiplier. Differentiating with respect to and and equating to zero yields the equations:

It can be shown [31,32] that the smallest solution of the two previous equations is needed to solve (43).

Assume that is not an eigenvalue of and let

In fact, because , all the eigenvalues of are real and strictly positive. To be in accordance with the regularization technique, also needs to be positive definite. Consequently, needs to always be negative.

It is easily deduced from this second equation that

Developing (48) and using the previous result, it is obtained that

As a consequence, (51) can be rewritten as

or alternatively,

where

and represents the -matrix, which is a degree 2 matrix polynomial. In (53), the QEP [29] can be recognized, where is the right eigenvector corresponding to the eigenvalue . Consequently, the solution of (43) is , where is the smallest eigenvalue of (53).

The QEP represents a generalization of the standard eigenvalue problem (SEP), $\mathbf{A}\mathbf{v} = \lambda \mathbf{v}$, and the generalized eigenvalue problem (GEP), $\mathbf{A}\mathbf{v} = \lambda \mathbf{B}\mathbf{v}$. Having a $\lambda$-matrix of size $L \times L$, the QEP has $2L$ eigenvalues (finite or infinite) with up to $2L$ right and $2L$ left eigenvectors. If there are more than L eigenvectors, then they cannot, of course, be linearly independent [29]. In the current context, since the leading coefficient matrix of the $\lambda$-matrix is nonsingular, the QEP has $2L$ finite eigenvalues. Because the three coefficient matrices of the $\lambda$-matrix are symmetric, the eigenvalues are real or come in pairs $(\lambda, \lambda^{*})$ [29], where the superscript $^{*}$ denotes the complex conjugate operator.

An important observation regarding the QEP is that the linearization process is not unique. It is said that a matrix represents a linearization of the -matrix if the eigenvalues of these matrices are identical. Due to this process, the QEP can be solved with the GEP. One option is to take

It can be checked that if is an eigenvector of , then

is an eigenvector of . This process corresponds to the symmetric linearization since both and are symmetric. The accuracy of this approach will depend on the conditioning of and . Another possibility is to take

where is the identity matrix. This linearization is not symmetric. Different linearizations may yield different results; therefore, choosing the right one is important.
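To illustrate how a linearization turns a QEP into an ordinary eigenvalue problem, the following generic sketch uses the standard first companion form for a quadratic matrix polynomial $\lambda^2 \mathbf{A}_2 + \lambda \mathbf{A}_1 + \mathbf{A}_0$ with nonsingular $\mathbf{A}_2$. It is not claimed to match either of the specific linearizations discussed above. The coefficient names and the small diagonal test case are assumptions.

```python
import numpy as np

def solve_qep(A0, A1, A2):
    """Eigenpairs of Q(lam) = lam^2 A2 + lam A1 + A0 via a companion linearization."""
    L = A0.shape[0]
    A2_inv = np.linalg.inv(A2)                 # requires a nonsingular leading coefficient
    # Companion matrix acting on z = [v; lam * v], of size 2L x 2L.
    C = np.block([[np.zeros((L, L)), np.eye(L)],
                  [-A2_inv @ A0, -A2_inv @ A1]])
    lam, Z = np.linalg.eig(C)
    return lam, Z[:L, :]                       # the top half of z is the QEP eigenvector v

# Hypothetical decoupled example: roots of lam^2 - 3 lam + 2 and lam^2 - 7 lam + 12.
A2 = np.eye(2)
A1 = np.diag([-3.0, -7.0])
A0 = np.diag([2.0, 12.0])
lam, V = solve_qep(A0, A1, A2)
lam_min = lam[np.argmin(lam.real)]             # eigenvalue with the smallest real part
```

A problem of size $L$ yields $2L$ eigenvalues, and the one with the smallest real part can then be selected, as required by the QEP-based regularization.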

The eigenvalues of can be ordered as

where denotes the real part of a complex number. Then, the regularized Wiener filter with the QEP is

Now, if the eigenvalues with the P smallest real parts are considered, another version of the Wiener filter is obtained

where

7. Simulations

In this section, simulation results are provided to support the main theoretical findings related to the regularization of the Wiener filter, which were presented in Section 4, Section 5 and Section 6. All the experiments were performed using MATLAB R2018b on an Asus GL552VX device (Windows 10 OS), having an Intel Core i7-6700HQ CPU @ 2.60 GHz, with 4 Cores, 8 Logical Processors, and 16 GB of RAM.

7.1. Conventional Wiener Filter with $\ell_2$-Norm Regularization

First, it is important to assess the performance of the conventional Wiener filter, which depends on several important factors, such as the accuracy of the statistical estimates, the SNR, and the regularization parameter. The performance measure is the normalized misalignment (in dB), which is evaluated based on (16). The input signal, , is an AR(1) process with a pole at 0.9, while the additive noise, , is white and Gaussian, and different values of the SNR are used. The unknown system, , is the first impulse response from G168 Recommendation [33], which is padded with zeros up to the length . It represents a network echo path, which is sparse in nature. Its sparsity measure can be evaluated based on (15) and results in .

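The first critical factor that influences the overall performance of the Wiener filter is the accuracy of the statistical estimates, namely the covariance matrix, $\mathbf{R}_{\mathbf{x}}$, and the cross-correlation vector, $\mathbf{p}_{\mathbf{x}d}$. Let us consider that N data samples are used to estimate these quantities, which result in

$$
\hat{\mathbf{R}}_{\mathbf{x}} = \frac{1}{N} \sum_{k=1}^{N} \mathbf{x}(k) \mathbf{x}^T(k), \tag{63}
$$

$$
\hat{\mathbf{p}}_{\mathbf{x}d} = \frac{1}{N} \sum_{k=1}^{N} \mathbf{x}(k) d(k). \tag{64}
$$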
A higher value of N (as compared to the filter length L) leads to a reliable set of estimates. On the other hand, when a low quantity of data samples is used for the estimation of the statistics (i.e., ), the conventional Wiener filter is not able to provide an accurate solution. Such a scenario is very challenging in different practical applications, where only an incomplete set of data/information is available. Moreover, in the framework of a low SNR environment, the challenges become even more apparent.
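The estimation step can be sketched as follows, assuming plain sample averages over N input vectors with zero initial conditions. The AR(1) input with a pole at 0.9 follows the setup described in this section, while the short impulse response, the number of samples, the noise level, and the regularization value are hypothetical.

```python
import numpy as np

def estimate_statistics(x, d, L):
    """Sample covariance matrix and cross-correlation vector from N data samples."""
    N = len(x)
    x_pad = np.concatenate([np.zeros(L - 1), x])      # zero initial conditions
    # Row k holds the input vector [x(k), x(k-1), ..., x(k-L+1)].
    X = np.stack([x_pad[k:k + L][::-1] for k in range(N)])
    return X.T @ X / N, X.T @ d / N

rng = np.random.default_rng(0)
N, L = 200, 8
u = rng.standard_normal(N)
x = np.empty(N)
x[0] = u[0]
for k in range(1, N):
    x[k] = 0.9 * x[k - 1] + u[k]                      # AR(1) input with a pole at 0.9

h = np.array([0.0, 0.0, 1.0, -0.5, 0.0, 0.2, 0.0, 0.0])   # hypothetical impulse response
y = np.convolve(x, h)[:N]                             # noise-free system output
w = rng.standard_normal(N) * np.sqrt(np.var(y) / 100.0)   # white noise, roughly 20 dB SNR
d = y + w

R_hat, p_hat = estimate_statistics(x, d, L)
h_hat = np.linalg.solve(R_hat + 1e-6 * np.eye(L), p_hat)  # regularized Wiener estimate
```

Reducing `N` toward `L` makes the sample covariance matrix poorly conditioned, which is the regime in which the choice of the regularization parameter matters most.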

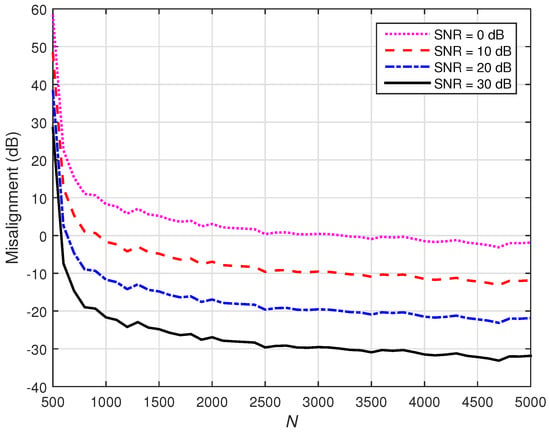

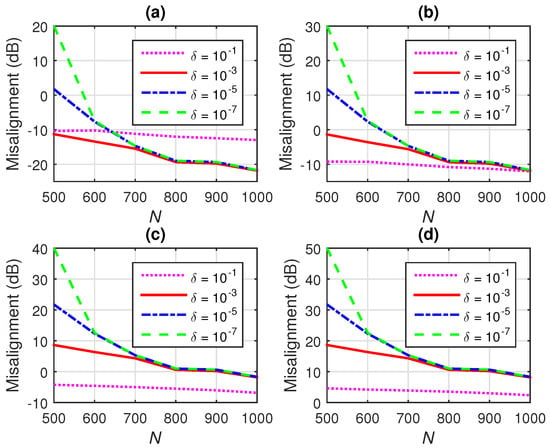

In order to highlight these aspects, the performance of the conventional Wiener filter depending on N and for different SNRs is illustrated. Having available the statistical estimates from (63) and (64), the conventional Wiener solution is obtained based on (19). In this simulation, a very small value of the regularization parameter is used, i.e., . The results are presented in Figure 2. As expected, a larger value of N improves the Wiener solution, while the accuracy is also influenced by a lower SNR. The critical scenario appears for , where the conventional Wiener filter is not able to obtain a reliable solution even for a high SNR.

Figure 2.

Misalignment of the conventional Wiener filter with -norm regularization for different values of N and SNRs, using .

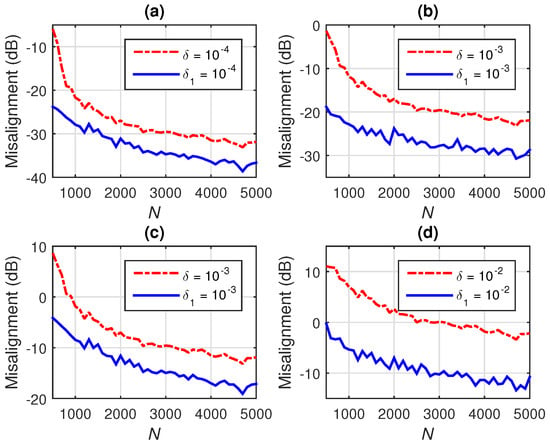

Next, the influence of the regularization parameter, , on the performance of the conventional Wiener filter is evaluated in different SNR conditions. First, in Figure 3a,b, good SNR conditions are considered, by setting dB and dB, respectively, while choosing different values for . As it can be noticed, the influence of the regularization parameter becomes more apparent when using a low quantity of data (e.g., ) to estimate the statistics. In this case, a larger value of can improve the accuracy of the solution. The influence of the regularization parameter becomes less important for ; in these conditions, a larger value of can even bias the Wiener solution. Nevertheless, in low SNR environments, a larger value of the regularization parameter is required. This is supported in Figure 3c,d, in which the is set to 10 dB and 0 dB, respectively. As it can be observed, the largest value of improves the performance for any value of N. However, the -norm regularization used in (19) does not exploit the sparse character of the system, which is taken into account within the -norm framework presented in Section 4. Details of these results [focusing on the initial parts of the plots from Figure 3a–d] are shown in Figure 4a–d.

Figure 3.

Misalignment of the conventional Wiener filter with -norm regularization for different values of N and , using (a) dB, (b) dB, (c) dB, and (d) dB.

Figure 4.

Details of Figure 3 (focusing on the initial parts of the plots), showing the misalignment of the conventional Wiener filter with -norm regularization for different values of N and , using (a) dB, (b) dB, (c) dB, and (d) dB.

7.2. Iterative Wiener Filter with $\ell_1$-Norm Regularization

The iterative $\ell_1$-norm regularization from (26) can improve the Wiener solution for sparse system identification. This is supported in the next set of experiments, where the iterative $\ell_1$-norm regularization is compared to the conventional $\ell_2$-norm regularization of the Wiener filter.

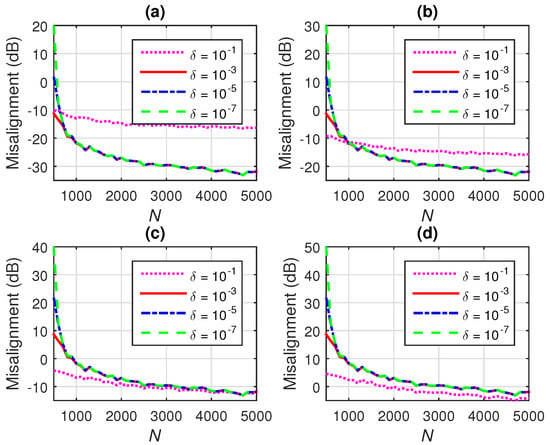

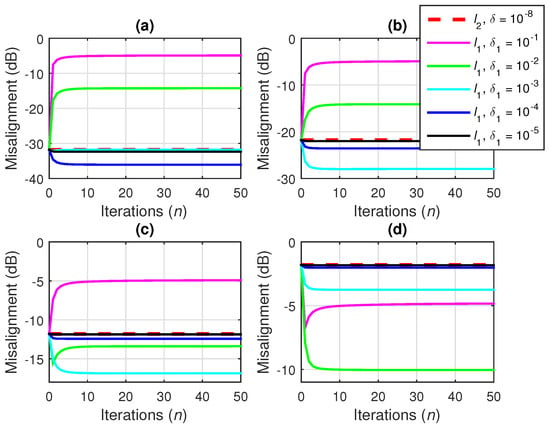

In Figure 5, different are set and a large quantity of data is used to estimate the statistics, i.e., . The conventional Wiener filter uses , while the iterative -norm regularization involves different values of the parameter from (26). As it can be observed in Figure 5 (where ), for some values of , the iterative -norm regularization leads to better performance. Details of these results [focusing on the overlapping curves in Figure 5a–d] are shown in Figure 6a–d.

Figure 5.

Misalignment of the conventional Wiener filter with -norm regularization using and the Wiener filter with iterative -norm regularization using different values of , for and (a) dB, (b) dB, (c) dB, and (d) dB.

Figure 6.

Details of Figure 5 (focusing on the overlapping curves), showing the misalignment of the conventional Wiener filter with -norm regularization using and the Wiener filter with iterative -norm regularization using different values of , for and (a) dB, (b) dB, (c) dB, and (d) dB.

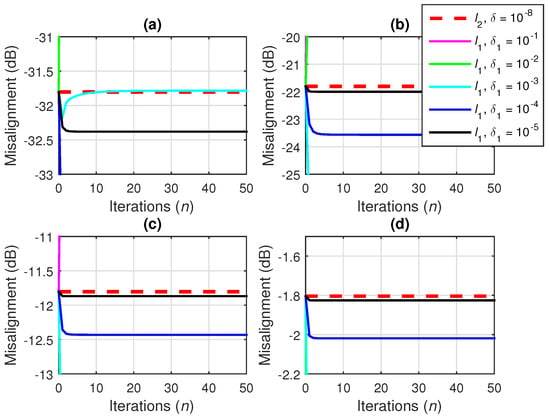

This is more apparent for low values of N, e.g., when , as illustrated in Figure 7. In this case, the conventional -norm regularization is clearly outperformed by the iterative -norm version, for all the values of .

Figure 7.

Misalignment of the conventional Wiener filter with -norm regularization using and the Wiener filter with iterative -norm regularization using different values of , for and (a) dB, (b) dB, (c) dB, and (d) dB.

The SNR also influences the performance of the iterative $\ell_1$-norm regularization, and the value of the regularization parameter in (26) depends on the noise level. As can be observed in Figure 5, Figure 6 and Figure 7, a higher value of this parameter is required when the SNR decreases. On the other hand, the gain of the iterative $\ell_1$-norm regularization is more apparent for low SNRs, where it performs much better than the conventional Wiener filter using the $\ell_2$-norm regularization.

Finding an optimal value of is beyond the scope of this paper, in which the main purpose is to outline the features and the potential of the -norm regularization for the identification of sparse systems. However, based on the previous experimental results, some empirical ranges for the values of this parameter could be recommended, depending on the SNR. For example, in good SNR conditions (e.g., dB), a reliable choice could be

On the other hand, in moderate or low SNR environments (e.g., dB), another option could be

The validity of these recommended choices are verified in Figure 8, in which the iterative -norm regularization is compared with the conventional -norm regularization in various SNR conditions and for different values of N. Here, , where is computed based on (65) and (66), depending on the SNR. As it can be inferred, the iterative -norm regularization works significantly better in all the cases, but its gain is more apparent for low SNRs. Furthermore, the results indicate a good behavior for low values of N, especially for the critical case when .

7.3. Wiener Filter Based on the Kronecker Product Decomposition

The Wiener filter based on the Kronecker product decomposition (KPD) presented in Section 5 involves smaller data structures with respect to the conventional Wiener filter. While the conventional solution is obtained by directly solving (19), the KPD-based Wiener filter iteratively combines the solutions from (41) and (42). In other words, instead of dealing with a single filter of length (as in the conventional case), the KPD approach handles two shorter filters of lengths and , with . The advantages become more apparent for the identification of long-length impulse responses, such as in echo cancellation [10].

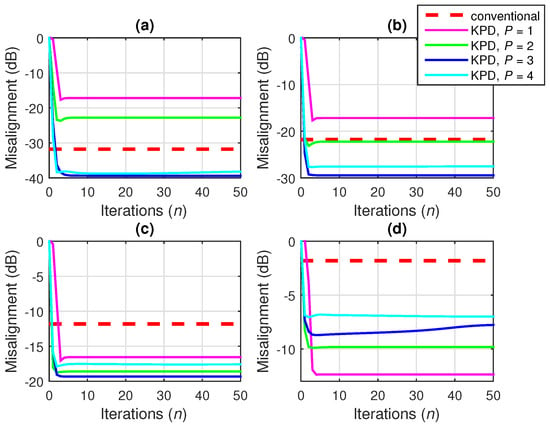

In Figure 9, the conventional Wiener filter is compared with the KPD-based solution using different values of the decomposition parameter P. The regularization parameter is set to for both algorithms. In this simulation, N is set to 5000 and different SNRs are used. As shown in Section 5, the value of P is related to the rank of the matrix , which reshapes the impulse response . In this scenario, the length of is , so that the values and can be used. In this case, . As it can be observed in Figure 9a–c, the performance of the KPD-based Wiener filter is improved as the value of P increases up to the rank of . The advantages become more apparent for lower SNRs, where the KPD solution outperforms the conventional Wiener filter for most of the values of P. It is interesting to notice that in heavy noise conditions (e.g., dB), a lower value of P could be used, as indicated in Figure 9d.

Figure 9.

Misalignment of the conventional Wiener filter and the KPD-based Wiener filter using different values of P, for and (a) dB, (b) dB, (c) dB, and (d) dB.

In terms of the quantity of data available for the estimation of the statistics, the value corresponds to a favorable scenario, since , so that in this case. Therefore, a reliable set of statistical estimates [see (63) and (64)] are available. However, even in this favorable scenario, the performance of the conventional Wiener filter is still influenced by the SNR level, as already supported in Figure 2. In other words, the accuracy of its solution is worse when the SNR decreases, as can also be inferred from Figure 9. On the other hand, the KPD-based Wiener filter operates with smaller data structures, and it is less influenced by the accuracy of the statistical estimates, as compared to the conventional Wiener filter. Consequently, it is also more robust to the SNR level. Especially in noisy conditions, i.e., with moderate and low SNRs (e.g., 10 dB and 0 dB, respectively), the KPD-based Wiener filter (for any value of P) outperforms the conventional Wiener solution.

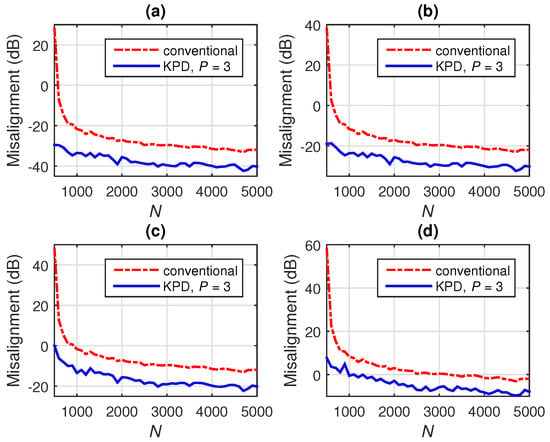

Next, the performance of the KPD-based Wiener filter (using ) is evaluated for different values of N and in different SNR conditions, using the conventional Wiener filter as a benchmark for comparison. As it can be observed in Figure 10, the KPD approach outperforms the conventional solution for all the values of N and in all SNR conditions. The advantages become more apparent for low values of N, i.e., .

Figure 10.

Misalignment of the conventional Wiener filter and the KPD-based Wiener filter using , for different values of N and (a) dB, (b) dB, (c) dB, and (d) dB.

7.4. Wiener Filter with QEP Regularization

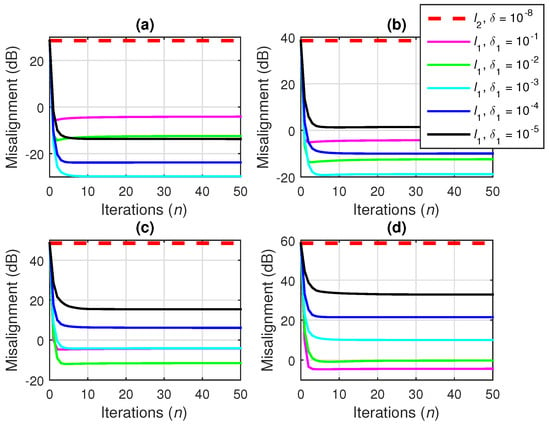

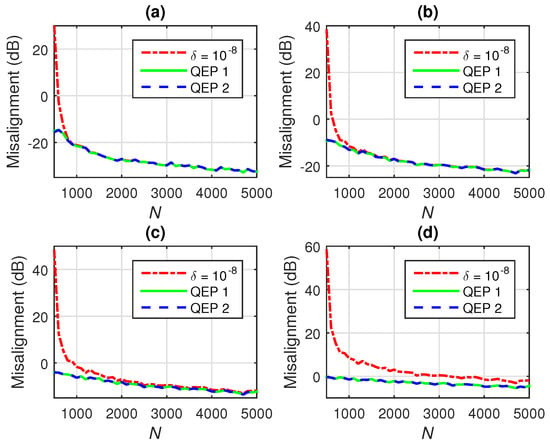

Finally, the last experiment is dedicated to the QEP regularization from (60), which is explained in Section 6. The linearization methods from (54), (55), (57), and (58) are referred in Figure 11 as QEP 1 and QEP 2, respectively. The conventional Wiener filter using is considered for comparison.

In Figure 11, different values of N and different SNRs are used. It can be inferred that the QEP regularization outperforms the regular -norm regularization, especially for low values of N. In heavy noise conditions (e.g., dB), the QEP approach leads to better results for all the values of N, as indicated in Figure 11d. It can also be observed that the two linearization methods perform in a very similar way. It is interesting to compare the results from Figure 11 with the plots from Figure 3. In this context, it can be observed that the QEP regularization is close to the optimal -norm regularization of the Wiener filter.

8. Conclusions

The regularization process is a critical step in any ill-posed problem, especially in noisy environments. This important step is also required in conjunction with the well-known Wiener filter, to avoid an ill-conditioned matrix inversion, as well as to control and improve its performance in low SNR conditions. In this paper, the focus was on several regularization techniques of the Wiener filter.

As shown in Section 4, the classical ℓ₂-norm (or Tikhonov) regularization performs reasonably well only in good or moderate SNR environments. For sparse systems, however, the overall performance of the Wiener filter can be improved by using a regularization technique based on the ℓ₁ norm. The iterative solution proposed in this paper outperforms the ℓ₂-norm regularization, especially in challenging conditions, i.e., for low SNRs and/or when using less accurate statistical estimates.

The KPD-based approach is also suitable when only a small quantity of data is available for the estimation of the statistics, as supported in Section 5. For the identification of low-rank systems, the resulting iterative KPD-based Wiener filter can lead to significantly better results than the conventional Wiener filter. This KPD-based version combines the solutions provided by two shorter filters, while the conventional Wiener filter operates with a single, much longer filter. Consequently, the KPD-based approach is well suited to the identification of long impulse responses.
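The idea of replacing one long filter with pairs of shorter ones can be sketched as follows. This is only an illustration of the Kronecker product decomposition itself, computed here from the SVD of a reshaped impulse response; it is not the iterative KPD-based Wiener filter. The function name `kpd_approx`, the lengths `L1` and `L2`, the number of terms `P`, and the rank-2 test system are all assumptions made for the example.

```python
import numpy as np

def kpd_approx(h, L1, L2, P):
    """Approximate h (length L1*L2) by a sum of P Kronecker products."""
    M = h.reshape(L2, L1)  # M[j, i] = h[j*L1 + i]
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    h2 = [np.sqrt(s[p]) * U[:, p] for p in range(P)]  # P short filters of length L2
    h1 = [np.sqrt(s[p]) * Vt[p] for p in range(P)]    # P short filters of length L1
    # kron(a, b) reshaped to (L2, L1) equals outer(a, b), so this sum
    # is the rank-P truncation of M written back as a vector.
    h_hat = sum(np.kron(a, b) for a, b in zip(h2, h1))
    return h_hat, h1, h2

rng = np.random.default_rng(0)
L1, L2 = 8, 8
# Hypothetical rank-2 impulse response of length L1*L2 = 64.
h = sum(np.kron(rng.standard_normal(L2), rng.standard_normal(L1)) for _ in range(2))

h_hat, h1, h2 = kpd_approx(h, L1, L2, P=2)
print("reconstruction error:", np.linalg.norm(h - h_hat))
print("short-filter coefficients:", 2 * (L1 + L2), "vs full length:", L1 * L2)
```

For a system whose reshaped impulse response has low rank, a small `P` reproduces it up to rounding while estimating far fewer coefficients, which is why fewer data are needed for reliable statistics.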

Finally, the QEP regularization technique presented in Section 6 improves the performance of the Wiener filter (compared to the ℓ₂-norm regularization), especially in noisy environments (i.e., low SNRs), but also when using less accurate estimates of the statistics. Experimental results have supported the main theoretical developments and indicated the robust performance of these regularization techniques.

Author Contributions

Conceptualization, J.B.; validation, L.-M.D.; methodology, C.P.; formal analysis, S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a grant from the Romanian Ministry of Education and Research, CNCS–UEFISCDI, Project Number PN-III-P1-1.1-PD-2019-0340, within PNCDI III.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wiener, N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series; John Wiley & Sons: New York, NY, USA, 1949.

- Ljung, L. System Identification: Theory for the User, 2nd ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 1999.

- Haykin, S. Adaptive Filter Theory, 4th ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2002.

- Benesty, J.; Huang, Y. (Eds.) Adaptive Signal Processing—Applications to Real-World Problems; Springer: Berlin, Germany, 2003.

- Diniz, P.S.R. Adaptive Filtering: Algorithms and Practical Implementation, 4th ed.; Springer: New York, NY, USA, 2013.

- Benesty, J.; Gänsler, T. Computation of the condition number of a nonsingular symmetric Toeplitz matrix with the Levinson–Durbin algorithm. IEEE Trans. Signal Process. 2006, 54, 2362–2364.

- Benesty, J.; Paleologu, C.; Ciochină, S. On regularization in adaptive filtering. IEEE Trans. Audio Speech Lang. Process. 2011, 19, 1734–1742.

- Benesty, J.; Paleologu, C.; Ciochină, S. On the identification of bilinear forms with the Wiener filter. IEEE Signal Process. Lett. 2017, 24, 653–657.

- Zakharov, Y.V.; Tozer, T.C. Multiplication-free iterative algorithm for LS problem. IEE Electron. Lett. 2004, 40, 567–569.

- Paleologu, C.; Benesty, J.; Ciochină, S. Linear system identification based on a Kronecker product decomposition. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 1793–1808.

- Constantin, I.; Richard, C.; Lengellé, R.; Soufflet, L. Nonlinear regularized Wiener filtering with kernels: Application in denoising MEG data corrupted by ECG. IEEE Trans. Signal Process. 2006, 54, 4796–4806.

- Aguena, M.L.S.; Mascarenhas, N.D.A.; Anacleto, J.C.; Fels, S.S. MRI iterative super resolution with Wiener filter regularization. In Proceedings of the XXVI Conference on Graphics, Patterns and Images, Arequipa, Peru, 5–8 August 2013; pp. 155–162.

- Li, F.; Lv, X.-G.; Denga, Z. Regularized iterative Weiner filter method for blind image deconvolution. J. Comput. Appl. Math. 2018, 336, 425–438.

- Benesty, J.; Paleologu, C.; Dogariu, L.M.; Ciochină, S. Identification of linear and bilinear systems: A unified study. Electronics 2021, 10, 1790.

- Mitter, S.K. Filtering and stochastic control: A historical perspective. IEEE Control Syst. Mag. 1996, 16, 67–76.

- Anderson, B.D.O. From Wiener to hidden Markov models. IEEE Control Syst. Mag. 1999, 19, 41–51.

- Glentis, G.-O.; Berberidis, K.; Theodoridis, S. Efficient least squares adaptive algorithms for FIR transversal filtering. IEEE Signal Process. Mag. 1999, 16, 13–41.

- Benesty, J.; Sondhi, M.M.; Huang, Y. (Eds.) Springer Handbook of Speech Processing; Springer: Berlin, Germany, 2008.

- Pogula, R.; Kumar, T.K.; Albu, F. Robust sparse normalized LMAT algorithms for adaptive system identification under impulsive noise environments. Circuits Syst. Signal Process. 2019, 38, 5103–5134.

- Golub, G.H.; Van Loan, C.F. Matrix Computations, 3rd ed.; The Johns Hopkins University Press: Baltimore, MD, USA, 1996.

- Hoyer, P.O. Non-negative matrix factorization with sparseness constraints. J. Mach. Learn. Res. 2001, 49, 1208–1215.

- Paleologu, C.; Benesty, J.; Ciochină, S. Sparse Adaptive Filters for Echo Cancellation; Morgan & Claypool Publishers: San Rafael, CA, USA, 2010.

- Hiriart-Urruty, J.B.; Lemaréchal, C. Convex Analysis and Minimization Algorithms II: Advanced Theory and Bundle Methods; Springer: New York, NY, USA, 1993.

- Natarajan, B.K. Sparse approximate solutions to linear systems. SIAM J. Comput. 1995, 24, 227–234.

- Donoho, D. Compressed sensing. IEEE Trans. Inf. Theory 2006, 52, 1289–1306.

- Ma, S.; Goldfarb, D.; Chen, L. Fixed point and Bregman iterative methods for matrix rank minimization. Math. Program. Ser. A 2011, 128, 321–353.

- Fazel, M.; Hindi, H.; Boyd, S. A rank minimization heuristic with application to minimum order system approximation. In Proceedings of the American Control Conference, Arlington, VA, USA, 25–27 June 2001; Volume 6, pp. 4734–4739.

- Chen, Y.; Chi, Y. Harnessing structures in big data via guaranteed low-rank matrix estimation: Recent theory and fast algorithms via convex and nonconvex optimization. IEEE Signal Process. Mag. 2018, 35, 14–31.

- Tisseur, F.; Meerbergen, K. The quadratic eigenvalue problem. SIAM Rev. 2001, 43, 235–286.

- Chen, X.; Benesty, J.; Huang, G.; Chen, J. On the robustness of the superdirective beamformer. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 838–849.

- Gander, W. Least squares with a quadratic constraint. Numer. Math. 1981, 36, 291–307.

- Gander, W.; Golub, G.H.; von Matt, U. A constrained eigenvalue problem. Linear Algebra Appl. 1989, 114–115, 815–839.

- Digital Network Echo Cancellers; ITU-T Recommendations G.168; ITU: Geneva, Switzerland, 2002.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).