An ARCore-Based Augmented Reality Campus Navigation System

Abstract

1. Introduction

- (1)

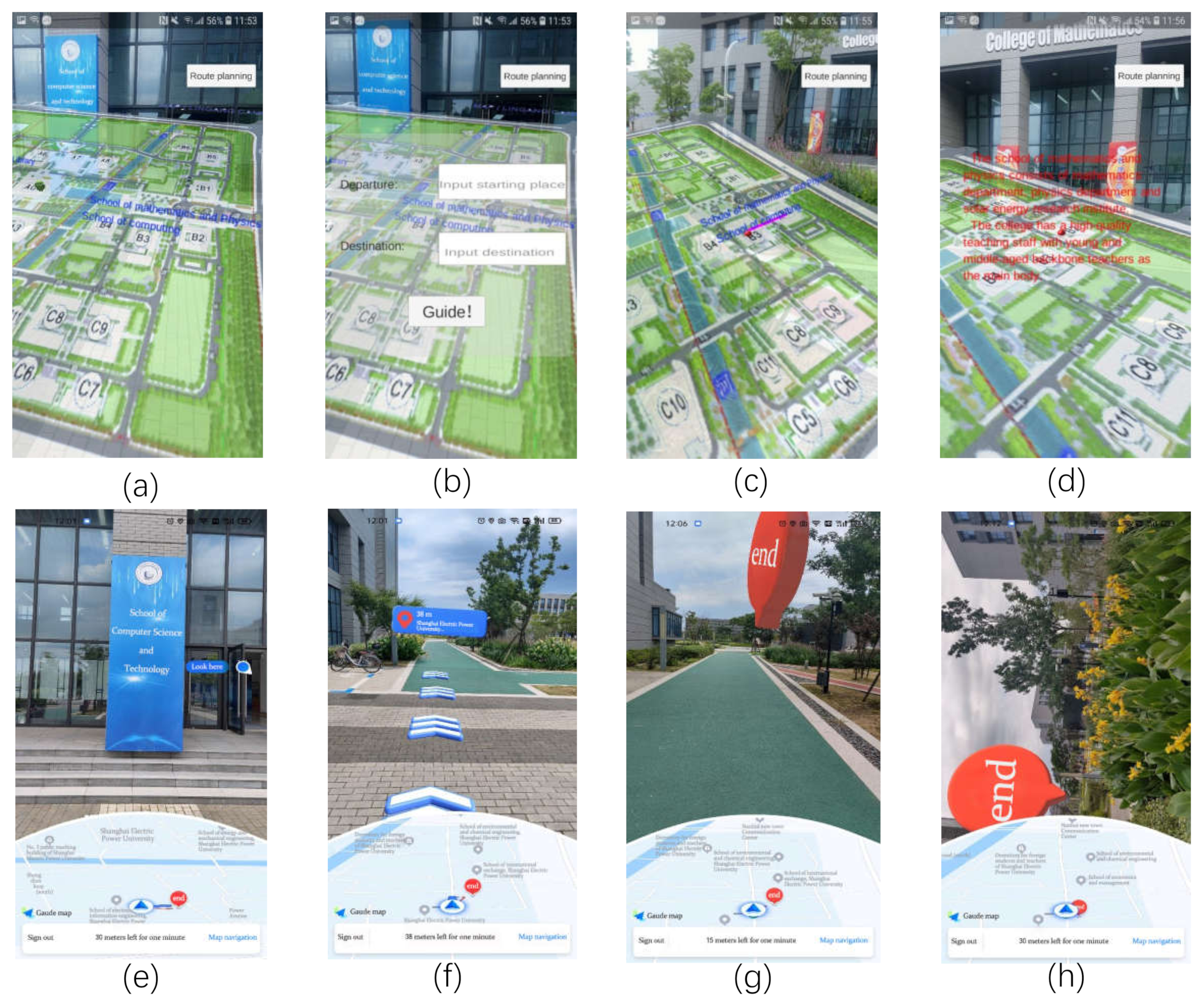

- During the whole process, the system superimposes the virtual route into the real environment by the technology of AR. Meanwhile, 3D augmented information is integrated with the real buildings by using the camera in order to enhance the user’s sensory experience.

- (2) The navigation system uses visual odometry and inertial sensors for localization and map building. This addresses the problem that GPS cannot provide users with sufficiently accurate localization outdoors. Because this approach does not depend on satellite signals, the system can provide high-precision navigation in both indoor and outdoor environments.

- (3)

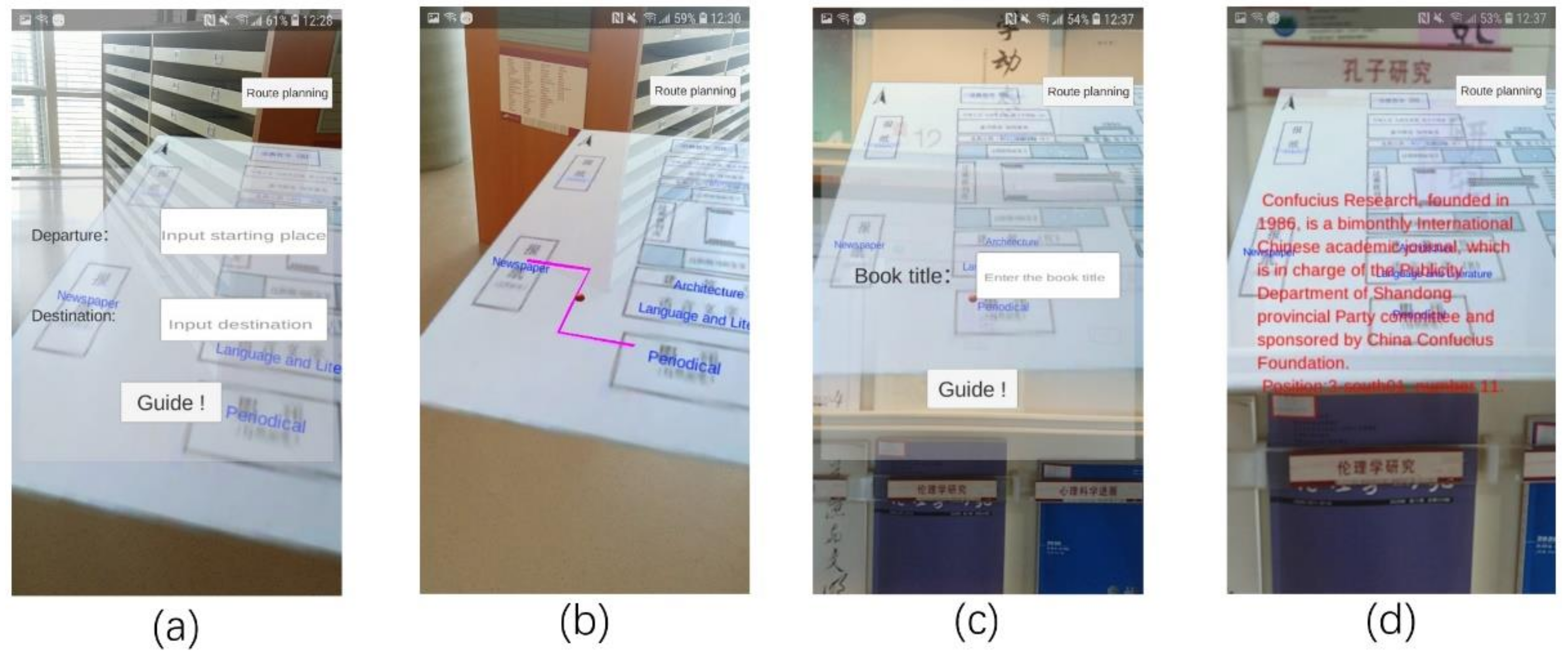

- The system uses Unity as the development platform. By writing corresponding scripts, it can design plentiful human-computer interaction functions according to the different scenarios and user needs.

- (4) Through its database, the system can provide users with a wide range of augmented reality content, such as 3D text, voice and video, giving users an interactive experience during navigation.

2. Related Work

3. Key Technology

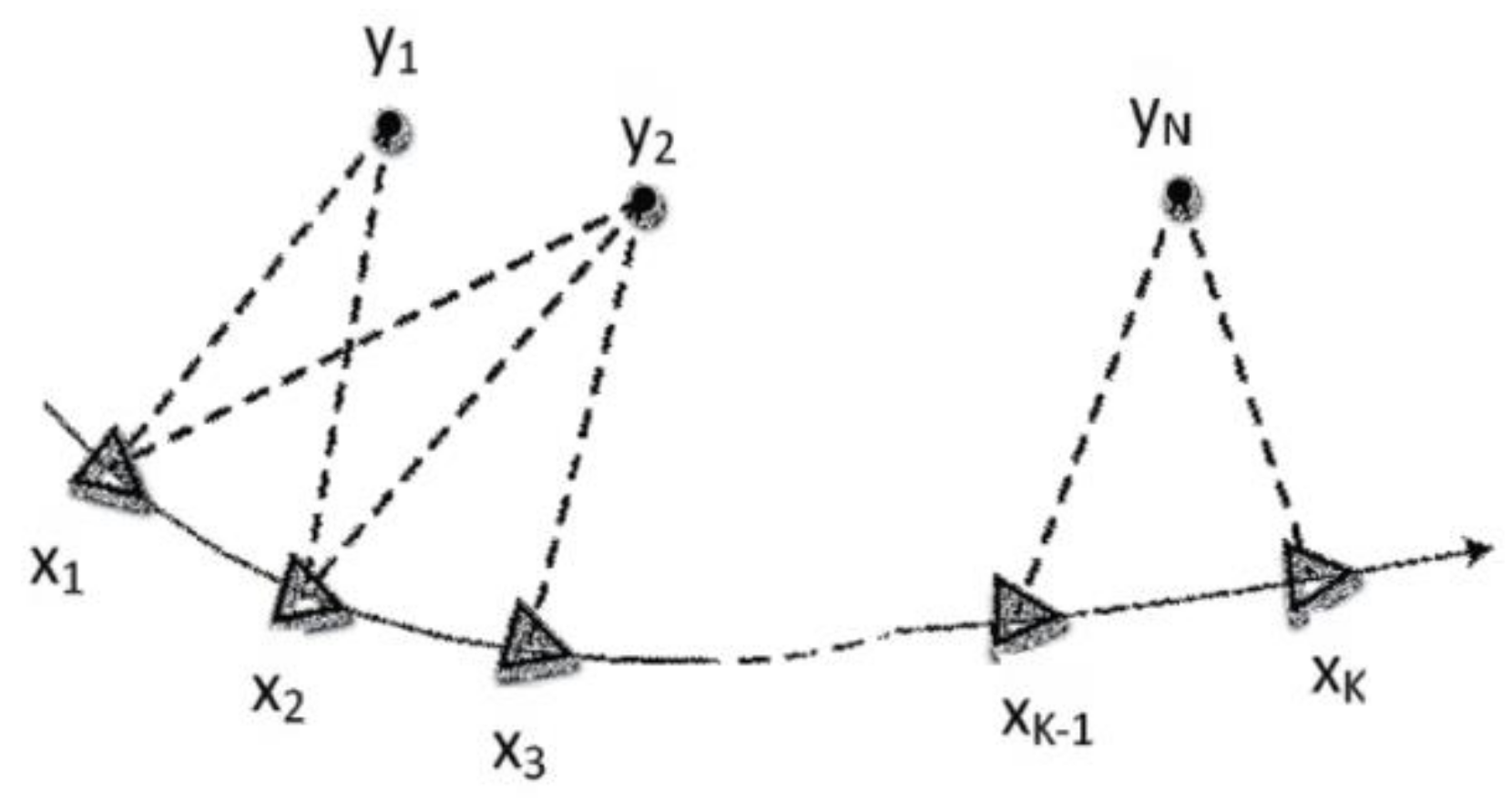

3.1. VIO State Estimation

3.2. Area Learning

4. System Implementation

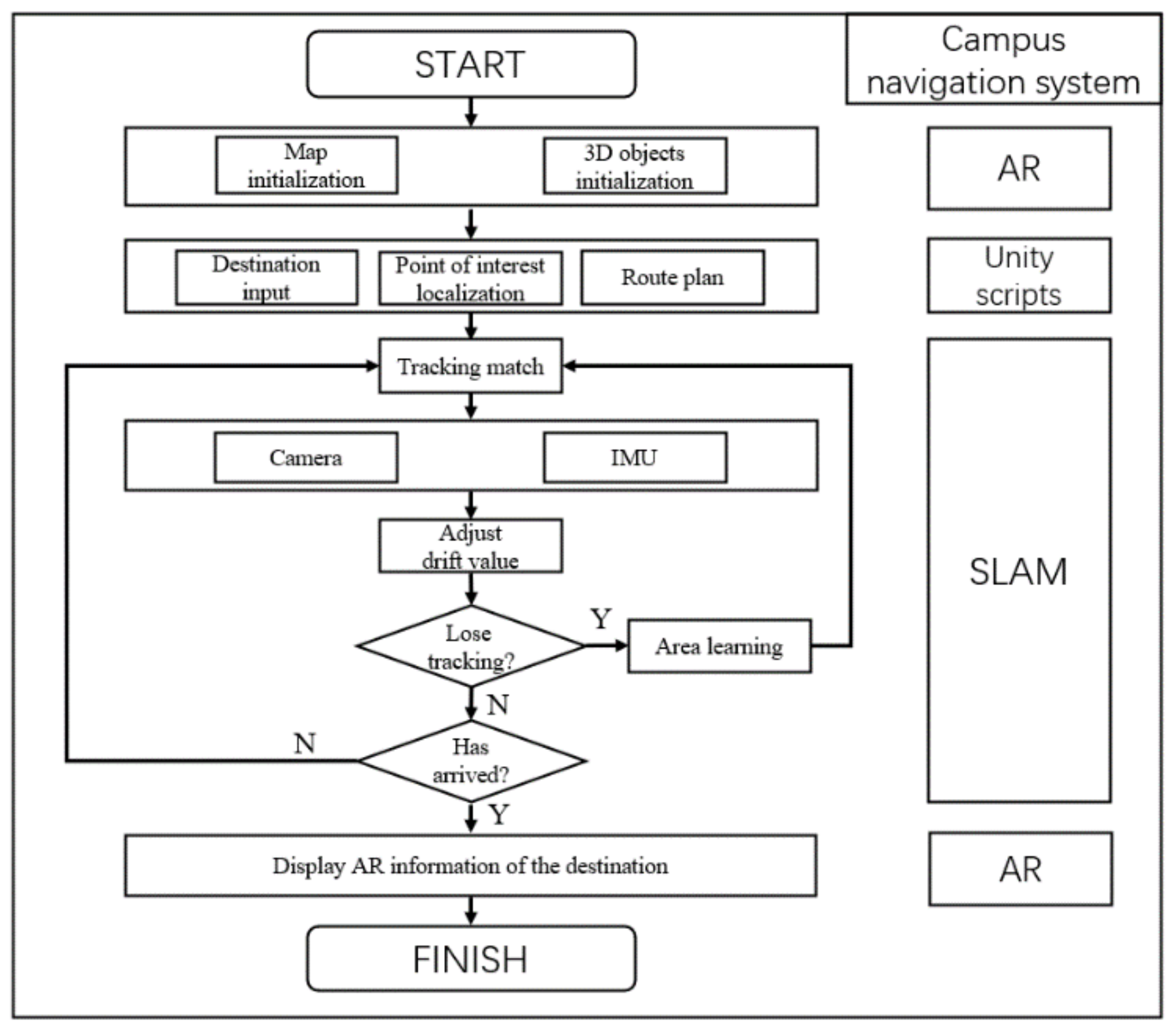

4.1. The Logical Structure of the System

4.2. The Design of System Module

4.2.1. SLAM Navigation Module

- Other Settings > Multithreaded Rendering: Off;

- Other Settings > Minimum API Level: Android 7.0 (API level 24) or higher;

- Other Settings > Target API Level: Android 7.0 or 7.1 (API level 24 or 25);

- XR Settings > ARCore (Tango) Supported: On.

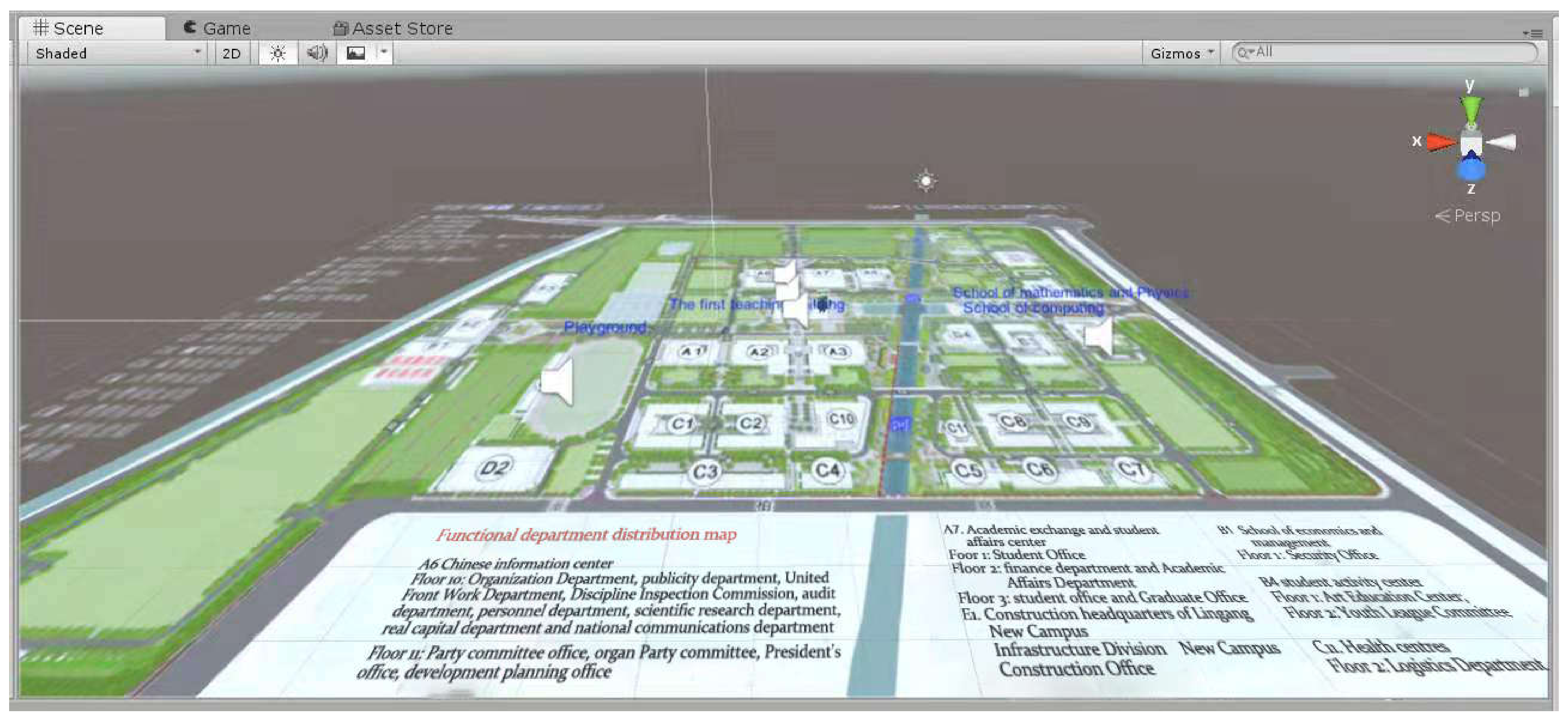

4.2.2. 3D Virtual and Reality Fusion Module

4.2.3. Unity Interactive Module

5. System Test and Result Analysis

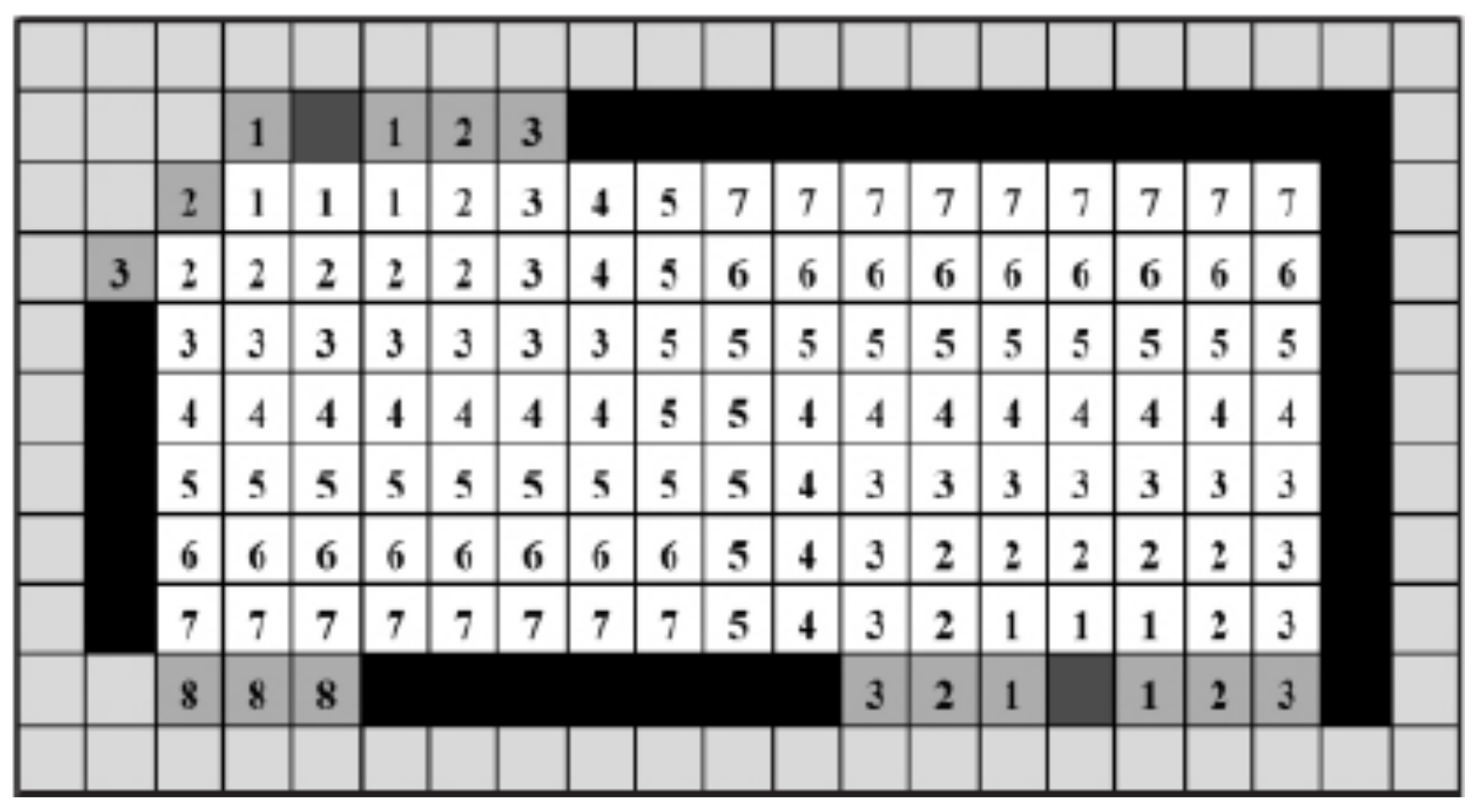

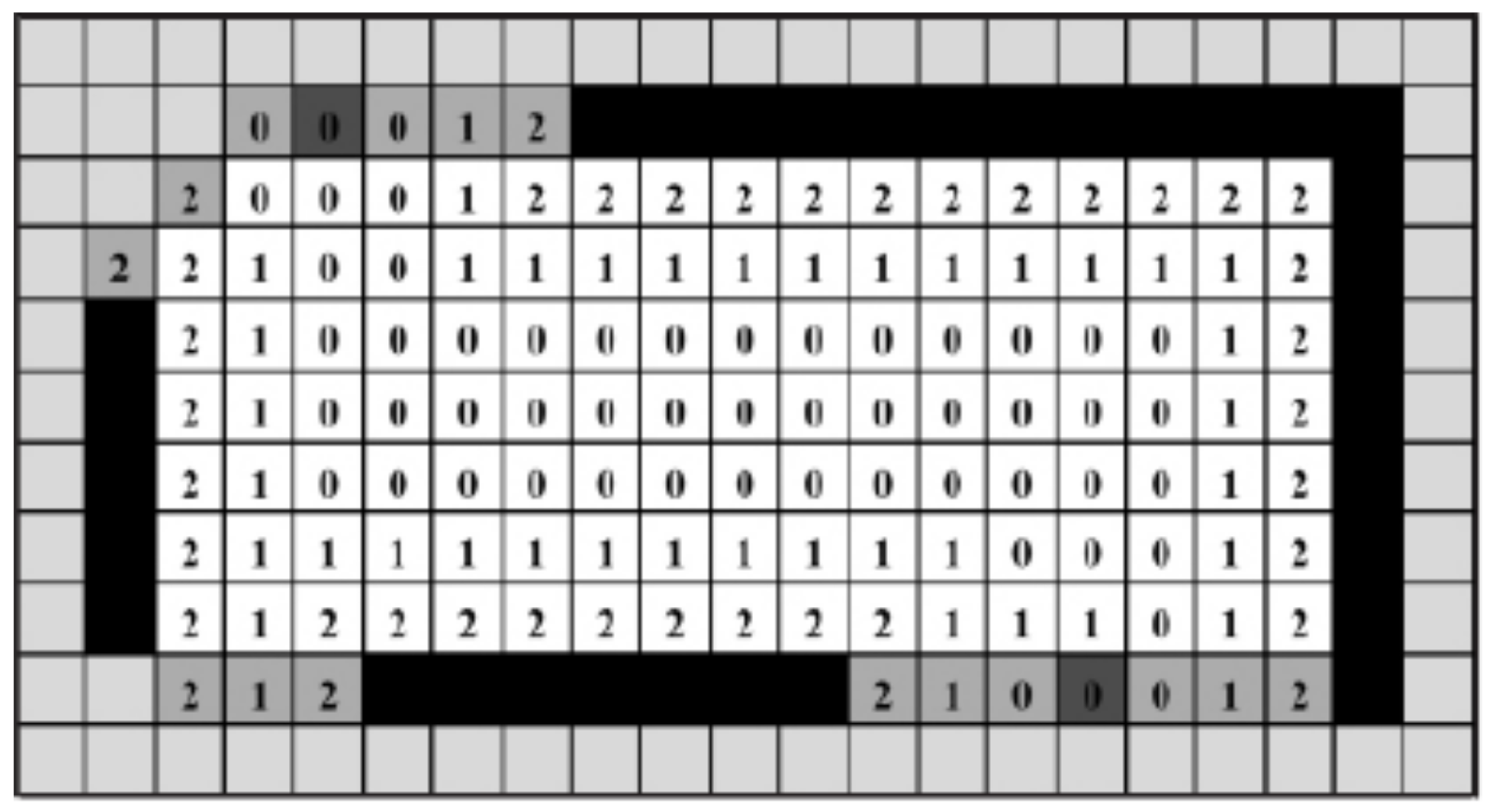

5.1. Outdoor Campus Map Testing

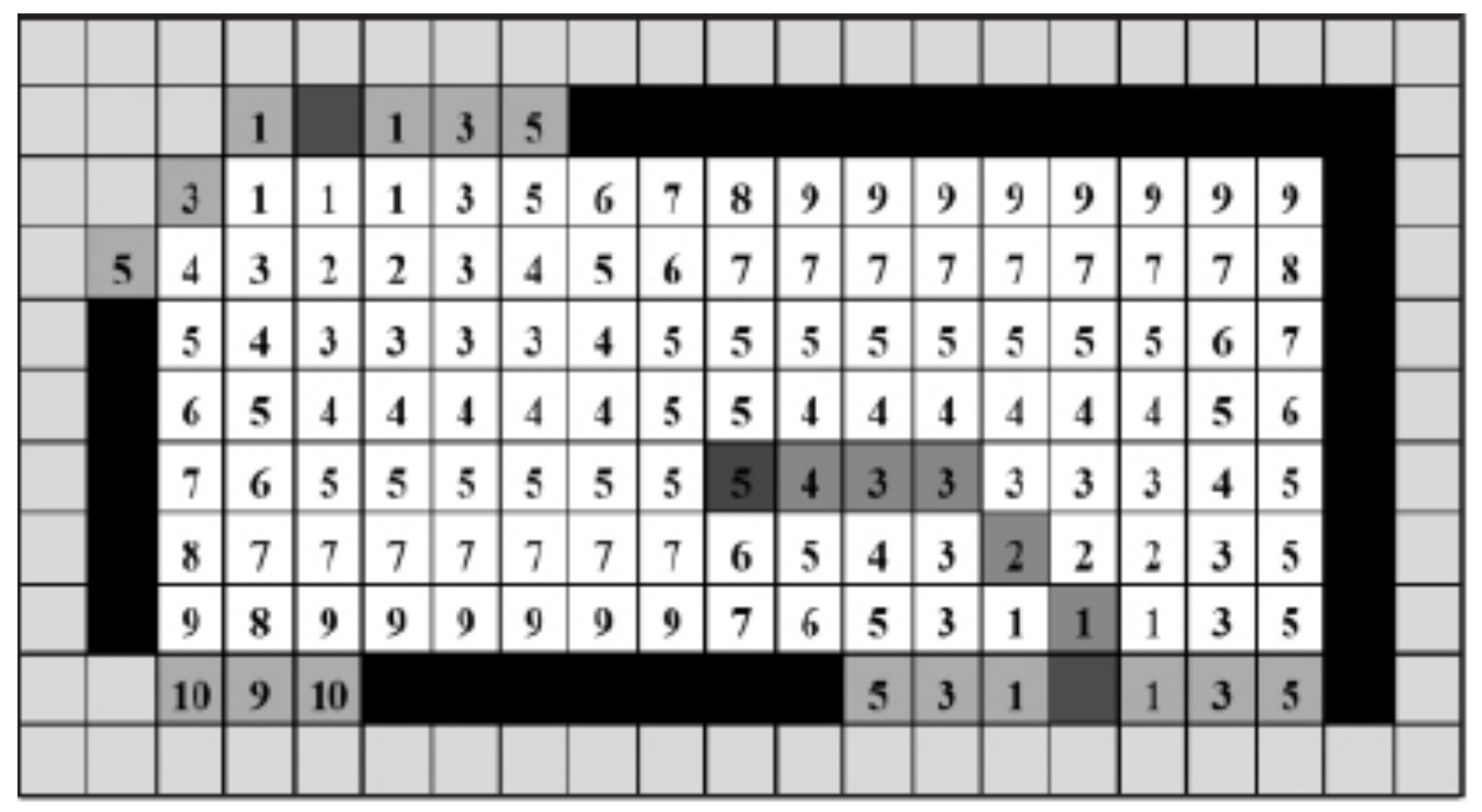

5.2. Indoor Library Floor Map Test

6. Evaluation

6.1. Participants and Test Design

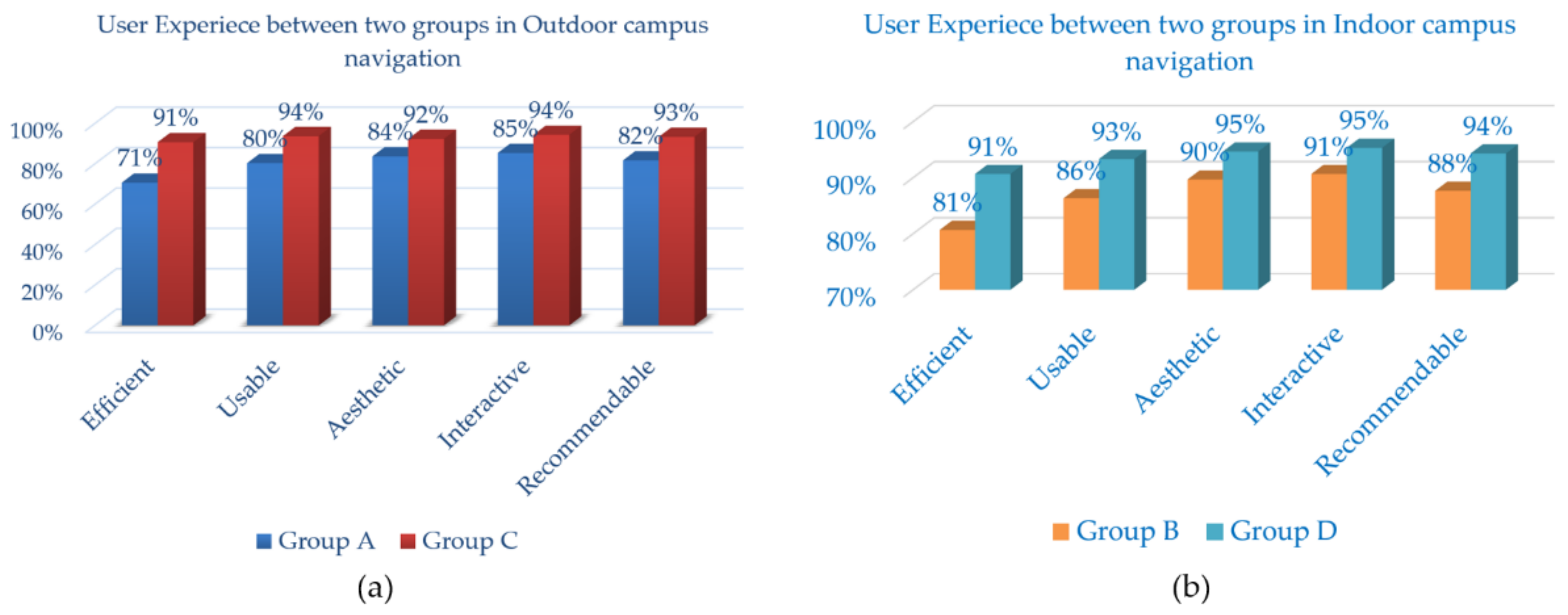

6.2. Results and Analysis

- It is encouraging that more than 71% of participants in each group agreed that the proposed system was better than conventional media in all five aspects;

- Freshmen gave higher evaluations than senior students for outdoor campus navigation in all aspects, which may be because freshmen are less familiar with the campus layout and therefore rely more heavily on the outdoor campus navigation system;

- Senior students had a less urgent need for the outdoor campus navigation system, possibly because they are more familiar with the campus layout and do not depend entirely on the navigation system.

- The p-values of the attitudes towards aesthetics and interactivity for groups A and C are greater than 0.05, suggesting that the differences between groups A and C in these aspects are not significant;

- The p-values of the attitudes towards efficiency, usability and recommendability for groups A and C are greater than 0.01 and smaller than 0.05, indicating that the differences between groups A and C in these aspects are significant at the 0.05 level.

- It is encouraging that more than 81% of participants in each group agreed that the proposed system is better than conventional media in all five aspects;

- Both freshmen and senior students gave higher evaluations for indoor campus navigation in all aspects. Although senior students are more familiar with the campus layout, they are less familiar with indoor layouts, such as the exact locations of magazines and books in the library. Therefore, the difference between freshmen and senior students in their demand for an indoor campus navigation system is small.

- The p-values of the attitudes towards usability, aesthetics, interactivity and recommendability for groups B and D are greater than 0.05, showing that the differences between groups B and D in these aspects are not significant.

- The p-value of the attitude towards efficiency for groups B and D is greater than 0.01 and smaller than 0.05, indicating that the difference between groups B and D in this aspect is significant at the 0.05 level.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Group A | Group B | Group C | Group D |
|---|---|---|---|---|
| Participants | 30 | 30 | 30 | 30 |
| Freshmen | No | No | Yes | Yes |
| Navigation | AR Outdoor | AR Indoor | AR Outdoor | AR Indoor |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, F.; Zhou, H.; Guo, L.; Chen, J.; Pei, L. An ARCore-Based Augmented Reality Campus Navigation System. Appl. Sci. 2021, 11, 7515. https://doi.org/10.3390/app11167515