Abstract

In this paper, orthogonal transforms based on newly proposed symmetric, orthogonal matrices are constructed. These transforms can be considered as generalized Walsh–Hadamard transforms. One of the important features of the presented approach is that the forward and inverse transforms are simple to calculate. The conditions for creating symmetric, orthogonal matrices are defined. It is shown that only a limited number of elements need to be selected to construct an orthogonal matrix that meets these conditions. The general form of a symmetric, orthogonal matrix in exponential form is also presented. Orthogonal basis functions based on the created matrices can be used for orthogonal expansion, leading to signal approximation. An exponential form of orthogonal sparse matrices with variable parameters is also created. Various versions of orthogonal transforms related to the created full and sparse matrices are proposed. The fast computation of the presented transforms is discussed and compared with fast algorithms for selected orthogonal transforms. Possible applications to signal approximation and examples of image spectra in the considered transform domains are presented.

1. Introduction

The literature on orthogonal transforms for signal and image processing is extensive, including the often-cited books by Ahmed and Rao [1] and Wang [2]. In this paper, a new orthogonal transform is proposed that can be treated as a generalized Walsh–Hadamard transform [3,4,5,6,7,8,9]. A modification of the proposed transform is also investigated. The transform has a simple structure. This allows both the forward and inverse transforms to be computed with fast algorithms that significantly reduce the number of required calculations. Indeed, the forward and inverse transforms have the same structure and differ only in a constant coefficient. The proposed transforms could be effectively applied in such areas of signal and image processing as watermarking [10,11,12], steganography, intelligent monitoring and others [13,14,15,16]. The general form of the transform makes it possible to select its parameters optimally for specific tasks, which suggests further potential applications. Further investigations are required to determine in which applications it is most effective.

2. Orthogonal Generalized Transform Matrix

Let us consider an elementary 2 × 2 matrix. Assume that the first row of this matrix has different elements and that the matrix is symmetric and orthogonal. Then we have:

$$\mathbf{A}_2 = \begin{bmatrix} a & b \\ b & -a \end{bmatrix} \qquad (1)$$

where $a$ and $b$ are any real numbers, $a \neq b$.

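Consider now a 4 × 4 matrix $\mathbf{A}_4$ whose first row consists of four different elements $a_0, a_1, a_2, a_3$. Such a sequence is referred to as the basis sequence of the matrix. Our aim is to create a symmetric and orthogonal matrix for the given basis sequence. This matrix consists of four elementary 2 × 2 matrices having the structure of (1), as shown below:

$$\mathbf{A}_4 = \begin{bmatrix} a_0 & a_1 & a_2 & a_3 \\ a_1 & -a_0 & a_3 & -a_2 \\ a_2 & a_3 & -a_0 & -a_1 \\ a_3 & -a_2 & -a_1 & a_0 \end{bmatrix} \qquad (2)$$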

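The matrix (2) is symmetric, i.e., it is equal to its transpose: $\mathbf{A}_4 = \mathbf{A}_4^{T}$. To obtain the condition under which matrix (2) is orthogonal, we consider the dot products of any two distinct rows $\mathbf{r}_i$ and $\mathbf{r}_j$ (or columns) of matrix (2). Each of them should satisfy $\mathbf{r}_i \cdot \mathbf{r}_j = 0$ for $i \neq j$. Four of the six products vanish identically. The remaining two give $\mathbf{r}_0 \cdot \mathbf{r}_3 = 2(a_0 a_3 - a_1 a_2)$ and $\mathbf{r}_1 \cdot \mathbf{r}_2 = 2(a_1 a_2 - a_0 a_3)$. The following condition for the basis sequence is therefore obtained to create an orthogonal matrix (2):

$$a_0 a_3 = a_1 a_2 \qquad (3)$$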
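The orthogonality argument above can be checked numerically. The following minimal Python sketch builds matrix (2) from four basis elements, assuming the layout [[A, B], [B, -A]] with 2 × 2 blocks of type (1). It then lists the pairs of rows whose dot product is not zero. The helper names `matrix4`, `dot` and `nonzero_row_products` are hypothetical, and exact `Fraction` arithmetic is used to avoid rounding noise.

```python
from fractions import Fraction


def matrix4(a0, a1, a2, a3):
    """4 x 4 matrix [[A, B], [B, -A]] built from 2 x 2 blocks of type (1)."""
    return [
        [a0, a1, a2, a3],
        [a1, -a0, a3, -a2],
        [a2, a3, -a0, -a1],
        [a3, -a2, -a1, a0],
    ]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def nonzero_row_products(m):
    """Dot products of distinct rows that are NOT zero, keyed by (i, j)."""
    n = len(m)
    products = {(i, j): dot(m[i], m[j]) for i in range(n) for j in range(i + 1, n)}
    return {pair: p for pair, p in products.items() if p != 0}


# 1, 1/2, 2, 1 satisfies a0*a3 == a1*a2 (1 == 1): every pair of rows is orthogonal.
good = matrix4(Fraction(1), Fraction(1, 2), Fraction(2), Fraction(1))
print(nonzero_row_products(good))  # {}

# Swapping the first two elements gives a0*a3 = 1/2 but a1*a2 = 2:
# only the pairs (0, 3) and (1, 2) fail, with values 2*(a0*a3 - a1*a2) = -3 and +3.
bad = matrix4(Fraction(1, 2), Fraction(1), Fraction(2), Fraction(1))
print(nonzero_row_products(bad))
```

Only the row pairs (0, 3) and (1, 2) can fail. This is why a single condition on the basis sequence suffices for N = 4.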

Our aim is to obtain a general rule for creating a symmetric and orthogonal matrix of any size N. Let us now consider a basis sequence $a_0, a_1, \ldots, a_{N-1}$ consisting of N arbitrary real numbers, from which a symmetric and orthogonal matrix $\mathbf{A}_N$ is to be obtained. A general form of such a matrix (having the structure of (1) and (2)) is given by:

Each of the submatrices has size 2 × 2 and the structure of matrix (1).

To ensure the orthogonality of matrix $\mathbf{A}_N$, the following condition must hold for each group of four successive elements of the basis sequence:

$$a_i\,a_{i+3} = a_{i+1}\,a_{i+2} \qquad (5)$$

for $i = 0, 1, \ldots, N-4$.

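Thus, a basis sequence consisting of N elements must fulfill all of the equations defined by (5). In this case, the matrix $\mathbf{A}_N$ is symmetric and orthogonal, i.e.,

$$\mathbf{A}_N = \mathbf{A}_N^{T}, \qquad \mathbf{A}_N \mathbf{A}_N^{T} = \mathbf{A}_N^{2} = C\,\mathbf{I}, \qquad \mathbf{A}_N^{-1} = \frac{1}{C}\,\mathbf{A}_N \qquad (6)$$

where

- $\mathbf{A}_N^{T}$: transpose matrix; $\mathbf{I}$: unit (identity) matrix.

- $\mathbf{A}_N^{-1}$: inverse matrix.

- $C$: coefficient, $C = \sum_{i=0}^{N-1} a_i^2$ (the energy of each row).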

It can be seen that a basis sequence fulfilling (5) is determined by its first three elements. The successive elements are then calculated as follows:

$$a_{i+3} = \frac{a_{i+1}\,a_{i+2}}{a_i}, \qquad a_i \neq 0 \qquad (7)$$

For instance, to create the basis sequence for the orthogonal matrix $\mathbf{A}_8$, we select the first three elements $a_0, a_1, a_2$, e.g., 1, 1/2, 2. Then, the next elements are obtained using (7): $a_3 = a_1 a_2 / a_0 = 1$, $a_4 = a_2 a_3 / a_1 = 4$, $a_5 = a_3 a_4 / a_2 = 2$, etc. Finally, we obtain the basis sequence 1, 1/2, 2, 1, 4, 2, 8, 4, and the matrix is:

The above matrix is symmetric and orthogonal, and Equation (5) holds. It should be emphasized that changing the order of the basis sequence elements can cause at least one of Equations (5) not to be fulfilled. For instance, changing the elements 1, 1/2 to 1/2, 1 gives the basis sequence 1/2, 1, 2, 1, 4, 2, 8, 4. For this sequence $a_0 a_3 = 1/2 \neq a_1 a_2 = 2$, so the matrix is not orthogonal. Other examples of sequences satisfying Equation (5) are 3, 9, 2, 6, 4/3, 4, 8/9, 8/3 and −2, 1, 4, −2, −8, 4, 16, −8. For each subsequent group of four elements of the basis sequence:

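$$\left(a_{4m}, a_{4m+1}, a_{4m+2}, a_{4m+3}\right), \qquad m = 0, 1, \ldots, N/4 - 1 \qquad (8)$$

there is a relationship:

$$\left(a_{4m+4}, a_{4m+5}, a_{4m+6}, a_{4m+7}\right) = \lambda \left(a_{4m}, a_{4m+1}, a_{4m+2}, a_{4m+3}\right) \qquad (9)$$

The coefficient $\lambda$ may be chosen arbitrarily as any positive number or determined by Equation (5). Using this equation together with condition (3), we find:

$$\lambda = \frac{a_4}{a_0} = \left(\frac{a_2}{a_0}\right)^2 \qquad (10)$$

This means that each subsequent group of four elements of the basis sequence is obtained by multiplying the previous group by $\lambda$, under condition (3). For the basis sequence 1, 1/2, 2, 1, 4, 2, 8, 4, for example, $\lambda = 4$.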
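The generation rule (7) and the group relationship can be sketched as follows, assuming exact rational arithmetic and nonzero elements. The function names are illustrative and not taken from the paper. The sketch reproduces the three example sequences given above and reports the ratio between consecutive groups of four.

```python
from fractions import Fraction


def basis_sequence(a0, a1, a2, n):
    """Extend three chosen elements to n elements with a[i+3] = a[i+1]*a[i+2]/a[i] (Eq. (7)).

    Exact Fraction arithmetic is used; every generated element must be nonzero.
    """
    seq = [Fraction(a0), Fraction(a1), Fraction(a2)]
    while len(seq) < n:
        i = len(seq) - 3
        seq.append(seq[i + 1] * seq[i + 2] / seq[i])
    return seq[:n]


def satisfies_condition_5(seq):
    """Check a[i]*a[i+3] == a[i+1]*a[i+2] for every group of four successive elements."""
    return all(seq[i] * seq[i + 3] == seq[i + 1] * seq[i + 2] for i in range(len(seq) - 3))


def group_ratios(seq):
    """Set of ratios a[j+4]/a[j]; a single value means each group of four is a scaled copy."""
    return {seq[j + 4] / seq[j] for j in range(len(seq) - 4)}


for first_three in [(1, Fraction(1, 2), 2), (3, 9, 2), (-2, 1, 4)]:
    seq = basis_sequence(*first_three, 8)
    print([str(x) for x in seq], satisfies_condition_5(seq), [str(r) for r in group_ratios(seq)])
# ['1', '1/2', '2', '1', '4', '2', '8', '4'] True ['4']
# ['3', '9', '2', '6', '4/3', '4', '8/9', '8/3'] True ['4/9']
# ['-2', '1', '4', '-2', '-8', '4', '16', '-8'] True ['4']
```

The printed ratios, 4, 4/9 and 4, agree with $(a_2/a_0)^2$ for each choice of the first three elements.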

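In the general case, the basis sequence for the creation of a symmetric and orthogonal matrix has the form:

$$a_0, a_1, a_2, a_3, \lambda a_0, \lambda a_1, \lambda a_2, \lambda a_3, \lambda^2 a_0, \ldots, \lambda^{N/4-1} a_3 \qquad (11)$$

where

- $\lambda$: positive real number, with $a_0 a_3 = a_1 a_2$ as required by (3).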
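The sketch below checks orthogonality for larger N. It assumes that the general matrix (4) follows the same recursive pattern as (2), namely [[A, B], [B, -A]], with A and B built from the two halves of the basis sequence. This layout is an assumption consistent with (1) and (2), not a transcription of (4). The helpers `build_matrix`, `check` and `to_fractions` are hypothetical. Each call reports whether the matrix is symmetric and whether $\mathbf{A}_N\mathbf{A}_N = C\,\mathbf{I}$ with $C = \sum a_i^2$.

```python
from fractions import Fraction


def build_matrix(seq):
    """Symmetric matrix for a basis sequence of length 2**n (assumed recursive layout).

    The matrix is [[A, B], [B, -A]], where A and B are built in the same way from the
    first and second halves of seq; for 2 and 4 elements this gives the layouts (1) and (2).
    """
    if len(seq) == 1:
        return [[seq[0]]]
    half = len(seq) // 2
    a = build_matrix(seq[:half])
    b = build_matrix(seq[half:])
    top = [ra + rb for ra, rb in zip(a, b)]
    bottom = [rb + [-x for x in ra] for ra, rb in zip(a, b)]
    return top + bottom


def matmul(x, y):
    cols = list(zip(*y))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in x]


def is_scaled_identity(m, c):
    n = len(m)
    return all(m[i][j] == (c if i == j else 0) for i in range(n) for j in range(n))


def check(seq):
    """Return (is symmetric, A*A == C*I) for the matrix built from seq."""
    m = build_matrix(seq)
    c = sum(v * v for v in seq)  # row energy C
    return m == [list(col) for col in zip(*m)], is_scaled_identity(matmul(m, m), c)


def to_fractions(text):
    """Parse a space-separated basis sequence such as '1 1/2 2 1'."""
    return [Fraction(v) for v in text.split()]


print(check(to_fractions("1 1/2 2 1 4 2 8 4")))      # (True, True)
print(check(to_fractions("3 9 2 6 4/3 4 8/9 8/3")))  # (True, True)
print(check(to_fractions("1/2 1 2 1 4 2 8 4")))      # (True, False)
print(check(to_fractions("1 1/2 2 1 3 3/2 6 3")))    # (True, True): groups scaled by 3
```

Under this assumed layout, consider the last sequence, whose second group is 3 times the first. It does not satisfy (5) for every group of four successive elements, yet it still gives an orthogonal matrix. This is consistent with the remark that the group coefficient may be chosen as any positive number.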

3. Exponential Form of the Transform Matrix

If we select powers of a real number a as the elements of the matrix defined by Equation (4), the basis sequence for this case is:

$$1, a, a^2, a^3, \ldots, a^{N-1} \qquad (12)$$

The above sequence meets the condition expressed by Equation (5), because $a^i a^{i+3} = a^{i+1} a^{i+2} = a^{2i+3}$. This condition is also fulfilled for the more general case when the basis sequence is in the form:

where

- a—any real number, .

- k—integer, .

For this case, Equation (5) is:

We now define the general form of the matrix having an exponential form of order N, , for which the following recursive relationship holds:

where and , k—integer.

The matrix defined by Equation (15) is symmetric and orthogonal.
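The exponential form can be sketched in the same way. The recursion used below is $\mathbf{A}_1 = [1]$ and $\mathbf{A}_{2M} = [[\mathbf{A}_M, s\mathbf{A}_M], [s\mathbf{A}_M, -\mathbf{A}_M]]$ with $s = a^{kM}$. It is an assumption, chosen so that the first row is the basis sequence $1, a^k, a^{2k}, \ldots, a^{(N-1)k}$ and the 4 × 4 case has the layout of (2). It may differ in detail from Equation (15). The helper `exp_matrix` is hypothetical.

```python
from fractions import Fraction


def exp_matrix(a, k, n):
    """Exponential-form matrix of size n = 2**m under the assumed recursion.

    A_1 = [1] and A_2M = [[A_M, s*A_M], [s*A_M, -A_M]] with s = a**(k*M),
    so the first row is 1, a**k, a**(2k), ..., a**((n-1)k).
    """
    m = [[Fraction(1)]]
    size = 1
    while size < n:
        s = Fraction(a) ** (k * size)  # exact, also for negative k
        top = [row + [s * x for x in row] for row in m]
        bottom = [[s * x for x in row] + [-x for x in row] for row in m]
        m, size = top + bottom, size * 2
    return m


def matmul(x, y):
    cols = list(zip(*y))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in x]


a, k, n = 2, 1, 8
m = exp_matrix(a, k, n)
c = sum(Fraction(a) ** (2 * i * k) for i in range(n))  # row energy C
print([str(v) for v in m[0]])  # ['1', '2', '4', '8', '16', '32', '64', '128']
print(c)  # 21845
print(matmul(m, m) == [[c if i == j else 0 for j in range(n)] for i in range(n)])  # True

# a = 1 gives the 4 x 4 Hadamard matrix.
print(exp_matrix(1, 1, 4) == [[1, 1, 1, 1], [1, -1, 1, -1], [1, 1, -1, -1], [1, -1, -1, 1]])  # True
```

Each doubling step multiplies the row energy by $1 + a^{2kM}$, so under this assumption $C = \sum_{i=0}^{N-1} a^{2ik}$. For $a = 1$ the recursion reduces to Sylvester's construction of the Hadamard matrix.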

The coefficient C is equal to the energy of a row of the matrix (all rows have the same energy) and is defined as follows:

Using Equation (15) for and assuming , the matrix is:

For we have:

For we have:

The choice of the parameter a depends on the specific application. It should be noted that for the particular case $a = 1$, the matrix becomes the Hadamard matrix. For the basis sequences represented by Equations (11) and (13), we have the following relationship:

If two matrices and based on Equation (15) have the basis sequences and , respectively, for then:

where

- .

- , —the elements of basis sequences for matrices and , respectively.

4. Signal Approximation—Orthogonal Expansion

The set of rows of matrix defined by Equation (15) or Equation (4) represents the set of orthogonal discrete basis functions. We assume that these functions are defined within the interval . In relation to the rows of matrix we have:

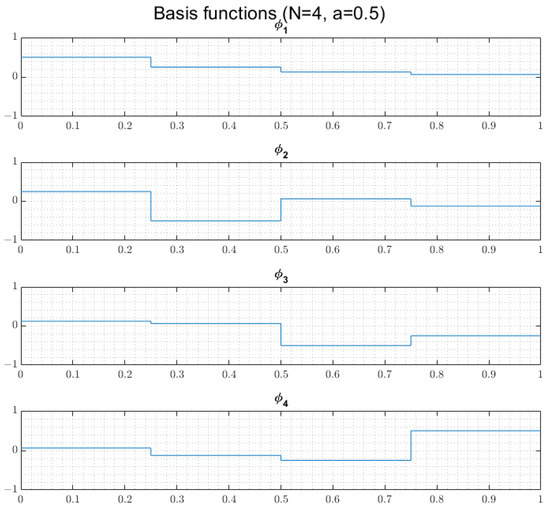

An example of orthogonal basis functions based on matrix for parameter is illustrated in Figure 1.

Figure 1.

Illustration of 4 orthogonal basis functions.

A continuous signal with finite energy can be approximated by a linear combination of N orthogonal basis functions represented by the rows of the transform matrix [17]. Such an orthogonal expansion is expressed as follows:

Multiplying both sides of Equation (21) by the j-th basis function, integrating both sides, and using the orthogonality of the basis functions, we obtain:

where C is defined by Equation (20).

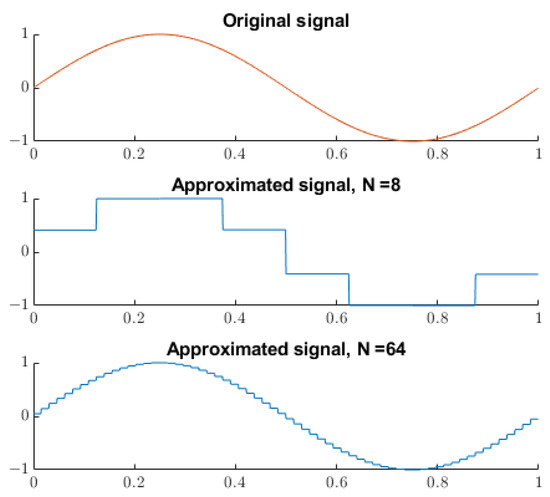

Figure 2.

Approximated signal for different N and .

As can be seen from the results in Figure 2 (and as expected), increasing the number of basis functions N decreases the mean square error. For instance, for the equals: , respectively.
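A discrete sketch of the orthogonal expansion follows. It assumes that row j of an N × N exponential-form matrix defines a step function $\varphi_j(t)$ that is constant on each of the N equal subintervals of $[0, 1)$. Then $\int_0^1 \varphi_j \varphi_l\,dt = (C/N)\,\delta_{jl}$, so each coefficient is $(N/C)\int_0^1 f(t)\varphi_j(t)\,dt$. The same recursion as Sylvester-type doubling with $s = a^{kM}$ is assumed for the matrix. Integrals are approximated with the midpoint rule. The test signal $f(t) = t$, the value $a = 0.5$ and all function names are arbitrary choices for illustration.

```python
def exp_matrix(a, k, n):
    """Float version of the assumed recursion A_2M = [[A_M, s*A_M], [s*A_M, -A_M]], s = a**(k*M)."""
    m = [[1.0]]
    size = 1
    while size < n:
        s = a ** (k * size)
        top = [row + [s * x for x in row] for row in m]
        bottom = [[s * x for x in row] + [-x for x in row] for row in m]
        m, size = top + bottom, size * 2
    return m


def expansion_coefficients(f, m, samples=100):
    """c_j = (N / C) * integral over [0, 1) of f(t) * phi_j(t), phi_j = step function of row j.

    The factor N / C follows from integral(phi_j * phi_l) = (C / N) * delta_jl.
    """
    n = len(m)
    energy = sum(v * v for v in m[0])  # C, identical for every row
    h = 1.0 / n
    step = h / samples
    # Midpoint-rule integral of f over each subinterval [i*h, (i+1)*h).
    cell = [sum(f(i * h + (s + 0.5) * step) for s in range(samples)) * step for i in range(n)]
    return [n / energy * sum(row[i] * cell[i] for i in range(n)) for row in m]


def approximate(coeffs, m, t):
    """Evaluate sum_j c_j * phi_j(t) for t in [0, 1)."""
    n = len(m)
    i = min(int(t * n), n - 1)
    return sum(cj * row[i] for cj, row in zip(coeffs, m))


def mean_square_error(f, coeffs, m, samples=10000):
    """Midpoint-rule estimate of the integral over [0, 1) of (f(t) - approximation)**2."""
    step = 1.0 / samples
    ts = [(s + 0.5) * step for s in range(samples)]
    return sum((f(t) - approximate(coeffs, m, t)) ** 2 for t in ts) * step


def signal(t):
    return t  # arbitrary test signal


for n in (2, 4, 8):
    m = exp_matrix(0.5, 1, n)
    coeffs = expansion_coefficients(signal, m)
    print(n, mean_square_error(signal, coeffs, m))  # close to 1 / (12 * n**2)
```

The N rows span all step functions on the N subintervals. The full N-term expansion therefore returns the mean of f on each subinterval. In this sketch, the error (about $1/(12N^2)$ for $f(t) = t$) falls as N grows and does not depend on a.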

5. Proposed Orthogonal Transform and Its Modifications

5.1. One-Dimensional (1D) Transform

Let us consider a discrete one-dimensional signal represented by an N-element vector ,.

A 1D orthogonal transform based on the matrix defined earlier has the form:

where

- —vector of spectral components.

- —vector of 1D signal.

- —transform matrix defined by Equation (15).

- C—constant defined by Equation (16).

As was shown, the transform matrix is symmetric and orthogonal, and its inverse is the matrix itself divided by C. Therefore, the forward transform (FT) differs from the inverse transform (IT) only by the constant C. Another, more detailed form of the FT can be written as follows:

for

We generally assume that . We also assume that the parameter a is any real number within the interval . For the particular case $a = 1$, the transform (24) becomes the 1D Walsh–Hadamard transform.
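The 1D forward and inverse transforms can be sketched with direct matrix-vector products. As a stand-in for Equation (15), the sketch assumes the exponential-form recursion $\mathbf{A}_1 = [1]$, $\mathbf{A}_{2M} = [[\mathbf{A}_M, s\mathbf{A}_M], [s\mathbf{A}_M, -\mathbf{A}_M]]$, $s = a^{kM}$. The function names are hypothetical. The inverse reuses the forward routine and only divides by C, which is the structural property stated above.

```python
from fractions import Fraction


def exp_matrix(a, k, n):
    """Assumed recursion A_1 = [1], A_2M = [[A_M, s*A_M], [s*A_M, -A_M]], s = a**(k*M)."""
    m = [[Fraction(1)]]
    size = 1
    while size < n:
        s = Fraction(a) ** (k * size)
        top = [row + [s * x for x in row] for row in m]
        bottom = [[s * x for x in row] + [-x for x in row] for row in m]
        m, size = top + bottom, size * 2
    return m


def transform(m, x):
    """Forward transform: spectrum = A x (direct matrix-vector product)."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def inverse_transform(m, spectrum):
    """Inverse transform: x = (1 / C) A spectrum, since A is symmetric and A A = C I."""
    c = sum(v * v for v in m[0])
    return [v / c for v in transform(m, spectrum)]


x = [3, -1, 4, 1, -5, 9, 2, 6]
m = exp_matrix(Fraction(3, 2), 1, 8)
spectrum = transform(m, x)
print(inverse_transform(m, spectrum) == x)  # True: exact reconstruction

# a = 1 gives the unnormalized, naturally ordered Walsh-Hadamard transform.
print([int(v) for v in transform(exp_matrix(1, 1, 4), [1, 2, 3, 4])])  # [10, -2, -4, 0]
```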

5.2. Two-Dimensional (2D) Transform

This case applies to two-dimensional signals represented by a square N × N matrix, which are mainly associated with image processing. In the general case, a 2D transform has the form:

where

- —matrix of spectral components.

- —matrix of 2D signal.

- —transform matrix described by Equation (15).

- C—constant described by Equation (16).
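For the 2D case, assume the forward transform is $\mathbf{S} = \mathbf{A}\mathbf{X}\mathbf{A}$. The symmetry and orthogonality of the matrix then give $\mathbf{A}(\mathbf{A}\mathbf{X}\mathbf{A})\mathbf{A} = C^2\mathbf{X}$. The inverse therefore has the same form as the forward transform, with the constant $1/C^2$. A sketch under the same assumed exponential recursion follows. Helper names are hypothetical and the 4 × 4 image values are arbitrary test data.

```python
from fractions import Fraction


def exp_matrix(a, k, n):
    """Assumed recursion A_1 = [1], A_2M = [[A_M, s*A_M], [s*A_M, -A_M]], s = a**(k*M)."""
    m = [[Fraction(1)]]
    size = 1
    while size < n:
        s = Fraction(a) ** (k * size)
        top = [row + [s * x for x in row] for row in m]
        bottom = [[s * x for x in row] + [-x for x in row] for row in m]
        m, size = top + bottom, size * 2
    return m


def matmul(x, y):
    cols = list(zip(*y))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in x]


def transform_2d(m, image):
    """Forward 2D transform S = A X A (A is symmetric, so A X A^T = A X A)."""
    return matmul(matmul(m, image), m)


def inverse_transform_2d(m, spectrum):
    """Inverse 2D transform X = (1 / C**2) A S A, because A A = C I."""
    c = sum(v * v for v in m[0])
    return [[v / (c * c) for v in row] for row in transform_2d(m, spectrum)]


image = [[52, 55, 61, 66], [70, 61, 64, 73], [63, 59, 55, 90], [67, 61, 68, 104]]
m = exp_matrix(Fraction(1, 2), 1, 4)
print(inverse_transform_2d(m, transform_2d(m, image)) == image)  # True

# a = 1 (2D Walsh-Hadamard case): a constant image keeps only the (0, 0) coefficient.
flat = [[1] * 4 for _ in range(4)]
print(transform_2d(exp_matrix(1, 1, 4), flat)[0][0])  # 16
```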

It can be seen that the forward and inverse transforms have the same structure; they differ only in the constant C. The 2D transform can be calculated directly using Equation (24) or by grouping the channels, as shown in Figure 3e,f. The transform matrix consists of powers of the parameter a, and for $a = 1$ we obtain the 2D Walsh–Hadamard transform. When transform (25) is used for image processing, N is large. Large powers of the parameter a may then overflow or underflow the floating-point range of a computer. It therefore seems reasonable to propose a sparse matrix of the following form:

where —matrix with dimension

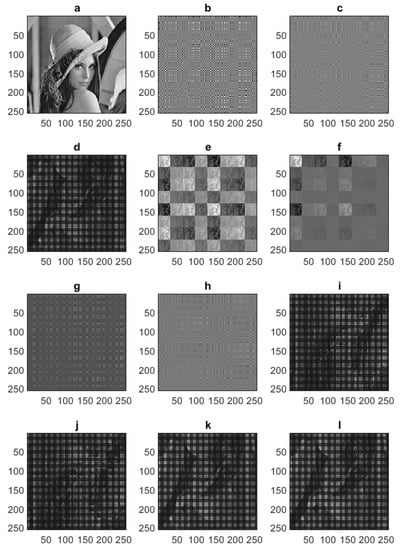

Figure 3.

Input image (a) and its spectra (b–l) obtained for various transform matrices.

It can be seen that, like the full transform matrix, the sparse matrix is symmetric and orthogonal, so we have:

The matrix contains many zeros; their number is given by:

The percentage of zeros among all elements of the matrix is as follows:

For instance, if , more than 99% of the transform matrix elements are zeros.
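As an illustration only, the sketch below assumes the simplest sparse layout: copies of one M × M symmetric orthogonal submatrix along the diagonal, with zeros elsewhere. This layout is an assumption and may differ from (26a). Under it, the zero fraction is $1 - M/N$. The matrix also keeps the property $\mathbf{A}\mathbf{A} = C\,\mathbf{I}$, where C is the energy of one submatrix row. The helper names are hypothetical.

```python
def block_diagonal(block, copies):
    """Assumed sparse layout: `copies` copies of an M x M block on the diagonal, zeros elsewhere."""
    size = len(block)
    n = size * copies
    out = [[0] * n for _ in range(n)]
    for b in range(copies):
        for i in range(size):
            for j in range(size):
                out[b * size + i][b * size + j] = block[i][j]
    return out


def matmul(x, y):
    cols = list(zip(*y))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in x]


def zero_percentage(n, size):
    """Percentage of zeros in an n x n block-diagonal matrix with dense size x size blocks."""
    return 100 * (n * n - n * size) / (n * n)


# 4 x 4 exponential-form submatrix for a = 2 (basis sequence 1, 2, 4, 8), row energy C = 85.
block = [[1, 2, 4, 8], [2, -1, 8, -4], [4, 8, -1, -2], [8, -4, -2, 1]]
sparse = block_diagonal(block, 4)  # 16 x 16
zeros = sum(row.count(0) for row in sparse)
print(zeros, zero_percentage(16, 4))  # 192 75.0
print(matmul(sparse, sparse) == [[85 if i == j else 0 for j in range(16)] for i in range(16)])  # True
print(zero_percentage(512, 4))  # 99.21875
```

For example, with N = 512 and M = 4 this assumed layout gives about 99.2% zeros.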

Matrix (26a) can therefore be used as a transform matrix for the following transform, which is a modified version of transforms (23) and (25) using submatrices .

is defined by Equation (28).

Because transforms (31) and (32) involve matrices with many zeros, they can be treated as a specific case of compression in the spatial domain. Calculating the modified orthogonal transforms (31) and (32) with sparse matrices is not only simple but can also be very fast. Fast computation is the subject of the next section.

Instead of matrix (15), the symmetric and orthogonal matrix (4) can also be used as the transform matrix in all of the proposed orthogonal transforms (23), (25), (31) and (32). In this case, different versions of the orthogonal transforms can be considered, in particular transforms (31) and (32) in which the submatrices are either identical or different. It was shown that each submatrix is defined by the first three values of its basis sequence. When the submatrices differ, the inverse transform requires a matrix of coefficients instead of the single coefficient C.
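When the diagonal submatrices differ, their row energies $C_p$ differ as well. Assume again a block-diagonal layout and the 2D form $\mathbf{S} = \mathbf{A}\mathbf{X}\mathbf{A}$. The product $\mathbf{A}\mathbf{A}$ then becomes the diagonal matrix $\mathrm{diag}(d_0, \ldots, d_{N-1})$ of row energies. The inverse therefore divides element $(i, j)$ of $\mathbf{A}\mathbf{S}\mathbf{A}$ by $d_i d_j$, which is one way to read the matrix of coefficients mentioned above. The three blocks and the test image are arbitrary examples, and the helpers are hypothetical.

```python
from fractions import Fraction


def block_diagonal(blocks):
    """Place square blocks (possibly different) along the diagonal, zeros elsewhere."""
    n = sum(len(b) for b in blocks)
    out = [[0] * n for _ in range(n)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, v in enumerate(row):
                out[offset + i][offset + j] = v
        offset += len(b)
    return out


def matmul(x, y):
    cols = list(zip(*y))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in x]


def forward_2d(m, image):
    """Assumed 2D form S = A X A."""
    return matmul(matmul(m, image), m)


def inverse_2d(m, spectrum):
    """X[i][j] = (A S A)[i][j] / (d_i * d_j), where d_i is the energy of row i.

    A A = diag(d), so the matrix of coefficients d_i * d_j replaces C**2.
    """
    d = [sum(v * v for v in row) for row in m]
    y = forward_2d(m, spectrum)
    return [[Fraction(y[i][j]) / (d[i] * d[j]) for j in range(len(d))] for i in range(len(d))]


half = Fraction(1, 2)
blocks = [
    [[1, 1], [1, -1]],  # a = 1 (Hadamard), row energy 2
    [[1, 3], [3, -1]],  # 2 x 2 exponential form with a = 3, row energy 10
    [[1, half, 2, 1], [half, -1, 1, -2], [2, 1, -1, -half], [1, -2, -half, 1]],  # basis 1, 1/2, 2, 1
]
m = block_diagonal(blocks)
print([str(sum(v * v for v in row)) for row in m])
# ['2', '2', '10', '10', '25/4', '25/4', '25/4', '25/4']
image = [[(3 * i + 5 * j) % 11 for j in range(8)] for i in range(8)]  # arbitrary test data
print(inverse_2d(m, forward_2d(m, image)) == image)  # True
```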

Figure 3 shows: a—the input image for and its spectra obtained for various transform matrices; b—full exponential matrix (); c—full exponential matrix (); d—full non-exponential matrix ( basis sequence: ); e—full exponential matrix after channel grouping ( channels); f—full exponential matrix after channel grouping ( channels), g—sparse exponential matrix (); h—sparse exponential matrix (); i—sparse non-exponential matrix with the same submatrices ( basis sequence: ); j—sparse non-exponential matrix with the same submatrices ( basis sequence: ); k—full non-exponential matrix with arbitrary parameter ( basis sequence: ); and l—full non-exponential matrix with arbitrary parameter ( basis sequence: ).

In all cases, applying the inverse transform recovers the original input image.

6. Fast Algorithms

Fast algorithms are used to reduce the number of computations required to determine the transform coefficients in comparison to direct computation of the transform. The main idea of efficient or fast computational algorithms is the ability to subdivide the total computational load into a series of computational steps in such a way that partial results obtained from initial steps can be repeatedly utilized in the subsequent steps.

Transforms based on this matrix can be computed quickly with the well-known techniques of sparse matrix factoring or matrix partitioning. These techniques result in fast algorithms that reduce the computational requirements from the order of $N^2$ additions for direct computation to $N\log_2 N$ additions [2].

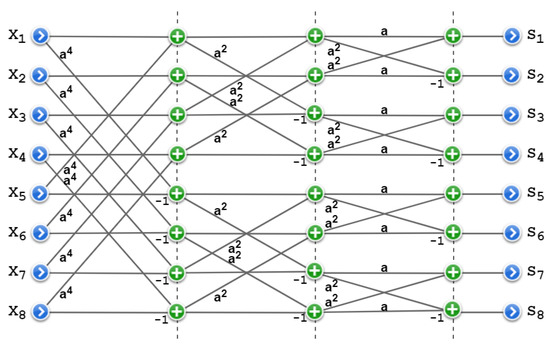

In Figure 4, the flow graph of a fast algorithm for the computation of a full exponential orthogonal matrix for is presented.

Figure 4.

Construction of fast algorithm for the computation of full exponential orthogonal matrix for .

The flow graph has a butterfly structure and is similar to the graph of the fast Walsh–Hadamard transform (WHT) algorithm [2].
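The butterfly idea can be sketched recursively for the exponential-form matrix. The sketch assumes the recursion $\mathbf{A}_{2M} = [[\mathbf{A}_M, s\mathbf{A}_M], [s\mathbf{A}_M, -\mathbf{A}_M]]$ with $s = a^{kM}$; it is a hedged reconstruction, not the exact flow graph of Figure 4. Each stage reuses the two half-size results $u = \mathbf{A}_M x_1$ and $v = \mathbf{A}_M x_2$. It therefore needs N additions or subtractions per stage and $N\log_2 N$ in total, compared with $N(N-1)$ for the direct product. Function names are hypothetical.

```python
from fractions import Fraction


def fast_transform(x, a, k=1):
    """Butterfly evaluation of y = A_N x under the assumed exponential-form recursion.

    Uses A_2M [x1; x2] = [A_M x1 + s A_M x2; s A_M x1 - A_M x2] with s = a**(k*M).
    Returns (y, additions), where additions counts additions and subtractions.
    """
    n = len(x)
    if n == 1:
        return list(x), 0
    half = n // 2
    s = Fraction(a) ** (k * half)
    u, add_u = fast_transform(x[:half], a, k)
    v, add_v = fast_transform(x[half:], a, k)
    top = [ui + s * vi for ui, vi in zip(u, v)]
    bottom = [s * ui - vi for ui, vi in zip(u, v)]
    return top + bottom, add_u + add_v + n


def exp_matrix(a, k, n):
    """Same assumed recursion written as an explicit matrix, for comparison."""
    m = [[Fraction(1)]]
    size = 1
    while size < n:
        s = Fraction(a) ** (k * size)
        top = [row + [s * x for x in row] for row in m]
        bottom = [[s * x for x in row] + [-x for x in row] for row in m]
        m, size = top + bottom, size * 2
    return m


def direct_transform(m, x):
    """Direct matrix-vector product; needs N*(N - 1) additions."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


x = list(range(1, 9))
y_fast, additions = fast_transform(x, Fraction(1, 2))
print(y_fast == direct_transform(exp_matrix(Fraction(1, 2), 1, 8), x))  # True
print(additions, 8 * 7)  # 24 56, i.e. N*log2(N) versus N*(N - 1)
```

Each stage also needs N multiplications by a power of a. These become trivial when a = 1, in which case the algorithm reduces to the fast Walsh–Hadamard transform.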

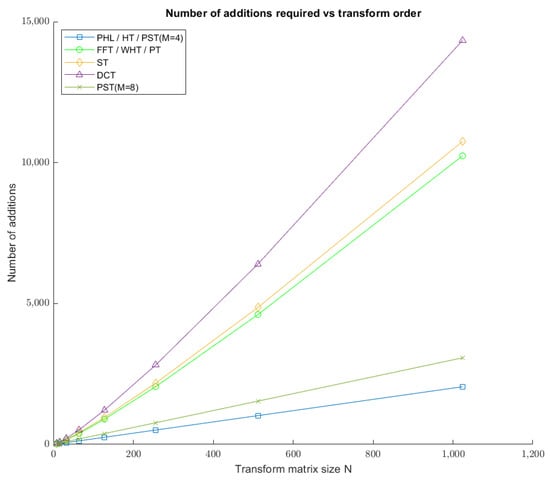

The plot in Figure 5 shows the number of additions required by the proposed transform as a function of its size. It also compares the proposed transform (PT) and the proposed sparse transforms (PST) for and with other well-known transforms: the periodic Haar piecewise-linear (PHL) [18], fast Fourier (FFT), Walsh–Hadamard (WHT), Haar (HT), Slant (ST) and discrete cosine (DCT) transforms.

Figure 5.

Number of additions in relation to transform matrix size.

7. Conclusions

The method of creating symmetric, orthogonal matrices and orthogonal transforms presented in this paper is a generalization of Hadamard matrices and the Walsh–Hadamard transform. The experiments performed show that the proposed transforms can be effectively used for signal and image processing. Their advantages are simplicity of implementation and a relatively small number of operations required for calculation. Moreover, an important feature of the considered transforms is that the spectral components of 1D and 2D signals can be shaped by selecting the transform matrix parameters, in particular the parameter a. This feature, combined with the variety of structures of the considered orthogonal transforms, offers promising possibilities for further applications.

Funding

This research was funded by the European Union’s Horizon 2020 Research and Innovation Programme, under Grant Agreement No. 830943, the ECHO project.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The author would like to thank Piotr Bogacki from AGH University of Science and Technology, Kraków, for fruitful discussion and carrying out experiments and calculations, and Mariusz Ziółko from AGH University of Science and Technology for valuable discussions and comments.

Conflicts of Interest

The author declares no conflict of interest.

References

- Ahmed, N.; Rao, K.R. Orthogonal Transforms for Digital Signal Processing; Springer: Berlin/Heidelberg, Germany, 1975.

- Wang, R. Introduction to Orthogonal Transforms: With Applications in Data Processing and Analysis; Cambridge University Press: Cambridge, UK, 2012.

- Johnson, J.; Puschel, M. In search of the optimal Walsh–Hadamard transform. In Proceedings of the 2000 IEEE International Conference on Acoustics, Speech, and Signal Processing, Istanbul, Turkey, 5–9 June 2000; Volume 6, pp. 3347–3350.

- Gonzalez, R.; Woods, R. Digital Image Processing, 4th ed.; Pearson: London, UK, 2018.

- Madisetti, V. The Digital Signal Processing Handbook; CRC Press: Boca Raton, FL, USA, 2010.

- Ashrafi, A. Chapter One—Walsh–Hadamard Transforms: A Review; Advances in Imaging and Electron Physics; Elsevier: Amsterdam, The Netherlands, 2017.

- Sayood, K. Chapter 13—Transform Coding. Introduction to Data Compression, 5th ed.; The Morgan Kaufmann Series in Multimedia Information and Systems; Morgan Kaufmann: Burlington, MA, USA, 2018.

- Hamood, M.; Boussakta, S. Fast Walsh–Hadamard–Fourier Transform Algorithm. IEEE Trans. Signal Process. 2011, 59, 5627–5631.

- Thompson, A. The Cascading Haar Wavelet Algorithm for Computing the Walsh–Hadamard Transform. IEEE Signal Process. Lett. 2017, 24, 1020–1023.

- Korus, P.; Dziech, A. Efficient Method for Content Reconstruction With Self-Embedding. IEEE Trans. Image Process. 2013, 22, 1134–1147.

- Korus, P.; Dziech, A. Adaptive Self-Embedding Scheme With Controlled Reconstruction Performance. IEEE Trans. Inf. Forensics Secur. 2014, 9, 169–181.

- Kalarikkal Pullayikodi, S.; Tarhuni, N.; Ahmed, A.; Shiginah, F.B. Computationally Efficient Robust Color Image Watermarking Using Fast Walsh Hadamard Transform. J. Imaging 2017, 3, 46.

- Pan, H.; Dabawi, D.; Cetin, A. Fast Walsh–Hadamard Transform and Smooth-Thresholding Based Binary Layers in Deep Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Virtual Conference, 19–25 June 2021.

- Subathra, M.S.P.; Mohammed, M.A.; Maashi, M.S.; Garcia-Zapirain, B.; Sairamya, N.J.; George, S.T. Detection of Focal and Non-Focal Electroencephalogram Signals Using Fast Walsh–Hadamard Transform and Artificial Neural Network. Sensors 2020, 20, 4952.

- Wang, X.; Liang, X.; Zheng, J.; Zhou, H. Fast detection and segmentation of partial image blur based on discrete Walsh–Hadamard transform. Signal Process. Image Commun. 2019, 70, 47–56.

- Andrushia, A.D.; Thangarjan, R. Saliency-Based Image Compression Using Walsh–Hadamard Transform (WHT). In Biologically Rationalized Computing Techniques for Image Processing Applications; Springer: Cham, Switzerland, 2018.

- Aristidi, E. Representation of Signals as Series of Orthogonal Functions. EAS Publ. Ser. 2016, 78–79, 99–126.

- Dziech, A.; Ślusarczyk, P.; Tibken, B. Methods of Image Compression by PHL Transform. J. Intell. Robot. Syst. 2004, 39, 447–458.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).