4.1. Optimization

This sub-section provides the results on the optimization of the hyperparameters described in the previous sections.

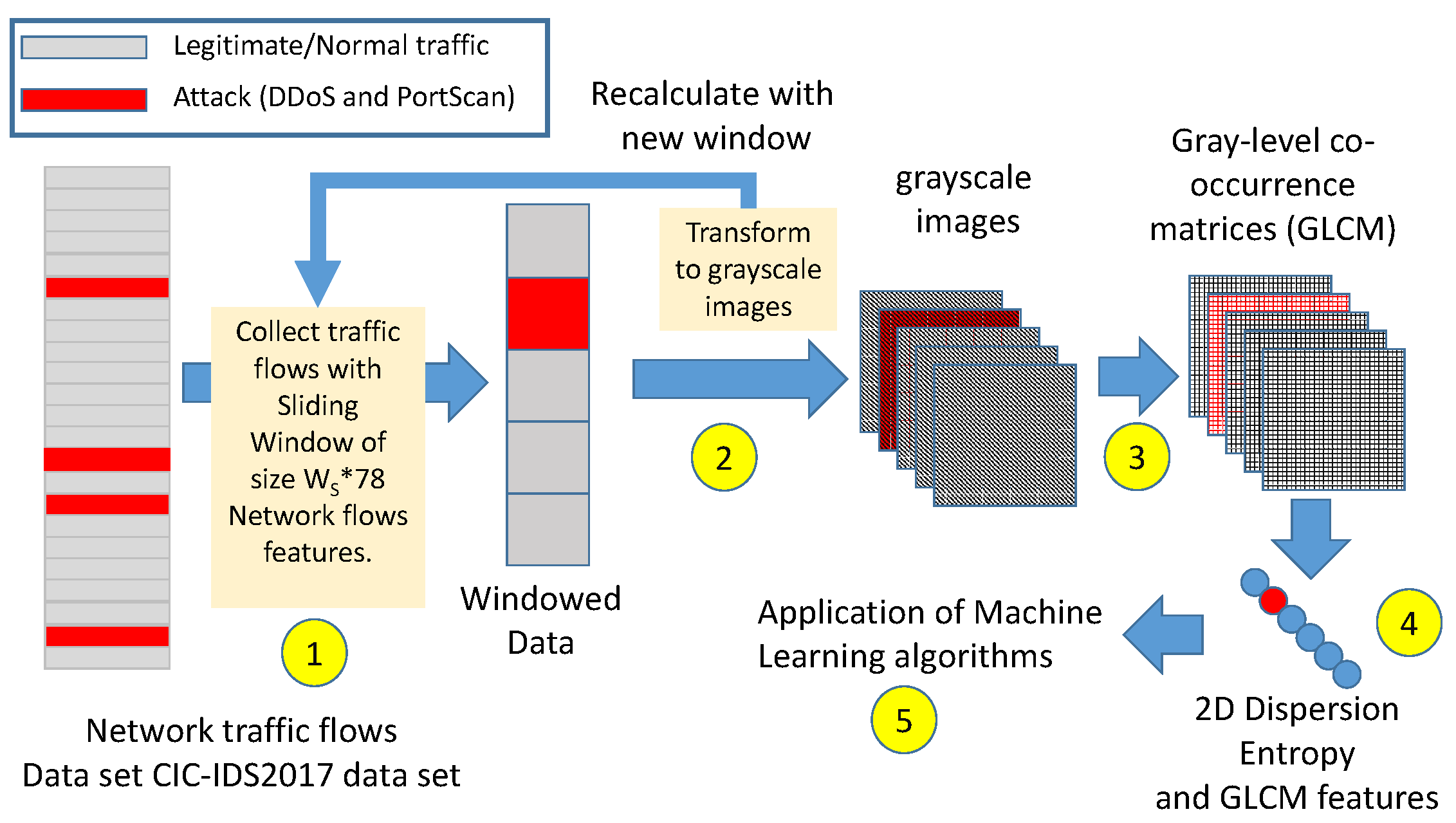

A grid approach was used to determine the optimum values of the hyperparameters. While other methods (e.g., gradient-based or meta-heuristic algorithms) could be more efficient, the ranges of values for each hyperparameter are quite limited. In addition, the intention is to show explicitly the impact of each hyperparameter on the detection performance. The detection metric is used to determine the optimal values of the hyperparameters.
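
The grid approach described above can be sketched as an exhaustive loop over the Cartesian product of the candidate values. This is only an illustration: the hyperparameter names, the candidate values and the `toy_error_rate` function are hypothetical placeholders chosen for the example, not the ranges of Table 3 or the evaluation pipeline of this study.

```python
from itertools import product


def grid_search(grid, evaluate):
    """Return (best_params, best_score), minimising `evaluate` over the grid."""
    names = list(grid)
    best_params, best_score = None, float("inf")
    # Visit every combination of candidate values exactly once.
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical candidate values (assumption, not taken from Table 3).
example_grid = {
    "window_size": [100, 200, 300],
    "glcm_distance": [1, 2, 3],
    "quantization": [16, 32, 64],
}


def toy_error_rate(params):
    # Placeholder for "extract features, train the classifier, return the metric".
    # The minimum of this toy function is arbitrary.
    return (abs(params["window_size"] - 100) / 1000
            + abs(params["glcm_distance"] - 2) / 100
            + abs(params["quantization"] - 32) / 10000)


best_params, best_score = grid_search(example_grid, toy_error_rate)
```

With three hyperparameters of three candidate values each, the loop evaluates 27 combinations, which is why an exhaustive grid remains practical when the ranges are limited.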

The hyperparameters used in this study, their optimal values and their ranges are summarized in Table 3. In the rest of this sub-section and in the related figures, we show how a specific hyperparameter impacts the detection accuracy for both the DDoS attack and the Port Scan attack. For each presented result, the other hyperparameters are set to the values identified in Table 3. The Decision Tree (DT) ML algorithm was used to generate the results provided in this sub-section. As shown in Section 4.3, the DT algorithm has a higher detection performance than the SVM and Naive Bayes algorithms.

The following figures describe the results of the evaluation of the proposed approach for different values of the hyperparameters and for the different features used in the study. In most cases, a single hyperparameter is evaluated while the other hyperparameters are set to the values described in Table 3, unless otherwise noted.

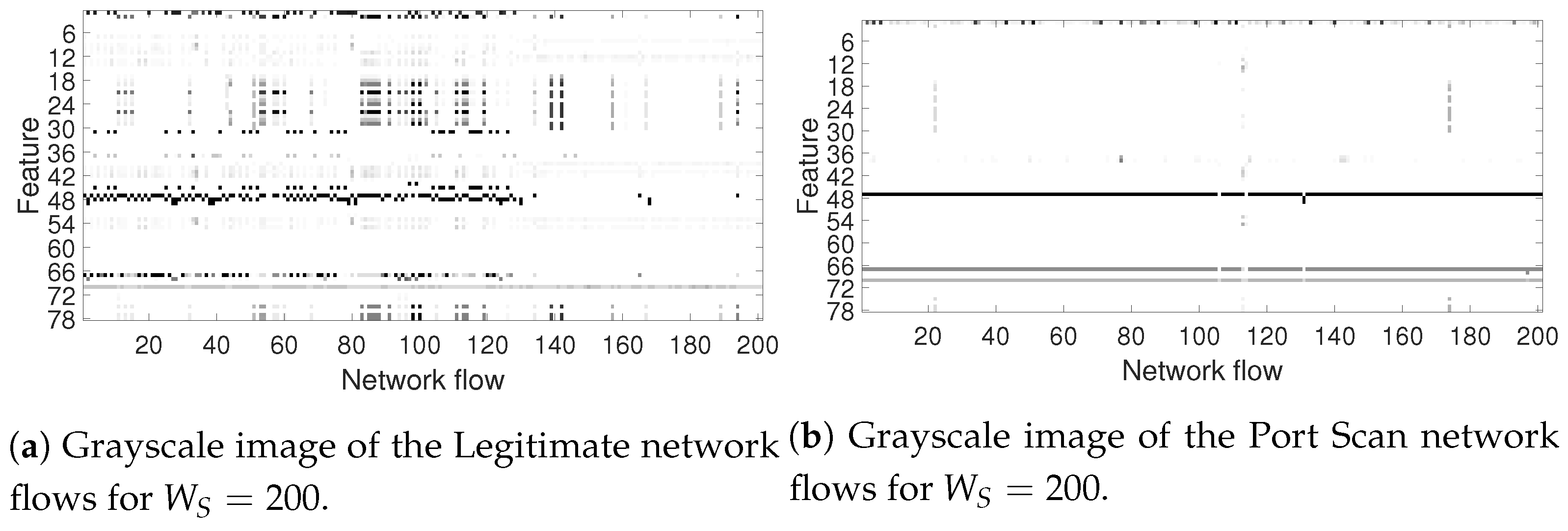

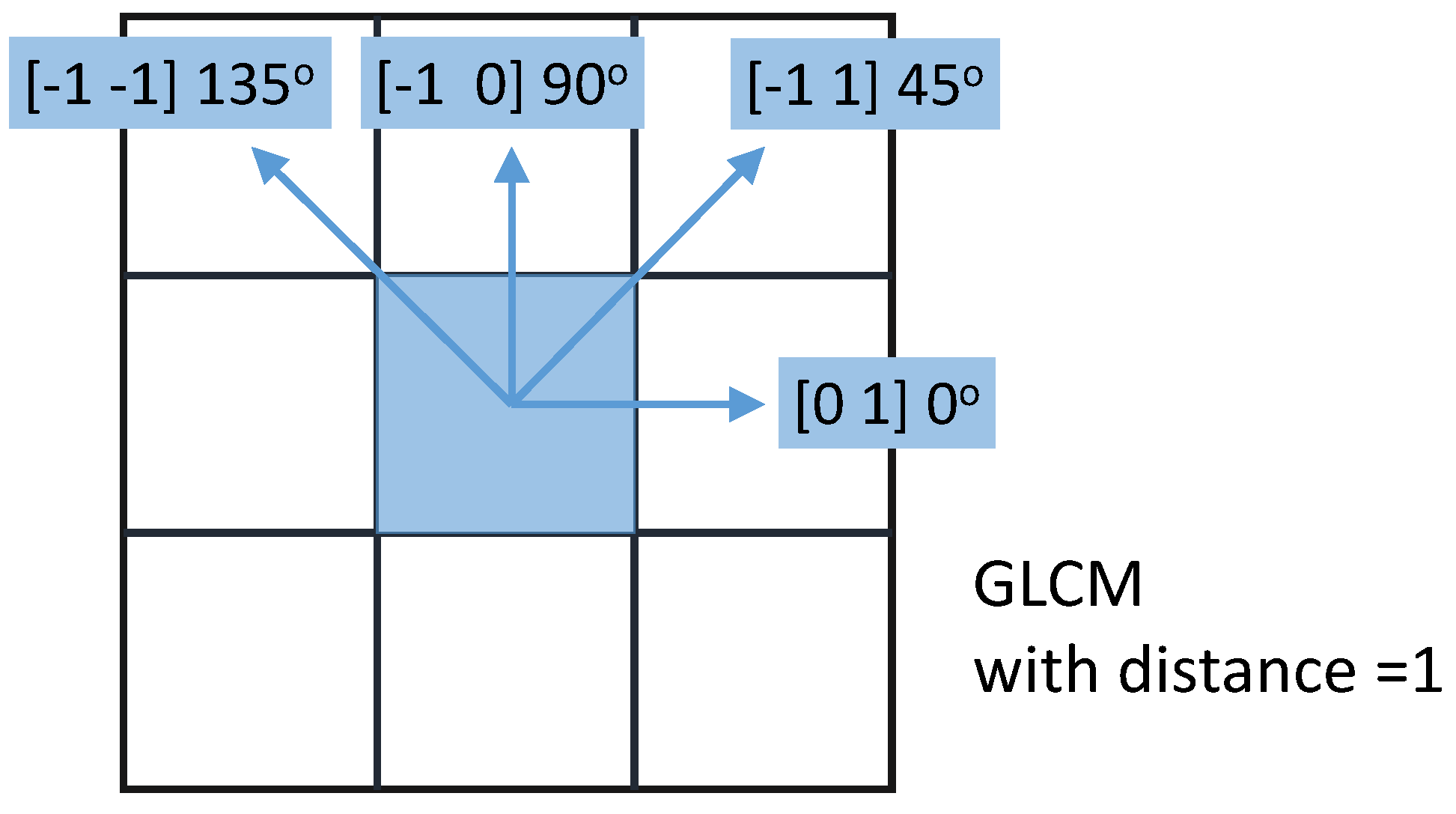

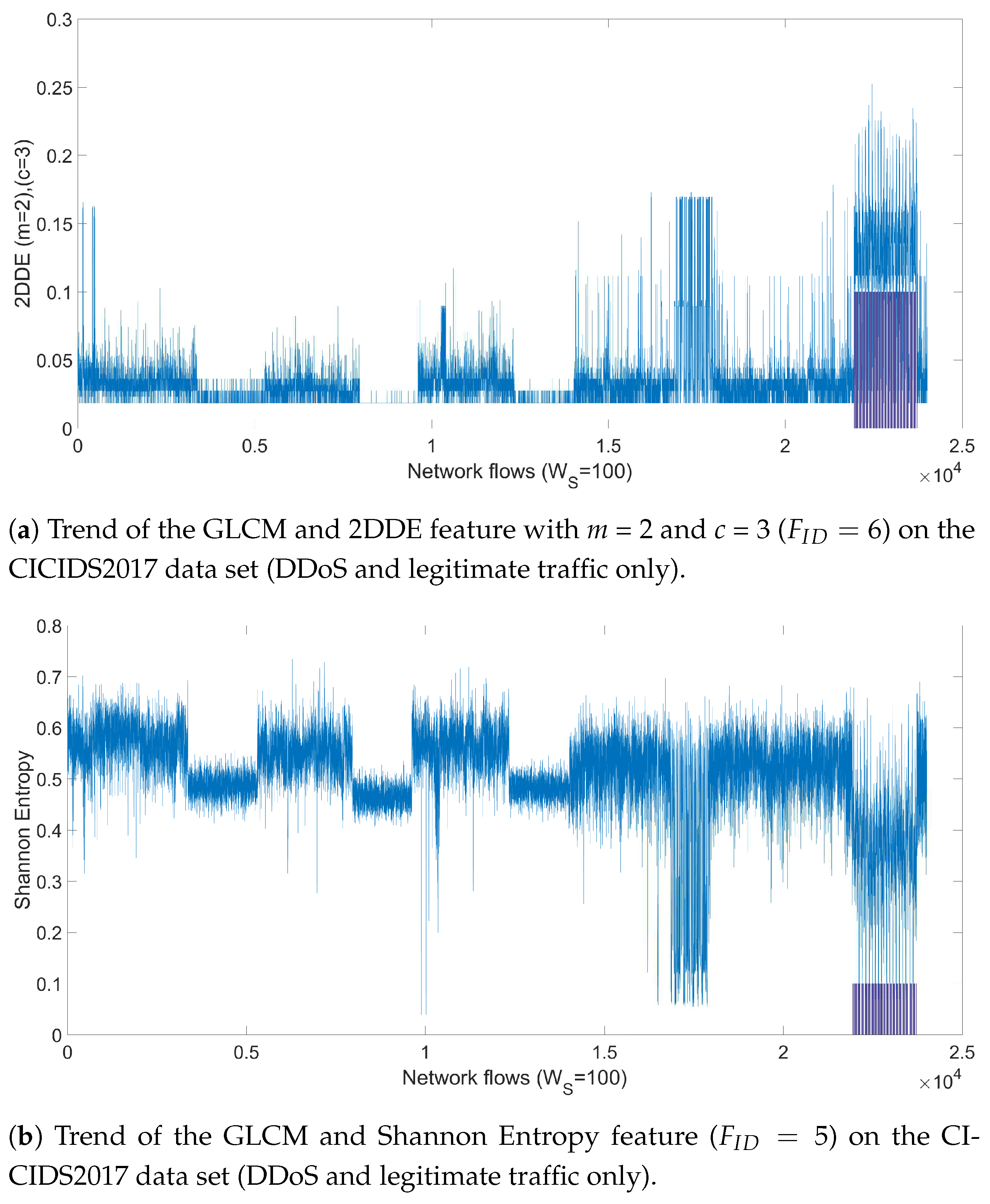

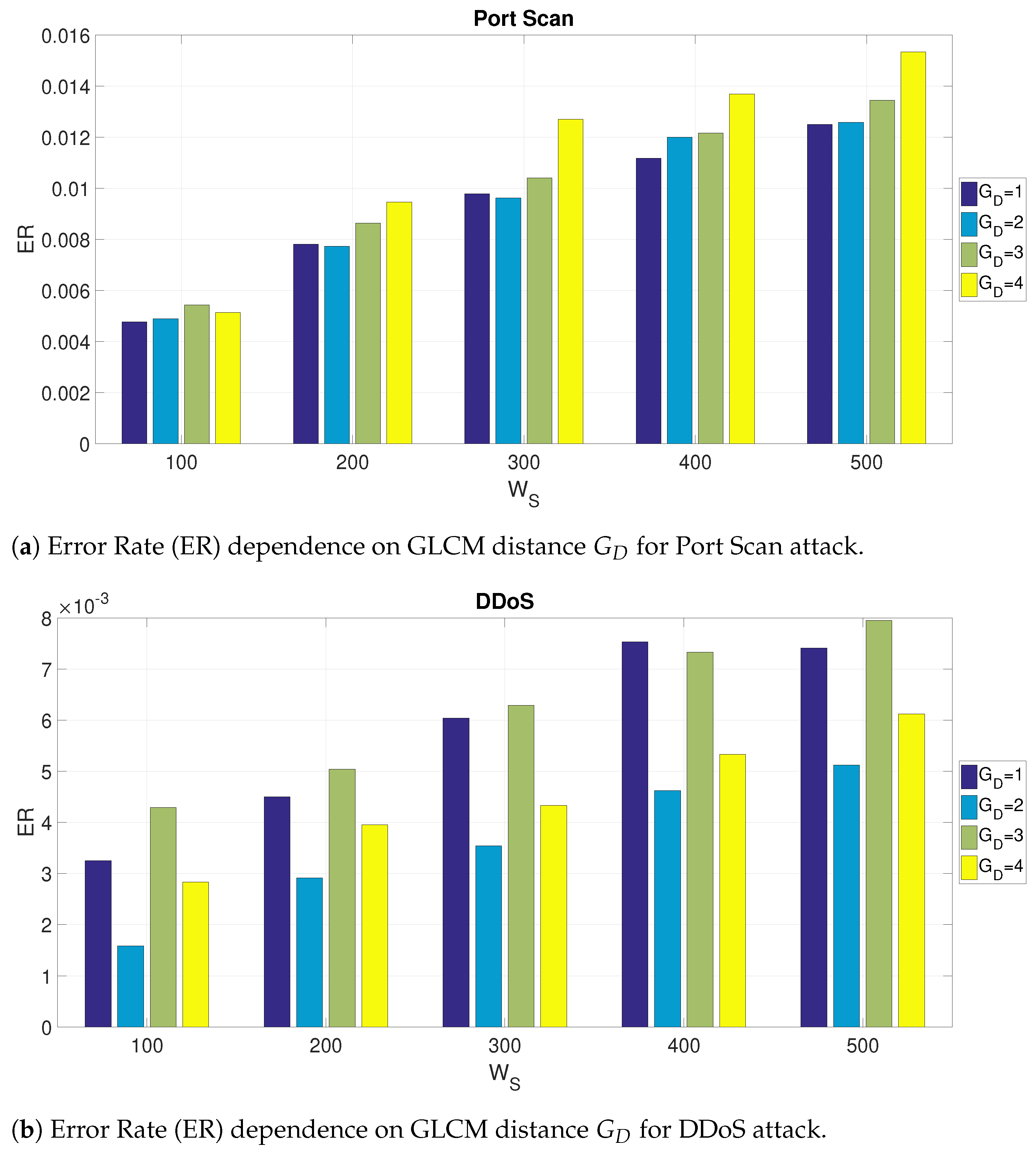

Figure 6a,b show, respectively for the Port Scan and the DDoS attacks, the impact of the GLCM distance for different values of the window size. These results are obtained using all the 64 features identified in Table 2. It can be noted that the optimal value of the window size is 100 network flows, as the ER increases with larger values of the window size. This may be due to the fact that the differences between legitimate traffic and the traffic related to the attack are more evident when the window size is relatively small. On the other side, the window size cannot be reduced below a lower limit, because the GLCM must operate on a grayscale picture large enough to obtain meaningful values.

Figure 6a identifies the value of the GLCM distance that is optimal to detect the Port Scan attack, while Figure 6b identifies the optimal value for the DDoS attack. These results seem to indicate that there is no need to use values of the GLCM distance larger than 2, which would also be more computationally intensive.

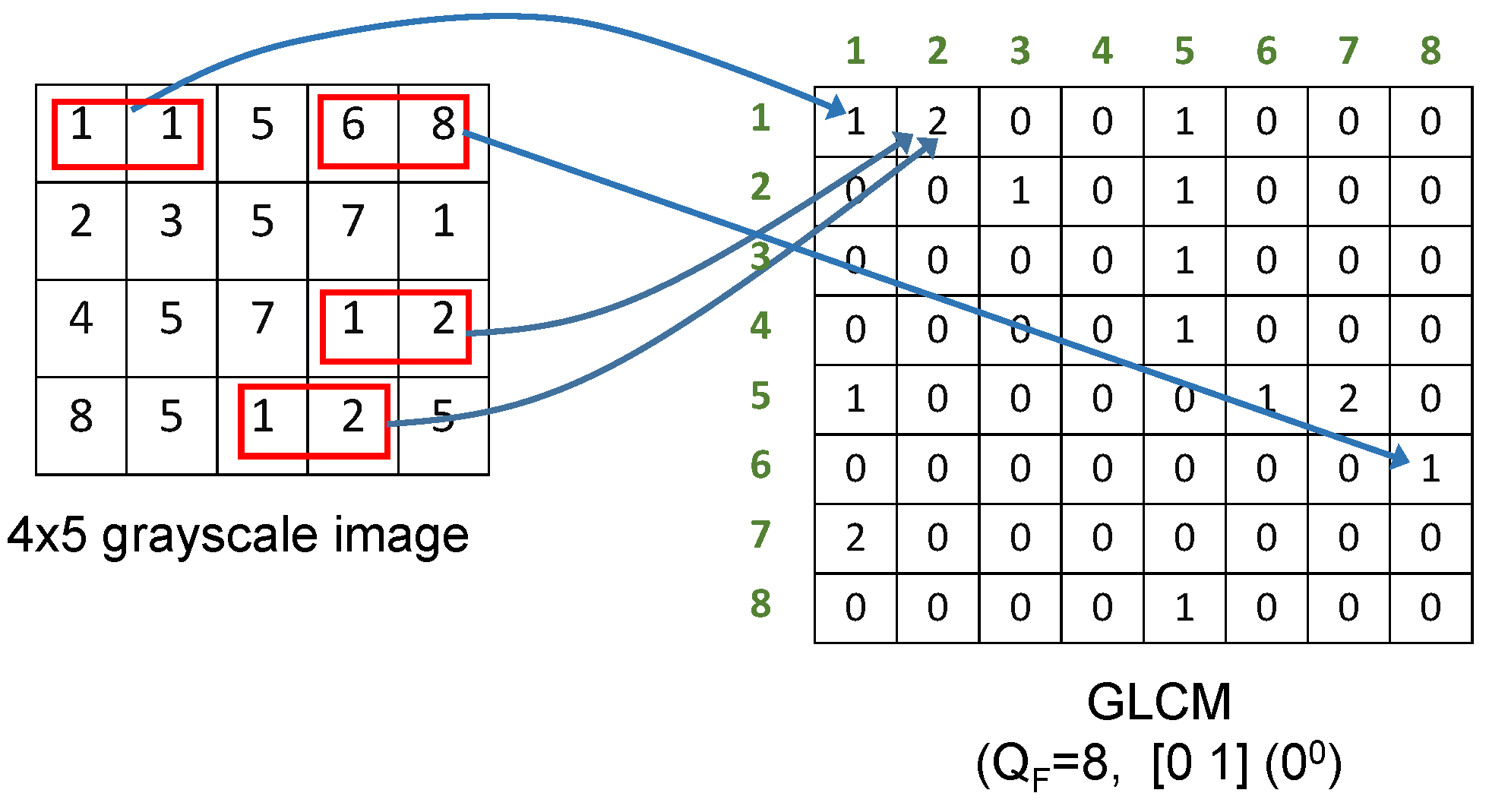

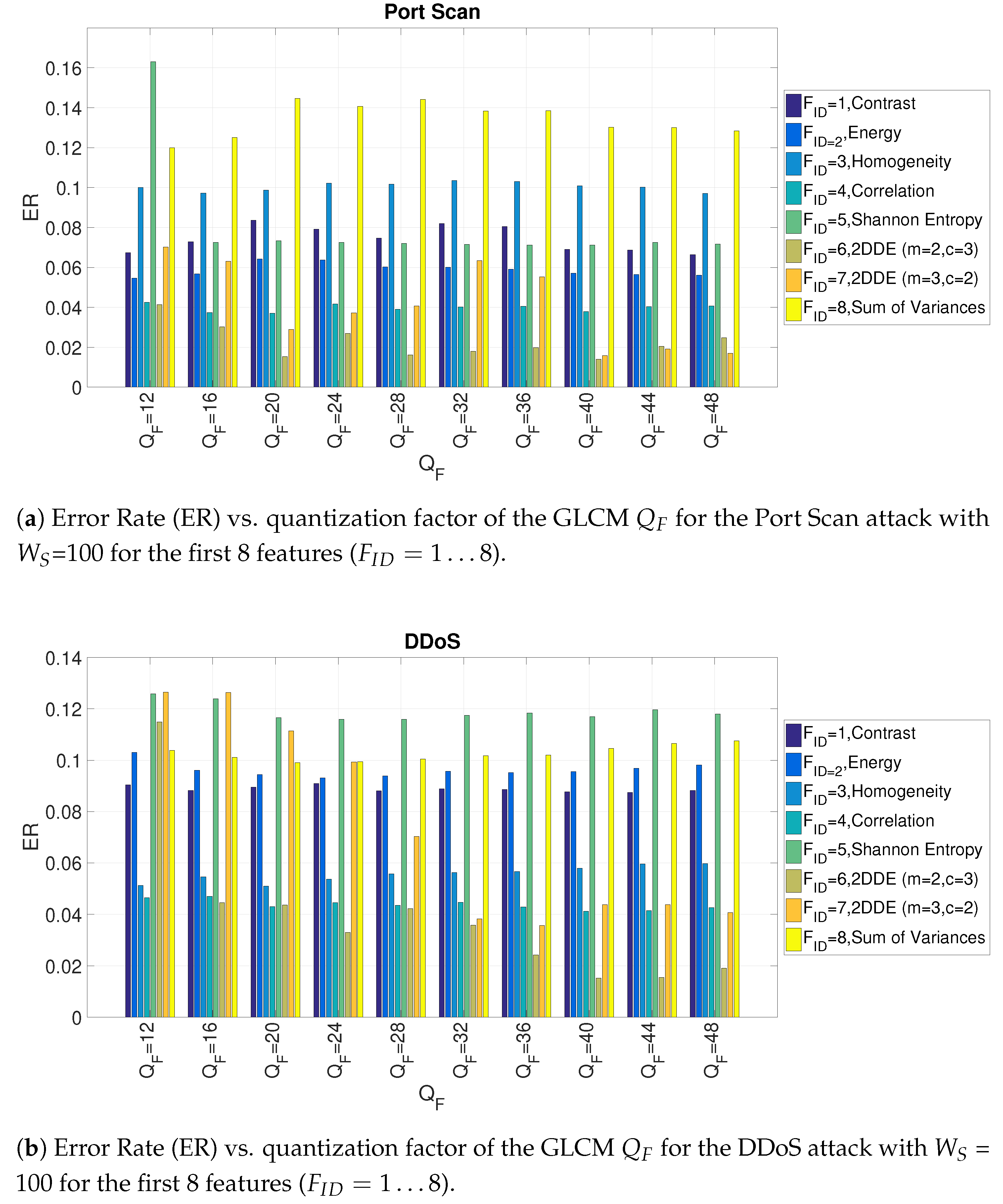

Then, the impact of the quantization factor was evaluated. As described before, the quantization factor is an important element in the application of GLCM. A large value of the quantization factor provides a higher granularity, which can be beneficial in the application of the ML algorithm for the detection of the threat. On the other side, a large value of the quantization factor is computationally more expensive for the calculation of the GLCM features and 2DDE, as the resulting GLCM matrices are larger (the number of GLCM entries grows with the square of the number of quantization levels). This is an important trade-off, which was investigated for each specific feature and for each attack.
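
To make the trade-off concrete, the following minimal sketch quantizes a small grayscale matrix to a given number of levels and counts the co-occurrences at a horizontal offset. It assumes uniform quantization of 8-bit values, a single horizontal offset, and a non-symmetric, non-normalized GLCM; the function names and the toy image are hypothetical and are not the implementation used in this study.

```python
def quantize(image, levels, max_value=255):
    """Map each pixel in [0, max_value] to an integer level in [0, levels - 1]."""
    return [[min(p * levels // (max_value + 1), levels - 1) for p in row]
            for row in image]


def glcm(image, levels, distance=1):
    """Count pairs (level at column c, level at column c + distance) in each row."""
    matrix = [[0] * levels for _ in range(levels)]
    for row in image:
        for c in range(len(row) - distance):
            matrix[row[c]][row[c + distance]] += 1
    return matrix


# Toy grayscale picture (assumption: 8-bit pixel values).
image = [
    [0, 64, 128, 255],
    [0, 64, 128, 255],
    [255, 128, 64, 0],
]

for levels in (2, 4, 8):
    g = glcm(quantize(image, levels), levels)
    # The matrix always has levels x levels cells, whatever the picture size.
    print(levels, "levels ->", len(g) * len(g[0]), "GLCM cells")
```

The number of counted pairs depends only on the picture, while the number of GLCM cells over which they are spread grows quadratically with the number of levels.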

Figure 7a,b show the impact of the quantization factor on the detection accuracy, respectively for the Port Scan and the DDoS attack, for the first 8 features (only the first 8 features are provided in these figures for reasons of space, but subsequent figures consider all features). The window size is set to 100 since Figure 6 has shown that this is the optimal value for attack detection.

Figure 7a,b provide two important results. The first is that they identify the optimal value of the quantization factor for the Port Scan attack and for the DDoS attack. The second is that they show that the 2DDE features perform better than the other features. This result justifies the assumption made in this paper on the application of 2DDE to the IDS problem.

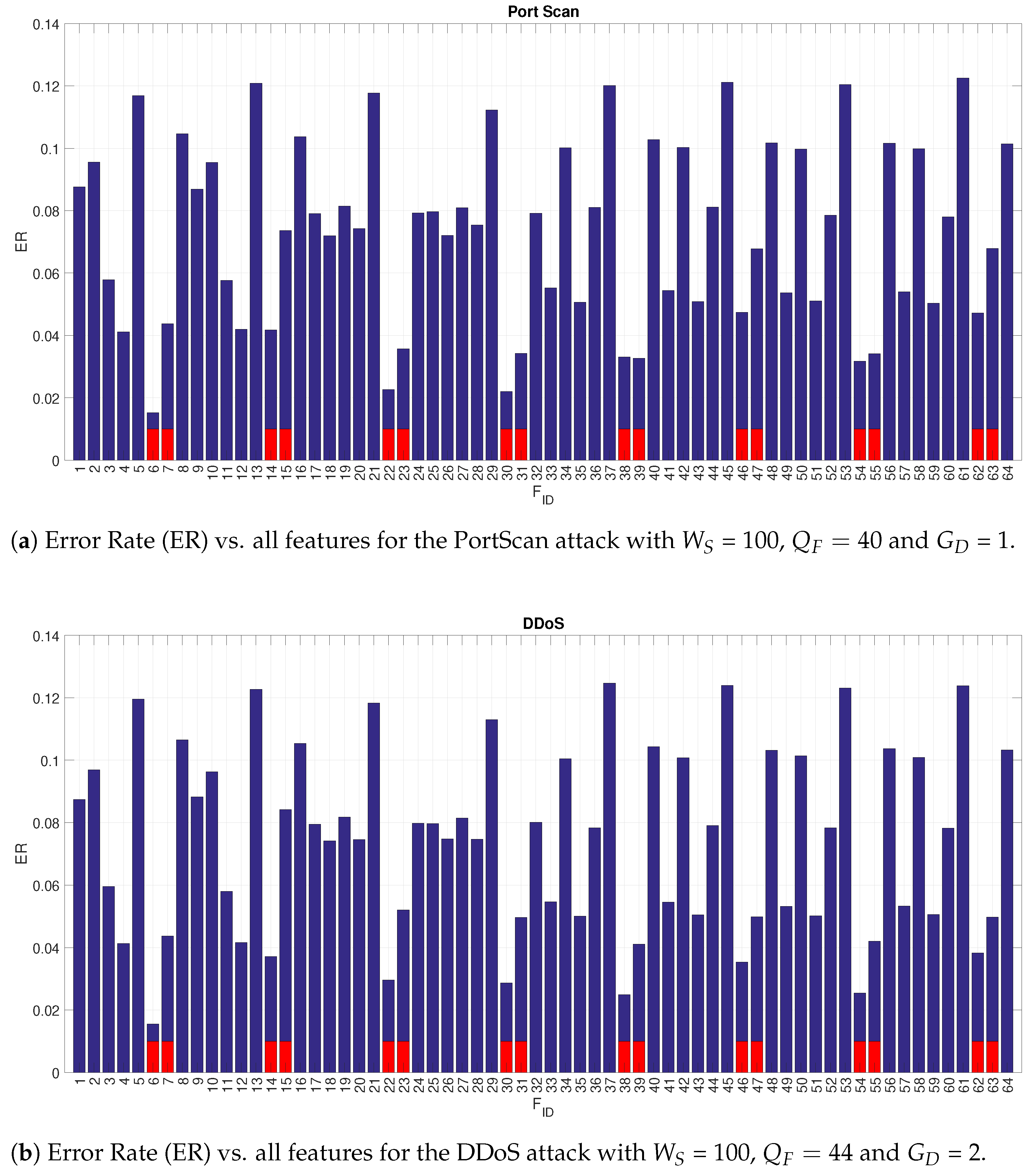

Figure 7 shows only the first 8 features. A more extensive analysis of the detection performance of each of the 64 features was then carried out by setting the other hyperparameters to their optimal values. The results are shown in Figure 8a,b, where the ER is reported for each feature identifier. To better visualize the features related to 2DDE, a red bar is used in the figures.

Figure 8a,b show that, across all 64 features, the 2DDE features obtain a consistently high detection accuracy in comparison to the other features. In particular, for both attacks, the values m = 2 and c = 3 in the 2DDE definition provide a better performance than the values m = 3 and c = 2. This result shows the higher detection performance of 2DDE in comparison to the other features (e.g., Shannon entropy or variance). The results shown in these figures also indicate which GLCM angle performs best. In general, the GLCM distance and angle defined by the 2-tuple [0 …] provide better results (in terms of detection accuracy) than the other 2-tuples.

The importance of 2DDE in comparison to other features for the IDS problem is also visible once SFS is applied to select the optimal set of features on the basis of the hyperparameter values already set. The results of the application of SFS are presented in Table 4, where the 10 best features are shown respectively for the DDoS and the Port Scan attack. In Table 4, the 2DDE features are highlighted in red. It can be seen that the 2DDE features are substantially present among the 10 best features, which shows that the application of 2DDE to this specific problem is an important element to achieve a higher detection accuracy.
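
The selection step can be illustrated with a greedy forward variant of SFS, which adds one feature at a time. The sketch below is written under assumptions: the text does not state which SFS variant was used, and the feature names, the toy scores and the redundancy penalty are hypothetical stand-ins for a score obtained by training and testing a classifier on each candidate subset.

```python
def sequential_forward_selection(features, score, k):
    """Greedily add the feature that maximises `score` until k are selected."""
    selected = []
    remaining = list(features)
    while remaining and len(selected) < k:
        # Evaluate each candidate together with the features already chosen.
        best = max(remaining, key=lambda f: score(selected + [f]))
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical per-feature usefulness (assumption, not taken from Table 4).
toy_value = {"f1": 0.30, "f2": 0.25, "f3": 0.20, "f4": 0.05}
# Hypothetical pair of features carrying overlapping information.
redundant_pairs = {frozenset(("f1", "f2"))}


def toy_score(subset):
    total = sum(toy_value[f] for f in subset)
    penalty = sum(0.2 for pair in redundant_pairs if pair <= set(subset))
    return total - penalty


chosen = sequential_forward_selection(toy_value, score=toy_score, k=2)
```

In this toy case the second pick is `f3` rather than the individually stronger `f2`, because the score is computed on the whole subset and not on each feature in isolation.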

4.2. Optimized Results

On the basis of the best features described in

Table 4 and the optimal values of the hyper-parameters defined in

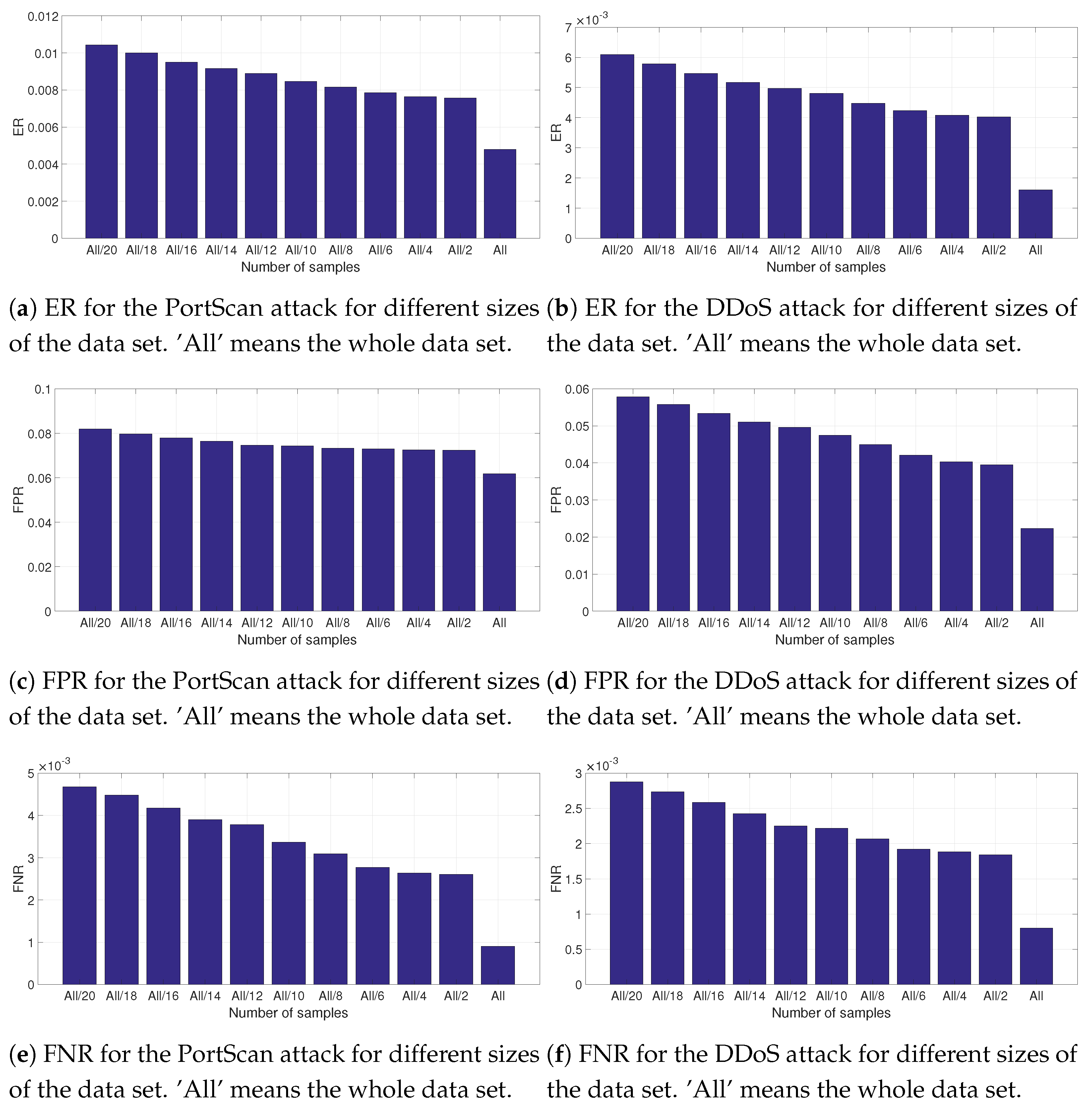

Table 3, the ER, FPR and FNR have been calculated using the Decision Tree algorithm. It was also evaluated the impact of the size of the data set. From the whole data set, a partitions of the whole data set have been selected and the ER, FPR and FNR have been calculated. The results are presented in

Figure 9 and related subfigures where ’All’ means the whole data set and ’All/x’ is a partition by the factor x. The size of ’All’ can be calculated from the values presented in

Table 1. The partition is created by extracting randonmly ’All/x’ elements from the whole data set. To mitigate the risk of bias, the selection of the partition and the calculation of the results is repeated 100 times and the results are averaged.
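
The repeated random partitioning can be sketched as follows. The definitions used here are assumptions, since this section does not restate them: ER is taken as the fraction of misclassified samples, FPR as FP/(FP + TN) and FNR as FN/(FN + TP), with label 1 for attack and 0 for legitimate traffic. The `evaluate` callback is a hypothetical placeholder for training and testing the classifier on a partition; in the toy usage it only scores precomputed (true, predicted) pairs.

```python
import random


def rates(y_true, y_pred):
    """Return (ER, FPR, FNR) for binary labels (1 = attack, 0 = legitimate)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    er = (fp + fn) / len(pairs)
    fpr = fp / (fp + tn) if fp + tn else 0.0
    fnr = fn / (fn + tp) if fn + tp else 0.0
    return er, fpr, fnr


def averaged_rates(samples, x, evaluate, repetitions=100, seed=0):
    """Average `evaluate` over random partitions of len(samples) // x elements."""
    rng = random.Random(seed)
    size = len(samples) // x
    totals = [0.0, 0.0, 0.0]
    for _ in range(repetitions):
        subset = rng.sample(samples, size)  # random extraction without replacement
        for i, value in enumerate(evaluate(subset)):
            totals[i] += value
    return tuple(total / repetitions for total in totals)


# Toy data (assumption): precomputed (true label, predicted label) pairs.
toy = [(1, 1)] * 40 + [(1, 0)] * 10 + [(0, 0)] * 45 + [(0, 1)] * 5


def toy_evaluate(subset):
    y_true, y_pred = zip(*subset)
    return rates(y_true, y_pred)


full_rates = averaged_rates(toy, 1, toy_evaluate, repetitions=1)  # 'All'
half_rates = averaged_rates(toy, 2, toy_evaluate)                 # 'All/2'
```

Averaging over many random extractions reduces the dependence of the reported metrics on one particular choice of partition.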

For both the PortScan and the DDoS attacks, the detection performance is lower for smaller partitions of the data set because it is more difficult for the algorithm to discriminate the legitimate traffic from the traffic related to the attack. This trend is consistent across all three metrics (ER, FPR and FNR) and both attacks.

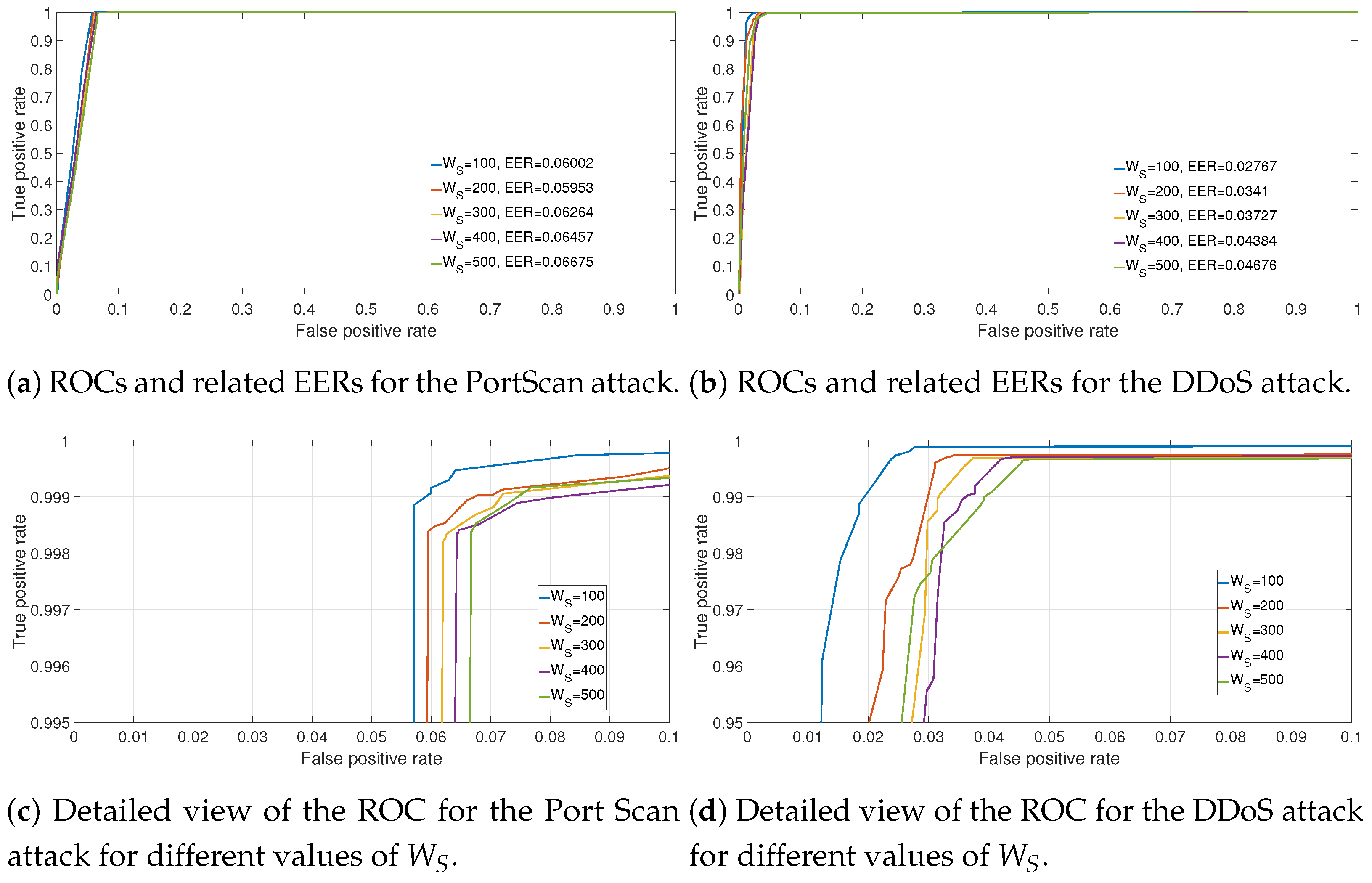

To complete the previous results, the ROCs for the DDoS and the PortScan attacks are presented respectively in Figure 10a,b. Since the FPR is relatively limited (because the data set is quite unbalanced), a more detailed view of the same ROCs (i.e., a zoom of the previous figures) is presented in Figure 10a,b respectively for the DDoS and the PortScan attacks. The values of the EER for each value of the window size are also reported. The results from the ROCs confirm the previous result that the optimal value of the window size is 100, because an increase of the window size produces slightly worse results in terms of ROCs and EER. It can also be seen that the detection of the PortScan attack is slightly worse than that of the DDoS attack. This may be because PortScan attacks are more difficult to distinguish from legitimate traffic than DDoS attacks when the entropy measures are applied (especially in the CIC-IDS2017 data set). The structure of the sequences of network flow features in the DDoS attacks can be quite different from legitimate traffic (e.g., since a flooding of messages is implemented), while the PortScan attack traffic may resemble legitimate traffic. The weakness of the proposed approach in achieving an optimal FPR is also discussed in the comparison with the literature results in Section 4.3. We note that the proposed approach manages to achieve a very competitive FNR instead.
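
As an illustration of how an EER can be read from a ROC, the sketch below builds the ROC points from classifier scores and takes the operating point where FPR and FNR are closest. It is a simplified approximation under assumptions: the scores and labels are hypothetical toy values, thresholds are taken at each distinct score, and no interpolation between ROC points is performed.

```python
def roc_points(scores, labels):
    """Return [(threshold, FPR, TPR)], one point per distinct score."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = []
    for threshold in sorted(set(scores), reverse=True):
        # A sample is flagged as an attack when its score reaches the threshold.
        tp = sum(1 for s, l in zip(scores, labels) if s >= threshold and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= threshold and l == 0)
        points.append((threshold, fp / negatives, tp / positives))
    return points


def equal_error_rate(points):
    """Approximate the EER at the ROC point where FPR and FNR are closest."""
    _, fpr, tpr = min(points, key=lambda p: abs(p[1] - (1 - p[2])))
    return (fpr + (1 - tpr)) / 2


# Toy scores (assumption): higher means "more likely an attack"; 1 = attack.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 1, 0, 0, 0]

points = roc_points(scores, labels)
eer = equal_error_rate(points)
```

A lower EER indicates a better trade-off between false positives and false negatives, which is how the ROCs for different window sizes can be compared with a single number.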

4.3. Comparison with Other Studies

On the basis of the optimization results obtained in Section 4.1, we have calculated the values of ER, FPR and FNR for the Port Scan and the DDoS attacks and compared them with the results in the literature on the same CICIDS2017 data set. The comparison is indicative because each study may have modified the initial data set in different ways: a subset of the initial 78 features may have been used, or only the data pertaining to specific attacks may have been used. We must also consider that the CICIDS2017 data set is relatively recent and not all the studies using it focused on a specific attack as was done in this study. The results are presented in Table 5, where the first three columns report the values of ER, FPR and FNR. The fourth column provides relevant notes (e.g., the specific adopted algorithm). The fifth column identifies the specific attack (i.e., DDoS or Port Scan) and the related study where the results were produced. Table 5 also provides the comparison of the machine learning algorithms: SVM, Naive Bayes and Decision Tree.

The results show that the proposed approach is competitive against other approaches proposed in the literature. For example, in the case of the DDoS attack, the obtained ER (0.0016) is smaller than the ER obtained by most of the other studies, with the exception of [7], where it has the same value, and [6], where the obtained ER is slightly lower than the result obtained in this study (0.0015 rather than 0.0016). It has to be noted that both [6,7] use sophisticated DL algorithms, which are more computationally demanding than the approach proposed in this paper. In addition, the approach proposed in this paper obtains a False Negative Rate (FNR) for the DDoS attack (i.e., 0.00079) that is considerably lower than the result obtained by all other approaches. On the other side, the FPR is worse than the value obtained by the other studies. Thus, this approach is particularly strong on the FNR but weaker on the FPR. A potential reason why the FNR is so low in comparison to the literature is the sliding window approach, where the presence of even a single network flow labelled as an attack in the data set is magnified to the size of the sliding window. The improvement of the FPR is one of the actions for future developments and investigations on this approach.
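
The magnification effect mentioned above can be illustrated with a toy labelling rule. The sketch assumes that a window is labelled as an attack when it contains at least one attack flow and that consecutive windows are shifted by one flow; both the rule and the `window_labels` function are hypothetical illustrations, not the labelling procedure confirmed for this study.

```python
def window_labels(flow_labels, window_size, step=1):
    """Label each sliding window 1 if it contains at least one attack flow."""
    return [
        int(any(flow_labels[start:start + window_size]))
        for start in range(0, len(flow_labels) - window_size + 1, step)
    ]


# Toy sequence of flow labels (assumption): a single attack flow among ten.
flows = [0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
labels = window_labels(flows, window_size=3)
```

Under this rule a single attack flow marks as many windows as the window size, so the attack class has more positive samples at the window level than at the flow level, which is one way the low FNR and the higher FPR could arise together.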

The results obtained with the DDoS attack are confirmed by the results obtained with the PortScan attack. The obtained FNR is better than the results reported in the literature, and the ER is also smaller than the results presented in other studies. In particular, our approach achieves an ER similar to the results in [9], which uses a DL approach (i.e., LSTM). On the other side, the FPR obtained with this approach is higher than the results reported in the literature. Another result shown in Table 5 is that the Decision Tree algorithm has a better detection performance than the SVM and Naive Bayes algorithms. This result is consistent with [5], where the DT provided the optimal detection accuracy.

An evaluation of the use of all the GLCM angles was also performed to validate the adoption of only a limited set of GLCM angles, as described in Section 3.3. The results, obtained using the Decision Tree algorithm, are provided in Table 6. They show that a subset of the GLCM angles (as selected in this study) provides a better performance than using all angles, since the ERs for the subset are smaller than the ERs for all the GLCM angles. The results are consistent across the different settings reported in Table 6 and for both the PortScan and the DDoS attacks.