1. Introduction

Multimedia content accounts for the majority of internet traffic. According to the Cisco Visual Networking Index, 82% of global mobile data traffic will be video by 2022 [1]. To handle traffic demand related to multimedia, HTTP adaptive streaming (HAS) solutions are often used.

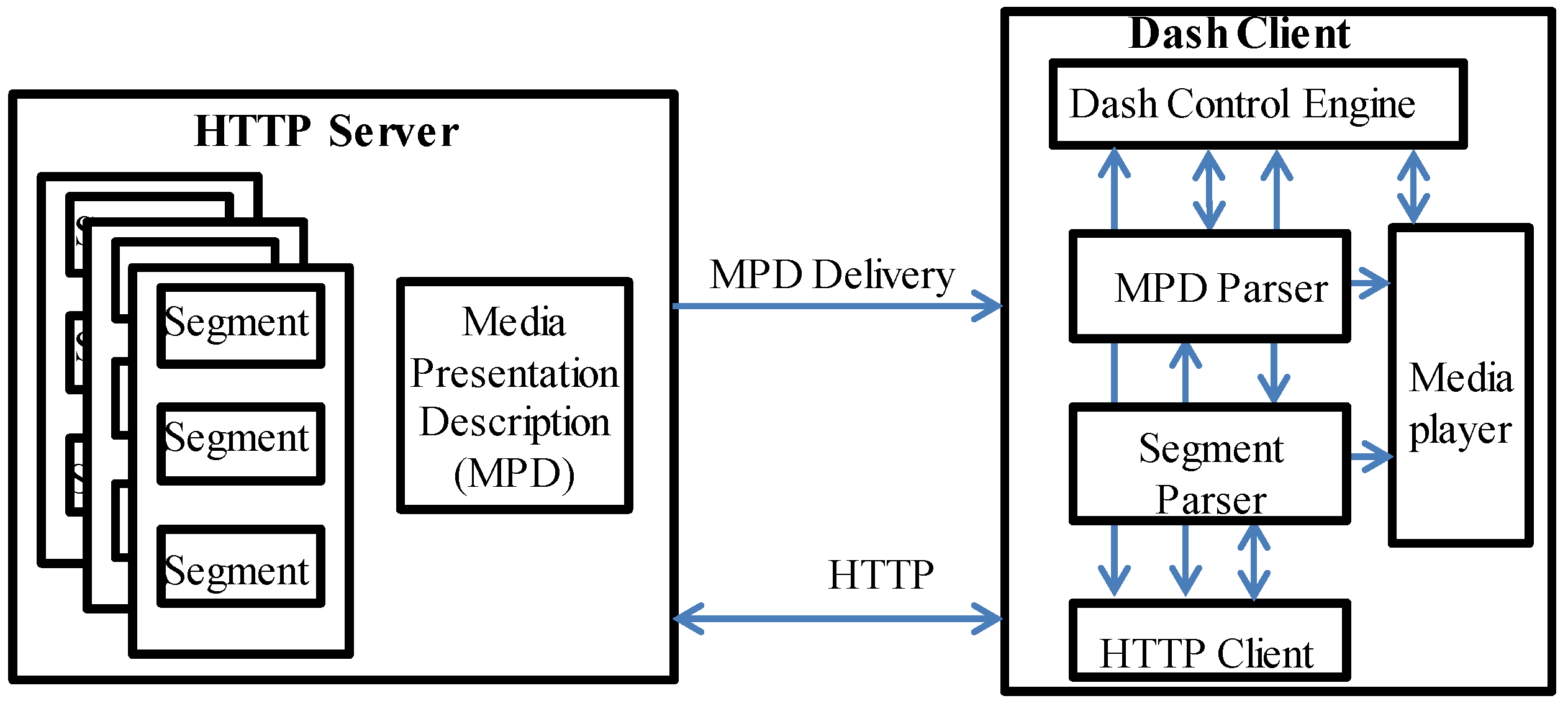

Figure 1 shows a simple MPEG-DASH streaming scenario between a video server and a DASH client. In MPEG-DASH, the video content stored in the server is encoded at multiple video rates and fragmented into small segments of fixed duration, τ. The video client starts the streaming session by requesting the Media Presentation Description (MPD) file from the server with an HTTP GET request. The video client then downloads the segments at the most suitable video rates available from the server. The adaptive bitrate (ABR) algorithm runs on the HTTP client and dynamically adjusts the video quality based on the network conditions. The objective of the ABR algorithm is to optimize the quality of experience (QoE) of video clients. A comprehensive survey on QoE determined the factors that affect user experience [2]. These QoE objectives include selecting the highest set of video bitrates, avoiding unnecessary video bitrate changes, and avoiding playback interruptions. Playback interruptions and selection of video bitrates affect the user experience the most [3]. However, these video quality objectives are contradictory. On one hand, selecting the highest available video rate increases the risk of playback interruption; on the other hand, mitigating the risk of interruptions during playback means selecting lower-quality segments. Therefore, the selection of the most feasible video rate is a challenging task.

The majority of existing algorithms employ fixed control rules to pick the video rate based on available throughput [4,5], the amount of video in the playback buffer [6], or a combination of the two [7,8,9,10,11]. These algorithms require significant tuning, and their performance varies from one client or set of network conditions to another.

Video streaming services deploy segment durations (τ) differently. Microsoft’s Smooth Streaming and Adobe’s HTTP Dynamic Streaming offer segment durations of two seconds and four seconds, respectively [12,13]. The client can buffer a larger number of smaller segments, compared to larger segments, as shown in Figure 2. More segments reduce the risk of playback interruption. Let us assume the current buffer level is four seconds, and the segment duration is two seconds. This means the buffer holds two segments. If the segment duration is four seconds, then only one segment fits in the buffer. If throughput is less than the video rate of the segment being downloaded, there is a greater risk of buffer underflow when downloading larger segments. This shows that instead of observing only the buffer level, clients should observe the ratio of the buffer level to the segment duration in order to adapt video quality. Moreover, with the smaller segment duration, the client has more opportunities to adapt the video rate than with the larger duration. In a highly unstable network, the client could adjust the video rate more quickly by downloading smaller segments.
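The arithmetic in this example can be checked with a short sketch. The helper name `segments_in_buffer` is hypothetical and is not part of any DASH player API; it only divides the buffer level by the segment duration.

```python
def segments_in_buffer(buffer_level_s: float, segment_duration_s: float) -> float:
    """Return the buffer level expressed in segments (the ratio B / tau)."""
    if segment_duration_s <= 0:
        raise ValueError("segment duration must be positive")
    return buffer_level_s / segment_duration_s


# Same 4 s buffer level, evaluated for the two segment durations in the example.
two_second_segments = segments_in_buffer(4.0, 2.0)   # two segments buffered
four_second_segments = segments_in_buffer(4.0, 4.0)  # one segment buffered
```

The same buffer level therefore gives the client twice as many download opportunities before underflow when segments are half as long.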

Similarly, different video players offer different playback buffer sizes, Bmax. The buffer-based algorithms adapt the video rates aggressively or conservatively based on the playback buffer level. As the buffer level increases, the algorithms select the video rate aggressively. A smaller playback buffer would fill up quickly, compared to a larger buffer. This would allow the adaptation algorithms to aggressively increase the video rate. However, a larger buffer reduces the risk of playback interruption in case of a mismatch between the selected video rate and the available bandwidth. The ABR algorithms should be able to guarantee QoE under different settings. However, the existing algorithms ignore important parameters, such as segment duration and the client’s playback buffer size, when selecting video quality. This results in inconsistent performance from algorithms under different settings.

In this paper, we present a buffer- and segment-aware fuzzy-based ABR algorithm for a single cell with multiple clients in order to optimize the QoE of competing clients. The objective of the proposed algorithm is to achieve optimal performance across a variety of video client settings and QoE objectives. To this end, the proposed algorithm considers segment duration, client playback buffer size, estimated throughput, and the amount of video in the playback buffer to select rates for the next video segments. We conducted extensive experiments to evaluate the performance of the proposed algorithm with multiple segment durations, playback buffer sizes, and video sequences. The results reveal that the proposed adaptation algorithm outperforms state-of-the-art algorithms, with average improvements of 5% to 18% in video rate, about 13% to 30% in QoE, and up to 45% in bandwidth utilization. Moreover, the proposed algorithm manages the clients’ playback buffers to avoid unnecessary rebuffering.

The rest of this paper is organized as follows. Section 2 reviews the existing video streaming algorithms. Section 3 describes the QoE maximization problem. Section 4 describes the proposed fuzzy-based logic controller, while Section 5 details the quality adaptation algorithm, and Section 6 presents the simulation results. Finally, Section 7 concludes the paper.

2. Related Work

Over the last decade, multiple ABR schemes have been proposed to improve QoE. The ABR algorithms can be divided into three categories: (1) throughput-based, (2) buffer-based, and (3) hybrid. Throughput-based algorithms pick the video rate of the segments considering the available throughput, T(i), observed during the download of the ith segment [4,14,15,16,17]. In an unstable network, the available throughput may fluctuate quickly. Because the video rate is selected based on throughput, an unstable network would lead to more video rate switches, which degrades the user experience. In order to minimize the frequency of video rate switches, the smoothed throughput measure, Ts(i), is employed, as defined in Equation (1), where the value of the weighting factor δ is within the range [0, 1]. The authors in [18] provided pseudocode for the adaptation algorithm of Google’s MPEG-DASH Media Source demo. The algorithm selects video rates based only on the throughput observed during download of the segments. The algorithm uses moving averages with two different bandwidth estimation coefficients to reflect small- and large-scale bandwidth fluctuations. It then selects the smaller of the two as the bandwidth estimate for the next segment. Finally, the algorithm selects the highest available video rate that is lower than the bandwidth estimate.
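A minimal sketch of throughput smoothing followed by rate selection is given below. The smoothing convention, Ts(i) = (1 − δ)·Ts(i − 1) + δ·T(i), is an assumption chosen for illustration; Equation (1) may weight the terms differently. The function names and the bitrate ladder are hypothetical.

```python
def smooth_throughput(samples_kbps, delta):
    """Exponentially weighted smoothing of per-segment throughput samples.

    ASSUMED convention: Ts(i) = (1 - delta) * Ts(i-1) + delta * T(i),
    with the first estimate initialised to the first sample.
    """
    if not 0.0 <= delta <= 1.0:
        raise ValueError("delta must lie in [0, 1]")
    smoothed = []
    for sample in samples_kbps:
        if not smoothed:
            smoothed.append(float(sample))
        else:
            smoothed.append((1.0 - delta) * smoothed[-1] + delta * sample)
    return smoothed


def highest_rate_below(rates_kbps, estimate_kbps):
    """Pick the highest available rate strictly below the estimate.

    Falls back to the lowest rate when no rate is below the estimate.
    """
    candidates = [r for r in rates_kbps if r < estimate_kbps]
    return max(candidates) if candidates else min(rates_kbps)


rates = [500, 1000, 2000, 4000]  # hypothetical bitrate ladder (kbps)
estimates = smooth_throughput([3000, 1000, 3000], delta=0.5)
selected = highest_rate_below(rates, estimates[-1])
```

With these samples the dip to 1000 kbps pulls the estimate down only partially, so the selected rate changes less often than it would with the raw throughput.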

Because these adaptation algorithms ignore the buffer level, selecting video rates based on smoothed throughput increases the risk of playback interruption. Li et al. [19] showed that throughput-based schemes cannot accurately estimate bandwidth when multiple clients compete for access through a network bottleneck. This leads to degradation in the selected video rates, unfair and inefficient utilization of bandwidth, and a higher frequency of video rate switches.

Some ABR algorithms observe only the playback buffer to select the video quality [20,21,22,23]. Huang et al. [6] proposed a buffer-based algorithm (BBA) that divides the playback buffer using multiple predefined thresholds (B1, B2, B3, and Bmax) such that B1 < B2 < B3 < Bmax. The algorithm increases or decreases the video rate aggressively or conservatively, depending on whether the playback buffer level rises above the next higher buffer threshold or falls below the next lower buffer threshold. In [21], the authors formulated video rate adaptation as a utility maximization problem. They devised an online algorithm called BOLA that uses Lyapunov optimization to improve the user experience.

Multiple researchers have used a combination of playback buffer and throughput to select video quality [7,8,11,23,24,25,26,27,28]. Such algorithms combine the approaches used by throughput-based and buffer-based algorithms to select the quality of the next segment. Vergados et al. [25] proposed the Fuzzy-based DASH (FDASH) adaptation algorithm, which considers the buffer level and variations in its level to mitigate playback interruption. The authors in [26] extended the work in [25] and also considered the available power in the selection of the segments in order to extend the lifetime of mobile video clients. Kim et al. [26] modified FDASH by employing history-based throughput estimation, a segment bit-rate filtering module (SBFM), and a Start Mechanism to improve the user experience. In [28], the authors used segment size in addition to throughput and the playback buffer size for video rate adaptation. Akshan et al. [29] proposed a fuzzy-based adaptation controller for DASH that takes observed throughput and playback buffer as input to pick the video rates.

Multiple studies have shown that when multiple DASH clients compete for bandwidth in a wired network, the ON-OFF traffic they generate results in an unfair allocation of bandwidth [4,6]. The mechanism proposed in [29] also minimizes ON-OFF traffic by selecting video rates higher than the observed throughput to allow the clients to download segments continuously. However, this approach would lead to poor performance in a cellular network. The reason is that, unlike in a wired network, the throughput in a cellular network depends on multiple factors, including propagation distance, fading, interference, and user mobility. The authors in [30] use a fuzzy-based adaptation mechanism that uses the moving average of the observed throughput and playback buffer level variations to minimize the video rate switches. Then, the fuzzy logic system selects the video rate based on the difference between the moving average and real values. Hou et al. [31] proposed a three-input fuzzy controller that takes throughput, buffer level, and buffer level variations as input to enhance the user experience.

The existing algorithms employ fixed control rules to pick the video rate based on the observed throughput and playback buffer level. Furthermore, the algorithms ignore important video client and content characteristics including segment duration and buffer size. This leads to the inconsistent performance of the algorithms under different video content and client settings. Different from existing works, the proposed fuzzy-based algorithm adapts video rates by exploiting not only estimated throughput and playback buffer level but also video player settings and video content characteristics. The objective of the proposed approach is to achieve optimal performance across a variety of video client settings and QoE objectives.

3. QoE Maximization

The HTTP server stores video content encoded into a set of $m$ video rates, $R = \{R_1, R_2, R_3, \ldots, R_m\}$. Each representation of a video is split into segments of fixed duration $\tau$. The segments are downloaded into the playback buffer, which holds the unviewed video. Let $B_k \in [0, B_{max}]$ be the playback buffer level upon download of the $k$th segment. The client downloads the $k$th segment encoded at the $i$th video rate, $R_k^i$. The size of the segment is $R_k^i \times \tau$. The download time of the $k$th segment is $R_k^i \times \tau / T_k$, where $T_k$ is the throughput observed when downloading the $k$th segment.
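The download-time expression can be illustrated with a small sketch. The buffer update shown here (drain during the download, then add one segment, clamped to the buffer size) is an assumed simplification for illustration and is not taken from the text; the function names are hypothetical.

```python
def download_time_s(rate_kbps, segment_duration_s, throughput_kbps):
    """Download time of one segment: (R * tau) / T."""
    return rate_kbps * segment_duration_s / throughput_kbps


def next_buffer_level_s(buffer_s, rate_kbps, segment_duration_s,
                        throughput_kbps, buffer_max_s):
    """ASSUMED buffer update: drain during the download, then add one segment.

    The level is clamped to [0, buffer_max_s]; a real player would instead
    pause requests when the buffer is full.
    """
    drained = max(buffer_s - download_time_s(rate_kbps, segment_duration_s,
                                             throughput_kbps), 0.0)
    return min(drained + segment_duration_s, buffer_max_s)


# A 2 s segment at 2000 kbps over a 1000 kbps link takes 4 s to download.
t_dl = download_time_s(2000, 2.0, 1000)
b_next = next_buffer_level_s(10.0, 2000, 2.0, 1000, buffer_max_s=30.0)
```

Because the download takes longer than the segment plays, the buffer shrinks from 10 s to 8 s in this example.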

The objective of ABR algorithms is to improve the QoE of video clients to achieve long-term user engagement [32]. As mentioned in Section 1, the key QoE factors that affect user experience include video rate, video rate variations, and playback interruptions.

The average video bitrate over the downloaded segments is given by:

$$\frac{1}{S} \sum_{k=1}^{S} R_k^i$$

where $R_k^i$ is the $i$th video rate selected for segment $k$ ($k$ is the segment index), and $S$ is the total number of segments downloaded by the client.

The magnitude of the changes in the video rate from one segment to the next is given by:

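The client experiences a playback interruption if the download time ($R_k^i \times \tau / T_k$) is greater than the current buffer level, $B_k$. The total interruption time, $IR$, is $\sum_{k=1}^{S} \left(R_k^i \times \tau / T_k - B_k\right)^+$, where $(x)^+ = \max(x, 0)$.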

In this study, we used the same QoE metric used by the authors in [17], which is defined in Equation (4). For a video fragmented into $S$ segments, $q(\cdot)$ maps the video rate to the quality perceived by the viewer. The authors in [17] used $q(R_k^i) = R_k^i$ and $\mu = 3000$, signifying that a playback interruption of 1 s receives the same penalty as reducing the bitrate of a segment by 3000 kbps. We used the same values in our evaluation. In this study, we calculated the average QoE per segment (that is, the total QoE metric divided by the number of segments). In Section 6, we evaluate the QoE of the algorithms by using Equation (4).
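A rough sketch of a per-segment QoE computation is shown below. The functional form (total quality, minus the sum of absolute quality changes, minus µ times the total interruption time) is an assumption based on the three QoE factors named in this section; the exact terms and weights of Equation (4) may differ. The function name and sample values are hypothetical.

```python
def average_qoe_per_segment(rates_kbps, interruption_s, mu=3000.0):
    """Per-segment QoE under the ASSUMED form
    sum(q(R_k)) - sum(|q(R_{k+1}) - q(R_k)|) - mu * IR, with q(R) = R."""
    if not rates_kbps:
        raise ValueError("at least one segment is required")
    quality = sum(rates_kbps)
    switching = sum(abs(b - a) for a, b in zip(rates_kbps, rates_kbps[1:]))
    total = quality - switching - mu * interruption_s
    return total / len(rates_kbps)


rates = [1000, 1000, 2000, 2000]  # hypothetical per-segment rates (kbps)
qoe_no_stall = average_qoe_per_segment(rates, interruption_s=0.0)
qoe_one_second_stall = average_qoe_per_segment(rates, interruption_s=1.0)
```

With µ = 3000, one second of interruption removes 3000 from the total, which is the trade-off between stalls and bitrate described above.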

In addition, we calculate the mean opinion score (MOS) using the model proposed in [10] to evaluate the performance of the algorithms. This MOS model was also used by the authors of [8] in their study to evaluate the algorithms. In the MOS model, $F_{freq}$ and $F_{Tavg}$ represent the playback interruption frequency and the average interruption time, respectively, and $QL_k$ denotes the quality level of the $k$th segment; the remaining two terms are the average quality level and the standard deviation of the quality level.

The QoE maximization problem: The problem of video rate adaptation for QoE maximization is given by:

Given the throughput history observed from downloading previous segments, $\{T_t, t \in [t_1, t_{k+1}]\}$, the optimization problem outputs the video rate decisions $(R_1, \ldots, R_k)$. The notation $(x)^+ = \max(x, 0)$ ensures that the term is never negative. Constraint (7) guarantees that the clients do not experience any playback interruptions for the entire streaming duration. Constraint (8) ensures that the buffer level stays between 0 and $B_{max}$. Finally, constraint (9) specifies that the discrete video rate downloaded by the client from the server belongs to the set of available video rates.
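The structure described in this paragraph can be sketched as follows. This is a reconstruction from the prose and from the definitions earlier in this section, not the exact displayed formulation; the objective is written simply as the QoE metric, and the three constraints are written in the order in which the paragraph describes them.

```latex
\begin{aligned}
\max_{R_1, \ldots, R_S} \quad & QoE \\
\text{s.t.} \quad & \frac{R_k^i \, \tau}{T_k} \le B_k, && \text{(no playback interruption)} \\
& 0 \le B_k \le B_{max}, && \text{(bounded buffer level)} \\
& R_k^i \in R, \quad k = 1, \ldots, S && \text{(available video rates)}
\end{aligned}
```

The first constraint states that each segment must finish downloading before the buffer drains, which is the condition under which the interruption term vanishes.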

4. The Fuzzy Logic Controller

The goal of the proposed fuzzy-based scheme is to select a video rate from R = {R1, R2, R3, …, Rm} to improve the user experience. Video rates and playback interruptions are important factors when improving QoE [2,3,32]. In addition, frequent video rate switches were found to annoy viewers [33]. We consider the following objectives to improve QoE:

- (1) Maximize the video rates;
- (2) Minimize playback interruptions;
- (3) Minimize the frequency of video rate switches.

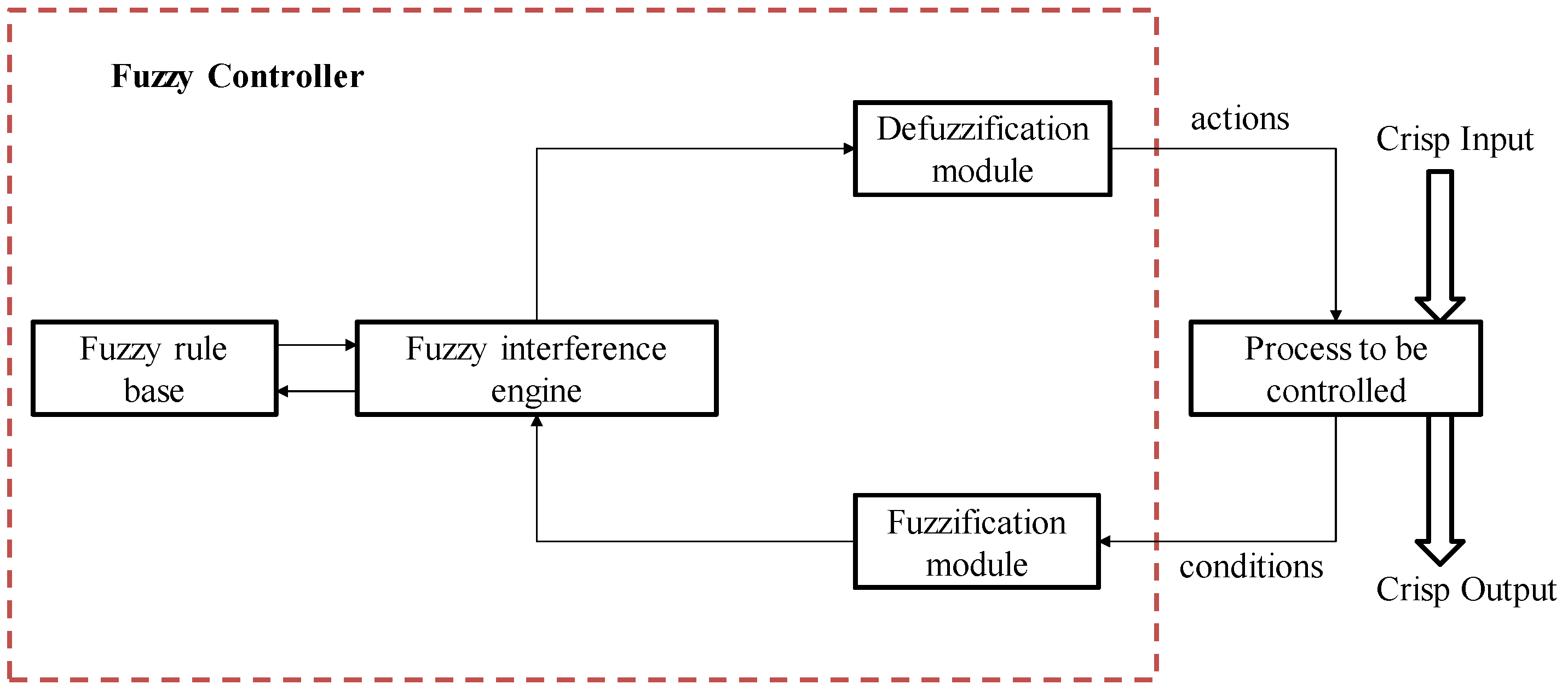

In this section, we discuss the details of the proposed buffer- and segment-aware fuzzy-based quality adaptation algorithm. We also discuss how a fuzzy logic controller (FLC) is incorporated in the client in order to select the most feasible video rate. In this work, we used an FLC based on the Mamdani model [34]. The structure of the fuzzy logic controller is shown in Figure 3. It consists of four components: a fuzzification module, a fuzzy inference engine, a fuzzy rule base, and a defuzzification module.

The controller operates by repeating a cycle of the following steps:

Step 1: Crisp measurements (inputs) are taken of all variables that represent the conditions of the controlled process.

Step 2: These measurements are mapped into corresponding fuzzy sets to express measurement uncertainties. This step is called fuzzification.

Step 3: The fuzzified measurements are then used by the inference engine. The inference engine evaluates the control rules stored in the fuzzy rule base. The output of this evaluation is a fuzzy set.

Step 4: The defuzzification module converts the fuzzy set into a single crisp value. This is the final step, called defuzzification.

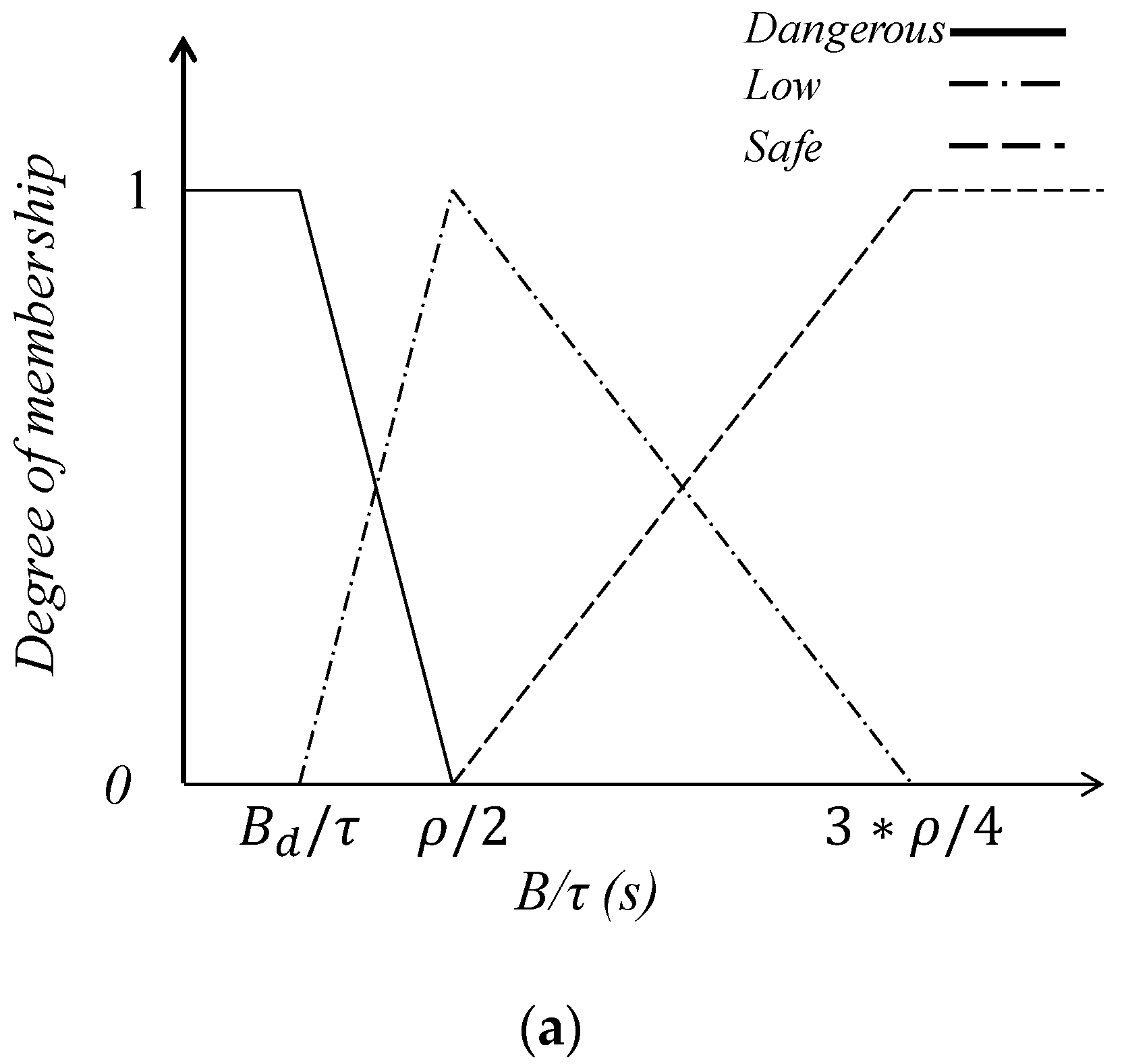

In this work, the FLC applies three variables as input: (1) the ratio of the client’s playback buffer level to the segment duration (B/τ), (2) the ratio of ∆B to the segment duration, (∆B/τ) where ∆B = Bk − Bk−1 is the difference in the buffer level upon download of the last two segments, and (3) the ratio of throughput to the selected video rate (T/Rik).

The existing algorithms select video rates based on the available buffer level. As explained in Section 1, buffer level alone does not give us complete information. It is important to note here that segment durations change from one video content provider to the next. If the available playback buffer level is eight seconds, and the segment duration is two seconds, four segments can fit in the buffer. However, if the segment duration is four seconds, it means only two segments can fit into the buffer. Therefore, in this work, we consider the ratio of buffer level to segment duration.

The change in the buffer level (∆B) is the result of a mismatch between available throughput and the video rate. ∆B helps the algorithm determine whether to increase or decrease the video rate. However, ∆B alone does not accurately capture the variations in the buffer level during the download of a segment. Let us understand this by using an example. If the segment duration is two seconds, and the change in the buffer level during the download of a segment is four seconds, it means that during the download of the segment, a portion of the buffer equal to twice the segment duration gets filled up. However, if the segment duration is four seconds and the change in the buffer level is also four seconds, the portion of the buffer level that gets filled up is equal to one segment. Therefore, we consider the ratio of ∆B to segment duration as input for the FLC.

In addition to B/τ and ∆B/τ, the ratio of throughput to video rate helps to determine if the video rate should be switched abruptly or gradually. Therefore, the FLC applies the ratio of throughput to video rate as input.
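The three inputs can be computed from quantities the client already tracks. The sketch below is illustrative only; the function and parameter names are hypothetical.

```python
def flc_inputs(buffer_s, prev_buffer_s, segment_duration_s,
               throughput_kbps, rate_kbps):
    """Return the three crisp FLC inputs: B/tau, dB/tau, and T/R."""
    if segment_duration_s <= 0 or rate_kbps <= 0:
        raise ValueError("segment duration and video rate must be positive")
    buffer_ratio = buffer_s / segment_duration_s
    # dB is the change in buffer level between the last two segment downloads.
    delta_ratio = (buffer_s - prev_buffer_s) / segment_duration_s
    throughput_ratio = throughput_kbps / rate_kbps
    return buffer_ratio, delta_ratio, throughput_ratio


inputs = flc_inputs(buffer_s=8.0, prev_buffer_s=4.0, segment_duration_s=2.0,
                    throughput_kbps=3000.0, rate_kbps=2000.0)
```

Here the buffer holds four segments, grew by two segments during the last download, and the throughput is 1.5 times the selected video rate.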

Figure 4a shows the membership functions of the ratio of buffer level to segment duration, in which three linguistic variables are considered: Dangerous, Low, and Safe. When the buffer level is close to empty, video quality is compromised in order to mitigate the risk of playback interruption. As the playback buffer fills up, video quality is increased to improve the user experience.

Figure 4b shows the membership functions of the ratio of ∆B to segment duration, in which three more linguistic variables are considered: Decreasing, Stable, and Increasing. These linguistic variables describe the rate of change in the buffer level between subsequent segment downloads. Ideally, the buffer level should remain stable. A decrease in the buffer level forces the adaptation algorithm to decrease the video rate, whereas an increase in the buffer level suggests that the available throughput is not being utilized completely. That provides an opportunity to increase the video rate and improve video quality.

Figure 4c shows the membership functions of the ratio of throughput to selected video rate, in which three more linguistic variables are considered: Low, Steady, and High. These linguistic variables describe the extent of the mismatch between throughput and video rate. The adaptation algorithm adapts the video quality as the throughput fluctuates over time. Ideally, the adaptation algorithm selects the highest available video rate that is less than the throughput in order to efficiently utilize bandwidth.
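Fuzzification of one input can be sketched with trapezoidal membership functions. The breakpoints below are hypothetical placeholders chosen only to make the example run; the actual shapes and breakpoints are those of Figure 4a.

```python
INF = float("inf")


def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside [a, d], 1 on [b, c], linear between."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# HYPOTHETICAL breakpoints for B/tau, expressed in segments.
BUFFER_RATIO_SETS = {
    "dangerous": (-INF, -INF, 1.0, 2.0),
    "low": (1.0, 2.0, 4.0, 6.0),
    "safe": (4.0, 6.0, INF, INF),
}


def fuzzify(x, fuzzy_sets):
    """Map a crisp value to its degree of membership in each fuzzy set."""
    return {label: trapezoid(x, *params) for label, params in fuzzy_sets.items()}


memberships = fuzzify(1.5, BUFFER_RATIO_SETS)
```

A buffer ratio of 1.5 segments sits halfway between the two lowest sets, so it belongs partly to Dangerous and partly to Low, which is what lets the controller blend neighbouring rules instead of switching abruptly.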

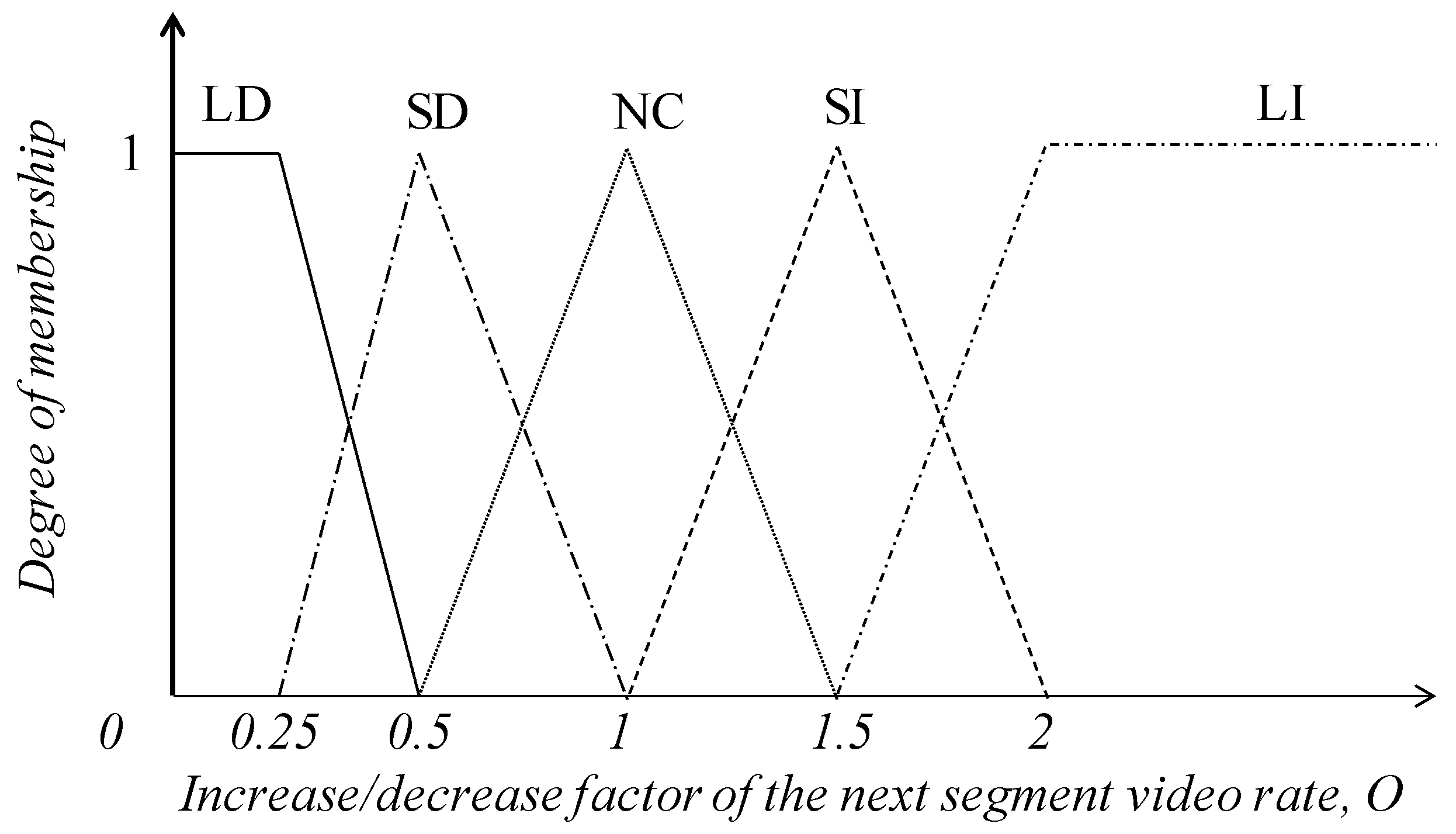

The output of the fuzzy logic controller, F, represents an increase or decrease in the video rate of the next segment. As shown in Figure 5, the linguistic variables for the output are Large Decrease (LD), Small Decrease (SD), No Change (NC), Small Increase (SI), and Large Increase (LI).

The fuzzy rules provided in Table 1 are applied to map the fuzzy inputs to the fuzzy output. Each rule represents a fuzzy if–then statement. The output of the rules is aggregated into a fuzzy set. As shown in Table 1, when B/τ is in the dangerous zone, the FLC decreases the video rate to minimize the risk of buffer underflow. The FLC observes ∆B/τ and T/Rik to determine whether to decrease the video rate abruptly or gradually. The FLC decreases the video rate gradually only when ∆B/τ is not in the decreasing zone and T/Rik is not in the low zone.

As B/τ enters the low zone, the FLC focuses on staying at the current video rate to minimize the frequency of video rate changes. However, when ∆B/τ and T/Rik are in the decreasing zone and low zone, respectively, the FLC decides to slowly decrease the video rate. When ∆B/τ and T/Rik are in the increasing zone and high zone, respectively, the FLC decides to slowly increase the video rate.

Finally, when B/τ enters the safe zone, there are enough segments available in the buffer to increase video quality. However, if ∆B/τ and T/Rik are in the decreasing zone and low zone, respectively, the FLC decides to keep the current quality. That mitigates the risk of the buffer level dropping to the low zone or dangerous zone, and it minimizes video rate switches.
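The rules described in prose can be sketched as a small rule function with Mamdani-style evaluation (minimum for AND, maximum for aggregation). This encodes only what the text states; Table 1 is the authoritative rule base. The size of the increase in the safe zone is not given in the prose, so the sketch assumes Small Increase there, and all function names are hypothetical.

```python
from itertools import product


def rule_output(buffer_zone, delta_zone, throughput_zone):
    """Output label for one rule, following the prose description."""
    falling = delta_zone == "decreasing" and throughput_zone == "low"
    rising = delta_zone == "increasing" and throughput_zone == "high"
    if buffer_zone == "dangerous":
        # Gradual decrease only if neither input signals trouble.
        gentle = delta_zone != "decreasing" and throughput_zone != "low"
        return "SD" if gentle else "LD"
    if buffer_zone == "low":
        if falling:
            return "SD"
        if rising:
            return "SI"
        return "NC"
    if buffer_zone == "safe":
        # ASSUMPTION: the prose does not give the increase size; SI is used.
        return "NC" if falling else "SI"
    raise ValueError(f"unknown buffer zone: {buffer_zone}")


def evaluate_rules(buffer_mu, delta_mu, throughput_mu):
    """Fire every rule with min() and aggregate per output label with max()."""
    strengths = {}
    for (bz, bm), (dz, dm), (tz, tm) in product(
            buffer_mu.items(), delta_mu.items(), throughput_mu.items()):
        strength = min(bm, dm, tm)
        if strength > 0.0:
            label = rule_output(bz, dz, tz)
            strengths[label] = max(strengths.get(label, 0.0), strength)
    return strengths


fired = evaluate_rules(
    {"dangerous": 0.5, "low": 0.5, "safe": 0.0},
    {"decreasing": 1.0, "stable": 0.0, "increasing": 0.0},
    {"low": 1.0, "steady": 0.0, "high": 0.0},
)
```

In this example the buffer is between the dangerous and low zones while both other inputs signal trouble, so the Large Decrease and Small Decrease outputs fire with equal strength.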

The output, F, is defuzzified to a crisp value using the center of sums method and is calculated as:

$$F = \frac{\sum_{j=1}^{N} A_j \, x_j}{\sum_{j=1}^{N} A_j}$$

where $A_j$ represents the area of the $j$th output membership function, $N$ represents the number of output membership functions, and $x_j$ represents the center of that area.
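A minimal sketch of center-of-sums defuzzification is shown below: each output membership function contributes its area times the center of that area, and the total is normalised by the summed areas. The areas and centers used in the example are hypothetical values, not taken from Figure 5.

```python
def center_of_sums(areas, centers):
    """Crisp output as the area-weighted average of the area centers."""
    if len(areas) != len(centers):
        raise ValueError("areas and centers must have the same length")
    total_area = sum(areas)
    if total_area == 0:
        raise ValueError("no rule fired: total area is zero")
    return sum(a * x for a, x in zip(areas, centers)) / total_area


# HYPOTHETICAL clipped areas and centers for two fired output sets.
crisp = center_of_sums(areas=[0.5, 1.5], centers=[0.5, 1.0])
```

The output set with the larger area pulls the crisp value toward its own center, so the result here lies closer to 1.0 than to 0.5.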

5. Proposed Fuzzy-Based Adaptation Scheme

The DASH client downloads a video stream stored in multiple discrete video rates in the HTTP server. The set of video rates available for the video stream is denoted by R. The client adaptively selects a video rate from the available video rates in set R. This is achieved through the fuzzy logic controller. Based on the crisp output, F, the proposed scheme first selects Rs according to Equation (14), where Ts is the smoothed throughput calculated using Equation (1), in which the value of the weighting factor δ is set to 0.8. One of the challenges faced by ABR algorithms is that the control decisions available to them are coarse-grained because only a limited set of video rates is available for a given video. As seen in Equation (14), for the next video rate the proposed scheme selects the highest available video rate that is less than the bound computed from F and Ts.
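The selection step can be sketched as follows. The bound is assumed here to be the product F × Ts, which is one plausible reading of the description; the exact expression is the one in Equation (14). The function name and the bitrate ladder are hypothetical.

```python
def select_rate(rates_kbps, crisp_output, smoothed_throughput_kbps):
    """Highest available rate strictly below an ASSUMED bound F * Ts.

    Falls back to the lowest rate when no rate is below the bound.
    """
    bound = crisp_output * smoothed_throughput_kbps  # ASSUMPTION: F * Ts
    below = [r for r in rates_kbps if r < bound]
    return max(below) if below else min(rates_kbps)


ladder = [500, 1000, 2000, 4000]  # hypothetical bitrate ladder (kbps)
r_s = select_rate(ladder, crisp_output=0.9, smoothed_throughput_kbps=2500.0)
```

Because the ladder is discrete, a bound of about 2250 kbps maps to 2000 kbps, which illustrates the coarse-grained control mentioned above.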

Next, to minimize video rate switches, Algorithm 1 is invoked after the selection of Rs. This algorithm first determines if Rs is greater or smaller than the previously selected video rate, Rprev. If Rs is greater than Rprev, the algorithm checks the buffer level. If the buffer level is within the danger zone, (Bk < Bdang) (line 5), then the video rate remains unchanged (line 6). Since the buffer level is already low, increasing the video rate increases the risk of playback interruption. Otherwise, the algorithm selects Rs as the video rate for the next segment (line 8). The buffer threshold, Bdang, is derived from the minimum segment duration and a fraction of the buffer size.

| Line | Algorithm 1: Video Rate Switch Minimization |
| --- | --- |
| 1 | Input: Rs: video rate selected based on crisp output of FLC; Rprev: video rate of the last downloaded segment; Bk: current buffer level; Ts: smoothed throughput; D: segment duration; Bmax: buffer size; Bdang: danger-zone buffer threshold; Bpred: predicted buffer level. |
| 2 | Output: Rnext: video rate for next segment |
| 3 | if Rs > Rprev |
| 4 | &emsp;Bpred = Bk + (Ts / Rs - 1) * D |
| 5 | &emsp;if (Bpred <= Bdang) |
| 6 | &emsp;&emsp;Rnext = Rprev |
| 7 | &emsp;else |
| 8 | &emsp;&emsp;Rnext = Rs |
| 9 | else if Rs < Rprev |
| 10 | &emsp;Bpred = Bk + (Ts / Rs - 1) * D |
| 11 | &emsp;if (Bpred >= 0.5 Bmax) |
| 12 | &emsp;&emsp;Rnext = Rprev |
| 13 | &emsp;else |
| 14 | &emsp;&emsp;Rnext = Rs |

As explained in Section 1, video streaming services offer segments of different durations. As the segment duration increases in an unstable environment, the risk of buffer underflow increases. Therefore, segment duration should be considered in the selection of Bdang. However, with long segment durations and a small buffer size, it is not feasible to select Bdang based only on segment duration. For example, if the segment duration is 10 s, and the buffer size is 20 s, setting Bdang equal to the segment duration means the client selects the video rate cautiously most of the time. Therefore, buffer size should also be considered in the selection of Bdang. To this end, we set Bdang equal to the minimum segment duration plus 20% of the buffer size.

If Rs is less than Rprev (line 9) and the predicted buffer level is greater than 0.5 times the buffer size (line 11), the algorithm stays at the video rate selected for the previous segment. The reason is that there is enough buffer available to take the risk of staying at the current video rate. Otherwise, the algorithm reduces the video rate to Rs.
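The switch-minimization logic of Algorithm 1 can be sketched in Python as follows. The sketch assumes that the danger-zone test on line 5 uses the predicted buffer level computed on line 4, and it takes the threshold Bdang as a parameter rather than deriving it. The case Rs = Rprev is not listed in Algorithm 1; keeping the previous rate there is an assumption. The function name is hypothetical.

```python
def minimize_switches(r_s, r_prev, b_k, t_s, seg_dur, b_max, b_dang):
    """Return the video rate for the next segment (sketch of Algorithm 1)."""
    # Predicted buffer level, as on lines 4 and 10: Bk + (Ts / Rs - 1) * D.
    b_pred = b_k + (t_s / r_s - 1.0) * seg_dur
    if r_s > r_prev:
        # Do not switch up if the predicted buffer would be in the danger zone.
        return r_prev if b_pred <= b_dang else r_s
    if r_s < r_prev:
        # Enough buffer to absorb a shortfall: stay at the current rate.
        return r_prev if b_pred >= 0.5 * b_max else r_s
    # ASSUMPTION: no switch requested, so keep the previous rate.
    return r_prev


# Switch-up allowed: predicted buffer (11 s) is above the 8 s threshold.
up_ok = minimize_switches(2000, 1000, b_k=10.0, t_s=3000.0,
                          seg_dur=2.0, b_max=30.0, b_dang=8.0)
# Switch-down suppressed: predicted buffer (20 s) is at least half of 30 s.
down_held = minimize_switches(1000, 2000, b_k=20.0, t_s=1000.0,
                              seg_dur=2.0, b_max=30.0, b_dang=8.0)
```

Both branches damp rate changes in the same way: a switch is applied only when the predicted buffer level does not argue against it.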

6. Simulation Results

In this section, we evaluate the performance of the proposed buffer- and segment-aware fuzzy-based adaptive video streaming scheme. We implemented the experiments using the ns-3 simulator, modifying the code available from [35]. The efficiency of the proposed DASH scheme was evaluated against other adaptation algorithms, namely, FDASH [25], DBT [11], QLSA [16], and DASH-Google [18]. The topology implemented in this paper is shown in Figure 6. Clients downloaded segments over an LTE network. Configuration details for the underlying LTE cellular network are in Table 2. The clients moved at vehicular speed within the cell. A grid-based road topology was used to simulate mobility. The clients’ arrival times were uniformly distributed within the first 30 s.

The HAS server stores two test sequences: Tears of Steel (Dataset 1) and Big Buck Bunny (Dataset 2). The available video segments were produced using trace files of several DASH streams from [36]. Table 3 presents the client/server settings used to evaluate the algorithms. The experiment was repeated 10 times for each setting, and the average of the results is presented in this section. The videos were streamed for 10 min to evaluate the algorithms. Across the repeated experiments detailed in Table 3, the performance of each algorithm was analyzed over a total of 500 min. In the following results, we calculated QoE using Equation (4), and bandwidth inefficiency at time t was calculated as defined in [7], where W is the total bandwidth available at the base station.

6.1. Dataset 1

Here, we analyze the performance of the proposed algorithm when streaming video segments from Dataset 1. As shown in Table 3, 10 clients simultaneously competed for the bandwidth. In the first experiment, the buffer size and segment duration were set to 15 s and 2 s, respectively. In the next experiment, the buffer size and segment duration were increased to 30 s and 4 s, respectively. In the final experiment, the buffer size was increased to 60 s.

Table 4 displays the performance of the algorithms when the buffer size was set to 15 s and the segment duration was 2 s. Because the buffer size was small, the risk of playback interruption was high. In case of large fluctuations in the throughput, aggressively selecting the video rate could lead to playback interruption. Table 4 shows that the proposed algorithm selected the highest video rate among the competing clients by efficiently utilizing the bandwidth. However, the proposed algorithm experienced a slightly higher number of video rate switches. The reason is that when the buffer level increases, the proposed algorithm quickly increases the video rate to utilize the bandwidth efficiently. Similarly, when the buffer level drops, the proposed algorithm reacts quickly to decrease the video rate to mitigate playback interruptions. Because the buffer size was small, and the throughput fluctuated as the mobile users moved at vehicular speed, the algorithm downloaded high-quality segments at the expense of slightly more video rate switches.

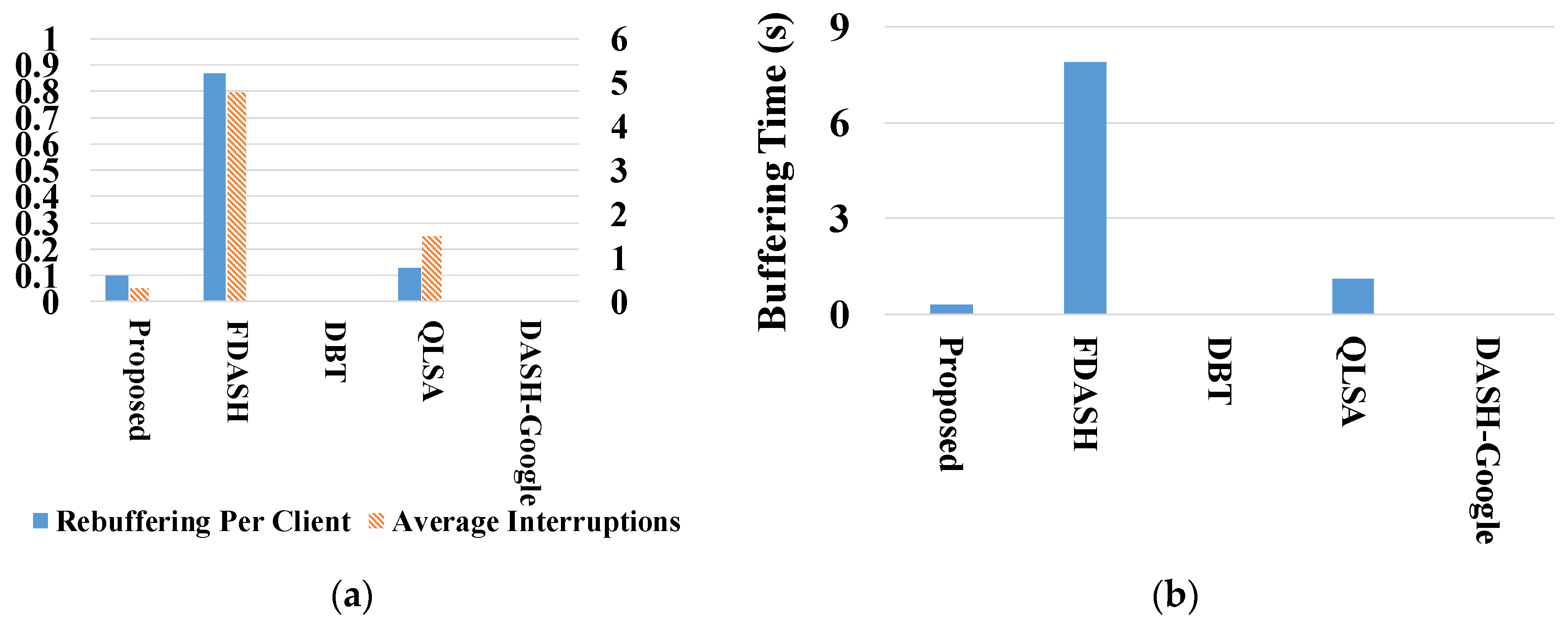

Figure 7 shows that the proposed algorithm avoided unnecessary video playback interruptions while downloading high-quality segments. The rebufferings-per-client metric represents the ratio of clients that experienced a playback interruption to the total number of clients, while average interruptions is the number of times a client experienced a playback interruption.
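The two rebuffering metrics can be computed from per-client interruption counts, as in the sketch below. Averaging the interruption count over all clients is an assumption about how "average interruptions" is aggregated; the function name and sample counts are hypothetical.

```python
def rebuffering_metrics(interruptions_per_client):
    """Return (rebufferings per client, average interruptions).

    interruptions_per_client holds one interruption count per client.
    """
    n_clients = len(interruptions_per_client)
    if n_clients == 0:
        raise ValueError("at least one client is required")
    affected = sum(1 for count in interruptions_per_client if count > 0)
    rebufferings_per_client = affected / n_clients
    # ASSUMPTION: the average is taken over all clients, affected or not.
    average_interruptions = sum(interruptions_per_client) / n_clients
    return rebufferings_per_client, average_interruptions


metrics = rebuffering_metrics([0, 2, 0, 1, 0])
```

In this example two of five clients stalled at least once, and the five clients experienced three interruptions in total.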

Table 4 shows that the DBT algorithm achieved a lower video rate than the proposed algorithm; however, the DBT algorithm had slightly higher QoE and MOS values, compared to the proposed algorithm, because it experienced fewer video rate switches. The DBT algorithm avoided switching video rates unless the buffer level increased above or decreased below predefined thresholds, irrespective of fluctuations in bandwidth. This approach helps mitigate unnecessary video rate switches. FDASH is a fuzzy-based algorithm that employs fuzzy logic to select the video rate and control the playback buffer level. In order to minimize video rate switches, the FDASH algorithm switches the video rate only if the predicted buffer level for the next 60 s increases above or decreases below a predefined threshold. If a video client has a small buffer, this approach minimizes video rate switches; however, it leads to more playback interruptions, which degrades QoE. Table 4 and Figure 7 indicate that the FDASH algorithm experienced the lowest number of video rate switches, but also experienced the greatest number of playback interruptions. The QLSA and DASH-Google algorithms achieved low video rates while experiencing more video rate switches, which degraded QoE.

Next, we set the buffer size and segment duration to 30 s and 4 s, respectively. Although the buffer size increased, the ratio of buffer size to segment duration was the same as in the previous experiment. Table 5 shows that the proposed algorithm achieved a high video rate by efficiently utilizing the available bandwidth. Moreover, the proposed algorithm avoided playback interruptions.

Table 5 shows that the proposed algorithm achieved the highest QoE and MOS values. The DBT algorithm achieved the highest QoE and MOS values in the previous experiment. However, because the buffer size increased, the performance of the DBT algorithm degraded. The algorithm mitigated unnecessary video rate switches and avoided playback interruptions at the expense of a low video rate. As explained earlier, the DBT algorithm divides the buffer level into predefined buffer thresholds. As the buffer fills up, the algorithm selects video rates more aggressively. The DBT algorithm does not adapt the playback buffer thresholds as the buffer size changes. As the buffer size increases, the distance between the predefined thresholds also increases. This reduces the switching ratio but at the expense of a lower video rate.

Table 5 and Figure 8 show that the FDASH algorithm achieved the highest video rate and the lowest switching ratio, but at the expense of a large number of rebuffering events. As a result, the QoE of the FDASH algorithm degraded. The QLSA and DASH-Google algorithms achieved high video rates but at the expense of a large number of video rate switches.

Next, we increased the buffer size to 60 s. Table 6 shows that the proposed algorithm achieved the highest video rate, efficiently utilizing the bandwidth while avoiding playback interruptions. The proposed algorithm achieved the highest QoE and MOS values. The FDASH algorithm achieved a high video rate while mitigating the frequency of video rate switches. Figure 9 shows that FDASH was the only algorithm to experience playback interruptions. As a result, the FDASH algorithm had a lower video rate than the proposed algorithm.

Table 6 shows that the FDASH algorithm achieved the second highest QoE value; however, its MOS value was the second lowest. The reason is that playback interruption events heavily impact the MOS values. The DBT algorithm had the fewest video rate switches. The DBT algorithm was able to minimize the switching ratio, but it also had the lowest video rate among the competing clients. The DASH-Google algorithm achieved a high video rate, but also experienced the most video rate switches.

6.2. Dataset 2

Here, we analyze the performance of the algorithms when streaming the Big Buck Bunny video (Dataset 2). In the first experiment, the buffer size and segment duration were set to 15 s and 2 s, respectively. In the second experiment, the buffer size and segment duration were increased to 60 s and 4 s, respectively.

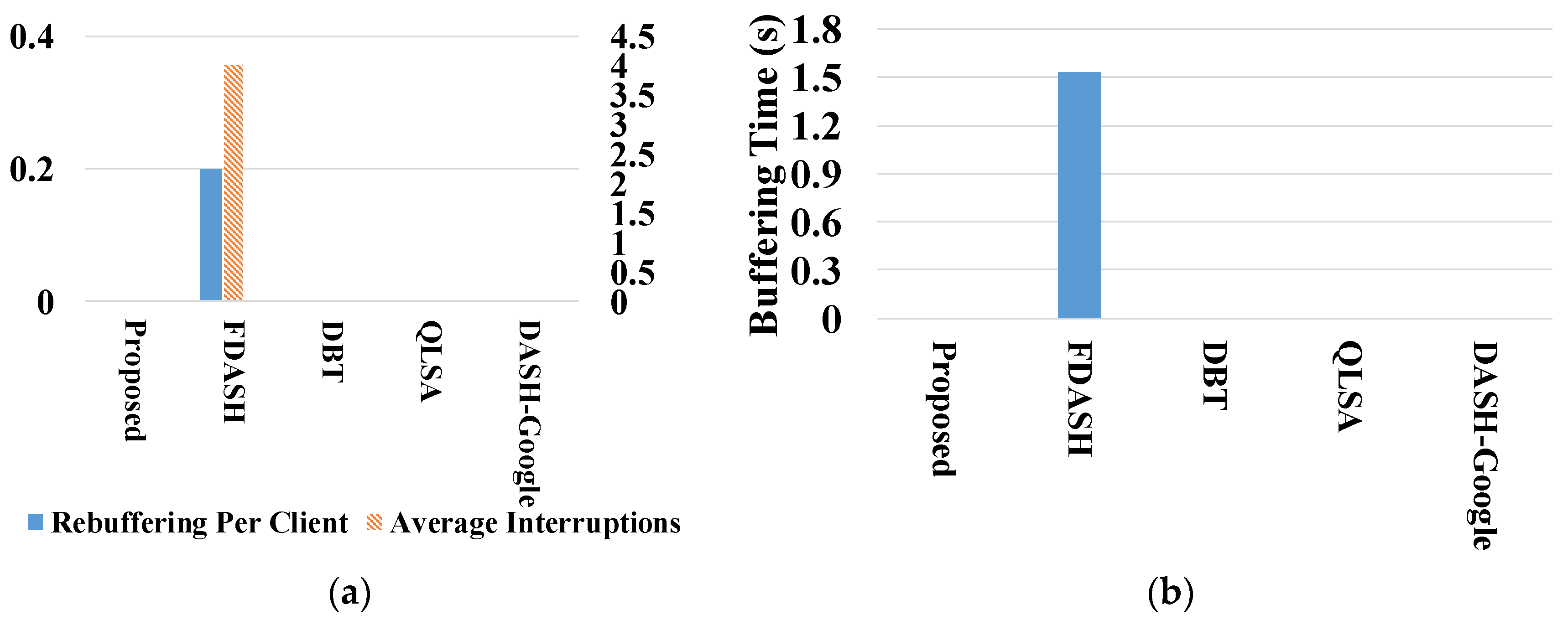

Table 7 shows the performance of the algorithms when the buffer size and segment duration were set to 15 s and 2 s, respectively. As in the previous experiments, the proposed algorithm achieved the highest video rate and had the lowest bandwidth inefficiency. The proposed algorithm was able to avoid rebuffering events and achieved the best user experience. The QLSA and DASH-Google algorithms achieved a high video rate but also experienced a high rate-switching ratio. The DBT algorithm experienced few video rate switches and avoided buffer underflow. However, it had the lowest video rate among the competing algorithms. Similar to the experiments conducted with Dataset 1, the FDASH algorithm experienced the fewest video rate switches but at the expense of more playback interruptions.

Figure 10 shows that only the FDASH algorithm experienced playback interruptions.

When the buffer size and segment duration were set to 15 s and 2 s, respectively, a comparison of Table 4 and Table 7 indicates that the DBT algorithm achieved the highest QoE and MOS values when downloading Dataset 1, but it achieved the lowest QoE value and the second lowest MOS value when streaming Dataset 2. Similarly, Table 4 shows that the QLSA algorithm achieved the second lowest QoE and MOS values among the competing clients while downloading Dataset 1, whereas it achieved the second highest QoE and MOS values when streaming Dataset 2. As explained in Section 1, the existing algorithms employ fixed control rules to pick the video rate. This results in inconsistent performance from the algorithms as the client settings and video content characteristics change. On the other hand, the proposed algorithm achieved a high QoE, irrespective of client settings or dataset characteristics.

Next, we increased the buffer size and segment duration to 60 s and 4 s.

Table 8 shows that the proposed and QLSA algorithms achieved high video rates. Both algorithms were able to avoid playback interruptions. The proposed algorithm achieved a slightly lower switching ratio than QLSA, which resulted in a slightly better user experience. The DBT algorithm achieved the fewest video rate switches at the expense of low video quality. FDASH and DASH-Google achieved similar video rates. However, a high frequency of video rate switches led to a poor user experience for DASH-Google.

Figure 10 shows that only the FDASH algorithm experienced playback interruptions. A comparison of Figure 10 and Figure 11 shows that the number of buffering events and the buffering time decreased with an increase in buffer size.
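To see why a larger buffer can reduce buffering time, consider the toy playback model below. It is only a sketch under simplifying assumptions that are not part of the experimental setup: a constant video bitrate, one-second time steps, and a made-up throughput trace. The function name and all values are hypothetical.

```python
def count_stall_seconds(throughput_kbps, bitrate_kbps, buffer_cap_s):
    """Count the seconds in which playback stalls for a fixed-bitrate stream.

    Each entry of throughput_kbps is the throughput during one second.
    The buffer is measured in seconds of video and cannot exceed buffer_cap_s.
    """
    buffer_s = 0.0
    stalls = 0
    for throughput in throughput_kbps:
        # Video downloaded in this second, limited by the buffer capacity.
        buffer_s = min(buffer_cap_s, buffer_s + throughput / bitrate_kbps)
        if buffer_s >= 1.0:
            buffer_s -= 1.0  # one second of video is played
        else:
            stalls += 1  # not enough video buffered: playback stalls
    return stalls


if __name__ == "__main__":
    # Hypothetical trace: 10 s of high throughput followed by an 8 s outage.
    trace = [4000] * 10 + [0] * 8
    print(count_stall_seconds(trace, bitrate_kbps=1000, buffer_cap_s=4))
    print(count_stall_seconds(trace, bitrate_kbps=1000, buffer_cap_s=16))
```

In this toy trace, the small buffer cannot store enough video during the high-throughput period to bridge the outage, whereas the larger buffer can. Real clients also adapt the bitrate, so the sketch only illustrates the buffer-capacity effect in isolation.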

6.3. Summary

Table 9 shows the average performance of the adaptation algorithms across all the experiments. The proposed algorithm achieved the highest video rate and the lowest bandwidth inefficiency, which gave it the highest user experience among the state-of-the-art algorithms. Furthermore, the proposed algorithm avoided playback interruptions during all the experiments. However, it experienced slightly more video rate switches. The reason is that the proposed algorithm simultaneously focuses on efficiently utilizing bandwidth and mitigating playback interruptions. To achieve this, the proposed algorithm reacts quickly to changes in throughput. That results in slightly more video rate switches, but it helps the algorithm download high-quality video segments while avoiding playback interruptions. DBT and FDASH waited for the buffer volume to increase above (or decrease below) predefined thresholds. That minimized the switching ratio, but compromised video quality. Moreover, the FDASH algorithm estimates the buffer level for the next 60 s. In an environment where the bandwidth is unstable and the buffer size is small, this approach leads to a high frequency of rebuffering events. The throughput-based algorithms, such as QLSA and DASH-Google, do not have information on the playback buffers; therefore, they could not risk reacting conservatively to changes in bandwidth. This resulted in more video rate switches.

The results also indicate that the proposed algorithm guaranteed high QoE irrespective of buffer size, segment duration, and video sequence. However, the performance of the other state-of-the-art algorithms varied from one setting to the other. The reason is that these algorithms employ fixed control strategies for all settings.

In the following summary, consecutive numbers represent the results compared to FDASH, DBT, QLSA, and DASH-Google, in that order.

The proposed algorithm

- (1) increased the average video rate by 5.7%, 18.2%, 6.3%, and 5.2%;

- (2) increased bandwidth efficiency by 30%, 45%, and 31%, whereas it achieved the same efficiency as the DASH-Google algorithm;

- (3) outperformed the other schemes in terms of QoE by 30%, 12.8%, 12.7%, and 16%.
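Percentages of this kind are commonly computed as a relative gain over each baseline. The sketch below shows that calculation under the assumption that the gain is the difference between the two averages divided by the baseline average; the text does not state the exact formula used, and the function name and the input values are hypothetical, not results from Table 9.

```python
def relative_gain_percent(proposed, baseline):
    """Relative improvement of `proposed` over `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (proposed - baseline) / baseline


if __name__ == "__main__":
    # Hypothetical average video rates in kbps, for illustration only.
    proposed_rate = 2200.0
    baseline_rate = 2000.0
    print(f"{relative_gain_percent(proposed_rate, baseline_rate):.1f}%")
```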