A Wearable System for the Estimation of Performance-Related Metrics during Running and Jumping Tasks

Abstract

1. Introduction

- To develop a wearable solution based on inertial measurement units (IMUs) that can be worn on different body locations and is suitable for different physical tasks;

- To automatically detect every individual jump performed, as well as segment the running bouts and, as a consequence, each running stride for both legs;

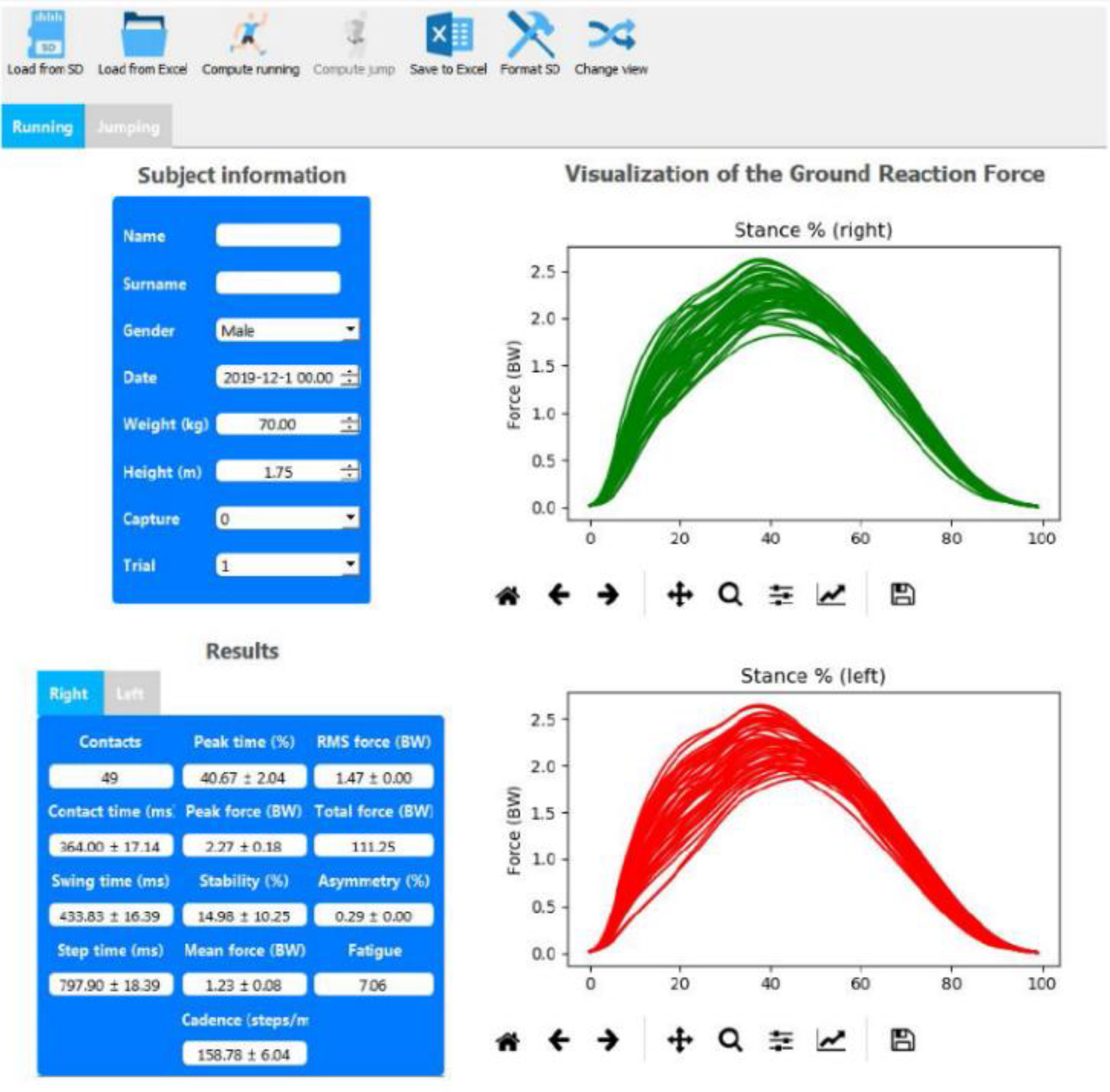

- To provide running performance metrics from the data recorded by the IMUs, such as contact time, step time, mean force, stability, cadence, etc.;

- To provide vertical GRF waveforms for each segmented running stride for both legs and extrapolate the associated metrics;

- To provide jumping metrics from the kinematics recorded by the IMU, including flight time, jump height, peak force, mean force, etc., for the different phases of the jump (eccentric and concentric);

- To provide an easy-to-use graphical interface for an effective visualization of the estimated variables.

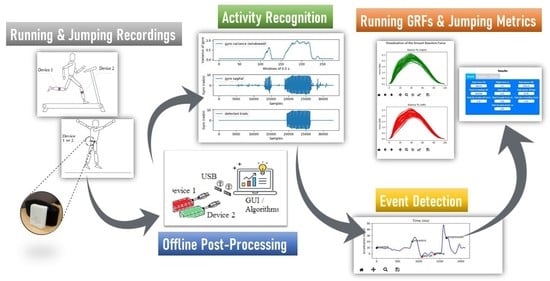

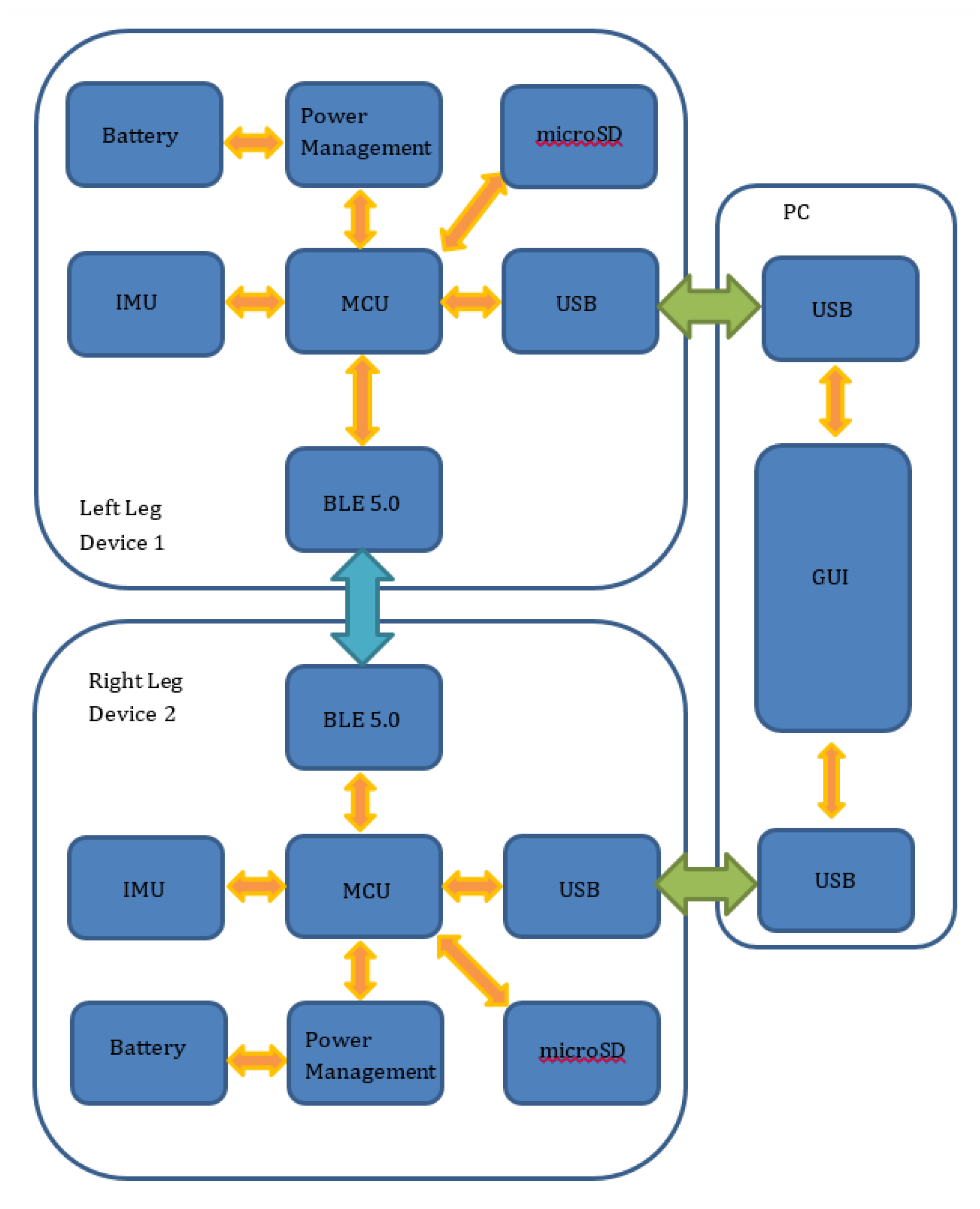

2. System Architecture

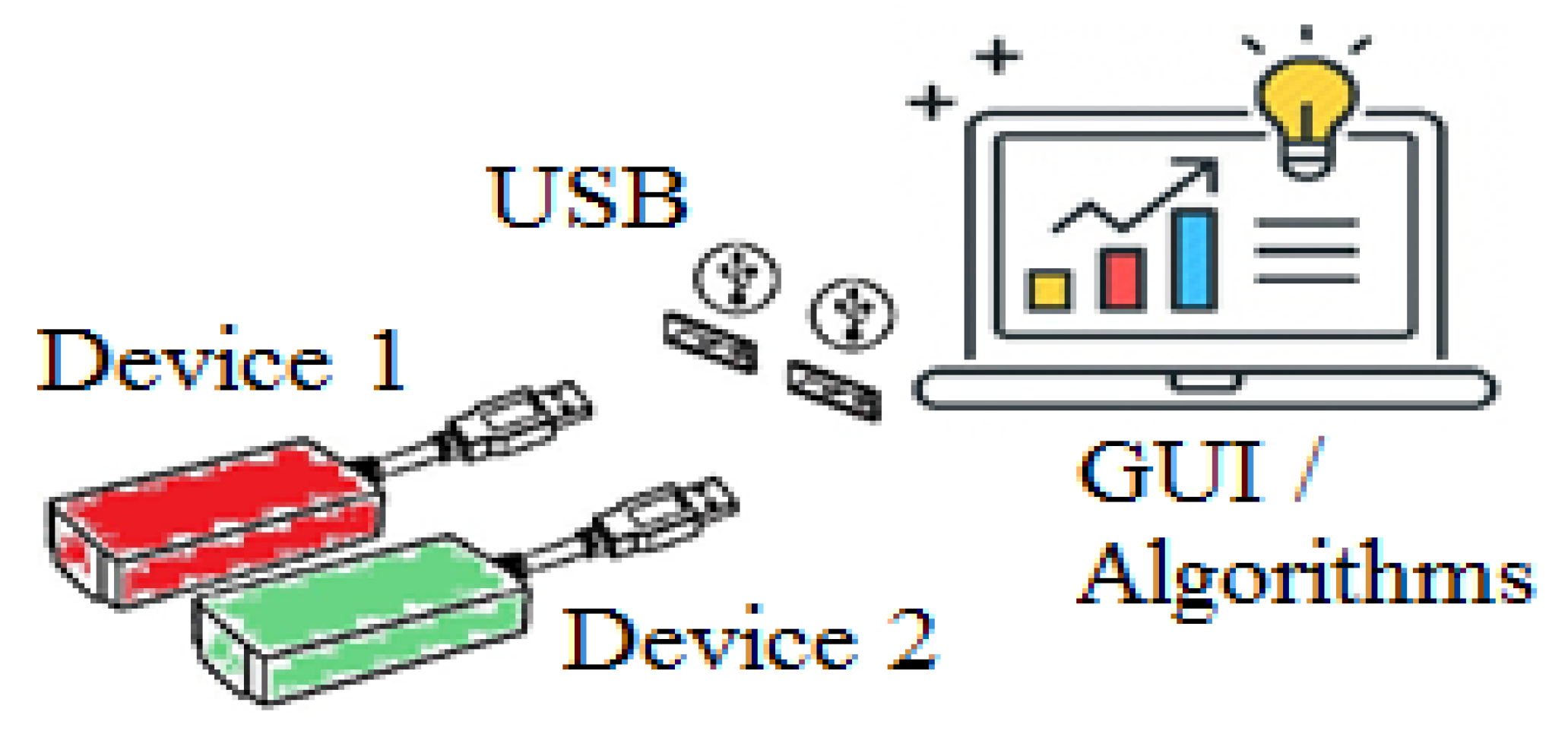

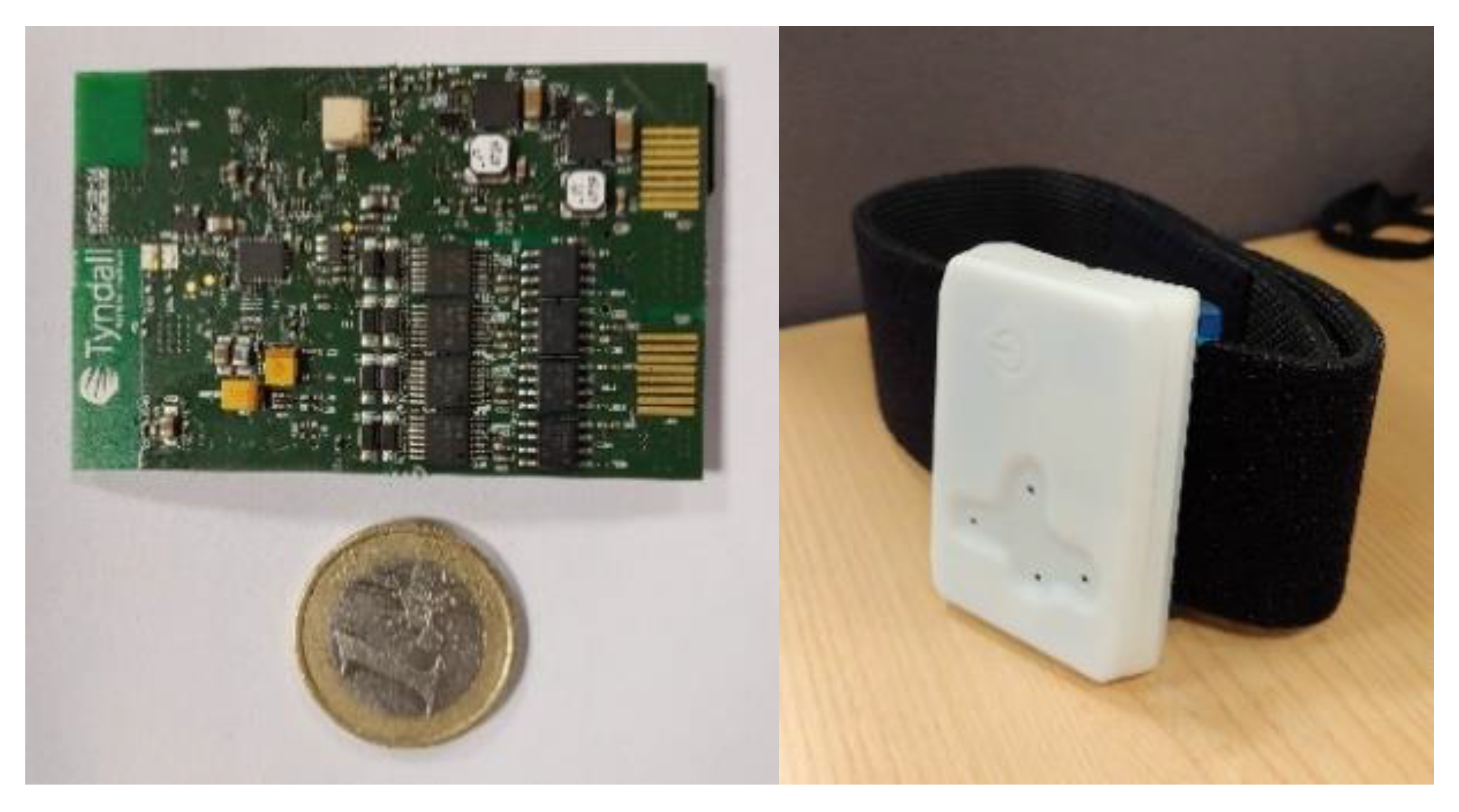

3. Hardware Design

3.1. Hardware Platform

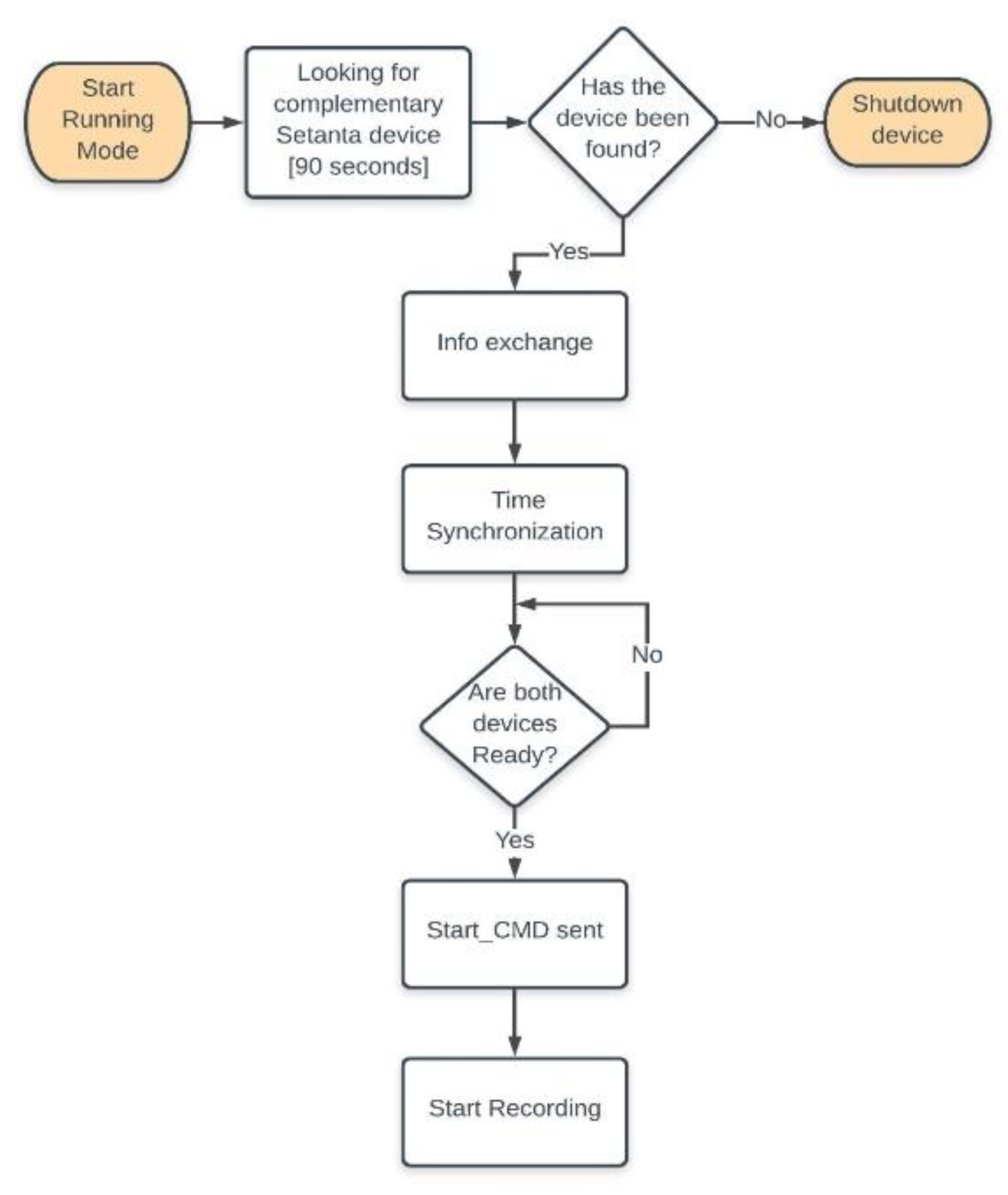

3.2. Hardware Operations

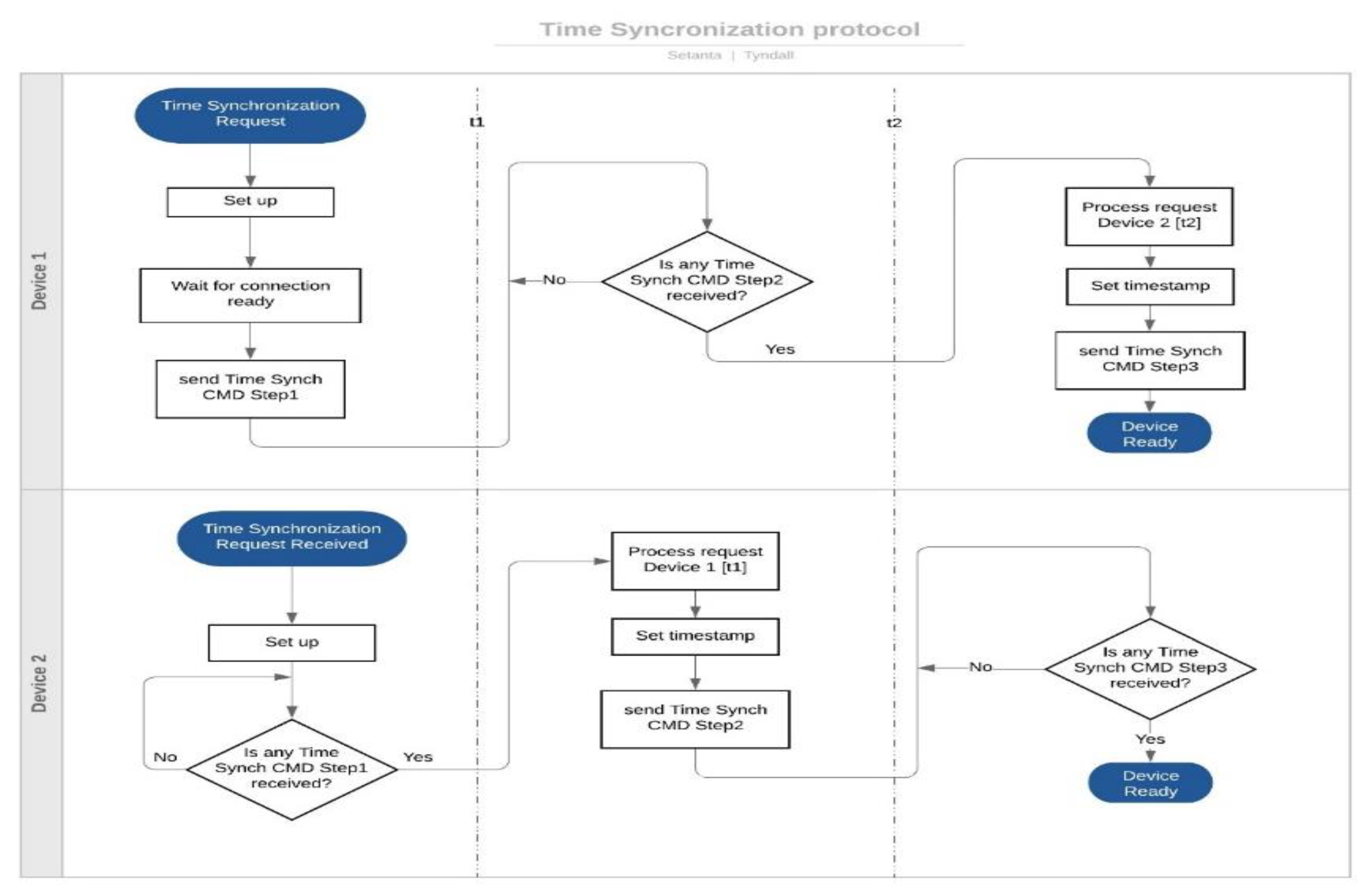

3.3. Wireless Synchronization Protocol

- Set-up: Device1 sends a time_synchronization_request and waits for the Device2 set-up.

- Device2 time synchronization: this phase starts when Device1 sends the CMD_Step1 command (at time t1d1) to Device2, which receives it at time t1d2 = t1d1 + δt (where δt is the time required for a command to be transmitted between the two devices).

- Device1 time synchronization: once CMD_Step1 has been processed by Device2 (which requires a time slot x), Device2 sends the CMD_Step2 command (at time t2d2 = t1d2 + x) to Device1, which receives it at time t2d1 = t2d2 + δt.

- Data recording: once CMD_Step2 has been processed by Device1 (which requires another time slot x), Device1 sends the CMD_Step3 command (at time t3d1 = t2d1 + x) to Device2 and immediately starts the data recording. Device2 receives CMD_Step3 at time t3d2 = t3d1 + δt and starts the data recording after processing the received packet.
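The timing relations in the four phases above can be traced with a short sketch. It is only an illustration, not the firmware logic: all timestamps are placed on a single common reference clock, the names `SyncTimeline`, `sync_timeline`, `estimate_delta_t`, and `recording_start_lag` are hypothetical, and the sketch assumes that Device2 needs the same time slot x to process CMD_Step3 (the text only says that Device2 starts recording "after processing the received packet").

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncTimeline:
    t1d1: float      # Device1 sends CMD_Step1
    t1d2: float      # Device2 receives CMD_Step1
    t2d2: float      # Device2 sends CMD_Step2
    t2d1: float      # Device1 receives CMD_Step2
    t3d1: float      # Device1 sends CMD_Step3 and starts recording
    t3d2: float      # Device2 receives CMD_Step3
    start_d2: float  # Device2 starts recording (ASSUMED to be t3d2 + x)


def sync_timeline(t1d1: float, delta_t: float, x: float) -> SyncTimeline:
    """Apply the relations from the protocol description step by step."""
    t1d2 = t1d1 + delta_t   # CMD_Step1 travels to Device2
    t2d2 = t1d2 + x         # Device2 processes CMD_Step1
    t2d1 = t2d2 + delta_t   # CMD_Step2 travels to Device1
    t3d1 = t2d1 + x         # Device1 processes CMD_Step2
    t3d2 = t3d1 + delta_t   # CMD_Step3 travels to Device2
    start_d2 = t3d2 + x     # assumption: packet processing also takes x
    return SyncTimeline(t1d1, t1d2, t2d2, t2d1, t3d1, t3d2, start_d2)


def estimate_delta_t(t1d1: float, t2d1: float, x: float) -> float:
    """Round trip seen by Device1 is t2d1 - t1d1 = 2*delta_t + x."""
    return (t2d1 - t1d1 - x) / 2.0


def recording_start_lag(tl: SyncTimeline) -> float:
    """Lag between the two recording starts (delta_t + x under the assumption)."""
    return tl.start_d2 - tl.t3d1


if __name__ == "__main__":
    tl = sync_timeline(t1d1=0.0, delta_t=2.0, x=0.5)  # arbitrary time units
    print(tl)
    print("estimated delta_t:", estimate_delta_t(tl.t1d1, tl.t2d1, 0.5))
    print("recording start lag:", recording_start_lag(tl))
```

Under these assumptions, the round trip observed by Device1 lets it recover δt when x is known, and the residual offset between the two recordings is a fixed quantity that could be compensated in post-processing.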

4. Data Processing and Algorithms

4.1. Running Activity Recognition

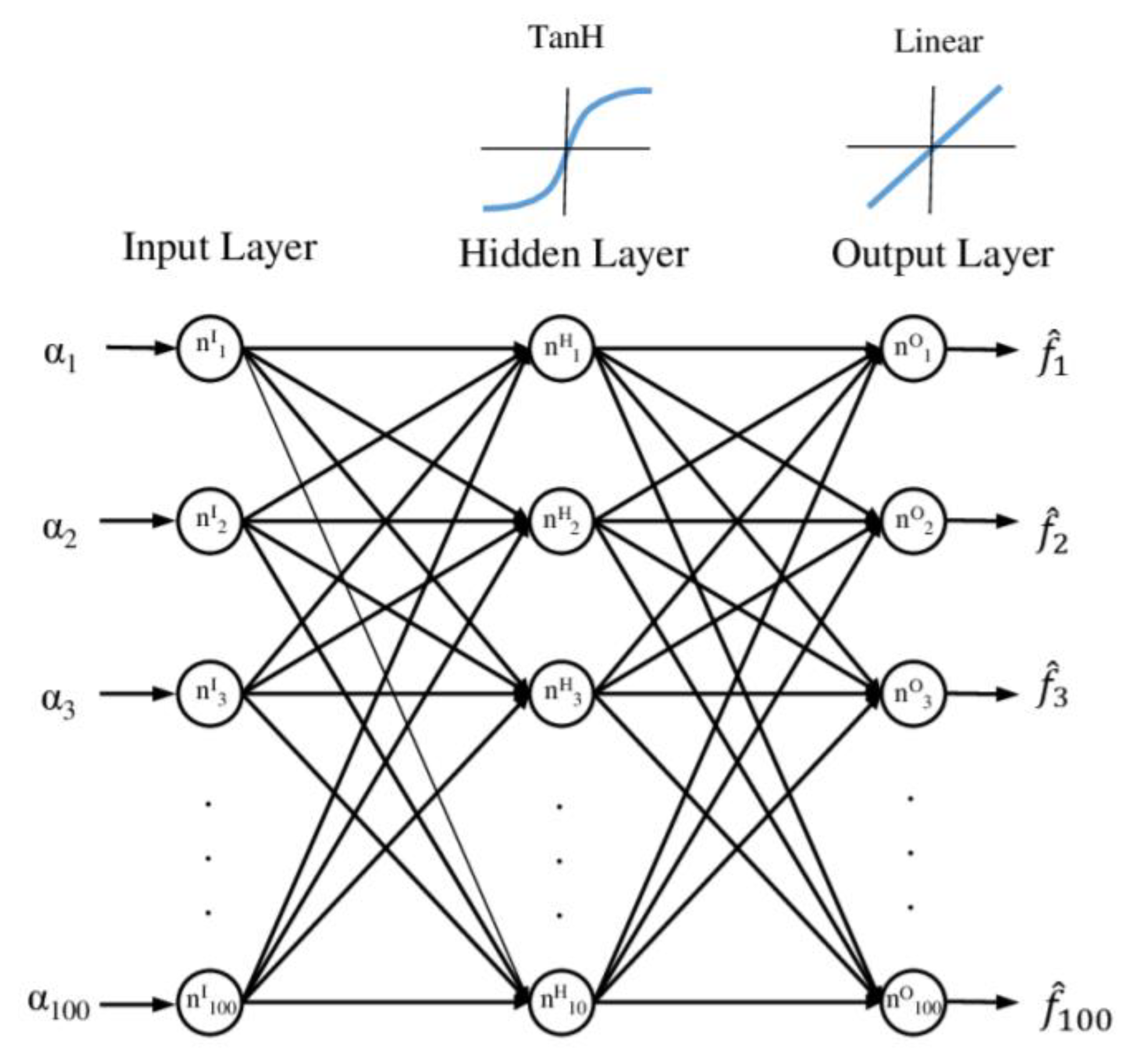

4.2. Running-Related Metrics and Vertical GRF

- Num. Contacts: number of stances in each trial as determined by the event detection algorithm

- Contact time: average stance time of all recorded steps in milliseconds

- Swing time: average time between toe-off and heel-strike for each leg in milliseconds

- Step time: average time between heel-strikes for each leg in milliseconds

- Cadence: number of steps per minute (steps/min)

- Peak time: average time of the maximum force, expressed as percentage of the stance phase

- Peak force: average maximum force during stance, expressed in body weight (BW)

- Mean force: average force during stance, expressed in BW

- RMS force: average root mean square of the force during stance, expressed in BW

- Total force: sum of the peak forces of all stances, expressed in BW

- Asymmetry: average absolute error between the force peaks of both legs in all stances as a percentage [38]. Values closer to 0 indicate stronger symmetry in movements

- Stability: absolute error between the GRF of two consecutive stances expressed as a percentage, and averaged over all the steps [39]. Again, values closer to 0 indicate better stability

- Fatigue: a dimensionless coefficient which is calculated as the slope of the linear regression line that fits the angular rate at the mid-swing events over all gait cycles [40].
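As an illustration of the temporal and force definitions listed above, the following sketch computes them from already-detected gait events and stance-phase GRF waveforms. It is a minimal example under stated assumptions, not the authors' implementation: the function names, the input layout (heel-strike and toe-off times in seconds, one GRF array in BW per stance), and the cadence formula (step intervals of both legs divided by the elapsed time) are all hypothetical choices.

```python
import numpy as np


def temporal_metrics(heel_strikes_s, toe_offs_s):
    """Temporal metrics for ONE leg.

    Stance i is assumed to run from heel_strikes_s[i] to toe_offs_s[i].
    """
    hs = np.asarray(heel_strikes_s, dtype=float)
    to = np.asarray(toe_offs_s, dtype=float)
    return {
        "num_contacts": int(hs.size),
        # average stance duration
        "contact_time_ms": float(1000.0 * np.mean(to - hs)),
        # average time from toe-off to the next heel-strike of the same leg
        "swing_time_ms": float(1000.0 * np.mean(hs[1:] - to[:-1])),
        # average time between consecutive heel-strikes of the same leg
        "step_time_ms": float(1000.0 * np.mean(np.diff(hs))),
    }


def cadence_spm(left_heel_strikes_s, right_heel_strikes_s):
    """Steps per minute from the merged heel-strikes of both legs (assumed formula)."""
    strikes = np.sort(np.concatenate([left_heel_strikes_s, right_heel_strikes_s]))
    return float(60.0 * (strikes.size - 1) / (strikes[-1] - strikes[0]))


def force_metrics(stances_bw):
    """Force metrics from a list of stance-phase vertical GRF arrays (in BW)."""
    peaks, peak_times, means, rms = [], [], [], []
    for stance in stances_bw:
        f = np.asarray(stance, dtype=float)
        peaks.append(f.max())
        # position of the maximum as a percentage of the stance phase
        peak_times.append(100.0 * np.argmax(f) / (f.size - 1))
        means.append(f.mean())
        rms.append(np.sqrt(np.mean(f ** 2)))
    return {
        "peak_time_pct": float(np.mean(peak_times)),
        "peak_force_bw": float(np.mean(peaks)),
        "mean_force_bw": float(np.mean(means)),
        "rms_force_bw": float(np.mean(rms)),
        "total_force_bw": float(np.sum(peaks)),  # sum of the stance peaks
    }


if __name__ == "__main__":
    print(temporal_metrics([0.0, 0.7, 1.4], [0.25, 0.95, 1.65]))
    print(cadence_spm([0.0, 0.7, 1.4], [0.35, 1.05]))
    print(force_metrics([[0, 1, 2, 1, 0], [0, 2, 4, 2, 0]]))
```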

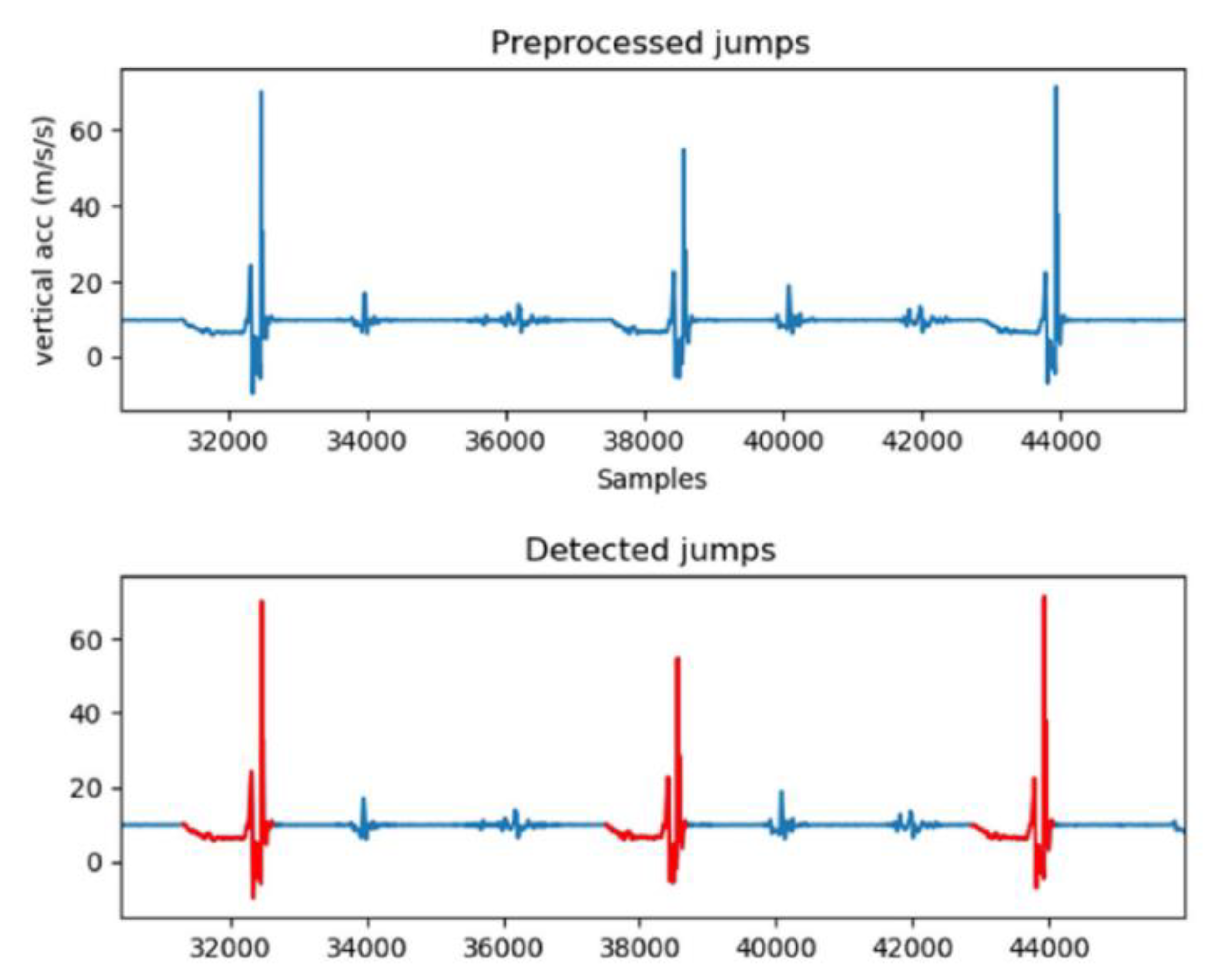

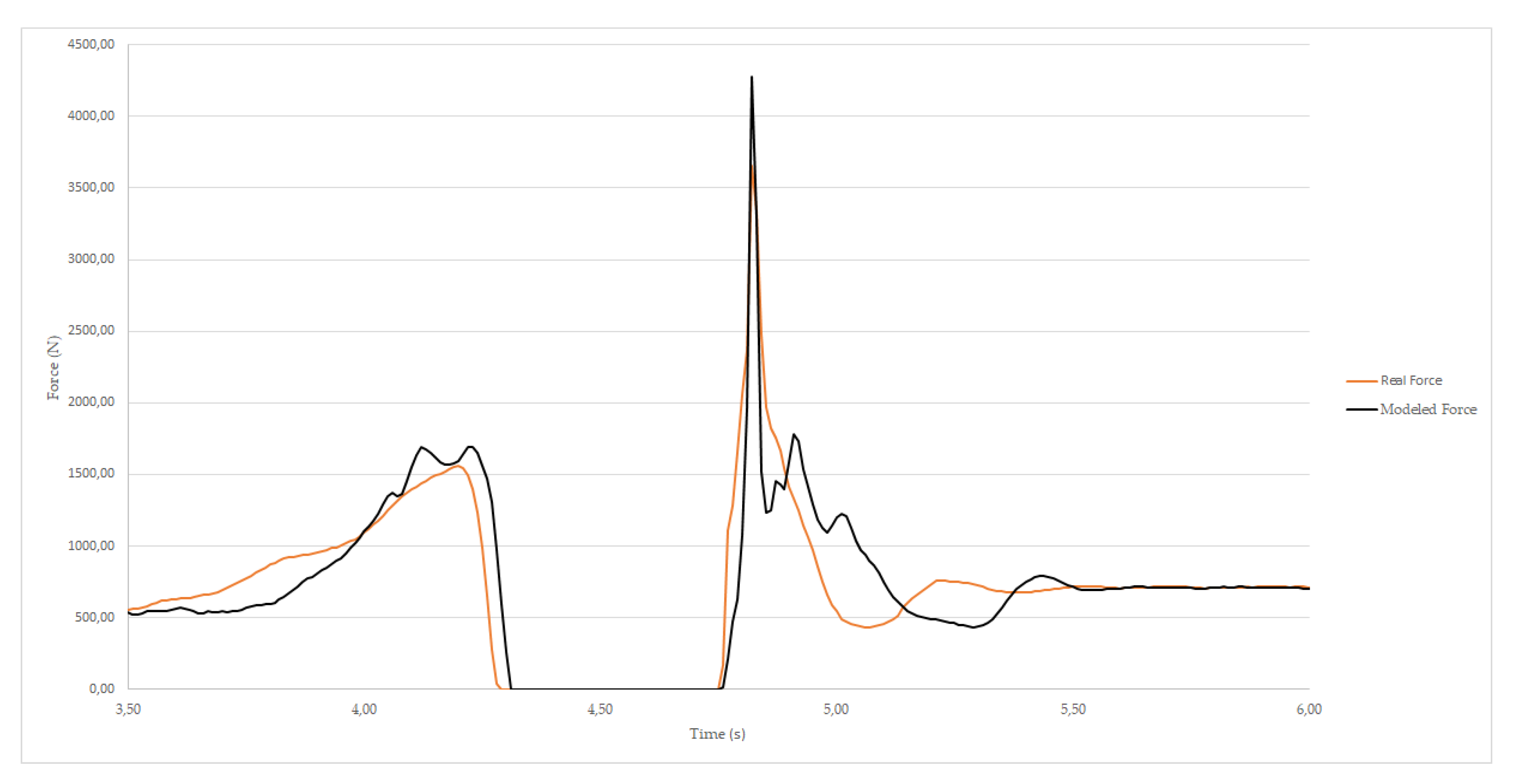
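The comparative metrics of Section 4.2 (asymmetry, stability, and fatigue) can be sketched in the same way. The exact normalizations are defined in the cited works and are not reproduced in this text, so the choices below are assumptions made only for illustration: asymmetry normalizes the left/right peak difference by the mean of the two peaks, stability normalizes the mean absolute difference between consecutive, equal-length stance waveforms by the mean absolute value of the earlier one, and fatigue regresses the mid-swing angular rate on the gait-cycle index. All function names are hypothetical.

```python
import numpy as np


def asymmetry_pct(left_peaks_bw, right_peaks_bw):
    """Average absolute left/right peak difference as a percentage.

    ASSUMPTION: stances are paired by index and each difference is
    normalized by the mean of the two peaks.
    """
    n = min(len(left_peaks_bw), len(right_peaks_bw))
    left = np.asarray(left_peaks_bw[:n], dtype=float)
    right = np.asarray(right_peaks_bw[:n], dtype=float)
    return float(np.mean(100.0 * np.abs(left - right) / ((left + right) / 2.0)))


def stability_pct(stances_bw):
    """Average percentage difference between consecutive stance waveforms.

    ASSUMPTION: every stance has been time-normalized to the same number
    of samples, and the earlier stance is used as the reference.
    """
    w = np.asarray(stances_bw, dtype=float)  # shape: (n_stances, n_samples)
    diff = np.mean(np.abs(w[1:] - w[:-1]), axis=1)
    ref = np.mean(np.abs(w[:-1]), axis=1)
    return float(np.mean(100.0 * diff / ref))


def fatigue_slope(mid_swing_angular_rate):
    """Slope of the least-squares line through the mid-swing angular rates.

    ASSUMPTION: the regressor is the gait-cycle index 0, 1, 2, ...
    """
    y = np.asarray(mid_swing_angular_rate, dtype=float)
    slope, _intercept = np.polyfit(np.arange(y.size), y, 1)
    return float(slope)


if __name__ == "__main__":
    print(asymmetry_pct([2.4, 2.5], [2.3, 2.6]))
    print(stability_pct([[0, 1, 2, 1, 0], [0, 1.1, 2.1, 1.0, 0]]))
    print(fatigue_slope([400.0, 396.0, 393.0, 389.0]))
```

With these conventions, values near 0 for asymmetry and stability correspond to the "closer to 0 is better" reading given in the definitions, and a negative fatigue slope indicates a mid-swing angular rate that decreases over the trial.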

4.3. Jumping Activity Recognition and Event Detection

4.4. Jumping-Related Metrics
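Flight time and jump height are among the jumping metrics named in the objectives. One widely used way to relate the two is the flight-time method, which assumes that take-off and landing occur at the same centre-of-mass height so that h = g·t²/8. The sketch below illustrates that relation only; it is not claimed to be the method used by the proposed system, and the function names and the value of `G` are assumptions.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed value)


def flight_time_s(take_off_s: float, landing_s: float) -> float:
    """Flight time from the detected take-off and landing instants."""
    if landing_s <= take_off_s:
        raise ValueError("landing must occur after take-off")
    return landing_s - take_off_s


def jump_height_m(flight_time: float) -> float:
    """Flight-time method: the body rises for half of the flight time,
    so h = 0.5 * g * (t / 2)**2 = g * t**2 / 8."""
    return G * flight_time ** 2 / 8.0


if __name__ == "__main__":
    t = flight_time_s(take_off_s=1.20, landing_s=1.70)
    print(f"flight time: {t:.3f} s, jump height: {jump_height_m(t):.3f} m")
```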

5. Graphical User Interface (GUI)

- Load the data collected and stored on-board the SD cards of the hardware platforms (when the boards are connected via USB to the computer). This step will automatically start the activity recognition process with the goal of detecting every data collection carried out and, for each of them, the number of running trials/jumps performed.

- Annotate the demographic/anthropometric information for the athlete under test.

- Analyze a specific running trial and compute the vertical GRFs and the running metrics from that trial, showing the results graphically (Figure 12). The average GRF curves are also visible when clicking on the “Change View” tab.

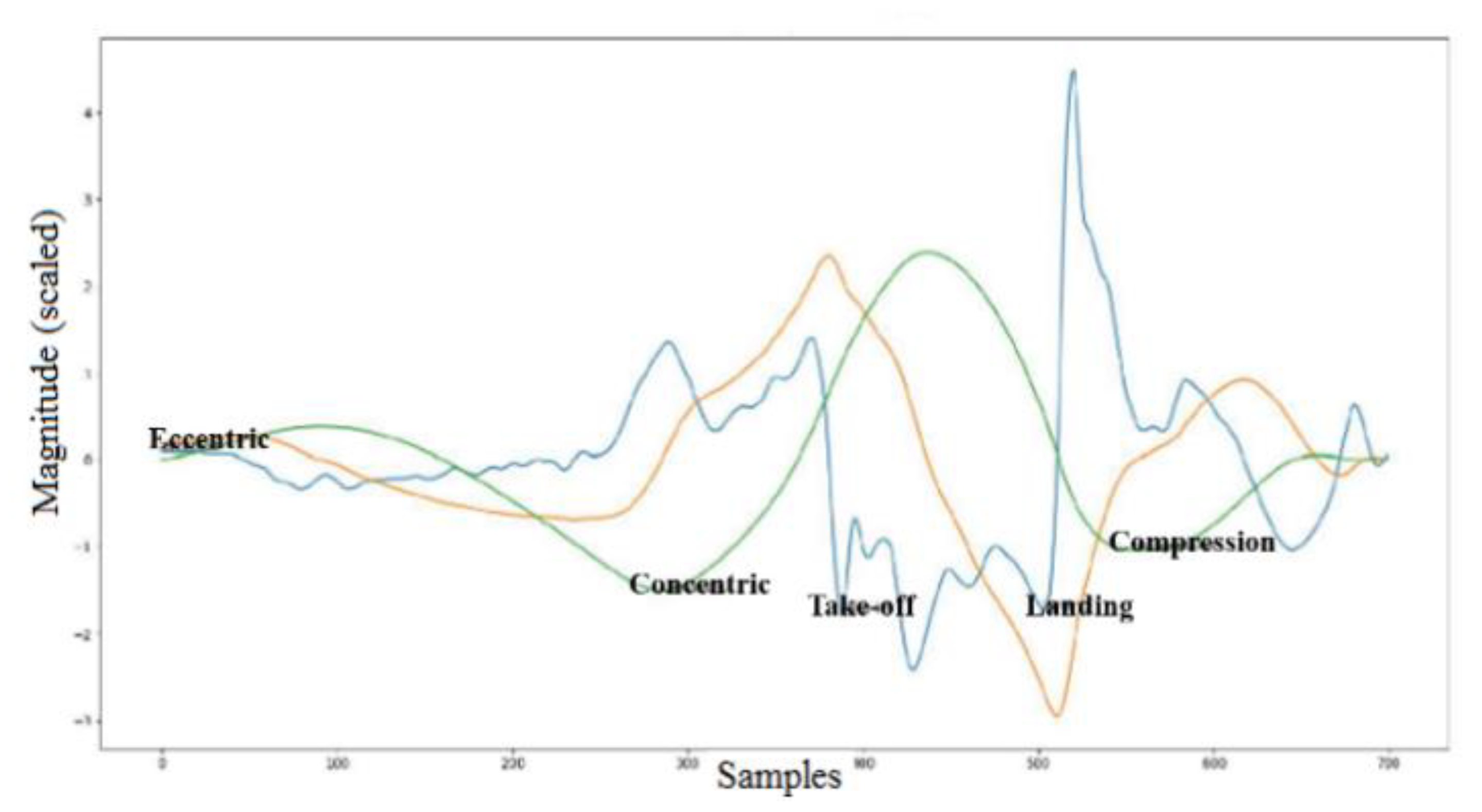

- Analyze a specific jump and compute the related metrics separately for the eccentric and concentric phases, and visualize the vertical acceleration along with the jump events (start of the eccentric phase, start of the concentric phase, take-off, landing, and maximum compression). An example is depicted in Figure 13.

- Export the computed results, subject information, and raw inertial data of a specific running/jumping analysis to an Excel file.

- Load the results of an analysis previously saved to an Excel file.

- Format the SD cards of the hardware platforms, without the need to remove the SD cards from the boards.

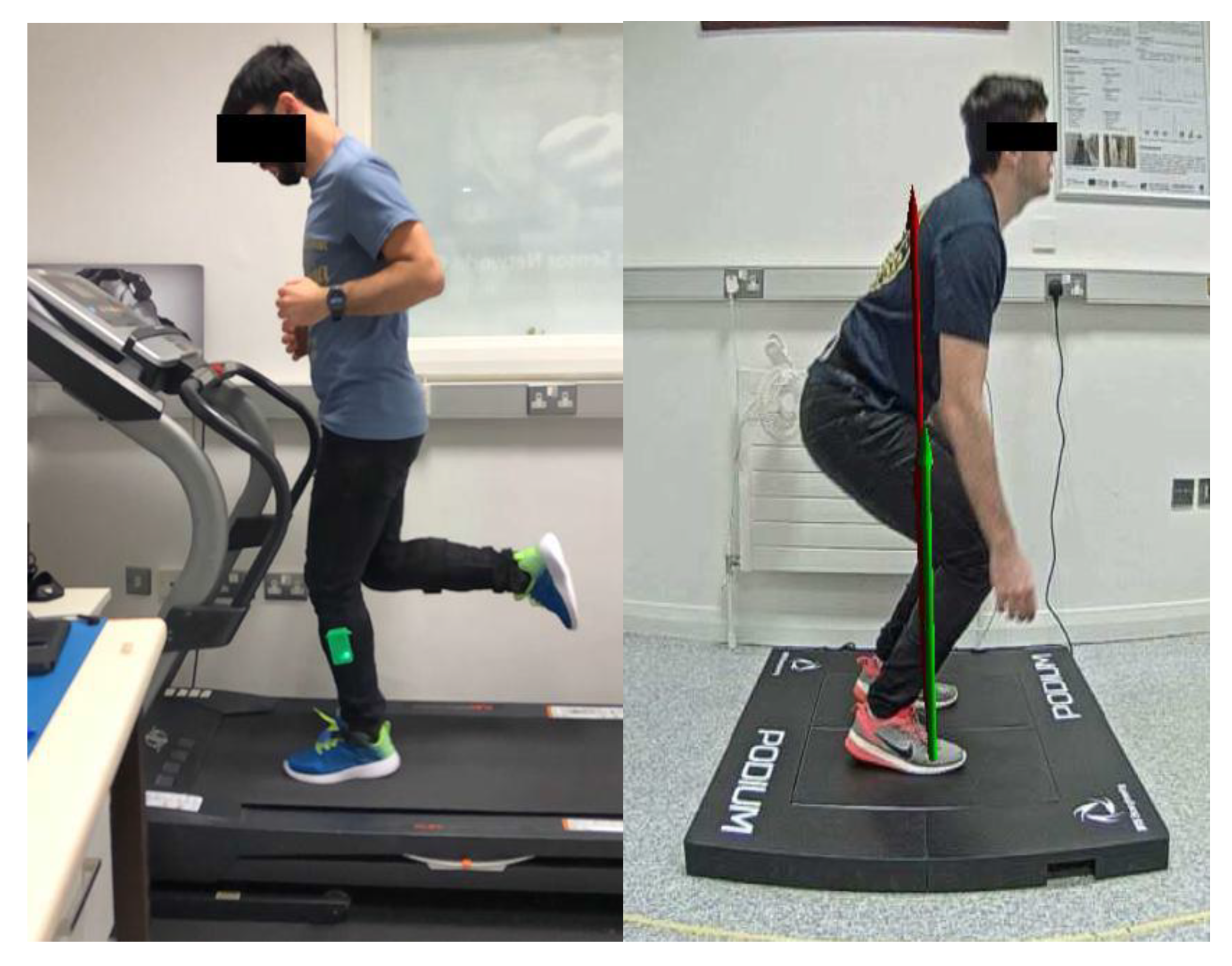

6. System Test and Analysis of Results

6.1. Running Activity Results

6.2. Jumping Activity Results

7. State-of-the-Art Comparison

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Sands, W.A.; Kavanaugh, A.A.; Murray, S.R.; McNeal, J.R.; Jemni, M. Modern Techniques and Technologies Applied to Training and Performance Monitoring. Int. J. Sports Physiol. Perform. 2017, 12, 63–72.

2. Thornton, H.R.; Delaney, J.A.; Duthie, G.M.; Dascombe, B.J. Developing athlete monitoring systems in team-sports: Data analysis and visualization. Int. J. Sports Physiol. Perform. 2019, 14, 698–705.

3. Seshadri, D.R.; Li, R.T.; Voos, J.E.; Rowbottom, J.R.; Alfes, C.M.; Zorman, C.A.; Drummond, C.K. Wearable sensors for monitoring the internal and external workload of the athlete. NPJ Digit. Med. 2019, 2, 71.

4. Papi, E.; Osei-Kuffour, D.; Chen, Y.-M.A.; McGregor, A.H. Use of wearable technology for performance assessment: A validation study. Med. Eng. Phys. 2015, 37, 698–704.

5. Adesida, Y.; Papi, E.; McGregor, A.H. Exploring the Role of Wearable Technology in Sport Kinematics and Kinetics: A Systematic Review. Sensors 2019, 19, 1597.

6. Camomilla, V.; Bergamini, E.; Fantozzi, S.; Vannozzi, G. Trends Supporting the In-Field Use of Wearable Inertial Sensors for Sport Performance Evaluation: A Systematic Review. Sensors 2018, 18, 873.

7. Park, J.; Na, Y.; Gu, G.; Kim, J. Flexible insole ground reaction force measurement shoes for jumping and running. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016.

8. Willy, R.W. Innovations and pitfalls in the use of wearable devices in the prevention and rehabilitation of running related injuries. Phys. Ther. Sport 2018, 29, 26–33.

9. Gillen, Z.; Miramonti, A.A.; McKay, B.; Leutzinger, T.J.; Cramer, J.T. Test-Retest Reliability and Concurrent Validity of Athletic Performance Combine Tests in 6–15-Year-Old Male Athletes. J. Strength Cond. Res. 2018, 32, 2783–2794.

10. Marcote-Pequeño, R.; García-Ramos, A.; Cuadrado-Peñafiel, V.; González-Hernández, J.M.; Gómez, M.Á.; Jiménez-Reyes, P. Association Between the Force–Velocity Profile and Performance Variables Obtained in Jumping and Sprinting in Elite Female Soccer Players. Int. J. Sports Physiol. Perform. 2019, 14, 209–215.

11. Chelly, M.S.; Ghenem, M.A.; Abid, K.; Hermassi, S.; Tabka, Z.; Shephard, R.J. Effects of in-Season Short-Term Plyometric Training Program on Leg Power, Jump- and Sprint Performance of Soccer Players. J. Strength Cond. Res. 2010, 24, 2670–2676.

12. Xybermind. Available online: http://xybermind.de/achillex/achillex-jumpnrun/ (accessed on 2 August 2020).

13. Wundersitz, D.; Josman, C.; Gupta, R.; Netto, K.J.; Gastin, P.B.; Robertson, S. Classification of team sport activities using a single wearable tracking device. J. Biomech. 2015, 48, 3975–3981.

14. Marin, F.; Lepetit, K.; Fradet, L.; Hansen, C.; Ben Mansour, K. Using accelerations of single inertial measurement units to determine the intensity level of light-moderate-vigorous physical activities: Technical and mathematical considerations. J. Biomech. 2020, 107, 109834.

15. Muniz-Pardos, B.; Sutehall, S.; Gellaerts, J.; Falbriard, M.; Mariani, B.; Bosch, A.; Asrat, M.; Schaible, J.; Pitsiladis, Y.P. Integration of Wearable Sensors Into the Evaluation of Running Economy and Foot Mechanics in Elite Runners. Curr. Sports Med. Rep. 2018, 17, 480–488.

16. Adams, D.; Pozzi, F.; Willy, R.W.; Carrol, A.; Zeni, J. Altering cadence or vertical oscillation during running: Effects on running related injury factors. Int. J. Sports Phys. Ther. 2018, 13, 633–642.

17. Koldenhoven, R.M.; Hertel, J. Validation of a Wearable Sensor for Measuring Running Biomechanics. Digit. Biomark. 2018, 2, 74–78.

18. Ancillao, A.; Tedesco, S.; Barton, J.; O’Flynn, B. Indirect measurement of ground reaction forces and moments by means of wearable inertial sensors: A systematic review. Sensors 2018, 18, 2564.

19. Wouda, F.J.; Giuberti, M.; Bellusci, G.; Maartens, E.; Reenalda, J.; Van Beijnum, B.-J.F.; Veltink, P.H. Estimation of Vertical Ground Reaction Forces and Sagittal Knee Kinematics During Running Using Three Inertial Sensors. Front. Physiol. 2018, 9, 218.

20. Ngoh, K.J.-H.; Gouwanda, D.; Gopalai, A.A.; Chong, Y.Z. Estimation of vertical ground reaction force during running using neural network model and uniaxial accelerometer. J. Biomech. 2018, 76, 269–273.

21. Pogson, M.; Verheul, J.; Robinson, M.A.; Vanrenterghem, J.; Lisboa, P. A neural network method to predict task- and step-specific ground reaction force magnitudes from trunk accelerations during running activities. Med. Eng. Phys. 2020, 78, 82–89.

22. Johnson, W.R.; Mian, A.; Robinson, M.A.; Verheul, J.; Lloyd, D.G.; Alderson, J.A. Multidimensional Ground Reaction Forces and Moments From Wearable Sensor Accelerations via Deep Learning. IEEE Trans. Biomed. Eng. 2021, 68, 289–297.

23. Komaris, D.-S.; Pérez-Valero, E.; Jordan, L.; Barton, J.; Hennessy, L.; O’Flynn, B.; Tedesco, S. Predicting three-dimensional ground reaction forces in running by using artificial neural networks and lower body kinematics. IEEE Access 2019, 7, 156779–156786.

24. Tedesco, S.; Perez-Valero, E.; Komaris, D.-S.; Jordan, L.; Barton, J.; Hennessy, L.; O’Flynn, B. Wearable Motion Sensors and Artificial Neural Network for the Estimation of Vertical Ground Reaction Forces in Running. In Proceedings of the 2020 IEEE SENSORS, Rotterdam, The Netherlands, 25–28 October 2020.

25. Komaris, D.-S.; Perez-Valero, E.; Jordan, L.; Barton, J.; Hennessy, L.; O’Flynn, B.; Tedesco, S. A Comparison of Three Methods for Estimating Vertical Ground Reaction Forces in Running. In Proceedings of the International Society of Biomechanics in Sports Proceedings (ISBS 2020), Online, 21–25 July 2020.

26. Picerno, P.; Camomilla, V.; Capranica, L. Countermovement jump performance assessment using a wearable 3D inertial measurement unit. J. Sports Sci. 2011, 29, 139–146.

27. Dowling, A.V.; Favre, J.; Andriacchi, T.P. A wearable system to assess risk for anterior cruciate ligament injury during jump landing: Measurements of temporal events, jump height, and sagittal plane kinematics. J. Biomech. Eng. 2011, 133, 071008.

28. Wang, J.; Xu, J.; Schull, P.B. Vertical jump height estimation algorithm based on takeoff and landing identification via foot-worn inertial sensing. J. Biomech. Eng. 2018, 140, 034502.

29. Lee, Y.-S.; Ho, C.-S.; Shih, Y.; Chang, S.-Y.; Róbert, F.J.; Shiang, T.-Y. Assessment of walking, running, and jumping movement features by using the inertial measurement unit. Gait Posture 2015, 41, 877–881.

30. STMicroelectronics. Available online: https://www.st.com/en/microcontrollers-microprocessors/stm32f417ig.html (accessed on 1 August 2020).

31. InvenSense. Available online: https://invensense.tdk.com/download-pdf/mpu-9250-datasheet/ (accessed on 1 August 2020).

32. Nordic. Available online: https://www.nordicsemi.com/Products/Low-power-short-range-wireless/Bluetooth-5 (accessed on 1 August 2020).

33. Abracon. Available online: https://abracon.com/datasheets/ACAG0801-2450-T.pdf (accessed on 1 August 2020).

34. Hori, N.; Newton, R.U.; Kawamori, N.; McGuigan, M.R.; Kraemer, W.J.; Nosaka, K. Reliability of performance measurements derived from ground reaction force data during countermovement jump and the influence of sampling frequency. J. Strength Cond. Res. 2009, 23, 874–882.

35. Coviello, G.; Avitabile, G.; Florio, A. A Synchronized Multi-Unit Wireless Platform for Long-Term Activity Monitoring. Electronics 2020, 9, 1118.

36. Olivares, A.; Ramírez, J.; Górriz, J.M.; Olivares, G.; Damas, M. Detection of (In)activity Periods in Human Body Motion Using Inertial Sensors: A Comparative Study. Sensors 2012, 12, 5791–5814.

37. McGrath, D.; Greene, B.R.; O’Donovan, K.J.; Caulfield, B. Gyroscope-based assessment of temporal gait parameters during treadmill walking and running. Sports Eng. 2012, 15, 207–213.

38. Dai, B.; Butler, R.J.; Garrett, W.E.; Queen, R.M. Using ground reaction force to predict knee kinetic asymmetry following anterior cruciate ligament reconstruction. Scand. J. Med. Sci. Sports 2014, 24, 974–981.

39. Tedesco, S.; Urru, A.; Peckitt, J.; O’Flynn, B. Inertial sensors-based lower-limb rehabilitation assessment: A comprehensive evaluation of gait, kinematic and statistical metrics. Int. J. Adv. Life Sci. 2017, 9, 33–49.

40. Strohrmann, C.; Harms, H.; Kappeler-Setz, C.; Troster, G. Monitoring Kinematic Changes With Fatigue in Running Using Body-Worn Sensors. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 983–990.

41. Cormie, P.; McBride, J.M.; McCaulley, G.O. Power-Time, Force-Time, and Velocity-Time Curve Analysis of the Countermovement Jump: Impact of Training. J. Strength Cond. Res. 2009, 23, 177–186.

42. Moir, G.L. Three Different Methods of Calculating Vertical Jump Height from Force Platform Data in Men and Women. Meas. Phys. Educ. Exerc. Sci. 2008, 12, 207–218.

43. Loadsol. Available online: https://www.novel.de/products/loadsol/ (accessed on 1 August 2020).

44. BTSBioengineering. Available online: https://www.btsbioengineering.com/products/podium/ (accessed on 1 August 2020).

45. Seiberl, W.; Jensen, E.; Merker, J.; Leitel, M.; Schwirtz, A. Accuracy and precision of loadsol® insole force-sensors for the quantification of ground reaction force-based biomechanical running parameters. Eur. J. Sport Sci. 2018, 18, 1100–1109.

46. Renner, K.E.; Williams, D.B.; Queen, R.M. The Reliability and Validity of the Loadsol® under Various Walking and Running Conditions. Sensors 2019, 19, 265.

47. Tedesco, S.; Sica, M.; Ancillao, A.; Timmons, S.; Barton, J.; O’Flynn, B. Validity Evaluation of the Fitbit Charge2 and the Garmin vivosmart HR+ in Free-Living Environments in an Older Adult Cohort. JMIR mHealth uHealth 2019, 7, e13084.

48. New CTA Standards for IoT Advance Functionality of Tech Monitoring of Consumer Health. Available online: https://www.cta.tech/News/Press-Releases/2016/October/New-CTA-Standards-for-IoTAdvance-Functionality-of.aspx (accessed on 1 December 2016).

49. Heishman, A.; Daub, B.; Miller, R.; Brown, B.; Freitas, E.; Bemben, M. Countermovement Jump Inter-Limb Asymmetries in Collegiate Basketball Players. Sports 2019, 7, 103.

50. Cormack, S.; Newton, R.U.; McGuigan, M.R.; Doyle, T.L. Reliability of Measures Obtained During Single and Repeated Countermovement Jumps. Int. J. Sports Physiol. Perform. 2008, 3, 131–144.

51. Winter, D.A. Biomechanics and Motor Control of Human Movement, 4th ed.; Wiley: Hoboken, NJ, USA, 2009.

52. MarketResearch. Available online: https://www.marketresearch.com/Mordor-Intelligence-LLP-v4018/Global-Wearable-Devices-Sports-Segmented-11643506/ (accessed on 24 December 2020).

53. FootPod Garmin. Available online: https://buy.garmin.com/en-ER/ssa/p/15516 (accessed on 24 December 2020).

54. Stride Sensor Polar. Available online: https://www.polar.com/en/products/accessories/stride_sensor_bluetooth_smart (accessed on 24 December 2020).

55. Axiamo XRUN. Available online: http://www.axiamo.com/xrun/ (accessed on 24 December 2020).

56. RunScribe. Available online: https://runscribe.com/ (accessed on 24 December 2020).

57. RunTeq. Available online: http://www.runteq.com/ (accessed on 24 December 2020).

58. GaitUp. Available online: https://gaitup.com/ (accessed on 2 August 2020).

59. SHFT. Available online: http://shft.run/ (accessed on 24 December 2020).

60. Moov. Available online: https://welcome.moov.cc/ (accessed on 24 December 2020).

61. Tg Force. Available online: https://tgforce.com/ (accessed on 24 December 2020).

62. Stryd. Available online: https://www.stryd.com/ (accessed on 2 August 2020).

63. IMeasureU. Available online: https://imeasureu.com/ (accessed on 2 August 2020).

64. ViPerform. Available online: https://www.dorsavi.com/us/en/viperform/ (accessed on 2 August 2020).

65. MyVert. Available online: https://www.myvert.com/ (accessed on 24 December 2020).

66. K-50. Available online: https://www.k-sport.tech/en-index.html (accessed on 29 May 2021).

67. Waldén, M.; Hägglund, M.; Magnusson, H.; Ekstrand, J. ACL injuries in men’s professional football: A 15-year prospective study on time trends and return-to-play rates reveals only 65% of players still play at the top level 3 years after ACL rupture. Br. J. Sports Med. 2016, 50, 744–750.

68. Zadeh, A.; Taylor, D.; Bertsos, M.; Tillman, T.; Nosoudi, N.; Bruce, S. Predicting Sports Injuries with Wearable Technology and Data Analysis. Inf. Syst. Front. 2020, 136, 1–15.

69. Lin, F.; Wang, A.; Zhuang, Y.; Tomita, M.R.; Xu, W. Smart Insole: A Wearable Sensor Device for Unobtrusive Gait Monitoring in Daily Life. IEEE Trans. Ind. Inform. 2016, 12, 2281–2291.

70. Zhang, H.; Zanotto, D.; Agrawal, S.K. Estimating CoP Trajectories and Kinematic Gait Parameters in Walking and Running Using Instrumented Insoles. IEEE Robot. Autom. Lett. 2017, 2, 2159–2165.

71. Ramirez-Bautista, J.A.; Huerta-Ruelas, J.A.; Chaparro-Cardenas, S.L.; Hernandez-Zavala, A. A review in detection and monitoring gait disorders using in-shoe plantar measurement systems. IEEE Rev. Biomed. Eng. 2017, 10, 299–309.

72. Matijevich, E.S.; Branscombe, L.M.; Scott, L.R.; Zelik, K.E. Ground reaction force metrics are not strongly correlated with tibial bone load when running across speeds and slopes: Implications for science, sport and wearable tech. PLoS ONE 2019, 14, e0210000.

73. Zhuang, Z.; Xue, Y. Sport-Related Human Activity Detection and Recognition Using a Smartwatch. Sensors 2019, 19, 5001.

74. Scheurer, S.; Tedesco, S.; Brown, K.N.; O’Flynn, B. Human Activity Recognition for Emergency First Responders via Body-Worn Inertial Sensors. In Proceedings of the 2017 IEEE 14th International Conference on Wearable and Implantable Body Sensor Networks (BSN 2017), Eindhoven, The Netherlands, 9–12 May 2017.

75. Scheurer, S.; Tedesco, S.; Brown, K.N.; O’Flynn, B. Using domain knowledge for interpretable and competitive multi-class human activity recognition. Sensors 2020, 20, 1208.

76. Worsey, M.T.O.; Espinosa, H.G.; Shepherd, J.B.; Lewerenz, J.; Klodzinski, F.S.M.; Thiel, D.V. Features Observed Using Multiple Inertial Sensors for Running Track and Hard-Soft Sand Running: A Comparison Study. In Proceedings of the ISEA 13th Conference of the International Sports Engineering Association (2020), Online, 22–26 June 2020.

77. Sabti, H.A.; Thiel, D.V. Node Position Effect on Link Reliability for Body Centric Wireless Network Running Applications. IEEE Sens. J. 2014, 14, 2687–2691.

78. Robertson, S.; Bartlett, J.D.; Gastin, P.B. Red, Amber, or Green? Athlete Monitoring in Team Sport: The Need for Decision-Support Systems. Int. J. Sports Physiol. Perform. 2017, 12, S2-73–S2-79.

| Speed (km/h) | RMSE |
|---|---|
| 8 | 0.13 (±0.026) |
| 10 | 0.136 (±0.017) |
| 12 | 0.17 (±0.03) |
| All | 0.148 (±0.024) |
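For readers who want to reproduce this kind of summary on their own data, the sketch below shows how a root mean square error between an estimated and a reference waveform can be computed per stride and then summarized as mean (±SD). It is an illustrative example rather than the evaluation code behind the table: the function names are hypothetical, the arrays are invented placeholder data, and the use of the sample standard deviation (`ddof=1`) is an assumption, since the text does not state which variant was reported.

```python
import numpy as np


def rmse(estimated, reference):
    """Root mean square error between two equally sampled waveforms."""
    est = np.asarray(estimated, dtype=float)
    ref = np.asarray(reference, dtype=float)
    if est.shape != ref.shape:
        raise ValueError("waveforms must have the same shape")
    return float(np.sqrt(np.mean((est - ref) ** 2)))


def mean_and_sd(values):
    """Mean and sample standard deviation (ddof=1 is an assumption)."""
    v = np.asarray(values, dtype=float)
    return float(v.mean()), float(v.std(ddof=1))


if __name__ == "__main__":
    # Placeholder waveforms (estimated, reference), NOT data from the study.
    strides = [
        ([0.0, 1.1, 2.2, 1.0, 0.0], [0.0, 1.0, 2.0, 1.0, 0.0]),
        ([0.0, 0.9, 2.1, 1.2, 0.0], [0.0, 1.0, 2.0, 1.0, 0.0]),
    ]
    per_stride = [rmse(est, ref) for est, ref in strides]
    mean, sd = mean_and_sd(per_stride)
    print(f"RMSE: {mean:.3f} (±{sd:.3f})")
```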

| Proposed System (All Speeds) | Actual Results (All Speeds) |
|---|---|
| 2.28 (±0.09) | 2.41 (±0.15) |

| Metric | Error (%) |
|---|---|
| Peak force at concentric | −16.07 |
| Peak force at landing | −7.08 |
| Velocity at landing | 4.5 |
| Flight time | 8.66 |
| Jump height | 15.99 |
| Peak power | 13.05 |
| Start to peak power | −5.58 |
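A signed percentage error of this kind is commonly computed as the difference between the estimated and the reference value, divided by the reference value. The sketch below assumes that convention (negative values meaning underestimation); the text does not state the sign convention explicitly, and the function name is hypothetical. As a usage example, it is applied to the two all-speed means reported in the preceding two-column table (2.28 for the proposed system versus 2.41 for the actual results).

```python
def percent_error(estimated: float, reference: float) -> float:
    """Signed percentage error relative to the reference value.

    ASSUMED convention: negative means the estimate is below the reference.
    """
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return 100.0 * (estimated - reference) / reference


if __name__ == "__main__":
    # Means taken from the all-speed comparison table in this document.
    print(f"{percent_error(2.28, 2.41):.2f} %")  # roughly -5.4 %
```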

| Products | Sport | Parameters Calculated | Number of Sensors/Body Position | Sampling Frequency (Hz) |
|---|---|---|---|---|
| Foot pod (Garmin) [53] | Running | Distance, cadence, speed | 1 per shoe | NA |
| Stride sensor (Polar) [54] | Running | Duration, distance, cadence, speed, stride length | 1 per shoe | NA |
| Axiamo XRUN [55] | Running | Ground contact time | 1 per shoe | NA |
| RunScribe [56] | Running, walking, hiking | 12 basic metrics (efficiency, shock, motion), 33 advanced metrics (derived, plus, research), 12 sacral metrics (pelvis angles, vertical oscillation) | 1 per shoe (possibility to add 1 on the hip) | 500 |
| RunTeq [57] | Running | 6 body kinematics metrics, 6 workout metrics | 1 per shoe and 1 on chest | NA |
| Achillex jump’n’run (Xybermind) [12] | Jumping, sprinting | Running parameters, and jumping metrics for three different jump forms | 1 on the belt (with magnetic barrier infrastructure) | 400 |
| GaitUp [58] | Running, walking, physical activity, golf, swimming | Running temporal (4 metrics), spatial (4 metrics), and performance (6 metrics) | 1 per shoe | 128 |
| SHFT [59] | Running | 12 full-body metrics (e.g., cadence, ground contact time, step length, g-landing, etc.) | 1 on one shoe and 1 on chest | NA |
| Moov [60] | Running | Cadence, range of motion, tibial impact | 1 on ankle | NA |
| TgForce [61] | Running | Peak acceleration (in g), cadence | 1 on tibia | NA |
| Stryd [62] | Running | Ground contact time, vertical oscillation, running power, distance, leg stiffness, cadence | 1 on shoe | 1 |
| IMeasureU [63] | Jumping, sprinting, counter rotation, swimming, power meter | Steps, cumulative impact load, cumulative bone stimulus | Up to 8 sensors on the body (possibility to sync with VICON MoCap) | 500 |
| ViPerform (DorsaVi) [64] | Functional tests, hamstring tests, knee movement tests, running tests | Symmetry, average ground reaction force, peak acceleration, ground contact time, cadence, distance, speed | 1 per tibia (with possibility to include video) | 100 |
| MyVert [65] | Jumping, running | Jump metrics, landing impact features, drills features, energy feature, run feature, power/intensity features, stress features | 1 on center-of-mass | NA |
| K-50 (K-Sport) [66] | Soccer-related movements | 300 parameters including physical, technical, and tactical information | 1 on the chest (also including GPS, UWB, and physiologic sensing) | 50 |
| Proposed system | Jumping, running | 13 running temporal, spatial, and performance metrics, full vertical GRF waveform, and jumping metrics for two different jump forms | 1 on each tibia (for running), 1 on center-of-mass (for jumping) | 238 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tedesco, S.; Alfieri, D.; Perez-Valero, E.; Komaris, D.-S.; Jordan, L.; Belcastro, M.; Barton, J.; Hennessy, L.; O’Flynn, B. A Wearable System for the Estimation of Performance-Related Metrics during Running and Jumping Tasks. Appl. Sci. 2021, 11, 5258. https://doi.org/10.3390/app11115258