CYRA: A Model-Driven CYber Range Assurance Platform

Abstract

Featured Application

1. Introduction

2. Related Works

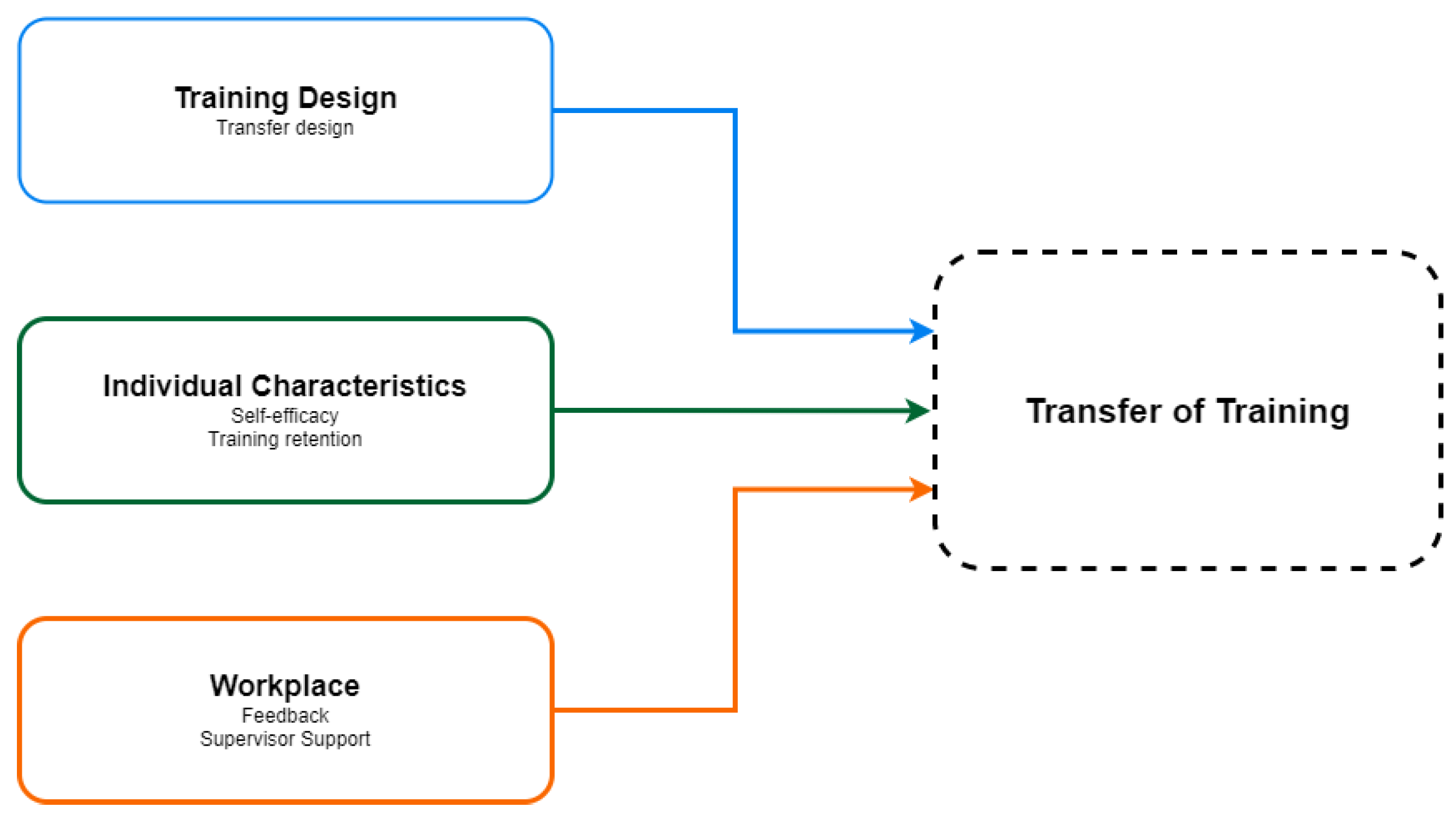

2.1. Adopting Training in the Workplace

2.2. Cyber Ranges Platforms

3. The CYber Range Assurance Platform (CYRA)

- Sphynx’s Security Assurance Platform is a suite of tools and technologies that enables clients to carry out security assessments against industrial and international standards (e.g., cloud, network, and smart metering standards) through continuous monitoring and testing. The platform’s main components are:

- Asset Loader: The component responsible for receiving the cyber system’s asset model for the target organisation. This model includes the assets of the organisation, security properties for these assets, threats that may violate these properties and the security controls that protect the assets.

- Monitoring Module: A runtime monitoring engine built in Java that offers an API for establishing the monitoring rules to be checked. The module consists of three sub-modules: a monitor manager, a monitor, and an event collector. Its role is to forward the runtime events relating to the application’s monitored properties and, ultimately, to obtain the monitoring results.

- Dynamic Testing Module: This module combines several open-source penetration testing tools to execute different types of penetration testing assessments. It actively interacts with a target system to discover vulnerabilities and determine whether they are exploitable. It also supports uploading reports generated by different types of tools and populates the assurance platform with their results. Finally, the module can discover and report assets that are not defined in the system’s current asset model.

- Vulnerability Loader Module: The component responsible for loading the known vulnerabilities (of the identified assets) and updating the assurance platform depending on the organisation’s assets included in the assurance model.

- Event Captor: A tool that, based on collected data and triggering events, formulates a rule or a set of rules and pushes them to the Monitoring Module for evaluation. Data and events are mostly collected through Elasticsearch (https://www.elastic.co/ (accessed on 20 April 2021)), fed by lightweight shippers (namely Beats) such as Filebeat, Metricbeat, and Packetbeat, which forward and centralise log data. Data can also be collected through Logstash, an open server-side data processing pipeline that ingests data from a multitude of sources, transforms it, and then sends it to Elasticsearch. Event Captors are initiated through REST calls from the Monitoring Module.

- The CTTP Model and Programmes Editor is responsible for creating the CTTP Models and Training Programmes. The editor is offered as a web service through the Cyber Range Platform.

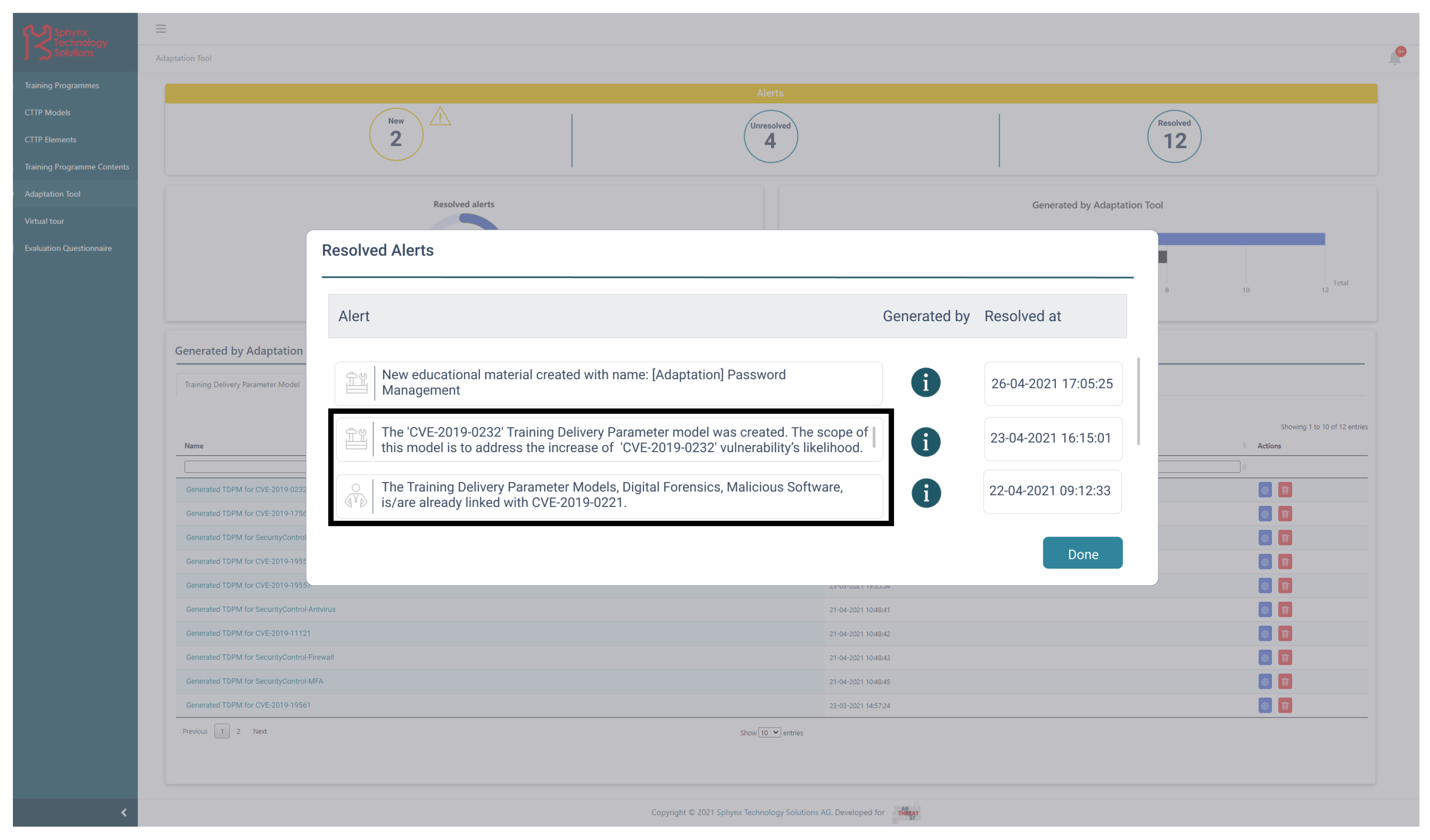

- Lastly, the CTTP Models and Programmes Adaptation Tool adapts the existing training programmes and models, or creates new ones, in response to emerging cyber threats and/or changes to the assessed cyber systems.

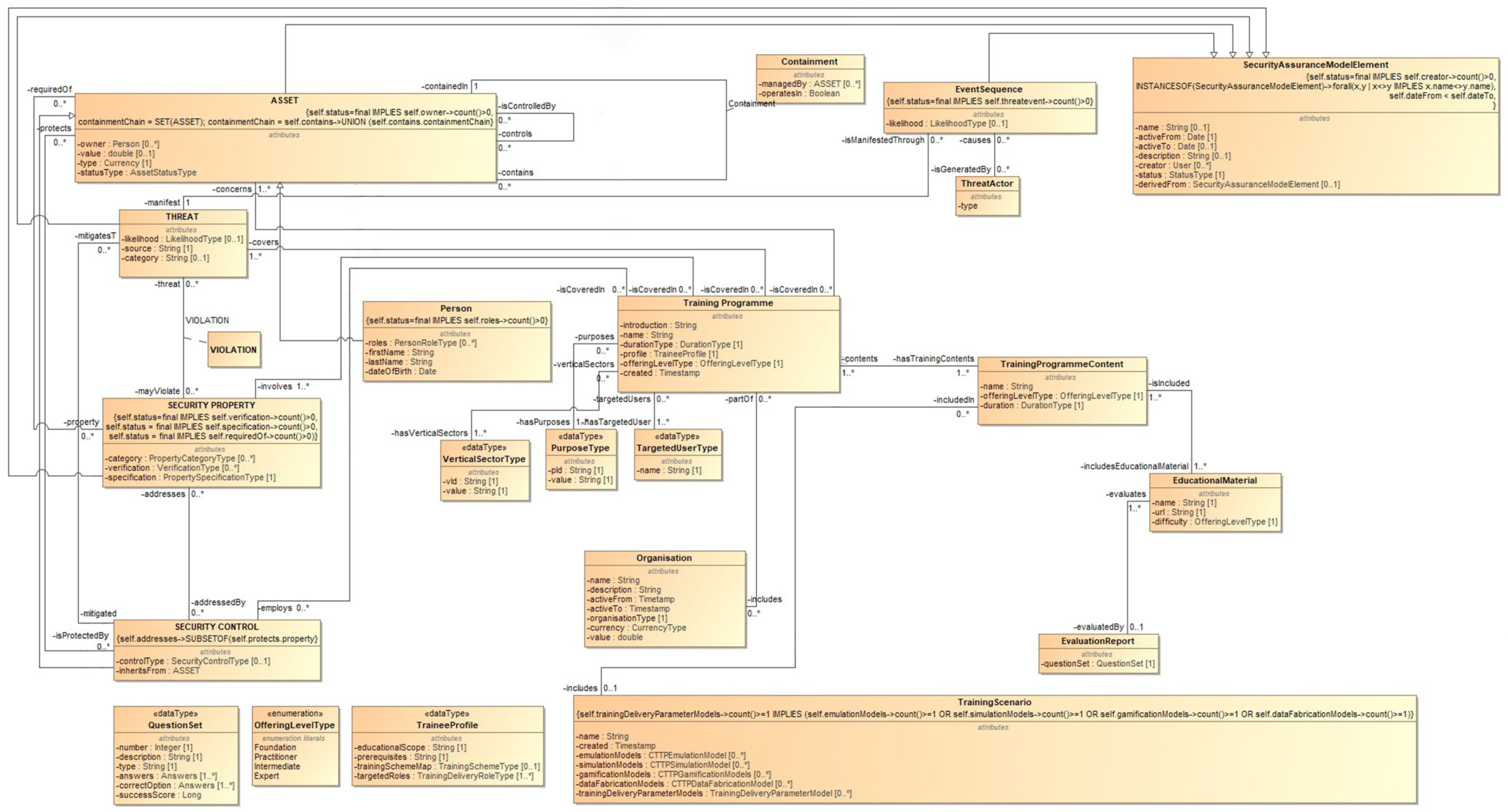
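The asset model handled by the Asset Loader and the matching step performed by the Vulnerability Loader Module can be illustrated with a minimal sketch. It is not the platform's actual data model or API: the class names, field names, identifiers such as `VULN-0001`, the product-name matching rule, and the 0 to 10 severity scale are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Vulnerability:
    vuln_id: str   # hypothetical identifier, not a real CVE entry
    product: str   # product the vulnerability applies to
    score: float   # severity on an assumed 0-10 scale


@dataclass
class Asset:
    name: str
    product: str
    security_properties: list[str] = field(default_factory=list)
    threats: list[str] = field(default_factory=list)
    security_controls: list[str] = field(default_factory=list)
    vulnerabilities: list[Vulnerability] = field(default_factory=list)


@dataclass
class AssetModel:
    organisation: str
    assets: list[Asset] = field(default_factory=list)


def load_vulnerabilities(model: AssetModel, feed: list[Vulnerability]) -> int:
    """Attach each feed entry to every asset running the affected product.

    Entries for products that are absent from the model are ignored.
    Returns the number of (asset, vulnerability) links that were added.
    """
    added = 0
    for asset in model.assets:
        known = {v.vuln_id for v in asset.vulnerabilities}
        for vuln in feed:
            if vuln.product == asset.product and vuln.vuln_id not in known:
                asset.vulnerabilities.append(vuln)
                known.add(vuln.vuln_id)
                added += 1
    return added


def unprotected_assets(model: AssetModel) -> list[str]:
    """Names of assets that are vulnerable but have no security control."""
    return [a.name for a in model.assets
            if a.vulnerabilities and not a.security_controls]


model = AssetModel("ExampleOrg", [
    Asset("web-server", "nginx", ["availability"], ["denial of service"], ["firewall"]),
    Asset("smart-hub", "hub-firmware", ["integrity"], ["tampering"]),
])
feed = [
    Vulnerability("VULN-0001", "nginx", 7.5),
    Vulnerability("VULN-0002", "hub-firmware", 9.1),
    Vulnerability("VULN-0003", "unrelated-product", 5.0),
]
print(load_vulnerabilities(model, feed))  # 2
print(unprotected_assets(model))          # ['smart-hub']
```

Keeping vulnerabilities attached to assets, rather than in a separate list, means that a query such as `unprotected_assets` only needs the model itself. A real deployment would presumably match on richer identifiers than a bare product name.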

4. Cyber Threat and Training (CTTP) Models and Programmes

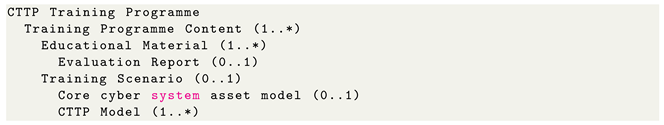

4.1. Training Programme

4.2. Training Programme Content

4.3. Educational Material

4.4. Training Scenario

4.5. CTTP Models

4.5.1. Emulation Model

4.5.2. Simulation Model

4.5.3. Serious Game

4.5.4. Data Fabrication

4.5.5. Training Delivery Parameter Model

5. Cyber Threat and Training Models and Programmes Adaptation Tool

5.1. Adaptation Based on Sphynx’s Security Assurance Platform

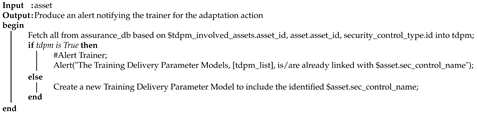

5.1.1. Adaptation Based on Security Controls

| Algorithm 1: Adaptation based on security controls |

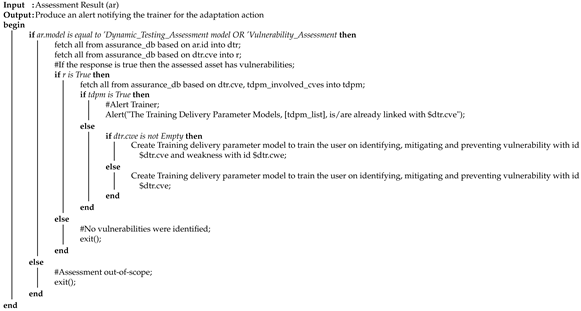

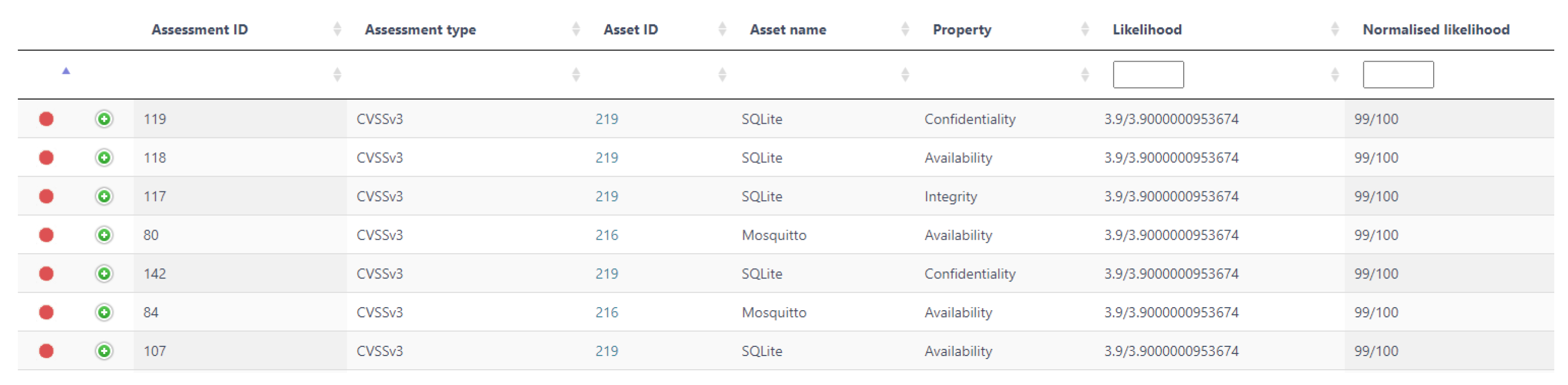

5.1.2. Adaptation Based on Vulnerable Assets

New Vulnerabilities on Existing Assets

| Algorithm 2: Adaptation based on existing asset vulnerabilities |

New Vulnerable Asset

| Algorithm 3: Adaptation based on a newly inserted vulnerable asset |

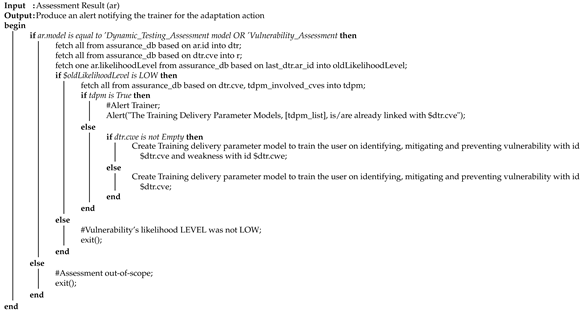

Increased Likelihood of an Existing Vulnerability

| Algorithm 4: Adaptation based on a likelihood alteration |

5.1.3. Adaptation Based on Trainee’s Performance

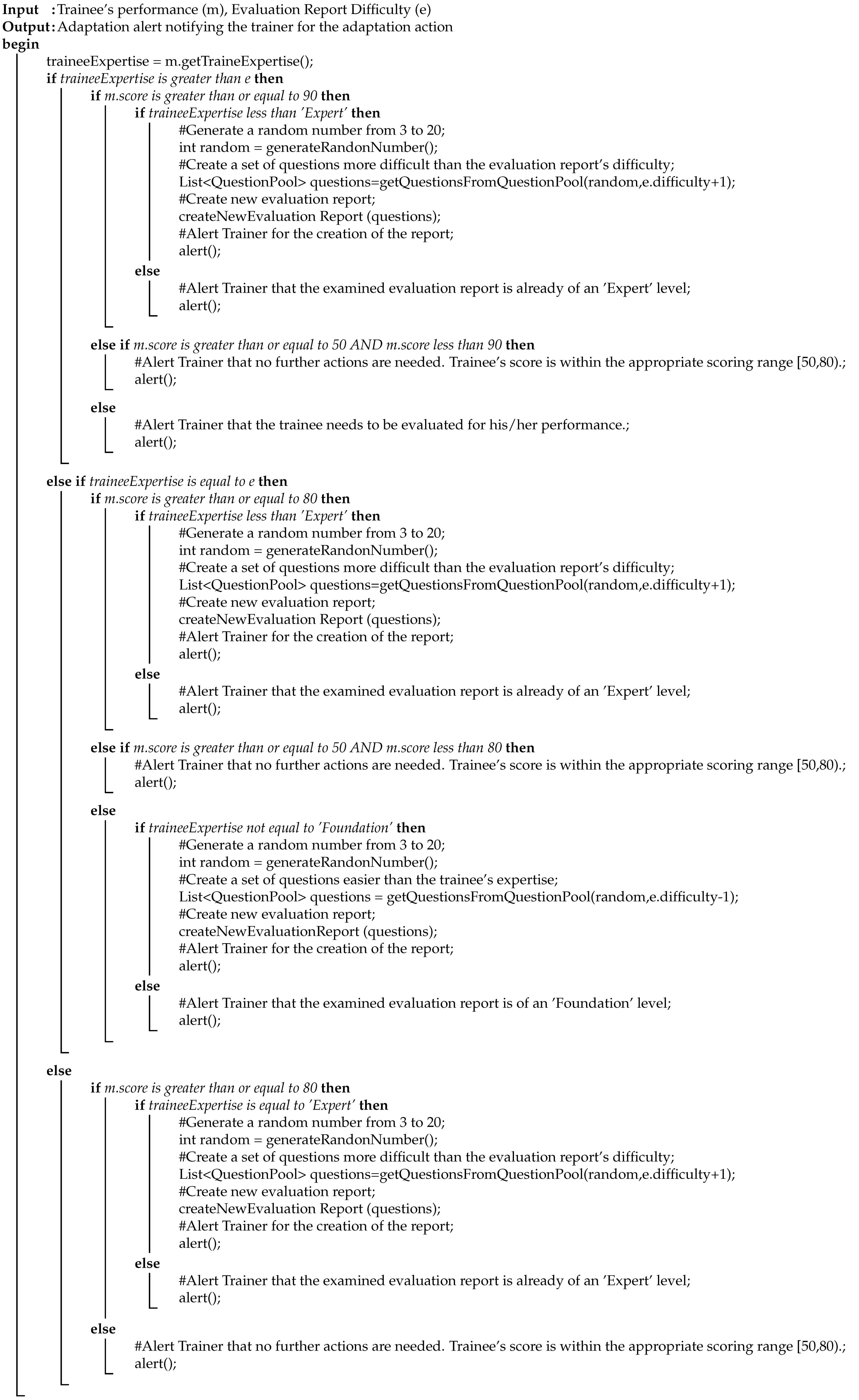

Completion of an Educational Material’s Evaluation Report

| Algorithm 5: Adaptation based on the evaluation report’s score |

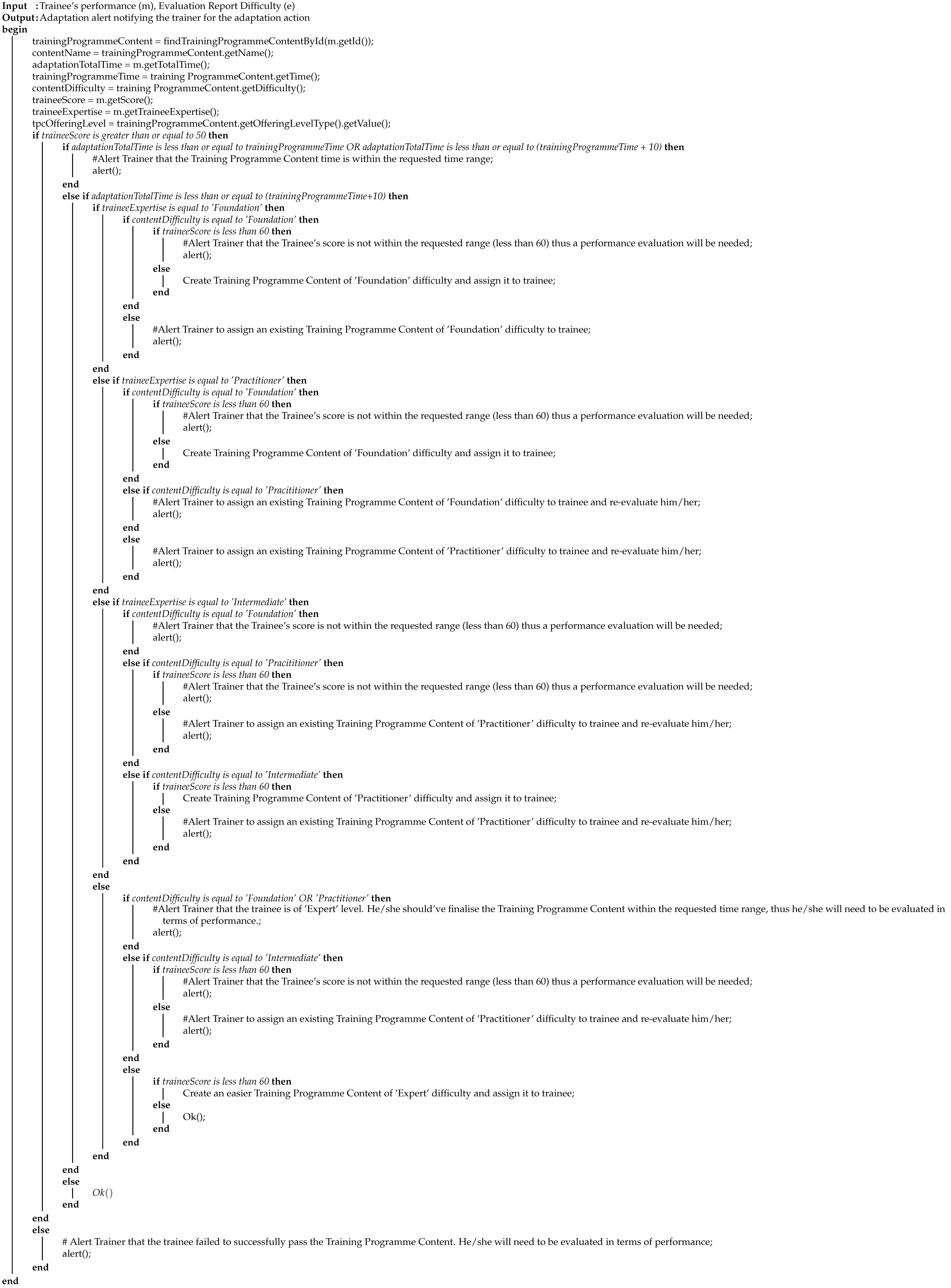

Completion of a Training Programme Content

| Algorithm 6: Adaptation based on the completion time and score of a Training |

5.2. Demonstrator

5.2.1. Adaptation Based on Security Controls and New Threats

5.2.2. Adaptation Based on Trainee’s Performance

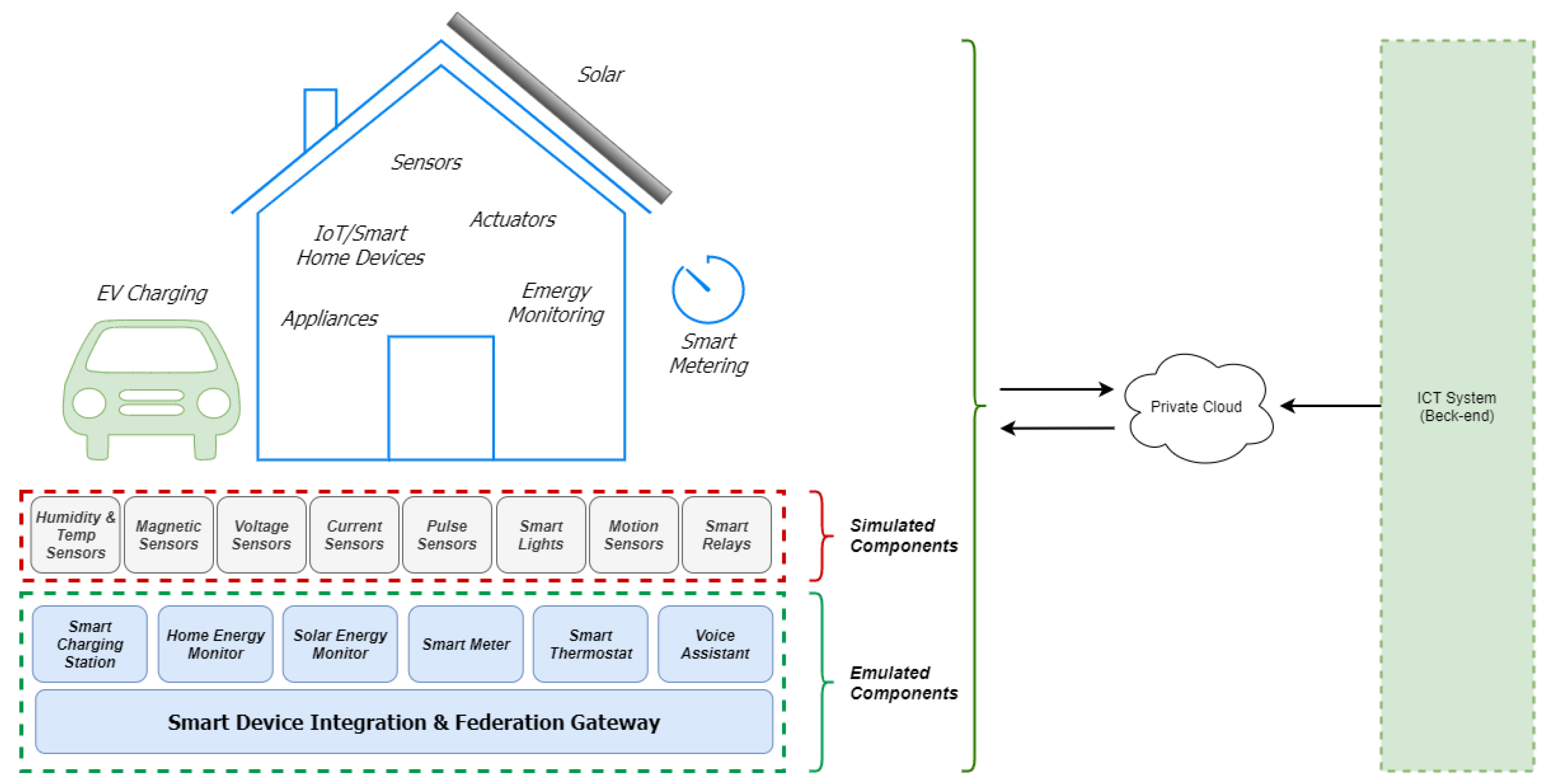

6. The IoT-enabled Smart Home Use Case

6.1. Outline

6.2. Training and Compliance

7. Evaluation Results

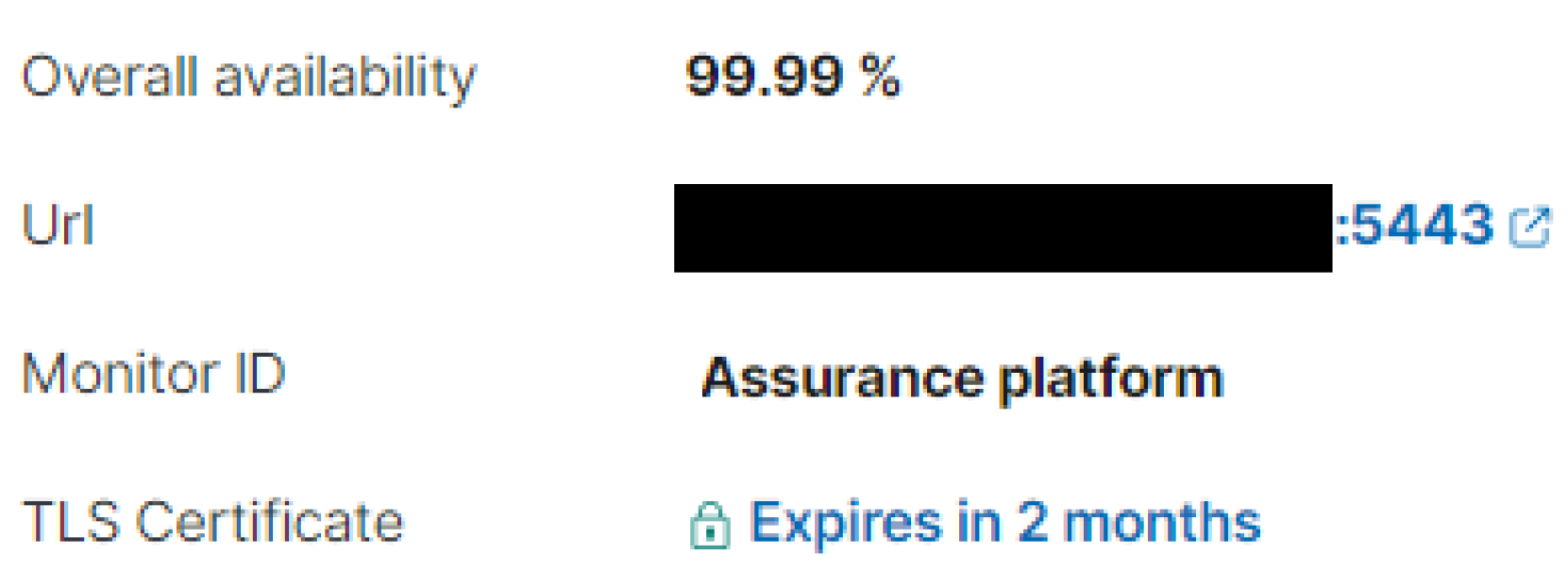

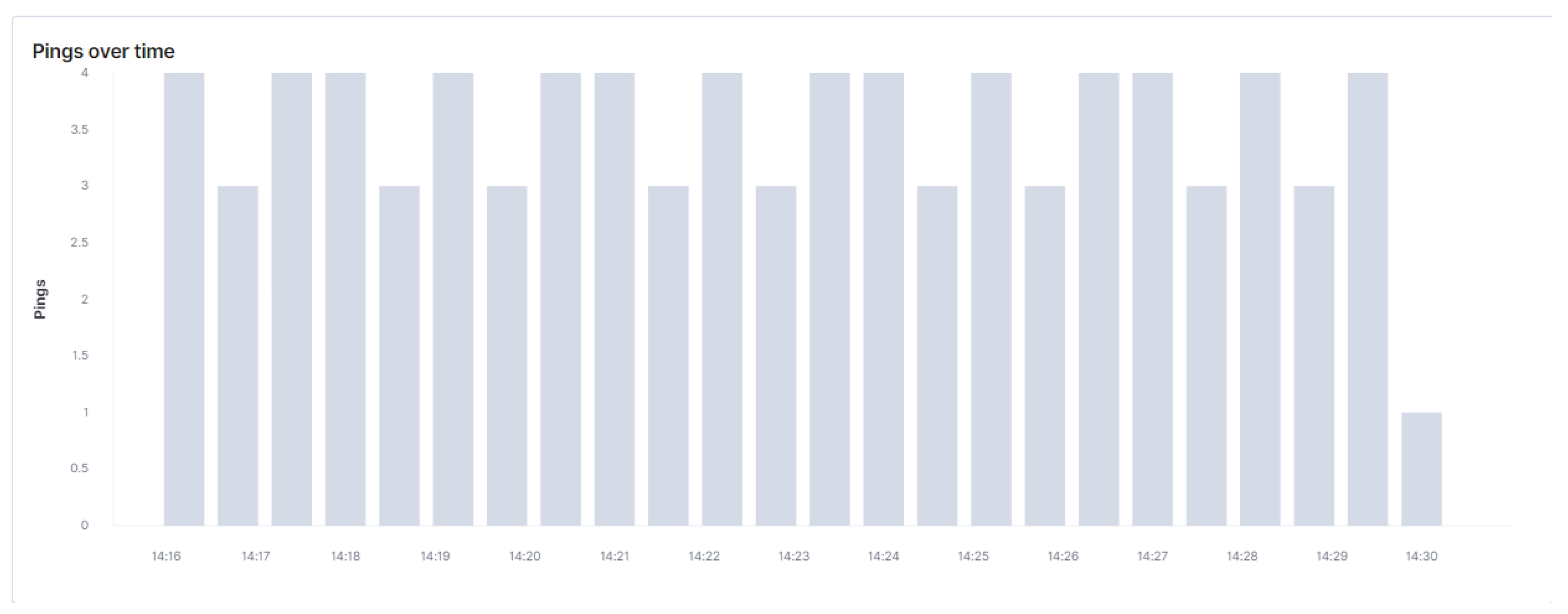

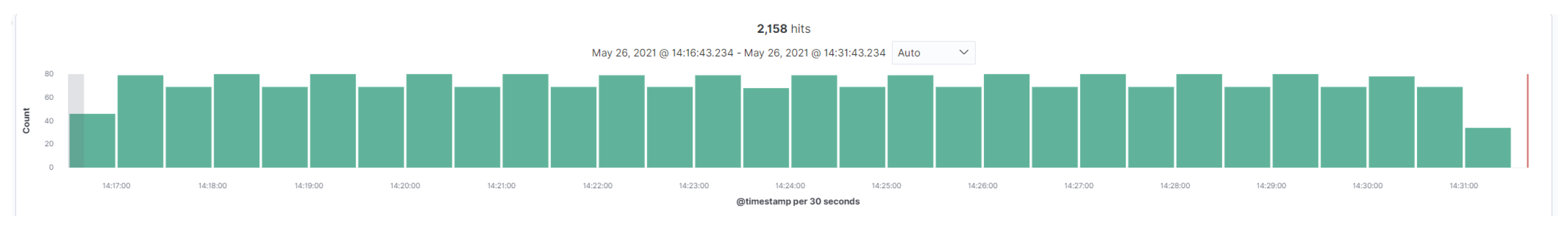

7.1. Platform Statistics

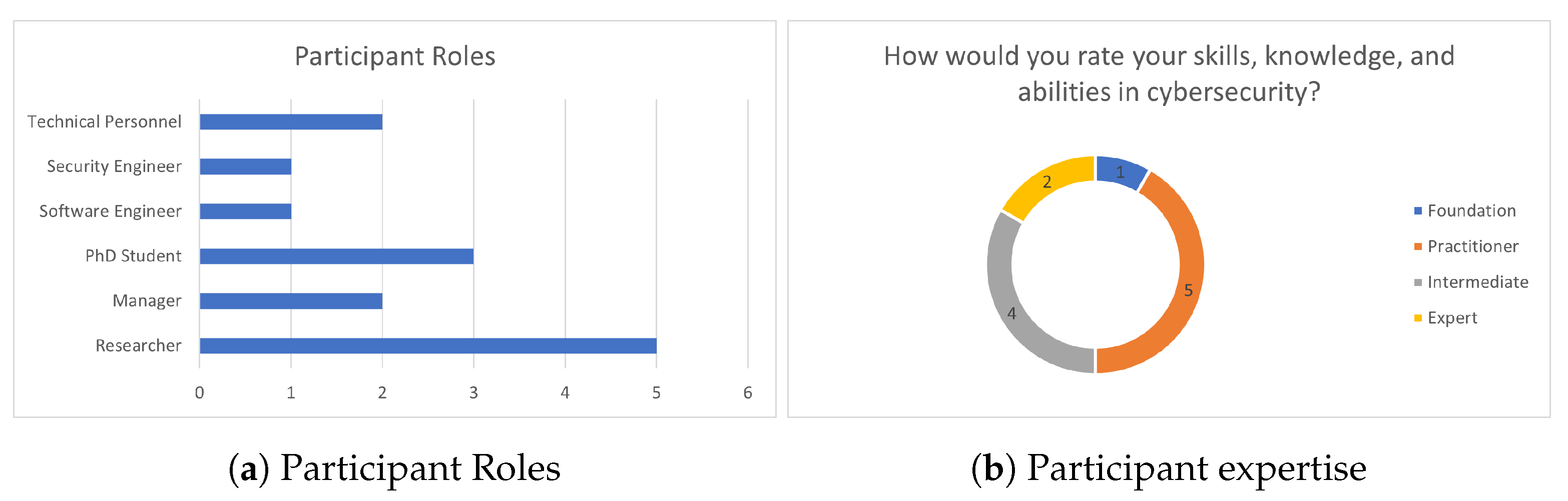

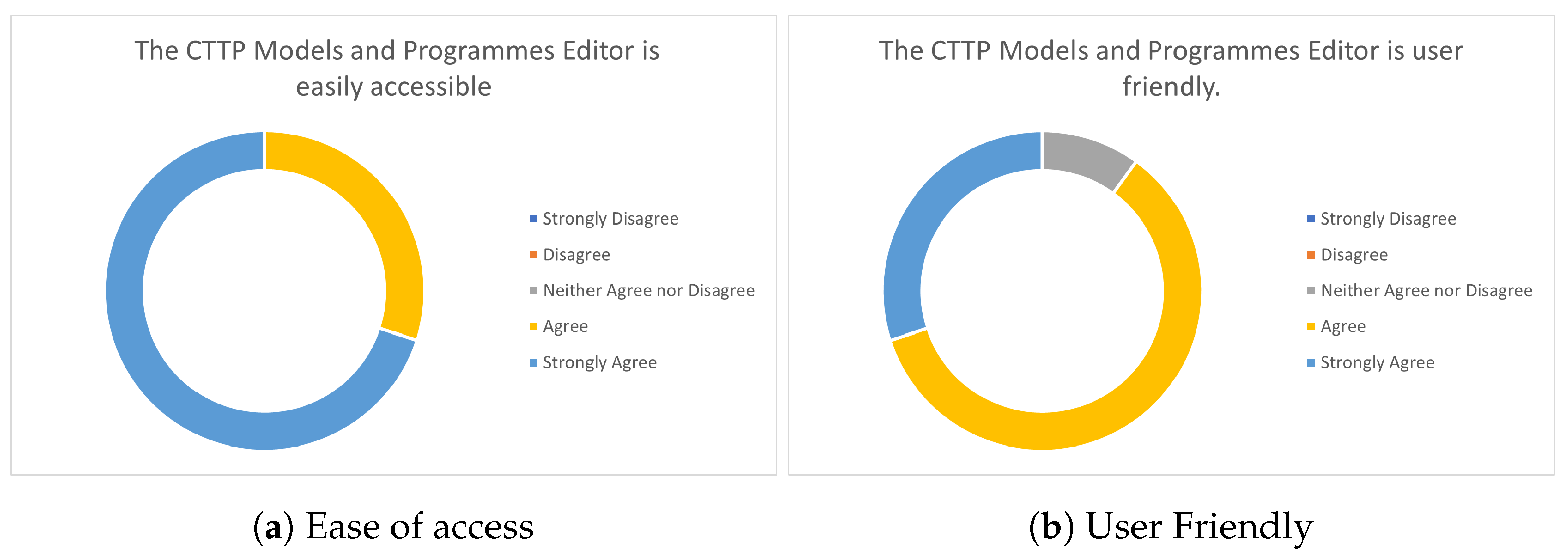

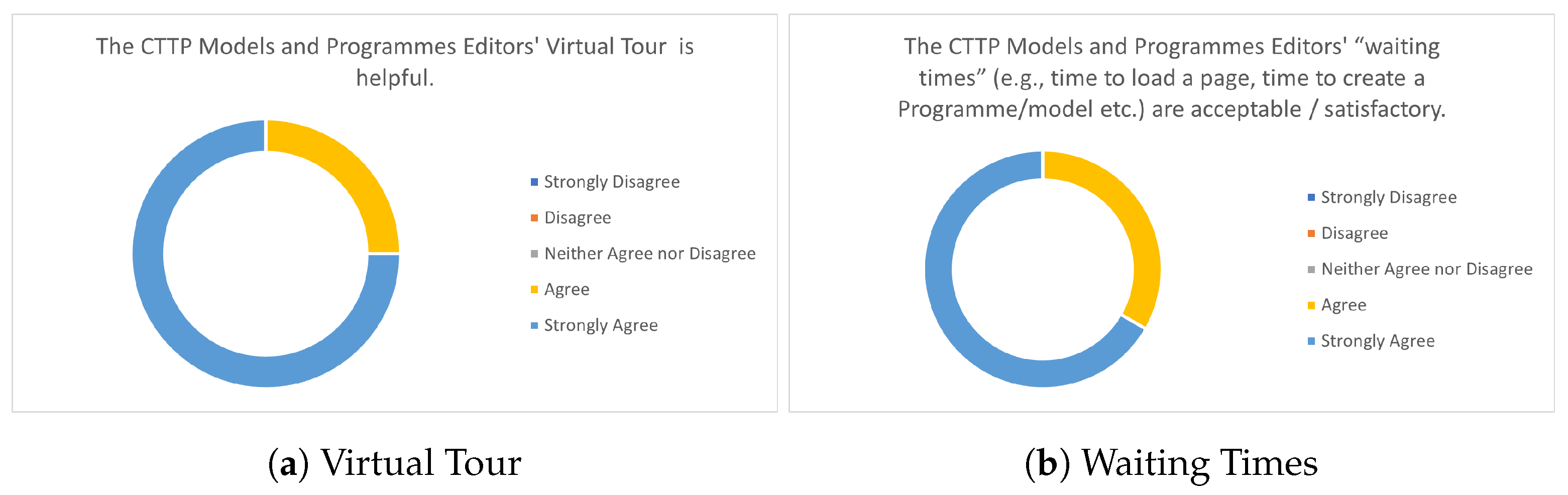

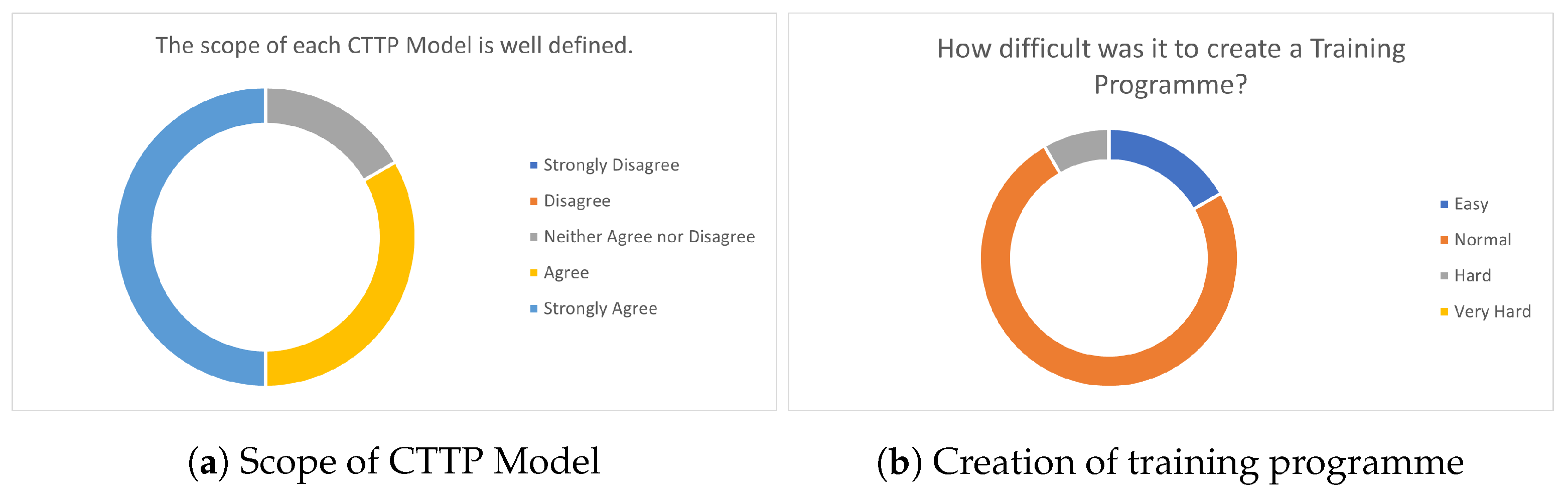

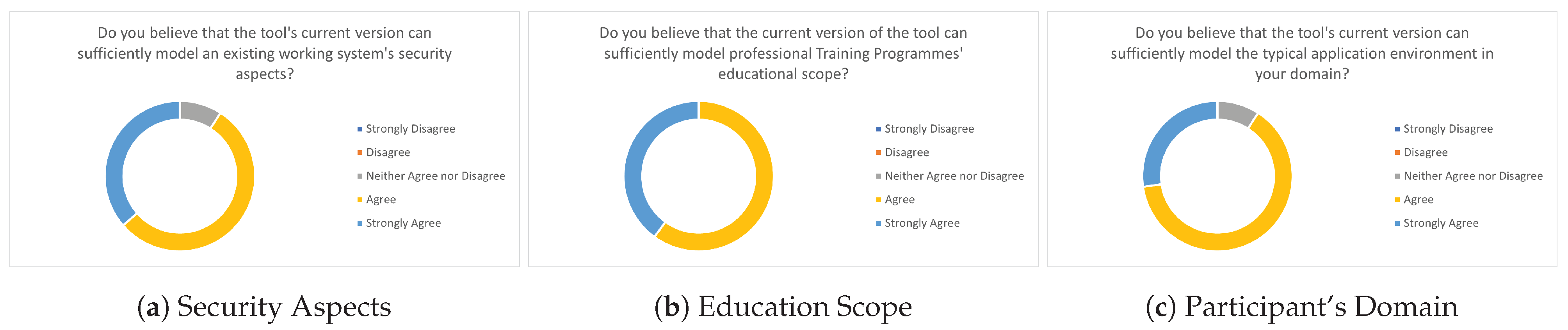

7.2. CTTP Model Editor Evaluation

- Foundation: The trainer has basic security knowledge and no experience in creating cyber range programmes.

- Practitioner: The trainer has advanced security knowledge and limited experience in creating cyber range programmes.

- Intermediate: The trainer is a security expert with limited expertise in creating cyber range programmes.

- Expert: The trainer is a security expert with high expertise in creating cyber range programmes.
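The four trainer levels are defined by two attributes: security knowledge and experience in creating cyber range programmes. The sketch below transcribes that mapping as a lookup table; the string labels (`"basic"`, `"limited"`, and so on) and the `classify` helper are illustrative assumptions, not part of the evaluation tooling.

```python
from enum import Enum
from typing import Optional


class TrainerLevel(Enum):
    FOUNDATION = "Foundation"
    PRACTITIONER = "Practitioner"
    INTERMEDIATE = "Intermediate"
    EXPERT = "Expert"


# (security knowledge, cyber range programme experience) -> level,
# transcribed from the four definitions above.
LEVELS = {
    ("basic", "none"): TrainerLevel.FOUNDATION,
    ("advanced", "limited"): TrainerLevel.PRACTITIONER,
    ("expert", "limited"): TrainerLevel.INTERMEDIATE,
    ("expert", "high"): TrainerLevel.EXPERT,
}


def classify(knowledge: str, experience: str) -> Optional[TrainerLevel]:
    """Return the level for a profile, or None if no definition covers it."""
    return LEVELS.get((knowledge, experience))


print(classify("expert", "limited"))
print(classify("basic", "high"))
```

Combinations that the four definitions do not cover, such as basic knowledge with high experience, deliberately return `None` rather than being forced into the nearest level.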

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CCDCOE | Cooperative Cyber Defence Centre of Excellence |
| CTF | Capture The Flag |
| CTTP | Cyber Threat and Training Preparation |
| CVSS | Common Vulnerability Scoring System |
| CVE | Common Vulnerabilities and Exposures |
| CWE | Common Weakness Enumeration |
| CYRA | CYber Range Assurance platform |
| IoT | Internet of Things |
| KPI | Key Performance Indicator |
| ICT | Information and Communications Technology |
| UEBA | User and Entity Behavioural Analytics |
| VM | Virtual Machine |

References

- Smyrlis, M.; Fysarakis, K.; Spanoudakis, G.; Hatzivasilis, G. Cyber Range Training Programme Specification Through Cyber Threat and Training Preparation Models. In International Workshop on Model-Driven Simulation and Training Environments for Cybersecurity; Springer: Guilford, UK, 2020; pp. 22–37.

- Somarakis, I.; Smyrlis, M.; Fysarakis, K.; Spanoudakis, G. Model-driven cyber range training: A cyber security assurance perspective. In Computer Security; Springer: Cham, Switzerland, 2019; pp. 172–184.

- Hatzivasilis, G.; Kunc, M. Chasing Botnets: A Real Security Incident Investigation. In 2nd Model-driven Simulation and Training Environments for Cybersecurity (MSTEC), LNCS; Springer: Guilford, UK; Berlin/Heidelberg, Germany, 2020; Volume 12512, pp. 111–124.

- Soultatos, O.; Papoutsakis, M.; Fysarakis, K.; Hatzivasilis, G.; Michalodimitrakis, M.; Spanoudakis, G.; Ioannidis, S. Pattern-driven Security, Privacy, Dependability and Interoperability management of IoT environments. In Proceedings of the 24th IEEE International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD 2019), Limassol, Cyprus, 11–13 September 2019; pp. 1–6.

- Department for Digital, Culture, Media & Sport. Cyber Security Breaches Survey 2021. Available online: https://www.gov.uk/government/statistics/cyber-security-breaches-survey-2021/cyber-security-breaches-survey-2021 (accessed on 30 April 2021).

- Milkovich, D. 15 Alarming Cyber Security Facts and Stats. Available online: https://www.cybintsolutions.com/cyber-security-facts-stats/ (accessed on 30 April 2021).

- Velada, R.; Caetano, A.; Michel, J.W.; Lyons, B.D.; Kavanagh, M.J. The effects of training design, individual characteristics and work environment on transfer of training. Int. J. Train. Dev. 2007, 11, 282–294.

- Cascio, W.F. Costing Human Resources. In The Financial Impact of Behavior in Organizations, 4th ed.; South-Western Publishing Co.: Nashville, TN, USA, 2000; pp. 1–322.

- Mathis, R.L.; Jackson, J.H. Human Resource Management. In Gaining a Competitive Advantage, 6th ed.; McGraw-Hill Irwin: Boston, MA, USA, 2006; pp. 1–322.

- Peretiatko, R. International Human Resource Management: Managing People in a Multinational Context. Manag. Res. News 2009, 32, 91–92.

- Manifavas, C.; Fysarakis, K.; Rantos, K.; Hatzivasilis, G. DSAPE – Dynamic Security Awareness Program Evaluation. In Human Aspects of Information Security, Privacy and Trust (HCI International 2014), LNCS; Springer: Heraklion/Crete, Greece, 2014; Volume 8533, pp. 258–269.

- Abraham, S.; Chengalur-Smith, I. Evaluating the effectiveness of learner controlled information security training. Comput. Secur. 2019, 87, 1–12.

- Spanoudakis, G.; Damiani, E.; Maña, A. Certifying services in cloud: The case for a hybrid, incremental and multi-layer approach. In Proceedings of the IEEE 14th International Symposium on High-Assurance Systems Engineering, Omaha, NE, USA, 25–27 October 2012; pp. 17–19.

- Burg, D.; Compton, M.; Harries, P.; Hunt, J.; Lobel, M.; Loveland, G.; Nocera, J.; Panson, S.; Waterfall, G. US Cybersecurity: Progress Stalled-Key Findings from the 2015 US State of Cybercrime Survey. 2015. Available online: https://www.pwc.com/us/en/increasing-it-effectiveness/publications/assets/2015-us-cybercrime-survey.pdf (accessed on 30 April 2021).

- Robinson, A. Using Influence Strategies to Improve Security Awareness Programs. 2021. Available online: https://www.sans.org/reading-room/whitepapers/awareness/influence-strategies-improve-security-awareness-programs-34385 (accessed on 30 April 2021).

- Spitzner, L.; de Beaubien, D.; Ideboen, A.; Xu, H.; Zhang, N.; Andrews, H.; Sonaike, A. Cyber Security Breaches Survey 2021. 2019. Available online: https://adcg.org/wp-content/uploads/2020/02/SANS-Security-Awareness-Report-2019.pdf (accessed on 30 April 2021).

- Chouliaras, N.; Kittes, G.; Kantzavelou, I.; Maglaras, L.; Pantziou, G.; Ferrag, M.A. Cyber ranges and testbeds for education, training, and research. Appl. Sci. 2021, 11, 1809.

- Chowdhury, N.; Gkioulos, V. Cyber security training for critical infrastructure protection: A literature review. Comput. Sci. Rev. 2021, 40, 1–20.

- Gustafsson, T.; Almroth, J. Cyber range automation overview with a case study of CRATE. In 25th Nordic Conference on Secure IT Systems (NordSec), LNCS; Virtual Event; Springer: Guilford, UK, 2021; Volume 12556, pp. 192–209.

- Hatzivasilis, G.; Ioannidis, S.; Smyrlis, M.; Spanoudakis, G.; Frati, F.; Goeke, L.; Hildebrandt, T.; Tsakirakis, G.; Oikonomou, F.; Leftheriotis, G.; et al. Modern Aspects of Cyber-Security Training and Continuous Adaptation of Programmes to Trainees. Appl. Sci. 2020, 10, 5702.

- Puhakainen, P.; Siponen, M. Improving employees’ compliance through information systems security training: An action research study. MIS Q. 2010, 34, 757–778.

- Baldwin, T.T.; Ford, J.K. Transfer of training: A review and directions for future research. Pers. Psychol. 1988, 41, 63–105.

- Frank, M.; Leitner, M.; Pahi, T. Design considerations for cyber security testbeds: A case study on a cyber security testbed for education. In Proceedings of the 15th Intl Conf on Pervasive Intelligence and Computing, Orlando, FL, USA, 6–10 November 2017; pp. 38–46.

- Leitner, M.; Frank, M.; Hotwagner, W.; Langner, G.; Maurhart, O.; Pahi, T.; Reuter, L.; Skopik, F.; Smith, P.; Warum, M. AIT Cyber Range: Flexible Cyber Security Environment for Exercises, Training and Research. In Proceedings of the European Interdisciplinary Cybersecurity Conference (EICC 2020) ACM, Rennes, France, 18 November 2020; pp. 1–6.

- Melon, F.; Vaisanen, T.; Pihelgas, M. EVE and ADAM: Situation Awareness Tools for NATO CCDCOE Cyber Exercises. In Systems Concepts and Integration (SCI) Panel SCI-300 Specialists’ Meeting on Cyber Physical Security of Defense Systems; NATO: Shalimar, FL, USA, 2018; pp. 1–15.

- Pihelgas, M. Design and implementation of an availability scoring system for cyber defence exercises. In Proceedings of the 14th International Conference on Cyber Warfare and Security (ICCWS) ACI, Stellenbosch, South Africa, 28 February–1 March 2019; pp. 329–337.

- Joonsoo, K.; Youngjae, M.; Moonsu, J. Becoming invisible hands of national live-fire attack-defense cyber exercise. In Proceedings of the IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Stockholm, Sweden, 17–19 June 2019; pp. 77–84.

- Pham, C.; Tang, D.; Chinen, K.; Beuran, R. CyRIS: A cyber range instantiation system for facilitating security training. In Proceedings of the 7th Symposium on Information and Communication (SoICT) ACM, Ho Chi Minh, Vietnam, 8–9 December 2016; pp. 251–258.

- Tang, D.; Pham, C.; Chinen, K.; Beuran, R. Interactive cybersecurity defense training inspired by web-based learning theory. In Proceedings of the 9th International Conference on Engineering Education (ICEED), Kanazawa, Japan, 9–10 November 2017; pp. 90–95.

- Davis, J.; Magrath, S. A survey of cyber ranges and testbeds. In Defence Science and Technology Organisation (DSTO); Cyber Electronic Warfare Division (Australia): Edinburgh, South Australia, Australia, 2013; pp. 1–38.

- Hibler, M.; Ricci, R.; Stoller, L.; Duerig, J.; Guruprasad, S.; Stack, T.; Webb, K.; Lepreau, J. Large-scale virtualization in the emulab network testbed. In USENIX Annual Technical Conference; USENIX: Boston, MA, USA, 2008; pp. 113–128.

- Anderson, D.S.; Hibler, M.; Stoller, L.; Stack, T.; Lepreau, J. Automatic online validation of network configuration in the emulab network testbed. In Proceedings of the International Conference on Autonomic Computing, Dublin, Ireland, 12–16 June 2006; pp. 134–142.

- Vykopal, J.; Ošlejšek, R.; Čeleda, P.; Vizvary, M.; Tovarňák, D. KYPO Cyber Range: Design and Use Cases. In 12th International Conference on Software Technologies (ICSOFT); Springer: Madrid, Spain, 2017; pp. 310–321.

- Braje, T. Advanced tools for cyber ranges. Linc. Lab. J. 2016, 22, 24–32.

- ECSO. Understanding Cyber Ranges: From Hype to Reality. 2020. Available online: https://ecs-org.eu/documents/publications/5fdb291cdf5e7.pdf (accessed on 30 April 2021).

- Armstrong, P. Bloom’s Taxonomy. 2016. Available online: https://cft.vanderbilt.edu/guides-sub-pages/blooms-taxonomy/ (accessed on 1 June 2021).

- Goeke, L.; Quintanar, A.; Beckers, K.; Pape, S. PROTECT – An easy configurable serious game to train employees against social engineering attacks. In Computer Security; Springer: Luxembourg City, Luxembourg, 2019; pp. 156–171.

- Pape, S.; Goeke, L.; Quintanar, A.; Beckers, K. Conceptualization of a CyberSecurity Awareness Quiz. In International Workshop on Model-Driven Simulation and Training Environments for Cybersecurity; Springer: Guilford, UK, 2020; pp. 61–76.

- D5.1: Real Event Logs Statistical Profiling Module and Synthetic Event Log Generator v1. 2020. Available online: https://www.threat-arrest.eu/html/PublicDeliverables/D5.1-Real_event_logs_statistical_profiling_module_and_synthetic_event_log_generator_v1.pdf (accessed on 1 June 2021).

- Cichonski, P.; Millar, T.; Grance, T.; Scarfone, K. Computer security incident handling guide. NIST Spec. Publ. 2012, 800, 1–147.

- Smyrlis, M.; Spanoudakis, G.; Fysarakis, K. Teaching Users New IoT Tricks: A Model-driven Cyber Range for IoT Security Training. IEEE Internet Things (IoT) Mag. 2021, 1–10.

- Tsandekidis, M.; Prevelakis, V. Efficient Monitoring of Library Call Invocation. In Proceedings of the 6th International Conference on Internet of Things: Systems, Management and Security (IOTSMS), Granada, Spain, 22–25 October 2019; pp. 387–392.

- Papadogiannaki, E.; Deyannis, D.; Ioannidis, S. Head (er) Hunter: Fast Intrusion Detection using Packet Metadata Signatures. In Proceedings of the 25th International Workshop on Computer Aided Modeling and Design of Communication Links and Networks (CAMAD), Virtual Conference, Pisa, Italy, 14–16 September 2020; pp. 1–6.

- JMeter, A. Apache JMeter: Glossary. 2021. Available online: https://jmeter.apache.org/usermanual/glossary.html#:~:text=JMeter%20measures%20the%20latency%20from,be%20longer%20than%20one%20byte (accessed on 26 May 2021).

| Content | CTTP Models | Covered Threats/Vulnerabilities and Defence Techniques |
|---|---|---|
| Introduction to Cyber Security | Main lecture and educational material | Introductory knowledge of general security-related issues and the aspects of confidentiality, integrity, availability, authentication, authorisation, non-repudiation, and privacy. |
| Password management | Main lecture and educational material; emulation of a virtual lab for the installation and use of a password manager (i.e., KeePass) | Password management issues, including the problems caused by weak or easily guessed passwords and by password cracking, as well as creating strong passwords, password ageing and update policies, and practical, user-convenient practices for password maintenance. |
| Phishing and social engineering | Main lecture and educational material; emulation of a virtual lab with an email phishing scenario and the use of OpenPGP software (Kleopatra); serious game for targeted social engineering on system administrators with the PROTECT game [37] | The everlasting effects of social engineering with a focus on phishing attacks, as well as email security authentication, integrity, and confidentiality. |
| Malicious software and patching | Main lecture and educational material; emulation of a virtual lab for malicious software analysis and the examination of malware samples with relevant static binary analysis tools (e.g., PEView, EDx, TUBS library analysis [42], etc.) | Software flaws, vulnerabilities, and attacks, as well as relevant protection mechanisms, the need for regular patching, and tools for analysing malicious software and attackers’ tactics. |
| Network monitoring and security | Main lecture and educational material; emulation of a virtual lab for secure network configuration and monitoring of network traffic with the tool Head(er) Hunter [43] | Networking manipulation and attacks, as well as secure configuration, administration, and operation, with a focus on continuous traffic monitoring and classification. |
| Digital Forensics (CTF) | Main lecture and educational material; emulation of a virtual lab for the examination of a performed attack on the server (i.e., data breach, crypto-mining, or ransomware, based on the activated CTTP model) and the use of forensics software (e.g., glogg, volatility, Wireshark, Nmap, etc.) | Advanced attacker tactics and familiarisation with actual malware and emulated attacks, including tools and methodologies to conduct a digital forensics analysis as a first responder to security-related incidents. |
| Attacks on the backend system (Red/Blue team scenario) | Main lecture and educational material; emulation of a virtual lab with a vulnerable server that the blue team has to defend (the populated vulnerabilities are defined in the selected CTTP model and are correlated with the defined KPIs); the red team role can either be performed by the trainees via a similar emulated setting or be completely simulated, triggered automatically or activated manually by the trainer | Advanced skills and hands-on experience as the defender and the attacker of a vulnerable system, including tactics and tools to discover the underlying threats and fix them or exploit them, respectively. The red team training involves ethical hacking perspectives; the main benefit for the trainee is to better understand the impact of attacks on an insecure or poorly safeguarded system and to learn how to block or mitigate them in the real working environment. |

| No. of vCPUs | RAM | Storage (GB) | Bandwidth (Mbps) |
|---|---|---|---|
| 8 | 32 | 120 | 50 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Smyrlis, M.; Somarakis, I.; Spanoudakis, G.; Hatzivasilis, G.; Ioannidis, S. CYRA: A Model-Driven CYber Range Assurance Platform. Appl. Sci. 2021, 11, 5165. https://doi.org/10.3390/app11115165