1. Introduction

Cost-effectiveness, ease of access, and mission versatility are the primary qualities of UAVs that attract many aerospace and related sectors. Hence, UAVs are being integrated into tasks such as package delivery, first aid, law enforcement, disaster management, infrastructure inspection, agricultural mechanization, rescue, military intelligence, and many more. As low-altitude aerial vehicles, however, UAVs often encounter obstacles such as trees, mountains, high-rise buildings, and electric poles during their missions. Therefore, these aerial vehicles should be equipped with sensors to perceive the environment around them and avoid potential dangers.

To leverage the use of UAVs in cluttered environments, studies have been conducted on the types of sensors and the ways of integrating them for autonomous navigation. Vehicle localization is one of the pillars of autonomous navigation. In open-air space, the Global Positioning System (GPS) is often used for UAV localization. However, GPS-based UAV localization in cluttered environments is unreliable. In such environments, sensors onboard the UAV are used for localization as well as collision avoidance. Ivan Konovalenko et al. [1] fused inputs from a visual camera and an Inertial Navigation System (INS) to localize a UAV. Based on computer simulation, the team analyzed various approaches to vision-based UAV position estimation. Jinling Wang et al. [2] combined inputs from GPS, INS, and vision sensors to autonomously navigate UAVs. In their report, the inclusion of GPS input reduces vision-based UAV localization errors and hence enhances the accuracy of navigation. Jesus Garcia et al. [3] presented a methodology for assessing the performance of sensor fusion for autonomous flight of UAVs. Their methodology systematically analyzes the efficiency of input data for accurate navigation of UAVs.

Computer vision technology has evolved over the years to a stage that enables not only UAV localization but also obstacle detection and avoidance. This has been made possible by the advent of high-performance computers able to process data and perform complex calculations at high speeds. With the promising progress in computer vision technology, many vision-based navigation algorithms have been developed. A comprehensive review of computer vision algorithms and their implementations for autonomous navigation of UAVs was presented by Abdulla Al-Kaff [4]. Lidia et al. [5] provided a detailed analysis of the implementation of computer vision technologies for navigation, control, tracking, and obstacle avoidance of UAVs. Wagoner et al. [6] also explored various computer vision algorithms and their capabilities to detect and track a moving object such as a UAV in flight.

Alongside computer vision technology, Artificial Intelligence (AI) is being implemented in UAV navigation systems to give them human-like perception. The idea is to train the computer that is either onboard a UAV or integrated with a ground-based command system so that it takes control of UAV navigation with little to no human intervention. Su Yeon Choi and Dowan Cha [7] reviewed the historical development of AI and its implementation in UAVs, with a particular focus on UAV control strategies and object recognition for autonomous flight. They also considered machine-learning-based UAV path planning and navigation methods.

The integration of AI and computer vision technology is of considerable importance in civilian applications of UAVs. Many challenging tasks such as wildlife monitoring, disaster management, and search and rescue are being addressed by UAVs equipped with AI and computer vision technology. Luis F. Gonzalez et al. [8] reported how AI- and computer-vision-enabled UAVs have solved the challenges of wildlife monitoring. The study reported by Christos and Theocharis [9] reflects the importance of UAVs equipped with AI and computer vision for autonomous monitoring of disaster-stricken areas. Eleftherios et al. [10] combined AI with a computer vision system onboard a UAV to enable real-time human detection during search and rescue operations.

The integration of these two key technologies, AI and computer vision, gives UAVs awareness of their environment. This helps the UAVs to plan collision-free paths. For autonomous navigation, a UAV must have either a predetermined path or the capacity to plan a path in real time. A mission with a predetermined route requires fewer sensors than a mission with real-time path planning. The challenges of real-time path planning are the complexity of integrating multiple sensors, the synchronization of input data, and the resulting computational burden. Valenti et al. [11] developed techniques that enable a UAV to localize itself and navigate autonomously in a GPS-denied environment. In their report, vision data from stereo cameras onboard the UAV were used for UAV localization and to build a 3D map of the surroundings. Based on this information, an improved path-planning algorithm was implemented to navigate the UAV autonomously along the shortest collision-free path to the goal.

The resource-intensive computational burden on the companion computer onboard a UAV is a persistent obstacle to real-time path planning. The companion computer has to deal with visual data processing for UAV localization, obstacle detection, and path planning. A comprehensive literature review on vision-based UAV localization, obstacle avoidance, and path planning was reported by Yuncheng et al. [12]. Their study reflects the challenges of achieving real-time data processing for safe navigation of the UAV. They also reported the challenges that intensive computation and the high storage consumption of a 3D map of the surroundings pose for autonomous navigation. Yan et al. [13] developed a computer-simulation-based deep reinforcement learning technique for real-time path planning of a UAV in dynamic environments. Although this is a promising step towards real-time path planning in dynamic environments, the assumption of predetermined global situational data and the absence of a real flight test that verifies the efficiency of the technique may limit its appeal.

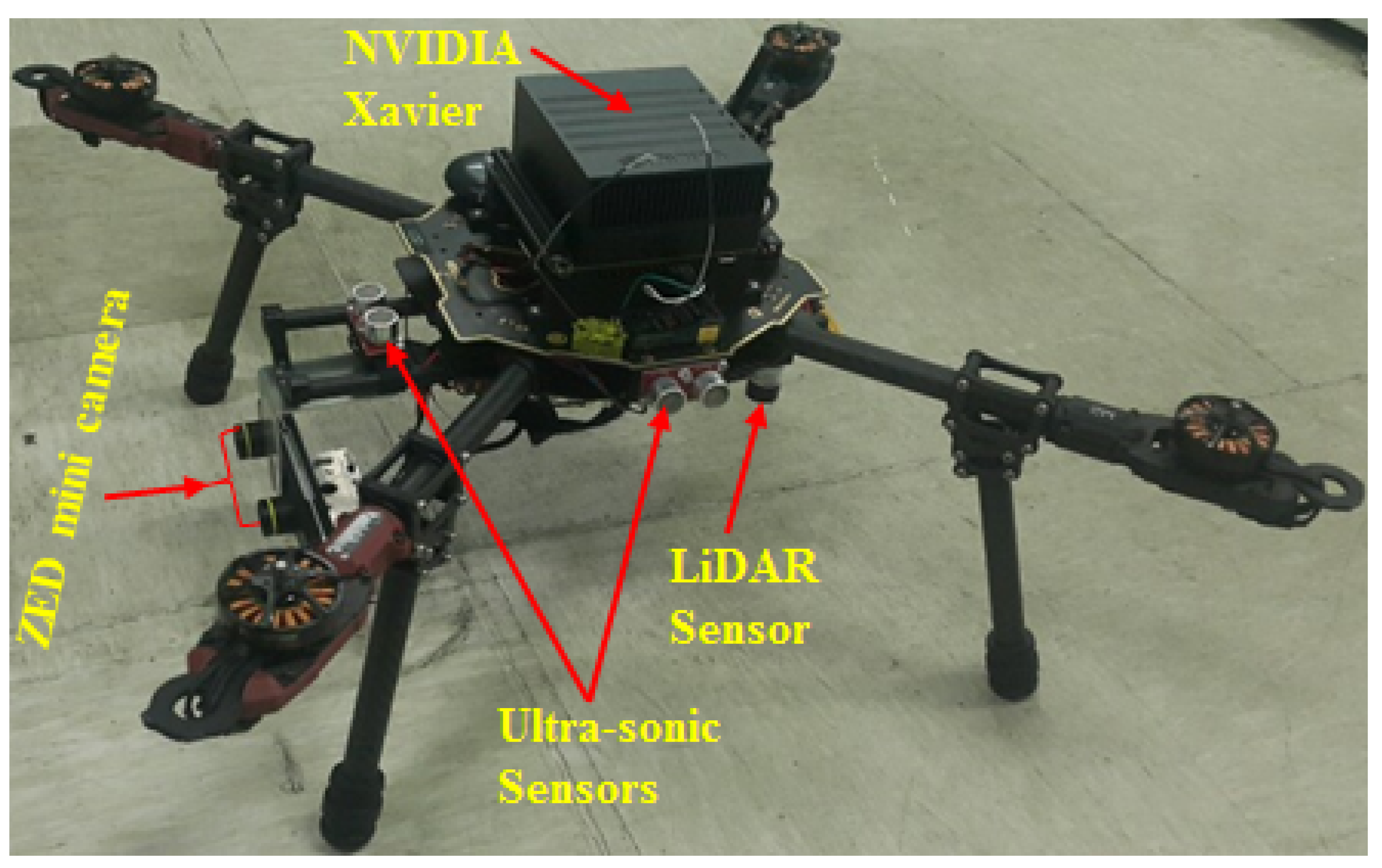

To ease the computational burden on a companion computer dedicated to UAV localization, obstacle detection, and 3D path planning, we propose integrating the fastest available object detection algorithm with a light-weight 3D path planner that relies on a few obstacle-free points to generate a 3D path. The proposed 3D path planner is based on AI acquired through YOLO (You Only Look Once), the object detection algorithm selected for its speed.

The study presented in this report is organized into sections. In Section 2, the problem to be addressed in this study is stated and the implemented methodology is explained. In Section 3, the overall descriptions of the implemented hardware and software components and their configurations are given. The machine learning approach for object detection is explained in Section 4. Then, the commonly known 3D path planning algorithms are discussed with their advantages and disadvantages in Section 5. The developed real-time 3D path planner is detailed in this section, followed by its performance tests in Section 6. Results and discussion are given in the final Section 7.

2. Problem Statement

The challenge in autonomous navigation of a UAV in an urban environment is recognizing and localizing obstacles at the right time and continuously adjusting the path of the UAV so that it can avoid the obstacles and navigate to the destination safely. This requires integrating effective object detection and path planning algorithms that run on a companion computer onboard the UAV.

Most of the widely used object detection algorithms are based on scanning the entire environment and discretizing the scanned region to create a dense mesh of grid points from which objects are detected. This process requires a companion computer with high storage capacity and intensive computational power. Moreover, the well-known path-planning algorithms either randomly sample or exhaustively explore consecutive obstacle-free grid points to generate an optimal path towards the destination. This places an additional computational burden on the companion computer and compromises the timeliness of the navigation commands. Liang et al. [14] conducted a comprehensive review of the most popular 3D path planning algorithms. Their review gives a detailed analysis of the advantages and disadvantages of these commonly used algorithms. They reported that despite the intensive application of these algorithms, the problem of real-time path planning in a cluttered environment remains unsolved.

Dai et al. [15] proposed a light-weight CNN-based network structure for both object detection and safe autonomous navigation of a UAV in indoor/outdoor environments. However, the whole process of object detection and UAV path planning was performed on a ground-based computer, and communication with the UAV was through a Wi-Fi connection. This is a serious drawback for the safe navigation of the UAV in indoor environments, where Wi-Fi connection failure is likely. Moreover, the Wi-Fi data transfer rate may delay the navigation commands sent to the UAV. In an attempt to remove the dependency of the UAV on ground-based commands, Juan et al. [16] proposed a UAV framework for autonomous navigation in a cluttered indoor environment based on a companion computer onboard the UAV. The performance of this framework was validated through hardware-in-the-loop simulation, and it appears promising as a way to end reliance on ground-based navigation commands. However, an occupancy map of the cluttered environment in which the UAV navigated was pre-loaded on the companion computer. This undermines the applicability of the framework in dynamic environments.

To avoid computational burden on the companion computer, Antonio et al. [17] applied a data-driven approach, where data about the cluttered environment must be collected prior to the UAV mission. As proposed in their work, DroNet makes use of the collected data and safely navigates a UAV in the streets of a city. However, this approach, again, has limitations when it comes to dynamic or unknown environments.

This study, therefore, aims to address the computational burden imposed on the companion computer onboard a UAV by integrating the fastest available object detection algorithm with the proposed light-weight real-time 3D path planner. Such an approach bypasses the challenges of dynamic or unknown environments. In the preliminary performance test, we assumed a limited number of objects (pedestrians, windows, electric poles, tunnels, trees, and barely visible nets) as plausible obstacles that the UAV may encounter in a disaster monitoring scenario. Once the proposed 3D path planner is validated in complete real-flight tests, further objects will be included in the machine learning process.

Methodology

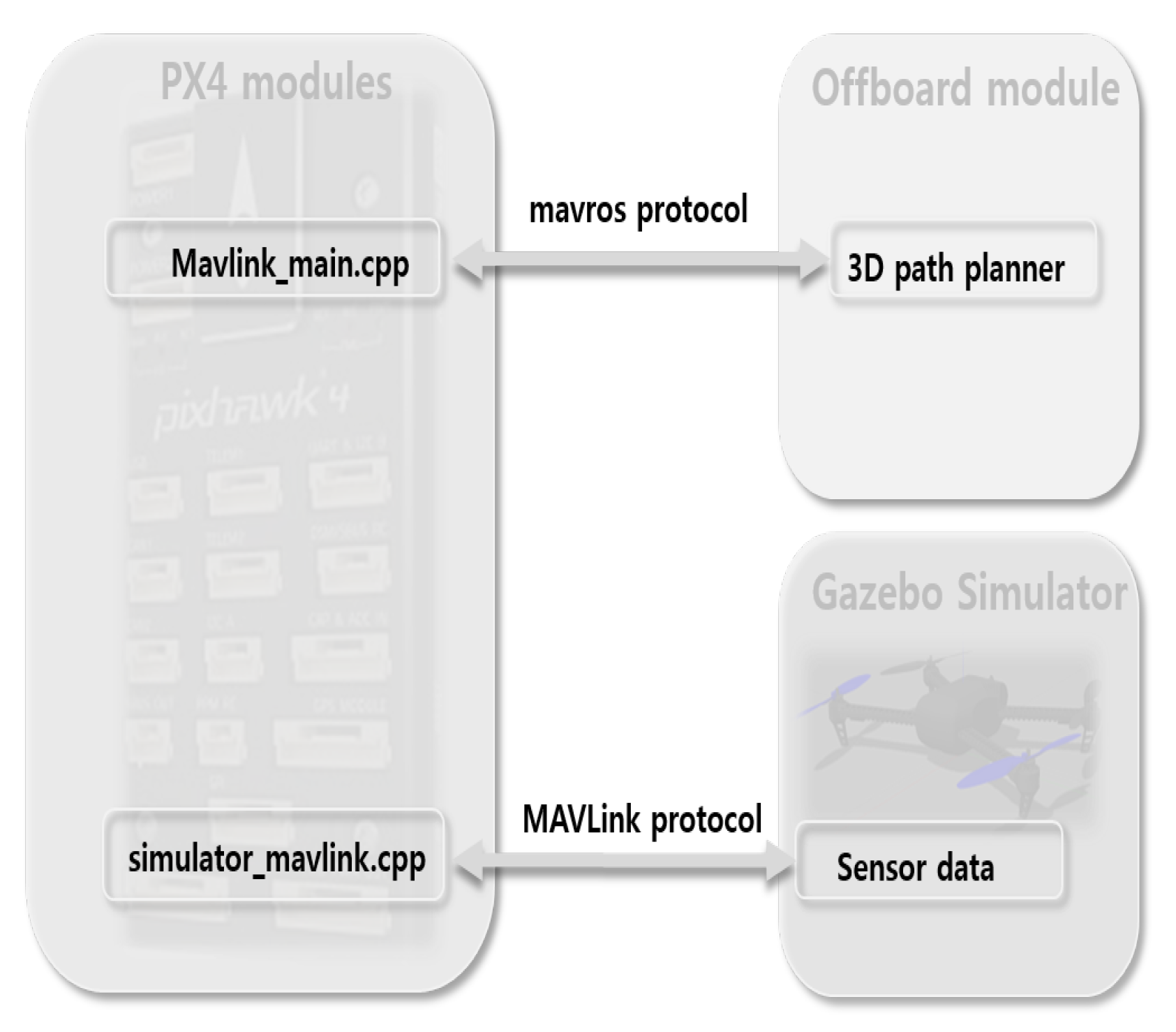

To enable a companion computer onboard a UAV to perform simultaneous object detection and 3D path planning in real time, it is essential to integrate the fastest object detection algorithm with a 3D path planner that imposes less computational burden. YOLO, as explained in Section 4.1, is selected as the fastest object detection algorithm. In addition to object detection, this algorithm also localizes the object(s). The proposed 3D path planner relies on the relative locations of the detected objects to calculate a collision-free path for the UAV. Although the proposed 3D path planner resembles the A* path planning algorithm in implementing a heuristic function for cost minimization, it avoids the exhaustive search for consecutive collision-free nodes and the storage method of A*. Unlike A*, the proposed 3D path planner maps the current location of the UAV to a few nodes between consecutive obstacles. These few nodes are determined based on the size of the UAV and the gap between consecutive obstacles, as explained in Section 5.1.1 and Section 5.1.2. A Euclidean function is used as the heuristic function in this 3D path planner.

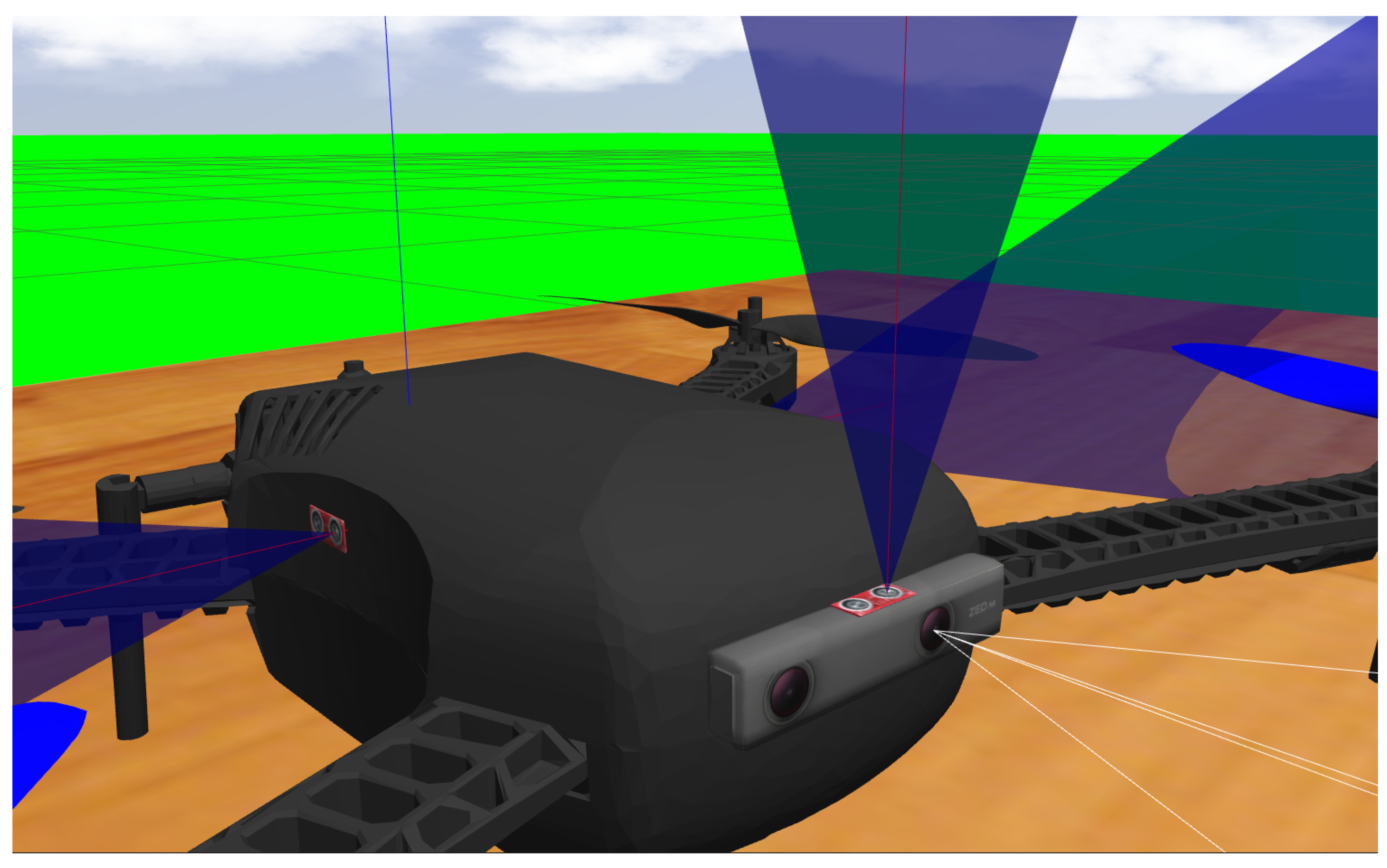

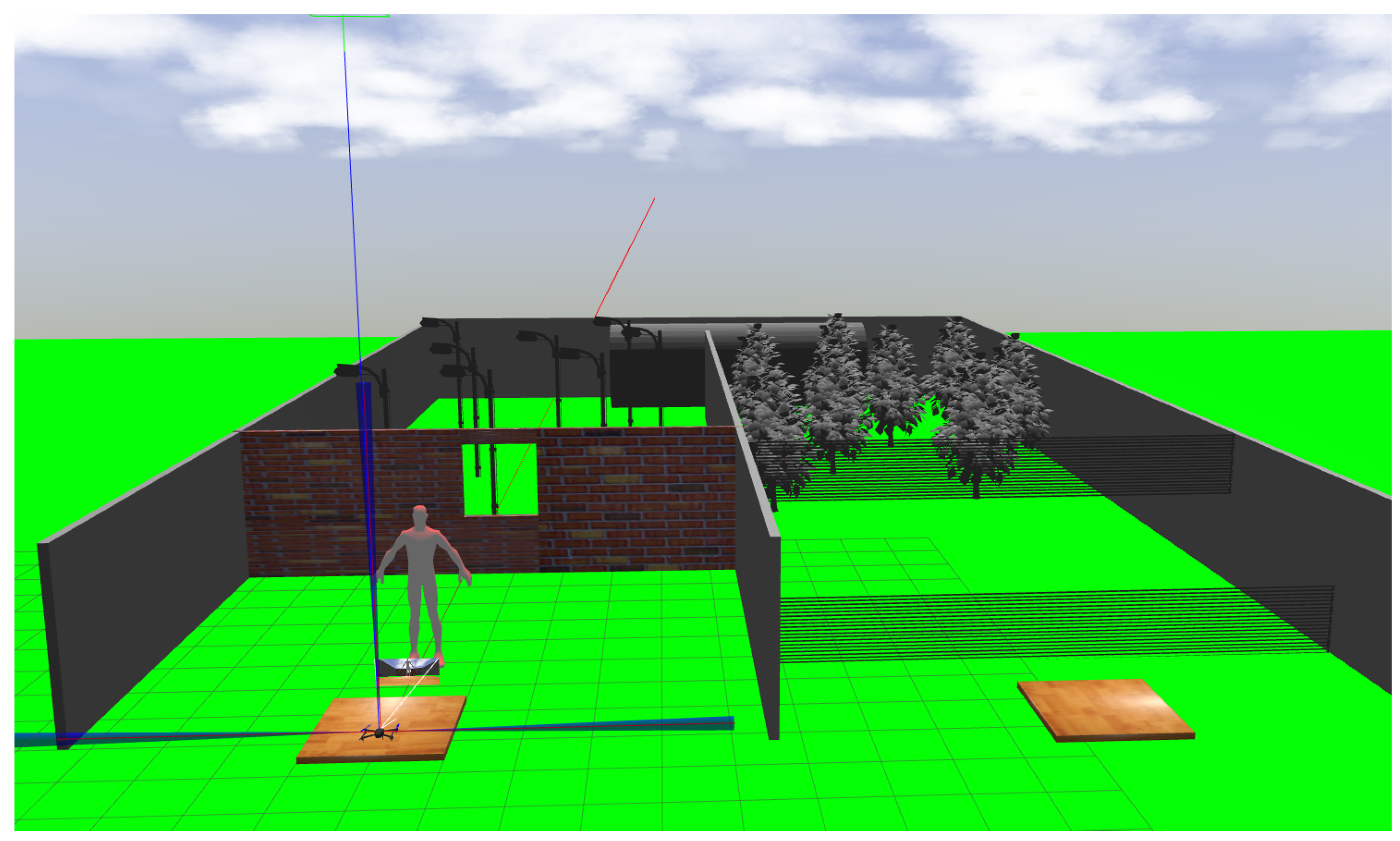

Prior to a real flight test, the performance of the proposed 3D path planner must be checked in a simulated environment. Software tools are essential for this performance test. One tool specifically designed for such a task is the Gazebo 3D dynamic environment simulator. This software was primarily designed to evaluate algorithms for robots [18] and provides realistic rendering of the environment in which the robot navigates. Moreover, it offers various types of simulated sensors. We designed a simulated cluttered 3D environment in Gazebo and used it to test the performance of the proposed 3D path planner throughout its development.

5. Three-Dimensional Path Planning Algorithms

The main challenge in autonomous navigation of UAVs is planning an obstacle-free route from the start to the destination. Encountering obstacles is likely, especially in missions such as law enforcement, package delivery, and first aid in urban areas. Most path planning algorithms for UAVs are derived from pre-existing algorithms designed for ground robots. These algorithms are often 2D and need to be extended to 3D for aerial vehicles. Design complexity and the demand for high-performance computers onboard the UAVs are the challenges incurred by 3D path planners. Obstacle-free 3D path planning imposes an intensive computational burden that often limits the maximum cruising capability of the UAV. This burden applies in both free and cluttered environments as long as image processing has to occur.

Commonly known 3D path planning algorithms are A* with its variants, Rapidly-exploring Random Tree (RRT) with its variants, Probabilistic RoadMaps (PRM), Artificial Potential Field (APF), and genetic or evolutionary algorithms. These algorithms can be categorized into two groups: sampling-based and node/grid-based algorithms. Sampling-based algorithms connect randomly sampled points (a subset of all points) all the way from the start to the goal, thereby creating random graphs from which the graph with the shortest path length is selected. These algorithms include RRT, PRM, and APF.

Node/grid-based algorithms, unlike sampling-based algorithms, exhaustively explore consecutive nodes. These algorithms include A* and its variants. In searching for an obstacle-free path, the algorithm takes in an image of the environment and discretizes it into grid cells that include the current (start) location of the UAV and the goal location. The algorithm has two functions to prioritize the cells to be visited. These two functions are the cost function, which calculates the distance from the current cell to the next cell, and the heuristic function, which calculates the distance from the next cell to the cell that contains the goal. With the objective of minimizing the sum of these two functions, the cells to be visited are heuristically prioritized. In the case of 3D search, the cost function calculates distances from the current cell to all 26 neighboring cells, and the heuristic function calculates the distance from each of the 26 cells to the cell that contains the goal. In a cluttered environment with complex occlusion, highly dense grid cells are required, which in turn increases the computational burden, and thus the selected path may not be optimal.
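To make the cost of the grid-based search concrete, the following is a minimal textbook A* sketch on a 3D occupancy grid with 26-connected neighbors and a Euclidean heuristic. It is not the implementation evaluated in this study; the function name `astar_3d`, the `blocked` set representation, and the grid `size` argument are assumptions chosen for illustration. As in standard A*, the cost term is accumulated from the start cell.

```python
import heapq
import itertools
import math

# The 26 neighbor offsets of a cell in a 3D grid: every combination of
# -1, 0, +1 along x, y, z except the cell itself.
NEIGHBORS = [d for d in itertools.product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]


def astar_3d(start, goal, blocked, size):
    """Return the list of cells from start to goal, or None if unreachable.

    blocked: set of occupied (x, y, z) cells (hypothetical representation).
    size:    (nx, ny, nz) extent of the grid.
    """
    open_heap = [(math.dist(start, goal), 0.0, start)]  # (f = g + h, g, cell)
    g_cost = {start: 0.0}
    parent = {start: None}
    closed = set()
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue
        closed.add(cell)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        for d in NEIGHBORS:
            nxt = (cell[0] + d[0], cell[1] + d[1], cell[2] + d[2])
            if not all(0 <= c < n for c, n in zip(nxt, size)):
                continue  # outside the grid
            if nxt in blocked or nxt in closed:
                continue
            new_g = g + math.dist(cell, nxt)  # cost function: step distance
            if new_g < g_cost.get(nxt, math.inf):
                g_cost[nxt] = new_g
                parent[nxt] = cell
                # heuristic function: straight-line distance to the goal cell
                heapq.heappush(open_heap, (new_g + math.dist(nxt, goal), new_g, nxt))
    return None
```

Every expanded cell evaluates up to 26 neighbors, so the work grows quickly with grid density, which is the burden the paragraph above describes.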

5.1. Machine Learning-Based 3D Path Planner

Training an onboard computer to quickly identify objects and avoid collision with them in the environment in which the UAV is set to navigate can be seen as a paradigm shift, as it mirrors the mechanism by which a human being avoids collisions. The computational intelligence of a human brain reflects the degree to which it has been trained, as does the artificial intelligence of the computer onboard a UAV. This is why intensive training of the onboard computer is essential.

Apart from the capabilities of ensuring the presence of objects and their relative locations from the UAV, the companion computer may be required to know the type of objects it detected. The YOLO object detection algorithm installed on the companion computer has such a capability. Strategies to avoid collision with an object may depend on the type of the object. For instance, the avoidance mechanism for a window (open obstacle) is different from the mechanism for a tree (closed obstacle). Our 3D path planner includes those capabilities, as explained below.

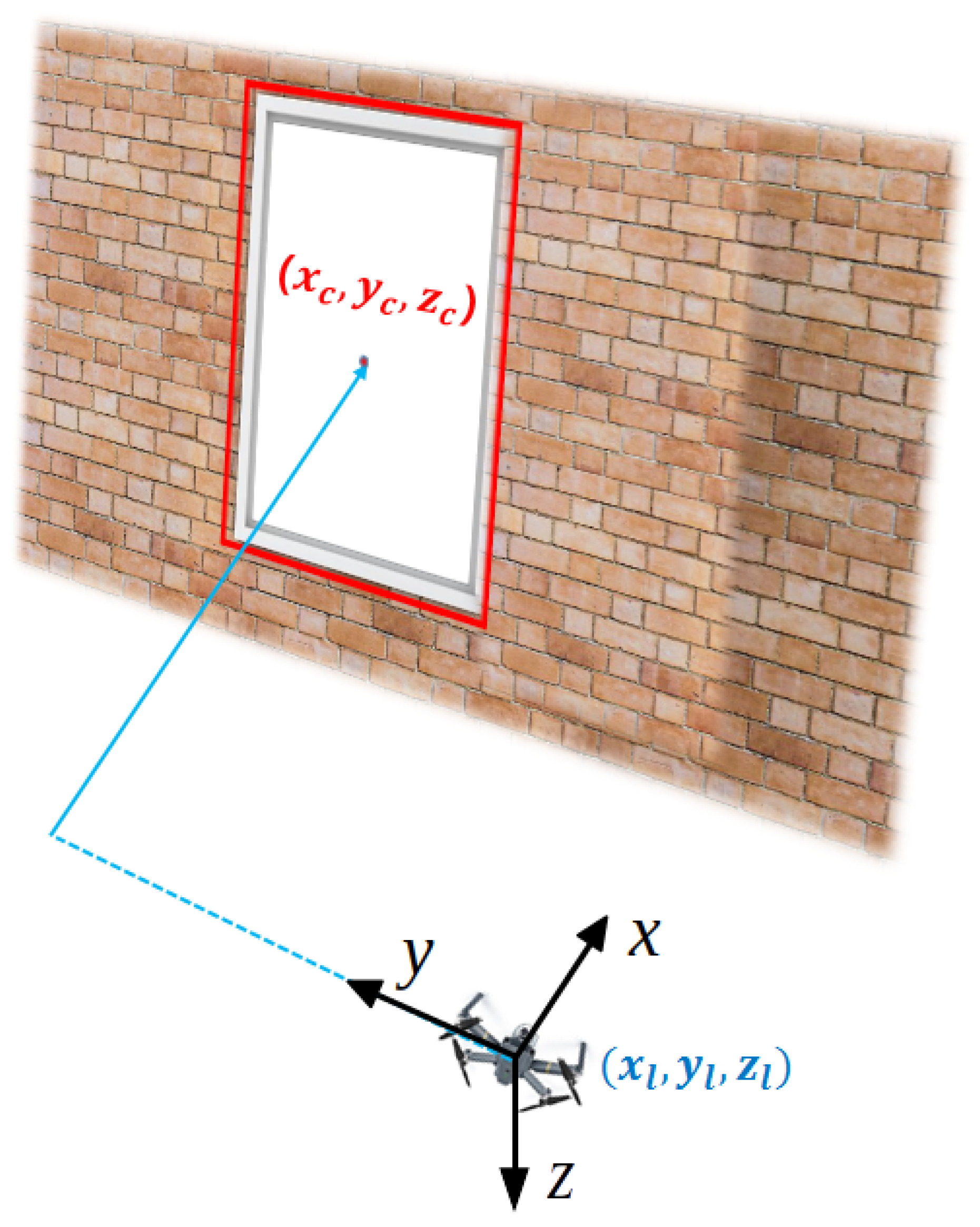

5.1.1. Open Obstacles

In this type of obstacle, the UAV may have no option but to pass through the opening, as when the mission is to enter or exit a closed room through an open window. Missions such as in-house first aid or disaster monitoring may encounter such a scenario. In this case, the algorithm determines the relative position of the UAV with respect to the center of the bounding box around the obstacle. The center of the bounding box, as shown in Figure 4, has coordinates ( ) with respect to the ZED mini stereo camera frame, whose origin is located at the center of the left camera.

The x, y, and z axes of this frame point forward, right-to-left, and upward, respectively. Therefore, x represents the depth of the detected object (e.g., the depth of the window). The depth information is directly extracted from the ZED mini camera, whereas y and z are derived from the (U,V) coordinate values through coordinate transformation. Information obtained with respect to the image frame, including Equations (1) and (2), is transformed to the camera frame.
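As an illustration of this kind of transformation, the sketch below back-projects a bounding-box center pixel (U,V) and its depth into a frame with x forward, y pointing left, and z pointing up, using the standard pinhole camera model. It is not a reproduction of Equations (1) and (2); the intrinsic parameters `fx`, `fy`, `cx`, `cy`, the class `Intrinsics`, and the function name are hypothetical, and the depth is assumed to be measured along the optical axis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical values supplied by the caller)."""
    fx: float
    fy: float
    cx: float
    cy: float


def pixel_to_camera_frame(u, v, depth, intr):
    """Map an image point and its depth to (x, y, z) in the camera frame.

    Image axes: u grows to the right, v grows downward.
    Camera frame assumed here: x forward, y right-to-left, z upward,
    so both lateral components change sign relative to the image axes.
    """
    x = depth
    y = -(u - intr.cx) * depth / intr.fx
    z = -(v - intr.cy) * depth / intr.fy
    return (x, y, z)
```

For example, a pixel to the right of the principal point yields a negative y, because y increases from right to left in this frame.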

The UAV’s local position ( ) is acquired from the GPS embedded in the Pixhawk 4 autopilot, the LiDAR, and the ZED mini stereo camera. Before the UAV tries to pass through the window, it has to align itself with a vector normal to the plane of the window through appropriate attitude and altitude changes. In the figure, the setpoint ( ) is sent by the 3D path planner to the autopilot to command the UAV to adjust itself before advancing forward. The y- and z-axis setpoint values are obtained as follows:

While the UAV is responding to the command, the ultrasonic sensors mounted on the sides of the UAV check whether there are objects in the way. Once alignment is done, the UAV advances through the window with the setpoint ( ), whose forward component is the relative depth of the bounding box plus a clearance.

The clearance is the minimum distance of the UAV behind the window that ensures the UAV has completely passed through the window. Moreover, to confirm the passage of the UAV through the window, the readings from the ultrasonic sensors mounted on the left and right sides of the UAV are considered. The same method is used in similar cases, such as passing through tunnels or holes.
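The two-stage maneuver described above (align first, then advance) can be sketched as follows. This is an illustrative sketch only: it assumes the UAV's local frame is parallel to the camera frame (x forward, y left, z up) while the UAV faces the window, and the function names and argument layout are hypothetical rather than taken from the planner itself.

```python
def alignment_setpoint(uav_pos, window_rel):
    """Setpoint that centers the UAV on the window without moving forward.

    uav_pos:    (x, y, z) local position of the UAV, in meters.
    window_rel: (x, y, z) of the bounding-box center relative to the camera.
    Only the lateral (y) and vertical (z) offsets are applied.
    """
    x, y, z = uav_pos
    _, dy, dz = window_rel
    return (x, y + dy, z + dz)


def pass_through_setpoint(uav_pos, window_depth, clearance):
    """Setpoint behind the window: relative depth plus a clearance margin."""
    x, y, z = uav_pos
    return (x + window_depth + clearance, y, z)
```

In use, the second function would be called only after the first setpoint has been reached and the side sensors report no obstruction.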

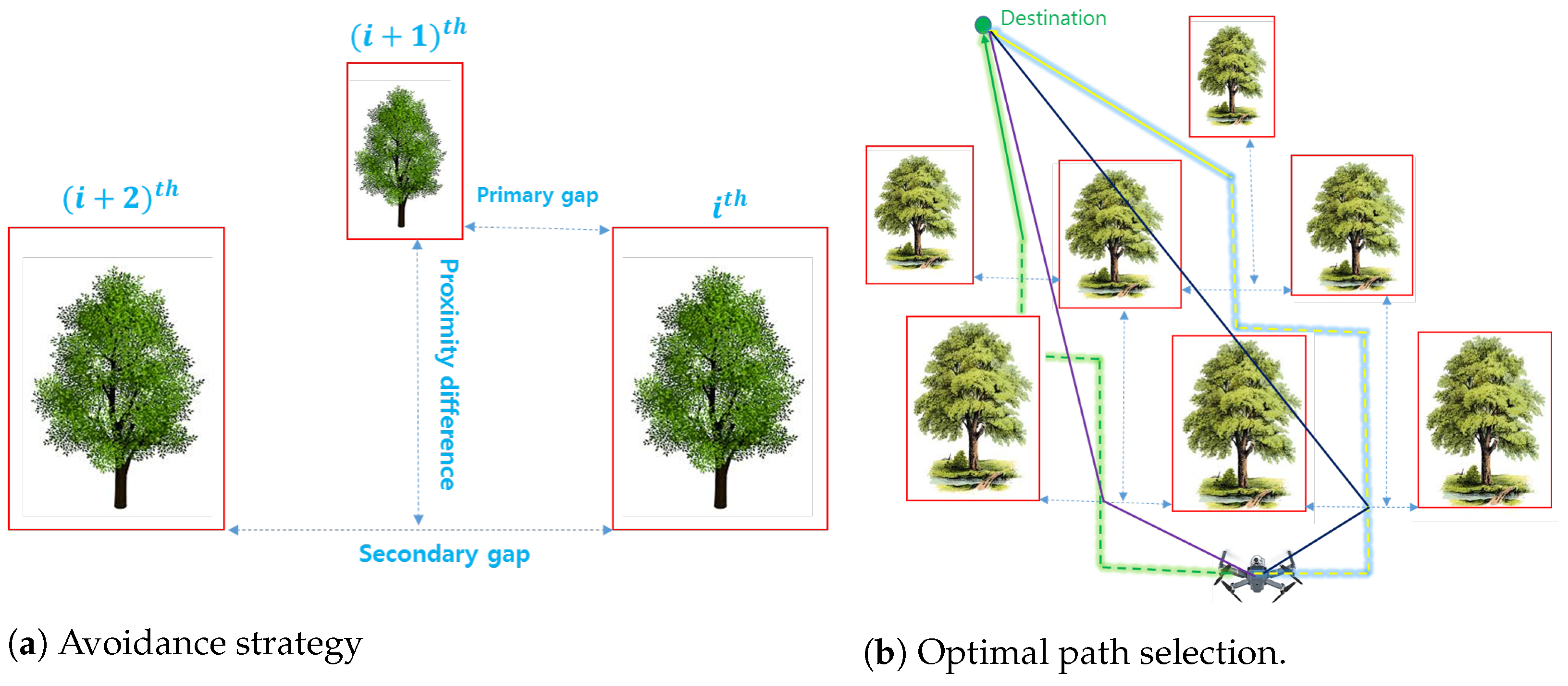

5.1.2. Closed Obstacles

If the obstacle is closed, our path planner considers the pass-by option with a minimum side clearance from the obstacle. The 3D path planning algorithm takes in the bounding box information of all detected objects and assigns an identity index to each of them based on the locations of their centers along the y-axis. All information about the bounding boxes is expressed with respect to the camera frame onboard the UAV. As shown in Figure 5a, the index value increases along the increasing y-axis of the ZED mini stereo camera (in this case, from right to left).

There are three conditions to be considered to determine the next setpoint for the UAV. These are searching for (i) wide primary gaps: gaps between consecutive obstacles; (ii) narrow primary gaps but with proximity difference: depth difference between consecutive obstacles; and (iii) narrow primary gaps with small or no proximity difference.

Based on Figure 5a, the algorithm calculates the primary gap between two consecutive obstacles and a secondary gap. The secondary gap matters when the primary gap is narrow (less than twice the UAV width) but the proximity difference is more than twice the UAV length: the UAV may then be able to advance forward, but it should first check whether the secondary gap is wide enough to let it pass in between. The gaps and proximity differences are calculated as follows:

The pseudo-algorithm of our 3D path planner in the presence of multiple detected obstacles, as shown in Figure 5b, is given below.

1. index the bounding boxes of the obstacles based on the y-axis values of their centers. The box with the smallest y-axis value is indexed first;
2. calculate the required quantities for each bounding box;
3. calculate the primary gap between consecutive bounding boxes;
4. if the gap is greater than or equal to twice the UAV width:
   - calculate the midpoint of the gap;
   - calculate distances from the current location of the UAV to the midpoint and from the midpoint to the goal point. Save the sum of these two distances as path-length;
5. else if the primary gap is smaller, calculate the proximity difference of the two consecutive bounding boxes:
   - if the proximity difference is greater than or equal to twice the UAV length, calculate the secondary gap;
   - if the secondary gap is greater than or equal to twice the UAV width, check the following conditions:
     - if the obstacle is closer than the , then set ( ) as a potential setpoint;
     - else, set ( ) as a potential setpoint;
   - calculate distances from the current location of the UAV to the potential setpoint and from the potential setpoint to the goal point. Save the sum of these two distances as path-length;
6. apply the above steps for the remaining bounding boxes;
7. compare the path-lengths and set the setpoint that leads to the minimum path length as the next setpoint for the UAV;
8. else if the secondary gap is less than twice the UAV width, hover at the current altitude and yaw to search for any possible path by applying the above procedure;
9. if no path is discovered, land the UAV.
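The wide-primary-gap branch of the steps above, together with the final minimum-path-length selection, can be sketched as follows. This is a simplified illustration, not the planner itself: the narrow-gap branches are omitted, the gap is assumed to be measured between the facing edges of consecutive boxes, the candidate setpoint is assumed to sit at the mean depth of the two obstacles and at the current altitude, and the names `Box`, `wide_gap_candidates`, and `next_setpoint` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Bounding box of a detected obstacle in the camera frame (meters)."""
    x: float      # depth (forward)
    y: float      # lateral center (increasing from right to left)
    z: float      # vertical center
    width: float  # lateral extent


def wide_gap_candidates(boxes, uav_width):
    """Midpoints of all primary gaps at least twice the UAV width."""
    ordered = sorted(boxes, key=lambda b: b.y)  # index boxes by y of center
    candidates = []
    for low, high in zip(ordered, ordered[1:]):
        low_edge = low.y + low.width / 2.0     # facing edge of lower-y box
        high_edge = high.y - high.width / 2.0  # facing edge of higher-y box
        if high_edge - low_edge >= 2.0 * uav_width:
            candidates.append(
                ((low.x + high.x) / 2.0, (low_edge + high_edge) / 2.0, 0.0)
            )
    return candidates


def next_setpoint(boxes, uav_width, goal, uav=(0.0, 0.0, 0.0)):
    """Pick the candidate minimizing dist(uav, c) + dist(c, goal), or None."""
    candidates = wide_gap_candidates(boxes, uav_width)
    if not candidates:
        return None  # caller would fall back to the narrow-gap, hover, or land steps
    return min(candidates, key=lambda c: math.dist(uav, c) + math.dist(c, goal))
```

Because only one candidate per gap is evaluated, the number of distance computations scales with the number of detected obstacles rather than with the number of grid cells.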

7. Results and Discussion

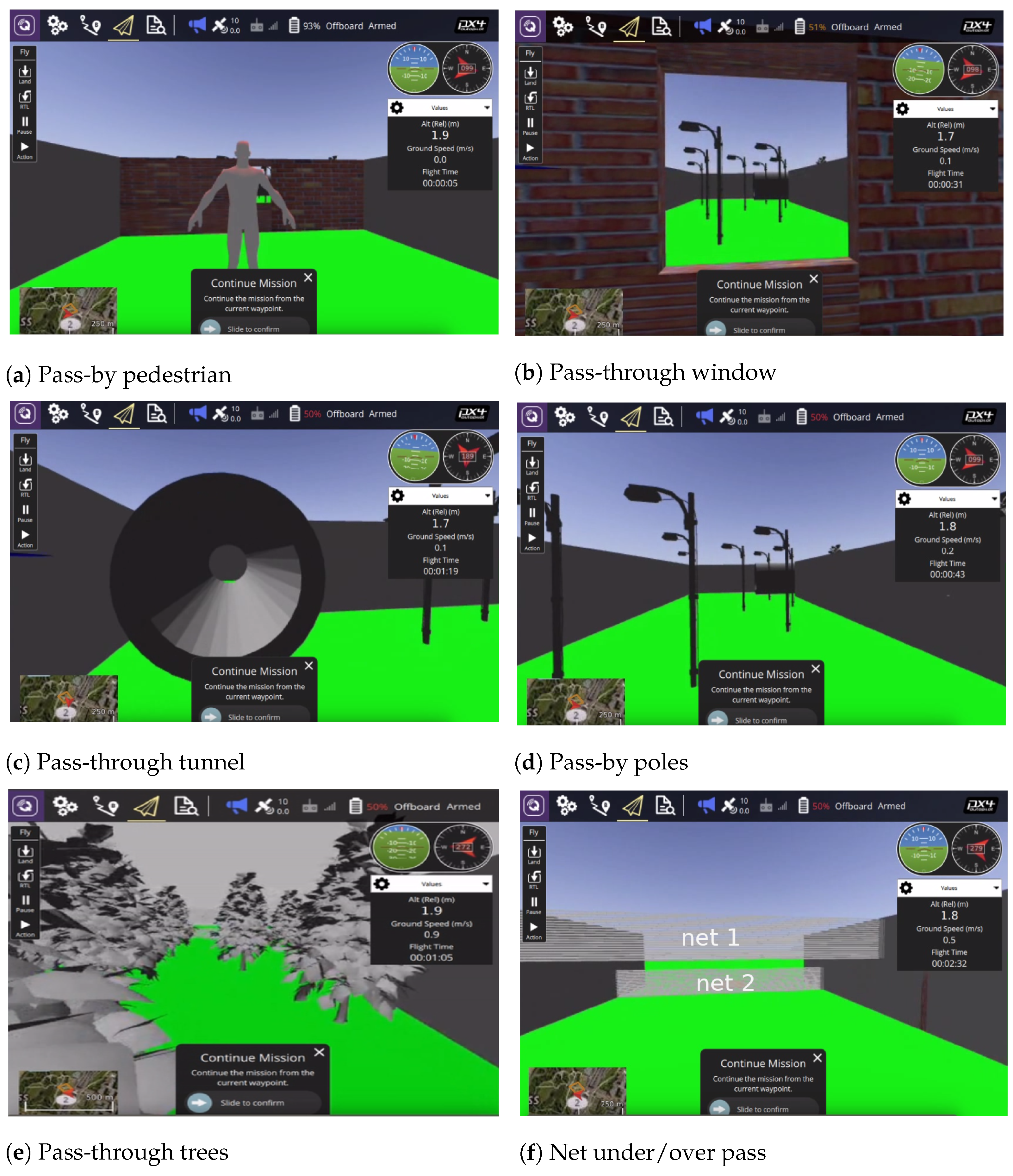

The developed 3D path planner was validated through both SITL and preliminary real flight tests. The Gazebo 3D simulation environment was used extensively to develop and validate our 3D path planner prior to its upload to the Pixhawk autopilot. The Gazebo environment shown in Figure 6 was set up with obstacles such as a human, a window, poles, a tunnel, trees, and nets. These obstacles represent the plausible encounters that the drone may face during missions such as package delivery, disaster monitoring, law enforcement, and first aid. For a complete navigation from the left section to the right section of the environment, the drone has to avoid collision with any of the mentioned obstacles and land safely at the landing pad.

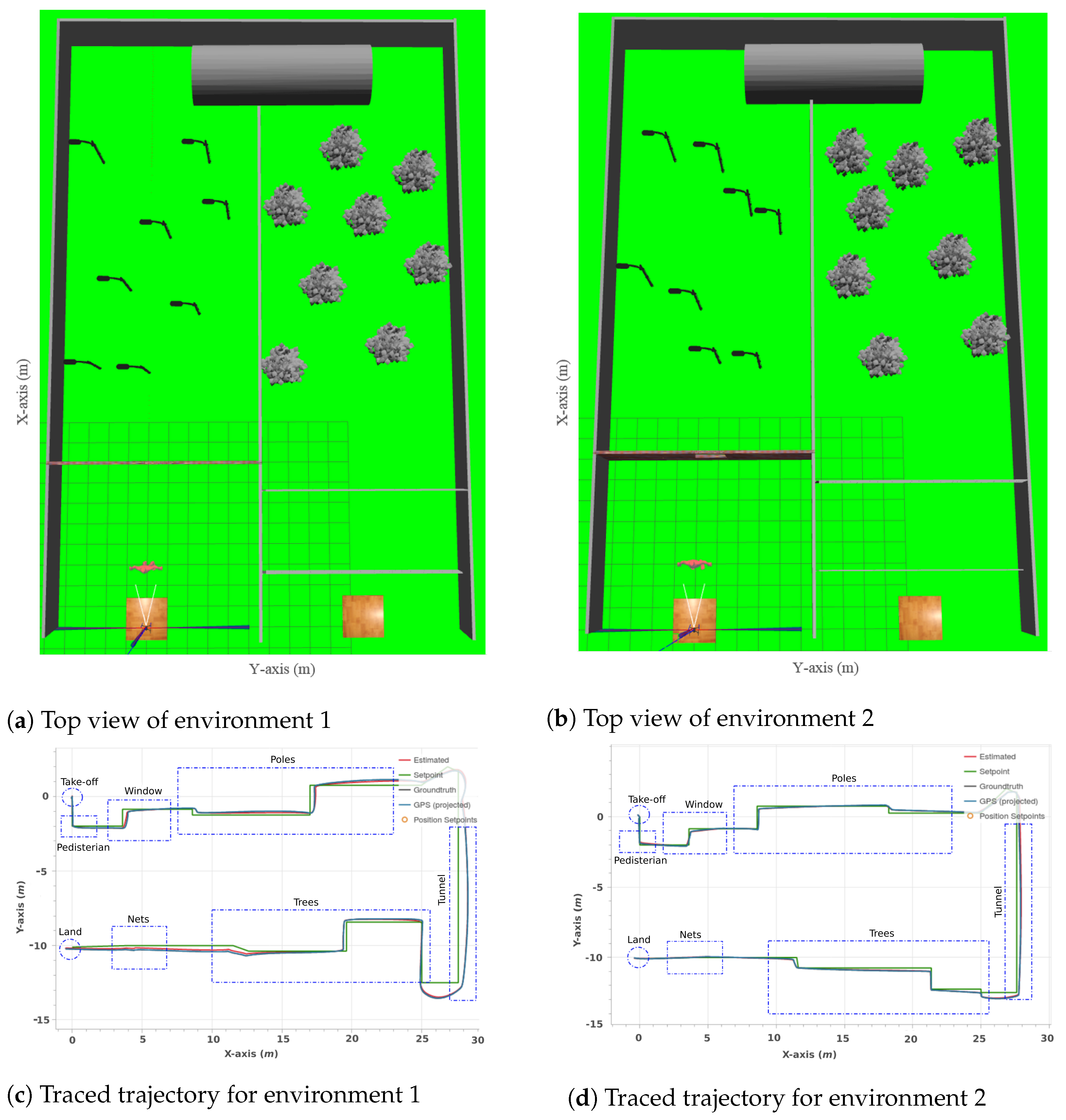

Rigorous simulation tests were carried out; the two randomly arranged environments shown in Figure 7 are examples of the test environments. The path followed by the quadcopter in the environment in Figure 7a is shown in Figure 7c. Similarly, the path followed in the environment in Figure 7b is shown in Figure 7d. As can be seen in these figures, the quadcopter followed two different trajectories in response to the two different arrangements of the obstacles in the environments. Moreover, the setpoints sent by the path planner and the estimated locations of the quadcopter overlap throughout the trajectories. This overlap validates the effectiveness of the path planner in autonomously navigating the quadcopter in a cluttered and GPS-denied environment.

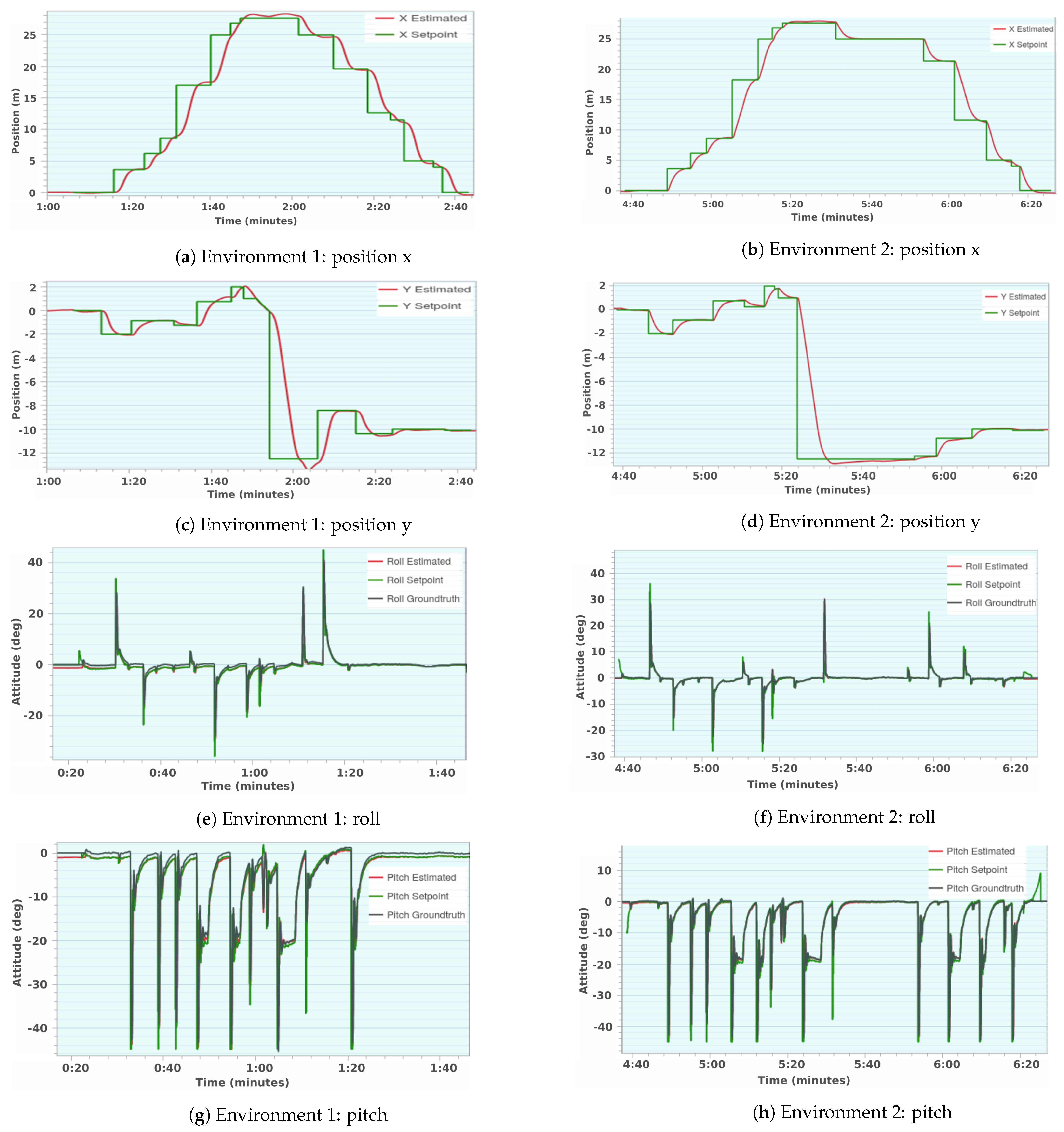

Components of the position and attitude responses in the two environments, Figure 7a,b, are shown in Figure 10. The well-traced setpoints of both position and attitude demonstrate the efficiency of the path planner. In the path planner, the setpoint acceptance radius is set to 0.30 m. The differences observed at setpoint nodes are due to this acceptance radius: the quadcopter advances to the next setpoint as soon as it crosses the acceptance radius of the current one, even though it may not have reached the actual setpoint. This causes a gap between the estimated position and the position setpoint. The attitude estimates of the quadcopter in both environments conform to the setpoints.
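The effect of the acceptance radius can be illustrated with a minimal sketch. The 0.30 m value is taken from the text; the function name `advance_index` and the list-of-tuples representation of setpoints are assumptions for illustration.

```python
import math

ACCEPTANCE_RADIUS_M = 0.30  # setpoint acceptance radius stated in the text


def advance_index(position, setpoints, index, radius=ACCEPTANCE_RADIUS_M):
    """Return the index of the setpoint the vehicle should currently track.

    The current setpoint counts as achieved once the estimated position is
    within `radius` of it, so up to `radius` of residual error can remain
    at each node when the next setpoint is issued.
    """
    if index < len(setpoints) and math.dist(position, setpoints[index]) <= radius:
        return index + 1
    return index
```

A smaller radius would reduce the residual gap at each node at the expense of more time spent converging on every setpoint.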

The preliminary real flight tests were conducted for collision avoidance with pedestrians. The detector was trained on images of a pedestrian in different postures, clothes, and lighting conditions. As shown in Figure 9a, the quadcopter attempts to avoid collision with the pedestrian by rolling either right or left, implementing the conditions given in the pseudo-algorithm. For reference, two short recorded videos of pedestrian collision avoidance are submitted with this manuscript.

For a complete mission test, a real environment similar to the simulated environment shown in Figure 6 would have to be constructed, which would take time and money. For this report, the real environment was instead modeled by an ROS node that publishes the required information to the 3D path planner. This node publishes simulated locations of obstacles, and the 3D path planner takes those locations and calculates an obstacle-free path. With this, the UAV was commanded to head autonomously to the landing pad, avoiding all possible obstacles on its way. The path followed by the UAV during this mission is shown in Figure 12. The overlap of the estimated quadcopter positions and intended setpoints shows that the 3D path planner effectively executed the mission.

In the real flight test, which was conducted in an open field, the quadcopter localization was limited to GPS and LiDAR, with the LiDAR used only for altitude estimation. The ZED mini stereo camera, combined with GPS for quadcopter localization, does not provide proper localization of the quadcopter in an open field because it needs to receive reflected rays from objects within its operating range. Therefore, for localization in this circumstance, the quadcopter relies on GPS, whose accuracy is about 2 m. The accuracy of the GPS varies with the number of satellites accessed and the environment in which the quadcopter is located. The initial location of the quadcopter before takeoff showed high drift, as can be seen in Figure 13c.

The test results obtained so far show that the 3D path planning algorithm effectively guides the UAV along collision-free paths. Future work includes real flight tests in an environment similar to the simulated one as well as in unconstrained environments. Moreover, machine learning for various objects will be conducted based on the mission profile of the UAV.