Traffic Monitoring System Based on Deep Learning and Seismometer Data

Abstract

1. Introduction

2. Methods

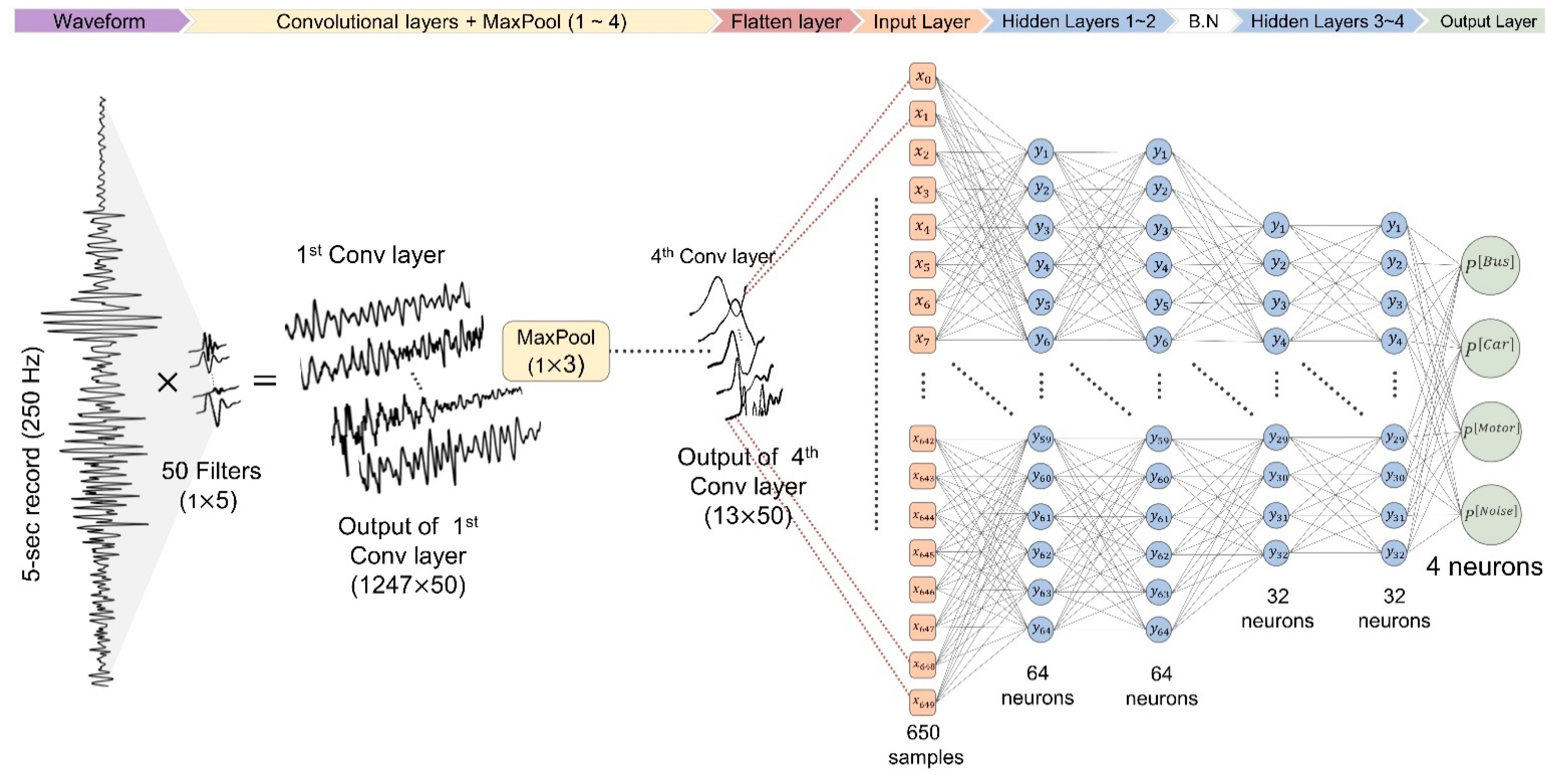

2.1. Deep Neural Network

2.2. Convolutional Neural Network

2.3. Recurrent Neural Network

2.4. Optimization of Weights and Biases

3. Data

3.1. Data Set

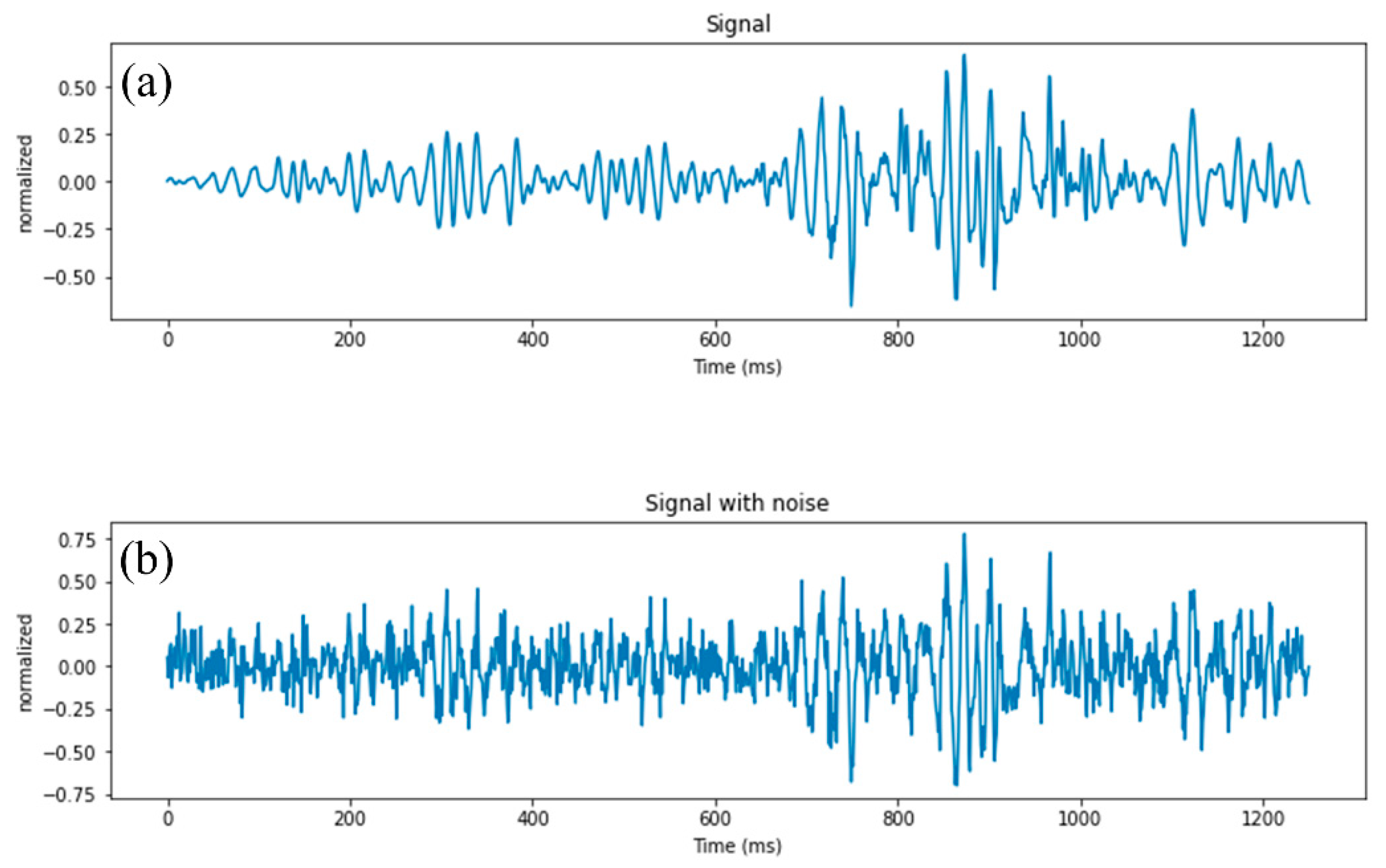

3.2. Training Data Augmentation

4. Results

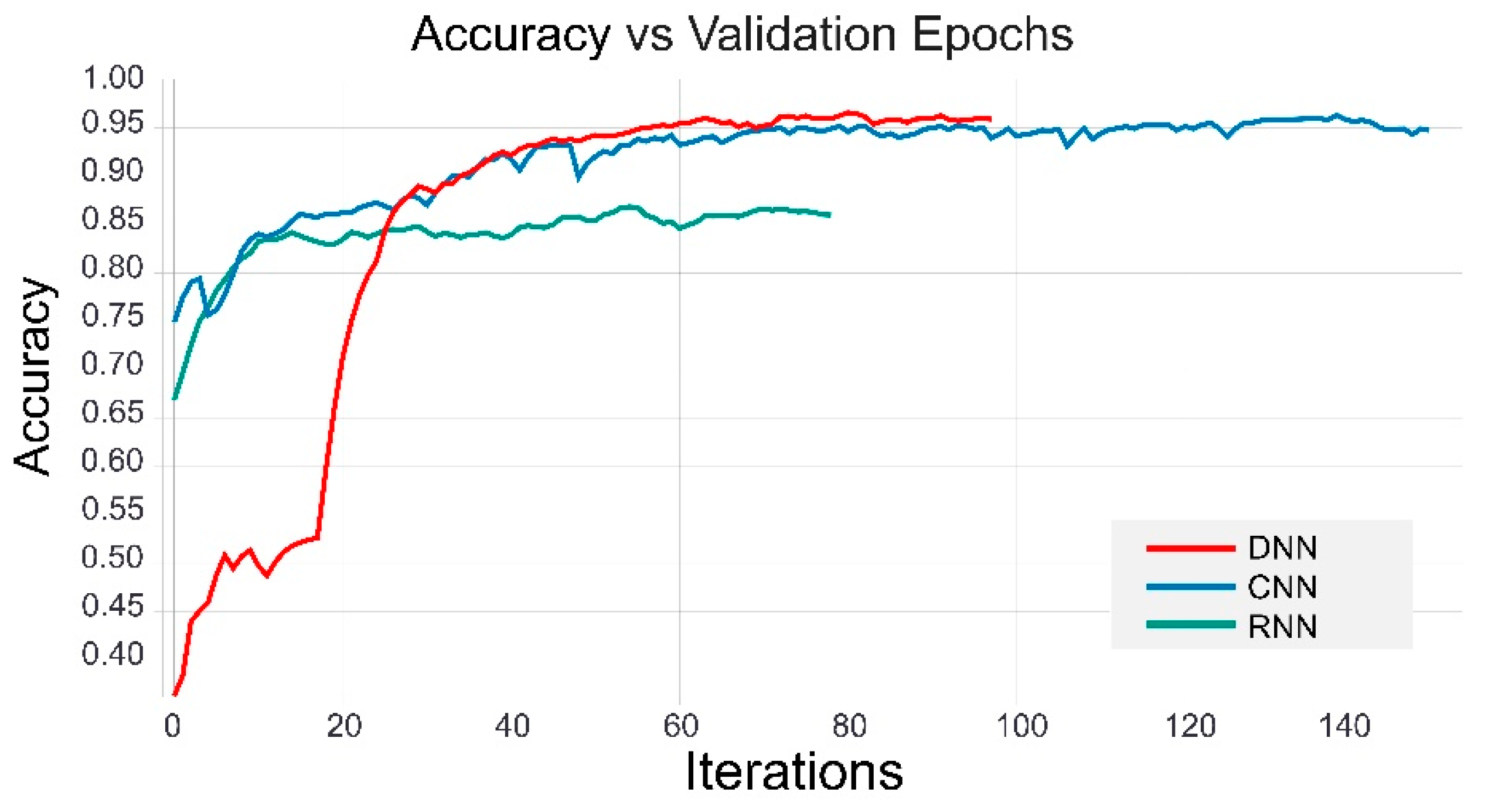

4.1. Training and Validation

4.2. Classification Accuracy

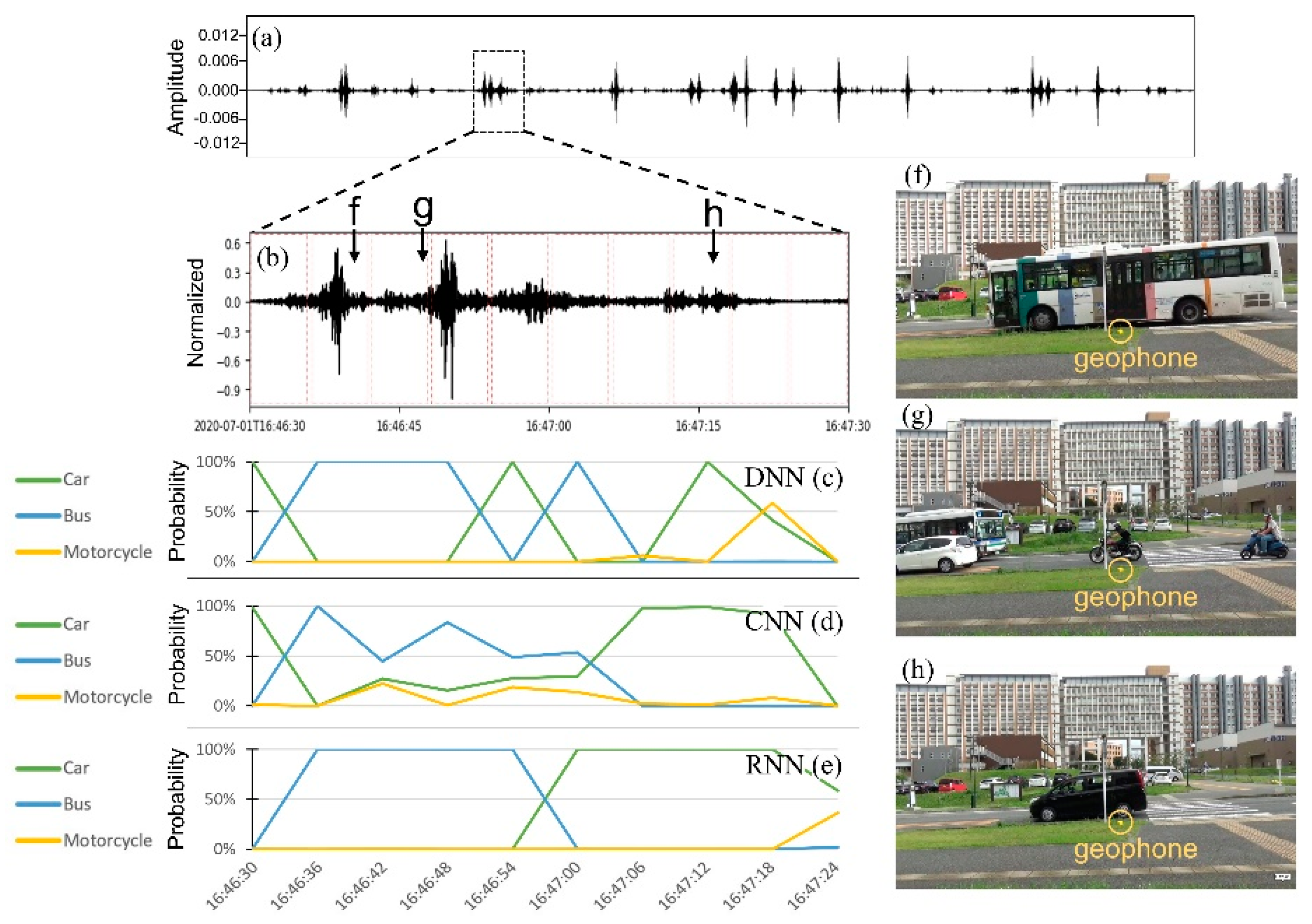

4.3. Vehicle Detection in Continuous Records

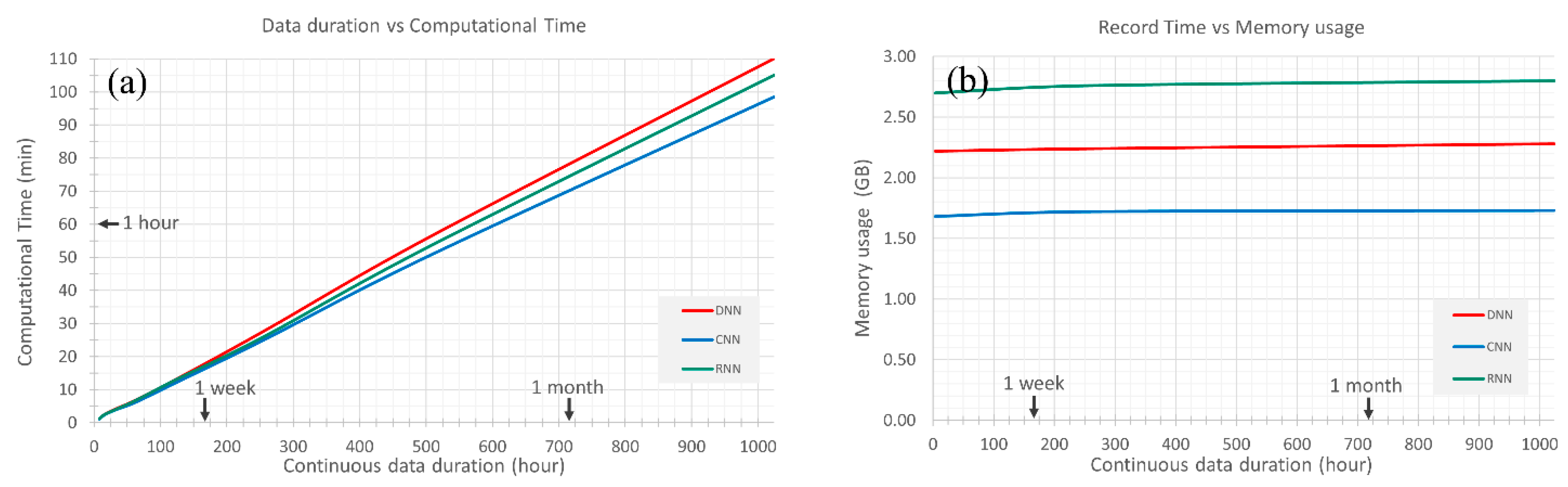

4.4. Scalability to Long Records

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | DNN | CNN | RNN |
|---|---|---|---|
| Number of dense layers | 11 | 4 | 2 |
| Special layer | None | Convolutional layer | LSTM |
| Activation function after dense layers | ReLU | ReLU | ReLU |
| Activation function after the final layer | SoftMax | SoftMax | SoftMax |
| Trainable parameters | 605,572 | 87,170 | 871,684 |

| | DNN | CNN | RNN |
|---|---|---|---|
| Time (s): Total training (Average per epoch 1) | 87 (0.89) | 112 (0.74) | 56 (0.69) |
| Accuracy (%): Training (Validation) | 98.6 (95.6) | 99.1 (94.7) | 99.2 (86.1) |
| Loss: Training (Validation) | 7.80 × 10⁻² (0.293) | 2.77 × 10⁻² (0.240) | 3.52 × 10⁻² (1.070) |
| Mean square error: Training (Validation) | 6.02 × 10⁻³ (0.019) | 3.58 × 10⁻³ (0.023) | 2.98 × 10⁻³ (0.065) |

| | Template Matching | DNN | CNN | RNN |
|---|---|---|---|---|
| Time (ms) | 560 | 74 | 67 | 55 |
| Accuracy (%) | 77.3 | 97.8 | 96.6 | 85.3 |
| Mean square error | N/A | 0.009 | 0.014 | 0.063 |

| Metric | Class | DNN | CNN | RNN |
|---|---|---|---|---|
| Precision (%) | Big (bus, trucks) | 100 | 100 | 88.8 |
| | Medium (light car) | 75.8 | 97.9 | 81.3 |
| | Small (motorcycle) | 90.4 | 90.9 | 80 |
| Recall (%) | Big (bus, trucks) | 93.8 | 100 | 100 |
| | Medium (light car) | 95.9 | 95.2 | 97.9 |
| | Small (motorcycle) | 67.8 | 72.2 | 85.7 |
| Average Precision (Recall) (%) | | 88.7 (85.8) | 96.2 (89.1) | 83.3 (94.5) |
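The average row of the table above is consistent with an unweighted (macro) mean over the three vehicle classes. This reading is an assumption inferred from the numbers; the table itself does not state how the averages were formed. The short sketch below recomputes the averages from the per-class values copied from the table. The function name `macro_average` is illustrative only.

```python
# Per-class precision and recall (%) copied from the table above.
# Order within each list: Big, Medium, Small.
precision = {
    "DNN": [100.0, 75.8, 90.4],
    "CNN": [100.0, 97.9, 90.9],
    "RNN": [88.8, 81.3, 80.0],
}
recall = {
    "DNN": [93.8, 95.9, 67.8],
    "CNN": [100.0, 95.2, 72.2],
    "RNN": [100.0, 97.9, 85.7],
}


def macro_average(values):
    """Unweighted mean over classes, so each class counts equally.

    Assumption: the table's averages are macro averages; a weighted
    average would need per-class sample counts, which are not listed.
    """
    return sum(values) / len(values)


for model in precision:
    p = macro_average(precision[model])
    r = macro_average(recall[model])
    print(f"{model}: average precision {p:.1f}%, average recall {r:.1f}%")
```

The recomputed values agree with the tabulated averages to within 0.1 percentage point; the small differences for CNN and RNN precision may come from rounding of the per-class entries.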

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahmad, A.B.; Tsuji, T. Traffic Monitoring System Based on Deep Learning and Seismometer Data. Appl. Sci. 2021, 11, 4590. https://doi.org/10.3390/app11104590

Ahmad AB, Tsuji T. Traffic Monitoring System Based on Deep Learning and Seismometer Data. Applied Sciences. 2021; 11(10):4590. https://doi.org/10.3390/app11104590

Chicago/Turabian Style: Ahmad, Ahmad Bahaa, and Takeshi Tsuji. 2021. "Traffic Monitoring System Based on Deep Learning and Seismometer Data" Applied Sciences 11, no. 10: 4590. https://doi.org/10.3390/app11104590

APA Style: Ahmad, A. B., & Tsuji, T. (2021). Traffic Monitoring System Based on Deep Learning and Seismometer Data. Applied Sciences, 11(10), 4590. https://doi.org/10.3390/app11104590