Coupled Convolutional Neural Network-Based Detail Injection Method for Hyperspectral and Multispectral Image Fusion

Abstract

Featured Application

1. Introduction

2. Materials and Methods

2.1. Detail Injection Sharpening Framework

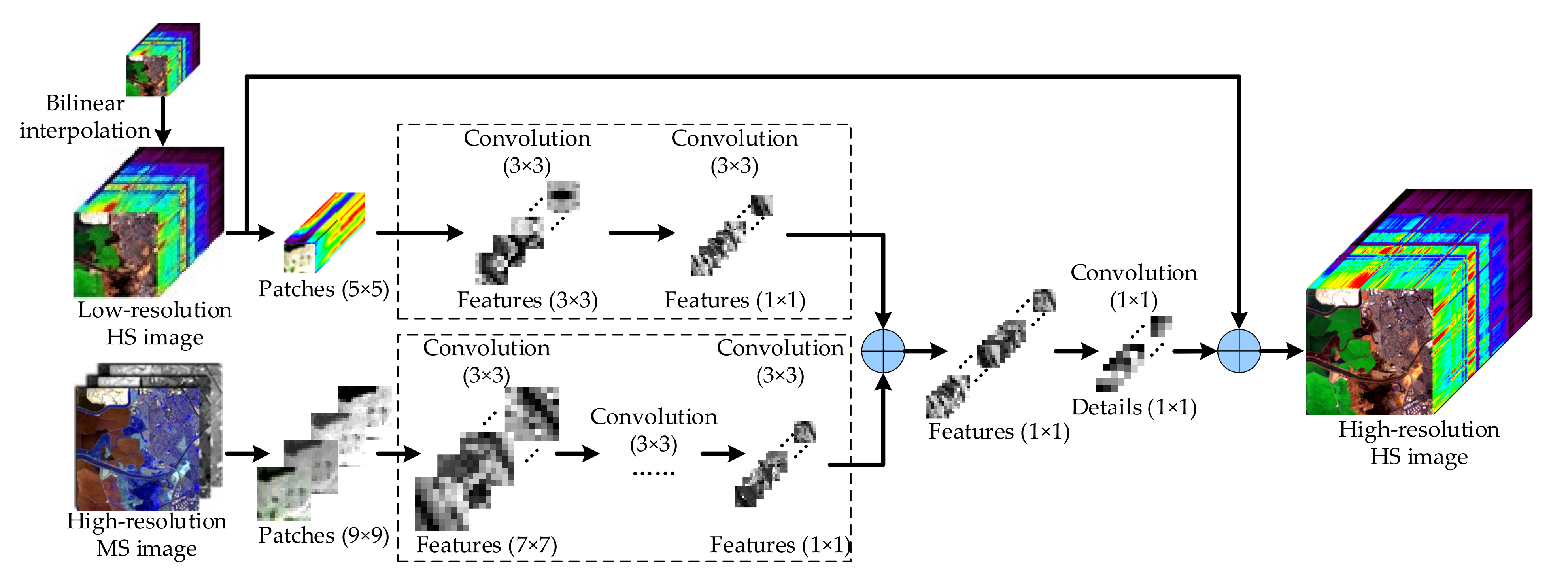

2.2. Proposed Coupled CNN Fusion Approach

3. Experimental Results and Discussion

3.1. Experimental Setup

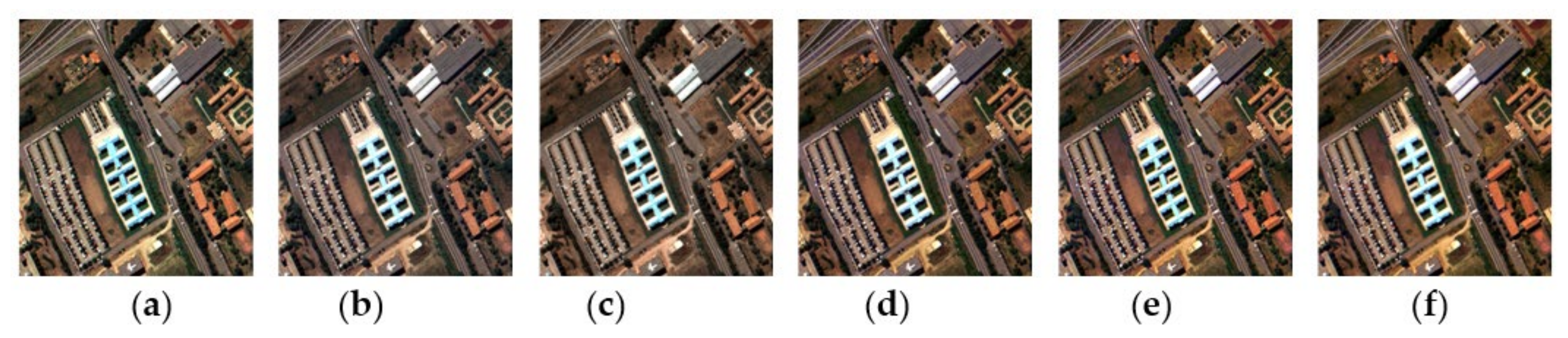

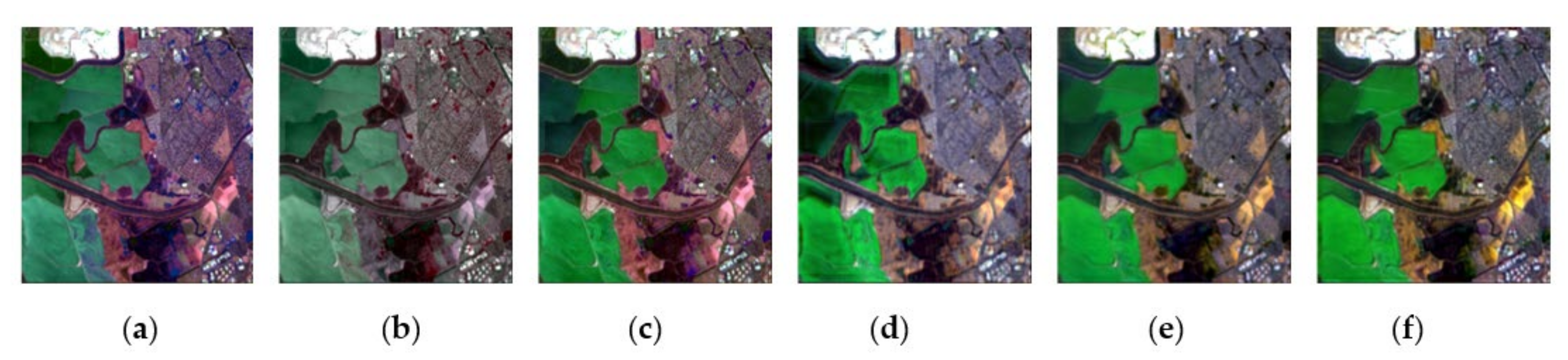

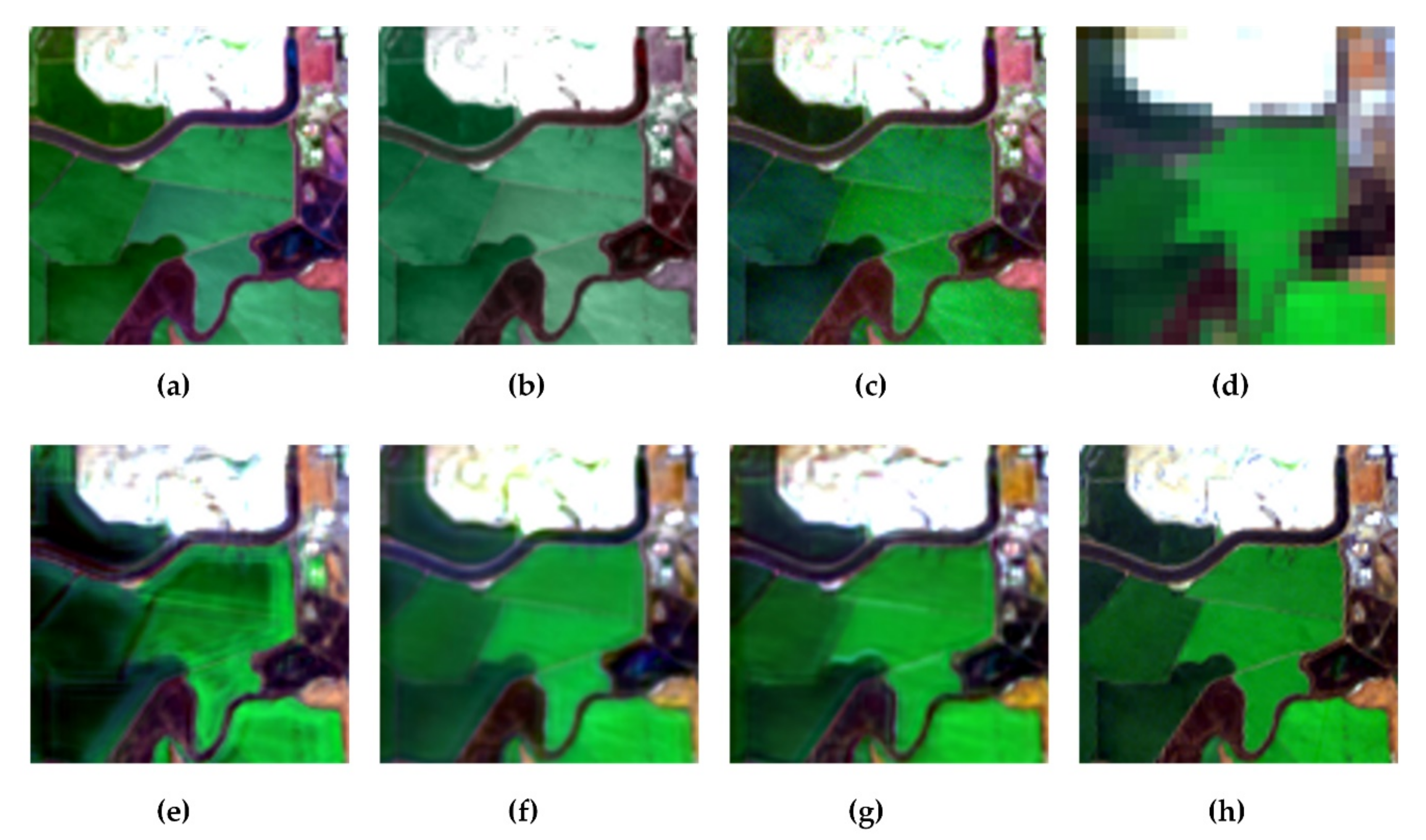

3.2. Results and Analyses

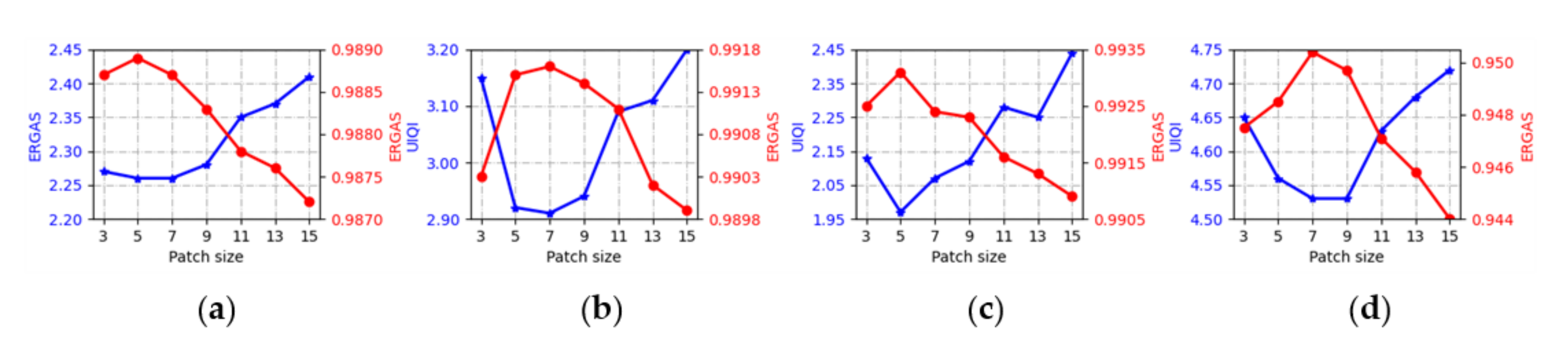

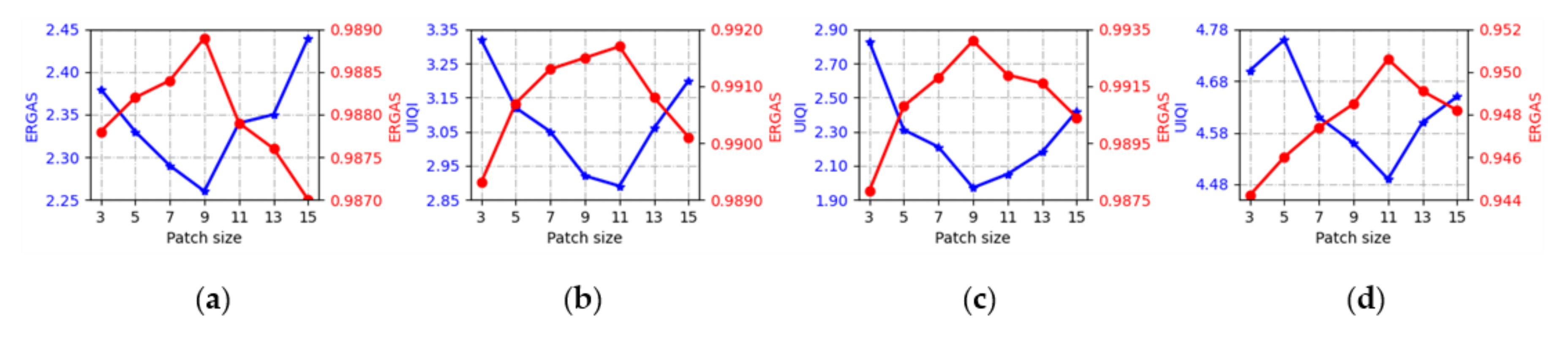

3.3. Parameter Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Method | Dataset | SAM | ERGAS | UIQI | Time (s) | Dataset | SAM | ERGAS | UIQI | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| CNMF | University of Pavia | 4.12 | 5.70 | 0.9807 | 39.3 | Pavia City center | 10.11 | 7.51 | 0.9860 | 63.9 |
| JNMF | University of Pavia | 4.59 | 5.65 | 0.9774 | 14.2 | Pavia City center | 9.10 | 7.49 | 0.9858 | 21.3 |
| DPLM | University of Pavia | 4.00 | 5.31 | 0.9826 | 49.9 | Pavia City center | 10.72 | 8.64 | 0.9815 | 153.4 |
| TCNNF | University of Pavia | 2.76 | 3.90 | 0.9911 | 3079.0/26.5 | Pavia City center | 5.80 | 5.47 | 0.9925 | 4797.9/40.8 |
| DiCNN | University of Pavia | 3.06 | 4.25 | 0.9902 | 10,553.1/105.8 | Pavia City center | 5.60 | 5.67 | 0.9917 | 16,545.7/169.2 |
| CpCNN | University of Pavia | 2.62 | 3.47 | 0.9927 | 1449.3/8.5 | Pavia City center | 5.32 | 4.69 | 0.9943 | 2273.7/13.1 |
| CNMF | Washington DC Mall | 2.59 | 4.74 | 0.9777 | 43.4 | San Francisco | 8.89 | 16.31 | 0.8157 | 15.6 |
| JNMF | Washington DC Mall | 3.21 | 6.16 | 0.9724 | 15.2 | San Francisco | 8.96 | 17.71 | 0.8155 | 5.2 |
| DPLM | Washington DC Mall | 2.83 | 7.74 | 0.9703 | 56.9 | San Francisco | 4.33 | 10.78 | 0.9305 | 23.7 |
| TCNNF | Washington DC Mall | 1.44 | 4.94 | 0.9904 | 3953.1/35.3 | San Francisco | 2.87 | 6.53 | 0.9747 | 1434.4/13.5 |
| DiCNN | Washington DC Mall | 1.33 | 2.90 | 0.9958 | 13,573.1/149.7 | San Francisco | 2.45 | 5.56 | 0.9806 | 5140.8/53.2 |
| CpCNN | Washington DC Mall | 1.14 | 2.81 | 0.9958 | 1596.3/11.5 | San Francisco | 2.23 | 5.52 | 0.9819 | 603.7/4.6 |

| Method | Dataset | SAM | ERGAS | UIQI | Time (s) | Dataset | SAM | ERGAS | UIQI | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| CNMF | University of Pavia | 4.31 | 3.55 | 0.9709 | 38.3 | Pavia City center | 9.89 | 4.49 | 0.9797 | 61.1 |
| JNMF | University of Pavia | 4.73 | 2.93 | 0.9756 | 16.6 | Pavia City center | 9.13 | 3.82 | 0.9848 | 26.7 |
| DPLM | University of Pavia | 4.23 | 2.94 | 0.9782 | 46.0 | Pavia City center | 11.81 | 4.75 | 0.9780 | 164.8 |
| TCNNF | University of Pavia | 3.75 | 2.73 | 0.9823 | 711.5/24.3 | Pavia City center | 6.75 | 3.66 | 0.9865 | 1103.5/38.9 |
| DiCNN | University of Pavia | 3.41 | 2.75 | 0.9843 | 2572.0/107.6 | Pavia City center | 8.22 | 3.34 | 0.9891 | 4237.1/170.6 |
| CpCNN | University of Pavia | 3.34 | 2.26 | 0.9889 | 382.2/8.7 | Pavia City center | 6.81 | 2.92 | 0.9915 | 593.3/13.3 |
| CNMF | Washington DC Mall | 2.80 | 2.66 | 0.9708 | 41.0 | San Francisco | 8.88 | 8.19 | 0.8046 | 15.9 |
| JNMF | Washington DC Mall | 3.57 | 3.30 | 0.9681 | 17.2 | San Francisco | 8.90 | 9.00 | 0.8030 | 6.5 |
| DPLM | Washington DC Mall | 3.27 | 4.04 | 0.9666 | 52.8 | San Francisco | 4.81 | 5.74 | 0.9184 | 30.2 |
| TCNNF | Washington DC Mall | 2.14 | 2.93 | 0.9862 | 917.1/33.8 | San Francisco | 4.29 | 5.28 | 0.9245 | 341.6/12.7 |
| DiCNN | Washington DC Mall | 1.72 | 2.00 | 0.9922 | 3399.6/145.6 | San Francisco | 4.17 | 5.48 | 0.9415 | 1220.2/49.8 |
| CpCNN | Washington DC Mall | 1.62 | 1.97 | 0.9931 | 444.6/11.3 | San Francisco | 3.84 | 4.56 | 0.9485 | 161.2/4.6 |

| Method | Dataset | SAM | ERGAS | UIQI | Time (s) | Dataset | SAM | ERGAS | UIQI | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| CNMF | University of Pavia | 4.40 | 2.98 | 0.9554 | 25.10 | Pavia City center | 10.06 | 3.74 | 0.9680 | 39.53 |
| JNMF | University of Pavia | 4.73 | 1.97 | 0.9751 | 11.05 | Pavia City center | 9.62 | 2.95 | 0.9799 | 17.21 |
| DPLM | University of Pavia | 5.19 | 4.40 | 0.9039 | 46.33 | Pavia City center | 12.19 | 3.48 | 0.9735 | 180.01 |
| TCNNF | University of Pavia | 4.62 | 3.09 | 0.9544 | 358.6/27.1 | Pavia City center | 8.69 | 3.04 | 0.9798 | 556.5/40.6 |
| DiCNN | University of Pavia | 4.37 | 2.01 | 0.9800 | 1205.3/106.0 | Pavia City center | 9.45 | 2.56 | 0.9856 | 2110.6/168.0 |
| CpCNN | University of Pavia | 4.55 | 2.46 | 0.9732 | 164.4/8.5 | Pavia City center | 7.50 | 2.45 | 0.9876 | 255.9/12.9 |
| CNMF | Washington DC Mall | 4.87 | 2.51 | 0.9503 | 24.49 | San Francisco | 8.44 | 5.67 | 0.7811 | 9.93 |
| JNMF | Washington DC Mall | 3.61 | 2.17 | 0.9708 | 10.75 | San Francisco | 8.85 | 5.89 | 0.8010 | 4.34 |
| DPLM | Washington DC Mall | 3.49 | 2.81 | 0.9603 | 56.37 | San Francisco | 5.43 | 4.27 | 0.8959 | 22.75 |
| TCNNF | Washington DC Mall | 2.43 | 2.79 | 0.9717 | 443.9/35.5 | San Francisco | 5.53 | 4.60 | 0.8823 | 179.6/13.9 |
| DiCNN | Washington DC Mall | 3.14 | 2.46 | 0.9786 | 1538.4/148.4 | San Francisco | 8.01 | 5.06 | 0.8928 | 612.7/54.2 |
| CpCNN | Washington DC Mall | 1.91 | 1.71 | 0.9889 | 181.9/11.5 | San Francisco | 4.43 | 3.88 | 0.9171 | 72.9/4.5 |
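
The tables report SAM and ERGAS without restating how they are computed. The following is a minimal sketch, assuming the commonly used definitions: SAM as the mean spectral angle in degrees between matching pixel spectra, and ERGAS as a band-averaged relative RMSE scaled by the spatial resolution ratio. The function names, the list-of-spectra data layout, and the `ratio` parameter are illustrative assumptions and are not taken from the paper, whose exact metric variants may differ.

```python
import math


def sam_degrees(reference, fused):
    """Mean spectral angle in degrees over matching pixel spectra.

    Both arguments are lists of pixels; each pixel is a list of band values.
    """
    angles = []
    for ref_px, fus_px in zip(reference, fused):
        dot = sum(r * f for r, f in zip(ref_px, fus_px))
        ref_sq = sum(r * r for r in ref_px)
        fus_sq = sum(f * f for f in fus_px)
        norm = math.sqrt(ref_sq * fus_sq)
        if norm == 0.0:
            continue  # the angle is undefined for an all-zero spectrum
        cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding
        angles.append(math.degrees(math.acos(cos_angle)))
    return sum(angles) / len(angles) if angles else 0.0


def ergas(reference, fused, ratio):
    """ERGAS = (100 / ratio) * sqrt(mean over bands of (RMSE_b / mean_b)^2).

    `ratio` is the assumed spatial resolution ratio between the two inputs.
    Each reference band is assumed to have a nonzero mean.
    """
    n_pixels = len(reference)
    n_bands = len(reference[0])
    total = 0.0
    for b in range(n_bands):
        mse = sum((reference[p][b] - fused[p][b]) ** 2
                  for p in range(n_pixels)) / n_pixels
        mean_ref = sum(reference[p][b] for p in range(n_pixels)) / n_pixels
        total += mse / mean_ref ** 2
    return (100.0 / ratio) * math.sqrt(total / n_bands)


if __name__ == "__main__":
    # Toy data: two pixels with two bands each (hypothetical values).
    ref = [[2.0, 4.0], [2.0, 4.0]]
    out = [[3.0, 4.0], [1.0, 4.0]]
    print("SAM (deg):", sam_degrees(ref, out))
    print("ERGAS:", ergas(ref, out, ratio=4))
```

For both metrics lower values indicate a closer match to the reference, and a perfect reconstruction gives 0 for each.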

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lu, X.; Yang, D.; Jia, F.; Zhao, Y. Coupled Convolutional Neural Network-Based Detail Injection Method for Hyperspectral and Multispectral Image Fusion. Appl. Sci. 2021, 11, 288. https://doi.org/10.3390/app11010288
