A Comparative Analysis of Machine Learning Methods for Class Imbalance in a Smoking Cessation Intervention

Abstract

1. Introduction

- Provide a comparative analysis of machine learning methods, based on an experimental design, to determine how well they predict the outcome of a smoking cessation intervention among the Korean population.

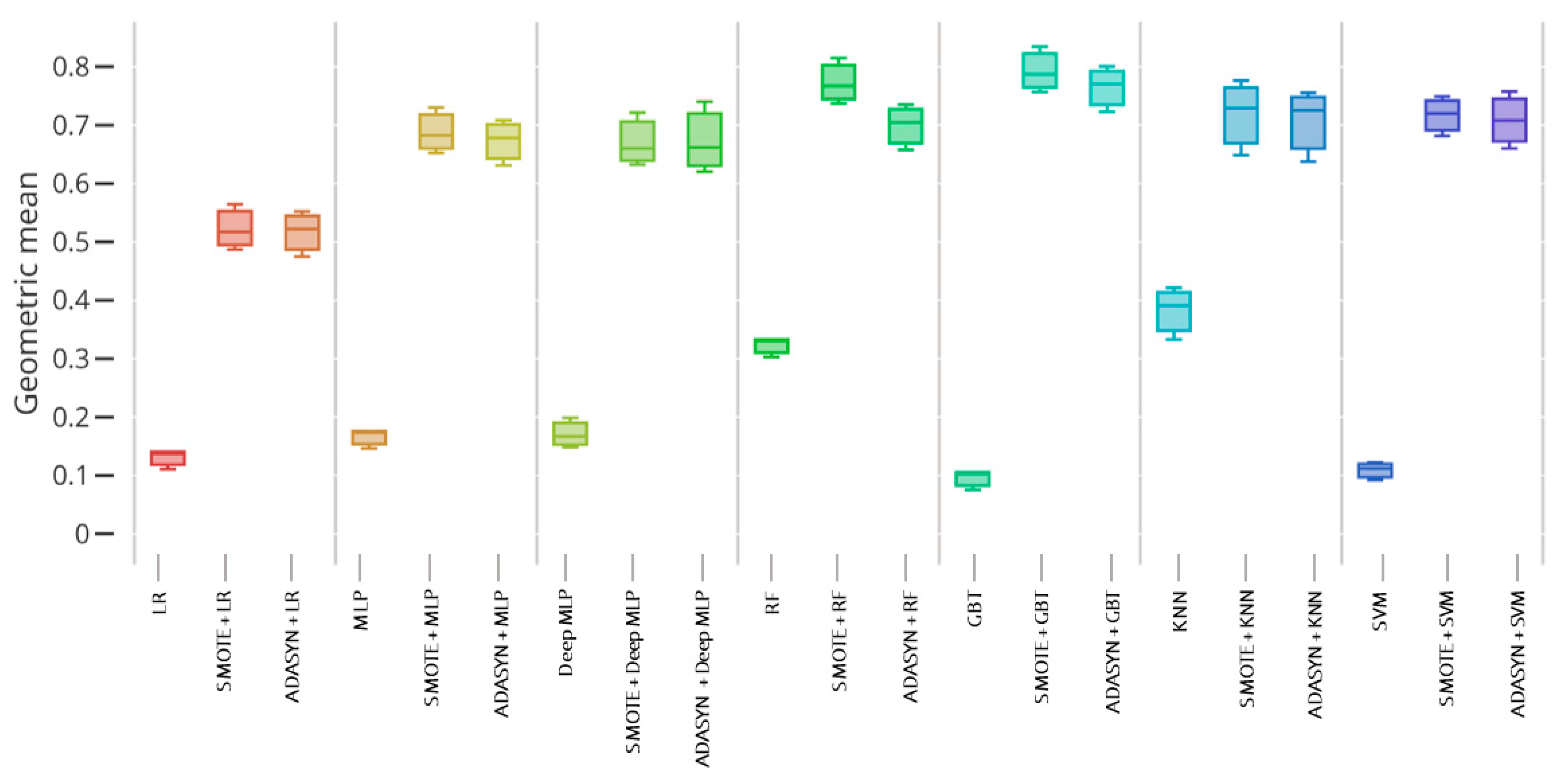

- Experimental results show that integrating synthetic oversampling techniques with machine learning classifiers improves evaluation performance for all subjects and for each gender.

- Important features are identified through lasso and multicollinearity analysis, which can substantially aid understanding of smoking-related risk factors.

- The GBT and RF models trained with SMOTE provide feature importance scores that enhance model interpretability.

- Empirically, the proposed models go some way towards solving the class imbalance problem in practice, and these findings could be important for the public health impact of smoking cessation.
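Several of the points above rest on synthetic oversampling. As a rough illustration only, the sketch below shows the interpolation idea behind SMOTE: each synthetic point is placed at a random position on the segment between a minority-class sample and one of its k nearest minority-class neighbours. It is a from-scratch sketch that assumes numeric features and Euclidean distance; it is not the implementation used in the study, and the function names and the default `k=5` are illustrative.

```python
import math
import random
from typing import List, Sequence


def k_nearest(sample: Sequence[float], pool: Sequence[Sequence[float]], k: int) -> List[Sequence[float]]:
    """Return the k points of `pool` closest to `sample` (Euclidean), excluding `sample` itself."""
    others = [p for p in pool if p is not sample]
    return sorted(others, key=lambda p: math.dist(sample, p))[:k]


def smote(minority: Sequence[Sequence[float]], n_synthetic: int, k: int = 5, seed: int = 0) -> List[List[float]]:
    """Create n_synthetic minority-class points by SMOTE-style interpolation.

    Requires at least two minority samples.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)                           # random minority sample
        neighbour = rng.choice(k_nearest(base, minority, k))  # one of its k minority neighbours
        gap = rng.random()                                    # interpolation weight in [0, 1)
        # The new point lies on the segment from `base` towards `neighbour`.
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]]
print(len(smote(minority, n_synthetic=6, k=2)))  # 6
```

Because every synthetic point is built from existing samples, a common precaution is to oversample only the training portion of each cross-validation split, so that evaluation data stay untouched.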

2. Literature Review

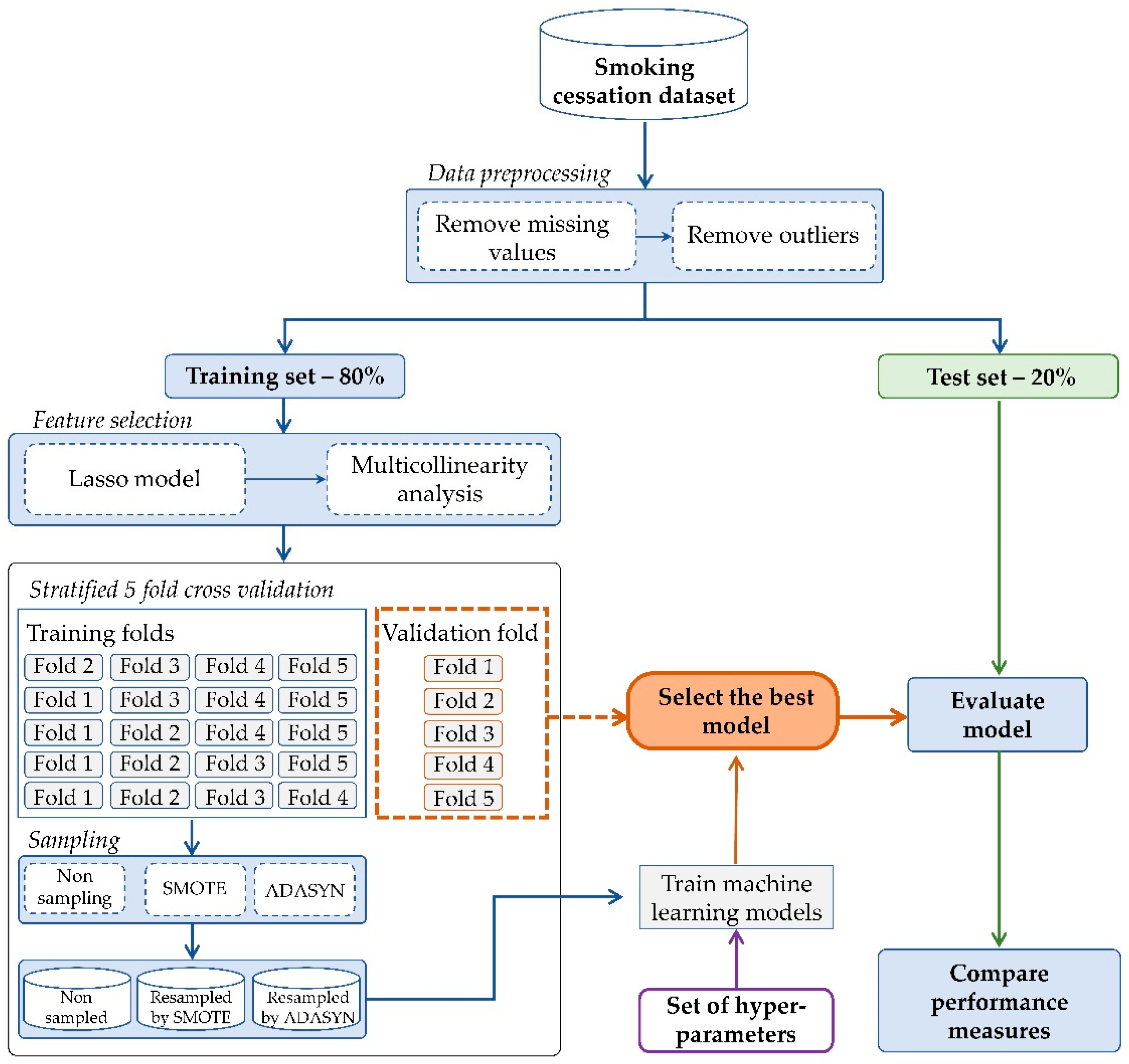

3. Materials and Methods

3.1. Data Preprocessing

3.2. Sampling Techniques

3.3. Machine Learning Classifiers

3.4. Experimental Setup

4. Experiment and Result Analysis

4.1. Subjects and Dataset

- We excluded 53,850 subjects who never smoked.

- We excluded 9271 subjects who had never tried to quit smoking using smoking cessation methods.
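Read as sequential steps (an assumption, since non-smokers have also never tried to quit and would otherwise be counted twice), the two exclusions can be sketched as below. The field names `ever_smoked` and `tried_cessation_method` are hypothetical and do not correspond to actual survey variable names.

```python
from typing import Dict, List, Tuple

Record = Dict[str, bool]


def apply_exclusions(records: List[Record]) -> Tuple[List[Record], int, int]:
    """Return retained records and the number excluded at each step (hypothetical fields)."""
    # Step 1: exclude subjects who never smoked.
    smokers = [r for r in records if r["ever_smoked"]]
    # Step 2: among the remaining smokers, exclude those who never tried to quit
    # using a smoking cessation method.
    retained = [r for r in smokers if r["tried_cessation_method"]]
    return retained, len(records) - len(smokers), len(smokers) - len(retained)


survey = [
    {"ever_smoked": False, "tried_cessation_method": False},
    {"ever_smoked": True, "tried_cessation_method": False},
    {"ever_smoked": True, "tried_cessation_method": True},
]
kept, never_smoked, never_tried = apply_exclusions(survey)
print(len(kept), never_smoked, never_tried)  # 1 1 1
```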

4.2. Feature Selection
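Beyond the introduction's mention of lasso and multicollinearity analysis, the feature selection procedure is not described in this text. For the multicollinearity part, a standard diagnostic is the variance inflation factor, VIF_j = 1 / (1 − R_j²), where R_j² is the coefficient of determination from regressing feature j on all other features. The pure-Python sketch below computes it through the normal equations; it is an illustration, not the authors' code, and any cut-off (values above 5 or 10 are common rules of thumb) is an assumption.

```python
import math
from typing import List


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors are perfectly collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def vif(columns: List[List[float]], j: int) -> float:
    """VIF of feature j: 1 / (1 - R^2) from an OLS fit of column j on the others plus an intercept."""
    y = columns[j]
    n = len(y)
    # Design matrix: intercept followed by every other feature.
    rows = [[1.0] + [col[i] for k, col in enumerate(columns) if k != j] for i in range(n)]
    p = len(rows[0])
    # Normal equations (X^T X) beta = X^T y.
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(p)] for a in range(p)]
    xty = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(p)]
    beta = _solve(xtx, xty)
    fitted = [sum(bk * rk for bk, rk in zip(beta, r)) for r in rows]
    mean_y = sum(y) / n
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    if ss_tot == 0:
        raise ValueError("feature is constant")
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    r2 = 1.0 - ss_res / ss_tot
    return math.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)


x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.1, 3.9, 6.2, 7.8, 10.1]  # roughly 2 * x1, so strongly collinear with it
print(round(vif([x1, x2], 0), 1))
```

Orthogonal features give a VIF of exactly 1, while near-duplicates such as `x1` and `x2` above give very large values.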

4.3. Sampling
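The sampling procedure is likewise not spelled out here, but the result tables compare SMOTE with ADASYN. ADASYN differs from SMOTE mainly in how many synthetic points it creates around each minority sample: samples whose k nearest neighbours in the full data set are mostly majority-class receive proportionally more. The sketch below covers only that allocation step, with G = (majority size − minority size) × β synthetic points in total; the interpolation step itself matches SMOTE. Names and defaults are illustrative, and the allocations are left unrounded, whereas a full implementation converts them to integer counts.

```python
import math
from typing import List, Sequence


def adasyn_allocation(
    minority: Sequence[Sequence[float]],
    majority: Sequence[Sequence[float]],
    k: int = 5,
    beta: float = 1.0,
) -> List[float]:
    """Number of synthetic points to create around each minority sample (unrounded)."""
    total = (len(majority) - len(minority)) * beta  # G: synthetic points to generate overall
    labelled = [(p, 1) for p in minority] + [(p, 0) for p in majority]  # 1 = minority, 0 = majority
    ratios = []
    for x in minority:
        # k nearest neighbours of x in the whole data set, excluding x itself.
        others = [item for item in labelled if item[0] is not x]
        neighbours = sorted(others, key=lambda item: math.dist(x, item[0]))[:k]
        # r_i: fraction of those neighbours that belong to the majority class.
        ratios.append(sum(1 for _, label in neighbours if label == 0) / k)
    norm = sum(ratios)
    if norm == 0:
        raise ValueError("no minority sample has majority-class neighbours")
    # g_i = (r_i / sum of all r) * G
    return [r / norm * total for r in ratios]


minority = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
majority = [[5.0, 6.0], [6.0, 5.0], [6.0, 6.0], [5.0, 4.0], [4.0, 5.0]]
print(adasyn_allocation(minority, majority, k=2))  # [0.5, 0.5, 1.0]
```

The minority sample at (5, 5), surrounded by majority points, receives the largest share of the synthetic points, which is the adaptive behaviour that distinguishes ADASYN from plain SMOTE.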

4.4. Results and Comparison

4.4.1. Statistical Test

4.4.2. Model Interpretability

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADASYN | Adaptive Synthetic |
| GBT | Gradient Boosting Trees |
| KCDC | Korea Centers for Disease Control and Prevention |
| KNHANES | Korea National Health and Nutrition Examination Survey |
| KNN | K-Nearest Neighbors |
| LR | Logistic Regression |
| MLP | Multilayer Perceptron |
| RF | Random Forest |
| SMOTE | Synthetic Minority Over-Sampling Technique |
| SVM | Support Vector Machine |
| VIF | Variance Inflation Factor |
| WHO | World Health Organization |

| Classifier | Parameter | Values |
|---|---|---|
| RF | Number of estimators | 250, 500, 750, 1000, 1250, 1500 |
| | Criterion | gini, entropy |
| GBT | Learning rate | 0.15, 0.1, 0.05, 0.01, 0.005, 0.001 |
| | Number of estimators | 250, 500, 750, 1000, 1250, 1500 |
| KNN | Number of neighbors (k) | 3, 6, 9 |
| | Weight options | uniform, distance |
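The search space in the table can be written as parameter grids. The sketch below uses scikit-learn's estimator parameter names (`n_estimators`, `criterion`, `learning_rate`, `n_neighbors`, `weights`), which is an assumption about tooling, and enumerates the combinations an exhaustive grid search would evaluate. Only the rows recoverable from the table are included.

```python
from itertools import product
from typing import Dict, List

# Keys follow scikit-learn parameter names (an assumption about the tooling used).
PARAM_GRIDS: Dict[str, Dict[str, List]] = {
    "RF": {
        "n_estimators": [250, 500, 750, 1000, 1250, 1500],
        "criterion": ["gini", "entropy"],
    },
    "GBT": {
        "learning_rate": [0.15, 0.1, 0.05, 0.01, 0.005, 0.001],
        "n_estimators": [250, 500, 750, 1000, 1250, 1500],
    },
    "KNN": {
        "n_neighbors": [3, 6, 9],
        "weights": ["uniform", "distance"],
    },
}


def expand(grid: Dict[str, List]) -> List[Dict]:
    """Enumerate every parameter combination in a grid, as an exhaustive search would."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


for name, grid in PARAM_GRIDS.items():
    print(name, len(expand(grid)))  # RF 12, GBT 36, KNN 6
```

Dictionaries of this shape are also the format accepted by the `param_grid` argument of scikit-learn's `GridSearchCV`, which would fit each combination under cross-validation.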

| Features | Former smoker, n (%) | Current smoker, n (%) |
|---|---|---|
| Gender | | |
| Male | 856 (23.2) | 2447 (66.3) |
| Female | 95 (2.6) | 294 (8.0) |
| Age (years) | | |
| Less than 35 | 129 (3.5) | 786 (21.3) |
| 36–45 | 223 (6.0) | 795 (21.5) |
| 46–55 | 217 (5.9) | 608 (16.5) |
| More than 56 | 382 (10.3) | 552 (15.0) |
| Body mass index (kg/m²) | | |
| Underweight (13.0–19.0) | 169 (4.6) | 643 (17.4) |
| Normal weight (20.0–25.0) | 483 (13.1) | 1296 (35.1) |
| Overweight or obese (26.0–40.0) | 299 (8.1) | 802 (21.7) |
| Household income | | |
| Low | 120 (3.3) | 299 (8.1) |
| Low-middle | 222 (6.0) | 703 (19.0) |
| Middle-high | 288 (7.8) | 881 (23.9) |
| High | 321 (8.7) | 858 (23.2) |
| Residence area | | |
| Urban | 869 (23.5) | 2237 (60.6) |
| Rural | 82 (2.2) | 504 (13.7) |
| Education | | |
| Elementary school graduate or below | 161 (4.4) | 312 (8.5) |
| Middle school graduate | 113 (3.1) | 300 (8.1) |
| High school graduate | 337 (9.1) | 1092 (29.6) |
| College graduate or higher | 340 (9.2) | 1037 (28.1) |
| Employment condition | | |
| Full time | 1162 (31.5) | 1444 (39.1) |
| Part time | 500 (13.5) | 442 (12.0) |
| Others | 55 (1.5) | 89 (2.4) |
| Occupation | | |
| Managers, experts or related workers | 192 (5.2) | 520 (14.1) |
| Office workers | 135 (3.7) | 412 (11.2) |
| Service or sales workers | 146 (4.0) | 523 (14.2) |
| Agriculture, forestry or fishery workers | 136 (3.7) | 233 (6.3) |
| Function, device or machine assembly workers; farmers | 217 (5.9) | 662 (17.9) |
| Labor workers | 112 (3.0) | 279 (7.6) |
| Housewives, students or others | 13 (0.4) | 112 (3.0) |
| Marital status | | |
| Married | 854 (23.1) | 2245 (60.8) |
| Single and others | 97 (2.6) | 496 (13.4) |
| Subjective health status | | |
| Very good | 58 (1.6) | 120 (3.3) |
| Good | 327 (8.9) | 903 (24.5) |
| Normal | 443 (12.0) | 1313 (35.6) |
| Bad | 110 (3.0) | 384 (10.4) |
| Very bad | 13 (0.4) | 21 (0.6) |
| Exercise | | |
| Yes | 24 (0.7) | 494 (13.4) |
| No | 927 (25.1) | 2247 (60.9) |
| Frequency of alcohol consumption in the past year | | |
| Did not drink at all in the past year | 118 (3.2) | 200 (5.4) |
| Less than once a month | 105 (2.8) | 287 (7.8) |
| About once a month | 93 (2.5) | 200 (5.4) |
| 2–4 times a month | 270 (7.3) | 825 (22.3) |
| About 2–3 times a week | 231 (6.3) | 829 (22.5) |
| 4 or more times a week | 134 (3.6) | 400 (10.8) |
| Hypertension | | |
| Yes | 464 (12.6) | 844 (22.9) |
| No | 487 (13.2) | 1897 (51.4) |
| Diabetes | | |
| Yes | 878 (23.8) | 2111 (57.2) |
| No | 73 (2.0) | 630 (17.1) |
| Asthma | | |
| Yes | 139 (3.8) | 285 (7.7) |
| No | 812 (22.0) | 2456 (66.5) |
| Average sleep time per day | | |
| Less than 6 h | 60 (1.6) | 165 (4.5) |
| 7–8 h | 1129 (30.6) | 1447 (39.2) |
| More than 9 h | 383 (10.4) | 508 (13.8) |
| Stress level | | |
| Very high | 30 (0.8) | 148 (4.0) |
| Moderate | 197 (5.3) | 713 (19.3) |
| Low | 587 (15.9) | 1576 (42.7) |
| Rarely | 137 (3.7) | 304 (8.2) |
| Long-term depression (more than two weeks) | | |
| Yes | 87 (2.4) | 272 (7.4) |
| No | 864 (23.4) | 2469 (66.9) |
| Age of smoking initiation (years) | | |
| Less than 18 | 516 (14.0) | 1394 (37.8) |
| 19–24 | 327 (8.9) | 1070 (29.0) |
| More than 25 | 108 (2.9) | 277 (7.5) |
| Secondhand smoke in the workplace | | |
| Yes | 468 (12.7) | 1178 (31.9) |
| No | 394 (10.7) | 1146 (31.0) |
| No workplace | 89 (2.4) | 417 (11.3) |
| Daily smokers at home | | |
| Yes | 49 (1.3) | 320 (8.7) |
| No | 902 (24.4) | 2741 (74.2) |
| Attendance at smoking prevention or smoking cessation education | | |
| Yes | 55 (1.5) | 296 (8.0) |
| No | 896 (24.3) | 2445 (66.2) |
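The percentages in this table are shares of all 3,692 subjects (951 former and 2,741 current smokers, the column totals implied by the Gender rows), not shares within each column. The sketch below recomputes a few entries under that reading and the ratio of about 2.88 current smokers per former smoker, which appears to be the class imbalance the sampling techniques target. The constant and function names are illustrative.

```python
FORMER, CURRENT = 951, 2741  # column totals implied by the Gender rows (856 + 95, 2447 + 294)
TOTAL = FORMER + CURRENT     # 3692 subjects


def share(count: int, total: int = TOTAL) -> float:
    """Percentage of all subjects, rounded to one decimal place as in the table."""
    return round(100 * count / total, 1)


print(share(856), share(2447))     # male former / current smokers: 23.2 66.3
print(round(CURRENT / FORMER, 2))  # majority-to-minority ratio: 2.88
```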

| Sampling | Model | F-Score | Type I Error | Type II Error | Balanced Accuracy | Geometric Mean |
|---|---|---|---|---|---|---|
| Non-sampling | LR | 0.8016 | 0.9779 | 0.1027 | 0.4597 | 0.1408 |
| | MLP | 0.8048 | 0.9673 | 0.0500 | 0.4914 | 0.1763 |
| | Deep MLP | 0.7913 | 0.9701 | 0.0681 | 0.4809 | 0.1669 |
| | RF | 0.8148 | 0.8652 | 0.1763 | 0.4793 | 0.3332 |
| | GBT | 0.8179 | 0.9867 | 0.1600 | 0.4267 | 0.1057 |
| | KNN | 0.7604 | 0.7636 | 0.2490 | 0.4937 | 0.4214 |
| | SVM | 0.8343 | 0.9833 | 0.1040 | 0.4564 | 0.1223 |
| SMOTE | LR | 0.8171 | 0.6852 | 0.1500 | 0.5824 | 0.5173 |
| | MLP | 0.8660 | 0.5125 | 0.0440 | 0.7218 | 0.6827 |
| | Deep MLP | 0.8635 | 0.5456 | 0.0405 | 0.7069 | 0.6603 |
| | RF | 0.8571 | 0.3074 | 0.1500 | 0.7713 | 0.7673 |
| | GBT | 0.8888 | 0.3044 | 0.1100 | 0.7928 | 0.7868 |
| | KNN | 0.8056 | 0.3137 | 0.2264 | 0.7300 | 0.7286 |
| | SVM | 0.8410 | 0.4344 | 0.1300 | 0.7178 | 0.7015 |
| ADASYN | LR | 0.7498 | 0.6574 | 0.2045 | 0.5691 | 0.5221 |
| | MLP | 0.7690 | 0.5052 | 0.0705 | 0.7122 | 0.6782 |
| | Deep MLP | 0.7663 | 0.5311 | 0.0673 | 0.7008 | 0.6613 |
| | RF | 0.8227 | 0.4198 | 0.1433 | 0.7185 | 0.7050 |
| | GBT | 0.8339 | 0.3304 | 0.1141 | 0.7778 | 0.7702 |
| | KNN | 0.7894 | 0.3507 | 0.1900 | 0.7297 | 0.7252 |
| | SVM | 0.8187 | 0.4074 | 0.1550 | 0.7188 | 0.7076 |
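The last two columns follow from the two error columns. Taking Type I error as the false positive rate FP / (FP + TN) and Type II error as the false negative rate FN / (FN + TP) (the standard definitions; the text does not say which class is treated as positive), balanced accuracy is the mean of (1 − Type I) and (1 − Type II), and the geometric mean is the square root of their product. The sketch below reproduces the SMOTE/GBT row of the table above; the F-score, by contrast, needs the confusion-matrix counts. Function names are illustrative.

```python
import math
from typing import Tuple


def error_rates(tp: int, fp: int, tn: int, fn: int) -> Tuple[float, float]:
    """Type I error (false positive rate) and Type II error (false negative rate)."""
    return fp / (fp + tn), fn / (fn + tp)


def balanced_accuracy(type_i: float, type_ii: float) -> float:
    # Mean of specificity (1 - Type I) and sensitivity (1 - Type II).
    return ((1 - type_i) + (1 - type_ii)) / 2


def geometric_mean(type_i: float, type_ii: float) -> float:
    # Square root of specificity times sensitivity.
    return math.sqrt((1 - type_i) * (1 - type_ii))


def f_score(tp: int, fp: int, fn: int) -> float:
    # Harmonic mean of precision and recall, written in terms of counts.
    return 2 * tp / (2 * tp + fp + fn)


# SMOTE + GBT row: Type I = 0.3044, Type II = 0.1100
print(round(balanced_accuracy(0.3044, 0.1100), 4), round(geometric_mean(0.3044, 0.1100), 4))  # 0.7928 0.7868
```

Because both summary metrics weight the two classes equally, they expose the near-zero specificity of the non-sampling models that a high F-score alone can hide.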

| Sampling | Model | F-Score | Type I Error | Type II Error | Balanced Accuracy | Geometric Mean |
|---|---|---|---|---|---|---|
| Non-sampling | LR | 0.4754 | 0.1971 | 0.6579 | 0.5725 | 0.5241 |
| | MLP | 0.4499 | 0.1496 | 0.6842 | 0.5831 | 0.5182 |
| | Deep MLP | 0.4522 | 0.1684 | 0.6814 | 0.5751 | 0.5147 |
| | RF | 0.7983 | 0.5000 | 0.2818 | 0.6091 | 0.5992 |
| | GBT | 0.8046 | 0.5055 | 0.2727 | 0.6109 | 0.5997 |
| | KNN | 0.2614 | 0.0474 | 0.8455 | 0.5536 | 0.3836 |
| | SVM | 0.2350 | 0.0365 | 0.8636 | 0.5500 | 0.3625 |
| SMOTE | LR | 0.8312 | 0.3827 | 0.2042 | 0.7066 | 0.7009 |
| | MLP | 0.7461 | 0.3698 | 0.3132 | 0.6585 | 0.6579 |
| | Deep MLP | 0.7305 | 0.3735 | 0.3340 | 0.6463 | 0.6459 |
| | RF | 0.8952 | 0.2405 | 0.1364 | 0.8116 | 0.8099 |
| | GBT | 0.7804 | 0.1970 | 0.3282 | 0.7374 | 0.7345 |
| | KNN | 0.7849 | 0.2307 | 0.2909 | 0.7392 | 0.7386 |
| | SVM | 0.7336 | 0.3523 | 0.3655 | 0.6411 | 0.6411 |
| ADASYN | LR | 0.8342 | 0.4237 | 0.2028 | 0.6868 | 0.6778 |
| | MLP | 0.7607 | 0.3984 | 0.3605 | 0.6206 | 0.6203 |
| | Deep MLP | 0.7527 | 0.4188 | 0.3619 | 0.6097 | 0.6090 |
| | RF | 0.8926 | 0.2627 | 0.1373 | 0.8000 | 0.7975 |
| | GBT | 0.7600 | 0.1558 | 0.3223 | 0.7610 | 0.7564 |
| | KNN | 0.7709 | 0.1842 | 0.3086 | 0.7536 | 0.7510 |
| | SVM | 0.7209 | 0.3263 | 0.3586 | 0.6576 | 0.6574 |

| Sampling | Model | F-Score | Type I Error | Type II Error | Balanced Accuracy | Geometric Mean |
|---|---|---|---|---|---|---|
| Non-sampling | LR | 0.8554 | 0.9756 | 0.0159 | 0.5043 | 0.1550 |
| | MLP | 0.8551 | 0.9823 | 0.0167 | 0.5005 | 0.1319 |
| | Deep MLP | 0.8736 | 0.9824 | 0.0358 | 0.4909 | 0.1303 |
| | RF | 0.8130 | 0.9676 | 0.1169 | 0.4578 | 0.1692 |
| | GBT | 0.7874 | 0.9472 | 0.1525 | 0.4502 | 0.2115 |
| | KNN | 0.8154 | 0.9474 | 0.1017 | 0.4755 | 0.2174 |
| | SVM | 0.8299 | 0.9356 | 0.0807 | 0.4919 | 0.2433 |
| SMOTE | LR | 0.9047 | 0.5263 | 0.0339 | 0.7199 | 0.6765 |
| | MLP | 0.8348 | 0.4211 | 0.1864 | 0.6963 | 0.6863 |
| | Deep MLP | 0.8202 | 0.4595 | 0.2067 | 0.6669 | 0.6548 |
| | RF | 0.8065 | 0.7895 | 0.1525 | 0.5290 | 0.4224 |
| | GBT | 0.7627 | 0.7368 | 0.2373 | 0.5130 | 0.4480 |
| | KNN | 0.6275 | 0.5789 | 0.4576 | 0.4818 | 0.4779 |
| | SVM | 0.7833 | 0.7368 | 0.2034 | 0.5299 | 0.4579 |
| ADASYN | LR | 0.9134 | 0.5263 | 0.0169 | 0.7284 | 0.6824 |
| | MLP | 0.9000 | 0.3684 | 0.0847 | 0.7735 | 0.7603 |
| | Deep MLP | 0.8671 | 0.3903 | 0.1356 | 0.7371 | 0.7260 |
| | RF | 0.8160 | 0.7895 | 0.1356 | 0.5375 | 0.4266 |
| | GBT | 0.8130 | 0.7368 | 0.1525 | 0.5554 | 0.4723 |
| | KNN | 0.6214 | 0.6316 | 0.4576 | 0.4554 | 0.4470 |
| | SVM | 0.7934 | 0.7368 | 0.1864 | 0.5384 | 0.4628 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Davagdorj, K.; Lee, J.S.; Pham, V.H.; Ryu, K.H. A Comparative Analysis of Machine Learning Methods for Class Imbalance in a Smoking Cessation Intervention. Appl. Sci. 2020, 10, 3307. https://doi.org/10.3390/app10093307
