Improving Classification Performance of Softmax Loss Function Based on Scalable Batch-Normalization

Abstract

Featured Application

1. Introduction

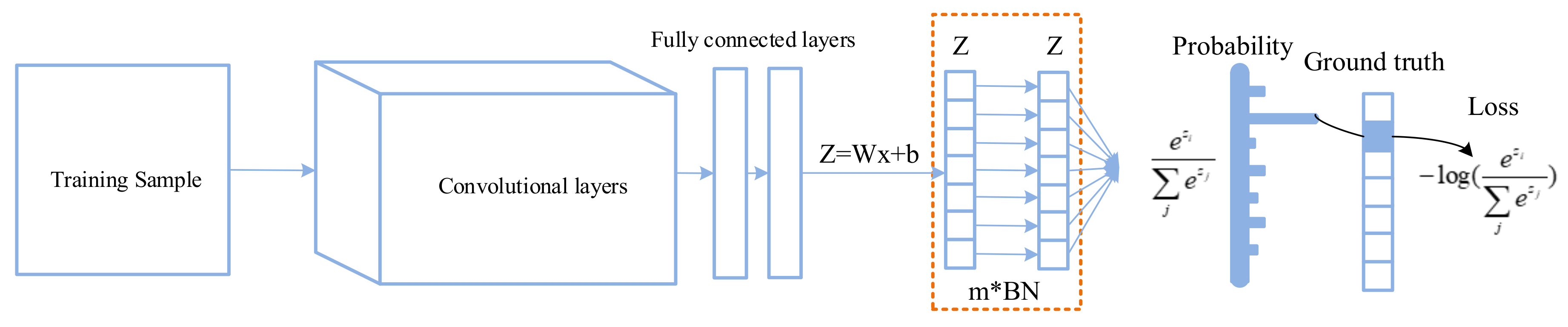

- To address the vanishing-gradient problem, we propose adding a scalable Batch Normalization (BN) layer before the Softmax computation during the training stage of a convolutional neural network, so that the network's parameters can continue to be optimized.

- We propose adding a scalable BN layer before the Softmax computation to improve the performance of the Softmax + cross-entropy loss, which can boost the final classification accuracy of the convolutional neural network.

- The experimental results show that, compared with existing loss functions, the final accuracy of the network improves across different data sets and different network structures.
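The idea in the contributions above can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: it assumes that "scalable" means the batch-normalized logits are multiplied by a fixed factor (the hypothetical parameter `scale`, with no learned shift), and it uses batch statistics only, as in training mode. The helper names `batch_norm_scaled`, `softmax`, `cross_entropy`, and `grad_wrt_logits` are illustrative.

```python
import math


def batch_norm_scaled(logits, scale=1.0, eps=1e-5):
    """Normalize each class column over the batch, then multiply by `scale`.

    Assumption: `scale` is a fixed constant standing in for the BN gain;
    no learned shift is applied.
    """
    n, k = len(logits), len(logits[0])
    out = [[0.0] * k for _ in range(n)]
    for j in range(k):
        col = [row[j] for row in logits]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n  # biased batch variance
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            out[i][j] = scale * (col[i] - mean) * inv_std
    return out


def softmax(row):
    """Numerically stable softmax of one row of logits."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, labels):
    """Mean Softmax + cross-entropy loss over the batch."""
    losses = [-math.log(softmax(row)[y]) for row, y in zip(logits, labels)]
    return sum(losses) / len(losses)


def grad_wrt_logits(row, label):
    """Gradient of the per-sample loss w.r.t. the Softmax input: p - onehot."""
    p = softmax(row)
    return [p_j - (1.0 if j == label else 0.0) for j, p_j in enumerate(p)]


# Toy batch of 4 samples and 3 classes with large-magnitude raw logits.
raw = [[50.0, 0.0, -50.0],
       [0.0, 40.0, 10.0],
       [-20.0, 5.0, 30.0],
       [10.0, -10.0, 0.0]]
labels = [0, 1, 2, 0]

normed = batch_norm_scaled(raw, scale=0.9)
print("loss on raw logits:       ", cross_entropy(raw, labels))
print("loss on scaled-BN logits: ", cross_entropy(normed, labels))
print("grad, raw sample 0:       ", grad_wrt_logits(raw[0], labels[0]))
print("grad, scaled-BN sample 0: ", grad_wrt_logits(normed[0], labels[0]))
```

In this toy batch the first raw sample is already classified with near-certainty, so its gradient with respect to the Softmax input is practically zero. After the scaled normalization the logits are bounded, and the same sample still yields a non-negligible gradient, which is the motivation stated in the first contribution.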

2. Related Work

2.1. Loss Functions in Classification Supervised Learning

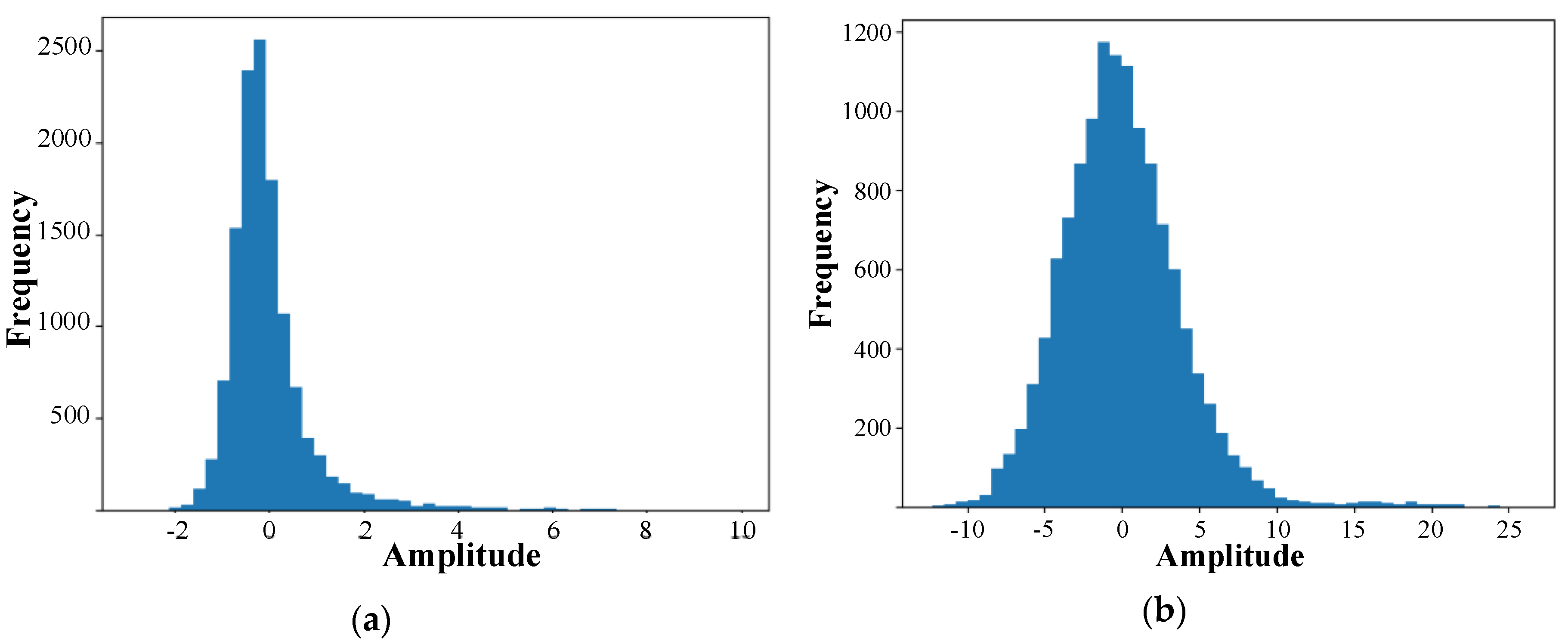

2.2. Batch Normalization

3. Inserting Scalable BN Layer to Improve the Classification Performance of Softmax Loss Function

4. Experiments and Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Wen, Y.; Zhang, K.; Li, Z.; Qiao, Y. A Discriminative Feature Learning Approach for Deep Face Recognition. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 499–515.

- Liu, W.; Wen, Y.; Yu, Z.; Yang, M. Large-margin softmax loss for convolutional neural networks. ICML 2016, 2, 7.

- Liu, W.; Wen, Y.; Yu, Z.; Li, M.; Raj, B.; Song, L. Sphereface: Deep Hypersphere Embedding for Face Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 212–220.

- Wang, F.; Cheng, J.; Liu, W.; Liu, H. Additive margin softmax for face verification. IEEE Signal Proc. Lett. 2018, 25, 926–930.

- Zhu, Q.; Zhang, P.; Wang, Z.; Ye, X. A New Loss Function for CNN Classifier Based on Predefined Evenly-Distributed Class Centroids. IEEE Access 2019, 8, 10888–10895.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; Technical Report TR-2009; University of Toronto: Toronto, ON, Canada, 2009; Volume 7.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- LeCun, Y.; Bottou, L.; Orr, G.; Muller, K. Efficient Backprop. In Neural Networks: Tricks of the Trade; Springer: Berlin/Heidelberg, Germany, 2012; pp. 9–48.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Facebook. Pytorch. Available online: https://pytorch.org/ (accessed on 3–12 January 2020).

- Wang, F.; Xiang, X.; Cheng, J.; Yuille, A.L. Normface: L2 Hypersphere Embedding for Face Verification. In Proceedings of the 25th ACM international conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1041–1049.

| CIFAR-100 Results | VGG | ResNet-18 | ResNet-50 |
|---|---|---|---|
| Cross-entropy Loss | 71.86% (±0.14%) | 75.10% (±0.34%) | 75.95% (±0.32%) |
| AM-SoftMax Loss (s = 7.5, m = 0.35) | 72.85% (±0.18%) | 74.97% (±0.08%) | 75.68% (±0.14%) |
| AM-SoftMax Loss (s = 10, m = 0.5) | 71.97% (±0.54%) | 74.40% (±0.38%) | 75.61% (±0.24%) |
| PEDCC-Loss (s = 7.5, m = 0.35) | 72.53% (±0.32%) | 74.86% (±0.51%) | 76.19% (±0.14%) |
| PEDCC-Loss (s = 10, m = 0.5) | 71.98% (±0.31%) | 73.84% (±0.48%) | 75.41% (±0.17%) |
| Our Loss (m = 0.8) | 73.57% (±0.25%) | 76.12% (±0.31%) | 76.56% (±0.13%) |
| Our Loss (m = 0.9) | 74.13% (±0.24%) | 76.10% (±0.12%) | 76.62% (±0.17%) |
| Our Loss (m = 1) | 73.24% (±0.06%) | 76.08% (±0.30%) | 76.32% (±0.51%) |

| TinyImagenet Results | VGG | ResNet-18 | ResNet-50 |
|---|---|---|---|
| Cross-entropy Loss | 54.05% (±0.19%) | 59.38% (±0.18%) | 62.35% (±0.27%) |
| AM-SoftMax Loss (s = 7.5, m = 0.35) | 54.57% (±0.28%) | 59.48% (±0.25%) | 61.83% (±0.27%) |
| AM-SoftMax Loss (s = 10, m = 0.5) | 54.62% (±0.31%) | 59.26% (±0.32%) | 62.40% (±0.06%) |
| PEDCC-Loss (s = 7.5, m = 0.35) | 54.91% (±0.17%) | 59.13% (±0.38%) | 62.38% (±0.52%) |
| PEDCC-Loss (s = 10, m = 0.5) | 54.22% (±0.21%) | 59.04% (±0.08%) | 62.42% (±0.24%) |
| Our Loss (m = 0.8) | 55.02% (±0.09%) | 60.34% (±0.32%) | 63.72% (±0.08%) |
| Our Loss (m = 0.9) | 55.13% (±0.25%) | 60.12% (±0.07%) | 63.09% (±0.15%) |
| Our Loss (m = 1) | 55.86% (±0.26%) | 60.61% (±0.23%) | 63.27% (±0.30%) |

| Facescrub Results | VGG | ResNet-18 | ResNet-50 |
|---|---|---|---|
| Cross-entropy Loss | 93.54% (±0.30%) | 89.65% (±0.13%) | 90.01% (±0.31%) |
| AM-SoftMax Loss (s = 7.5, m = 0.35) | 94.12% (±0.08%) | 91.24% (±0.24%) | 90.84% (±0.35%) |
| AM-SoftMax Loss (s = 10, m = 0.5) | 93.95% (±0.19%) | 91.01% (±0.29%) | 91.67% (±0.50%) |
| PEDCC-Loss (s = 7.5, m = 0.35) | 93.68% (±0.37%) | 90.50% (±0.25%) | 90.47% (±0.34%) |
| PEDCC-Loss (s = 10, m = 0.5) | 93.82% (±0.27%) | 90.27% (±0.30%) | 90.98% (±0.36%) |
| Our Loss (m = 0.8) | 94.73% (±0.16%) | 92.32% (±0.16%) | 92.28% (±0.46%) |
| Our Loss (m = 0.9) | 94.36% (±0.08%) | 92.47% (±0.09%) | 92.00% (±0.61%) |
| Our Loss (m = 1) | 94.44% (±0.37%) | 92.36% (±0.30%) | 92.38% (±0.14%) |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, Q.; He, Z.; Zhang, T.; Cui, W. Improving Classification Performance of Softmax Loss Function Based on Scalable Batch-Normalization. Appl. Sci. 2020, 10, 2950. https://doi.org/10.3390/app10082950
