Instance Hard Triplet Loss for In-video Person Re-identification

Abstract

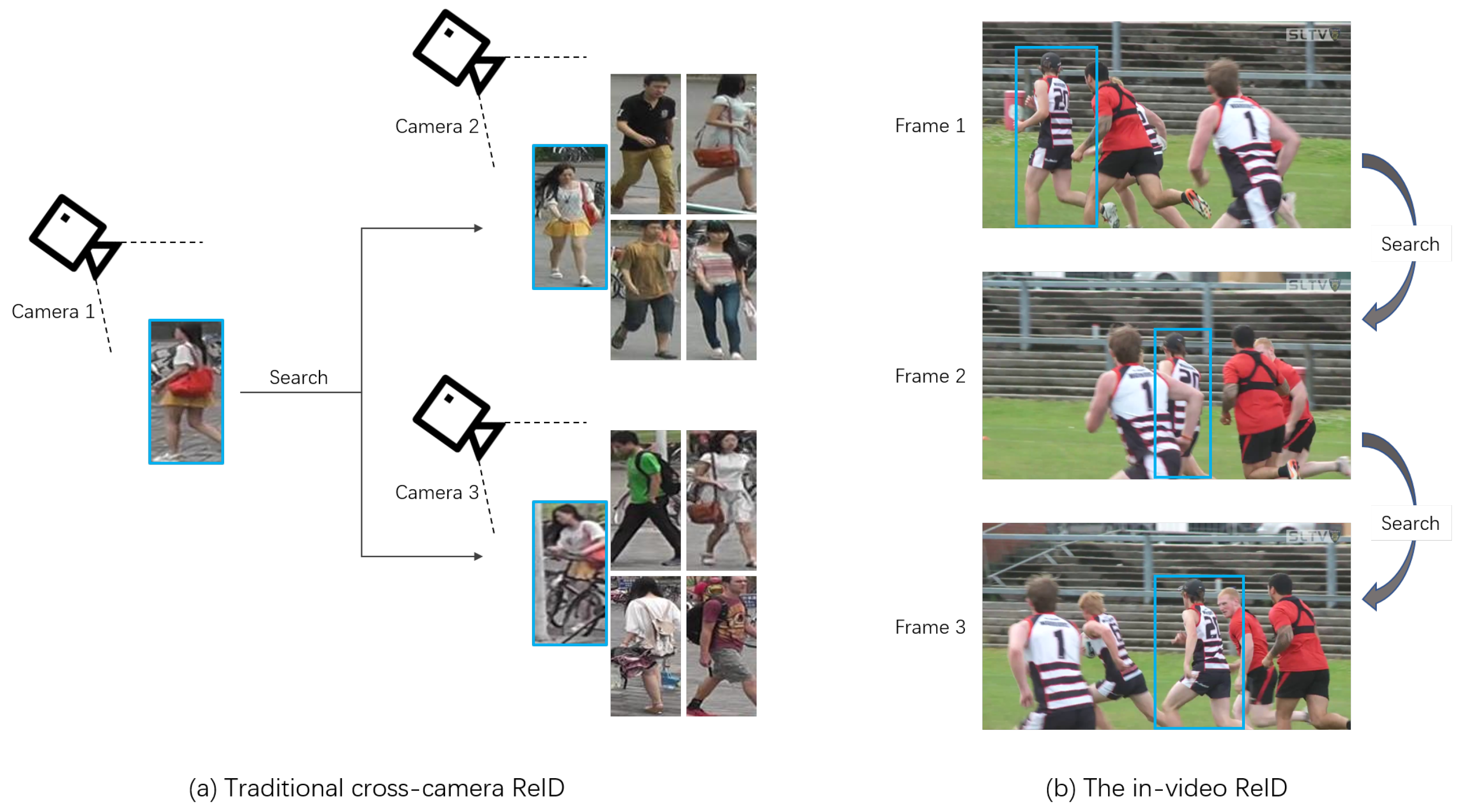

1. Introduction

- (1) Single-camera view: Cross-camera ReID must identify persons appearing in multiple cameras, so the network is forced to extract features that are consistent across all camera views and to drop camera-specific features, which limits the features available. In-video ReID, in contrast, can fully utilize all features.

- (2) Short-term: Cross-camera ReID tries to construct a long-term association, which inevitably discards transient clues. In contrast, we will demonstrate that those transient clues are critical for in-video ReID.

- (3) Background: As mentioned above, transient clues such as the background act as distractors in cross-camera ReID and are often discarded. For instance, MGCAM [9] learns to predict a foreground mask of the human body area to suppress background distraction. For in-video ReID, however, the background of a person usually does not change dramatically within a few subsequent frames and can help to distinguish people with a similar appearance.

- (4)

- (1) A new large-scale video-based in-video ReID dataset with full images available. To the best of our knowledge, no such in-video dataset has been released before. A ReID-Head network is also designed to extract features for the in-video ReID task efficiently;

- (2) A new loss function called Instance Hard Triplet (IHT) loss, which is suitable for both the cross-camera ReID task and the in-video ReID task. Compared with the widely used Batch Hard Triplet (BHT) loss [14] for cross-camera ReID, it achieves competitive performance and saves more than 30% of the training time (see Section 4.4, Table 2);

- (3) Labeling ReID data is expensive and time-consuming; thus, we also propose an unsupervised method that automatically associates persons with the same identity in the same video through reciprocal matching, so that an in-video ReID model can be trained on the associated data.
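
The reciprocal matching idea in the last contribution can be sketched as follows. The procedure itself is not spelled out at this point in the text, so the sketch assumes that "reciprocal" means mutual nearest neighbours in feature space between the detections of two frames. The function name `reciprocal_matches` and the toy 2-D features are hypothetical, and this is not presented as the authors' method.

```python
import math

def reciprocal_matches(feats_a, feats_b):
    """Return (i, j) pairs where feats_a[i] and feats_b[j] are each other's
    nearest neighbour under Euclidean distance (assumed matching rule)."""
    if not feats_a or not feats_b:
        return []
    # Nearest detection in frame B for every detection in frame A.
    nearest_b = [
        min(range(len(feats_b)), key=lambda j: math.dist(fa, feats_b[j]))
        for fa in feats_a
    ]
    # Nearest detection in frame A for every detection in frame B.
    nearest_a = [
        min(range(len(feats_a)), key=lambda i: math.dist(fb, feats_a[i]))
        for fb in feats_b
    ]
    # Keep only pairs that agree in both directions.
    return [(i, j) for i, j in enumerate(nearest_b) if nearest_a[j] == i]

# Two persons in frame A, three detections in frame B (toy features).
frame_a = [[0.0, 0.0], [10.0, 0.0]]
frame_b = [[9.5, 0.0], [0.4, 0.0], [4.0, 0.0]]
print(reciprocal_matches(frame_a, frame_b))  # [(0, 1), (1, 0)]
```

The third detection in frame B is left unmatched because its nearest neighbour in frame A already has a closer partner. Discarding such one-directional matches is what keeps automatically generated labels conservative.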

2. Related Works

2.1. Representation Learning Based

2.2. Metric Learning Based

2.3. ReID for Tracking

3. Our Approach

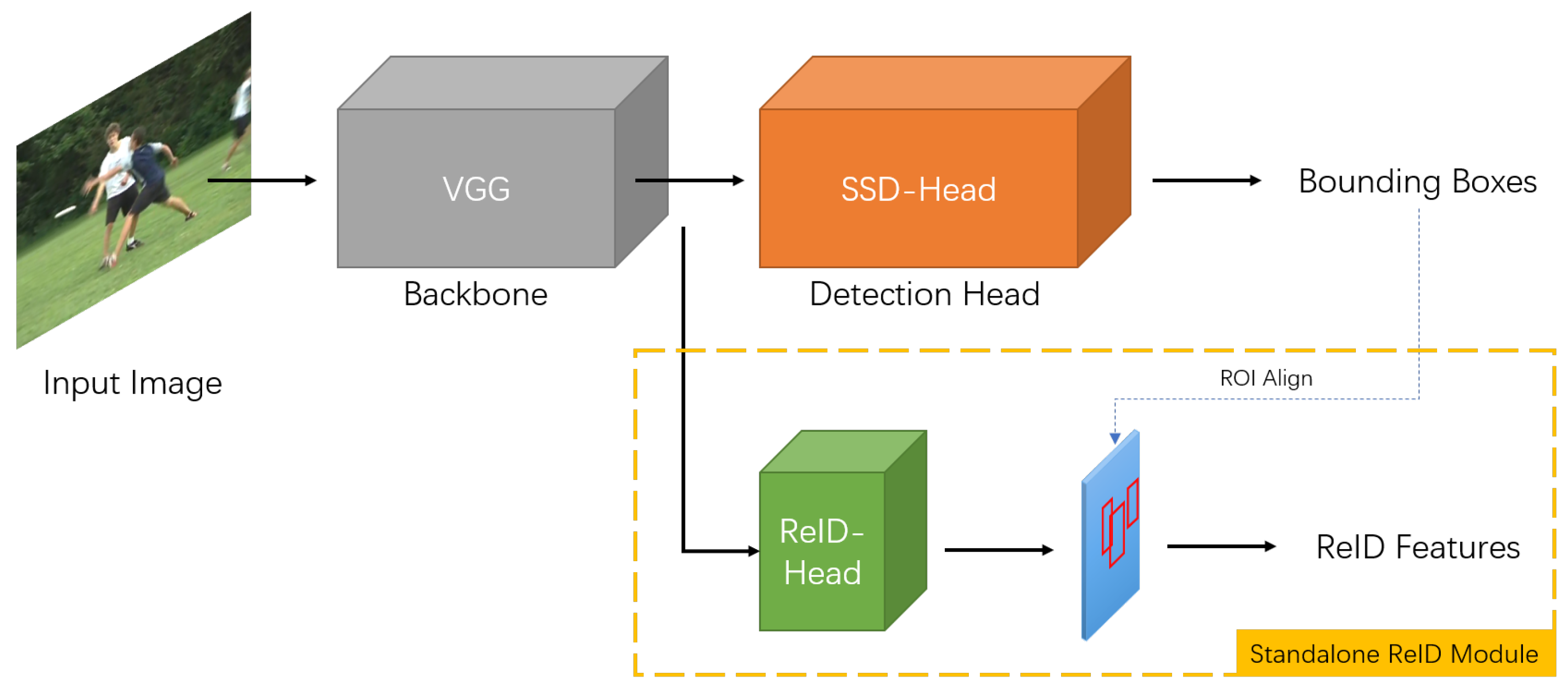

3.1. ReID-Head Network

- (1) It can jointly get detection and ReID results in a single network, and the network can be trained end-to-end.

- (2)

- (3)

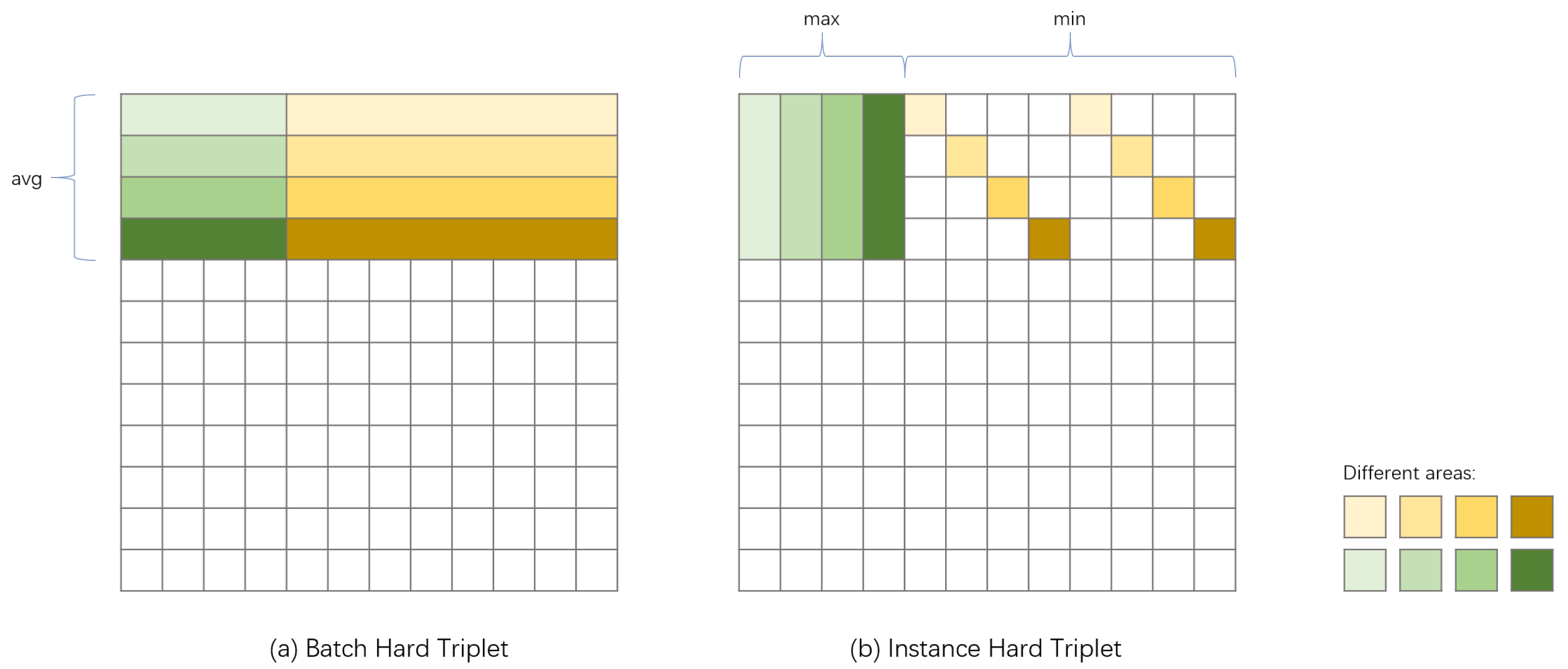

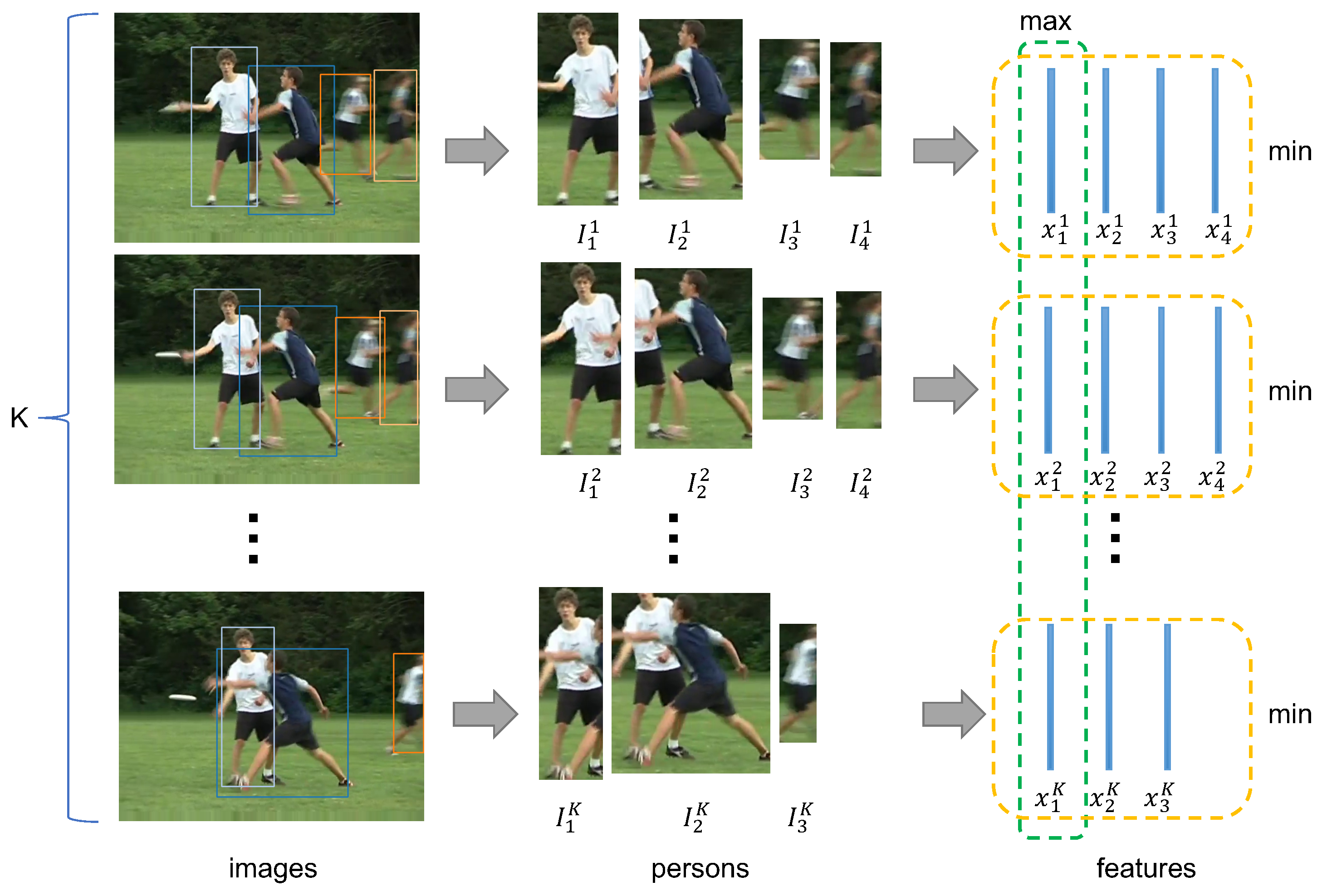

3.2. Instance Hard Triplet Loss

- (1) Imbalance: As shown in Equation (2) and Figure 3a, positive and negative samples are imbalanced: for each anchor, only K positive pairs are compared, against a much larger number of negative pairs, resulting in harder negative sample mining.

- (2) Computation: BHT incurs a large computational overhead. Every sample in a batch must be compared with every other sample to generate the triplets, so the computational cost grows quadratically with the batch size.
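
The two issues above can be made concrete with a small sketch of Batch Hard mining as described in [14]. This is an illustrative pure-Python version rather than the authors' implementation. The function names `batch_hard_triplet_loss` and `pair_counts`, the margin of 0.3, and the P × K batch layout (P identities with K samples each) are assumptions made for the example.

```python
import math

def batch_hard_triplet_loss(features, labels, margin=0.3):
    """Mean Batch Hard Triplet loss over all anchors in one batch."""
    n = len(features)
    # All pairwise Euclidean distances: n * n comparisons (the quadratic cost).
    dist = [[math.dist(features[i], features[j]) for j in range(n)]
            for i in range(n)]
    total = 0.0
    for a in range(n):
        # Hardest positive: farthest sample with the anchor's identity
        # (the anchor itself is included, at distance 0).
        hardest_pos = max(dist[a][j] for j in range(n) if labels[j] == labels[a])
        # Hardest negative: closest sample with a different identity.
        negatives = [dist[a][j] for j in range(n) if labels[j] != labels[a]]
        hardest_neg = min(negatives) if negatives else math.inf
        total += max(0.0, margin + hardest_pos - hardest_neg)
    return total / n

def pair_counts(labels):
    """Per-anchor (positive, negative) candidate counts, anchor included."""
    return [(sum(l == a for l in labels), sum(l != a for l in labels))
            for a in labels]

P, K = 4, 3  # assumed batch layout: 4 identities, 3 samples each
labels = [p for p in range(P) for _ in range(K)]
print(pair_counts(labels)[0])  # (3, 9)

# Toy 1-D features where the identities overlap, so the loss is non-zero.
loss = batch_hard_triplet_loss([[0.0], [5.0], [1.0], [6.0]], [0, 0, 1, 1])
```

With P = 4 and K = 3, each anchor has 3 same-identity candidates (itself included) but 9 different-identity candidates, and the distance table has 12 × 12 entries.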

- (1) more balanced between positive and negative pairs;

- (2) faster, with a lower computational complexity than BHT;

- (3) competitive in performance, as shown in the experimental part of this paper;

- (4) more general: it applies to both the cross-camera and in-video ReID problems, to both image-based and video-based ReID, and to training samples containing an indefinite number of persons.

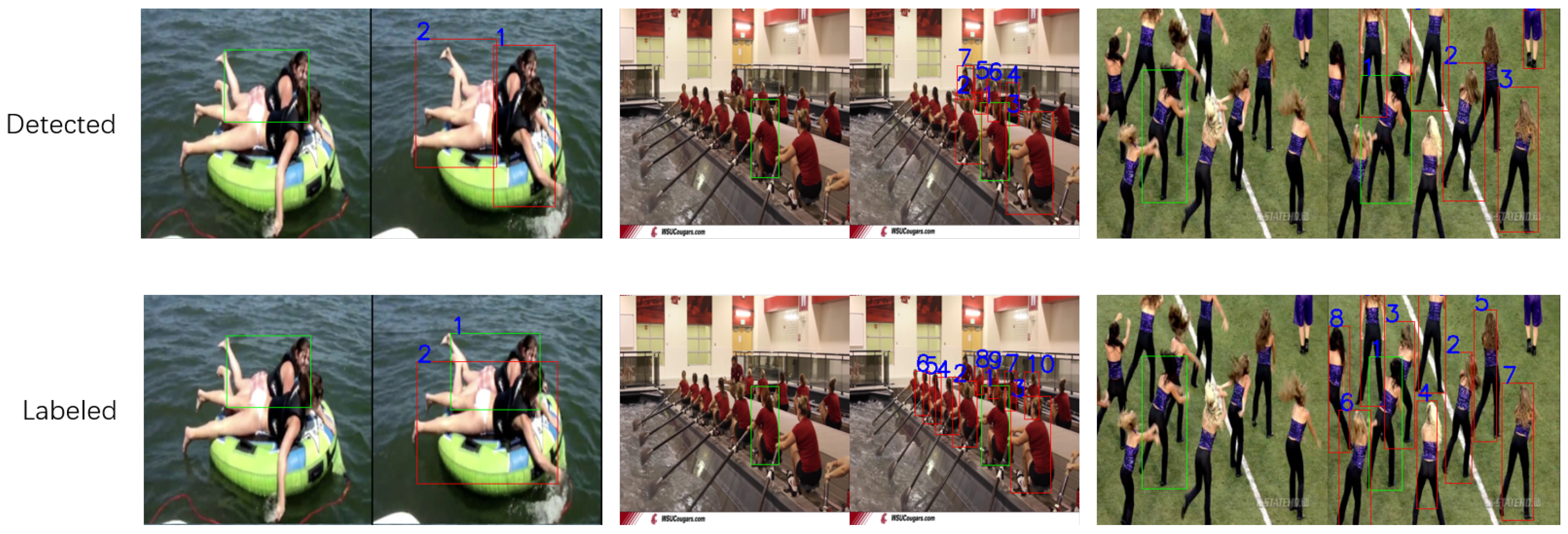

3.3. Unsupervised In-video ReID

4. Experiments

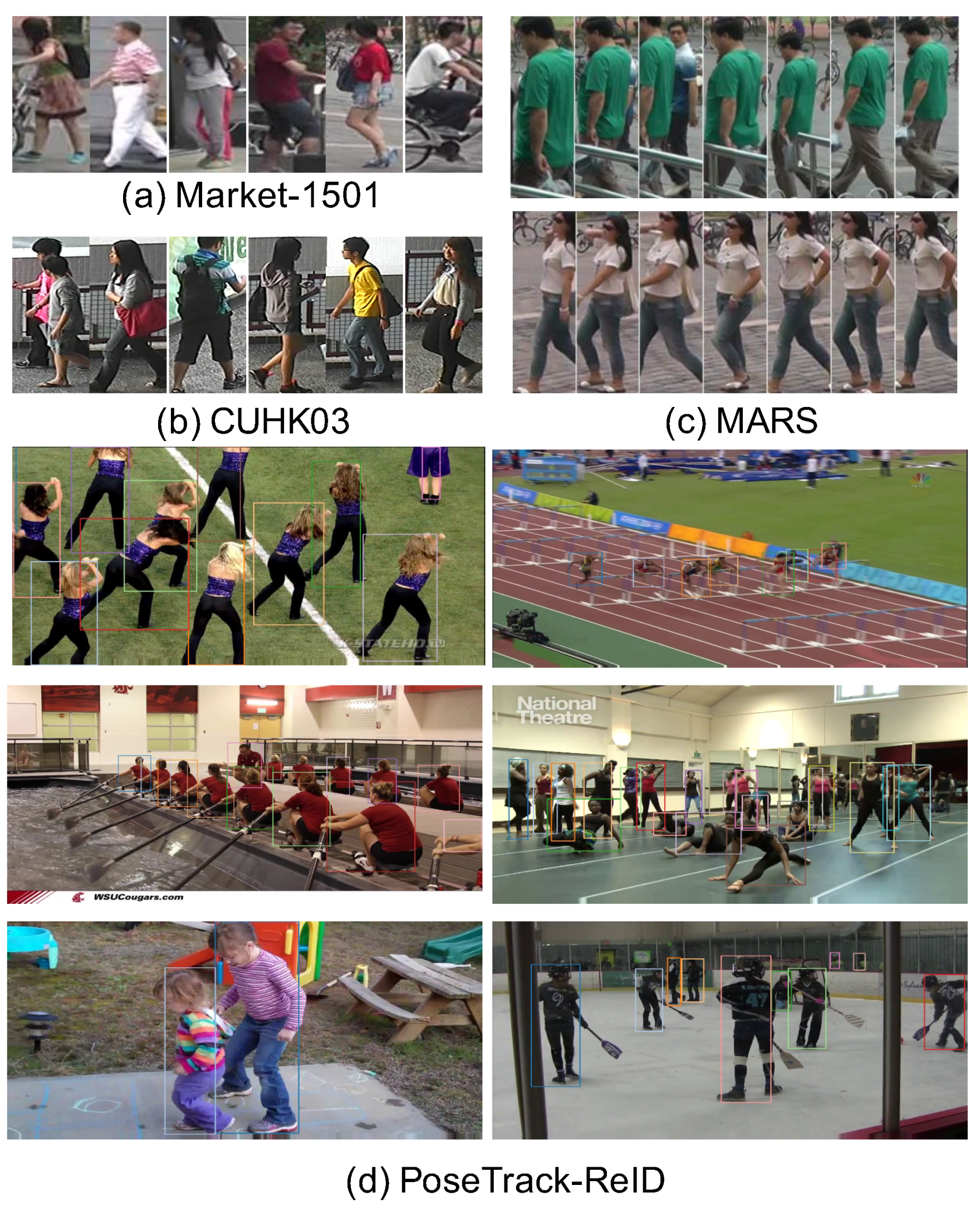

4.1. Datasets

4.2. Evaluation Protocol

4.3. Implementation Details

4.4. Cross-camera ReID Results

4.5. In-video ReID Results

- (1) Even a state-of-the-art model trained on a popular large-scale cross-camera ReID dataset performed poorly on the PoseTrack-ReID dataset, because in-video ReID is a different problem from cross-camera ReID and requires a model trained specifically for it. This is why we proposed a new dataset, a new network structure, and a new loss function for training on the in-video problem;

- (2) Unlike ReID-Head, the performance of PCB did not decrease with a larger G, because cross-camera ReID is a long-term problem that discards short-term clues, whereas in-video ReID is a short-term problem;

- (3) Our lightweight ReID-Head was much faster than the PCB model. Unlike the traditional two-stage approach with independent detection and ReID models, ReID-Head fully reuses features and could therefore extract multi-person ReID features in real time, with almost no increase in time as the number of persons in a video increased. When the number of bounding boxes exceeded the maximum batch size of the GPU, traditional ReID models would need multiple forward passes, costing even more time.
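
The last point can be illustrated with a simple count of forward passes. The sketch below assumes a crop-based ReID model that processes at most `max_batch_size` boxes per forward pass, and a shared-feature head that needs a single pass per image regardless of the number of persons. Both function names and the batch size of 32 are hypothetical.

```python
import math

def crop_model_passes(num_boxes, max_batch_size):
    """Forward passes a crop-based ReID model needs for one image."""
    if max_batch_size <= 0:
        raise ValueError("max_batch_size must be positive")
    return math.ceil(num_boxes / max_batch_size)

def shared_head_passes(num_boxes):
    """A head that reuses the detector's feature map runs once per image."""
    return 1

for n in (5, 32, 33, 100):
    print(n, crop_model_passes(n, 32), shared_head_passes(n))
```

Under these assumptions, the crop-based model needs 1, 1, 2 and 4 passes for 5, 32, 33 and 100 boxes, while the shared head always needs one.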

4.6. Unsupervised In-video ReID Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable Person Re-Identification: A Benchmark. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond Part Models: Person Retrieval with Refined Part Pooling (and A Strong Convolutional Baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Ristani, E.; Tomasi, C. Features for Multi-Target Multi-Camera Tracking and Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6036–6046.

- Zhang, Z.; Wu, J.; Zhang, X.; Zhang, C. Multi-Target, Multi-Camera Tracking by Hierarchical Clustering: Recent Progress on DukeMTMC Project. arXiv 2017, arXiv:1712.09531.

- Ning, G.; Huang, H. LightTrack: A Generic Framework for Online Top-down Human Pose Tracking. arXiv 2019, arXiv:1905.02822.

- Li, W.; Zhao, R.; Xiao, T.; Wang, X. DeepReID: Deep Filter Pairing Neural Network for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014.

- Zheng, Z.; Zheng, L.; Yang, Y. Unlabeled Samples Generated by Gan Improve the Person Re-Identification Baseline in Vitro. arXiv 2017, arXiv:1701.07717.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance Measures and a Data Set for Multi-Target, Multi-Camera Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Mask-Guided Contrastive Attention Model for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Ge, Y.; Li, Z.; Zhao, H.; Yin, G.; Yi, S.; Wang, X.; Li, H. FD-GAN: Pose-Guided Feature Distilling GAN for Robust Person Re-Identification. In Proceedings of the Advances in Neural Information Processing Systems 31 (NIPS 2018), Montréal, QC, Canada, 3–8 December 2018.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems 27 (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Xiao, B.; Wu, H.; Wei, Y. Simple Baselines for Human Pose Estimation and Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Raaj, Y.; Idrees, H.; Hidalgo, G.; Sheikh, Y. Efficient Online Multi-Person 2D Pose Tracking with Recurrent Spatio-Temporal Affinity Fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

- Geng, M.; Wang, Y.; Xiang, T.; Tian, Y. Deep transfer learning for person re-identification. arXiv 2016, arXiv:1611.05244.

- Matsukawa, T.; Suzuki, E. Person re-identification using CNN features learned from combination of attributes. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2428–2433.

- Lin, Y.; Zheng, L.; Zheng, Z.; Wu, Y.; Yang, Y. Improving person re-identification by attribute and identity learning. arXiv 2017, arXiv:1703.07220.

- Fan, X.; Luo, H.; Zhang, X.; He, L.; Zhang, C.; Jiang, W. SCPNet: Spatial-Channel Parallelism Network for Joint Holistic and Partial Person Re-Identification. In Proceedings of the Asian Conference on Computer Vision, ACCV, Singapore, 1–5 November 2018.

- Zheng, L.; Zhang, H.; Sun, S.; Chandraker, M.; Yang, Y.; Tian, Q. Person Re-Identification in the Wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Chen, W.; Chen, X.; Zhang, J.; Huang, K. Beyond triplet loss: A deep quadruplet network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 2.

- Cheng, D.; Gong, Y.; Zhou, S.; Wang, J.; Zheng, N. Person re-identification by multi-channel parts-based cnn with improved triplet loss function. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1335–1344.

- Liu, H.; Feng, J.; Qi, M.; Jiang, J.; Yan, S. End-to-end comparative attention networks for person re-identification. IEEE Trans. Image Process. 2017, 26, 3492–3506.

- Varior, R.R.; Haloi, M.; Wang, G. Gated siamese convolutional neural network architecture for human re-identification. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 791–808.

- Bai, X.; Yang, M.; Huang, T.; Dou, Z.; Yu, R.; Xu, Y. Deep-Person: Learning Discriminative Deep Features for Person Re-Identification. arXiv 2017, arXiv:1711.10658.

- Zhang, X.; Luo, H.; Fan, X.; Xiang, W.; Sun, Y.; Xiao, Q.; Jiang, W.; Zhang, C.; Sun, J. Alignedreid: Surpassing human-level performance in person re-identification. arXiv 2017, arXiv:1711.08184.

- Zhao, H.; Tian, M.; Sun, S.; Shao, J.; Yan, J.; Yi, S.; Wang, X.; Tang, X. Spindle Net: Person Re-Identification With Human Body Region Guided Feature Decomposition and Fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Wei, L.; Zhang, S.; Yao, H.; Gao, W.; Tian, Q. Glad: Global-local-alignment descriptor for pedestrian retrieval. In Proceedings of the 2017 ACM on Multimedia Conference, Mountain View, CA, USA, 23–27 October 2017; ACM: New York, NY, USA, 2017; pp. 420–428.

- Li, J.; Wang, J.; Tian, Q.; Gao, W.; Zhang, S. Global-Local Temporal Representations for Video Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–3 November 2019.

- Zhao, Y.; Shen, X.; Jin, Z.; Lu, H.; Hua, X.S. Attribute-Driven Feature Disentangling and Temporal Aggregation for Video Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Zheng, L.; Yang, Y.; Hauptmann, A.G. Person Re-Identification: Past, Present and Future. arXiv 2016, arXiv:1610.02984.

- Ding, S.; Lin, L.; Wang, G.; Chao, H. Deep feature learning with relative distance comparison for person re-identification. Pattern Recognit. 2015, 48, 2993–3003.

- Ahmed, E.; Jones, M.; Marks, T.K. An improved deep learning architecture for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3908–3916.

- Xiao, Q.; Luo, H.; Zhang, C. Margin Sample Mining Loss: A Deep Learning Based Method for Person Re-identification. arXiv 2017, arXiv:1710.00478.

- Yu, R.; Dou, Z.; Bai, S.; Zhang, Z.; Xu, Y.; Bai, X. Hard-Aware Point-to-Set Deep Metric for Person Re-Identification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Ye, M.; Li, J.; Ma, A.J.; Zheng, L.; Yuen, P.C. Dynamic Graph Co-Matching for Unsupervised Video-Based Person Re-Identification. IEEE Trans. Image Process. 2019, 28, 2976–2990.

- Zajdel, W.; Zivkovic, Z.; Krose, B. Keeping track of humans: Have I seen this person before? In Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 2081–2086.

- Zhou, S.; Ke, M.; Qiu, J.; Wang, J. A Survey of Multi-object Video Tracking Algorithms. In Proceedings of the International Conference on Applications and Techniques in Cyber Security and Intelligence, Shanghai, China, 11–13 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 351–369.

- Cho, Y.J.; Kim, S.A.; Park, J.H.; Lee, K.; Yoon, K.J. Joint Person Re-identification and Camera Network Topology Inference in Multiple Cameras. arXiv 2017, arXiv:1710.00983.

- Jiang, N.; Bai, S.; Xu, Y.; Xing, C.; Zhou, Z.; Wu, W. Online inter-camera trajectory association exploiting person re-identification and camera topology. In Proceedings of the 2018 ACM Multimedia Conference on Multimedia Conference, Seoul, Korea, 22–26 October 2018; ACM: New York, NY, USA, 2018; pp. 1457–1465.

- Xiao, T.; Li, S.; Wang, B.; Lin, L.; Wang, X. Joint Detection and Identification Feature Learning for Person Search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Xiao, T.; Li, S.; Wang, B.; Lin, L.; Wang, X. End-to-End Deep Learning for Person Search. arXiv 2016, arXiv:1604.01850.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision ECCV, Amsterdam, The Netherlands, 8–16 October 2016.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Xie, S.; Girshick, R.; Dollar, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Wei, S.E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional Pose Machines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Weinberger, K.Q.; Saul, L.K. Distance Metric Learning for Large Margin Nearest Neighbor Classification. J. Mach. Learn. Res. (JMLR) 2009, 10, 207–244.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A Unified Embedding for Face Recognition and Clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Zhong, Z.; Zheng, L.; Cao, D.; Li, S. Re-Ranking Person Re-Identification with k-Reciprocal Encoding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Andriluka, M.; Iqbal, U.; Insafutdinov, E.; Pishchulin, L.; Milan, A.; Gall, J.; Schiele, B. PoseTrack: A Benchmark for Human Pose Estimation and Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Hirzer, M.; Beleznai, C.; Roth, P.M.; Bischof, H. Person re-identification by descriptive and discriminative classification. In Proceedings of the Scandinavian Conference on Image Analysis, Ystad, Sweden, 23–25 May 2011; pp. 91–102.

- Wang, T.; Gong, S.; Zhu, X.; Wang, S. Person Re-Identification by Video Ranking. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014.

- Zheng, L.; Bie, Z.; Sun, Y.; Wang, J.; Su, C.; Wang, S.; Tian, Q. Mars: A Video Benchmark for Large-Scale Person Re-Identification. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (Voc) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Zheng, L.; Huang, Y.; Lu, H.; Yang, Y. Pose Invariant Embedding for Deep Person Re-Identification. IEEE Trans. Image Process. 2019, 28, 4500–4509.

- Sun, Y.; Zheng, L.; Deng, W.; Wang, S. SVDNet for Pedestrian Retrieval. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Zheng, Z.; Zheng, L.; Yang, Y. Pedestrian Alignment Network for Large-Scale Person Re-Identification. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 3037–3045.

- Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random Erasing Data Augmentation. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), San Francisco, CA, USA, 4–9 February 2017.

- Zhong, Z.; Zheng, L.; Luo, Z.; Li, S.; Yang, Y. Invariance Matters: Exemplar Memory for Domain Adaptive Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Zheng, Z.; Yang, X.; Yu, Z.; Zheng, L.; Yang, Y.; Kautz, J. Joint Discriminative and Generative Learning for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Yang, W.; Huang, H.; Zhang, Z.; Chen, X.; Huang, K.; Zhang, S. Towards Rich Feature Discovery With Class Activation Maps Augmentation for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

| Name | #identities | #bboxes | Video? | Full Image? |
|---|---|---|---|---|
| CUHK03 [6] | 1467 | 13,164 | | |
| Market-1501 [1] | 1501 | 32,217 | | |
| DukeMTMC-reID [7] | 1812 | 36,441 | ✓ | |
| PRID2011 [60] | 934 | 24,541 | ✓ | |
| iLIDS-VID [61] | 300 | 42,495 | ✓ | |
| MARS [62] | 1261 | 1,191,003 | ✓ | |
| PoseTrack-ReID | 3088 | 84,443 | ✓ | ✓ |

| Method | Market-1501 | DukeMTMC-reID | CUHK03 | Time |
|---|---|---|---|---|
| BHT [14] | 85.9 | 78.1 | 58.6 | 0.81 s |
| IHT (ours) | 87.0 | 80.3 | 59.2 | 0.53 s |
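
As a quick check on the "more than 30%" time saving quoted for IHT, the relative reduction implied by the Time column above can be worked out directly, assuming both times were measured under the same settings:

$$
\frac{0.81 - 0.53}{0.81} = \frac{0.28}{0.81} \approx 0.346
$$

That is, IHT takes roughly 34.6% less time than BHT in this comparison.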

| K | G = 1 | G = 5 | G = 10 | G = 15 |
|---|---|---|---|---|
| 2 | 39.1 | 37.9 | 36.3 | 34.9 |
| 4 | 39.9 | 39.2 | 37.8 | 36.4 |
| 6 | 40.0 | 39.2 | 37.8 | 35.9 |
| 8 | 40.0 | 39.0 | 37.9 | 36.3 |

| Bounding Boxes | G = 1 | G = 5 | G = 10 | G = 15 |
|---|---|---|---|---|
| detected | 40.0 | 39.2 | 37.8 | 35.9 |
| labeled | 99.5 | 93.6 | 87.1 | 81.1 |

| Method | G = 1 | G = 5 | G = 10 | G = 15 | t/bbox | t/img |
|---|---|---|---|---|---|---|
| PCB [2] | 20.2 | 20.6 | 20.9 | 20.8 | 10.5 ms | 14.7 ms |
| ReID-Head | 40.0 | 39.2 | 37.8 | 35.9 | 1.7 ms | 1.7 ms |

| Training Data | Test Bbox | G = 1 | G = 5 | G = 10 | G = 15 |
|---|---|---|---|---|---|
| Human-labeled | detected | 40.0 | 39.2 | 37.8 | 35.9 |
| RIA-generated | detected | 40.2 | 39.2 | 37.9 | 36.4 |
| Human-labeled | labeled | 99.5 | 93.6 | 87.1 | 81.1 |
| RIA-generated | labeled | 99.4 | 92.6 | 84.8 | 76.9 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fan, X.; Jiang, W.; Luo, H.; Mao, W.; Yu, H. Instance Hard Triplet Loss for In-video Person Re-identification. Appl. Sci. 2020, 10, 2198. https://doi.org/10.3390/app10062198