Abstract

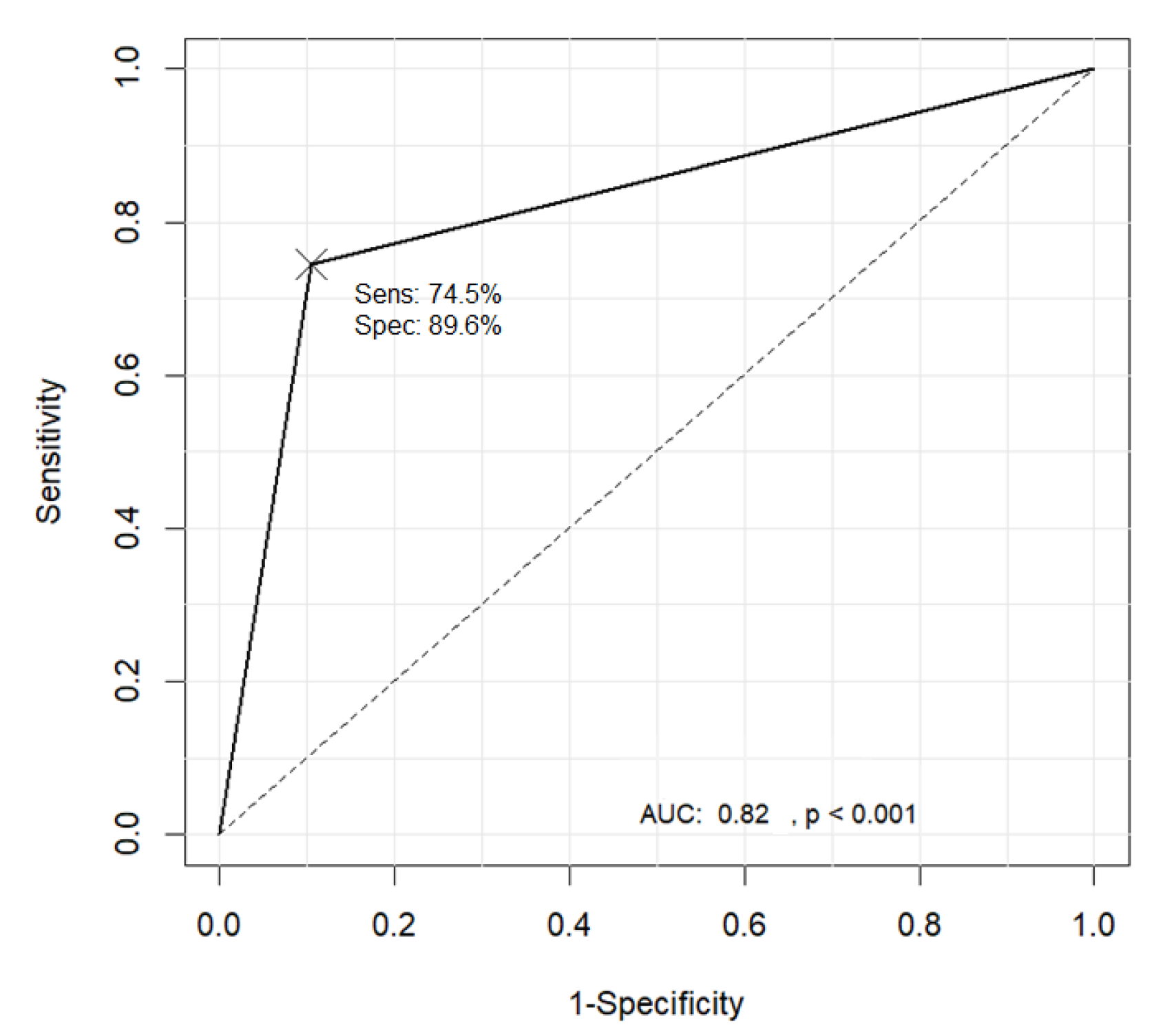

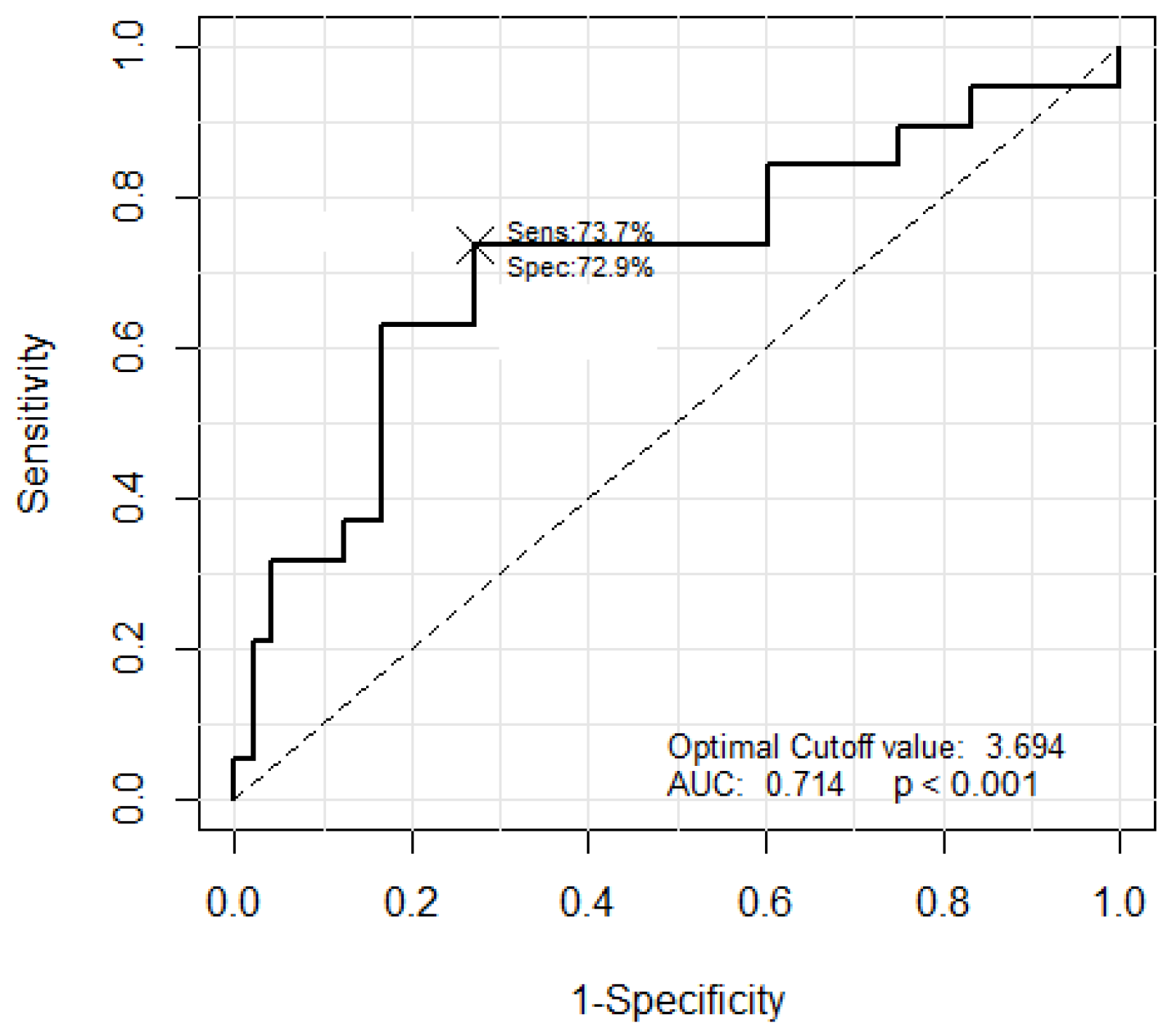

Predicting the depth of invasion of superficial esophageal squamous cell carcinomas (SESCCs) is important when selecting treatment modalities such as endoscopic or surgical resection. Recently, the Japan Esophageal Society (JES) proposed a new simplified classification for magnifying endoscopy findings of SESCCs to predict the depth of tumor invasion based on intrapapillary capillary loops, with the SESCC microvessels classified into the B1, B2, and B3 types. In this study, a four-step classification method for SESCCs is proposed. First, Niblack’s method was applied to endoscopy images to select a candidate region of microvessels. Second, the background regions were delineated from the vessel area using the fast Fourier transform and the adaptive resonance theory 2 algorithm. Third, the morphological characteristics of the vessels were extracted and, based on the extracted features, the support vector machine algorithm was employed to classify the microvessels into the B1 and non-B1 types. Finally, following the automatic measurement of the microvessel caliber using the proposed method, the non-B1 types were sub-classified into the B2 and B3 types via comparisons with the caliber of the surrounding microvessels. In the experiments, 114 magnifying endoscopy images (47 B1-type, 48 B2-type, and 19 B3-type images) were used to classify the characteristics of SESCCs. The accuracy, sensitivity, and specificity of the classification into the B1 and non-B1 types were 83.3%, 74.5%, and 89.6%, respectively, while those for the classification of the B2 and B3 types within the non-B1 types were 73.1%, 73.7%, and 72.9%, respectively. The proposed machine-learning-based computer-aided diagnostic system could obtain objective data by analyzing the pattern and caliber of the microvessels with acceptable performance. Further studies are necessary to carefully validate the clinical utility of the proposed system.

1. Introduction

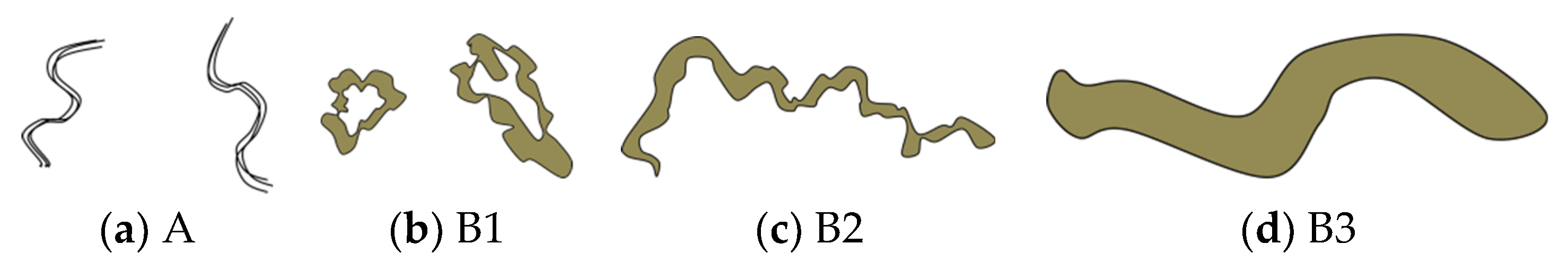

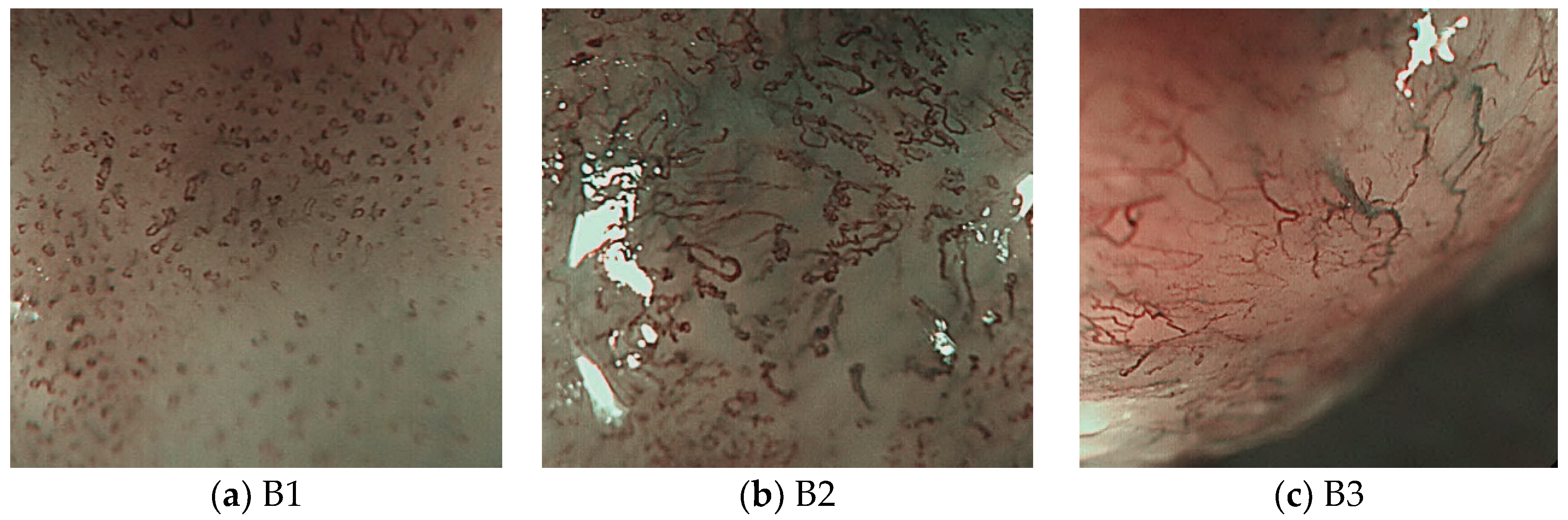

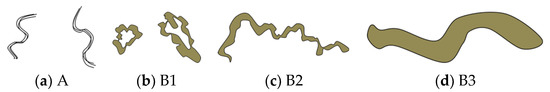

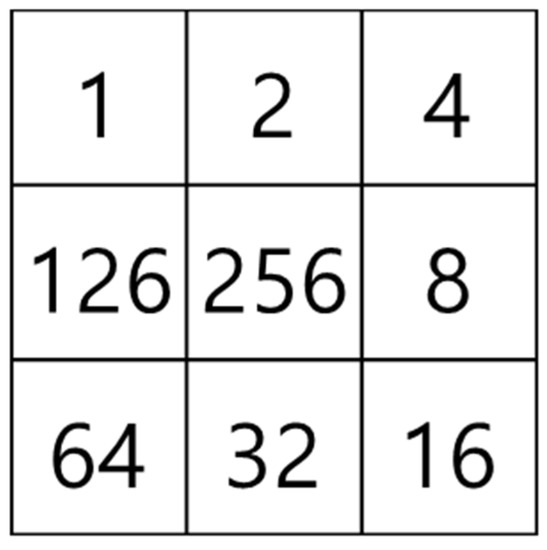

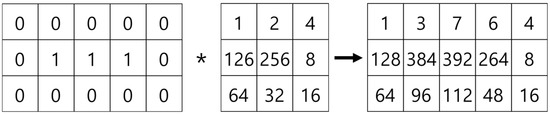

Initial changes in superficial esophageal squamous cell carcinomas (SESCCs) begin from the morphological alteration of intrapapillary capillary loops (IPCLs) in the esophageal mucosa. In the normal esophageal mucosa, IPCLs with regular morphologies are observed in magnifying endoscopy; however, the IPCLs are dilated with irregular morphologies and their calibers change at the esophageal cancer site. These changes in IPCLs are known to differ depending on the invasion depth of esophageal cancer [1]. In clinical practice, the prediction of the invasion depth of SESCCs is a critical factor to determine the appropriate treatment modality such as endoscopic resection or surgery. Recently, the Japan Esophageal Society (JES) proposed a simplified classification method based on the IPCL patterns for estimating the invasion depth of SESCCs [2,3]. The classification criteria for the microvessels in SESCCs are based on the IPCL morphology (Figure 1). The A type indicates normal IPCLs, while the B-types indicate abnormal IPCLs which are suggestive of esophageal cancer. The B1 type consists of abnormal microvessels with a conserved loop-like formation; the B2 type consists of stretched and markedly elongated transformations without a loop-like formation; and the B3 type consists of highly dilated, irregular vessels with a caliber that appears more than three times greater than that of the B2-type vessels. The accuracy of the JES classification ranged from 78.6%–90.5% in recent research studies [2,3].

Figure 1.

Schema of intrapapillary capillary loops (IPCLs) according to the Japan Esophageal Society (JES) magnifying endoscopy classification in superficial esophageal squamous cell carcinomas. Adapted from Oyama et al. [2].

However, determining the morphological changes of IPCLs with the JES classification has non-negligible interobserver variability, as such decisions depend on the knowledge and experience of the endoscopist. To exclude this subjectivity, automatic software that analyzes the features of IPCLs to predict the invasive depth of SESCCs is required. Hence, if the index of the feature patterns automatically extracted by such software matches with the proven clinical results, more accurate prediction of the invasive depth of SESCCs can be provided to the endoscopists.

In this study, a method is proposed for classifying and analyzing SESCCs by applying image processing and machine learning techniques to the IPCL patterns, which are related to the invasion depth of the tumor, in the magnifying endoscopy images of patients with SESCCs. To achieve this goal, two machine learning algorithms were applied: the adaptive resonance theory 2 (ART2) algorithm to isolate the vessel area from the image, and the support vector machine (SVM) to classify the IPCL patterns in the magnifying endoscopy images according to the JES classification. An algorithm for blood vessel thickness measurement is also proposed. The morphological features of IPCLs extracted from the magnifying endoscopy images of SESCCs are first classified into the B1 and non-B1 types using the learning patterns of the SVM algorithm, and the non-B1 type is subsequently classified into the B2 and B3 types based on the thicknesses of the IPCLs.

The overall structure of this paper is organized as follows. Section 2 describes related studies such as the SVM and conventional vessel thickness measurement algorithms employed in this study. Section 3 describes the proposed method. Section 4 describes the experiments and analysis results of the magnifying endoscopy images of SESCCs. Finally, Section 5 presents the discussion of this study and some future research directions.

2. Related Works

2.1. Esophageal Cancer Classification Method

The classification method for predicting the invasion depth of SESCCs is a critical factor to determine the appropriate treatment modality. Conventional IPCL classifications include Inoue’s [4] and Arima’s [5] classifications. These classifications are based on the IPCL patterns that exhibit various morphologies depending on the degree of tissue dysplasia and the tumor invasion depth. Inoue’s classification includes five types depending on the dilation, tortuosity, irregular caliber, and form variation of the IPCLs. Recently, the JES classification has been established which simplifies these two complex classifications. The JES classification classifies the IPCL patterns of SESCCs into three types, namely, B1, B2, and B3. Thus, this method has the advantage of increasing the interobserver concordance rate by simplifying Inoue’s and Arima’s existing classifications. This paper proposes a method for automatically classifying and deciphering patterns based on the JES classification.

2.2. Support Vector Machine Algorithm

The support vector machine (SVM) is a supervised learning algorithm based on the concept of hyperplanes that aims to separate a set of objects with maximum margin. First proposed by Cortes and Vapnik [6], the SVM performs binary classification tasks based on statistical learning theory. Unlike conventional classifiers, which use the empirical risk minimization (ERM) method, the SVM learns by minimizing errors using the structural risk minimization (SRM) method. In this study, an SVM model previously used for image processing was employed [7,8].

Let $X = \{x_1, x_2, \ldots, x_N\}$ be a training set containing $N$ feature vectors in a $d$-dimensional feature space, each associated with one of two class labels $y_i \in \{-1, +1\}$. When the two classes are linearly separable, the linear SVM defines a hyperplane that separates these feature vectors as follows.

A hyperplane can be expressed by the following Equation (1):

$$w \cdot x + b = 0 \qquad (1)$$

where $w$ is the weight vector, $x$ is the input vector, and $b$ is the bias constant. The two parallel hyperplanes can be expressed by Equations (2) and (3):

$$w \cdot x + b = 1 \qquad (2)$$

$$w \cdot x + b = -1 \qquad (3)$$

If the learning data can be separated linearly, the distance between the two hyperplanes is $2 / \lVert w \rVert$.

The algorithm searches for a hyperplane that minimizes $\lVert w \rVert$ while satisfying Equations (4) and (5).

$$w \cdot x_i + b \geq 1 \quad \text{for } y_i = +1 \qquad (4)$$

$$w \cdot x_i + b \leq -1 \quad \text{for } y_i = -1 \qquad (5)$$

Equations (4) and (5) can be combined as Equation (6):

$$y_i (w \cdot x_i + b) \geq 1, \quad i = 1, \ldots, N \qquad (6)$$

To obtain a better separation among the classes, this distance should be maximized by minimizing $\lVert w \rVert$ using the Lagrangian function, with the most suitable optimal hyperplane obtained using Equation (7).

$$f(x) = \sum_{i=1}^{N} \alpha_i y_i K(x_i, x) + b \qquad (7)$$

where $\alpha_i$ is the Lagrange multiplier of the dual optimization problem that describes the separating hyperplane with kernel function $K(x_i, x)$, and $b$ is the threshold parameter of the hyperplane. If $f(x) \geq 0$, then $x$ is classified as a member of the class +1; otherwise, it is classified as a member of the second class (−1). If the data space is linearly separable, the linear kernel function is sufficient to solve this optimization problem. Otherwise, nonlinear kernel functions, such as the radial basis function (RBF) and sigmoid function, can be applied. However, nonlinear kernel functions might require complex tuning of hyperparameters, whose values can significantly affect the performance of the SVM classifier.

Thus, the SVM applied in this study uses the linear kernel function. The SVM algorithm is essentially a binary classifier that assigns objects to a positive or a negative class. The one-against-all (OAA) SVM [9], which can handle multiple classes, was employed to classify the three classes (i.e., B1, B2, and B3) in this study. The OAA SVM labels one class as +1 (positive) and all other classes as −1 (negative). Any microvessel whose morphology, as shown in Figure 1, differs from that of the B1 type is classified as either the B2 or B3 type. Therefore, when the OAA SVM learning method is applied, the B1 type is treated as positive, while the B2 and B3 types, which contain mixed types of microvessels, are treated as negative.
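To make the decision rule of Equation (7) concrete, the following minimal Python sketch evaluates it with a linear kernel. It is an illustration only, not the implementation used in this study; the function names and the two toy support vectors (with multipliers chosen so that the margin constraints hold with equality) are assumptions introduced for the example.

```python
from typing import Sequence


def linear_kernel(u: Sequence[float], v: Sequence[float]) -> float:
    """Linear kernel K(u, v) = u . v."""
    return sum(a * b for a, b in zip(u, v))


def decision_value(x, support_vectors, labels, alphas, bias):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b, as in Equation (7)."""
    return sum(
        alpha * y * linear_kernel(sv, x)
        for alpha, y, sv in zip(alphas, labels, support_vectors)
    ) + bias


def classify(x, support_vectors, labels, alphas, bias):
    """Return +1 (here: B1) if f(x) >= 0, otherwise -1 (non-B1)."""
    return 1 if decision_value(x, support_vectors, labels, alphas, bias) >= 0 else -1


# Hypothetical toy model: one support vector per class.
SUPPORT_VECTORS = [(1.0, 1.0), (-1.0, -1.0)]
LABELS = [1, -1]
ALPHAS = [0.25, 0.25]
BIAS = 0.0

print(classify((2.0, 0.0), SUPPORT_VECTORS, LABELS, ALPHAS, BIAS))
print(classify((-3.0, 1.0), SUPPORT_VECTORS, LABELS, ALPHAS, BIAS))
```

In the one-against-all setting described above, the positive output would correspond to the B1 type and the negative output to the pooled B2 and B3 types.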

2.3. Microvessel Thickness Measurement Algorithm Using the Least Squares Method

The least squares method is a simple, intuitive method for measuring the thickness of the microvessels extracted from magnifying endoscopy images of the stomach, as previously reported in Reference [10]. The least squares method expresses the data as a linear equation: it determines the line that minimizes the sum of the squared differences between the data and the values given by the linear equation. The conventional microvessel thickness measurement method extracts a candidate microvessel area from the magnifying endoscopy images of the stomach by binarizing the images with threshold values obtained using a fuzzy membership function. The microvessel thickness is then measured using the least squares method. Thickness measurement using the least squares method is simple and computationally fast. However, the microvessel thickness cannot be measured accurately because the centerline of the microvessel is represented by only one straight line.
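The line fit underlying this conventional approach can be sketched as follows. This is a generic ordinary least squares fit of y = slope * x + intercept written for illustration; the function name and the sample points are assumptions and are not taken from Reference [10].

```python
def fit_line(points):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    if sxx == 0:
        raise ValueError("all x values are identical; the line is vertical")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical centerline pixels lying exactly on y = 2x + 1.
slope, intercept = fit_line([(0, 1), (1, 3), (2, 5), (3, 7)])
print(slope, intercept)
```

Because a single line is fitted to the whole vessel, a curved or looped microvessel is poorly represented, which is the limitation noted above.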

2.4. Extraction of Retinal Vessels and Thickness Measurement in Fundus Images

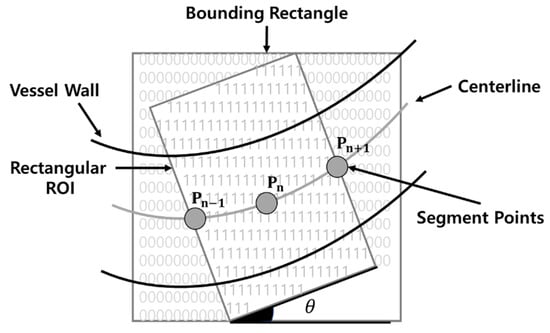

James Lowell [11] proposed a method for measuring the caliber of a retinal vessel from fundus images. After obtaining the centerline of the blood vessel to be measured, the branch points of the vessel that interfere with the vessel caliber measurement are removed.

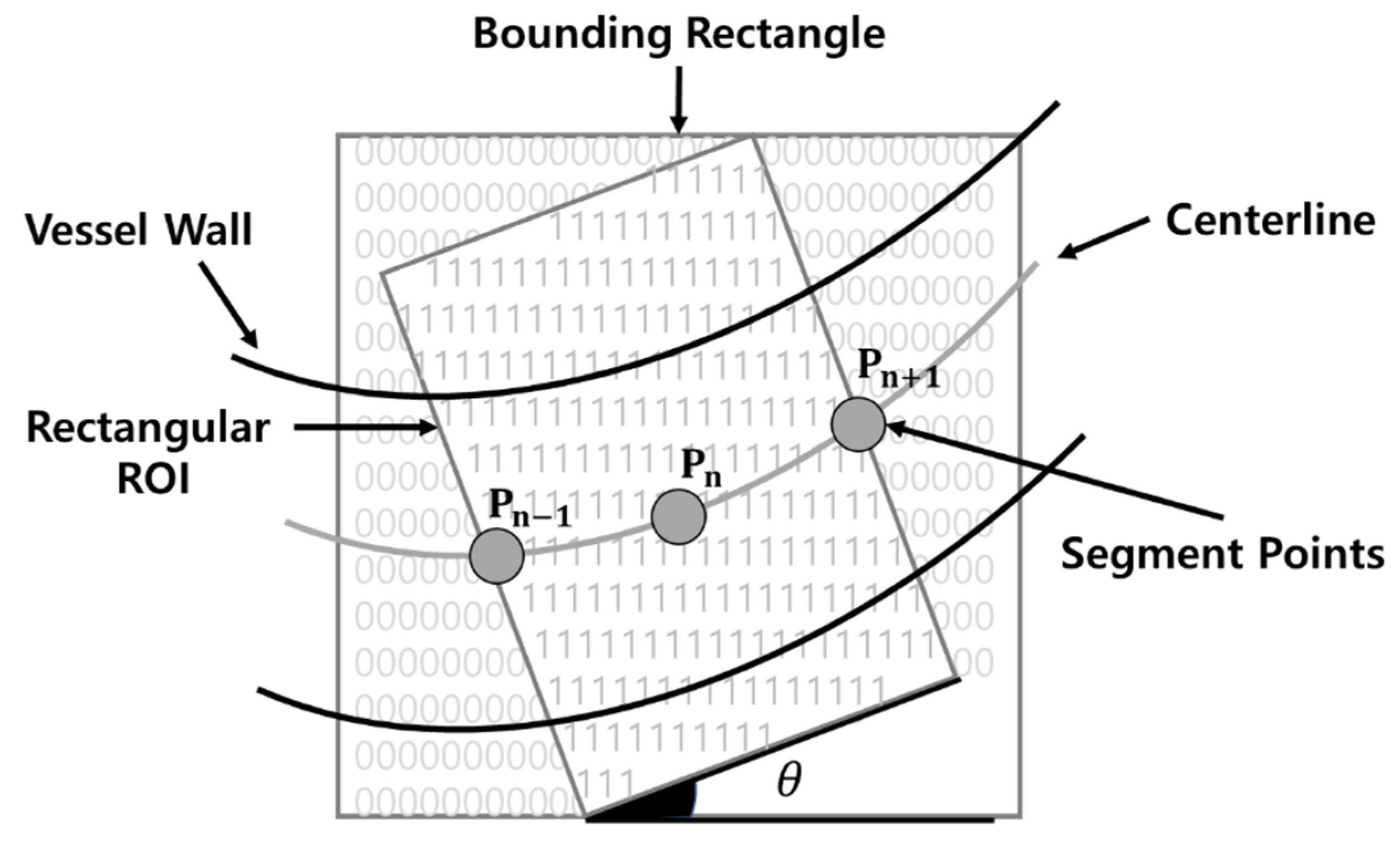

Furthermore, after setting the estimated angle of the vessel in the region of interest (ROI) rectangle, as shown in Figure 2, the caliber measurement point is determined. Subsequently, the vessel caliber is measured after the angle of the measurement point is determined. The caliber is measured repeatedly while the ROI rectangle is moved along the centerline of the vessel. The method proposed by James Lowell was designed to determine the caliber of retinal vessels, whose morphological features are considerably different from those of the IPCLs in SESCCs. Another disadvantage of this method is that it requires a significant amount of time, as the angle must be determined at each position while the ROI rectangle is moved along the centerline of the vessel.

Figure 2.

Region of interest (ROI) rectangle proposed by James Lowell.

3. Materials and Methods

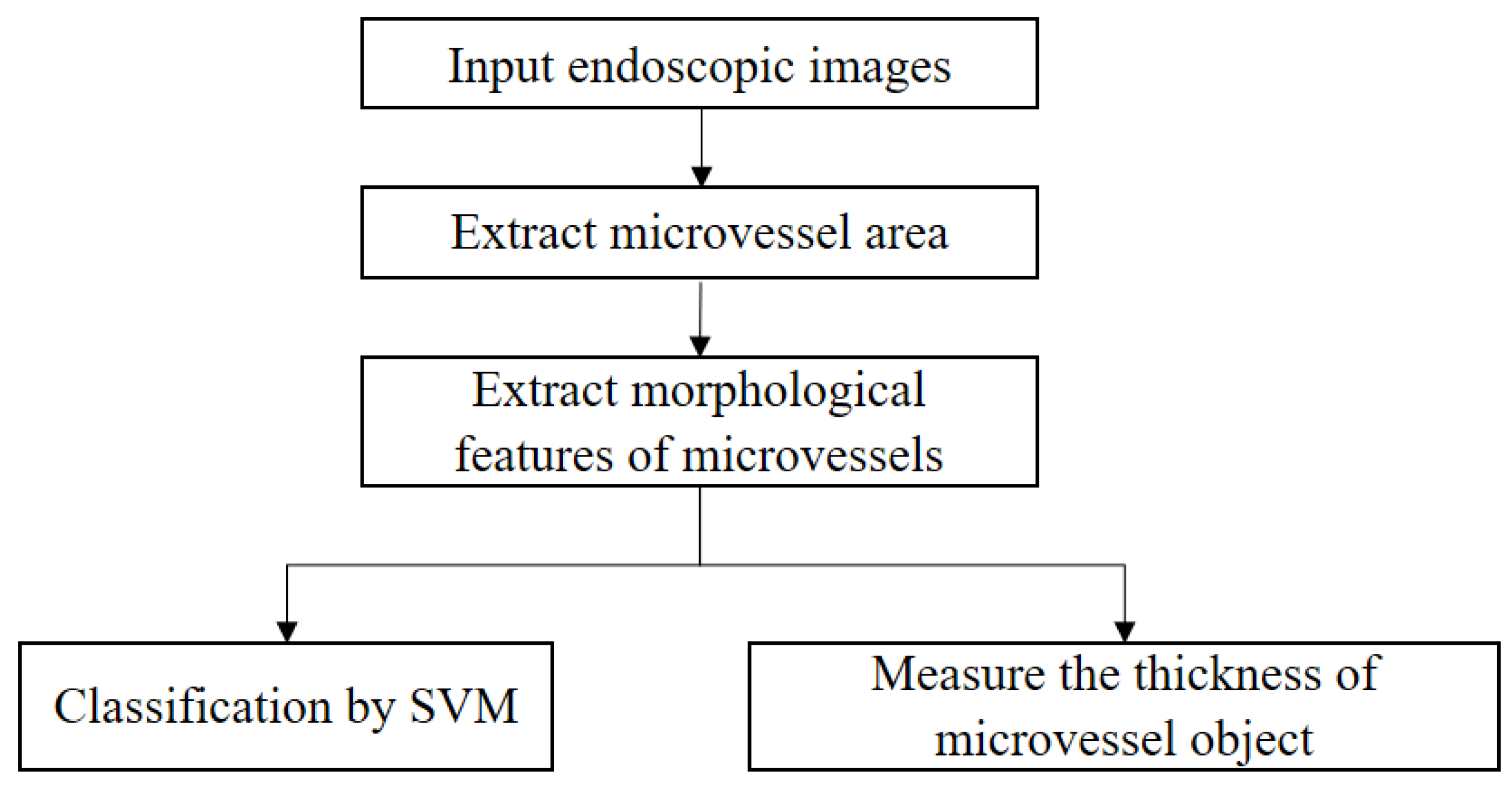

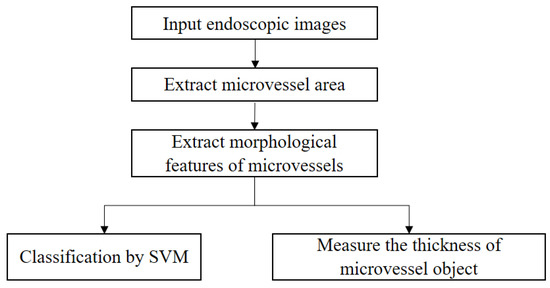

The proposed IPCL classification method for SESCCs can be summarized as shown in Figure 3.

Figure 3.

Proposed IPCL feature extraction and measurement process for analysis of magnifying endoscopy images of superficial esophageal squamous cell carcinoma.

On magnifying endoscopy images of the esophagus, it is sometimes impossible to distinguish the microvessels from the background with a single threshold value due to the reflections and shadows that occur in the images. Thus, the ROI is extracted by removing unnecessary information, such as the image capture conditions, from the endoscopy images. The fuzzy stretching technique is applied to the extracted ROI to emphasize the contrast between the microvessel (low brightness value) and background area (high brightness value). Niblack’s binarization technique is applied to extract a candidate microvessel area from the ROI with stretched contrast. Subsequently, noise regions are removed from the extracted candidate microvessel area. A fast Fourier high-frequency filter is applied to the ROI with stretched contrast to extract the microvessel boundaries and remove them from the candidate microvessel area. In addition, the ART2-based quantization technique is applied to the ROI with stretched contrast to extract the background area. The final microvessel area is then extracted by removing the background from the candidate microvessel area. Efficient and accurate noise removal is essential as the proposed method relies on pixel clustering to separate the target organ area. Fuzzy stretching and ART2-based quantization procedures are applied to separate the microvessel area accurately as explained in Section 3.1 and Section 3.2.

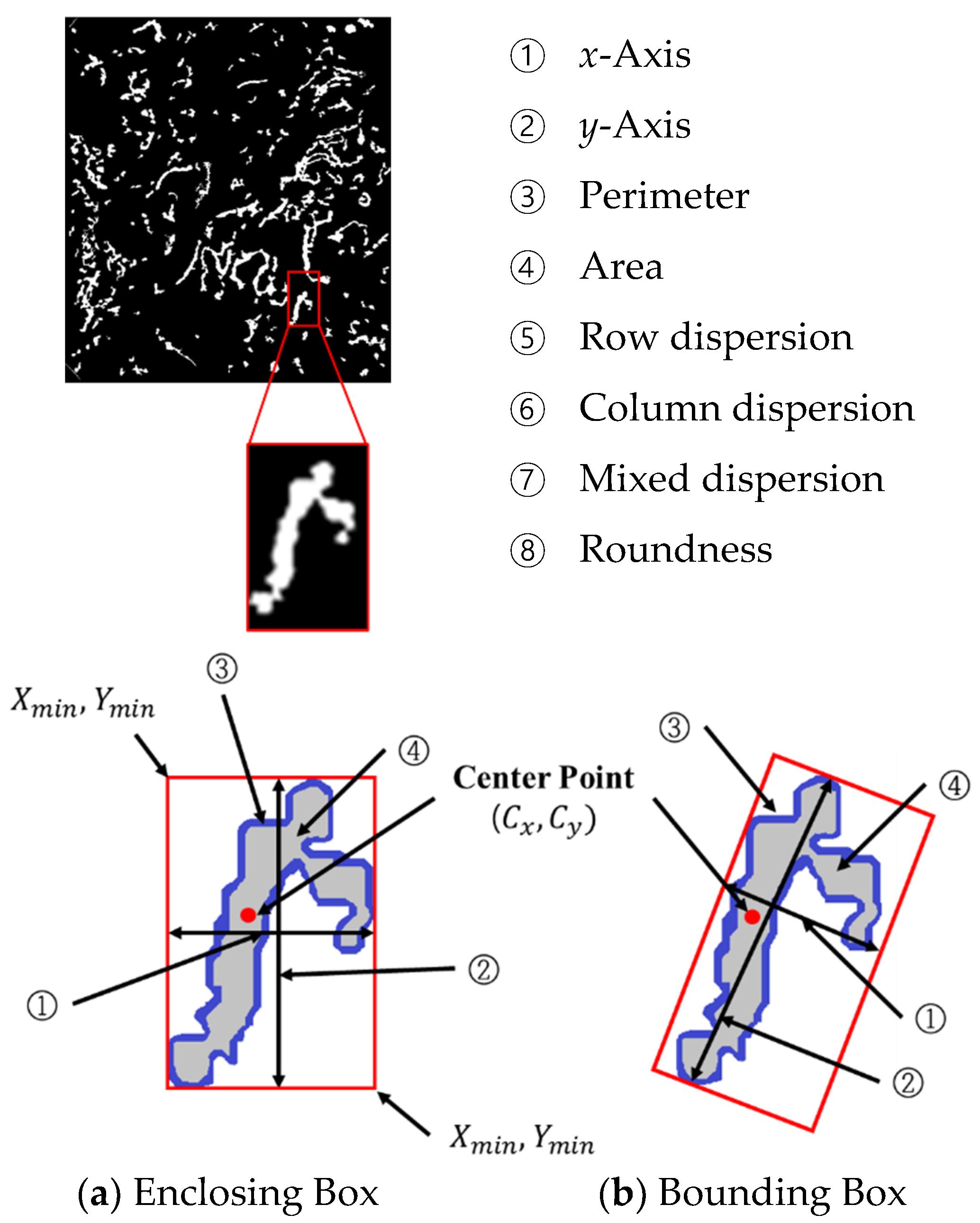

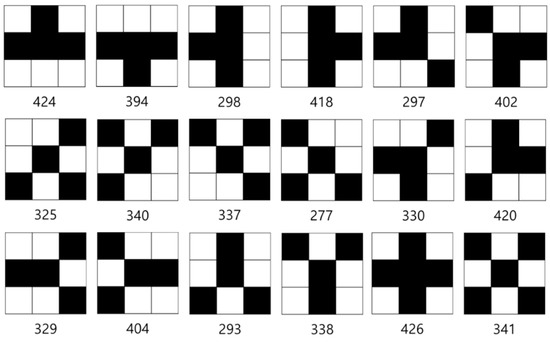

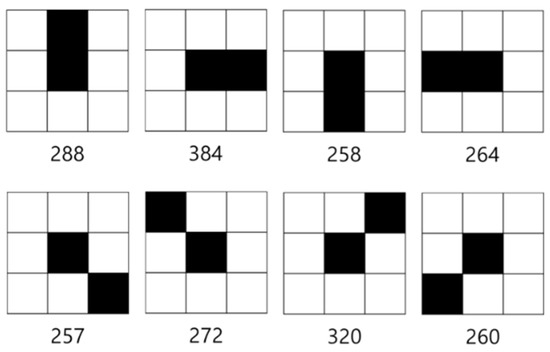

Eight morphological features derived from the extracted microvessel area (x-axis, y-axis, perimeter, area, row dispersion, column dispersion, mixed dispersion, and roundness) are input to the SVM algorithm as learning data to classify the data into the B1 and non-B1 types (i.e., B2 and B3 types), as explained in Section 3.4. In addition, because the thickness of the B3-type microvessels is more than three times that of the B2-type microvessels, which is the criterion suggested by the JES, the data previously classified as the non-B1 type are further classified into the B2 and B3 types. To use this morphological characteristic, five microvessels of the B2 type are selected based on the ratio of their area to perimeter, and the thickness measurement method is applied, as explained in Section 3.5. After measuring the microvessel thickness, the largest thickness value among the 10 representative microvessels is compared with the average thickness of the selected B2-type microvessels. If this ratio is ≥ 3.7, the microvessel is classified as the B3 type.
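The final B2/B3 decision described above reduces to a ratio test, which can be sketched as follows. The function name and the sample thickness values are hypothetical; only the threshold of 3.7 and the use of the largest representative thickness against the average B2 reference thickness come from the text.

```python
def is_b3(representative_thicknesses, b2_reference_thicknesses, ratio_threshold=3.7):
    """Return True if the thickest representative vessel is at least
    ratio_threshold times the mean thickness of the B2 reference vessels."""
    largest = max(representative_thicknesses)
    reference = sum(b2_reference_thicknesses) / len(b2_reference_thicknesses)
    return largest / reference >= ratio_threshold


# Hypothetical thickness values in pixels.
print(is_b3([4, 20, 6], [5, 5, 5, 5, 5]))
print(is_b3([4, 10, 6], [5, 5, 5, 5, 5]))
```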

3.1. Contrast Enhancement of the ROI Using the Fuzzy Stretching Technique

Endoscopy images exhibit irregular distributions of brightness values for the microvessels and background due to the reflections and shadows that occur during the examination, and IPCLs sometimes cannot be extracted because of the low contrast between the objects and the background. Therefore, in this study, the contrast of the endoscopy images is enhanced using a stretching technique. Among the stretching techniques, fuzzy stretching determines the brightness adjustment rate by calculating the distance between the lowest and highest pixel values based on the average brightness value of the images. Using these brightness adjustment rates, the upper and lower limits of the triangular membership function are calculated to set the membership function of the fuzzy set, which is then applied to the image for contrast stretching [12].

Equation (8) takes the RGB values of the pixels together with the horizontal and vertical lengths of the image. The middle brightness value of each channel can be determined by applying Equation (8).

The distance values of the brightest pixels and those of the darkest pixels in each channel can be calculated from Equation (9) using the middle brightness values determined from Equation (8).

The distance values determined from Equation (9) for the R, G, and B channels are then applied to Equation (10) to determine the brightness adjustment rate.

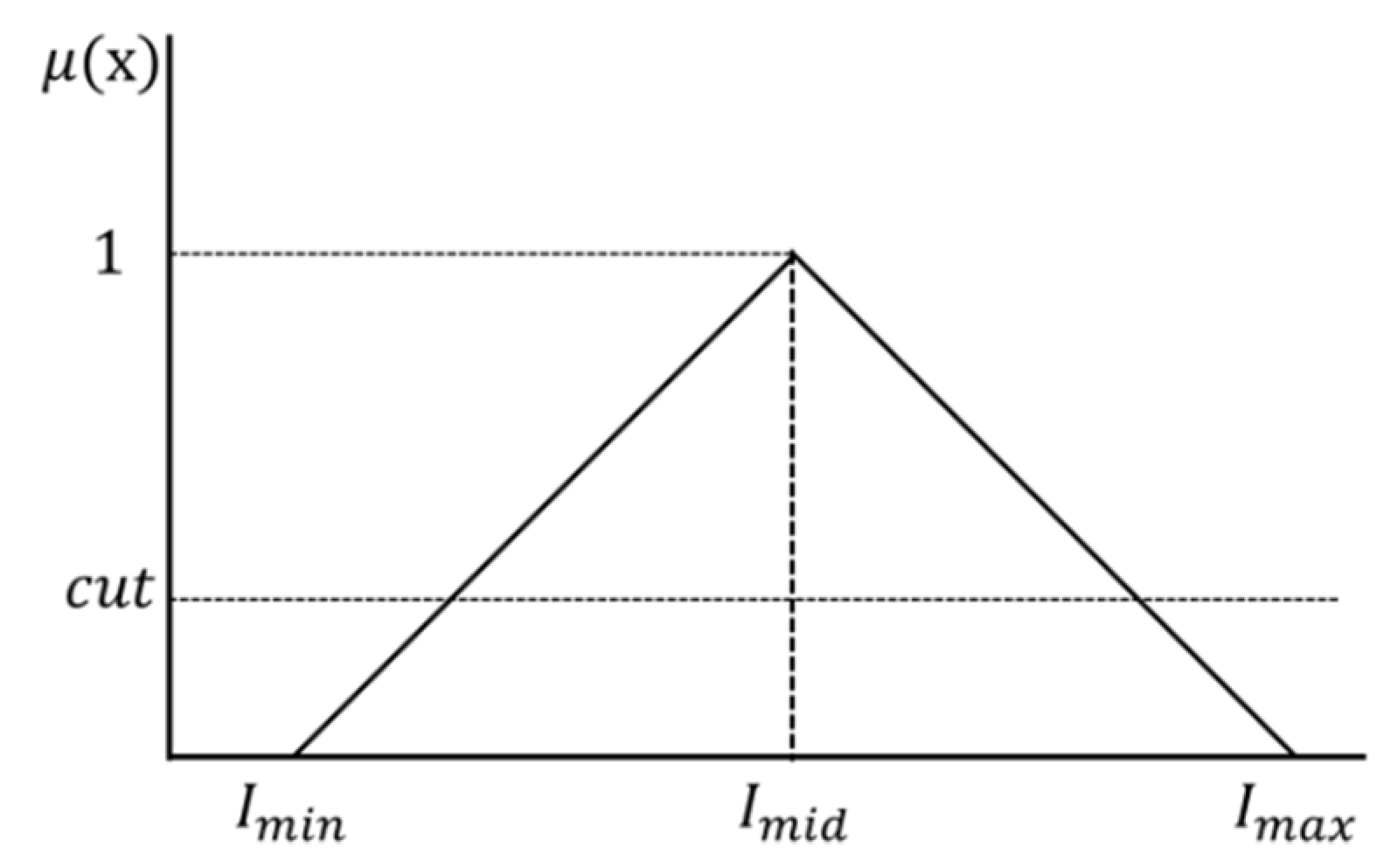

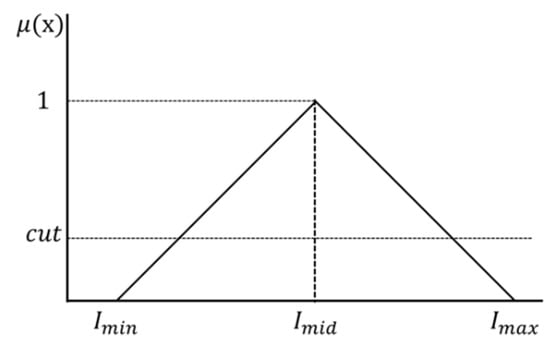

The middle brightness values and the brightness adjustment rate are applied to Equation (11) to determine the maximum and minimum brightness values of each channel. These maximum and minimum brightness values are then applied to the triangular membership function shown in Figure 4.

Figure 4.

Triangular membership function.

The middle brightness value at which the membership degree becomes 1 is determined by applying the maximum and minimum brightness values of the membership function shown in Figure 4 to Equation (12).
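A triangular membership function of the kind shown in Figure 4 can be sketched as follows. This is a generic formulation given for illustration, assuming that the membership degree is 0 at and beyond the minimum and maximum brightness values and rises linearly to 1 at the middle value; the parameter names `lower`, `peak`, and `upper` are assumptions and do not reproduce the notation of Equations (11) to (13).

```python
def triangular_membership(value, lower, peak, upper):
    """Membership degree of a brightness value in a triangular fuzzy set.

    Assumes lower < peak < upper. The degree is 0 outside (lower, upper),
    1 at the peak, and changes linearly in between.
    """
    if not lower < peak < upper:
        raise ValueError("expected lower < peak < upper")
    if value <= lower or value >= upper:
        return 0.0
    if value <= peak:
        return (value - lower) / (peak - lower)
    return (upper - value) / (upper - peak)


# Hypothetical limits for one colour channel.
for brightness in (0, 50, 100, 150, 200):
    print(brightness, triangular_membership(brightness, 0, 100, 200))
```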

In addition, the values to be applied to the membership function of each channel are determined using Equation (13), and the membership degree is determined using the membership function of each channel (RGB).

The upper and lower limits are determined by applying the membership degree obtained from Equation (13) to Equation (14). The image is then stretched by applying the upper and lower limits of each channel to Equation (15), which maps the previous brightness values to the new brightness values obtained after stretching.

3.2. Vein Area Extraction and Background Area Removal

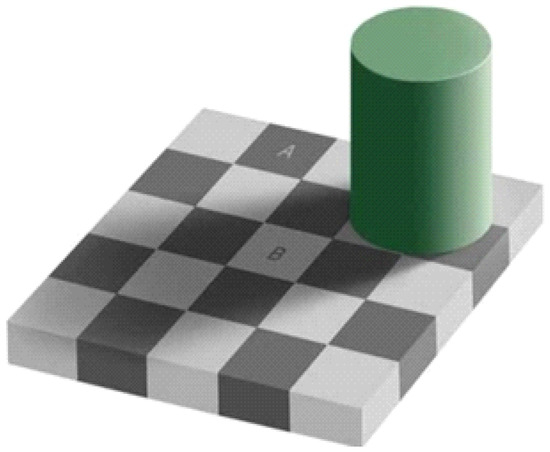

3.2.1. Extraction of the Microvessel Candidate Region Using Niblack’s Binarization

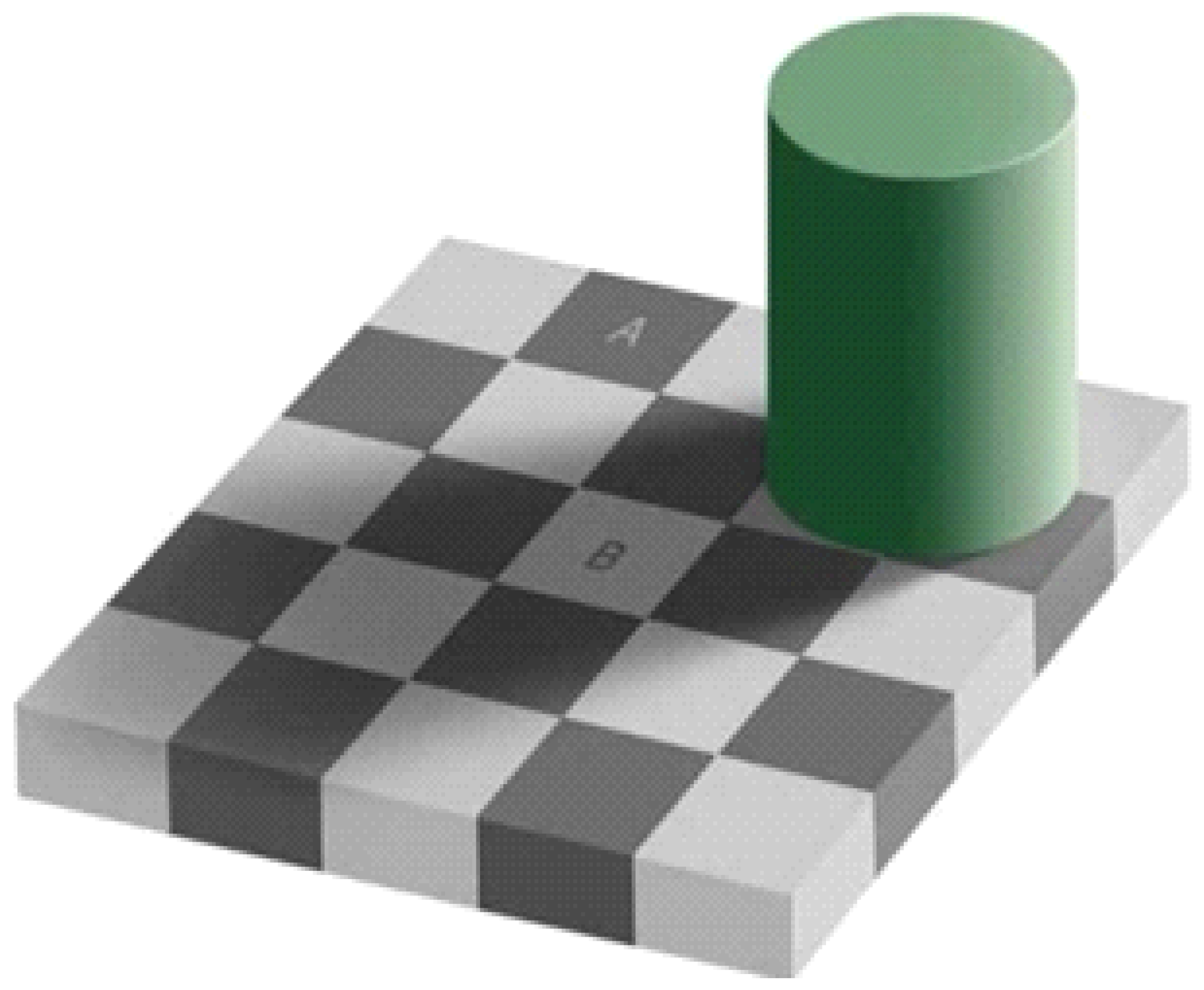

Microvessels might not be extracted from the endoscopy images owing to the small brightness contrast between the microvessels and background, which can be attributed to the reflections and shadows in the captured images. However, unlike computers, human eyes can easily distinguish the microvessels from the background in the endoscopy images. This ability is attributed to the color constancy phenomenon, which is illustrated in Figure 5. In this figure, A and B are of the same color but appear different due to the influence of the background. Owing to color constancy, humans can perceive color differences relatively consistently even when the illumination and observation conditions change [13].

Figure 5.

Optical illusion attributable to color constancy.

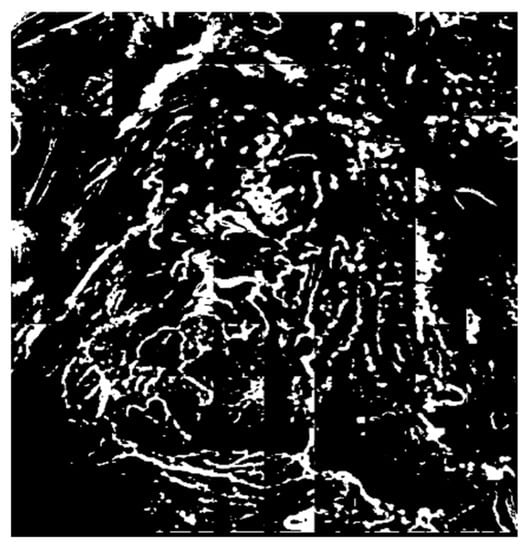

In the endoscopy images, microvessels have lower chroma values than the background. Thus, using the principle of color constancy, a local area that has a lower brightness value than the local average is defined as a candidate microvessel area. Niblack’s binarization algorithm, which is a traditional binarization technique, is used to extract the candidate vessel area [14]. Niblack’s binarization sets the threshold for each pixel using the average and standard deviation of the values in a predefined window. This technique is widely used for binarizing document images. In this study, the window size was set by dividing the endoscopy image into sections. Figure 6 shows the result of applying Niblack’s binarization method to an ROI subjected to fuzzy stretching.
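The local thresholding step can be sketched as follows. This is a minimal, unoptimized illustration of Niblack's rule, in which the threshold is the local mean plus k times the local standard deviation; the window size of 3, the weight k = −0.2, and the toy image are assumptions chosen for the example and are not the settings used in this study.

```python
import math


def niblack_binarize(image, window=3, k=-0.2):
    """Mark pixels darker than the local Niblack threshold as 1 (candidate vessel).

    image: 2D list of grey levels. The threshold at each pixel is
    mean + k * std computed over a window x window neighbourhood
    (clipped at the image border).
    """
    height, width = len(image), len(image[0])
    half = window // 2
    result = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            values = [
                image[rr][cc]
                for rr in range(max(0, r - half), min(height, r + half + 1))
                for cc in range(max(0, c - half), min(width, c + half + 1))
            ]
            mean = sum(values) / len(values)
            std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
            threshold = mean + k * std
            result[r][c] = 1 if image[r][c] < threshold else 0
    return result


# Hypothetical 5 x 5 patch: bright background with a dark vertical vessel.
patch = [[200, 200, 50, 200, 200] for _ in range(5)]
for row in niblack_binarize(patch):
    print(row)
```

Because the threshold follows the local mean, a dark vessel is detected even when the overall illumination varies across the image, which is the motivation given above.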

Figure 6.

Extraction of the candidate microvessel areas after binarization.

3.2.2. Noise Elimination in the Vascular Boundary Region Using a Fast Fourier High-Frequency Filter

The candidate microvessel areas extracted via binarization include both noise and microvessel areas. Therefore, background areas not containing the microvessels should be removed to extract the microvessel areas accurately. First, a fast Fourier transform (FFT) is applied to separate the microvessel and background areas in the ROI with enhanced contrast. The FFT is the most general method of transforming an image into frequency space for subsequent filtering. A continuous Fourier transform cannot be applied because the images contain digital data. Thus, the digital signals are transformed into the frequency domain using the discrete Fourier transform [15]. The Fourier transform for two-dimensional images is given by Equation (16):

$$F(u, v) = \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} f(x, y)\, e^{-j 2\pi \left( \frac{ux}{M} + \frac{vy}{N} \right)} \qquad (16)$$

where $u = 0, 1, \ldots, M-1$, $v = 0, 1, \ldots, N-1$, $f(x, y)$ is the original image, and $M$ and $N$ are the width and length of the image, respectively. $F(u, v)$, which is the transformed function in frequency space, is a complex-valued function. $F(u, v)$ can be transformed back into the original image using the inverse Fourier transform as shown in Equation (17).

$$f(x, y) = \frac{1}{MN} \sum_{u=0}^{M-1} \sum_{v=0}^{N-1} F(u, v)\, e^{j 2\pi \left( \frac{ux}{M} + \frac{vy}{N} \right)} \qquad (17)$$

$M^2 N^2$ multiplication operations are required when the discrete Fourier transform is applied directly to an image with width $M$ and length $N$, resulting in a computational complexity of $O(M^2 N^2)$, which requires significant computation time. Therefore, the FFT, which has low computational complexity, was applied in this study. The fast discrete Fourier transform algorithm is faster than the discrete Fourier transform and, in its radix-2 form, is applicable only when the number of input data is a power of 2. As the computational complexity for $N$ input data is $O(N \log_2 N)$, the FFT becomes more effective as the number of data increases.

Equation (18) defines the complex coefficient $W_N$ of the transform, where the number of input data $N$ is a power of 2, and Equation (19) gives the corresponding one-dimensional transform.

$$W_N = e^{-j 2\pi / N} \qquad (18)$$

$$F(k) = \sum_{n=0}^{N-1} f(n)\, W_N^{kn}, \quad k = 0, 1, \ldots, N-1 \qquad (19)$$

The fast discrete Fourier transform calculates the even and odd data separately. Thus, Equation (19) can be separately defined for the even and odd data as shown in Equation (20).

$$F(k) = \sum_{n=0}^{N/2-1} f(2n)\, W_N^{2nk} + \sum_{n=0}^{N/2-1} f(2n+1)\, W_N^{(2n+1)k} \qquad (20)$$

In Equation (20), the first and second sums represent the calculations for the even and odd data, respectively. Denoting the $N/2$-point transforms of the even and odd data by $F_{even}(k)$ and $F_{odd}(k)$, $F(k)$ can be expressed as shown in Equation (21).

$$F(k) = F_{even}(k) + W_N^{k}\, F_{odd}(k) \qquad (21)$$

The complex coefficient $W_N$ has periodicity and symmetry as shown in Equation (22).

$$W_N^{k+N} = W_N^{k}, \qquad W_N^{k+N/2} = -W_N^{k} \qquad (22)$$

If $k + N/2$ is substituted in Equation (21) instead of $k$, then $F_{even}(k + N/2) = F_{even}(k)$ and $F_{odd}(k + N/2) = F_{odd}(k)$ are satisfied. Therefore, $F(k + N/2)$ can be expressed as shown in Equation (23).

$$F(k + N/2) = F_{even}(k) - W_N^{k}\, F_{odd}(k) \qquad (23)$$
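The even/odd decomposition described above corresponds to the standard recursive radix-2 FFT, which can be sketched for one-dimensional data as follows. This is a textbook illustration rather than the code used in this study; the function name is an assumption, and a two-dimensional transform would apply the same routine along the rows and then along the columns.

```python
import cmath


def fft(samples):
    """Recursive radix-2 FFT of a sequence whose length is a power of 2."""
    n = len(samples)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of 2")
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])  # transform of the even-indexed data
    odd = fft(samples[1::2])   # transform of the odd-indexed data
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle           # first half of the spectrum
        out[k + n // 2] = even[k] - twiddle  # second half, by symmetry
    return out


print(fft([1, 0, 0, 0]))
print(fft([1, 1, 1, 1]))
```

Each level of the recursion halves the problem size and reuses the two half-length results for both halves of the output, which is why the cost grows as N log N rather than N squared.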

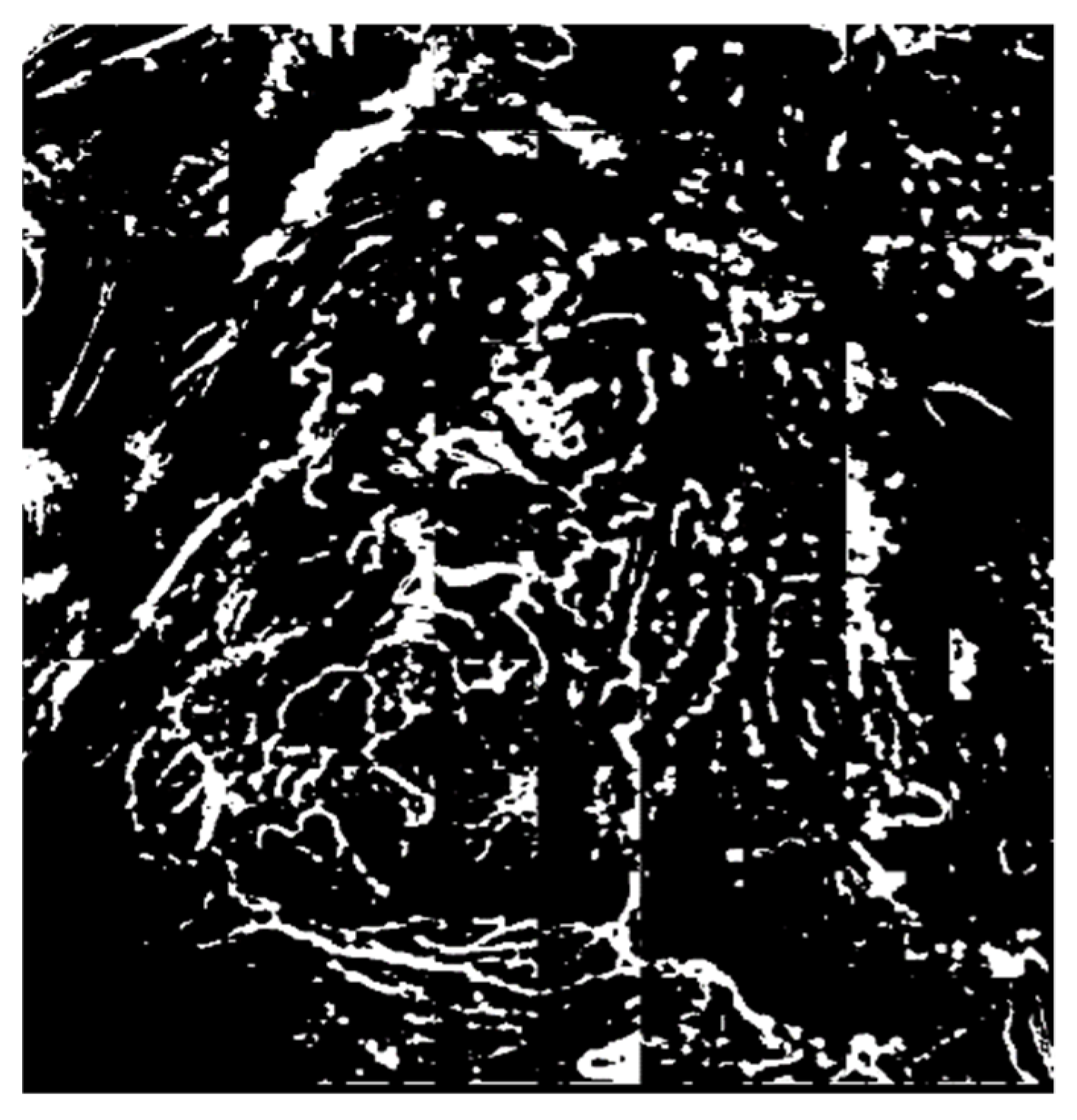

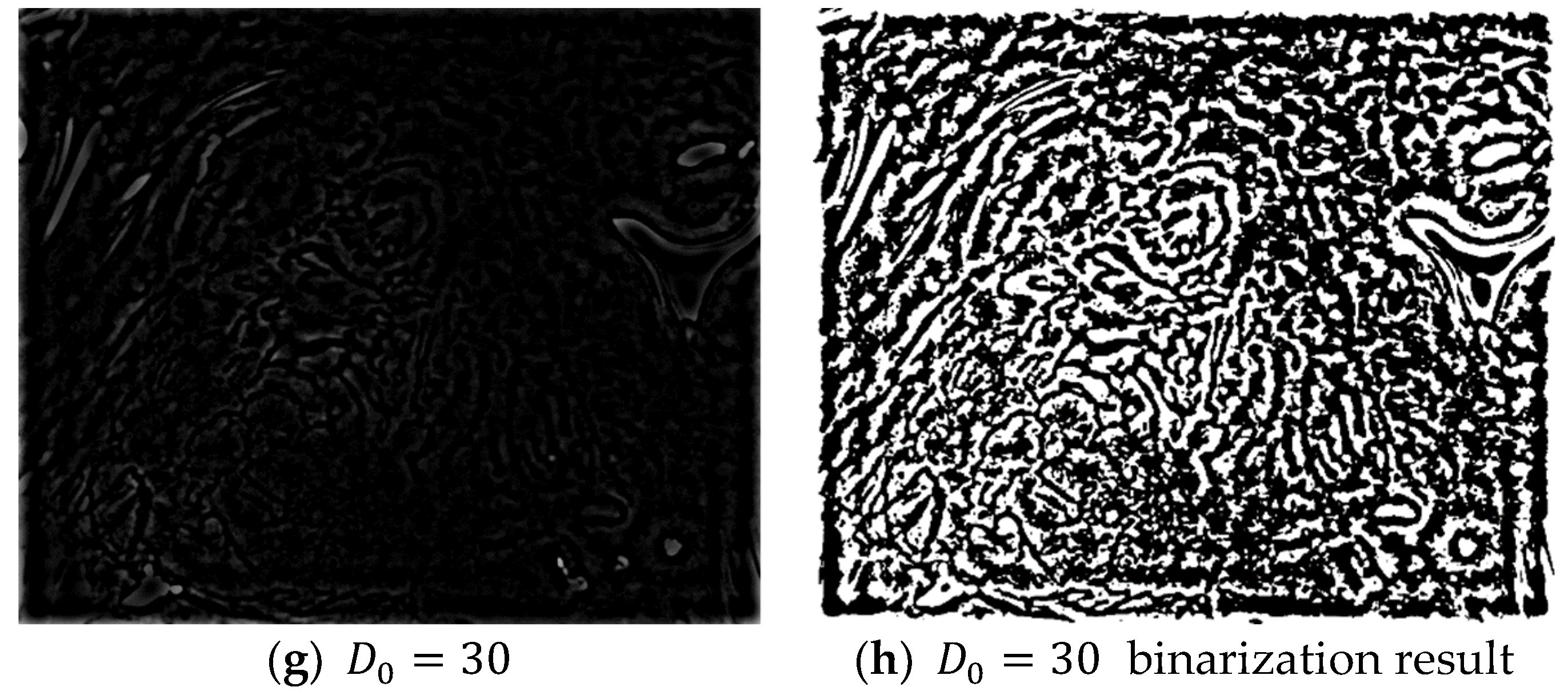

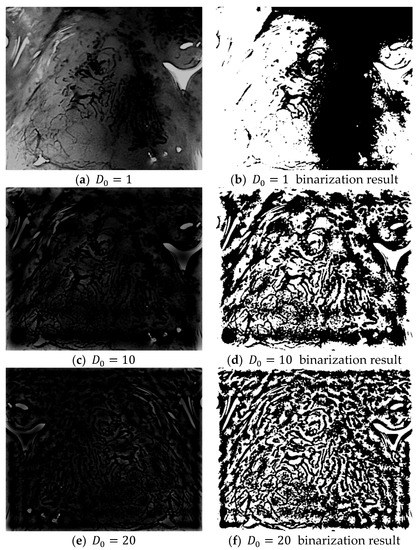

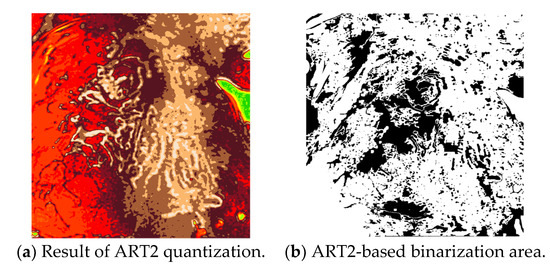

In Equation (23), if K Fourier transforms are applied to the even and odd data, the results from the K Fourier transforms can be easily obtained without a complex computational process. Four layers are created when a Gaussian high-pass filter with cut-off frequencies $D_0$ = {1, 10, 20, 30} is applied to the frequency domain image obtained after an FFT. The high-pass filter passes only the high-frequency region and blocks the low-frequency region. The resulting frequency domain image can then be obtained by subtracting the low-frequency (Gaussian low-pass) response from 1 as shown in Equation (24), where $D(u, v)$ is the distance from the origin of the frequency plane:

$$H(u, v) = 1 - e^{-D^2(u, v) / (2 D_0^2)} \qquad (24)$$

The application of a high-pass filter tends to sharpen the resulting image. A high-pass filter can effectively distinguish the boundary between the microvessels and background, regardless of the morphologies of the microvessels. Figure 7 shows the extraction results for the boundary area in each image and the microvessel to which the high-pass filter was applied. Based on the characteristic feature of the background around the microvessels having a high brightness in the images processed with a high-pass filter, the microvessel boundary area can be extracted by setting the threshold as the average pixel value in the high-pass filtered image.
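A Gaussian high-pass mask of the kind described above can be sketched as follows, using the standard form 1 − exp(−D²/(2·D0²)). The sketch assumes that the spectrum has been shifted so that the zero-frequency component lies at the center of the array; the function name, the array size, and this centering convention are assumptions made for illustration.

```python
import math


def gaussian_highpass(rows, cols, cutoff):
    """Gaussian high-pass mask for a centred frequency-domain image.

    Each entry is 1 - exp(-D^2 / (2 * cutoff^2)), where D is the distance
    from the centre (zero frequency) of the mask.
    """
    centre_r, centre_c = rows // 2, cols // 2
    mask = []
    for u in range(rows):
        row = []
        for v in range(cols):
            dist_sq = (u - centre_r) ** 2 + (v - centre_c) ** 2
            row.append(1.0 - math.exp(-dist_sq / (2.0 * cutoff ** 2)))
        mask.append(row)
    return mask


# The four cut-off frequencies mentioned in the text.
for cutoff in (1, 10, 20, 30):
    mask = gaussian_highpass(8, 8, cutoff)
    print(cutoff, round(mask[0][0], 4), mask[4][4])
```

Multiplying the centered spectrum element-wise by this mask and applying the inverse transform yields the high-pass filtered image; a smaller cut-off frequency lets more of the spectrum through, while a larger one retains only the sharpest edges.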

Figure 7.

Binarization results for various high-pass filter cut-off frequencies.

The binarization results for the cut-off frequencies shown in Figure 7b,d,f,h are combined to first extract the microvessel boundary areas and then remove the boundary areas of the extracted microvessels as shown in Figure 8.

Figure 8.

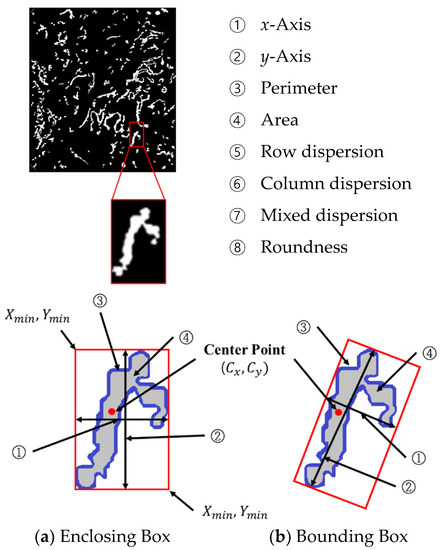

Microvessel boundary areas extracted using the fast Fourier transform (FFT).

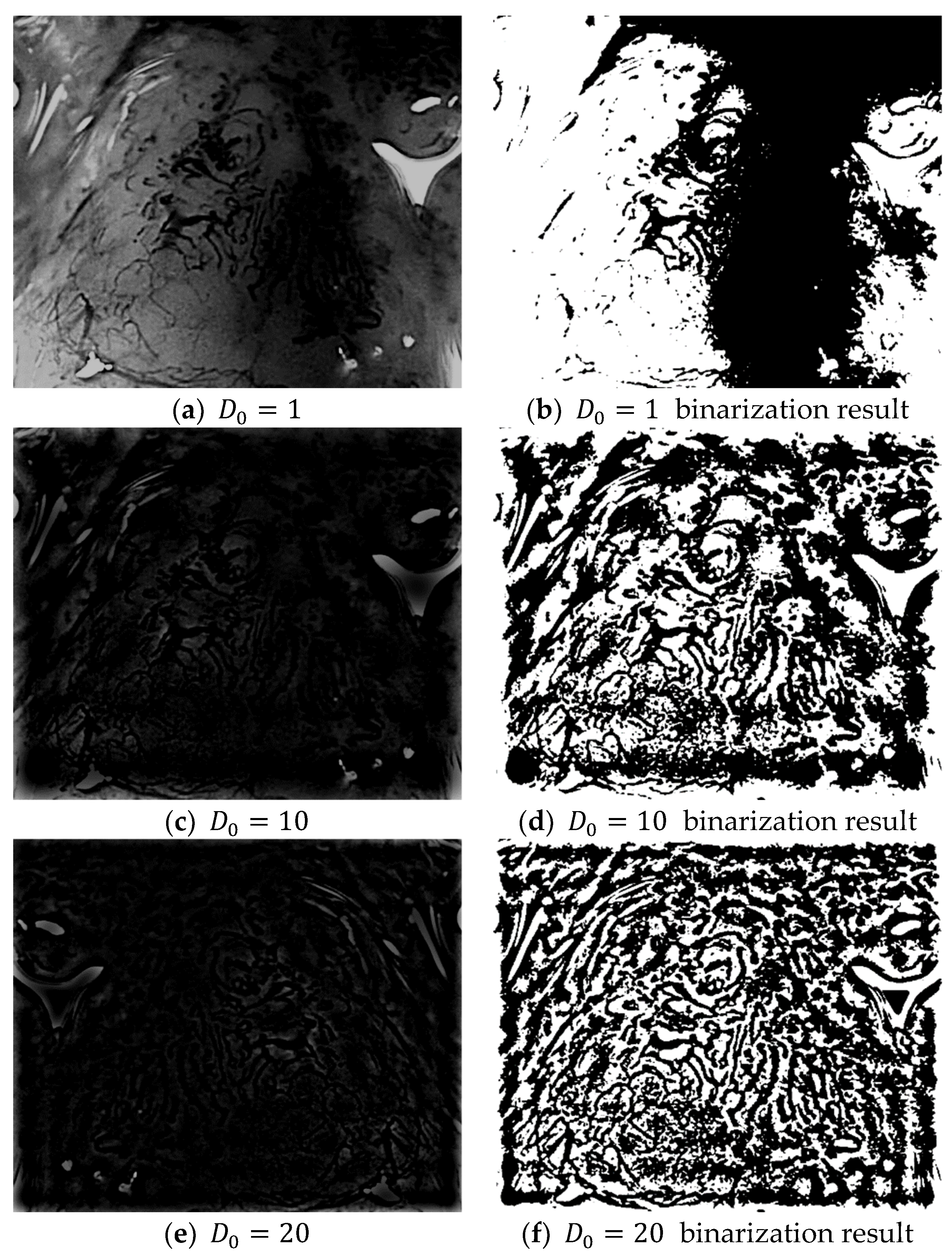

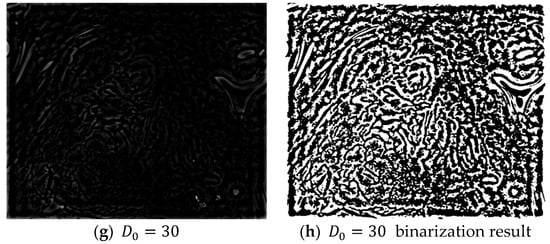

3.2.3. Removal of the Background Area Using the ART2 Algorithm

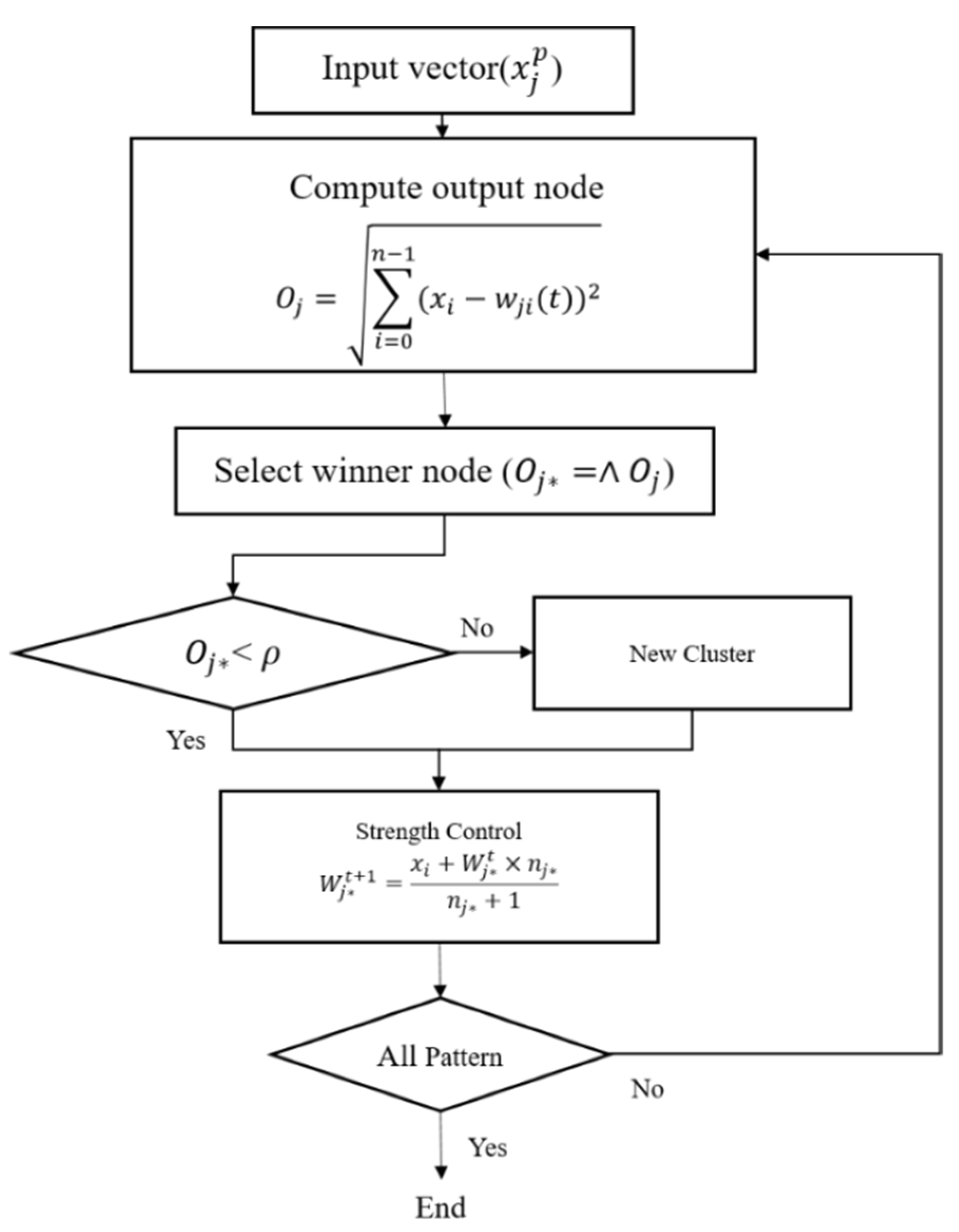

Although the FFT can effectively remove noise at the microvessel boundaries and background areas via a high-pass filter, the entire background area cannot be removed. Therefore, the ART2-based quantization technique was employed to extract the candidate background areas [16]. The ART2 is an unsupervised learning algorithm that builds clusters of similar features by autonomously learning the input patterns. The ART2 algorithm has stability because it memorizes the already learned patterns and has adaptability, as it can learn new patterns. Furthermore, the high speed of the algorithm permits real-time learning. Figure 9 shows the flowchart of the ART2 algorithm.

Figure 9.

Flowchart of the adaptive resonance theory 2 (ART2) algorithm.

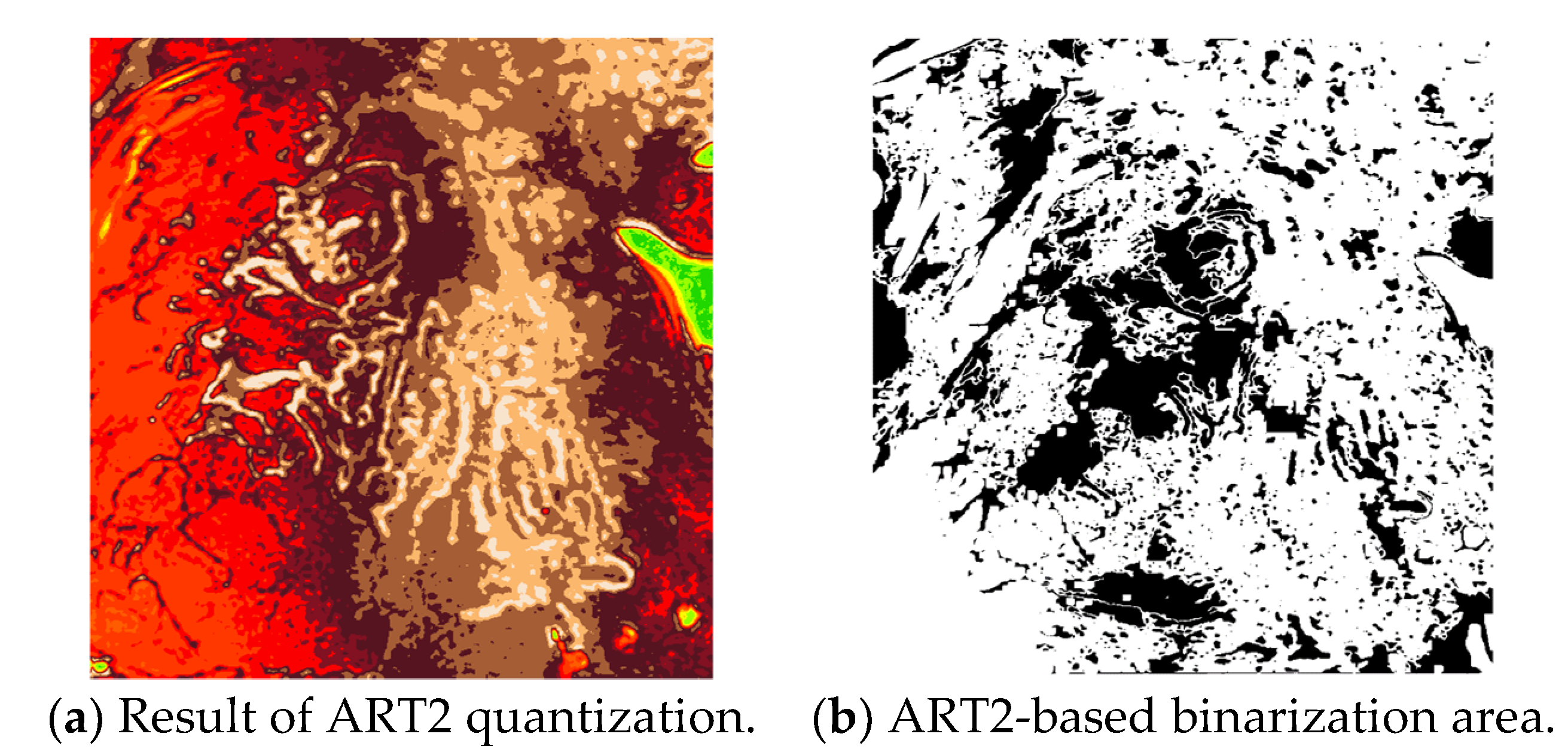

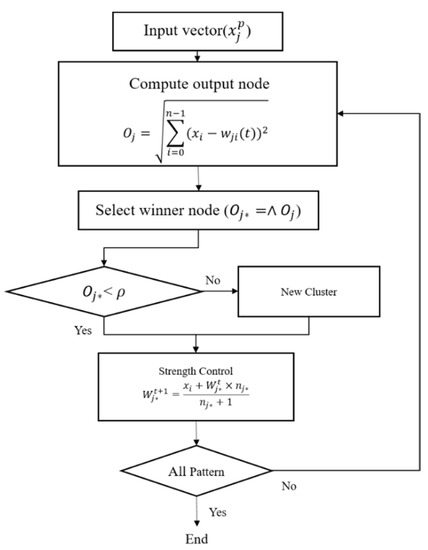

In this study, the vigilance parameter of the ART2 algorithm was set to 0.1. Among the clusters, the pixels that belong to a cluster with high brightness are set as the background area, and the image is binarized to distinguish the background and other areas. Figure 10 shows the quantization and binarization results obtained using the ART2 algorithm.
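The clustering idea can be sketched with a deliberately simplified, ART2-style procedure: each brightness value joins the nearest existing cluster if it passes a vigilance (distance) test, in which case the cluster center is updated as a running mean; otherwise, a new cluster is created. This is not the full ART2 network and not the code used in this study; the function names, the normalization of brightness to [0, 1], and the sample values are assumptions.

```python
def art2_style_clusters(values, vigilance=0.1):
    """Simplified ART2-style clustering of scalar brightness values in [0, 1]."""
    centers, counts, labels = [], [], []
    for value in values:
        if centers:
            nearest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            if abs(value - centers[nearest]) <= vigilance:
                # Vigilance test passed: update the winning cluster centre.
                counts[nearest] += 1
                centers[nearest] += (value - centers[nearest]) / counts[nearest]
                labels.append(nearest)
                continue
        # No cluster is close enough: create a new one.
        centers.append(value)
        counts.append(1)
        labels.append(len(centers) - 1)
    return centers, labels


def background_mask(values, vigilance=0.1):
    """Mark values assigned to the brightest cluster as background (1)."""
    centers, labels = art2_style_clusters(values, vigilance)
    brightest = max(range(len(centers)), key=lambda i: centers[i])
    return [1 if label == brightest else 0 for label in labels]


pixels = [0.10, 0.12, 0.90, 0.88, 0.11]  # hypothetical normalised brightness
print(art2_style_clusters(pixels))
print(background_mask(pixels))
```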

Figure 10.

Results of the ART2-based quantization and binarization.

The final microvessel area is extracted by removing the background area, shown in Figure 10b, from the candidate microvessel area from which the boundary of the microvessel has been removed.

3.3. Extraction of Object Information from the Microvessel Area

Contour Tracing Algorithm

Contour tracing is a method used to search for contour coordinates while moving along the contour of an object. Contour tracing is a widely applied effective method for acquiring object information from binary images. Table 1 presents the contour tracing algorithm [17].

Table 1.

Contour tracing algorithm.

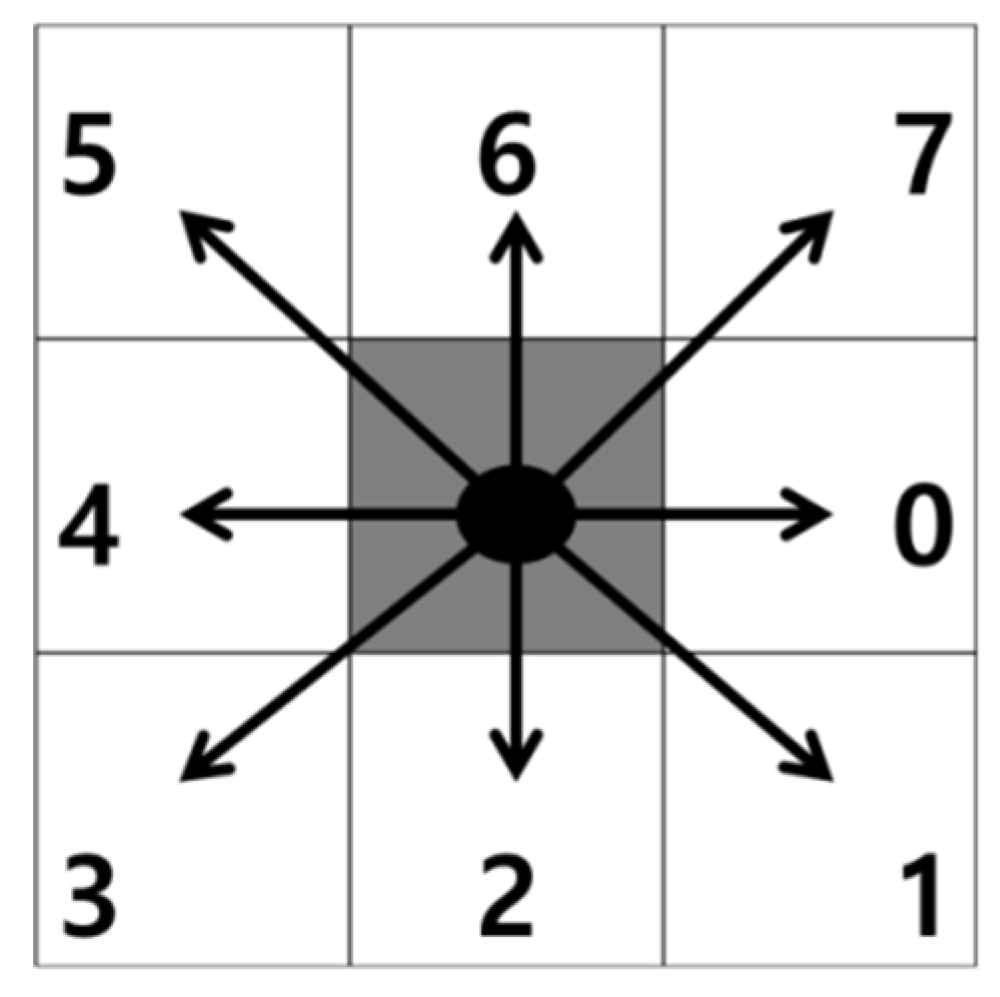

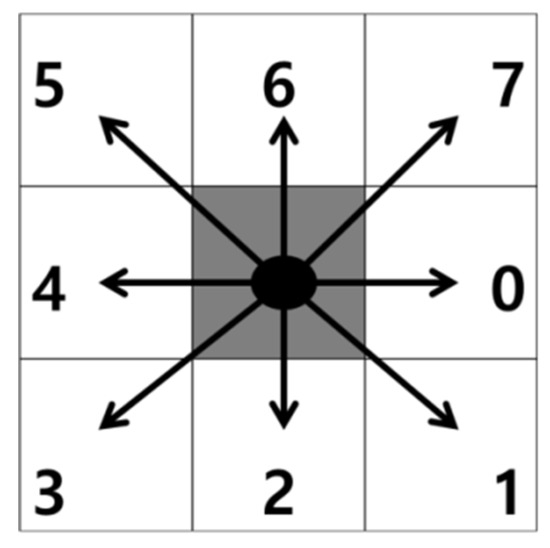

Figure 11.

Contour tracing directions.

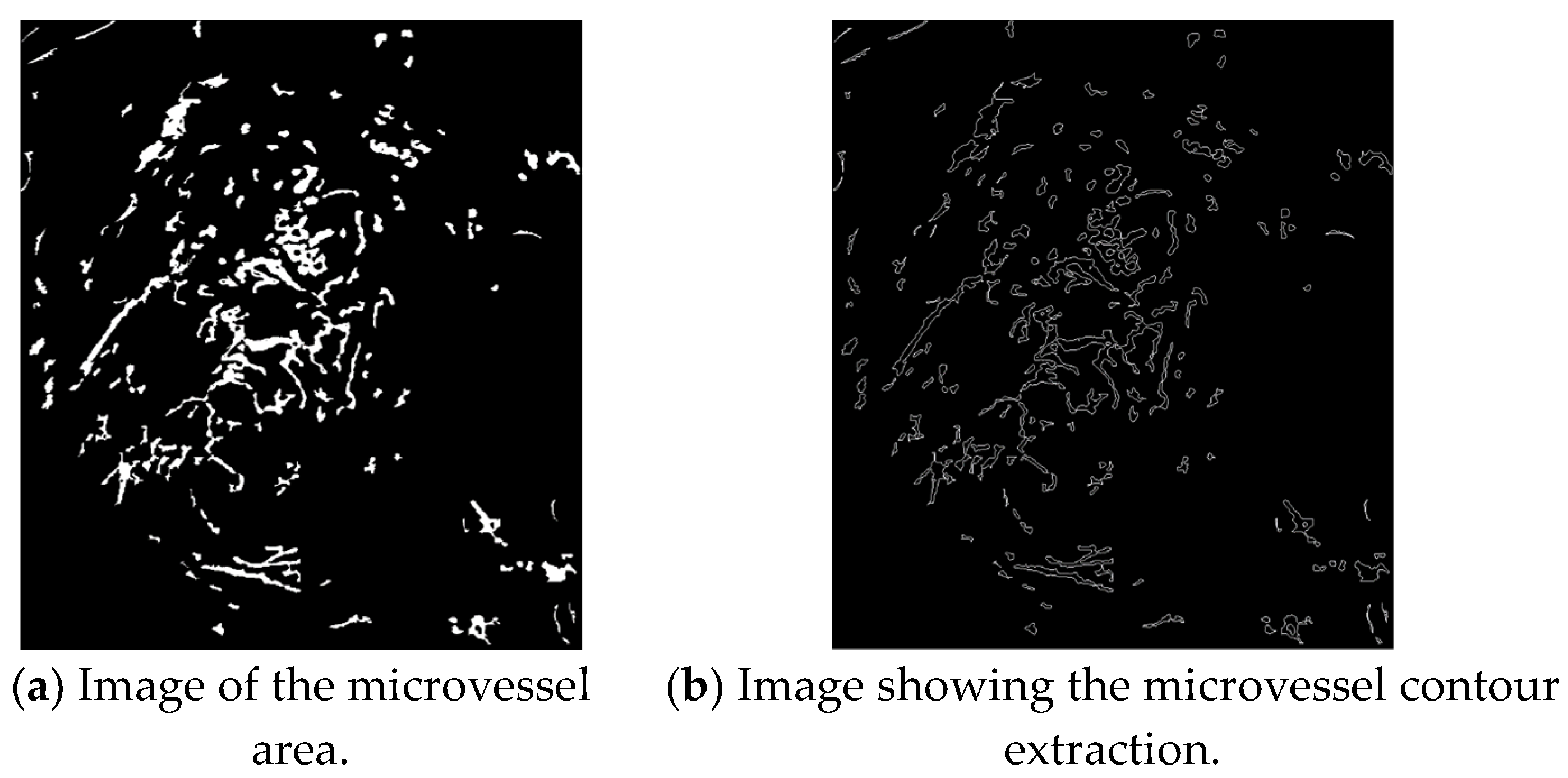

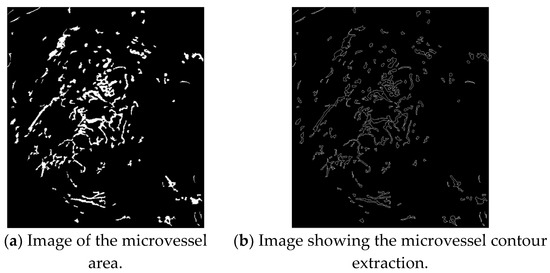

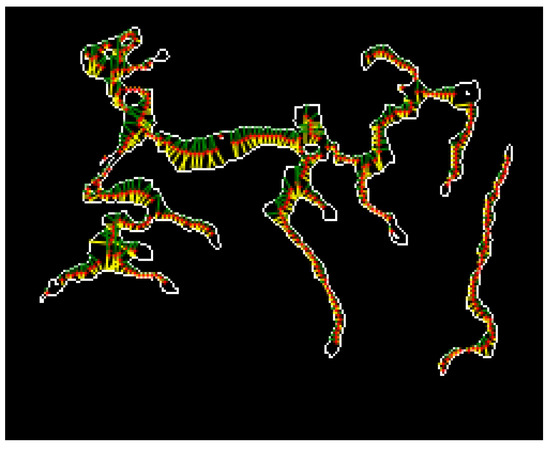

The contours of the extracted microvessels shown in Figure 12b can be obtained by applying the contour tracing algorithm to the image of the microvessel area shown in Figure 12a.

Figure 12.

Contour extraction images.

Figure 13 shows the magnifying endoscopy images of SESCCs according to the JES classification. As shown in Figure 13b,c, the endoscopy images of SESCCs contain a combination of various types of microvessels. For example, the B1 and B2 microvessels coexist in the B2-type image shown in Figure 13b. Because the B2- and B3-type microvessels are more slender and longer than the B1 type, this morphological feature was exploited to select the microvessel contours. The proposed microvessel extraction method sorts the microvessel contours by size and extracts the 10 largest microvessels. These 10 microvessels are then set as the representative microvessels of the endoscopy image.

Figure 13.

B-type images according to the JES classification.

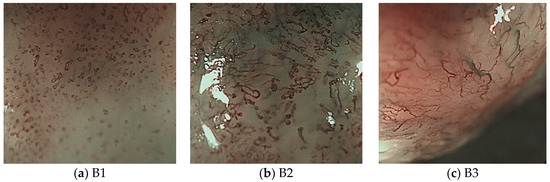

3.4. Extraction of Morphological Information from the Microvessels

To classify the different types of microvessels as the B1 and non-B1 types, the learning data input to the SVM are composed of eight features extracted from each microvessel, as shown in Figure 14. An object can be enclosed using either an enclosing box or a bounding box. The enclosing box method encloses an object within a rectangle defined by two corner points, as shown in Figure 14a, whereas the bounding box is used to find the long and short axes of the object and surrounds the object with minimum area. To measure the aspect ratio of the microvessels accurately, the microvessel information is extracted using the bounding box, as shown in Figure 14b.

Figure 14.

Morphological features of the microvessels.

The center point $(\bar{x}, \bar{y})$, shown in Figure 14, can be calculated using the following equation:

$$\bar{x} = \frac{1}{P} \sum_{i=1}^{P} x_i, \qquad \bar{y} = \frac{1}{P} \sum_{i=1}^{P} y_i \qquad (25)$$

$P$ in Equations (25) and (26) is the perimeter of the object (③ in Figure 14), that is, the pixel count along the object perimeter, and $x_i$ and $y_i$ are the x and y coordinates of the perimeter pixels, respectively.

The eight features are defined as follows: ① the x-axis is the object length, ② the y-axis is the object width, ③ the perimeter is the pixel count along the object contour, and ④ the area is the pixel count enclosed by the boundary of the object. ⑤ The row dispersion, ⑥ column dispersion, ⑦ mixed dispersion, and ⑧ roundness are calculated from Equation (26), which also uses the area of the object.

3.5. Microvessel Thickness Measurement

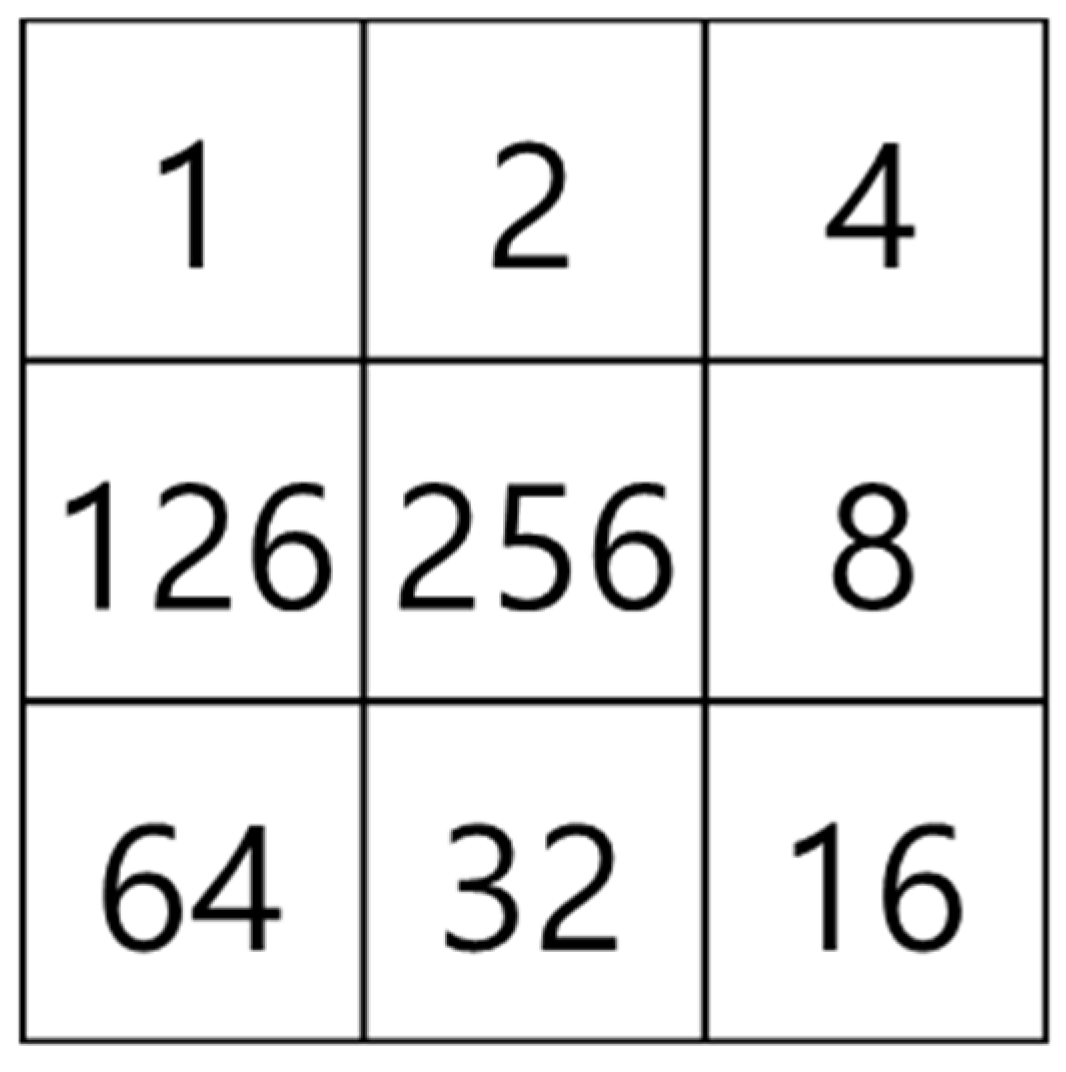

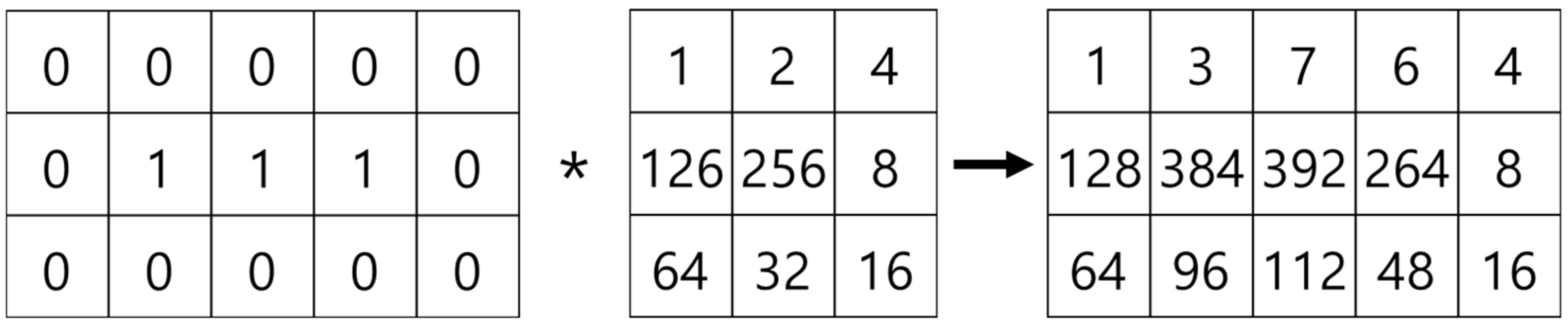

3.5.1. Extraction of the Microvessel Center Axis

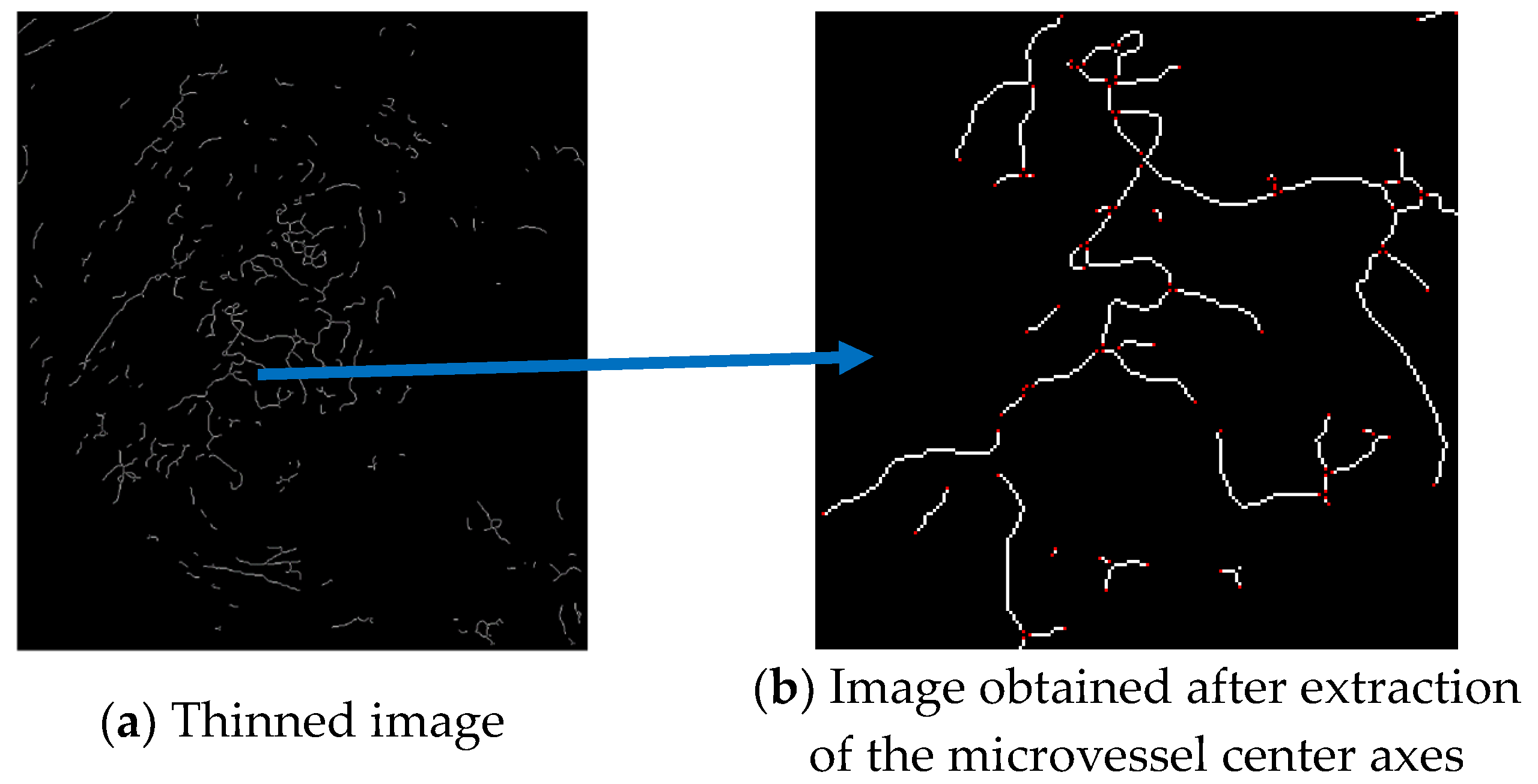

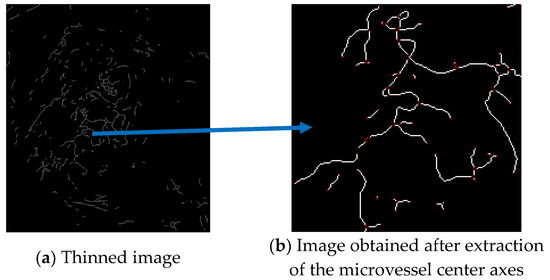

As the first step in measuring the microvessel thickness, the center axis of a microvessel is extracted by applying the thinning algorithm to the candidate microvessel area. As the microvessels in SESCCs have irregular morphologies, the thickness is measured at every point along the center axis of the microvessel to obtain an accurate measurement. However, a microvessel to which the thinning algorithm has been applied contains branch points, where the center axis forks. These branch points are removed because it is difficult to determine the search direction along the center axis at such points.

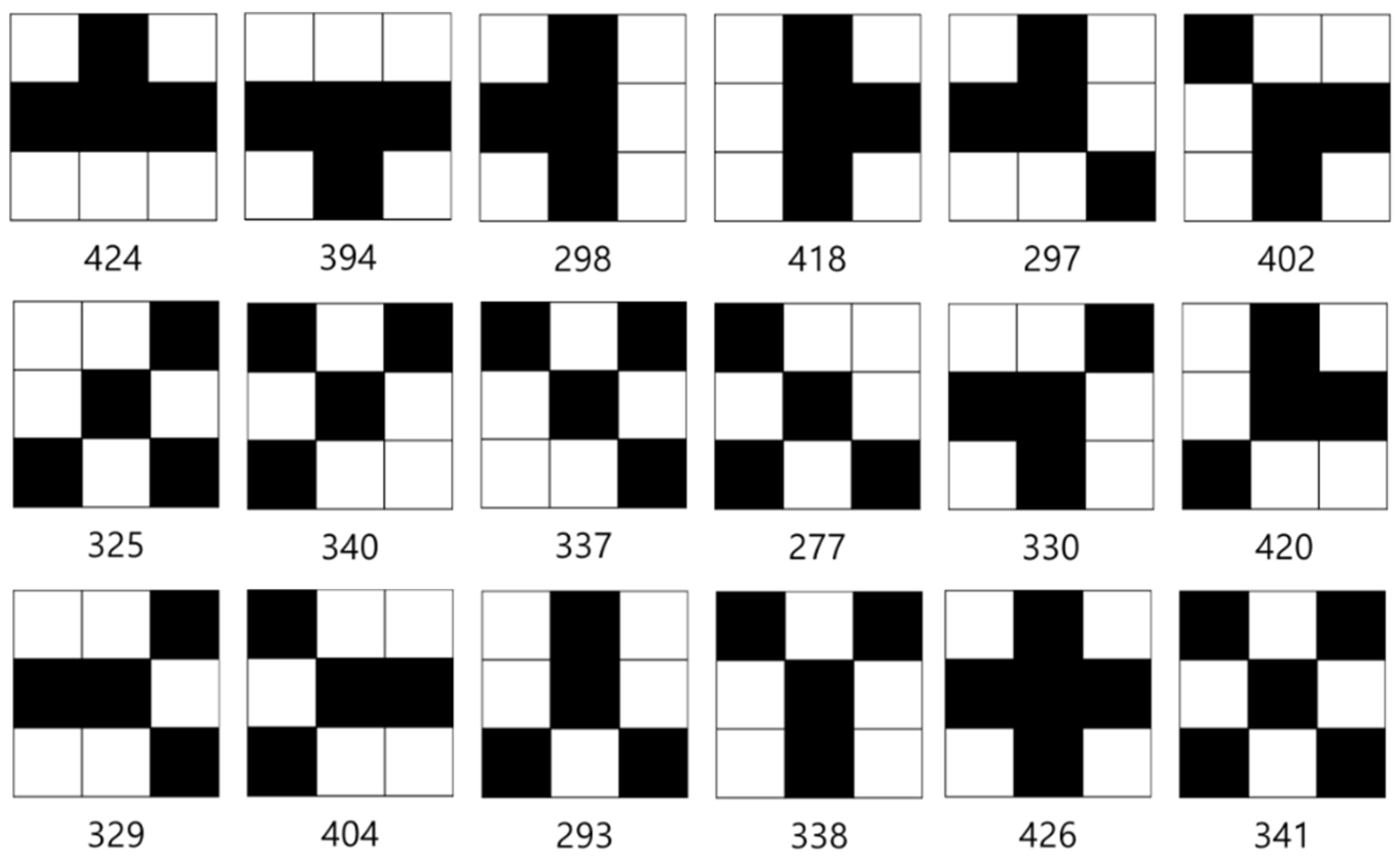

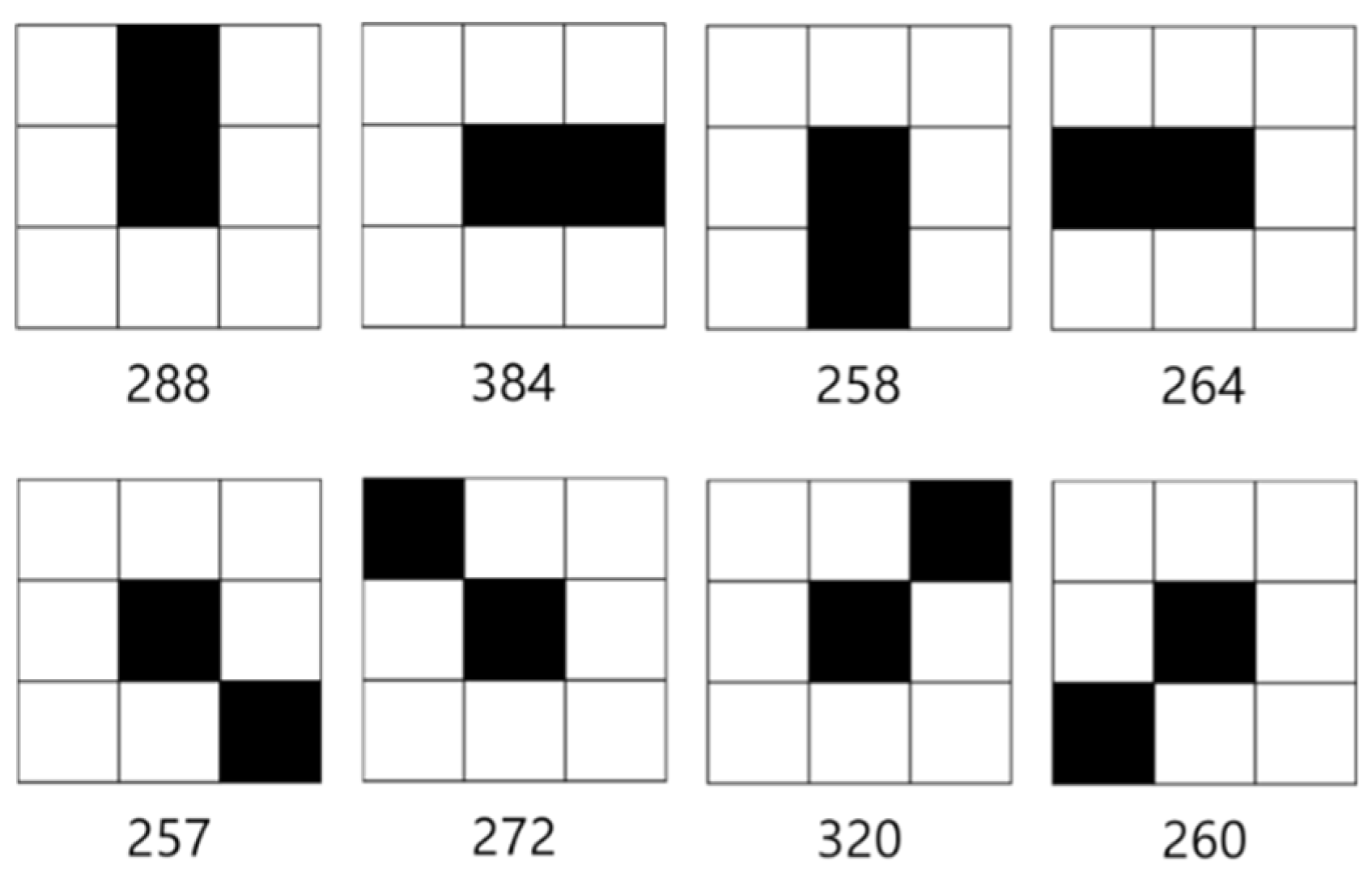

The mask shown in Figure 15, which was proposed by Olsen [18], is applied to determine the microvessel end points and branch points. Figure 16 shows the operation process for thinning the image by applying the mask shown in Figure 15. Figure 17 shows all possible patterns of the branch points in the thinned image and the operation results. Figure 18 shows all the end points and the corresponding operation results in the thinned image.
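End points and branch points of a thinned image can also be located with a crossing-number test, sketched below. This is a commonly used alternative shown purely for illustration and is not the mask of Reference [18]; the function names and the toy skeleton are assumptions. The crossing number counts the 0-to-1 transitions met when walking once around the eight neighbors of a skeleton pixel: a value of 1 indicates an end point, and a value of 3 or more indicates a branch point.

```python
# Eight neighbours in clockwise order, starting from the pixel above.
RING = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def crossing_number(skeleton, r, c):
    """Number of 0 -> 1 transitions around pixel (r, c) of a binary skeleton."""
    rows, cols = len(skeleton), len(skeleton[0])
    ring = []
    for dr, dc in RING:
        rr, cc = r + dr, c + dc
        inside = 0 <= rr < rows and 0 <= cc < cols
        ring.append(1 if inside and skeleton[rr][cc] else 0)
    return sum(1 for i in range(8) if ring[i] == 0 and ring[(i + 1) % 8] == 1)


def find_feature_points(skeleton):
    """Return (end_points, branch_points) as lists of (row, col) tuples."""
    end_points, branch_points = [], []
    for r, row in enumerate(skeleton):
        for c, pixel in enumerate(row):
            if not pixel:
                continue
            transitions = crossing_number(skeleton, r, c)
            if transitions == 1:
                end_points.append((r, c))
            elif transitions >= 3:
                branch_points.append((r, c))
    return end_points, branch_points


# Hypothetical T-shaped skeleton with one junction and three ends.
skeleton = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [1, 1, 1, 1, 1],
]
print(find_feature_points(skeleton))
```

Removing the detected branch points splits the skeleton into unbranched segments, each of which has a well-defined direction for the subsequent thickness search.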

Figure 15.

Feature detection mask.

Figure 16.

Operation process using the feature detection mask.

Figure 17.

Branch point patterns.

Figure 18.

End point patterns.

Figure 19a shows the thinned image of the microvessels, while Figure 19b shows the microvessel center axes extracted by removing the branch points using the mask proposed by Olsen.

Figure 19.

Microvessel center axes extracted by removing branch points from the thinned image.

3.5.2. Microvessel Thickness Measurement

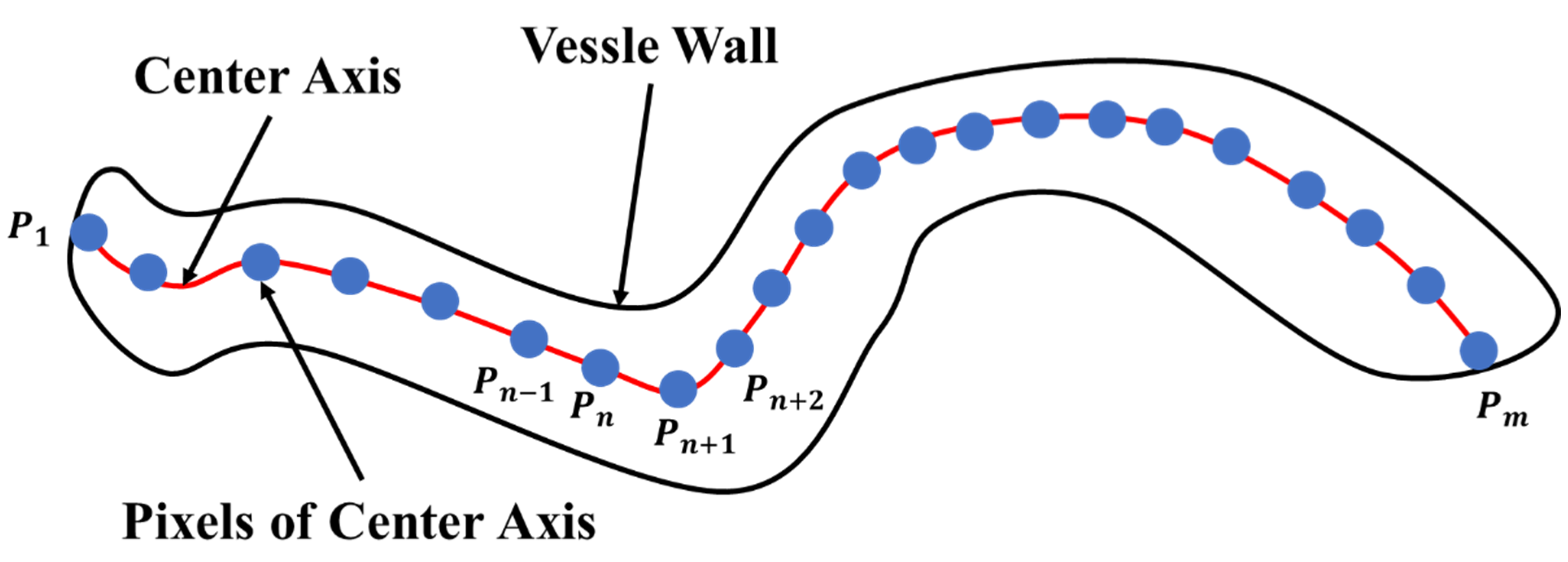

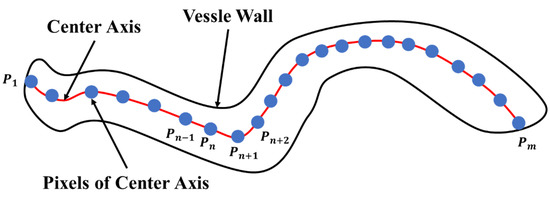

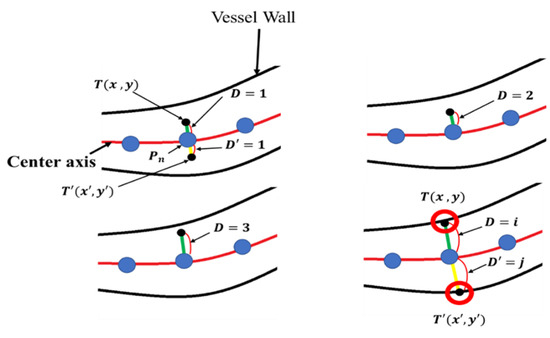

In the microvessel object shown in Figure 20, the red, solid line represents the microvessel center axis, and the blue points represent the pixels along the center axis. The pixels along the center axis are numbered consecutively starting from 1, and the first and last pixels represent the end points of the microvessel object.

Figure 20.

Schematic view of a microvessel.

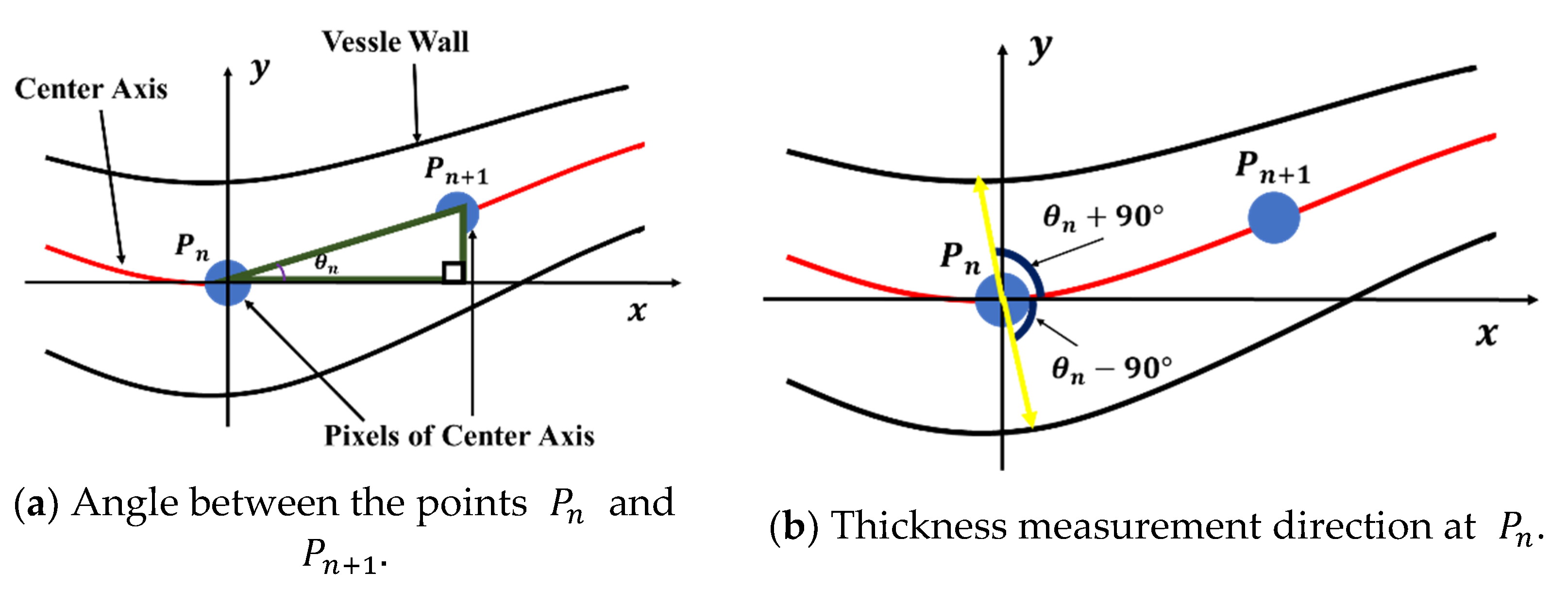

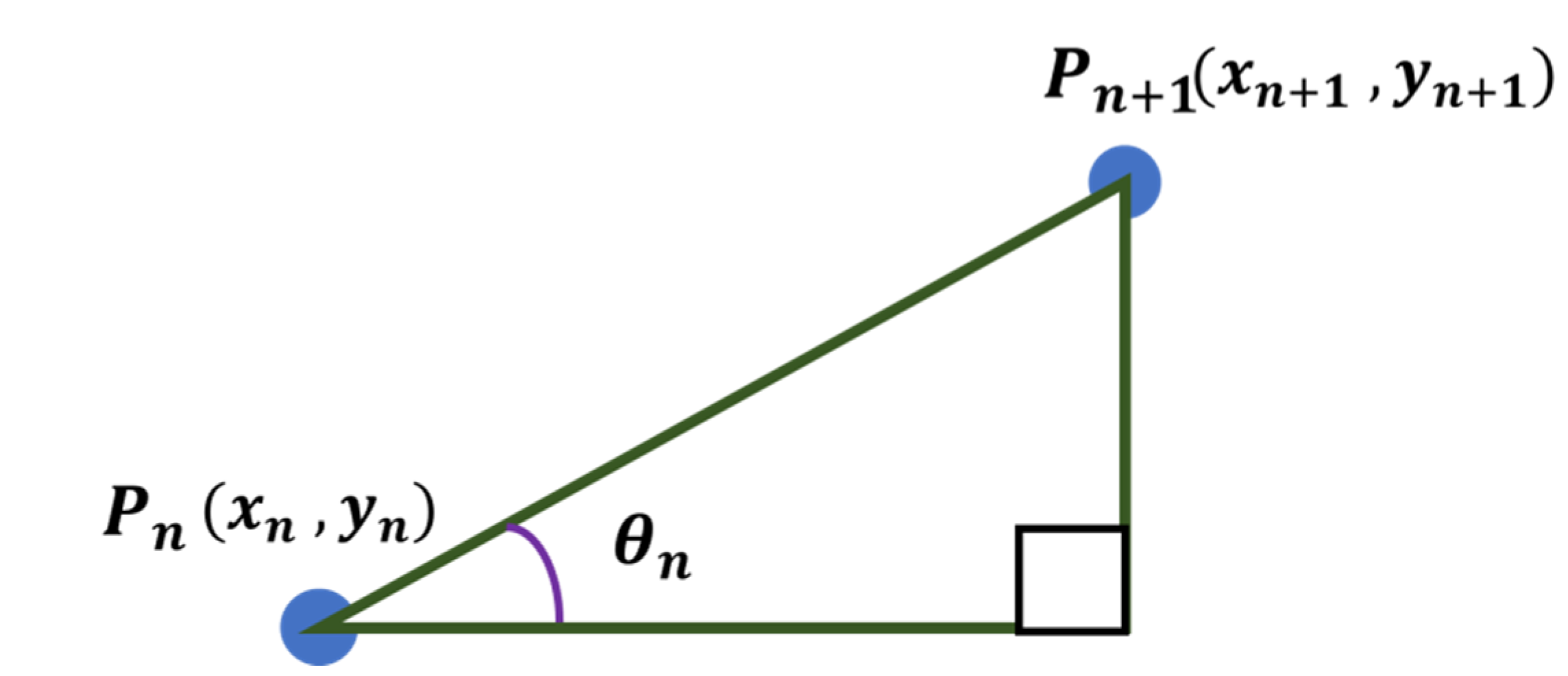

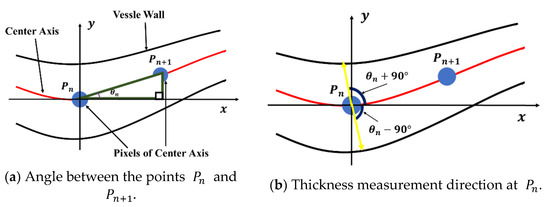

The microvessel thickness at a point on the center axis is calculated using the angle defined by two points on the center axis. In Figure 21b, the microvessel thickness is given by the length of the straight line that passes through the center-axis point and ends at the microvessel walls. To calculate the angle, the points of each center axis are arranged by distance. Thus, the distance from one point to another along the center axis of the microvessel can be calculated using Equation (27). In this study, the Euclidean distance was used.

Figure 21.

Thickness measurement method using the angle defined by two points on the center axis.

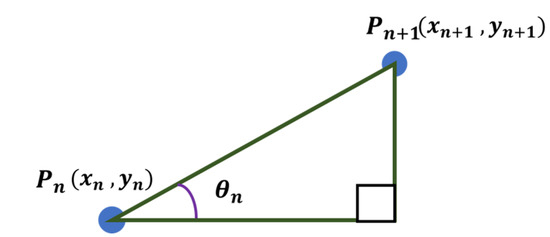

Assuming that two adjacent points exist among the points arranged around the center point, the angle between them is defined as shown in Figure 22 and can be calculated using Equation (28).

Figure 22.

Angle between any two points.

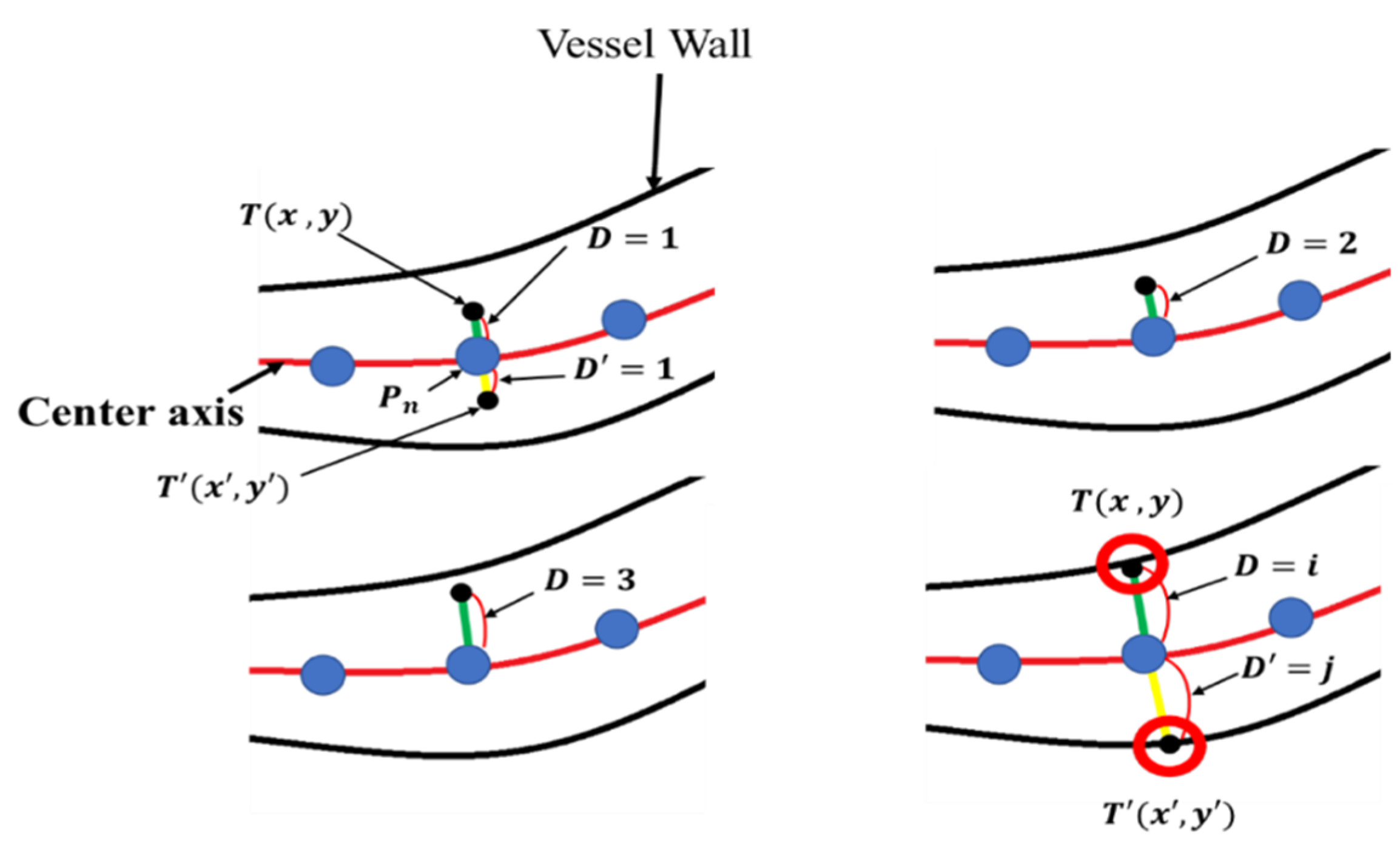

The microvessel thickness measurement method using this angle is illustrated in Figure 23. The green and yellow lines in Figure 23 represent the lengths to be measured from the center-axis point toward the microvessel wall in the two measurement directions.

Figure 23.

Microvessel thickness measurement method.

In each of the two measurement directions, the coordinate of the point at a given distance from the center-axis point is calculated. The two distances are increased by 1 until the microvessel wall is encountered. The coordinates of the two points can be obtained using Equation (29).
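The wall search can be sketched as follows. The sketch assumes that the thickness is measured along the normal to the local direction of the center axis, which is consistent with the description above but does not reproduce Equations (27) to (29); the function name, the `max_steps` limit, and the toy mask are assumptions introduced for illustration.

```python
import math


def thickness_at(mask, center, axis_angle, max_steps=100):
    """Thickness in pixels of a binary vessel mask at a centre-axis point.

    mask: 2D list with 1 for vessel pixels; center: (x, y);
    axis_angle: local direction of the centre axis in radians.
    Steps outward in both normal directions, one pixel at a time,
    until the vessel wall (a background pixel or the image border) is met.
    """
    normal = axis_angle + math.pi / 2.0
    dx, dy = math.cos(normal), math.sin(normal)

    def reach(sign):
        steps = 0
        while steps < max_steps:
            x = int(round(center[0] + sign * (steps + 1) * dx))
            y = int(round(center[1] + sign * (steps + 1) * dy))
            inside = 0 <= y < len(mask) and 0 <= x < len(mask[0])
            if not inside or not mask[y][x]:
                break
            steps += 1
        return steps

    # Both half-widths plus the centre pixel itself.
    return reach(+1) + reach(-1) + 1


# Hypothetical horizontal vessel, 3 pixels thick (rows 2 to 4).
vessel = [[1 if 2 <= r <= 4 else 0 for _ in range(9)] for r in range(7)]
print(thickness_at(vessel, center=(4, 3), axis_angle=0.0))
```

Repeating this measurement at every center-axis pixel gives a thickness profile along the vessel, from which a representative value such as the maximum can be taken.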

Table 2.

Flowchart of the thickness measurement method.

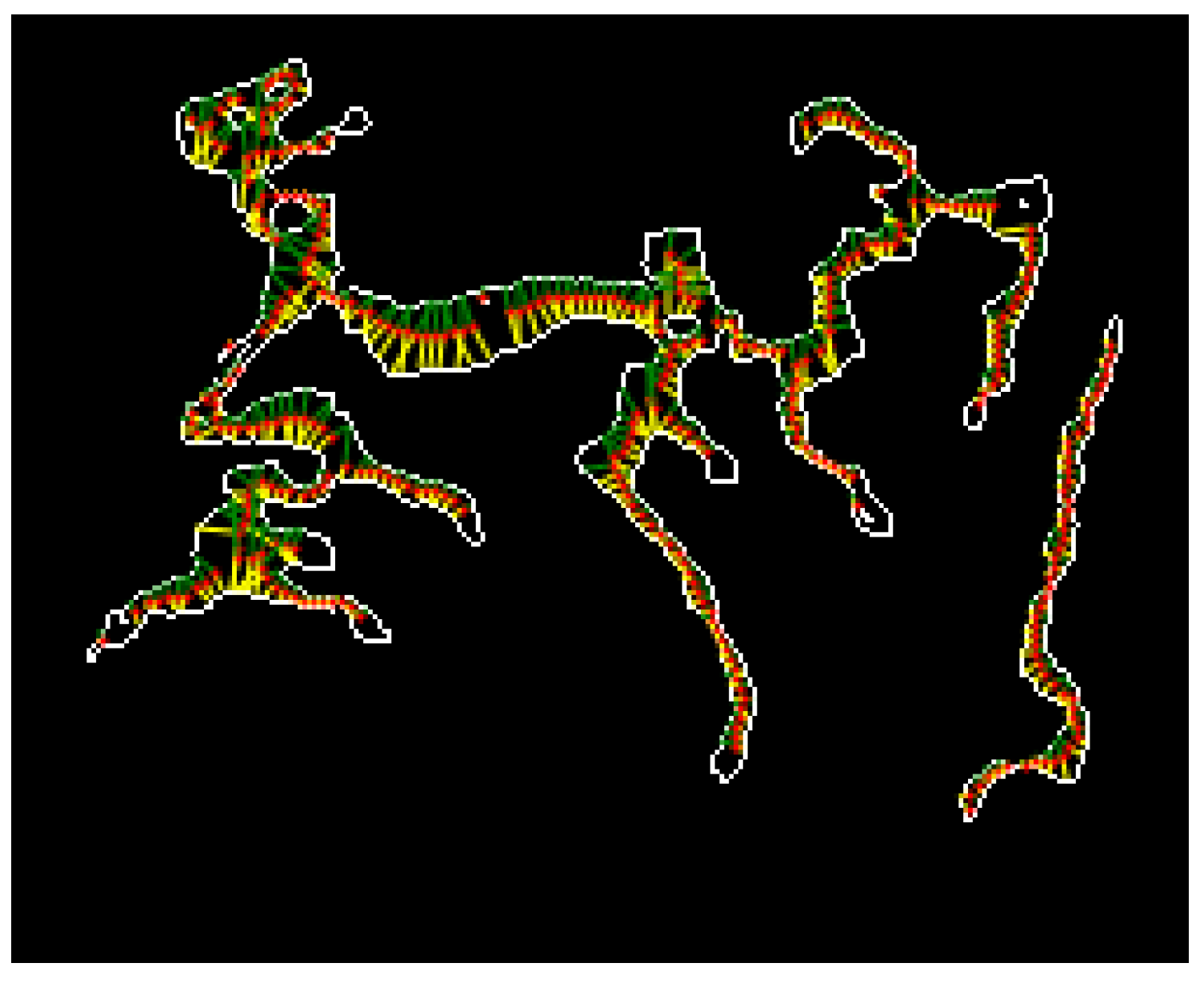

The thickness measurement results are shown in Figure 24. The red points represent the pixels along the center axis of the microvessel. The green and yellow lines indicate the lengths measured in the two measurement directions from the pixels along the microvessel center axis.

Figure 24.

Microvessel thickness measurement results.

4. Results

4.1. Environment

Herein, experiments were conducted with 114 images (47 B1-type, 48 B2-type, and 19 B3-type images) to classify features in the magnifying endoscopy images of SESCCs. The magnifying endoscopy images were collected using a video endoscopy system with a narrow-band imaging function (EVIS-LUCERA SPECTRUM system; Olympus, Tokyo, Japan) and a magnifying endoscope (GIF-H260Z; Olympus, Tokyo, Japan). The control and analysis software was developed using Visual Studio 2015 C# on a PC with an Intel Core i7-3500U CPU and 12.0 GB RAM.

4.2. Experiment Results

4.2.1. Classification Experiment Results for B1 and Non-B1 Types

In this study, the linear kernel was selected from among the various types of SVM kernels for simplicity. The SVM learning model was combined with the one-against-all (OAA) method, which is a multi-class learning technique [19]. In the OAA method, SVM learning is performed by setting one class of data as positive and the other classes as negative. Herein, the B1 type was selected as the positive class, while the B2 and B3 types were the negative classes. The fivefold validation method was adopted to verify the learning data. Accuracy, sensitivity, and specificity were employed as performance indices, as shown in Equation (30), to determine the pattern classification performance for SESCCs. The TP in Equation (30) represents a true positive (i.e., an actual positive was correctly predicted as positive), while TN represents a true negative (i.e., an actual negative was correctly predicted as negative). Furthermore, FP represents a false positive (i.e., an actual negative was predicted as positive), while FN represents a false negative (i.e., an actual positive was predicted as negative).

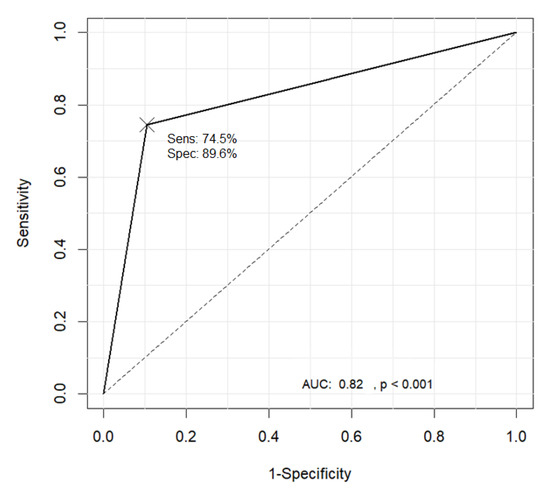
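Equation (30) is available here only as an image. The standard definitions of the three performance indices, with which the values reported in this section are consistent, are:

```latex
\begin{aligned}
\text{Accuracy}    &= \frac{TP + TN}{TP + TN + FP + FN},\\[4pt]
\text{Sensitivity} &= \frac{TP}{TP + FN},\\[4pt]
\text{Specificity} &= \frac{TN}{TN + FP}.
\end{aligned}
```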

Table 3 shows the pattern classification results obtained using the proposed method where the ground truth was provided by a domain expert. Substituting the TP, TN, FP, and FN values from Table 3 into Equation (30) resulted in an accuracy of 83.3%, with a sensitivity and specificity of 74.5% and 89.6%, respectively. The graph showing the receiver operating characteristic (ROC) curve in Figure 25 was drawn using the R software.

Table 3.

Classification performance results for the B1 versus non-B1 types (114 cases).
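The substitution into Equation (30) can be checked with a short Python sketch. The contents of Table 3 are not reproduced here, so the counts below are back-calculated from the reported percentages and the class sizes (47 B1-type and 67 non-B1-type images) and should be treated as an assumption rather than as the published table.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Assumed counts (B1 = positive class): inferred from the reported
# percentages, not read from Table 3.
metrics = classification_metrics(tp=35, tn=60, fp=7, fn=12)
for name, value in metrics.items():
    print(f"{name}: {value * 100:.1f}%")
# accuracy: 83.3%
# sensitivity: 74.5%
# specificity: 89.6%
```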

Figure 25.

Receiver operating characteristic (ROC) curve for the classification results of the B1 and non-B1 types.
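The one-against-all labeling and the fivefold split described in this subsection can be sketched in plain Python as follows. The text does not say whether the folds were shuffled or stratified by class, so both are assumptions of this sketch, and the helper names are hypothetical. Training the linear SVM itself is omitted.

```python
import random


def one_against_all(labels, positive="B1"):
    """Map multi-class labels to 1 (positive class) or 0 (all other classes)."""
    return [1 if label == positive else 0 for label in labels]


def stratified_k_folds(binary_labels, k=5, seed=0):
    """Split sample indices into k folds with similar class proportions."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in (0, 1):
        indices = [i for i, y in enumerate(binary_labels) if y == cls]
        rng.shuffle(indices)
        # Deal the shuffled indices of each class round-robin into the folds.
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


# Class sizes taken from Section 4.1: 47 B1, 48 B2, and 19 B3 images.
labels = ["B1"] * 47 + ["B2"] * 48 + ["B3"] * 19
y = one_against_all(labels)
folds = stratified_k_folds(y, k=5)

for fold_number, test_idx in enumerate(folds, start=1):
    held_out = set(test_idx)
    train_idx = [i for i in range(len(y)) if i not in held_out]
    print(fold_number, len(train_idx), len(test_idx))
```

Each fold serves once as the held-out set while the remaining four folds would be used to fit the classifier.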

4.2.2. Classification Experiment Results for the B2 and B3 Types

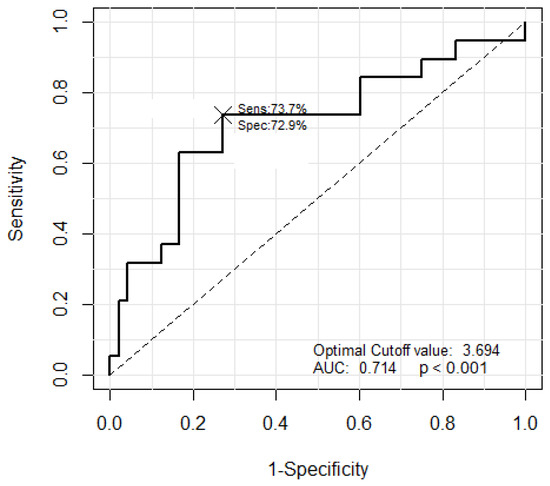

The JES classification was used to classify the B2 and B3 types, based on the morphological criterion that the thickness of the B3 type microvessel is more than three times that of the B2 type microvessels. The JES classification was applied based on the average measured thickness value of the microvessels. The largest thickness value among the measurements was not used, as this might lead to inaccurate results in the presence of an outlier. Thus, the average of the five largest measured thickness values was set as the representative thickness of a thick microvessel. Next, the B2 type microvessels were extracted to facilitate comparisons with the representative microvessel. The B2 type microvessels have long and slender morphological features. Therefore, five microvessels with the smallest area to perimeter ratio were selected as the B2 type among 20 microvessels with an object size ranking between 11 and 30, excluding the representative microvessels. The ratio of the thickness of the representative thick microvessels to the average thickness of the five extracted B2 type microvessels was compared and analyzed. The optimal cut-off value was 3.694. The microvessel classification performance of the B2 and B3 types is shown in Figure 26.

Figure 26.

The ROC curve corresponding to the classification results of the B2 and B3 types.
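The thickness-ratio rule described above can be sketched as follows. Several details are assumptions of this sketch and are not confirmed by the text: "object size" is taken to mean pixel area, the representative microvessels are assumed to have been removed from the object list beforehand, and a ratio equal to the cut-off is assigned to the B3 type. All function and key names are hypothetical.

```python
def representative_thickness(thicknesses, top_n=5):
    """Average of the top_n largest thickness measurements."""
    largest = sorted(thicknesses, reverse=True)[:top_n]
    return sum(largest) / len(largest)


def select_b2_candidates(objects, rank_from=11, rank_to=30, n_select=5):
    """Pick the slender objects used as the B2 reference.

    objects: list of dicts with 'area', 'perimeter', and 'thickness' keys.
    Objects ranked rank_from..rank_to by size are considered, and the
    n_select objects with the smallest area-to-perimeter ratio are returned.
    """
    by_size = sorted(objects, key=lambda o: o["area"], reverse=True)
    window = by_size[rank_from - 1:rank_to]
    window.sort(key=lambda o: o["area"] / o["perimeter"])
    return window[:n_select]


def classify_non_b1(thick_value, b2_objects, cutoff=3.7):
    """Return ('B2' or 'B3', ratio) from the thickness ratio and cut-off."""
    mean_b2 = sum(o["thickness"] for o in b2_objects) / len(b2_objects)
    ratio = thick_value / mean_b2
    return ("B3" if ratio >= cutoff else "B2"), ratio


# Synthetic example values, not measurements from the study.
thick = representative_thickness([12.0, 11.0, 10.0, 9.0, 8.0, 3.0, 2.0])
reference = [{"area": 40, "perimeter": 30, "thickness": 2.5}] * 5
print(classify_non_b1(thick, reference))  # ('B3', 4.0)
```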

The classification results for the B2 and B3 types with the optimal cut-off value of 3.694 are shown in Table 4. Substituting the TP, TN, FP, and FN values from Table 4 into Equation (30) resulted in an accuracy of 71.6% with a sensitivity and specificity of 73.7% and 70.8%, respectively.

Table 4.

Classification results of the B2 and B3 types with the optimal cut-off value.
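The text does not state how the optimal cut-off value was selected from the ROC curve. One common criterion, assumed here purely for illustration, is to maximize Youden's J statistic (sensitivity + specificity − 1) over the candidate thresholds. The data below are synthetic and the function name is hypothetical.

```python
def youden_cutoff(ratios, is_b3):
    """Return (cutoff, J) maximizing Youden's J = sensitivity + specificity - 1.

    ratios: thickness ratios, one per image.
    is_b3:  matching booleans, True for B3-type (positive) images.
    A ratio >= cutoff is predicted as B3. Both classes must be present.
    """
    best = None
    for cutoff in sorted(set(ratios)):
        tp = sum(1 for r, y in zip(ratios, is_b3) if y and r >= cutoff)
        fn = sum(1 for r, y in zip(ratios, is_b3) if y and r < cutoff)
        tn = sum(1 for r, y in zip(ratios, is_b3) if not y and r < cutoff)
        fp = sum(1 for r, y in zip(ratios, is_b3) if not y and r >= cutoff)
        j = tp / (tp + fn) + tn / (tn + fp) - 1
        if best is None or j > best[1]:
            best = (cutoff, j)
    return best


# Synthetic ratios and labels, not data from the study.
ratios = [2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
is_b3 = [False, False, False, True, False, True, True]
print(youden_cutoff(ratios, is_b3))  # (3.5, 0.75)
```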

The clinical classification results for the B2 and B3 types with a cut-off value of 3.7 instead of 3.694 are shown in Table 5. Substituting the TP, TN, FP, and FN values from Table 5 into Equation (30) resulted in an accuracy of 73.1% with a sensitivity and specificity of 73.7% and 72.9%, respectively.

Table 5.

Feature classification results for the B2 and B3 types with a cut-off value of 3.7.

5. Discussion

In this study, an automatic classification method for IPCL patterns based on the JES classification was proposed to estimate the invasion depth of a tumor by extracting microvessels from the magnifying endoscopy images of SESCCs. The microvessels were extracted using the features of IPCLs in the endoscopy images, and the SVM algorithm and proposed thickness measurement method were applied to the extracted microvessels to classify them into the B1, B2, and B3 types according to the JES classification. The SVM algorithm employed for classification into the B1 and non-B1 types was trained using a linear kernel. To evaluate the performance of the proposed method, experiments were conducted with 114 magnifying endoscopy images of SESCCs. For the classification into the B1 and non-B1 types, the results showed an accuracy of 83.3%, with a sensitivity and specificity of 74.5% and 89.6%, respectively. Furthermore, the microvessel thickness was measured and compared to facilitate the classification of the non-B1 type into the B2 and B3 types. When the cut-off value for the ratio of the thickness of the representative thick microvessels to the average thickness of five B2-type microvessels was set to 3.7, the experimental results showed an accuracy of 73.1% with a sensitivity and specificity of 73.7% and 72.9%, respectively. These results indicate that the proposed method can provide an objective interpretation of the magnifying endoscopy images of SESCCs. Thus, this study demonstrates the feasibility of the proposed method as a computer-aided diagnosis system in clinical practice.

A recent study on artificial intelligence for the classification of IPCL patterns in the endoscopic diagnosis of SESCCs analyzed 7046 magnifying endoscopy images from 17 patients (10 SESCC, 7 normal) using a convolutional neural network (CNN) [20]. The accuracy, sensitivity, and specificity for abnormal IPCL patterns were 93.7%, 89.3%, and 98%, respectively. Another recent study introduced a computer-aided diagnosis system for the real-time automated diagnosis of precancerous lesions and SESCCs in 6473 narrow-band imaging images [21]. The sensitivity and specificity for diagnosing precancerous lesions and SESCCs were 98.0% and 95.0%, respectively. These outcomes were also observed in video datasets. However, the main outcome of these two studies was only the differentiation between normal and abnormal findings. Detailed classification into the B1, B2, and B3 types, as presented in this study, was not reported. In addition, in the present study, the non-B1 type was sub-classified into the B2 and B3 types using the newly developed microvessel thickness measurement method.

This study has several limitations. First, the evaluated dataset had a relatively small number of images (114), and all magnifying endoscopy images were retrospectively obtained from a single center. In Korea, the age-standardized incidence rate of esophageal cancer was 5.9 per 100,000 population in 2013, and SESCCs accounted for less than 10% of all esophageal cancers [22]. Furthermore, only high-quality magnifying endoscopy images were employed because the proposed system might produce erroneous results for images that are blurred or out of focus. Consequently, only a small number of SESCC images were included in this study. The ability of the proposed automatic classification method to evaluate poor-quality endoscopy images should therefore be investigated after validating its performance on a large dataset of high-quality images.

Despite these limitations, the results demonstrated the ability of the proposed machine learning based computer-aided diagnostic system to obtain objective data by analyzing the pattern and caliber of the microvessels with acceptable performance. Future work will focus on a large-scale, multi-center study to validate the diagnostic ability of the proposed system to predict the invasion depth of the tumor in SESCCs.

Furthermore, to stabilize the performance of the proposed system and validate its clinical efficacy, further research is necessary to enhance the processing speed and improve diagnostic consistency between the endoscopists’ evaluation and the proposed method. This can potentially be achieved using the deep Boltzmann machine algorithm to increase the accuracy of the pattern classification of SESCCs.

Author Contributions

Conceptualization, K.B.K. and G.H.K.; methodology, K.B.K., G.Y.Y., and G.H.K.; software, K.B.K. and G.Y.Y.; validation, G.H.K. and H.K.J.; formal analysis, G.Y.Y.; resources, G.H.K.; data curation, G.Y.Y. and G.H.K.; writing (original draft preparation), K.B.K., G.Y.Y., and G.H.K.; writing (review and editing), K.B.K., G.Y.Y., G.H.K., D.H.S., and H.K.J.; visualization, D.H.S.; supervision, K.B.K. and G.H.K.; project administration, K.B.K.; funding acquisition, G.H.K. and H.K.J. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by Biomedical Research Institute Grant (2017B004), Pusan National University Hospital.

Conflicts of Interest

The authors declare no conflict of interest regarding the publication of this paper.

References

1. Kumagai, Y.; Toi, M.; Kawada, K.; Kawano, T. Angiogenesis in superficial esophageal squamous cell carcinoma: Magnifying endoscopic observation and molecular analysis. Dig. Endosc. 2010, 22, 259–267.

2. Oyama, T.; Inoue, H.; Arima, M.; Omori, T.; Ishihara, R.; Hirasawa, D.; Takeuchi, M.; Tomori, A.; Goda, K. Prediction of the invasion depth of superficial squamous cell carcinoma based on microvessel morphology: Magnifying endoscopic classification of the Japan Esophageal Society. Esophagus 2017, 14, 105–112.

3. Kim, S.J.; Kim, G.H.; Lee, M.W.; Jeon, H.K.; Baek, D.H.; Lee, B.H.; Song, G.A. New magnifying endoscopic classification for superficial esophageal squamous cell carcinoma. World J. Gastroenterol. 2017, 23, 4416–4421.

4. Sato, H.; Inoue, H.; Ikeda, H.; Sato, C.; Onimaru, M.; Hayee, B.; Phlanusi, C.; Santi, E.G.R.; Kobayashi, Y.; Kudo, S. Utility of intrapapillary capillary loops seen on magnifying narrow-band imaging in estimating invasive depth of esophageal squamous cell carcinoma. Endoscopy 2015, 47, 122–128.

5. Arima, M.; Tada, T.; Arima, H. Evaluation of microvascular patterns of superficial esophageal cancers by magnifying endoscopy. Esophagus 2005, 2, 191–197.

6. Cortes, C.; Vapnik, V. Support-Vector networks. Mach. Learn. 1995, 20, 273–297.

7. Ebrahimi, M.A.; Khoshtaghaza, M.H.; Minaei, S.; Jamshidi, B. Vision-Based pest detection based on SVM classification method. Comput. Electron. Agric. 2017, 137, 52–58.

8. Sun, F.; Xu, Y.; Zhou, J. Active learning SVM with regularization path for image classification. Multimed. Tools Appl. 2016, 75, 1427–1442.

9. Yang, X.; Yu, Q.; He, L.; Guo, T. The one-against-all partition based binary tree support vector machine algorithms for multi-class classification. Neurocomputing 2013, 113, 1–7.

10. Gutierrez, M.A.; Pilon, P.E.; Lage, S.G.; Kopel, L.; Carvalho, R.T.; Furuie, S.S. Automatic measurement of carotid diameter and wall thickness in ultrasound images. Comput. Cardiol. 2002, 29, 359–362.

11. Lowell, J.; Hunter, A.; Steel, D.; Basu, A.; Ryder, R.; Lee Kennedy, R. Measurement of retinal vessel widths from fundus images based on 2-D modeling. IEEE Trans. Med. Imaging 2004, 23, 1196–1204.

12. Kim, K.B.; Song, Y.S.; Park, H.J.; Song, D.H.; Choi, B.K. A fuzzy C-means quantization based automatic extraction of rotator cuff tendon tears from ultrasound images. J. Intell. Fuzzy Syst. 2018, 35, 149–158.

13. Saxena, L.P. Niblack’s binarization method and its modifications to real-time applications: A review. Artif. Intell. Rev. 2019, 51, 673–705.

14. Samorodova, O.A.; Samorodov, A.V. Fast implementation of the Niblack binarization algorithm for microscope image segmentation. Pattern Recognit. Image Anal. 2016, 26, 548–551.

15. Broughton, S.; Bryan, K. Discrete Fourier Analysis and Wavelets: Applications to Signal and Image Processing; Wiley-Interscience: Hoboken, NJ, USA, 2008.

16. Park, J.; Song, D.H.; Han, S.S.; Lee, S.J.; Kim, K.B. Automatic extraction of soft tissue tumor from ultrasonography using ART2 based intelligent image analysis. Curr. Med. Imaging 2017, 13, 447–453.

17. Kim, K.B.; Kim, S. A passport recognition and face verification using enhanced fuzzy ART based RBF network and PCA algorithm. Neurocomputing 2008, 71, 3202–3210.

18. Olsen, M.A.; Hartung, D.; Busch, C.; Larsen, R. Convolution approach for feature detection in topological skeletons obtained from vascular patterns. In Proceedings of the 2011 IEEE Workshop on Computational Intelligence in Biometrics and Identity Management (CIBIM), Paris, France, 11–15 April 2011; pp. 163–167.

19. Kang, C.; Huo, Y.; Xin, L.; Tian, B.; Yu, B. Feature selection and tumor classification for microarray data using relaxed Lasso and generalized multi-class support vector machine. J. Theor. Biol. 2019, 463, 77–91.

20. Everson, M.; Herrera, L.; Li, W.; Luengo, I.M.; Ahmad, O.; Banks, M.; Magee, C.; Alzoubaidi, D.; Hsu, H.M.; Graham, D.; et al. Artificial intelligence for the real-time classification of intrapapillary capillary loop patterns in the endoscopic diagnosis of early oesophageal squamous cell carcinoma: A proof-of-concept study. United Eur. Gastroenterol. J. 2019, 7, 297–306.

21. Guo, L.; Xiao, X.; Wu, C.; Zeng, X.; Zhang, Y.; Du, J.; Bai, S.; Xie, J.; Zhang, Z.; Li, Y.; et al. Real-Time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest. Endosc. 2020, 91, 41–51.

22. Jung, H.K. Epidemiology of and risk factors for esophageal cancer in Korea. Korean J. Helicobacter Up. Gastrointest. Res. 2019, 19, 145–148.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).