Measurement of the Permissible Range of Consistency between Visual and Tactile Presentations of Line Grating Textures

Abstract

1. Introduction

2. Materials and Methods

2.1. Measurement Method

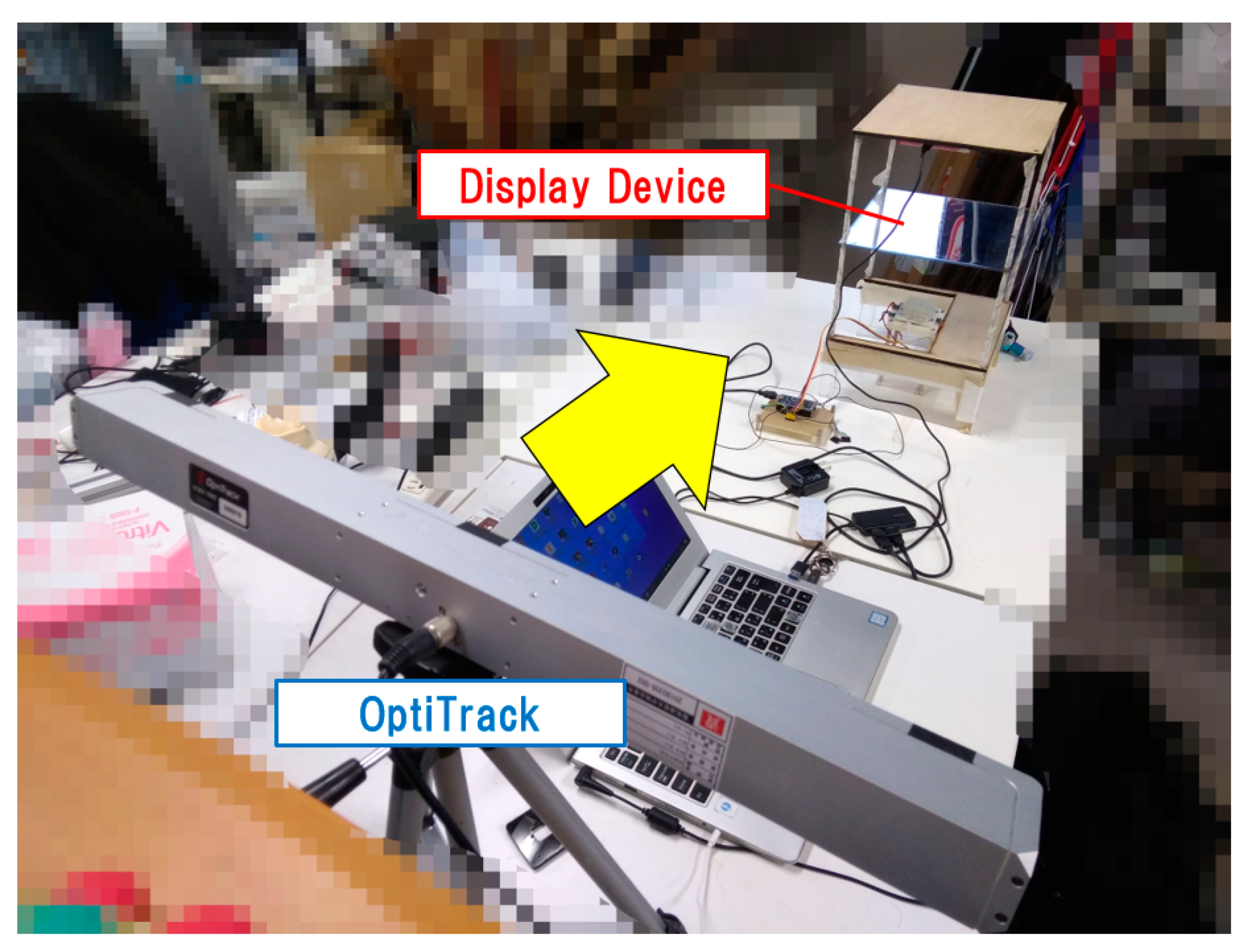

2.1.1. Display Equipment

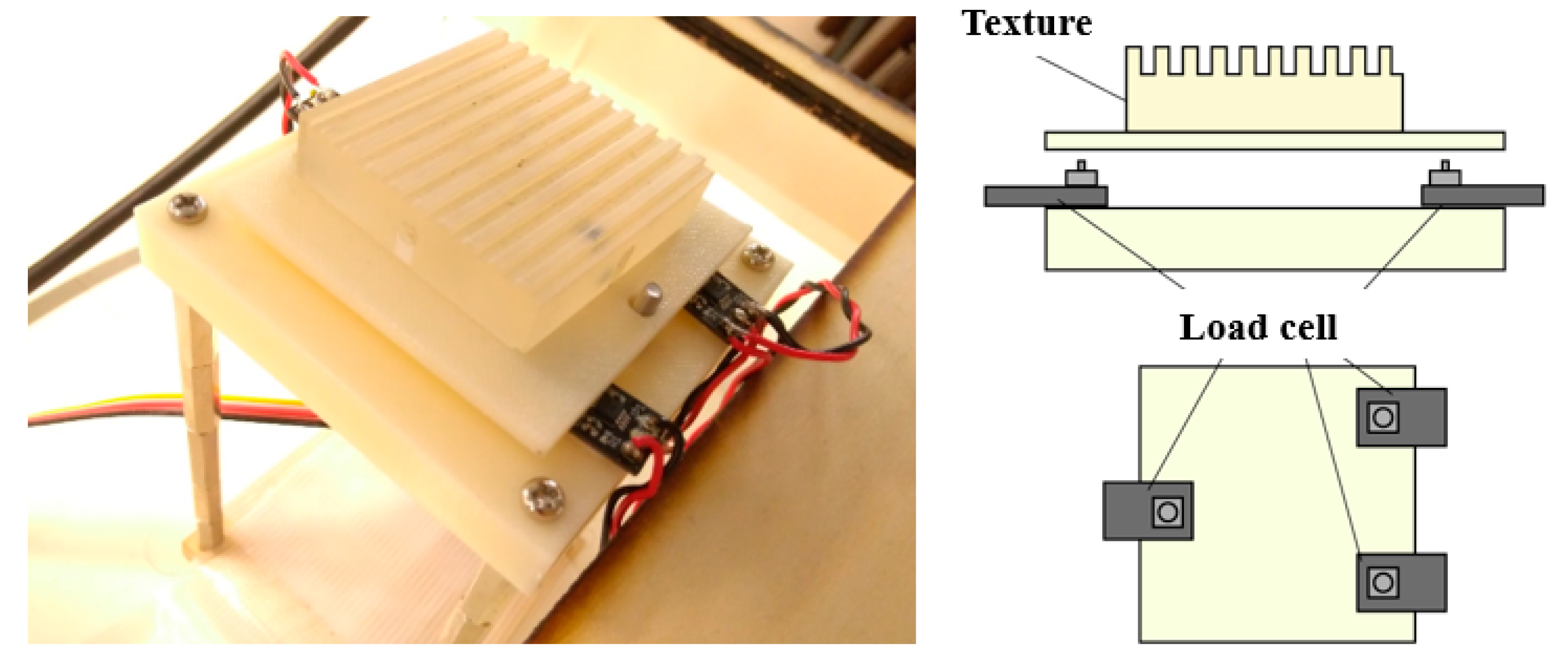

2.1.2. Real Textures

2.1.3. Visual Textures

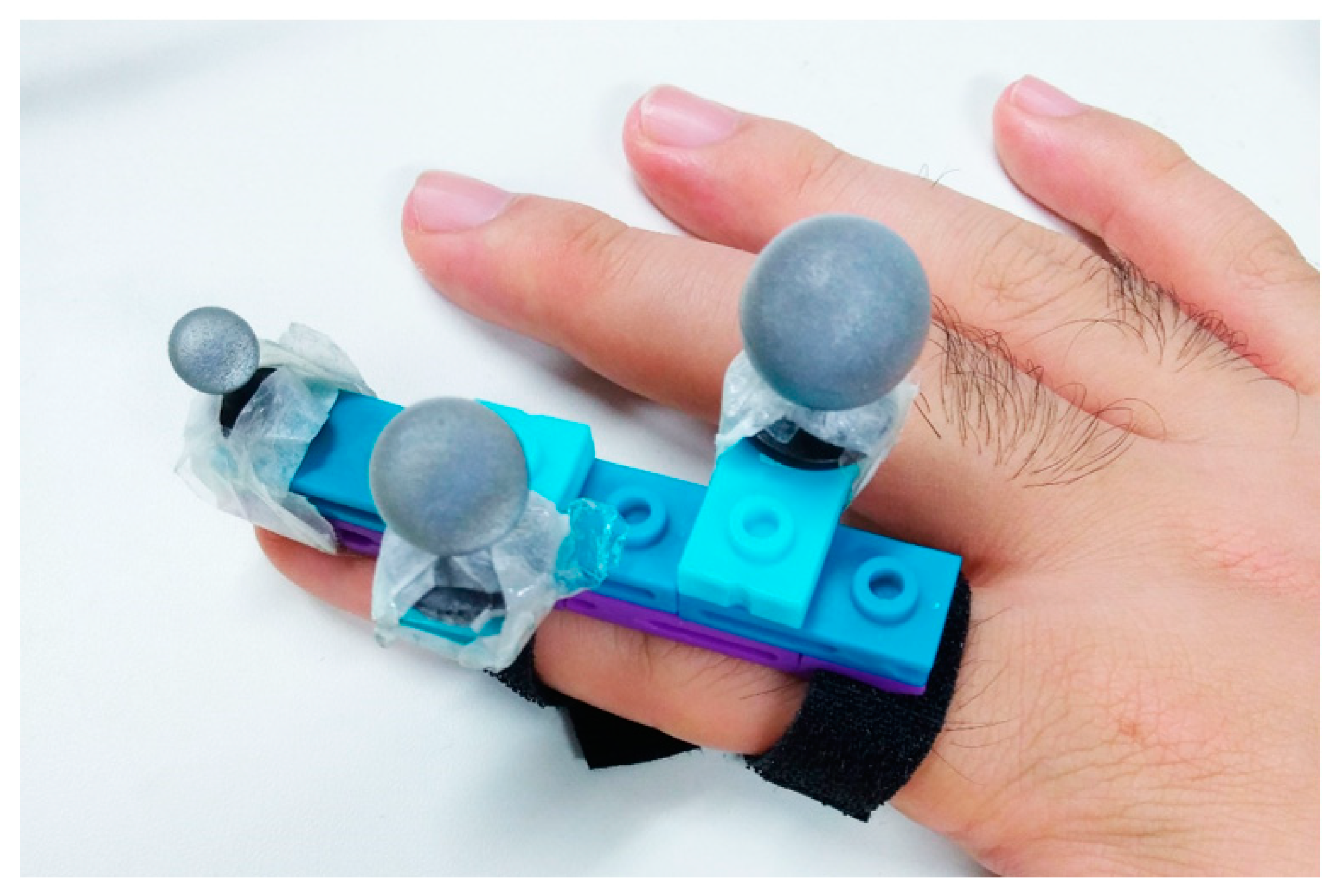

2.1.4. Tracking Fingertip and Measuring Pressure

2.2. Procedure

- Each trial started with an image whose stripe width was either “real texture + 32 px (1.00 mm)” or “real texture − 32 px”. For example, for a real texture of 1.6 mm, the trial started with an image whose stripes were 2.6 mm or 0.6 mm wide. For real textures 2.6 mm and 3.0 mm wide, preliminary experiments indicated that “real texture − 32 px” was likely to lie near the threshold, so the lower starting point was set to “real texture − 49 px” (1.54 mm). For real textures of 0.6 mm and 1.0 mm, the equivalent stripe widths were 19 px and 31 px, respectively, so subtracting 32 px would leave no drawable stripe; the lower starting point was therefore set to 2 px, the minimum stripe width that can be drawn. Finally, for the 0.2 mm real texture, participants found it difficult to judge even against a 2 px image, so trials starting below the real texture were omitted.

- Participants touched the real texture (reference stimulus) while watching the image (comparison stimulus) reflected in the mirror. No time limit was set for exploring the texture. Participants were asked to trace the texture in the front-back direction (orthogonal to the stripes). After each exploration, they reported whether the grating spacing in the image was larger or smaller than that of the real texture they were touching.

- The grating spacing of the image was changed step by step toward the spatial period of the real texture. When the participant’s response reversed relative to the previous response (i.e., from “large” to “small” or from “small” to “large”), the spacing was instead changed away from the spatial period of the real texture. During this away phase, the next reversal in the participant’s response switched the direction back toward the spatial period of the real texture.

- While the stripe spacing of the visual texture was being decreased, if the response had still not changed when the visual texture reached 2 px, the stripe spacing of the image was forcibly increased. However, this never occurred during the experiment.

- The step size for the grating spacing in the image was initially 12 px (0.376 mm). Each time the response reversed, the step size was reduced in turn to 6 px (0.188 mm), 3 px (0.0941 mm), 2 px (0.0626 mm), and 1 px (0.0313 mm); from the fourth reversal onward, it remained fixed at 1 px (0.0313 mm). Because a weighted 1-down/3-up method was used, each step taken in the away phase was three times the current step size. The actual changes were therefore 12 px, 18 px (6 px × 3), 3 px, 6 px (2 px × 3), 1 px, and 3 px (1 px × 3).

- Once the response had reversed six times within a trial, the stimulus value at that point was taken as the 75% threshold, following previous literature [21].
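The staircase described in the steps above can be sketched in Python as a small simulation. It is a minimal sketch under stated assumptions, not the authors' implementation: the names `run_staircase` and `px_to_mm` and the toy observer are hypothetical; the pixel pitch of 0.0313 mm/px is taken from the step sizes quoted in the text; the threshold is read as the stripe width presented at the sixth reversal; and the forced increase at 2 px, which never occurred in the experiment, is replaced by a simple clamp.

```python
"""Sketch of the weighted up-down staircase in Section 2.2 (hypothetical names)."""
from typing import Callable, List, Optional, Tuple

MM_PER_PX = 0.0313                # pitch implied by "1 px (0.0313 mm)" in the text
BASE_STEPS_PX = [12, 6, 3, 2, 1]  # step after 0, 1, 2, 3 and >= 4 reversals
AWAY_FACTOR = 3                   # steps away from the real texture are 3x larger
MIN_WIDTH_PX = 2                  # narrowest stripe that can be drawn
N_REVERSALS = 6                   # trial ends at the sixth response reversal


def px_to_mm(px: float) -> float:
    """Convert a stripe width on the display from pixels to millimetres."""
    return px * MM_PER_PX


def run_staircase(
    start_px: int,
    starts_above: bool,
    says_larger: Callable[[int], bool],
    max_presentations: int = 500,
) -> Tuple[int, List[Tuple[int, bool]]]:
    """Run one trial; return (width at the sixth reversal, presentation history).

    starts_above: True if the trial starts wider than the real texture.
    says_larger:  observer model, True meaning "image spacing is larger".
    """
    width = start_px
    previous: Optional[bool] = None
    reversals = 0
    history: List[Tuple[int, bool]] = []
    for _ in range(max_presentations):
        response = says_larger(width)
        history.append((width, response))
        if previous is not None and response != previous:
            reversals += 1
            if reversals == N_REVERSALS:
                return width, history  # taken as the 75% threshold
        previous = response
        base = BASE_STEPS_PX[min(reversals, len(BASE_STEPS_PX) - 1)]
        # The response typical of the starting side keeps moving toward the real texture.
        toward = response == starts_above
        step = base if toward else base * AWAY_FACTOR
        toward_sign = -1 if starts_above else 1
        direction = toward_sign if toward else -toward_sign
        # Assumption: the forced increase at 2 px is not modelled; we only clamp.
        width = max(MIN_WIDTH_PX, width + direction * step)
    raise RuntimeError("staircase did not reach six reversals")


if __name__ == "__main__":
    # Toy deterministic observer (hypothetical): "larger" whenever width > 57 px.
    threshold_px, history = run_staircase(89, True, lambda w: w > 57)
    print(threshold_px, round(px_to_mm(threshold_px), 3))  # prints: 60 1.878
```

With this toy observer, the realized steps follow the 12, 18, 3, 6, 1, 3 px pattern given in the text. A deterministic observer is used only to make the run reproducible; with a real, noisy observer the rationale of the weighted up-down method [21] is that a step away from the real texture is three times a step toward it, so the staircase settles where the starting-side response is given about three times as often as the opposite one, i.e., near 75%.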

2.3. Experimental Conditions

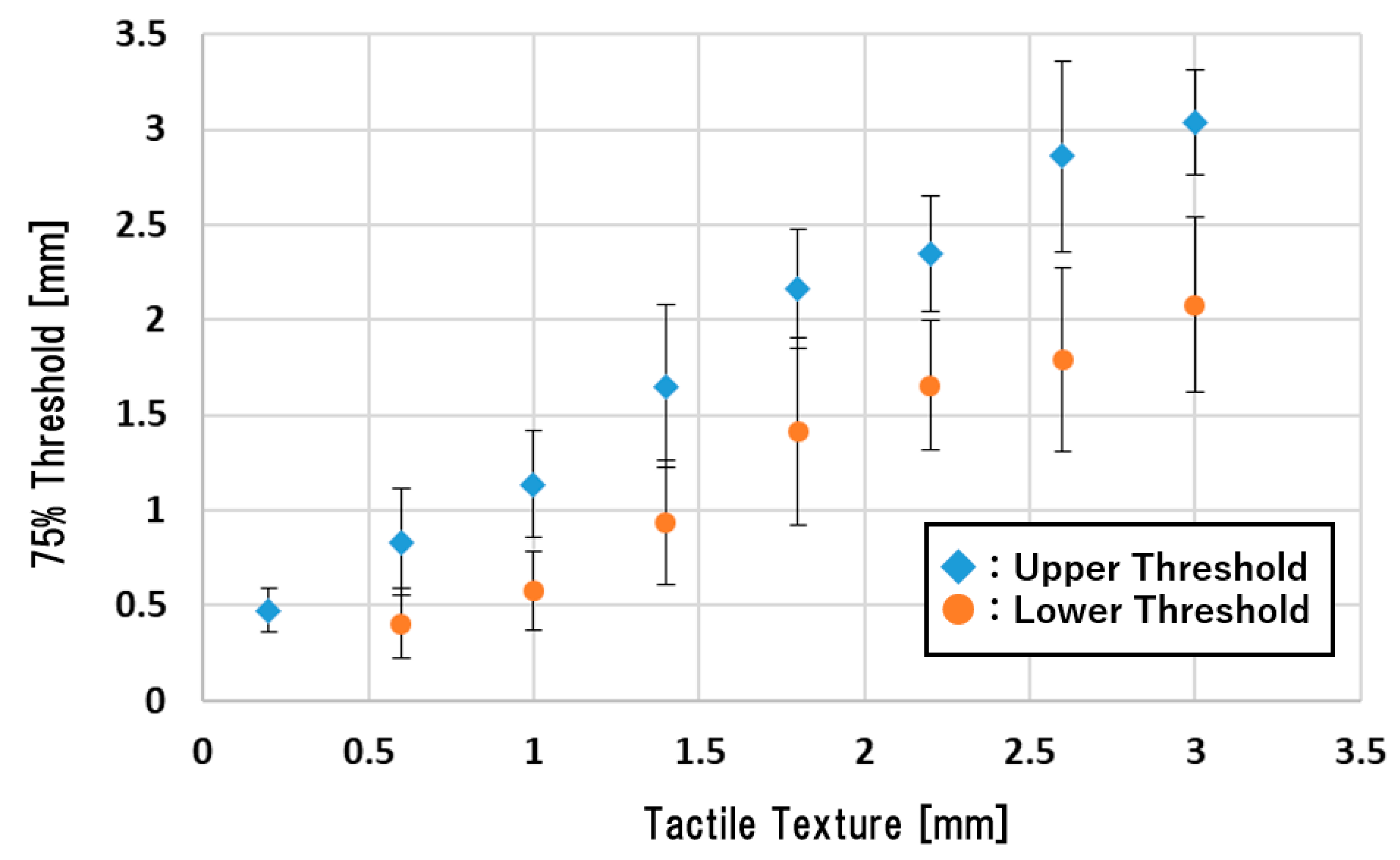

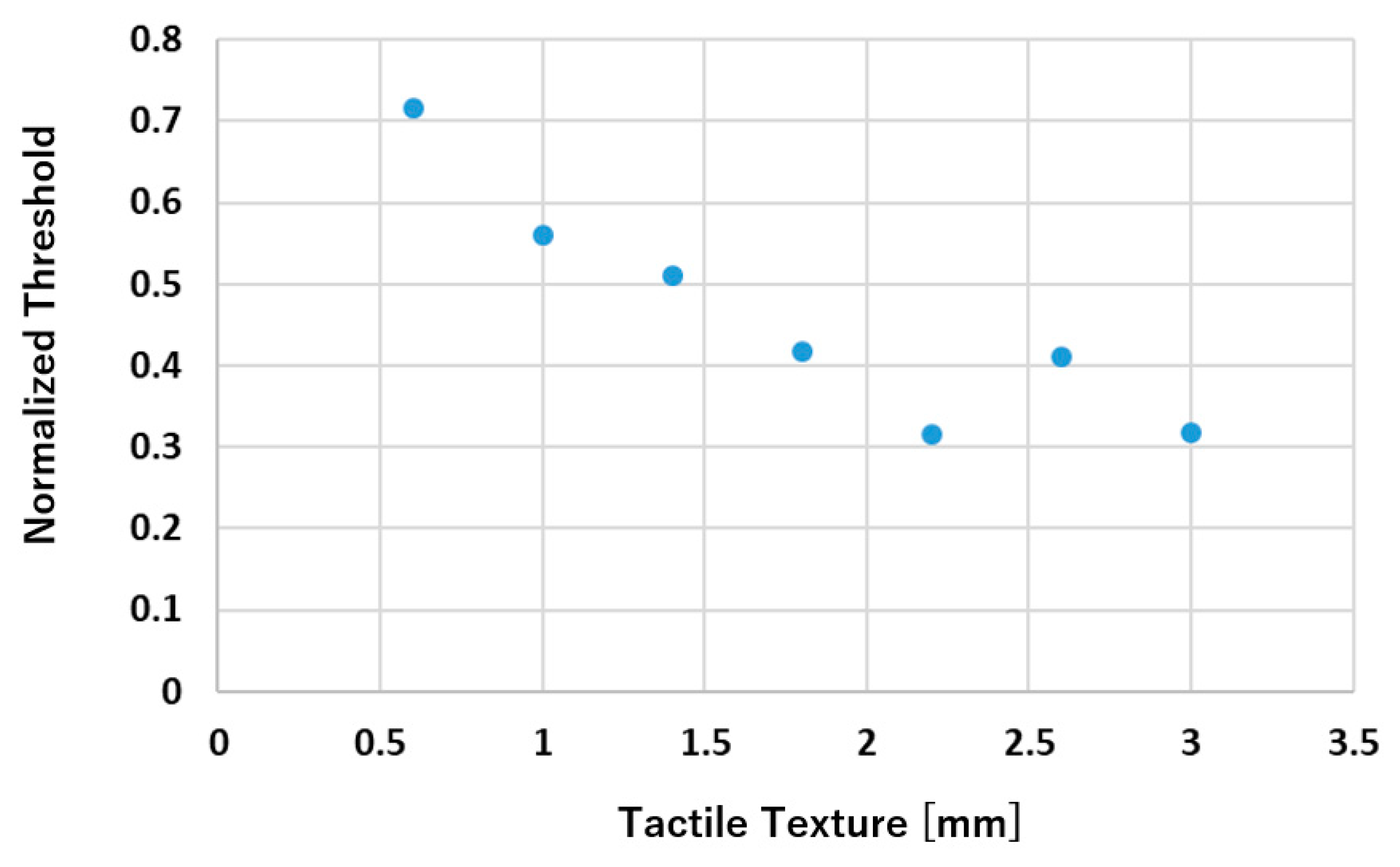

3. Results

4. Discussion

4.1. Width of Permissible Range with Respect to the Amount of Stimulation

4.2. Relationship between Finger Behavior and Threshold

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Kajimoto, H.; Kawakami, N.; Tachi, S.; Inami, M. SmartTouch: Electric skin to touch the untouchable. IEEE Comput. Graph. Appl. 2004, 24, 36–43.

2. Martínez, J.; García, A.; Oliver, M.; Molina, J.P.; González, P. Identifying 3D geometric shapes with a vibrotactile glove. IEEE Comput. Graph. Appl. 2016, 36, 42–51.

3. Massie, T.H.; Salisbury, J.K. The PHANTOM haptic interface: A device for probing virtual objects. Dyn. Syst. Contr. 1994, 1, 295–300.

4. Bouguila, L.; Cai, Y.; Sato, M. New haptic device for human scale virtual environment scaleable—SPIDAR. In Proceedings of the ICAT’97, Tokyo, Japan, 3–5 December 1997; pp. 93–98.

5. Tachi, S.; Maeda, T.; Hirata, R.; Hoshino, H. A construction method of virtual haptic space. In Proceedings of the ICAT/VRST 95, Chiba, Japan, 21–22 November 1995; pp. 131–138.

6. Insko, B.E. Passive Haptics Significantly Enhances Virtual Environments. Ph.D. Thesis, University of North Carolina, Chapel Hill, NC, USA, 2001.

7. Klatzky, R.L.; Lederman, S.J.; Reed, C.L. Haptic integration of object properties: Texture, hardness and planar contour. J. Exp. Psychol. Hum. Percept. Perform. 1989, 15, 45–57.

8. Ernst, M.O.; Banks, M.S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 2002, 415, 429–433.

9. Heller, M.A. Texture perception in sighted and blind observers. Percept. Psychophys. 1989, 45, 49–54.

10. Tiest, B.W.M.; Kappers, A.M.L. Haptic and visual perception of roughness. Acta Psychol. 2007, 124, 177–189.

11. Natsume, M.; Tanaka, Y.; Tiest, B.W.M.; Kappers, A.M.L. Skin vibration and contact force in active perception for roughness ratings. In Proceedings of the 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 1479–1484.

12. Skedung, L.; Arvidsson, M.; Chung, J.Y.; Stafford, C.M.; Berglund, B.; Rutland, M.W. Feeling small: Exploring the tactile perception limits. Sci. Rep. 2013, 3, 1–6.

13. Kuroki, S.; Sawayama, M.; Nishida, S. Haptic texture perception on 3D-printed surfaces transcribed from visual natural textures. In Proceedings of the EuroHaptics 2018, Pisa, Italy, 13–16 June 2018; pp. 102–112.

14. Cellini, C.; Kaim, L.; Drewing, K. Visual and haptic integration in the estimation of softness of deformable objects. i-Perception 2013, 4, 516–531.

15. Kwon, E.; Kim, G.J.; Lee, S. Effects of sizes and shapes of props in tangible augmented reality. In Proceedings of the IEEE International Symposium on Mixed and Augmented Reality, Orlando, FL, USA, 19–22 October 2009; pp. 201–202.

16. Simeone, A.L.; Velloso, E.; Gellersen, H. Substitutional reality: Using the physical environment to design virtual reality experiences. In Proceedings of the 33rd Annual CHI Conference on Human Factors in Computing Systems, Seoul, Korea, 18–23 April 2015; pp. 3307–3316.

17. Kitahara, I.; Nakahara, M.; Ohta, Y. Sensory properties in fusion of visual/haptic stimuli using mixed reality. Adv. Haptics 2010, 565–581.

18. Yamaguchi, S.; Kaneko, S.; Kajimoto, H. Allowable range of consistency between the visual and tactile presentations of a linear grating texture. In Proceedings of the AsiaHaptics 2018, Songdo, Korea, 14–16 November 2018.

19. Processing. Available online: https://processing.org/ (accessed on 27 January 2020).

20. OptiTrack—Motion Capture System. Available online: https://www.optitrack.com/ (accessed on 27 January 2020).

21. Kaernbach, C. Simple adaptive testing with the weighted up-down method. Percept. Psychophys. 1991, 49, 227–229.

22. Hachisu, T.; Kajimoto, H. Vibration feedback latency affects material perception during rod tapping interactions. IEEE Trans. Haptics 2017, 10, 288–295.

| Tactile Texture (mm) | Visual Condition: Starting Stripe Width (px) | 75% Threshold (mm) | Permissible Range (mm) | S.D. (mm) |
|---|---|---|---|---|
| 0.2 | 38 (1.19 mm) | 0.473 | − | 0.114 |
| 0.6 | 2 (0.0626 mm) | 0.404 | 0.429 | 0.184 |
|  | 51 (1.60 mm) | 0.834 |  | 0.278 |
| 1.0 | 2 (0.0626 mm) | 0.574 | 0.561 | 0.206 |
|  | 63 (1.97 mm) | 1.135 |  | 0.280 |
| 1.4 | 11 (0.344 mm) | 0.937 | 0.715 | 0.323 |
|  | 76 (2.38 mm) | 1.652 |  | 0.430 |
| 1.8 | 25 (0.783 mm) | 1.413 | 0.752 | 0.492 |
|  | 89 (2.79 mm) | 2.166 |  | 0.311 |
| 2.2 | 38 (1.19 mm) | 1.655 | 0.693 | 0.341 |
|  | 102 (3.19 mm) | 2.347 |  | 0.302 |
| 2.6 | 33 (1.03 mm) | 1.790 | 1.069 | 0.482 |
|  | 114 (3.57 mm) | 2.858 |  | 0.498 |
| 3.0 | 46 (1.44 mm) | 2.078 | 0.956 | 0.461 |
|  | 127 (3.98 mm) | 3.034 |  | 0.277 |
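Across the rows of the table, the Permissible Range appears to equal the upper minus the lower 75% threshold for the same tactile texture (for 1.0 mm, 1.135 − 0.574 = 0.561 mm). The short check below recomputes it from the tabulated thresholds. The dictionary and function names are hypothetical, the residual differences of up to 0.001 mm are presumably rounding in the published values, and the 0.2 mm texture is left out because it has only an upper threshold.

```python
# Lower and upper 75% thresholds (mm) per tactile texture, copied from the table.
THRESHOLDS_MM = {
    0.6: (0.404, 0.834),
    1.0: (0.574, 1.135),
    1.4: (0.937, 1.652),
    1.8: (1.413, 2.166),
    2.2: (1.655, 2.347),
    2.6: (1.790, 2.858),
    3.0: (2.078, 3.034),
}

# Permissible ranges (mm) as reported in the table.
REPORTED_RANGE_MM = {
    0.6: 0.429, 1.0: 0.561, 1.4: 0.715, 1.8: 0.752,
    2.2: 0.693, 2.6: 1.069, 3.0: 0.956,
}


def permissible_range(lower_mm: float, upper_mm: float) -> float:
    """Width of the band in which the visual texture was accepted as consistent."""
    return upper_mm - lower_mm


if __name__ == "__main__":
    for texture, (lower, upper) in THRESHOLDS_MM.items():
        computed = permissible_range(lower, upper)
        reported = REPORTED_RANGE_MM[texture]
        print(f"{texture:.1f} mm: computed {computed:.3f}, reported {reported:.3f}")
```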

| Participant | Threshold (mm) | Experience Time (s) | Average Force (N) | Average Speed (mm/s) |
|---|---|---|---|---|
| A (Experimenter) | 2.695 | 6.335 | 1.076 | 31.33 |
| B | 2.476 | 4.803 | 0.217 | 36.82 |
| C | 3.228 | 4.520 | 0.381 | 40.38 |
| D | 2.131 | 3.645 | 0.384 | 35.07 |
| E | 2.570 | 3.309 | 0.188 | 7.338 |
| F | 3.071 | 1.738 | 0.670 | 34.93 |
| G | 3.040 | 4.790 | 0.676 | 41.77 |
| I | 3.259 | 1.729 | 0.537 | 34.69 |
| J | 3.761 | 1.101 | 0.186 | 56.81 |
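Section 4.2 relates finger behavior to the threshold. One simple way to explore such a relationship on the tabulated values is a Pearson correlation per measure, sketched below. This is not necessarily the analysis the authors performed; the variable and function names are hypothetical, and with only nine participants (H is absent from the table) any coefficient should be read with caution.

```python
import math
from statistics import mean

# Per-participant values copied from the table (order: A-G, I, J).
THRESHOLD_MM = [2.695, 2.476, 3.228, 2.131, 2.570, 3.071, 3.040, 3.259, 3.761]
EXPERIENCE_TIME_S = [6.335, 4.803, 4.520, 3.645, 3.309, 1.738, 4.790, 1.729, 1.101]
AVERAGE_FORCE_N = [1.076, 0.217, 0.381, 0.384, 0.188, 0.670, 0.676, 0.537, 0.186]
AVERAGE_SPEED_MM_S = [31.33, 36.82, 40.38, 35.07, 7.338, 34.93, 41.77, 34.69, 56.81]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    measures = [
        ("experience time", EXPERIENCE_TIME_S),
        ("average force", AVERAGE_FORCE_N),
        ("average speed", AVERAGE_SPEED_MM_S),
    ]
    for name, values in measures:
        print(f"threshold vs {name}: r = {pearson_r(THRESHOLD_MM, values):+.2f}")
```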

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yamaguchi, S.; Kaneko, S.; Kajimoto, H. Measurement of the Permissible Range of Consistency between Visual and Tactile Presentations of Line Grating Textures. Appl. Sci. 2020, 10, 2494. https://doi.org/10.3390/app10072494
