Adaptive Dynamic Disturbance Strategy for Differential Evolution Algorithm

Abstract

1. Introduction

2. Standard Differential Evolution Algorithms

2.1. Initialization

2.2. Mutation Operation

2.3. Crossover Operation

2.4. Selection Operation

3. An Adaptive Dynamic Disturbance Strategy for Differential Evolution Algorithm

3.1. Population Initialization Using Chaotic Maps

3.2. Adaptive Adjustment Strategies for Scaling Factor and Crossover Probability

3.3. Weighted Dynamic Mutation Strategy

3.4. Disturbance Mutation Strategy

3.5. Algorithm Implementation Process

- Step 1

- Initialize each parameter: the population size, the dimension of the solution, the maximum number of evolutionary generations, the upper and lower bounds of the individual variables, the maximum and minimum scaling factor, the maximum and minimum mutation probability, the premature-convergence cycle, the precision value, and the variance threshold.

- Step 2

- Initialize the population. Use the chaotic mapping strategy to generate NP candidate individuals, calculate their fitness values, and rank them by fitness. Select the N individuals with the best fitness values as the initial population of the algorithm.

- Step 3

- Calculate the adaptive control parameters according to Equations (9), (10) and (12).

- Step 4

- Mutation operation. Calculate the mutant individuals according to Equation (11).

- Step 5

- Crossover operation. Generate the trial individuals according to Equation (6).

- Step 6

- Selection operation. Obtain the next generation according to Equation (7).

- Step 7

- Update the local and global optimal values.

- Step 8

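- Check whether the population has converged prematurely; if it has, carry out the mutation operation again. Specifically, if both premature-convergence conditions (defined by the precision value and the variance threshold from Step 1) hold, calculate new individuals for every member of the population according to Equations (15) and (16), and update the optimal value.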

- Step 9

- Repeat Steps 4–8 N times.

- Step 10

- If the generation counter g has not reached the maximum number of generations, increment g and go to Step 3; otherwise, output the optimal solution and its optimal value.
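The ten steps above can be sketched as a single loop. This is a minimal Python/NumPy sketch under loudly stated assumptions, not the authors' implementation: the chaotic map is assumed to be the logistic map, the adaptive scaling factor and crossover probability follow simple linear ramps instead of Equations (9), (10) and (12), mutation is classic DE/rand/1 instead of the weighted dynamic mutation of Equation (11), and the disturbance is a hypothetical Gaussian perturbation around the elite instead of Equations (15) and (16). The names `logistic_init`, `control_params` and `addsde_sketch`, and all default parameter values, are illustrative only.

```python
import numpy as np


def logistic_init(n_candidates, dim, lower, upper, rng, mu=4.0):
    """Step 2 helper (assumed logistic map): one chaotic sequence per dimension."""
    z = rng.uniform(0.01, 0.99, size=dim)  # seed values strictly inside (0, 1)
    pop = np.empty((n_candidates, dim))
    for k in range(n_candidates):
        z = mu * z * (1.0 - z)  # logistic map iteration, chaotic for mu = 4
        pop[k] = lower + z * (upper - lower)  # scale chaotic values to the bounds
    return pop


def control_params(g, g_max, f_min, f_max, cr_min, cr_max):
    """Step 3 stand-in: assumed linear ramps, NOT Equations (9), (10) and (12)."""
    t = g / g_max
    f = f_max - (f_max - f_min) * t      # scaling factor shrinks over time
    cr = cr_min + (cr_max - cr_min) * t  # crossover probability grows over time
    return f, cr


def addsde_sketch(fitness_fn, dim, lower, upper, n=20, n_candidates=40,
                  g_max=300, f_min=0.4, f_max=0.9, cr_min=0.1, cr_max=0.9,
                  var_threshold=1e-8, precision=1e-10, seed=0):
    """Minimize fitness_fn over [lower, upper]^dim; returns (best_x, best_f)."""
    rng = np.random.default_rng(seed)

    # Step 2: NP chaotic candidates, keep the N with the lowest fitness.
    cand = logistic_init(n_candidates, dim, lower, upper, rng)
    cand_fit = np.array([fitness_fn(x) for x in cand])
    keep = np.argsort(cand_fit)[:n]
    pop, fit = cand[keep].copy(), cand_fit[keep].copy()

    for g in range(g_max):  # Step 10: outer generation loop
        f, cr = control_params(g, g_max, f_min, f_max, cr_min, cr_max)
        for i in range(n):  # Steps 4-6, once per individual
            others = [j for j in range(n) if j != i]
            r1, r2, r3 = rng.choice(others, size=3, replace=False)
            # Step 4 stand-in: classic DE/rand/1 mutation, clipped to the bounds.
            v = np.clip(pop[r1] + f * (pop[r2] - pop[r3]), lower, upper)
            # Step 5 stand-in: binomial crossover with one guaranteed mutant gene.
            mask = rng.random(dim) < cr
            mask[rng.integers(dim)] = True
            u = np.where(mask, v, pop[i])
            # Step 6: greedy one-to-one selection.
            fu = fitness_fn(u)
            if fu <= fit[i]:
                pop[i], fit[i] = u, fu

        # Step 7: the elite is the individual with the lowest fitness.
        best = int(np.argmin(fit))
        # Step 8 stand-in, checked once per generation here: a tiny fitness
        # variance while the precision target is still unmet is treated as
        # premature convergence. The hypothetical disturbance re-samples the
        # population around the elite and keeps the elite itself unchanged.
        if np.var(fit) < var_threshold and fit[best] > precision:
            elite = pop[best].copy()
            sigma = 0.1 * (upper - lower)
            pop = np.clip(elite + rng.normal(0.0, sigma, size=(n, dim)), lower, upper)
            pop[0] = elite
            fit = np.array([fitness_fn(x) for x in pop])

    best = int(np.argmin(fit))
    return pop[best].copy(), float(fit[best])


if __name__ == "__main__":
    def sphere(x):
        return float(np.sum(x ** 2))

    x_best, f_best = addsde_sketch(sphere, dim=3, lower=-5.0, upper=5.0)
    print(x_best, f_best)
```

Because greedy selection never replaces an individual with a worse one and the disturbance keeps the elite, the best fitness in this sketch is non-increasing from one generation to the next; the disturbance only spends evaluations on re-exploration.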

4. Simulation Experiment and Algorithm Performance Analysis

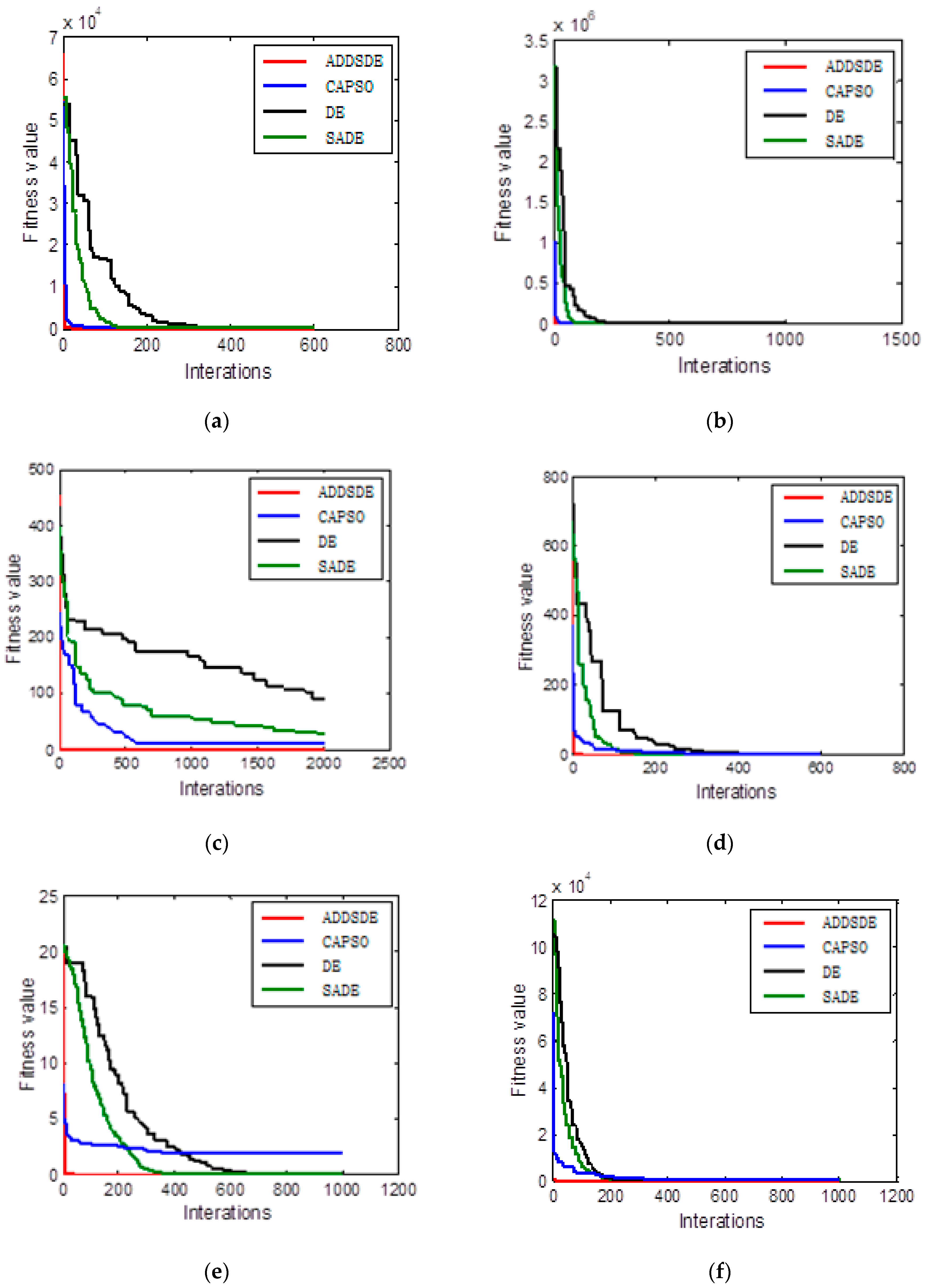

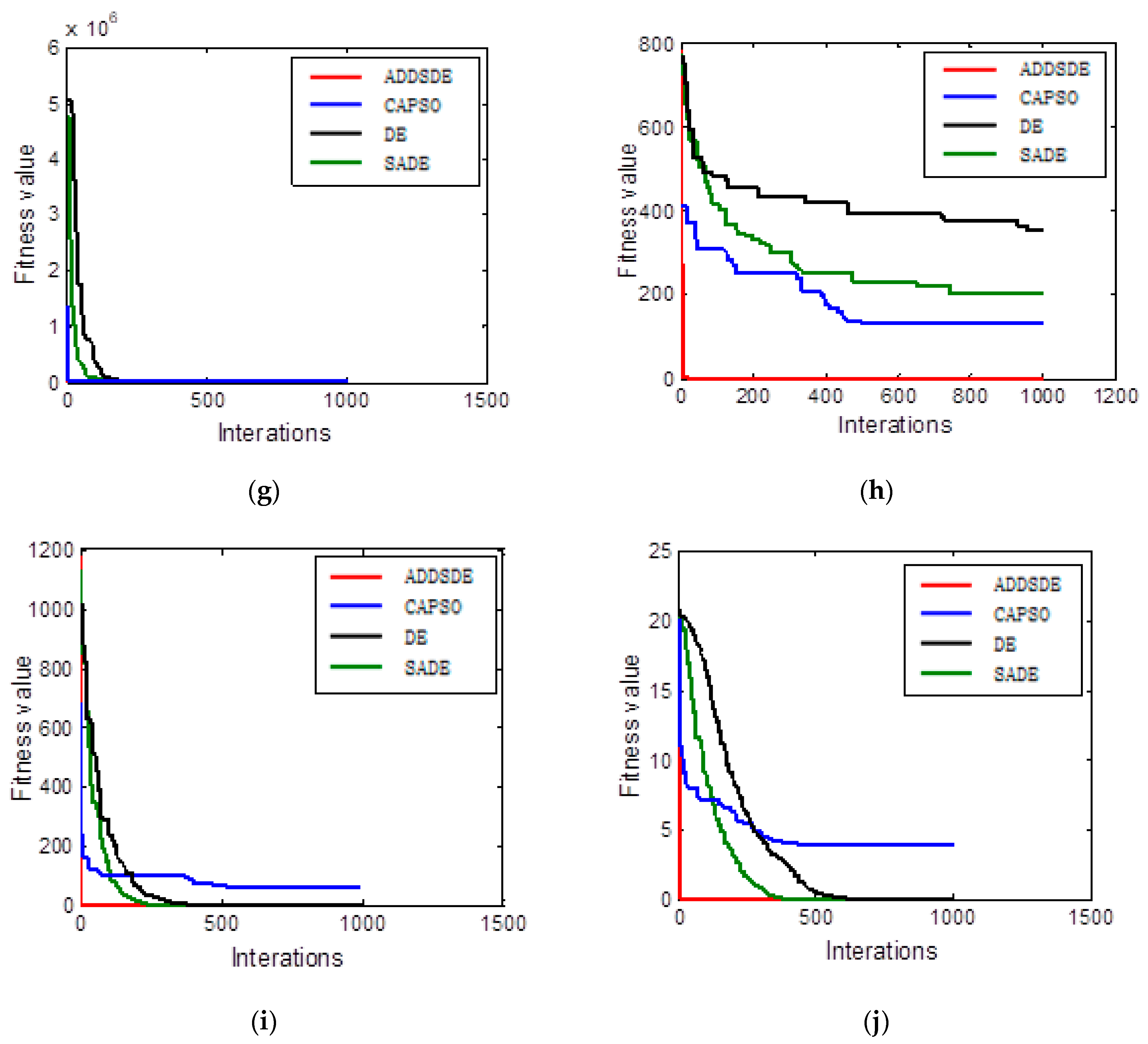

4.1. Test Functions and Comparison of Algorithms

4.2. Analysis of Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Type | Function Name | Formula | Range of Optimization | Optimal Value |
|---|---|---|---|---|
| Unimodal | Sphere | | [−100, 100] | 0 |
| | Rosenbrock | | [−10, 10] | 0 |
| Multimodal | Rastrigin | | [−5.12, 5.12] | 0 |
| | Griewank | | [−600, 600] | 0 |
| | Ackley | | [−32, 32] | 0 |
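The Formula column of the table above did not survive extraction. For reference, the following sketch implements the textbook forms of the five benchmark functions; these standard definitions and their constants are an assumption, since the paper's exact formulas are not reproduced here. Each has optimal value 0, reached at the origin except Rosenbrock, whose minimizer is the all-ones vector.

```python
import numpy as np

# Textbook benchmark definitions (assumed; the paper's exact variants may differ).


def sphere(x):
    return float(np.sum(x ** 2))


def rosenbrock(x):
    # Minimum 0 at x = (1, ..., 1).
    return float(np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (x[:-1] - 1.0) ** 2))


def rastrigin(x):
    return float(np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x) + 10.0))


def griewank(x):
    i = np.arange(1, x.size + 1)  # 1-based index inside the cosine product
    return float(np.sum(x ** 2) / 4000.0 - np.prod(np.cos(x / np.sqrt(i))) + 1.0)


def ackley(x):
    d = x.size
    return float(-20.0 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / d))
                 - np.exp(np.sum(np.cos(2.0 * np.pi * x)) / d) + 20.0 + np.e)
```

Note that Ackley evaluated at the origin in double precision may return a tiny positive residual rather than an exact 0, because the constants 20 and e cancel only up to rounding.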

| Function | Algorithm | Optimal Value | Average Optimum | Standard Deviation |
|---|---|---|---|---|
| Sphere | DE | 8.10 × 10⁻⁶ | 1.02 × 10⁻⁵ | 2.84 × 10⁵ |
| | SADE | 1.10 × 10⁻¹¹ | 3.67 × 10⁻¹¹ | 8.41 × 10⁻¹² |
| | CAPSO | 5.72 × 10⁻¹³ | 1.29 × 10⁻¹² | 4.91 × 10⁻¹³ |
| | ADDSDE | 0 | 0 | 0 |
| Rosenbrock | DE | 2.57 × 10¹ | 3.59 × 10¹ | 7.96 × 10⁰ |
| | SADE | 1.83 × 10¹ | 2.65 × 10¹ | 6.33 × 10⁰ |
| | CAPSO | 5.42 × 10⁰ | 7.56 × 10⁰ | 1.41 × 10⁰ |
| | ADDSDE | 0 | 0 | 0 |
| Rastrigin | DE | 7.94 × 10¹ | 1.19 × 10² | 2.91 × 10¹ |
| | SADE | 3.01 × 10¹ | 3.59 × 10¹ | 6.21 × 10⁰ |
| | CAPSO | 2.10 × 10¹ | 2.53 × 10¹ | 5.31 × 10⁰ |
| | ADDSDE | 0 | 0 | 0 |
| Griewank | DE | 0 | 3.69 × 10⁻⁴ | 1.65 × 10⁻³ |
| | SADE | 0 | 0 | 0 |
| | CAPSO | 8.62 × 10⁻¹ | 9.52 × 10⁻¹ | 6.80 × 10⁻² |
| | ADDSDE | 0 | 0 | 0 |
| Ackley | DE | 1.10 × 10⁻⁹ | 9.86 × 10⁻⁹ | 1.37 × 10⁻⁸ |
| | SADE | 5.48 × 10⁻¹¹ | 6.55 × 10⁻¹¹ | 4.39 × 10⁻¹¹ |
| | CAPSO | 3.05 × 10⁰ | 3.48 × 10⁰ | 2.61 × 10⁰ |
| | ADDSDE | 9.56 × 10⁻¹⁶ | 9.56 × 10⁻¹⁶ | 0 |

| Function | Algorithm | Optimal Value | Average Optimum | Standard Deviation |
|---|---|---|---|---|
| Sphere | DE | 1.20 × 10⁻⁴ | 1.11 × 10⁻³ | 1.29 × 10⁻³ |
| | SADE | 1.79 × 10⁻⁵ | 2.55 × 10⁻⁵ | 8.47 × 10⁻⁶ |
| | CAPSO | 4.11 × 10² | 6.13 × 10⁻² | 1.65 × 10² |
| | ADDSDE | 0 | 0 | 0 |
| Rosenbrock | DE | 4.48 × 10¹ | 8.94 × 10¹ | 4.62 × 10¹ |
| | SADE | 4.70 × 10¹ | 6.44 × 10¹ | 1.64 × 10¹ |
| | CAPSO | 1.15 × 10² | 2.45 × 10² | 1.09 × 10² |
| | ADDSDE | 0 | 0 | 0 |
| Rastrigin | DE | 3.45 × 10² | 3.62 × 10² | 1.98 × 10¹ |
| | SADE | 1.67 × 10² | 1.94 × 10² | 1.54 × 10¹ |
| | CAPSO | 1.16 × 10² | 1.63 × 10² | 2.98 × 10¹ |
| | ADDSDE | 0 | 0 | 0 |
| Griewank | DE | 5.17 × 10⁻⁴ | 3.85 × 10⁻² | 3.98 × 10⁻² |
| | SADE | 2.97 × 10⁻⁵ | 4.53 × 10⁻⁵ | 2.37 × 10⁻⁵ |
| | CAPSO | 5.92 × 10¹ | 9.83 × 10¹ | 2.52 × 10¹ |
| | ADDSDE | 0 | 0 | 0 |
| Ackley | DE | 1.01 × 10⁻³ | 1.13 × 10⁻³ | 8.75 × 10⁻⁴ |
| | SADE | 4.34 × 10⁻⁷ | 1.02 × 10⁻⁶ | 6.11 × 10⁻⁷ |
| | CAPSO | 3.76 × 10⁰ | 3.98 × 10⁰ | 3.71 × 10⁻¹ |
| | ADDSDE | 9.56 × 10⁻¹⁶ | 9.56 × 10⁻¹⁶ | 0 |
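The Optimal Value, Average Optimum and Standard Deviation columns in the results tables presumably summarize the final fitness over repeated independent runs of each algorithm. A minimal sketch of that bookkeeping follows; the name `summarize_runs` is hypothetical, and both the minimization convention and the use of the population (rather than sample) standard deviation are assumptions, since the number of runs and the estimator are not stated here.

```python
import numpy as np


def summarize_runs(final_values):
    """Return (best, mean, std) of final fitness values from independent runs.

    Assumptions: minimization, so the "Optimal Value" is the smallest final
    value, and the population standard deviation (ddof=0) is reported.
    """
    v = np.asarray(final_values, dtype=float)
    return float(v.min()), float(v.mean()), float(v.std(ddof=0))
```

By construction the mean can never be smaller than the best value, which gives a quick sanity check when transcribing tables of this kind.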

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, T.; Wu, K.; Du, T.; Cheng, X. Adaptive Dynamic Disturbance Strategy for Differential Evolution Algorithm. Appl. Sci. 2020, 10, 1972. https://doi.org/10.3390/app10061972
