Binaural Rendering with Measured Room Responses: First-Order Ambisonic Microphone vs. Dummy Head

Abstract

1. Introduction

2. Experiment I: Dynamic Rendering of Multiple-Orientation Binaural Signals

2.1. Linear Interpolation with Switched High-Frequency Phase (LISHPh)
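The heading names linear interpolation between binaural room impulse responses (BRIRs) measured at multiple head orientations. The Python sketch below shows only that generic interpolation step, under these assumptions:

- The measured orientations lie on a uniform yaw grid covering 360°.
- Each BRIR is a plain list of samples.
- The names `interp_weights` and `interpolate_brir` are illustrative.

The high-frequency phase switching that distinguishes LISHPh is not modeled. The returned `nearest` index only marks which neighbour a switching scheme could select. That reading is an assumption, not a documented detail of the method.

```python
import math


def interp_weights(yaw_deg: float, step_deg: float) -> tuple[int, int, float, float, int]:
    """Neighbouring grid indices and linear weights for a head yaw angle.

    Hypothetical setup: BRIRs measured at yaw = k * step_deg for k = 0..n-1,
    with step_deg dividing 360 so the grid wraps around.
    Returns (i0, i1, w0, w1, nearest).
    """
    n = round(360.0 / step_deg)
    if n < 2 or abs(n * step_deg - 360.0) > 1e-9:
        raise ValueError("step_deg must divide 360 into at least two orientations")
    pos = (yaw_deg % 360.0) / step_deg   # fractional position on the yaw grid
    base = math.floor(pos)
    frac = pos - base                    # distance past grid point i0, in [0, 1)
    i0 = base % n                        # wrap 360 deg back to index 0
    i1 = (i0 + 1) % n
    w0, w1 = 1.0 - frac, frac            # linear cross-fade weights, summing to 1
    nearest = i0 if frac < 0.5 else i1   # neighbour a switching scheme might pick (assumption)
    return i0, i1, w0, w1, nearest


def interpolate_brir(h0: list[float], h1: list[float], w0: float, w1: float) -> list[float]:
    """Sample-wise weighted sum of two equal-length BRIRs."""
    if len(h0) != len(h1):
        raise ValueError("BRIRs must have equal length")
    return [w0 * a + w1 * b for a, b in zip(h0, h1)]


if __name__ == "__main__":
    i0, i1, w0, w1, nearest = interp_weights(7.5, 5.0)
    print(i0, i1, w0, w1, nearest)                            # 1 2 0.5 0.5 2
    print(interpolate_brir([1.0, 0.0], [0.0, 1.0], w0, w1))   # [0.5, 0.5]
```

The two weights always sum to one. A head yaw that coincides with a measured orientation therefore reproduces that BRIR unchanged (frac = 0).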

2.2. Listening Experiment: Design

2.3. Listening Experiment: Implementation and Settings

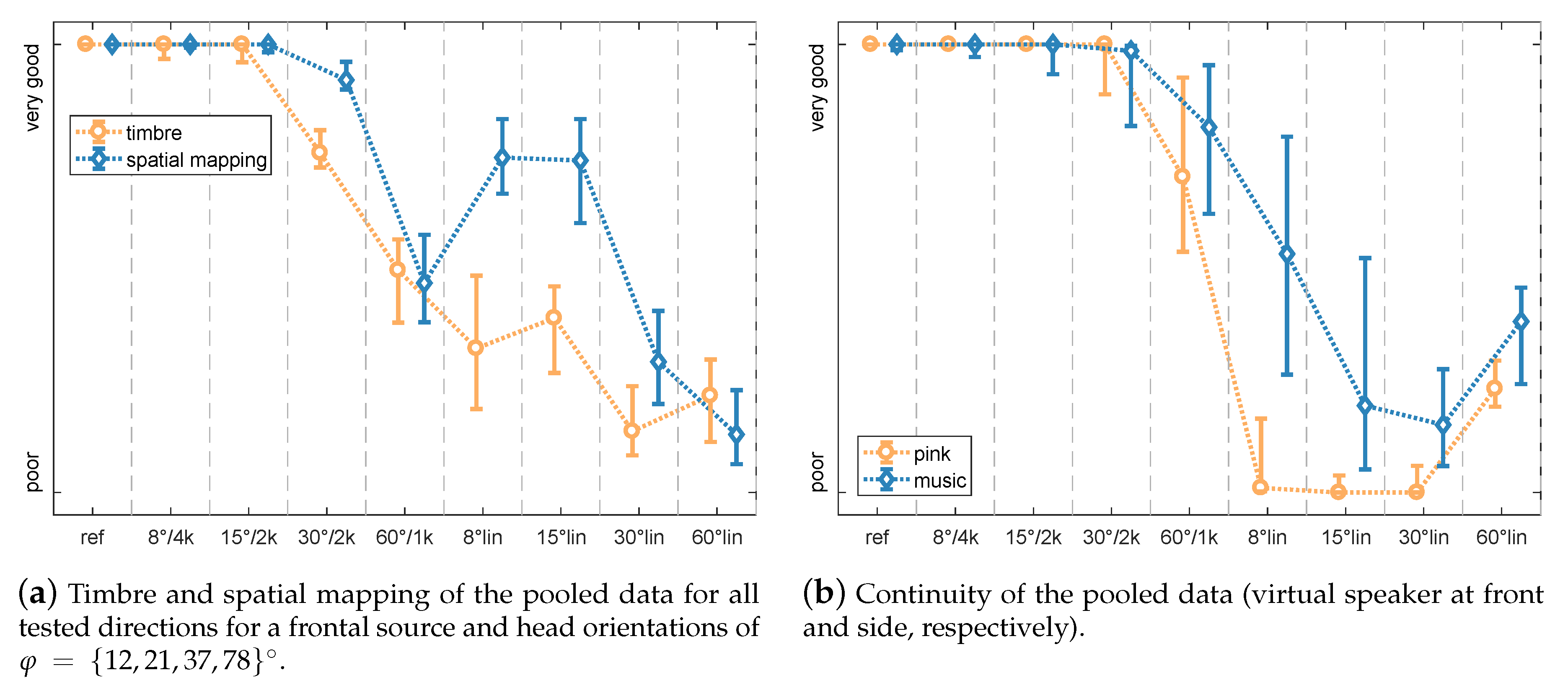

2.4. Results and Discussion

3. Experiment II: Dummy-Head MOBRIR vs. ARIR

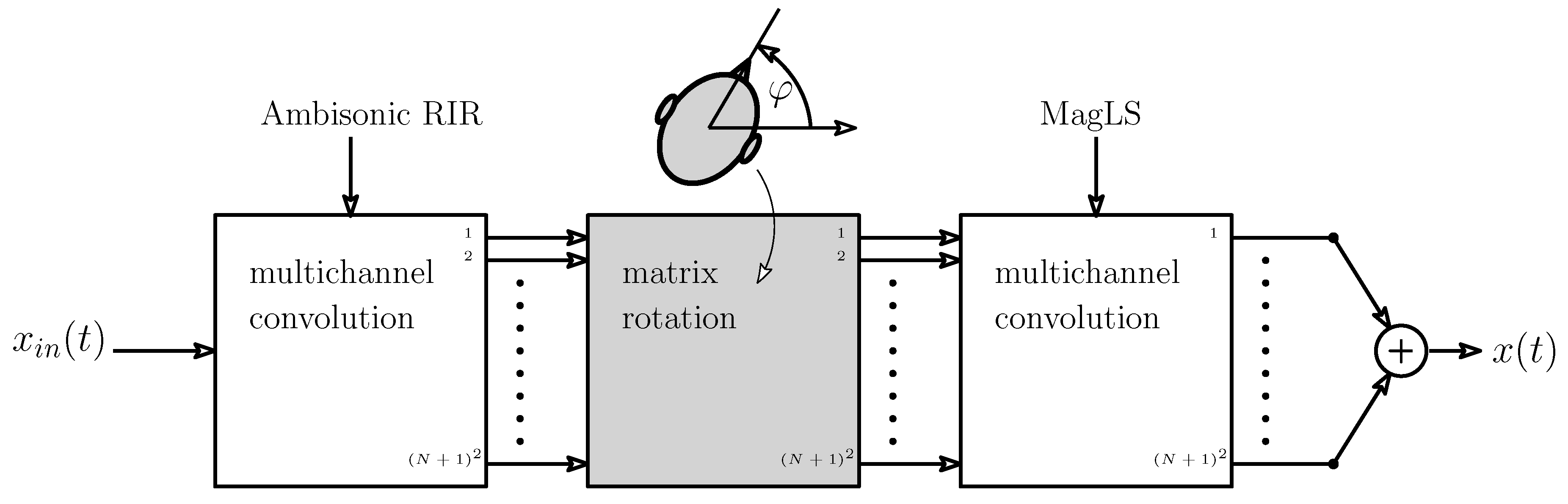

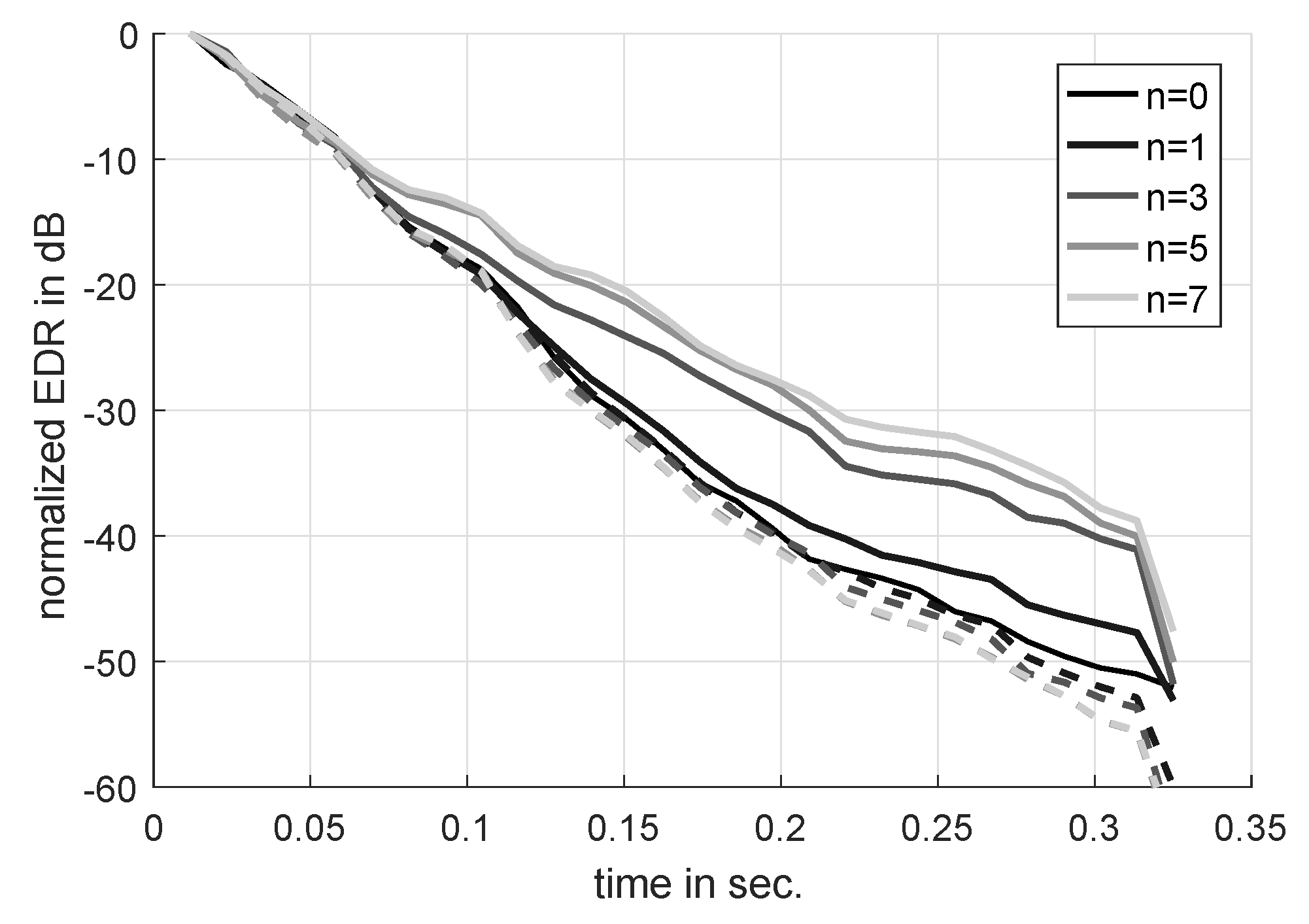

3.1. Rendering with Measured Ambisonic RIR and the Ambisonic Spatial Decomposition Method (ASDM)
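Like the spatial decomposition method it builds on, the Ambisonic Spatial Decomposition Method assigns a direction of arrival (DOA) to each sample of the room impulse response and re-encodes the omnidirectional signal at that direction. The Python sketch below takes the DOA from a short, symmetrically smoothed pseudo-intensity vector w·(x, y, z) of the first-order Ambisonic RIR. This is one common estimator, assumed here rather than taken from the text.

Under the Ambisonic encoding convention, a plane wave from unit direction θ gives (x, y, z) = w·θ. The smoothed vector therefore points toward the source.

To stay self-contained, the sketch re-encodes only to first order (ACN channel order W, Y, Z, X; SN3D normalization). The window parameter `half_len` and the function names are hypothetical. A full ASDM renderer would re-encode to a higher order and may apply further processing not shown here.

```python
import math

Vec3 = tuple[float, float, float]


def estimate_doa(w: list[float], x: list[float], y: list[float], z: list[float],
                 half_len: int = 2) -> list[Vec3]:
    """Per-sample DOA unit vectors from the smoothed pseudo-intensity w*(x, y, z).

    Smoothing is a symmetric (zero-phase) moving sum over up to 2*half_len+1 samples.
    Samples whose smoothed intensity vanishes get the zero vector.
    """
    n = len(w)
    if not (len(x) == len(y) == len(z) == n):
        raise ValueError("all channels must have equal length")
    ix = [w[t] * x[t] for t in range(n)]
    iy = [w[t] * y[t] for t in range(n)]
    iz = [w[t] * z[t] for t in range(n)]
    doa: list[Vec3] = []
    for t in range(n):
        lo, hi = max(0, t - half_len), min(n, t + half_len + 1)
        vx, vy, vz = sum(ix[lo:hi]), sum(iy[lo:hi]), sum(iz[lo:hi])
        norm = math.sqrt(vx * vx + vy * vy + vz * vz)
        doa.append((vx / norm, vy / norm, vz / norm) if norm > 0.0 else (0.0, 0.0, 0.0))
    return doa


def reencode_first_order(w: list[float], doa: list[Vec3]) -> list[list[float]]:
    """Re-encode the omnidirectional channel at each sample's DOA (ACN: W, Y, Z, X; SN3D)."""
    return [[s, s * dy, s * dz, s * dx] for s, (dx, dy, dz) in zip(w, doa)]


if __name__ == "__main__":
    s = [0.0, 1.0, 0.5, -0.3, 0.0]          # hypothetical RIR of a single plane wave
    zeros = [0.0] * len(s)
    doa = estimate_doa(s, zeros, s, zeros)  # wave encoded as arriving from +y
    print(doa[1])                            # approximately (0.0, 1.0, 0.0)
    print(reencode_first_order(s, doa)[1])   # approximately [1.0, 1.0, 0.0, 0.0]
```

Smoothing the intensity before normalizing trades temporal resolution for a more stable direction estimate in the noisy late part of the response. In this sketch, `half_len` controls that trade-off.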

3.2. Listening Experiment: Design

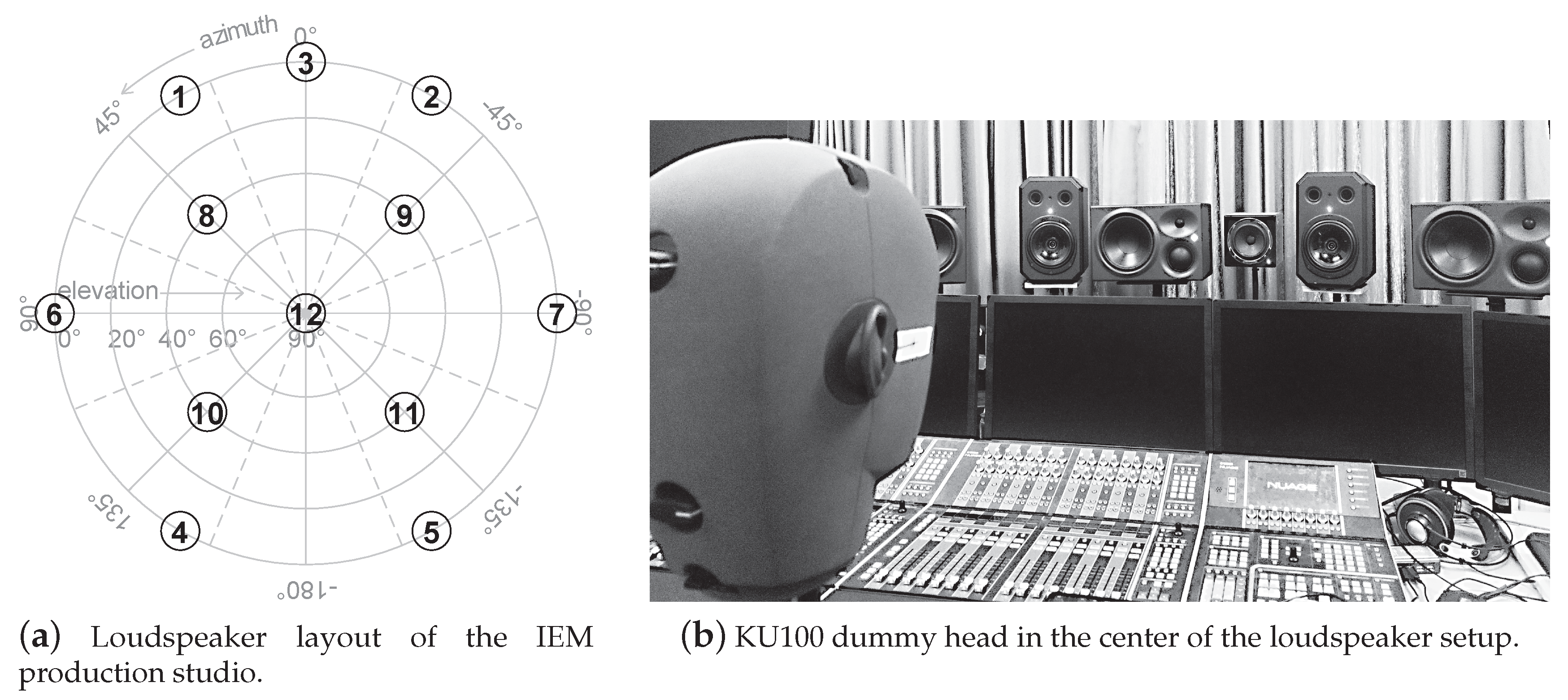

3.3. Listening Experiment: Implementation

3.4. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

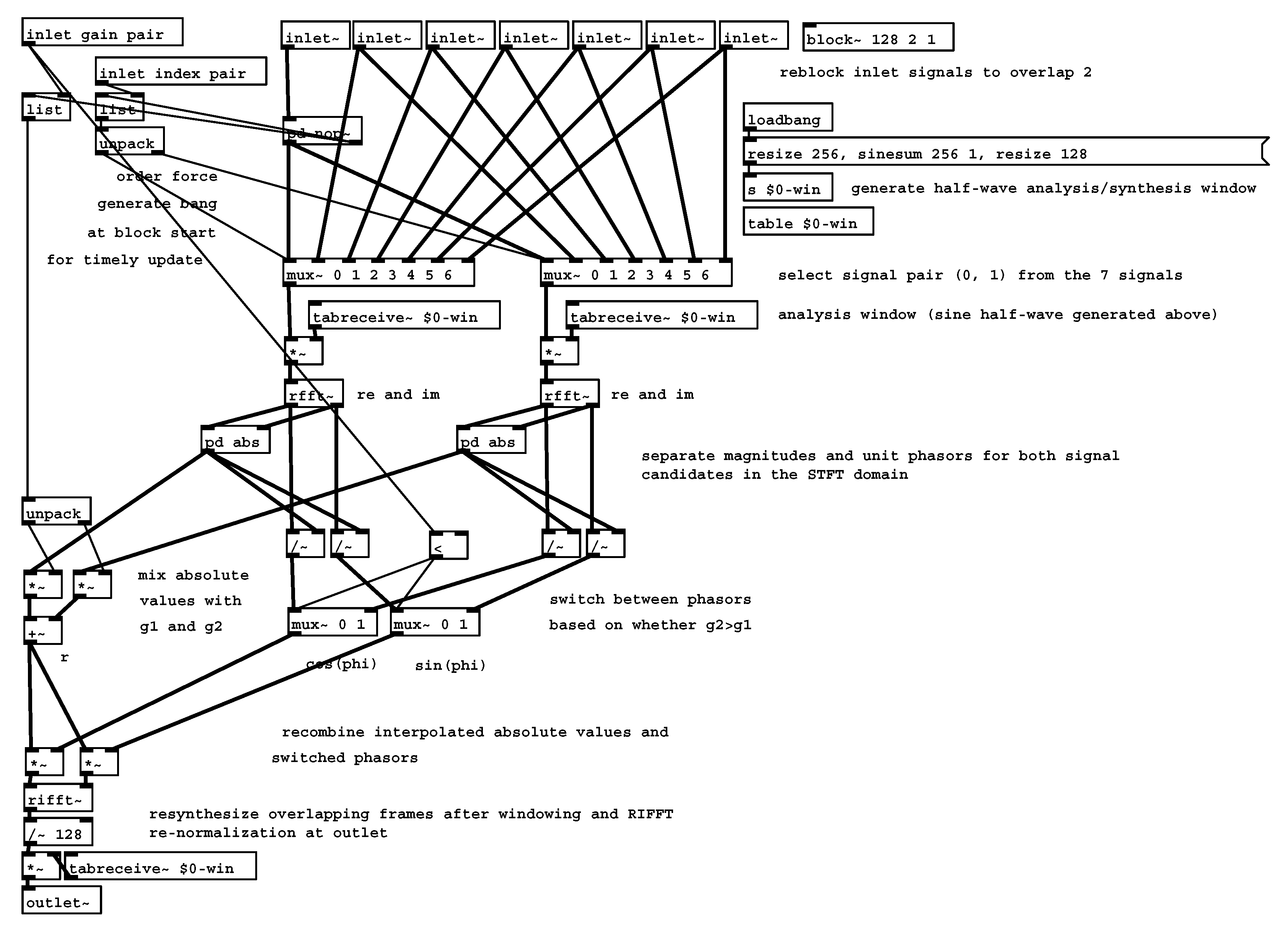

Appendix A. MOBRIR PureData Real-Time Processing Patch

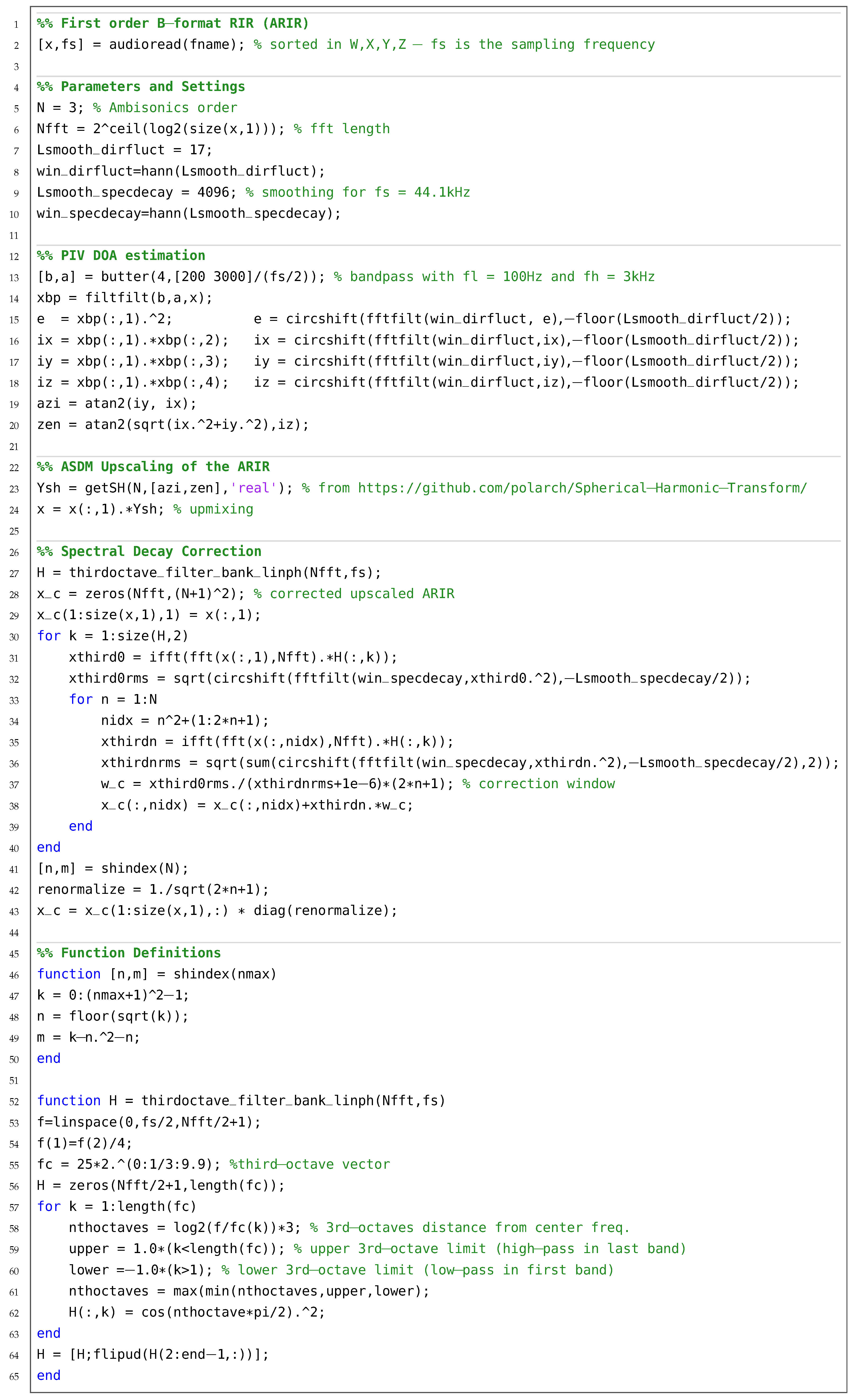

Appendix B. ASDM MATLAB Source Code


| Method | ref | k | k | k | k | lin | lin | lin | lin |
|---|---|---|---|---|---|---|---|---|---|
| ref | - | 0.11 | 0.11 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| k | 0.13 | - | 0.95 | 0.00 | 0.00 | 0.0 | 0.00 | 0.00 | 0.00 |
| k | 0.58 | 0.35 | - | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| k | 0.00 | 0.02 | 0.00 | - | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| k | 0.00 | 0.00 | 0.00 | 0.00 | - | 0.72 | 0.36 | 0.00 | 0.00 |
| lin | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | - | 0.95 | 0.03 | 0.16 |
| lin | 0.00 | 0.00 | 0.00 | 0.01 | 0.01 | 0.58 | - | 0.00 | 0.00 |
| lin | 0.00 | 0.00 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | - | 0.95 |
| lin | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.13 | - |

| Method | ref | k | k | k | k | lin | lin | lin | lin |
|---|---|---|---|---|---|---|---|---|---|
| ref | - | 2.19 | 1.69 | 0.90 | 0.02 | 0.00 | 0.00 | 0.00 | 0.00 |
| k | 1.00 | - | 1.78 | 1.69 | 0.15 | 0.0 | 0.00 | 0.00 | 0.00 |
| k | 1.06 | 1.70 | - | 0.44 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| k | 0.86 | 0.82 | 1.33 | - | 0.02 | 0.00 | 0.00 | 0.00 | 0.00 |
| k | 0.09 | 0.15 | 0.90 | 0.86 | - | 0.00 | 0.00 | 0.00 | 0.00 |
| lin | 0.01 | 0.03 | 0.02 | 0.09 | 0.83 | - | 0.16 | 1.38 | 0.39 |
| lin | 0.01 | 0.00 | 0.02 | 0.03 | 0.09 | 0.90 | - | 2.19 | 0.00 |
| lin | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.02 | 0.88 | - | 0.00 |
| lin | 0.00 | 0.00 | 0.01 | 0.00 | 0.00 | 1.06 | 1.70 | 0.33 | - |

| Method | ref | lin | lin | lin | k | k | k | 5th | 3rd | 1st |
|---|---|---|---|---|---|---|---|---|---|---|
| ref | - | 0.01 | 0.01 | 0.01 | 0.10 | 0.01 | 0.01 | 0.22 | 0.18 | 0.01 |
| lin | 0.01 | - | 0.36 | 1.55 | 0.01 | 0.06 | 0.25 | 0.01 | 0.03 | 1.14 |
| lin | 0.01 | 2.46 | - | 1.55 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.13 |
| lin | 0.01 | 2.53 | 2.83 | - | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.60 |
| k | 1.02 | 0.01 | 0.01 | 0.01 | - | 0.22 | 0.03 | 1.30 | 0.95 | 0.07 |
| k | 1.06 | 0.01 | 0.01 | 0.01 | 1.76 | - | 0.07 | 0.07 | 0.76 | 0.04 |
| k | 0.71 | 0.00 | 0.00 | 0.00 | 0.04 | 0.97 | - | 0.03 | 0.07 | 0.50 |
| 5th | 2.54 | 0.01 | 0.01 | 0.01 | 1.05 | 0.97 | 0.68 | - | 0.56 | 0.02 |
| 3rd | 0.97 | 0.01 | 0.01 | 0.01 | 2.66 | 2.83 | 1.06 | 1.05 | - | 0.01 |
| 1st | 0.01 | 1.11 | 1.10 | 0.68 | 0.03 | 0.19 | 0.55 | 0.01 | 0.03 | - |

| Method | ref | lin | lin | lin | k | k | k | 5th | 3rd | 1st |
|---|---|---|---|---|---|---|---|---|---|---|
| ref | - | 0.01 | 0.01 | 0.01 | 0.39 | 1.72 | 0.15 | 1.43 | 1.99 | 0.03 |
| lin | 0.01 | - | 0.00 | 0.03 | 0.00 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 |
| lin | 0.01 | 0.01 | - | 0.15 | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.06 |
| lin | 0.01 | 0.19 | 0.64 | - | 0.01 | 0.01 | 0.01 | 0.01 | 0.01 | 0.08 |
| k | 1.67 | 0.01 | 0.01 | 0.01 | - | 1.57 | 1.89 | 0.98 | 1.99 | 0.05 |
| k | 1.86 | 0.01 | 0.01 | 0.01 | 0.39 | - | 1.44 | 1.72 | 1.89 | 0.02 |
| k | 0.08 | 0.01 | 0.05 | 0.01 | 0.09 | 0.26 | - | 0.16 | 0.63 | 0.16 |
| 5th | 1.86 | 0.01 | 0.01 | 0.01 | 1.17 | 1.72 | 0.12 | - | 1.61 | 0.01 |
| 3rd | 0.08 | 0.01 | 0.04 | 0.01 | 1.34 | 1.67 | 1.66 | 0.15 | - | 0.03 |
| 1st | 0.01 | 0.12 | 1.72 | 0.37 | 0.01 | 0.02 | 0.02 | 0.01 | 0.07 | - |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zaunschirm, M.; Frank, M.; Zotter, F. Binaural Rendering with Measured Room Responses: First-Order Ambisonic Microphone vs. Dummy Head. Appl. Sci. 2020, 10, 1631. https://doi.org/10.3390/app10051631
