Attention Neural Network for Water Image Classification under IoT Environment

Abstract

1. Introduction

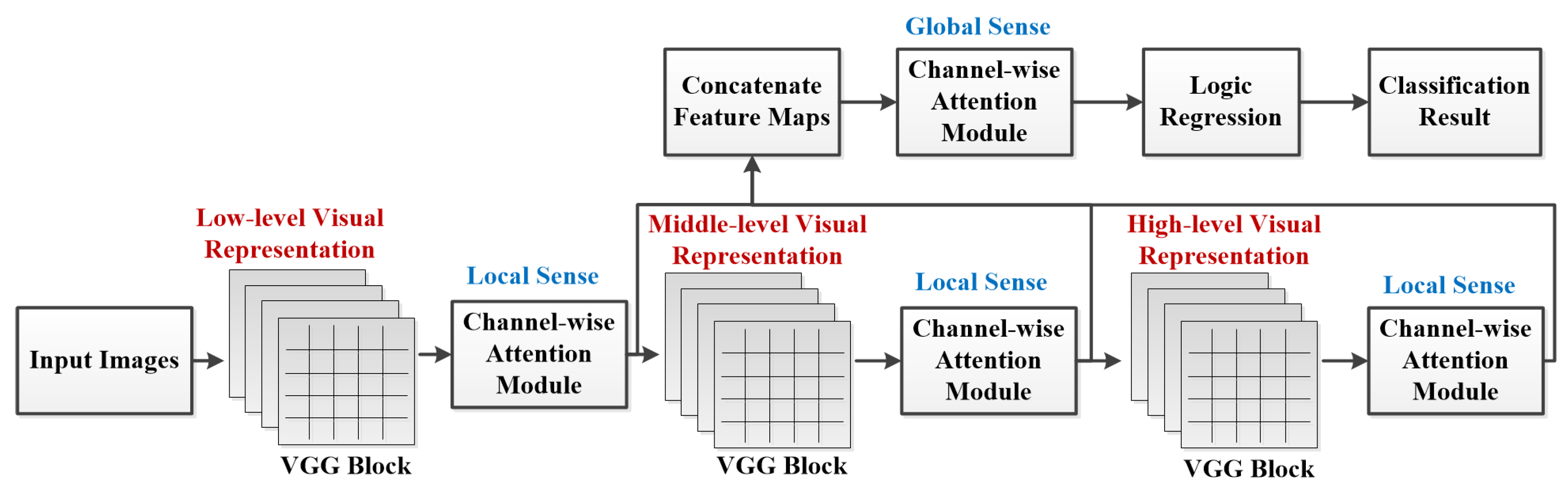

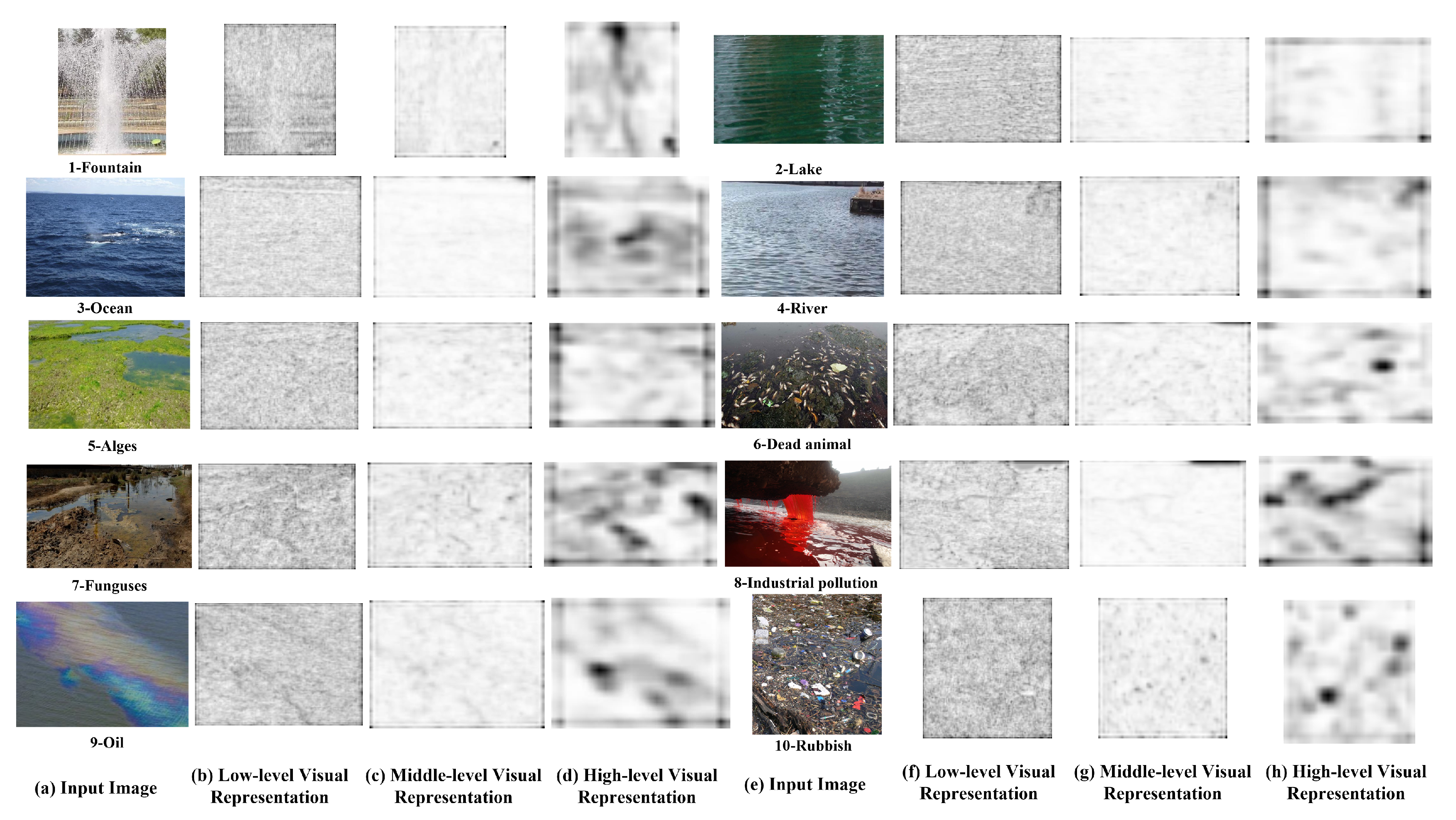

- We propose a context attention neural network for water image classification, in which a task-specific attention model encodes channel-wise and multi-layer characteristics into the features.

- The proposed model introduces a channel-wise attention gate structure and builds a hierarchical attention structure by placing multiple attention gates at different layers.

- Because the proposed attention neural network is simple to implement and deploy, we believe it can help detect inherent patterns for solving semantic problems, even when the classification is ambiguous.
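The contributions above name two ingredients: a channel-wise attention gate, and a hierarchy built from several such gates at different layers. The sketch below illustrates the general idea only. It assumes a squeeze-and-excitation style gate (global average pooling, a small bottleneck, a sigmoid), which may differ from the gate designed in Section 3.2. All function names, the weight arguments `w_reduce` and `w_expand`, and the choice to concatenate pooled features across layers are hypothetical and chosen for illustration.

```python
import math


def global_average_pool(feature_map):
    """Reduce each H x W channel to its mean, giving one value per channel."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention_gate(feature_map, w_reduce, w_expand):
    """Reweight the channels of `feature_map` (C channels, each an H x W nested list).

    w_reduce has shape hidden x C and w_expand has shape C x hidden.
    Both stand in for learned parameters (assumption: passed in explicitly).
    """
    pooled = global_average_pool(feature_map)
    # Bottleneck with ReLU, then one sigmoid gate per channel.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w_reduce]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_expand]
    # Scale every value in a channel by that channel's gate.
    gated = [
        [[gate * value for value in row] for row in channel]
        for gate, channel in zip(gates, feature_map)
    ]
    return gated, gates


def hierarchical_descriptor(feature_maps, gate_params):
    """Gate each layer's feature map, pool it, and concatenate the results.

    Concatenation is an illustrative way to combine layers (assumption).
    """
    descriptor = []
    for feature_map, (w_reduce, w_expand) in zip(feature_maps, gate_params):
        gated, _ = channel_attention_gate(feature_map, w_reduce, w_expand)
        descriptor.extend(global_average_pool(gated))
    return descriptor


# Toy input: a shallow layer with 2 channels and a deeper layer with 3 channels.
shallow = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
deep = [[[2.0]], [[0.0]], [[4.0]]]
params = [
    ([[0.5, 0.5]], [[1.0], [-1.0]]),
    ([[0.25, 0.25, 0.25]], [[1.0], [0.0], [-1.0]]),
]
gated_shallow, gates_shallow = channel_attention_gate(shallow, *params[0])
descriptor = hierarchical_descriptor([shallow, deep], params)
print(gates_shallow, descriptor)
```

Each gate lies strictly between 0 and 1, so a channel is attenuated rather than removed, and the spatial shape of every feature map is preserved. In a trained network the weights would be learned jointly with the backbone instead of being fixed by hand as they are here.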

2. Related Work

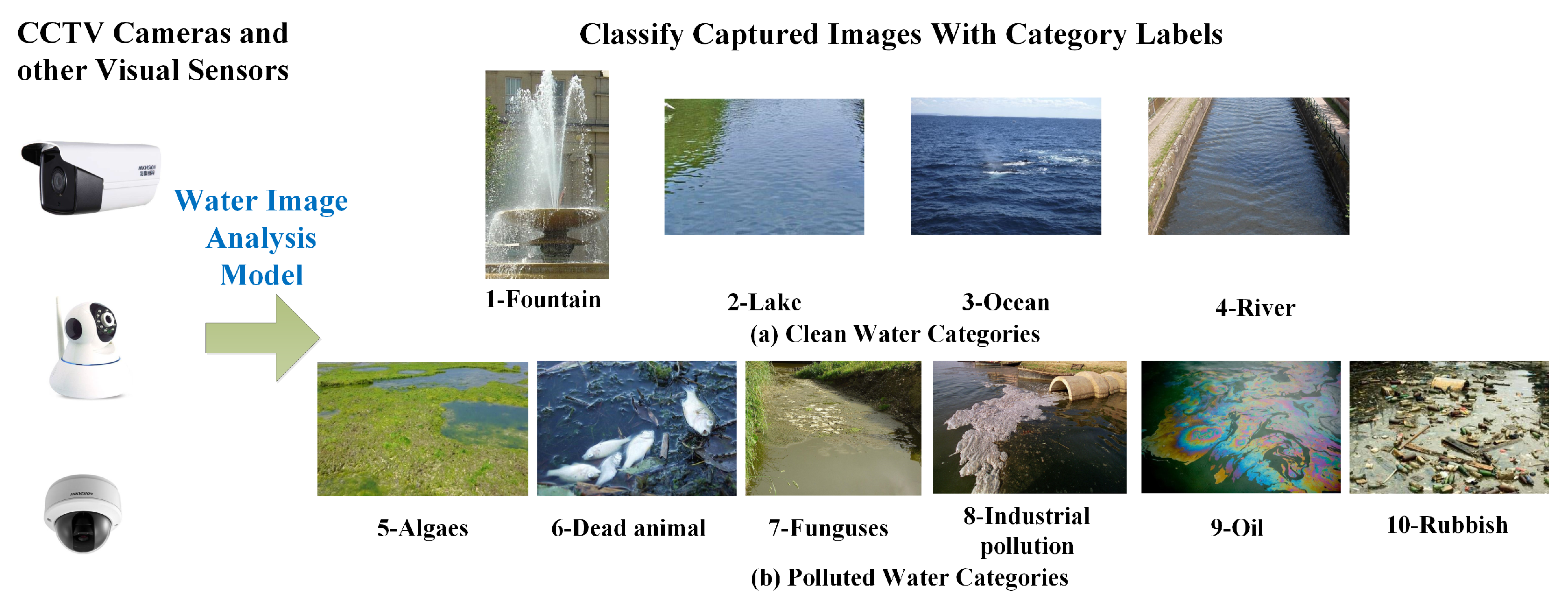

2.1. Water Image Classification

2.2. Attention Mechanism

3. Methods

3.1. Network Architecture Design

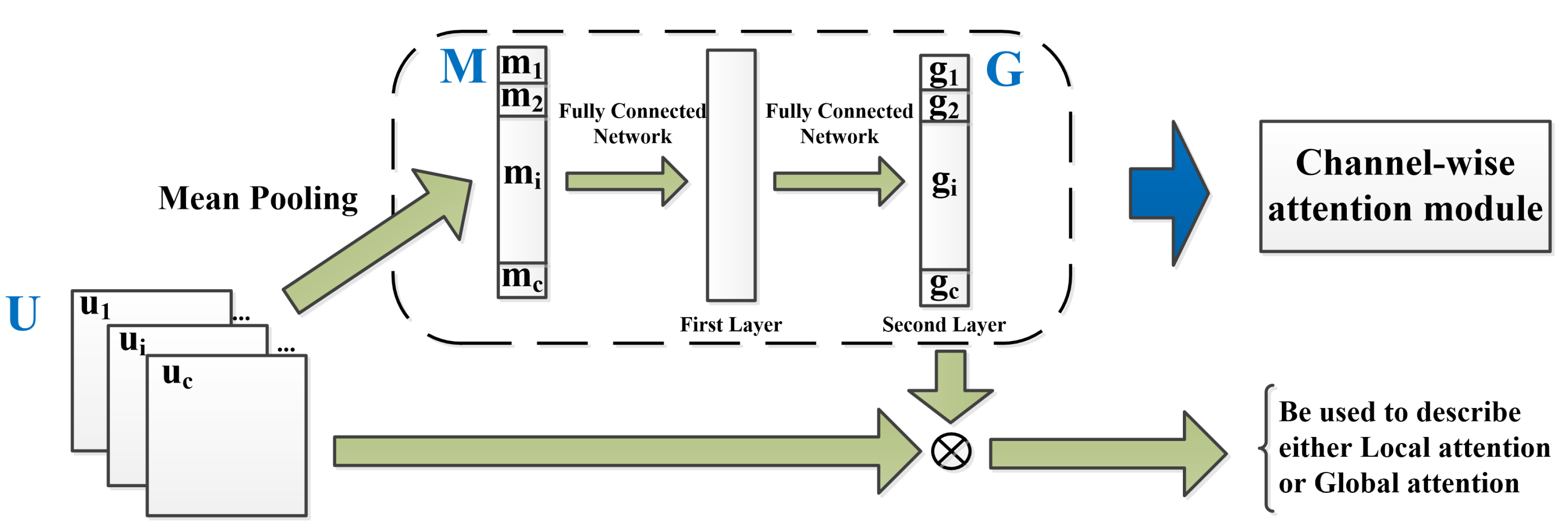

3.2. Design of Channel-wise Attention Gate

3.3. Design of Objective Function

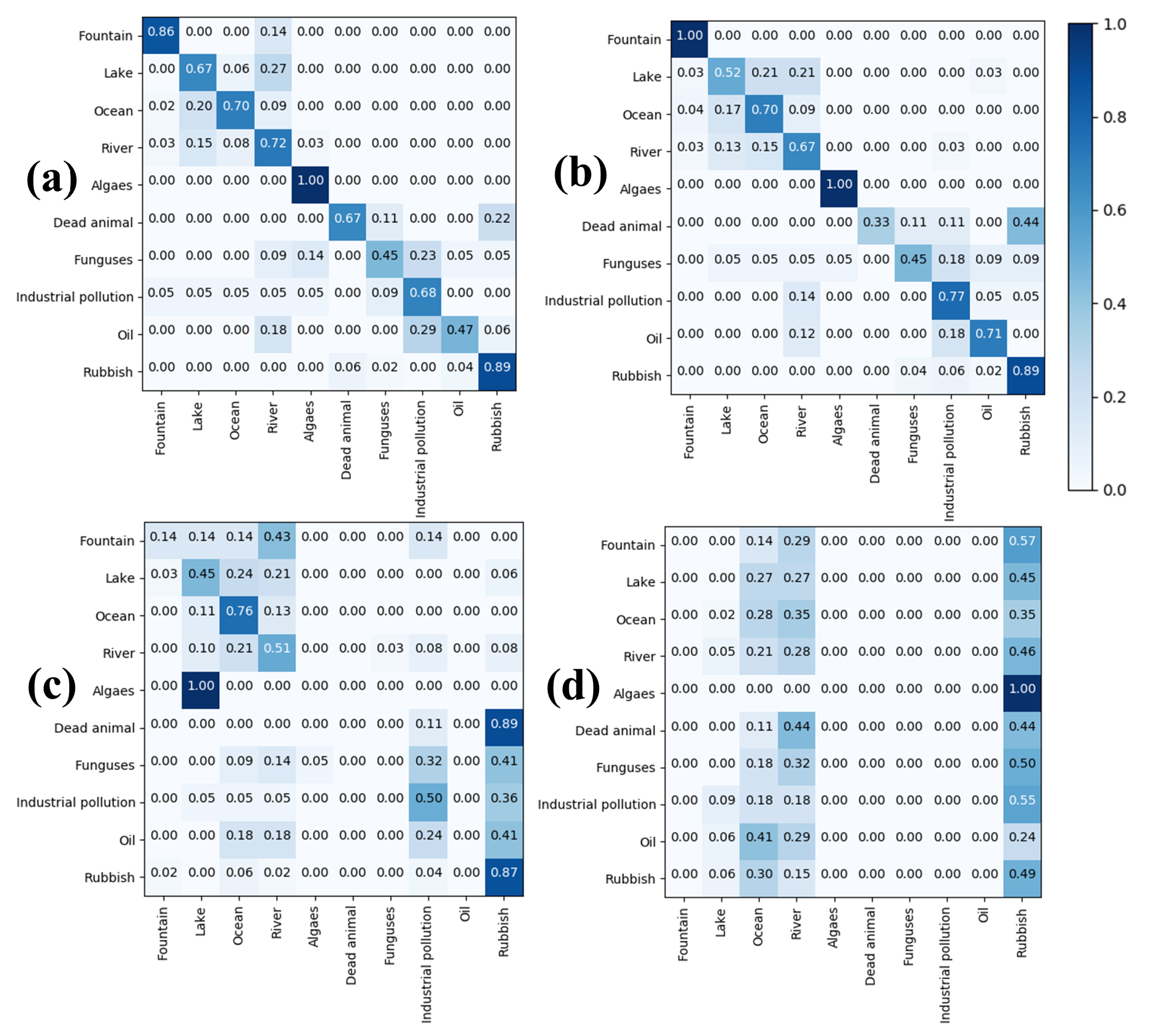

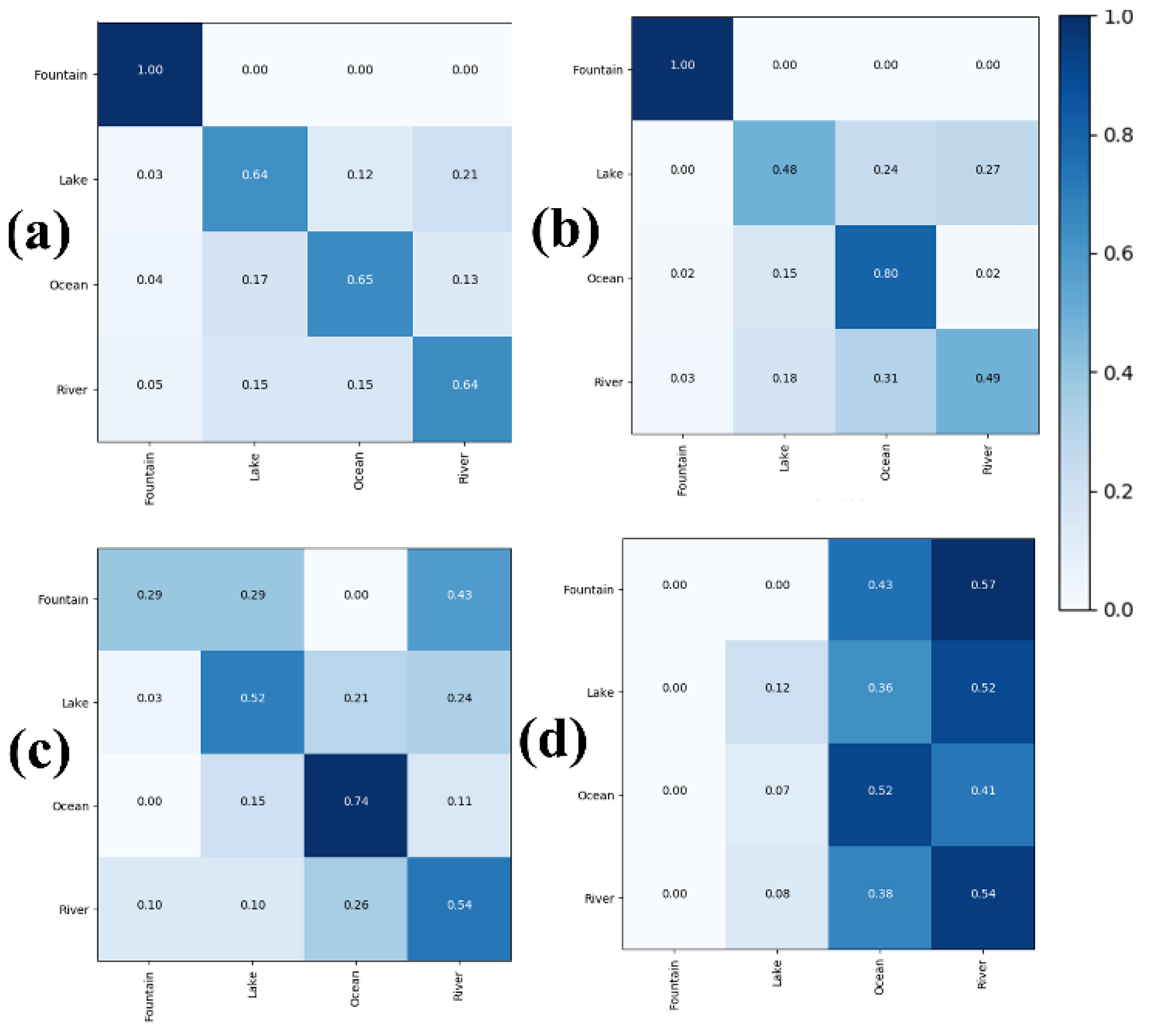

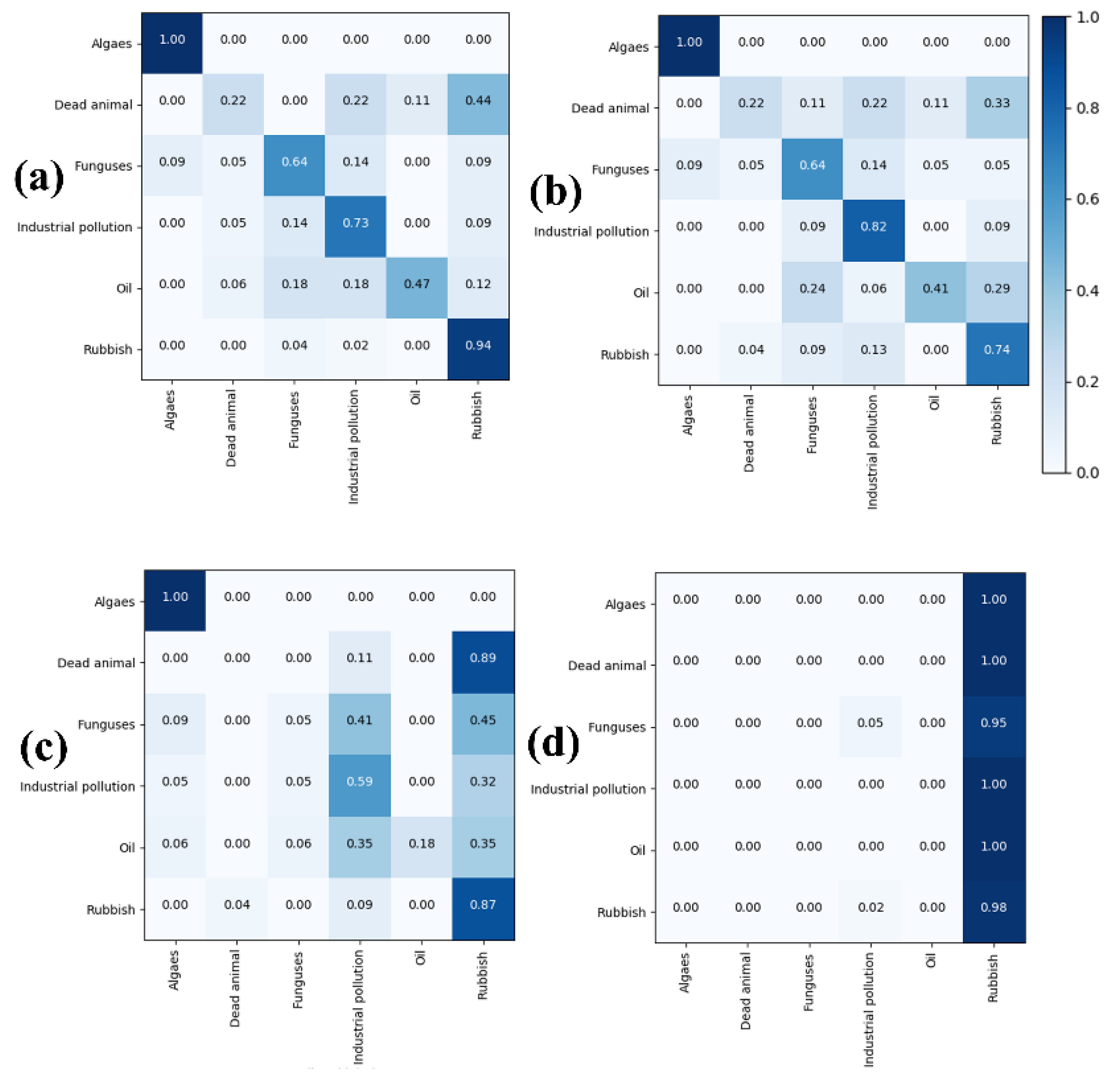

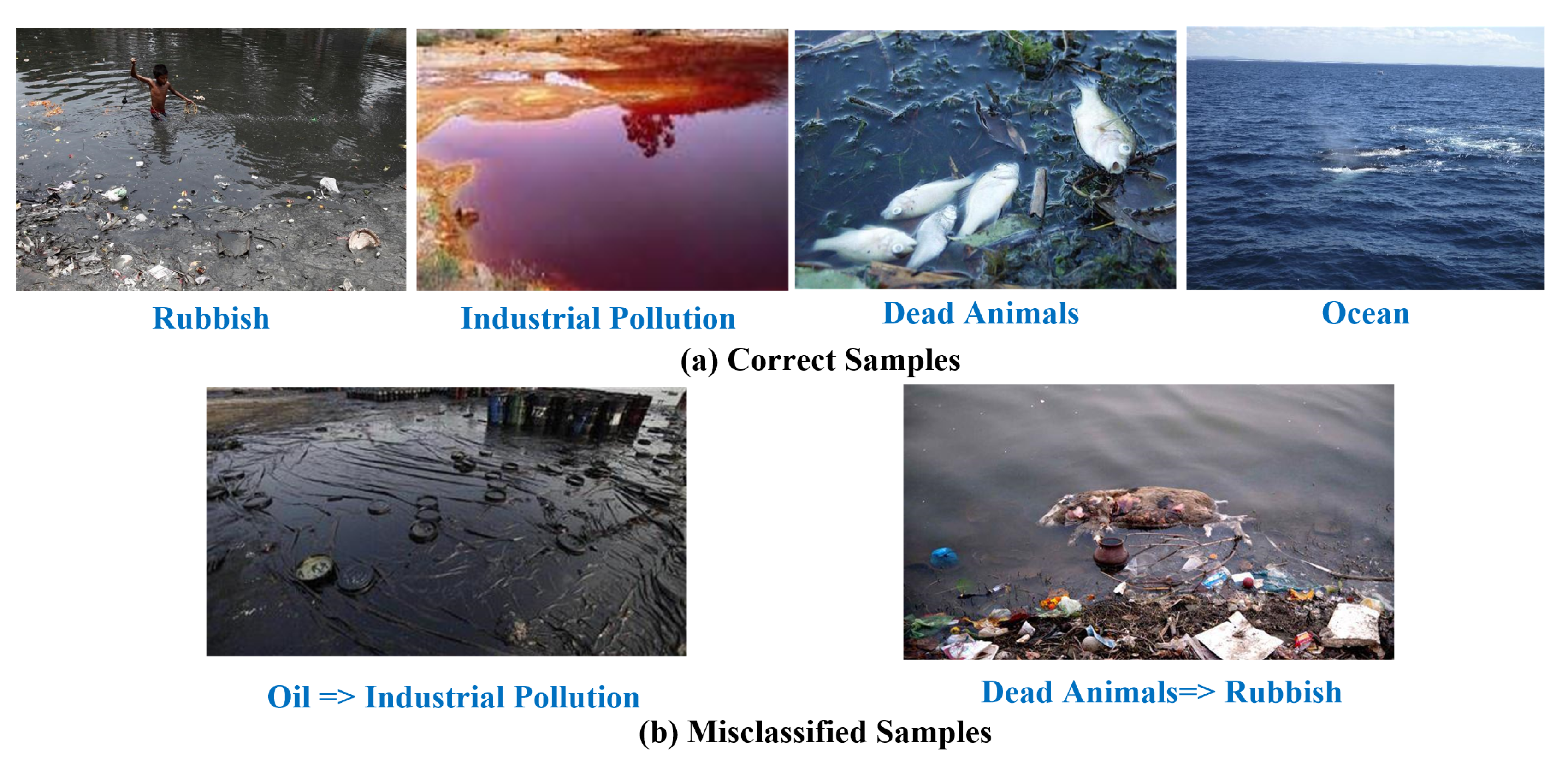

4. Results and Discussion

4.1. Dataset and Measurement

4.2. Performance Analysis

4.3. Implementation Details

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Qi, L.; Zhang, X.; Dou, W.; Hu, C.; Yang, C.; Chen, J. A two-stage locality-sensitive hashing based approach for privacy-preserving mobile service recommendation in cross-platform edge environment. Future Gener. Comput. Syst. 2018, 88, 636–643.

2. Wang, H.; Guo, C.; Cheng, S. LoC—A new financial loan management system based on smart contracts. Future Gener. Comput. Syst. 2019, 100, 648–655.

3. Qi, L.; He, Q.; Chen, F.; Dou, W.; Wan, S.; Zhang, X.; Xu, X. Finding All You Need: Web APIs Recommendation in Web of Things Through Keywords Search. IEEE Trans. Comput. Soc. Syst. 2019, 6, 1063–1072.

4. Xu, X.; Fu, S.; Qi, L.; Zhang, X.; Liu, Q.; He, Q.; Li, S. An IoT-oriented data placement method with privacy preservation in cloud environment. J. Netw. Comput. Appl. 2018, 124, 148–157.

5. Wang, H.; Ma, S.; Dai, H.N. A rhombic dodecahedron topology for human-centric banking big data. IEEE Trans. Comput. Soc. Syst. 2019, 6, 1095–1105.

6. Khan, M.; Wu, X.; Xu, X.; Dou, W. Big data challenges and opportunities in the hype of Industry 4.0. In Proceedings of the IEEE International Conference on Communications, Paris, France, 21–25 May 2017; pp. 1–6.

7. Prasad, M.G.; Chakraborty, A.; Chalasani, R.; Chandran, S. Quadcopter-based stagnant water identification. In Proceedings of the Fifth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), Patna, India, 16–19 December 2015; pp. 1–4.

8. Mettes, P.; Tan, R.T.; Veltkamp, R.C. Water detection through spatio-temporal invariant descriptors. Comput. Vis. Image Underst. 2017, 154, 182–191.

9. Qi, X.; Li, C.G.; Zhao, G.; Hong, X.; Pietikäinen, M. Dynamic texture and scene classification by transferring deep image features. Neurocomputing 2016, 171, 1230–1241.

10. Zhao, W.; Du, S. Spectral–spatial feature extraction for hyperspectral image classification: A dimension reduction and deep learning approach. IEEE Trans. Geosci. Remote. Sens. 2016, 54, 4544–4554.

11. Xu, X.; Xue, Y.; Qi, L.; Yuan, Y.; Zhang, X.; Umer, T.; Wan, S. An edge computing-enabled computation offloading method with privacy preservation for internet of connected vehicles. Future Gener. Comput. Syst. 2019, 96, 89–100.

12. Xu, Y.; Qi, L.; Dou, W.; Yu, J. Privacy-preserving and scalable service recommendation based on simhash in a distributed cloud environment. Complexity 2017, 2017, 343785.

13. Zhang, H.; Guo, X.; Cao, X. Water reflection detection using a flip invariant shape detector. In Proceedings of the IEEE International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 633–636.

14. Rankin, A.L.; Matthies, L.H.; Bellutta, P. Daytime water detection based on sky reflections. In Proceedings of the ICRA, Shanghai, China, 9–13 May 2011; pp. 5329–5336.

15. Santana, P.; Mendonça, R.; Barata, J. Water detection with segmentation guided dynamic texture recognition. In Proceedings of the IEEE International Conference on Robotics and Biomimetics (ROBIO), Guangzhou, China, 11–14 December 2012; pp. 1836–1841.

16. Rokni, K.; Ahmad, A.; Solaimani, K.; Hazini, S. A new approach for surface water change detection: Integration of pixel level image fusion and image classification techniques. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 226–234.

17. Wang, H.; Wu, Y.; Min, G.; Xu, J.; Tang, P. Data-driven dynamic resource scheduling for network slicing: A Deep reinforcement learning approach. Inf. Sci. 2019, 498, 106–116.

18. Zuo, Y.; Wu, Y.; Min, G.; Cui, L. Learning-based network path planning for traffic engineering. Future Gener. Comput. Syst. 2019, 92, 59–67.

19. Liu, H.; Kou, H.; Yan, C.; Qi, L. Link prediction in paper citation network to construct paper correlation graph. EURASIP J. Wirel. Commun. Netw. 2019, 2019, 1–12.

20. Zhao, J.; Guo, W.; Cui, S.; Zhang, Z.; Yu, W. Convolutional Neural Network for SAR image classification at patch level. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 945–948.

21. Pan, B.; Shi, Z.; Xu, X. R-VCANet: A new deep-learning-based hyperspectral image classification method. IEEE J. Sel. Top. Appl. Earth Obs. Remote. Sens. 2017, 10, 1975–1986.

22. Chen, Y.; Fan, R.; Yang, X.; Wang, J.; Latif, A. Extraction of urban water bodies from high-resolution remote-sensing imagery using deep learning. Water 2018, 10, 585.

23. Gong, W.; Qi, L.; Xu, Y. Privacy-aware multidimensional mobile service quality prediction and recommendation in distributed fog environment. Wirel. Commun. Mob. Comput. 2018, 2018, 3075849.

24. Xu, X.; Li, Y.; Huang, T.; Xue, Y.; Peng, K.; Qi, L.; Dou, W. An energy-aware computation offloading method for smart edge computing in wireless metropolitan area networks. J. Netw. Comput. Appl. 2019, 133, 75–85.

25. Pan, J.; Yin, Y.; Xiong, J.; Luo, W.; Gui, G.; Sari, H. Deep learning-based unmanned surveillance systems for observing water levels. IEEE Access 2018, 6, 73561–73571.

26. He, T.; Huang, W.; Qiao, Y.; Yao, J. Text-Attentional Convolutional Neural Network for Scene Text Detection. IEEE Trans. Image Process. 2016, 25, 2529–2541.

27. Ramanathan, V.; Tang, K.; Mori, G.; Fei-Fei, L. Learning temporal embeddings for complex video analysis. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4471–4479.

28. Liu, J.; Wang, G.; Hu, P.; Duan, L.; Kot, A.C. Global Context-Aware Attention LSTM Networks for 3D Action Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3671–3680.

29. Zhao, X.; Sang, L.; Ding, G.; Han, J.; Di, N.; Yan, C. Recurrent Attention Model for Pedestrian Attribute Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9275–9282.

30. Chen, L.; Zhang, H.; Xiao, J.; Nie, L.; Shao, J.; Liu, W.; Chua, T.S. SCA-CNN: Spatial and channel-wise attention in convolutional networks for image captioning. arXiv 2016, arXiv:1611.05594.

31. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. arXiv 2017, arXiv:1709.01507.

32. Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6077–6086.

33. Wu, X.; Shivakumara, P.; Zhu, L.; Lu, T.; Pal, U.; Blumenstein, M. Fourier Transform based Features for Clean and Polluted Water Image Classification. In Proceedings of the International Conference on Pattern Recognition, Beijing, China, 20–24 August 2018.

34. Qi, L.; Chen, Y.; Yuan, Y.; Fu, S.; Zhang, X.; Xu, X. A QoS-aware virtual machine scheduling method for energy conservation in cloud-based cyber-physical systems. World Wide Web 2019, 1–23.

35. Wang, H.; Ma, S.; Dai, H.N.; Imran, M.; Wang, T. Blockchain-based data privacy management with nudge theory in open banking. Future Gener. Comput. Syst. 2019.

36. Xu, X.; Liu, Q.; Luo, Y.; Peng, K.; Zhang, X.; Meng, S.; Qi, L. A computation offloading method over big data for IoT-enabled cloud-edge computing. Future Gener. Comput. Syst. 2019, 95, 522–533.

37. Xu, X.; Liu, Q.; Zhang, X.; Zhang, J.; Qi, L.; Dou, W. A Blockchain-Powered Crowdsourcing Method With Privacy Preservation in Mobile Environment. IEEE Trans. Comput. Soc. Syst. 2019, 6, 1407–1419.

| Method | Clean Water Precision | Clean Water Recall | Clean Water F-Score | Clean Water Accuracy | Polluted Water Precision | Polluted Water Recall | Polluted Water F-Score | Polluted Water Accuracy | Total Accuracy |
|---|---|---|---|---|---|---|---|---|---|
| The Proposed (WA) | 0.65 | 0.69 | 0.67 | 66.4% | 0.65 | 0.67 | 0.66 | 73.6% | 71.2% |
| The Proposed (WoA) | 0.65 | 0.69 | 0.67 | 63.2% | 0.60 | 0.64 | 0.62 | 65.6% | 69.2% |
| Wu et al. [33] | 0.52 | 0.52 | 0.52 | 52.0% | 0.44 | 0.45 | 0.44 | 59.2% | 51.2% |
| Mettes et al. [8] | 0.30 | 0.30 | 0.30 | 41.6% | 0.07 | 0.16 | 0.10 | 35.2% | 20.0% |
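As a quick consistency check on the table above, the F-Score column can be recomputed from the precision and recall columns. The sketch assumes the F-Score is the balanced F1 measure (the harmonic mean of precision and recall), which is not stated in this part of the text. The helper name `f_score` and the row labels are illustrative.

```python
def f_score(precision, recall):
    """Balanced F-score (F1): harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F-score) triples copied from the table.
reported = {
    "The Proposed (WA), clean": (0.65, 0.69, 0.67),
    "The Proposed (WA), polluted": (0.65, 0.67, 0.66),
    "The Proposed (WoA), clean": (0.65, 0.69, 0.67),
    "The Proposed (WoA), polluted": (0.60, 0.64, 0.62),
    "Wu et al. [33], clean": (0.52, 0.52, 0.52),
    "Wu et al. [33], polluted": (0.44, 0.45, 0.44),
    "Mettes et al. [8], clean": (0.30, 0.30, 0.30),
    "Mettes et al. [8], polluted": (0.07, 0.16, 0.10),
}

for name, (p, r, f) in reported.items():
    print(f"{name}: computed {f_score(p, r):.2f}, reported {f:.2f}")
```

Under the F1 assumption, all eight reported F-Score values agree with the recomputed ones to two decimal places.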

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, Y.; Zhang, X.; Xiao, Y.; Feng, J. Attention Neural Network for Water Image Classification under IoT Environment. Appl. Sci. 2020, 10, 909. https://doi.org/10.3390/app10030909
