Image Completion with Hybrid Interpolation in Tensor Representation

Abstract

1. Introduction

2. Preliminary

2.1. Image Completion with Tucker Decomposition
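The Tucker model of a third-order image tensor Y (height × width × color) is Y ≈ G ×1 U1 ×2 U2 ×3 U3, with a core tensor G and factor matrices U1, U2, U3. One simple way to use this model for completion alternates two steps. First, the missing entries are filled with the current low-rank approximation. Second, a truncated higher-order SVD (HOSVD) is recomputed. The sketch below shows this generic imputation scheme. The scheme itself and the helper names (`tucker_complete`, `truncated_hosvd`, `mode_product`) are illustrative assumptions, not the authors' algorithm.

```python
import numpy as np


def unfold(x, mode):
    """Mode-n unfolding: bring axis `mode` to the front and flatten the rest."""
    return np.moveaxis(x, mode, 0).reshape(x.shape[mode], -1)


def mode_product(x, m, mode):
    """n-mode product x ×_mode m, where m has shape (J, x.shape[mode])."""
    y = np.tensordot(m, x, axes=(1, mode))  # new axis of size J comes first
    return np.moveaxis(y, 0, mode)          # move it back to position `mode`


def truncated_hosvd(x, ranks):
    """Tucker approximation via truncated HOSVD: returns (core, factors)."""
    factors = []
    for mode, r in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(x, mode), full_matrices=False)
        factors.append(u[:, :r])              # r leading left singular vectors
    core = x
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)  # project onto each mode subspace
    return core, factors


def reconstruct(core, factors):
    """Evaluate G ×1 U1 ×2 U2 ×3 U3 (any number of modes)."""
    x = core
    for mode, u in enumerate(factors):
        x = mode_product(x, u, mode)
    return x


def tucker_complete(y, mask, ranks, n_iter=50):
    """Hypothetical imputation loop; mask is True where y is observed."""
    x = np.where(mask, y, y[mask].mean())     # initial guess for missing entries
    for _ in range(n_iter):
        approx = reconstruct(*truncated_hosvd(x, ranks))
        x = np.where(mask, y, approx)         # keep observed, update missing
    return x
```

For example, `tucker_complete(y, mask, ranks=(r1, r2, 3))` fills an incomplete H × W × 3 image `y`. Note that truncated HOSVD is only quasi-optimal. It is not the best rank-(r1, r2, r3) approximation, so this loop is a heuristic.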

2.2. RBF Interpolation
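The following is a generic illustration of radial basis function (RBF) interpolation in one dimension. The interpolant is s(x) = Σj wj φ(|x − xj|), and the weights w solve the linear system Φw = f, where Φij = φ(|xi − xj|). The Gaussian kernel φ(r) = exp(−(εr)²) and the shape parameter ε are assumptions chosen for the sketch. They are not necessarily the kernels used by TI-IC.

```python
import numpy as np


def gaussian_rbf(r, eps):
    """Gaussian radial basis function phi(r) = exp(-(eps * r)**2)."""
    return np.exp(-(eps * r) ** 2)


def rbf_fit(nodes, values, eps=1.0):
    """Solve Phi w = f with Phi[i, j] = phi(|x_i - x_j|) for the weights w."""
    r = np.abs(nodes[:, None] - nodes[None, :])
    return np.linalg.solve(gaussian_rbf(r, eps), values)


def rbf_eval(points, nodes, weights, eps=1.0):
    """Evaluate s(x) = sum_j w_j phi(|x - x_j|) at each point."""
    r = np.abs(points[:, None] - nodes[None, :])
    return gaussian_rbf(r, eps) @ weights


# Example: fill the missing samples of a 1-D signal, e.g. one image row.
cols = np.arange(30, dtype=float)
row = np.sin(cols / 5.0)
observed = cols % 3 != 1                  # pretend every third sample is missing
w = rbf_fit(cols[observed], row[observed], eps=0.5)
filled = rbf_eval(cols[~observed], cols[observed], w, eps=0.5)
```

Smaller ε gives a flatter kernel and usually better accuracy on smooth data, but Φ becomes increasingly ill-conditioned.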

3. Proposed Algorithm

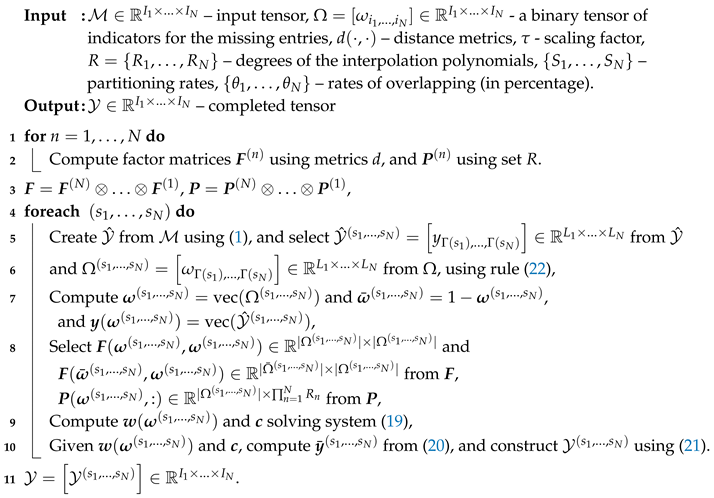

Algorithm 1: Tensorial Interpolation for Image Completion (TI-IC)

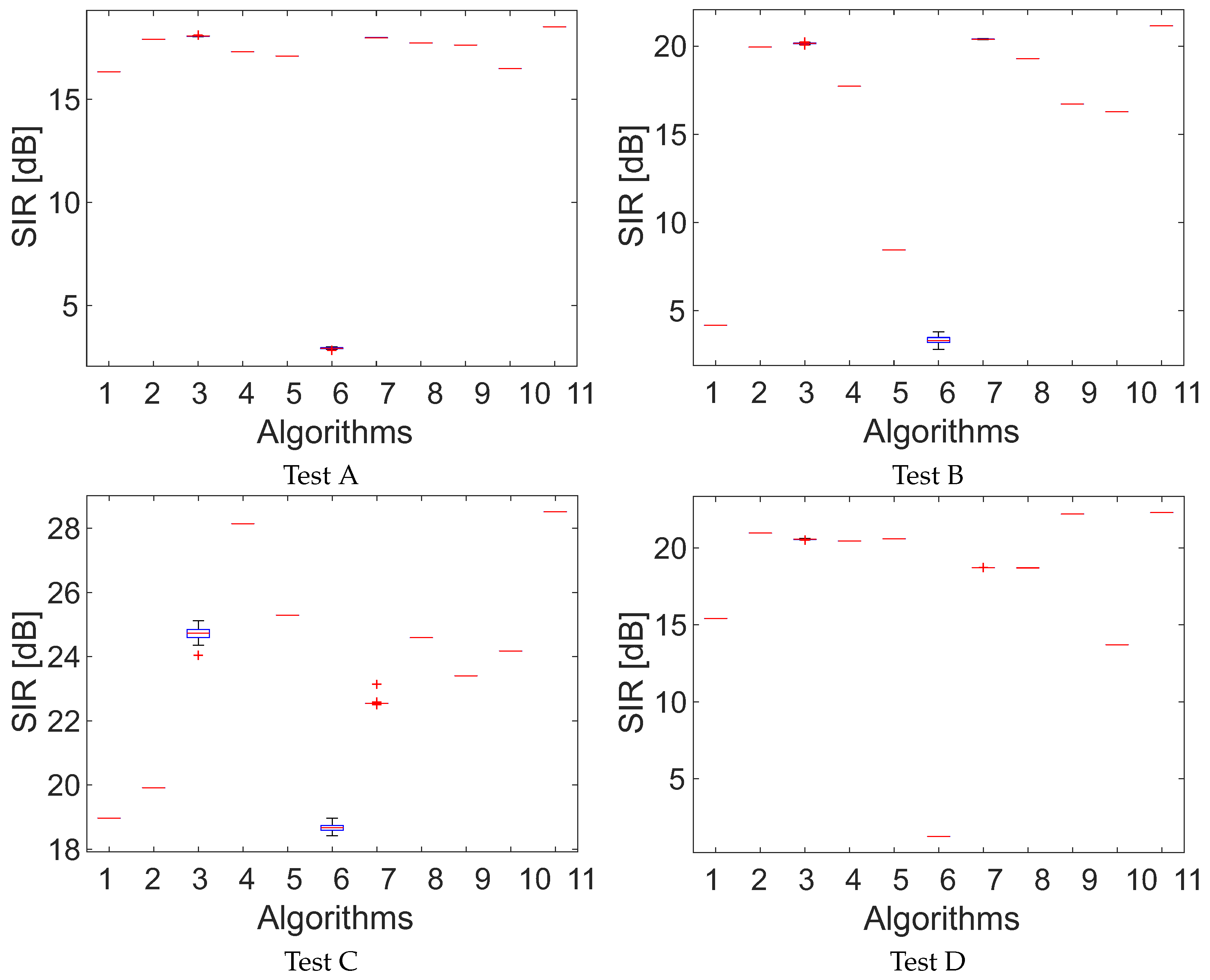

4. Results

4.1. Setup

- A: 90% of the tensor fibers along the third (color) mode are missing at uniformly distributed random positions, which corresponds to 90% missing pixels ("dead pixels");

- B: 95% of the tensor entries are missing at uniformly distributed random positions ("disturbed pixels");

- C: 200 missing circles at uniformly distributed random positions, created in the same way as in case A, except that each disturbance is a circle with a random radius of at most 10 pixels;

- D: resolution up-scaling: the original image was down-sampled by a factor of two by removing pixels according to a regular grid mask with 1-pixel-wide edges, and the aim was to recover the missing pixels on the edges.
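The four test masks can be sketched in NumPy as boolean arrays, where `True` means observed, for an H × W × 3 image. This is a minimal sketch under stated assumptions that go beyond the list above. First, the circles in test C remove all color channels, as the dead pixels in test A do. Second, circle radii are integers from 1 to 10. Third, in test D only pixels on even rows and even columns are kept. The function names are hypothetical.

```python
import numpy as np


def mask_a(shape, rng, frac=0.90):
    """Test A: whole color fibers missing ("dead pixels")."""
    h, w, c = shape
    observed = np.ones(h * w, dtype=bool)
    missing = rng.choice(h * w, size=int(round(frac * h * w)), replace=False)
    observed[missing] = False
    return np.repeat(observed.reshape(h, w, 1), c, axis=2)


def mask_b(shape, rng, frac=0.95):
    """Test B: individual tensor entries missing ("disturbed pixels")."""
    n = int(np.prod(shape))
    observed = np.ones(n, dtype=bool)
    observed[rng.choice(n, size=int(round(frac * n)), replace=False)] = False
    return observed.reshape(shape)


def mask_c(shape, rng, n_circles=200, max_radius=10):
    """Test C: random circles of dead pixels (assumed: all channels removed)."""
    h, w, c = shape
    rows, cols = np.mgrid[0:h, 0:w]
    observed = np.ones((h, w), dtype=bool)
    for _ in range(n_circles):
        r0, c0 = rng.integers(0, h), rng.integers(0, w)  # uniform random center
        radius = rng.integers(1, max_radius + 1)         # 1..max_radius pixels
        observed &= (rows - r0) ** 2 + (cols - c0) ** 2 > radius ** 2
    return np.repeat(observed[:, :, None], c, axis=2)


def mask_d(shape):
    """Test D: keep pixels on even rows and columns; grid lines are missing."""
    h, w, c = shape
    observed = np.zeros((h, w), dtype=bool)
    observed[::2, ::2] = True
    return np.repeat(observed[:, :, None], c, axis=2)
```

An incomplete image can then be formed as, for instance, `np.where(mask_a(img.shape, np.random.default_rng(0)), img, 0.0)`. Under the test D assumption, only about 25% of the pixels remain observed.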

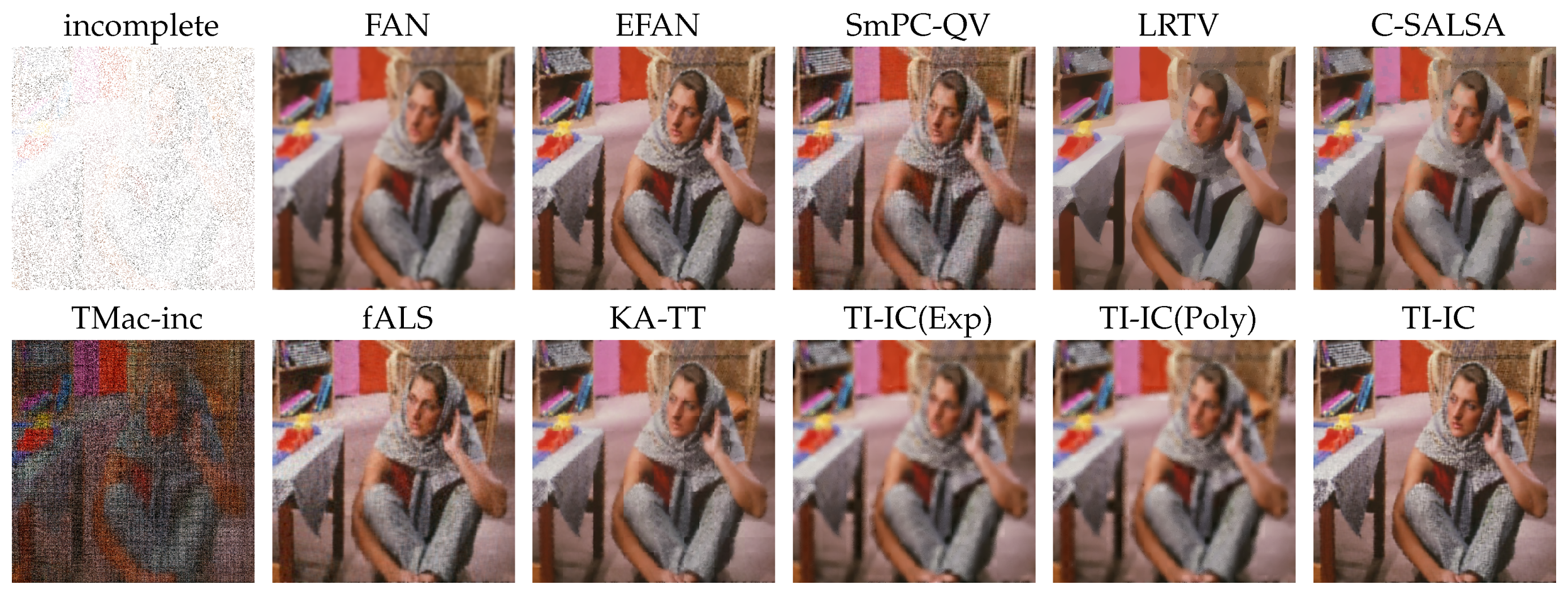

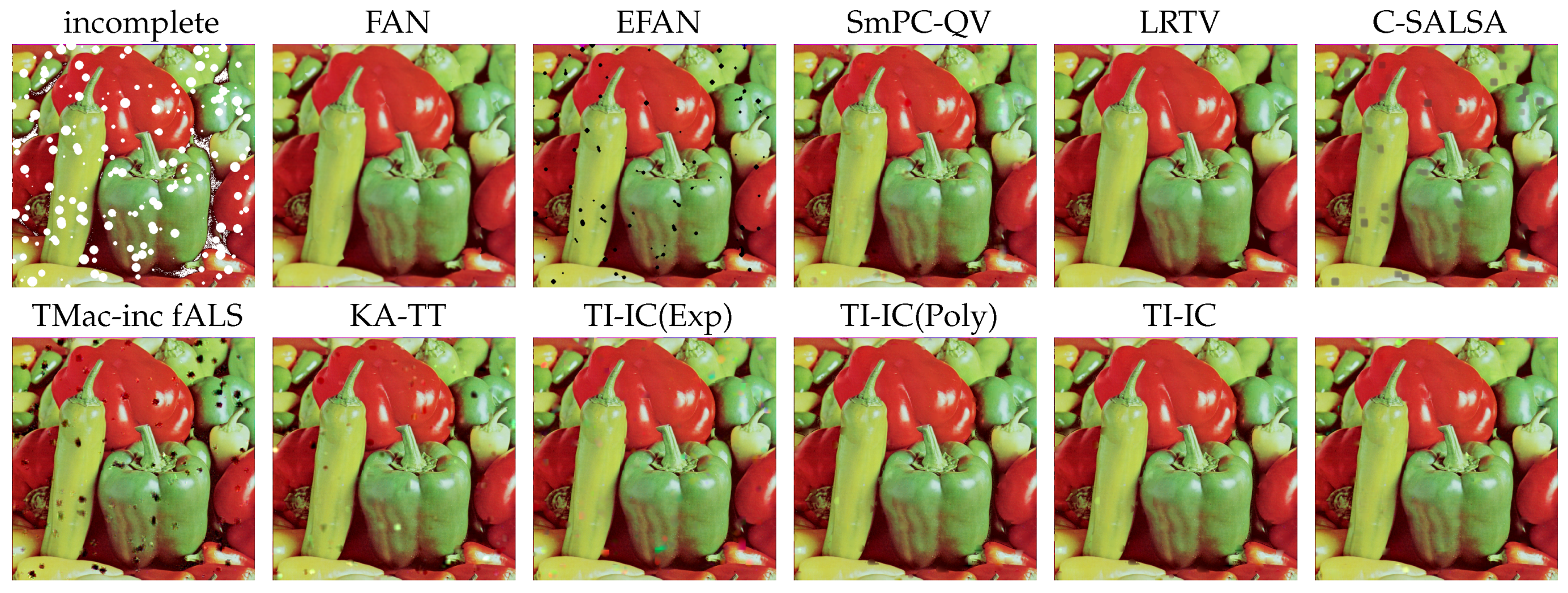

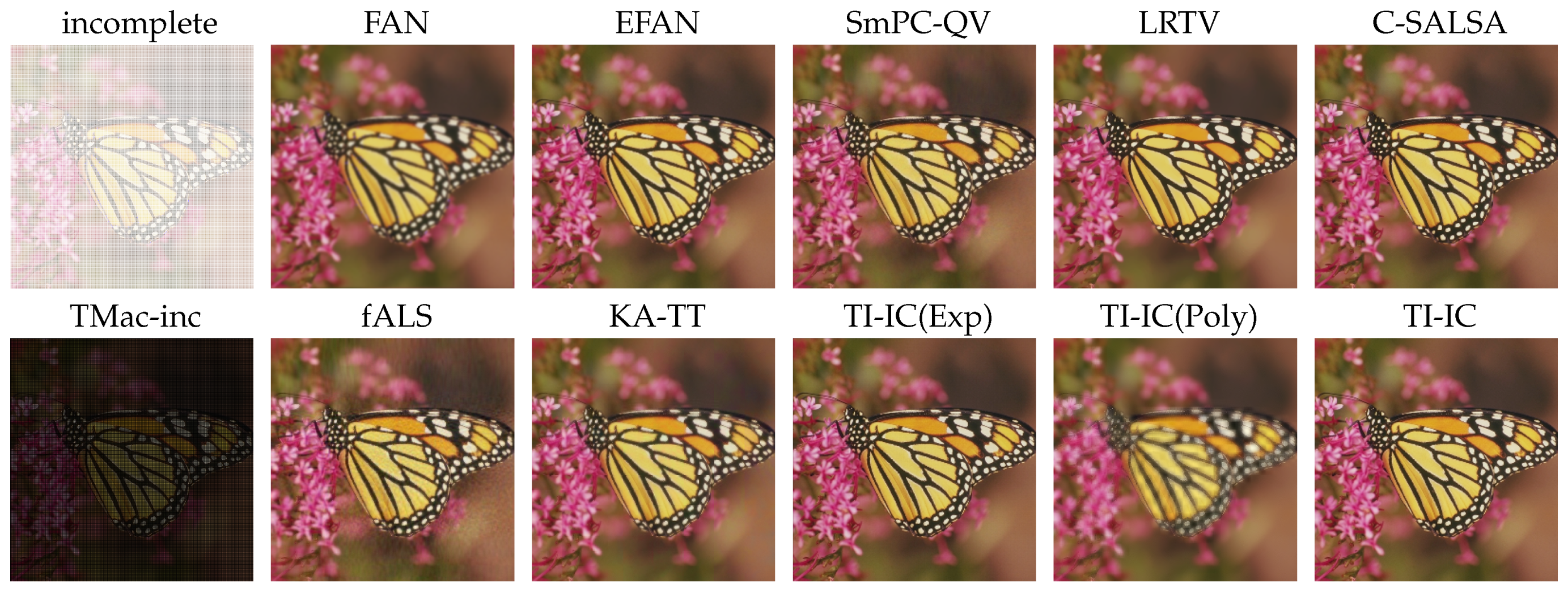

4.2. Image Completion

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Algorithm / Test | Test A | Test B | Test C | Test D |
|---|---|---|---|---|
| FAN | 0.25 ± 0.08 | 0.25 ± 0.06 | 0.24 ± 0.04 | 0.2 ± 0.04 |
| EFAN | 0.06 ± 0.01 | 0.06 ± 0.01 | 0.15 ± 0.01 | 0.07 ± 0.01 |
| SmPC-QV | 534.67 ± 91.82 | 579.12 ± 109.36 | 416.68 ± 82.97 | 507.74 ± 101.19 |
| LRTV | 876.64 ± 89.25 | 943.6 ± 78.45 | 966.72 ± 87.01 | 977.86 ± 117.85 |
| C-SALSA | 73.87 ± 17.08 | 86.47 ± 22.52 | 66.33 ± 18.84 | 354.58 ± 91.99 |
| TMac-inc | 122.37 ± 21.75 | 120.8 ± 40.98 | 440.05 ± 54.35 | 193.22 ± 23.29 |
| fALS | 545.39 ± 63.67 | 478.98 ± 47.47 | 879.41 ± 74.83 | 206.23 ± 74.93 |
| KA-TT | 433.8 ± 81.18 | 379.54 ± 63.55 | 392.59 ± 54.09 | 419.3 ± 70.94 |
| TI-IC(Exp) | 3.77 ± 1.03 | 1.39 ± 0.17 | 133.14 ± 10.92 | 15.08 ± 1.97 |
| TI-IC(Exp) * | 1.7 ± 0.12 | 1.14 ± 0.04 | 15.39 ± 0.56 | 2.81 ± 0.1 |
| TI-IC(Poly) | 0.57 ± 0.11 | 0.13 ± 0.02 | 1.73 ± 0.11 | 0.71 ± 0.1 |
| TI-IC(Poly) * | 0.49 ± 0.03 | 0.36 ± 0.01 | 0.58 ± 0.02 | 0.49 ± 0.01 |
| TI-IC | 6.01 ± 1.49 | 2.91 ± 0.78 | 406.75 ± 51.51 | 24.59 ± 4.77 |
| TI-IC * | 1.96 ± 0.05 | 1.19 ± 0.04 | 40.98 ± 1.79 | 4.15 ± 0.18 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zdunek, R.; Sadowski, T. Image Completion with Hybrid Interpolation in Tensor Representation. Appl. Sci. 2020, 10, 797. https://doi.org/10.3390/app10030797