Abstract

A machine learns by minimizing a cost function induced by its training data. Unfortunately, as the amount of training data, the use of non-linear activation functions in the artificial neural network (ANN), and the complexity of the network structure increase, the cost function becomes increasingly non-convex. A non-convex function has local minima, and the first derivative of the cost function is zero at a local minimum. Therefore, methods based on gradient descent optimization stop updating once they fall into a local minimum, because their updates depend only on the first derivative of the cost function. This paper introduces a novel optimization method that makes machine learning more efficient; that is, we construct an effective optimization method for non-convex cost functions. The proposed method avoids being trapped in a local minimum by adding the cost function itself to the parameter update rule of the ADAM method. We prove the convergence of the sequences generated by the proposed method and demonstrate its superiority through numerical comparisons with gradient-descent-based methods (GD, ADAM, and AdaMax).

1. Introduction

Machine learning is a field of computer science that gives computer systems the ability to learn from data without being explicitly programmed. To learn from data, a machine introduces a cost function and learns by minimizing it. The cost function is usually built from the difference between the true value and the value computed by the artificial neural network (ANN) [1,2,3,4,5,6,7]. Therefore, the cost function depends on the amount of training data, the non-linear activation functions in the ANN, and the structure of the ANN. These factors can create singular points and local minima in the cost function. At any such point (a singular point or a local minimum), the first derivative of a differentiable cost function is zero.

Gradient-based optimization (gradient descent optimization) is widely used to find the minimum value of the cost function [8,9,10,11,12,13,14,15]. Gradient descent (GD), the earliest of these methods, uses a fixed-point iteration to drive the first derivative of the cost function to zero. This method works reasonably well, but it runs into many difficulties in complex ANNs. To overcome them, ADAM (adaptive moment estimation) [15] was introduced; it adds momentum and adapts the size of each step. Specifically, ADAM keeps an exponentially weighted sum of past gradients, which is the idea of momentum; this is called the 'first momentum'. A weighted sum of squared gradients is computed in the same way and is called the 'second momentum'. The parameters are then updated according to the ratio of the first momentum to the square root of the second momentum. More detailed information can be found in [16,17,18,19,20,21,22]. ADAM is still widely used and works well in most areas. A further variant is AdaMax (adaptive moment estimation with maximum) [15], which replaces the second-momentum computation of ADAM with a running maximum of the gradient magnitudes; this makes the method more stable. Various other methods exist. We focus on GD, ADAM, and AdaMax because GD was the first to be introduced, ADAM is still widely used, and AdaMax is a modification of ADAM. It has been observed that these algorithms can fail to converge to an optimal solution [23]. We numerically analyze the most basic method (GD), the most widely used method (ADAM), and its modification (AdaMax), and based on this analysis we introduce the proposed method.
The existing gradient-descent-based methods update the parameters so that the first derivative of the cost function becomes zero, with the aim of finding the minimum value of the cost function. Convergence guarantees for this approach assume that the cost function is convex [24,25]. However, the first derivative of the cost function is also zero at a local minimum, so these methods may converge to a local minimum whenever one exists. If the value of the cost function at that local minimum is not as small as desired, the parameters should keep changing. To address this problem, we build the update from both the first derivative of the cost function and the cost function itself. In this way, as long as the value of the cost function is nonzero, the parameters keep changing even when the first derivative of the cost function becomes zero; this is why the cost function is added. We also incorporate the idea of the ADAM method into the parameter update and prove the convergence of the resulting sequence.

In summary, our research question is why neural networks are often poorly trained by known optimization methods, and our goal is to find a new optimization method that resolves this problem. To this end, we first prove the convergence of the new method. Next, we use simple cost functions to visualize how our method and the basic methods behave near a local minimum. Finally, we compare the performance of our method and ADAM on practical datasets such as MNIST and CIFAR10.

This paper is organized as follows. In Section 2, we define the cost function and explain why it is non-convex. In Section 3, we briefly describe the known gradient-descent-based algorithms: GD (gradient descent), the earliest method; ADAM, which is widely used; and AdaMax, an improved version of ADAM. In Section 4, we explain the proposed method, its convergence, and related conditions. In Section 5, we present several numerical comparisons between the proposed method and the methods above. The first case is a non-convex function of one variable. We then compare the methods on non-convex functions of two variables. The third case compares the four methods on a two-dimensional region separation problem. Finally, we test the methods on MNIST (Modified National Institute of Standards and Technology) and CIFAR10 (Canadian Institute for Advanced Research), the most basic image analysis benchmarks. MNIST consists of grayscale images in 10 classes, as seen in Figure 1 (https://en.wikipedia.org/wiki/MNIST_database), and this experiment shows that our method is also efficient at analyzing images. CIFAR10 is also a 10-class dataset, but it consists of RGB images and therefore requires more computation than MNIST. Through these numerical comparisons, we confirm that the proposed method is more efficient than the existing methods described in this paper. In Section 6, we present conclusions and future work.

Figure 1.

Examples of MNIST dataset.

2. Cost Function

In this section, we explain the basic form of machine learning among its many modified variants; for convenience, "machine learning" below refers to this basic form. To understand how machine learning works, we start from a structure with a single neuron. Let $x$ be the input data and $\hat{y}$ the output, which is obtained by

$$\hat{y} = H(x) = \sigma(wx + b),$$

where $w$ is the weight, $b$ is the bias, and $\sigma$ is the sigmoid function (generally called an activation function; various functions can be used). Therefore, the value of $H$ lies between 0 and 1. Using a non-linear activation function makes the cost function non-linear. For machine learning, let $T$ be the set of training data and let $N$ be its size. Indexing the training data from 1, the training dataset is $T = \{(x_s, y_s)\}_{s=1}^{N}$, where each $x_s$ is a real number and each $y_s$ lies between 0 and 1. From $T$, we can define a cost function as follows:

$$C(w, b) = \frac{1}{N} \sum_{s=1}^{N} \left( y_s - H(x_s) \right)^2.$$

Training is complete once we find the $w$ and $b$ that minimize the cost function. Unfortunately, because the cost function is not convex, it can have several local minima. Furthermore, deepening the ANN means that the activation function is composed many times, which increases the number of local minima of the cost function. A more complete analysis is in progress and will be reported separately.
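The single-neuron model above can be sketched in Python with NumPy. This is a minimal illustration, not the authors' code. The squared-error form of the cost, the toy dataset, and the helper names `sigmoid`, `H`, `cost`, and `grad_cost` are our assumptions. The gradient follows from the chain rule with $\sigma'(z) = \sigma(z)(1 - \sigma(z))$.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation: sigma(z) = 1 / (1 + exp(-z)), with values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def H(x, w, b):
    """Single-neuron output H(x) = sigma(w * x + b)."""
    return sigmoid(w * x + b)


def cost(w, b, xs, ys):
    """Assumed squared-error cost C(w, b) = (1/N) * sum_s (y_s - H(x_s))^2."""
    return np.mean((ys - H(xs, w, b)) ** 2)


def grad_cost(w, b, xs, ys):
    """Analytic (dC/dw, dC/db) via the chain rule, using sigma' = sigma * (1 - sigma)."""
    h = H(xs, w, b)
    dz = -2.0 * (ys - h) * h * (1.0 - h)  # per-sample derivative w.r.t. z = w*x + b
    return np.mean(dz * xs), np.mean(dz)


# Hypothetical toy dataset T: real inputs x_s, targets y_s in [0, 1].
xs = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
ys = np.array([0.0, 0.0, 0.5, 1.0, 1.0])

print(cost(0.0, 0.0, xs, ys))       # every H(x_s) equals 0.5 at w = b = 0
print(grad_cost(0.0, 0.0, xs, ys))
```

At $w = b = 0$ every output is 0.5, so the cost is the mean of the squared errors 0.25, 0.25, 0, 0.25, 0.25, that is, 0.2. Because $\sigma$ is non-linear in $w$ and $b$, $C$ is in general not convex in $(w, b)$.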

3. Learning Methods

In this section, we provide a brief description of the well-known optimization methods—GD, ADAM, and AdaMax.

3.1. Gradient Descent Method

GD is the most basic method and the first to be introduced. GD applies a fixed-point iteration to the first derivative of the cost function; in each iteration, the parameter is updated as

$$w_{i+1} = w_i - \eta \nabla C(w_i),$$

where $\eta$ is the learning rate.

The pseudocode of this method is given in Algorithm 1.

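| Algorithm 1: Pseudocode of Gradient Descent Method. |
| --- |
| Require: $\eta$: Learning rate |
| Require: $C(w)$: Cost function with parameters $w$ |
| Require: $w_0$: Initial parameter vector |
| $i \leftarrow 0$ (Initialize time step) |
| while $w_i$ not converged do |
| $\quad i \leftarrow i + 1$ |
| $\quad w_i \leftarrow w_{i-1} - \eta \nabla C(w_{i-1})$ |
| end while |
| return $w_i$ (Resulting parameters) |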

As the update formula shows, if the gradient is zero, the parameter no longer changes, so GD stays at any local minimum (or other critical point) that it reaches.
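To illustrate this behavior, the sketch below runs GD on a hypothetical one-variable quartic cost of our own choosing (not the function of Section 5.1): $C(w) = w^4/4 - 4w^3/3 + 3w^2/2 + 9/4$. Its derivative $C'(w) = w(w-1)(w-3)$ vanishes at a local minimum $w = 0$ (where $C = 2.25$), a local maximum $w = 1$, and the global minimum $w = 3$ (where $C = 0$). The starting point $w_0 = 0.5$ and the learning rate 0.1 are assumptions.

```python
def C(w):
    """Hypothetical quartic cost: local min at w = 0 (C = 2.25), global min at w = 3 (C = 0)."""
    return w**4 / 4 - 4 * w**3 / 3 + 3 * w**2 / 2 + 9 / 4


def dC(w):
    """First derivative C'(w) = w^3 - 4w^2 + 3w = w (w - 1)(w - 3)."""
    return w**3 - 4 * w**2 + 3 * w


def gradient_descent(w0, lr=0.1, iters=200):
    """Plain GD, w_{i+1} = w_i - lr * C'(w_i); returns every iterate."""
    ws = [w0]
    for _ in range(iters):
        ws.append(ws[-1] - lr * dC(ws[-1]))
    return ws


traj = gradient_descent(0.5)
print(f"final w = {traj[-1]:.3e}, C(w) = {C(traj[-1]):.4f}, C(3) = {C(3.0):.4f}")
```

On $(0, 1)$ the derivative is positive, so every GD step moves left, and the iterates approach $w = 0$, where $C'(w) = 0$ and the update stops, even though the cost there is still 2.25 rather than the global value 0.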

3.2. ADAM Method

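ADAM is the most widely used of these methods. It builds on GD and the momentum method and additionally adapts the step size. With $g_i = \nabla C(w_{i-1})$, the first momentum is obtained by

$$m_i = \beta_1 m_{i-1} + (1 - \beta_1) g_i.$$

The second momentum is obtained by

$$v_i = \beta_2 v_{i-1} + (1 - \beta_2) g_i^2,$$

where $m_0 = 0$, $v_0 = 0$, and the square is taken element-wise. The pseudocode of this method is given in Algorithm 2.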

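| Algorithm 2: Pseudocode of ADAM Method. |
| --- |
| Require: $\eta$: Learning rate |
| Require: $\beta_1, \beta_2 \in [0, 1)$: Exponential decay rates for the moment estimates |
| Require: $\epsilon$: Small constant that prevents division by zero |
| Require: $C(w)$: Cost function with parameters $w$ |
| Require: $w_0$: Initial parameter vector |
| $m_0 \leftarrow 0$, $v_0 \leftarrow 0$ (Initialize first and second momentum) |
| $i \leftarrow 0$ (Initialize timestep) |
| while $w_i$ not converged do |
| $\quad i \leftarrow i + 1$ |
| $\quad g_i \leftarrow \nabla C(w_{i-1})$ |
| $\quad m_i \leftarrow \beta_1 m_{i-1} + (1 - \beta_1) g_i$ |
| $\quad v_i \leftarrow \beta_2 v_{i-1} + (1 - \beta_2) g_i^2$ |
| $\quad \hat{m}_i \leftarrow m_i / (1 - \beta_1^i)$ |
| $\quad \hat{v}_i \leftarrow v_i / (1 - \beta_2^i)$ |
| $\quad w_i \leftarrow w_{i-1} - \eta \, \hat{m}_i / (\sqrt{\hat{v}_i} + \epsilon)$ |
| end while |
| return $w_i$ (Resulting parameters) |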

ADAM is a first-order method, so each iteration has a low computational cost. As the iterations proceed, the effective step size shrinks under the influence of the moment estimates, so the parameters change slowly near a minimum.
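A minimal Python sketch of Algorithm 2 follows, applied to a hypothetical quartic cost of our own choosing, $C(w) = w^4/4 - 4w^3/3 + 3w^2/2 + 9/4$ with $C'(w) = w(w-1)(w-3)$ (local minimum at $w = 0$, global minimum at $w = 3$). The starting point 0.5, the learning rate 0.1, and the iteration count are assumptions; $\beta_1$, $\beta_2$, and $\epsilon$ take the usual defaults. Because $m_0 = v_0 = 0$, the bias-corrected first step has a size of almost exactly $\eta$ in the direction of $-\operatorname{sign}(g_1)$.

```python
import math


def dC(w):
    """Derivative of the hypothetical cost C(w) = w^4/4 - 4w^3/3 + 3w^2/2 + 9/4."""
    return w**3 - 4 * w**2 + 3 * w


def adam(w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, iters=1000):
    """ADAM (Algorithm 2) for a scalar parameter; returns every iterate."""
    w, m, v = w0, 0.0, 0.0                       # m_0 = 0, v_0 = 0
    ws = [w]
    for i in range(1, iters + 1):
        g = dC(w)                                # g_i = C'(w_{i-1})
        m = beta1 * m + (1 - beta1) * g          # first momentum
        v = beta2 * v + (1 - beta2) * g * g      # second momentum
        m_hat = m / (1 - beta1**i)               # bias-corrected estimates
        v_hat = v / (1 - beta2**i)
        w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
        ws.append(w)
    return ws


traj = adam(0.5)
print(f"first step: {traj[0]} -> {traj[1]:.6f}, final w = {traj[-1]:.4f}")
```

With these settings the momentum carries the iterates past $w = 0$ and back, but they settle near the local minimum $w = 0$ rather than reaching $w = 3$, which matches the behavior of ADAM described in the numerical tests.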

3.3. AdaMax Method

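The AdaMax method is based on ADAM but replaces the second momentum with an exponentially weighted maximum of the gradient magnitudes,

$$u_i = \max\left(\beta_2 u_{i-1}, \lvert g_i \rvert\right), \qquad g_i = \nabla C(w_{i-1}), \quad u_0 = 0,$$

which is used in the update in place of $\sqrt{\hat{v}_i}$.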

The pseudocode of this method is given in Algorithm 3.

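| Algorithm 3: Pseudocode of AdaMax. |
| --- |
| Require: $\eta$: Learning rate |
| Require: $\beta_1, \beta_2 \in [0, 1)$: Exponential decay rates for the moment estimates |
| Require: $C(w)$: Cost function with parameters $w$ |
| Require: $w_0$: Initial parameter vector |
| $m_0 \leftarrow 0$, $u_0 \leftarrow 0$ (Initialize first momentum and exponentially weighted infinity norm) |
| $i \leftarrow 0$ (Initialize time step) |
| while $w_i$ not converged do |
| $\quad i \leftarrow i + 1$ |
| $\quad g_i \leftarrow \nabla C(w_{i-1})$ |
| $\quad m_i \leftarrow \beta_1 m_{i-1} + (1 - \beta_1) g_i$ |
| $\quad u_i \leftarrow \max(\beta_2 u_{i-1}, \lvert g_i \rvert)$ |
| $\quad w_i \leftarrow w_{i-1} - \dfrac{\eta}{1 - \beta_1^i} \, \dfrac{m_i}{u_i}$ |
| end while |
| return $w_i$ (Resulting parameters) |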
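Algorithm 3 can be sketched the same way. As before, the quartic cost $C(w) = w^4/4 - 4w^3/3 + 3w^2/2 + 9/4$ (with $C'(w) = w(w-1)(w-3)$), the starting point 0.5, and the learning rate 0.1 are our assumptions. The small constant `tiny` in the denominator is also our addition, guarding against $u_i = 0$ when the very first gradient is zero; it is not part of Algorithm 3.

```python
def dC(w):
    """Derivative of the hypothetical cost C(w) = w^4/4 - 4w^3/3 + 3w^2/2 + 9/4."""
    return w**3 - 4 * w**2 + 3 * w


def adamax(w0, lr=0.1, beta1=0.9, beta2=0.999, iters=1000, tiny=1e-12):
    """AdaMax (Algorithm 3) for a scalar parameter; returns every iterate."""
    w, m, u = w0, 0.0, 0.0                       # m_0 = 0, u_0 = 0
    ws = [w]
    for i in range(1, iters + 1):
        g = dC(w)                                # g_i = C'(w_{i-1})
        m = beta1 * m + (1 - beta1) * g          # first momentum
        u = max(beta2 * u, abs(g))               # exponentially weighted infinity norm
        # `tiny` is our guard against u == 0; Algorithm 3 itself has no such term.
        w = w - (lr / (1 - beta1**i)) * m / (u + tiny)
        ws.append(w)
    return ws


traj = adamax(0.5)
print(f"first step: {traj[0]} -> {traj[1]:.6f}, final w = {traj[-1]:.4f}")
```

Since $u_i$ only decays by the factor $\beta_2$ per step, it retains the largest recent gradient magnitude; this keeps the step size bounded, and here the iterates again settle near the local minimum $w = 0$.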

4. The Proposed Method

The main idea combines a fixed-point iteration with a condition on the cost function and a condition on the first derivative of the cost function. We define an auxiliary function as follows:

where is chosen to be positive or negative according to the initial sign of . Using , we keep w changing while the value of is large, even if w has fallen into a local minimum.

Optimization

The iteration method is

where

, and .

Theorem 1.

The sequence generated by the iteration method in (1) converges.

Proof.

Here, is introduced only to avoid division by zero and is otherwise treated as 0. A simple calculation yields the following relation:

The relation between and is derived in Corollary 1. From Equation (3), we have

Using the relation between and ,

Here, since is used only to prevent division by zero, it can be treated as zero in the calculation. For a sufficiently large number , a sufficiently small value , and by Taylor's theorem, we obtain

where

and is negligibly small. This holds because we set to a very small value.

Looking at the relationship between and , and using (4),

We have

Therefore,

If the initial condition is defined as a fairly large value, the following equation can be obtained:

where

Through a similar process, we have

where is and can remain constant because of a sufficiently small value.

If the initial condition is defined as a fairly large value, the following equation can be obtained:

where

Assuming that the initial values and are sufficiently large, Equation (3) can be changed as follows:

For to converge (that is, to be a Cauchy sequence), the following condition must be satisfied:

To satisfy this condition, the larger the value of is, the better. However, if the value of is larger than 1, the value of becomes negative and complex values would have to be computed. Hence, should be as close to 1 as possible. Conversely, the smaller the value of is, the better. However, if the value of is small, converges quickly and changes little, so the desired limit cannot be reached. Hence, should also be as close to 1 as possible. Therefore, it is better to choose in the range . Typically, is 0.9 and is 0.999, which gives . As the iteration continues, the value of converges to zero. Therefore, after a sufficiently large number of iterations (greater than ), and are equal. □

Corollary 1.

The relationship between and is

Proof.

Using the general Cauchy–Schwarz inequality, we have

□

Theorem 2.

The limit of satisfies that .

Proof.

When the limit of is , using (5) and continuity of , the following equation is obtained as

Since is used only to keep the denominator from becoming zero, the denominator of the above equation is nonzero, and we get . Since , , and converges to assuming that * is a fairly large number, and are close, and can be regarded as 0 after , where is an appropriately large integer. Therefore, we obtain

Hence, the limit of the sequence is a parameter value at which the cost function is close to zero. □

5. Numerical Tests

In these numerical tests, we perform several experiments to demonstrate the effectiveness of the proposed method. The GD method, the ADAM method, the AdaMax method, and the proposed method are compared in each experiment. Unless otherwise stated, techniques such as mini-batching, multiple epochs, and dropout are not used. The parameters $\beta_1$ and $\beta_2$ used in ADAM and AdaMax are fixed at 0.9 and 0.999, respectively, and $\epsilon$ is set to $10^{-8}$. These are the default values of $\beta_1$, $\beta_2$, and $\epsilon$.

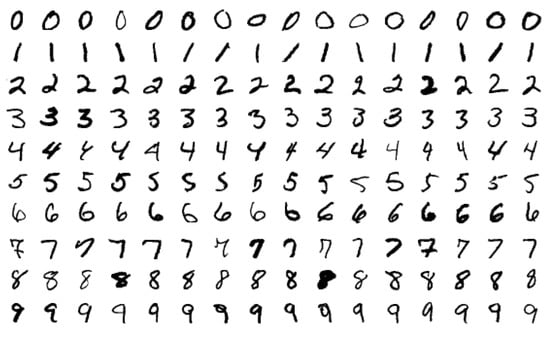

5.1. One Variable Non-Convex Function Test

Since the cost function is non-convex in general, in this experiment we test each method on a simple non-convex function. The cost function is and has the global minimum at . The starting point, , is initialized at and the number of iterations is 100. The cost function is divided by 800 because it is a polynomial of degree 4: without this scaling, its values become too large and far from those of a realistic cost function.

Figure 2 shows how the cost function changes with w.

Figure 2.

Cost function with one local minimum.

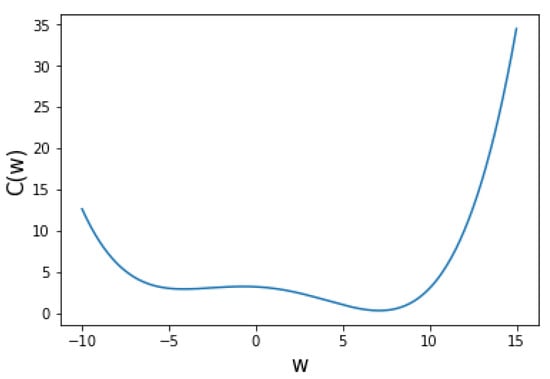

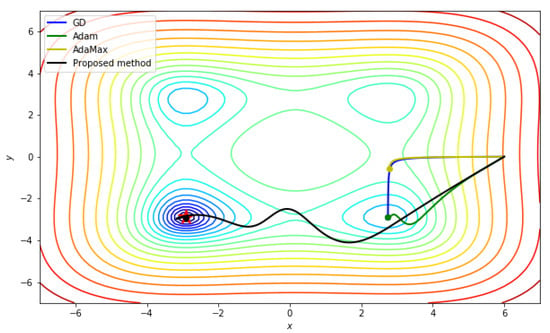

Figure 3 shows the iterations of the four methods over with . In this experiment, GD, ADAM, and AdaMax fall into the local minimum and do not move from it, whereas the proposed method passes the local minimum and settles at the global minimum. Although the global minimum is near 7, the other methods stay near .

Figure 3.

For comparison with the other schemes, the learning rate is 0.2, and the , , and used in the proposed method are 0.95, 0.9999, and , respectively.

5.2. Two Variables Non-Convex Function Test

In this section, we experiment with three cost functions of two variables. The first and second experiments seek the global minimum of the Beale function and the Styblinski–Tang function, respectively, and the third experiment tests whether the proposed method works effectively near a saddle point.

5.2.1. Beale function

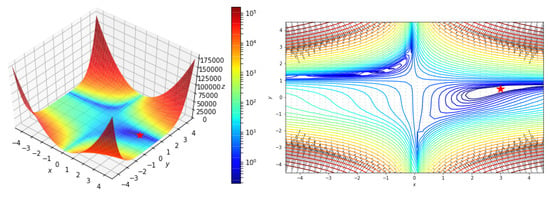

The Beale function is defined by

$$f(x, y) = (1.5 - x + xy)^2 + (2.25 - x + xy^2)^2 + (2.625 - x + xy^3)^2$$

and has the global minimum at (3, 0.5). Figure 4 shows the Beale function.

Figure 4.

Beale Function.
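The Beale function and its analytic gradient can be written down directly; the sketch below, with the hypothetical helper names `beale` and `beale_grad`, checks that the gradient vanishes at (3, 0.5) and evaluates it at the starting point (2, 2) used in Figure 5. This gives one way to see why GD needs a smaller learning rate than the adaptive methods in this test.

```python
import numpy as np


def beale(x, y):
    """Beale function: sum of three squared residuals; global minimum 0 at (3, 0.5)."""
    return (1.5 - x + x * y) ** 2 + (2.25 - x + x * y**2) ** 2 + (2.625 - x + x * y**3) ** 2


def beale_grad(x, y):
    """Analytic gradient (df/dx, df/dy) obtained by the chain rule on each residual."""
    r1 = 1.5 - x + x * y
    r2 = 2.25 - x + x * y**2
    r3 = 2.625 - x + x * y**3
    dfdx = 2 * r1 * (y - 1) + 2 * r2 * (y**2 - 1) + 2 * r3 * (y**3 - 1)
    dfdy = 2 * r1 * x + 2 * r2 * (2 * x * y) + 2 * r3 * (3 * x * y**2)
    return np.array([dfdx, dfdy])


print(beale(3.0, 0.5), beale_grad(3.0, 0.5))   # value and gradient at the global minimum
g = beale_grad(2.0, 2.0)
print(beale(2.0, 2.0), g, np.linalg.norm(g))   # gradient at the starting point (2, 2)
```

At (2, 2) the gradient is (289.25, 944), with norm about 987, so a plain GD step with learning rate 0.1 would move the weights by roughly 99 units. ADAM, AdaMax, and the proposed method normalize the step by the moment estimates and do not suffer from this.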

Figure 5 shows the results of each method. The learning rate of GD was set to , which differs from that of the other methods: the GD step is proportional to the gradient, which is very large here, so with the common learning rate the weights of GD diverge. We confirm that all methods converge well, because this function is convex around the given starting point.

Figure 5.

Result of the Beale function with an initial point of (2, 2). The number of iterations is 1000. The learning rate is 0.1, and the , , and used in the proposed method are 0.9, 0.999, and , respectively. The learning rate of GD was set to .

Table 1 shows how the weights of each method change over the iterations. As the table shows, the proposed method performs best.

Table 1.

Change of weights of each method.

To examine a different starting point, we apply the methods to the same cost function starting from , with hyperparameter and 50,000 iterations.

Figure 6 shows the results of each method. In this experiment, we confirm that GD, ADAM, and AdaMax fall into a local minimum and stop there, whereas the proposed method reaches the global minimum effectively.

Figure 6.

Result of Beale function with initial point .

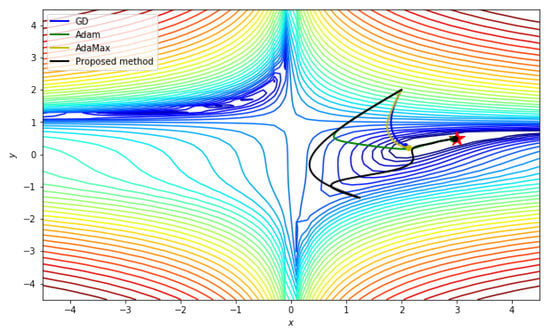

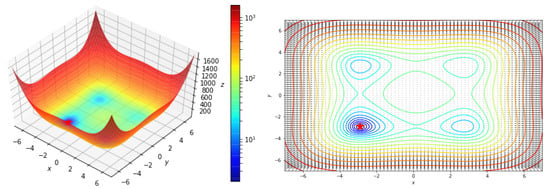

5.2.2. Styblinski–Tang function

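The Styblinski–Tang function is defined by

$$f(x, y) = \frac{1}{2}\left[(x^4 - 16x^2 + 5x) + (y^4 - 16y^2 + 5y)\right]$$

and has the global minimum at approximately $(-2.903534, -2.903534)$.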

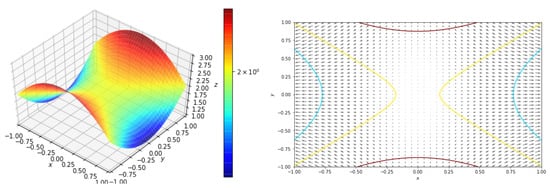

Figure 7 shows the Styblinski–Tang function. In this experiment, we present a result with the starting point . Note that a local minimum lies between and the global minimum.

Figure 7.

Styblinski–Tang Function.
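Because the Styblinski–Tang function is a sum of identical one-dimensional terms $g(t) = \tfrac{1}{2}(t^4 - 16t^2 + 5t)$, its minima can be located by solving the cubic $g'(t) = 2t^3 - 16t + 2.5 = 0$. The sketch below does this with NumPy; the name `styblinski_tang` is a hypothetical helper. It shows that each coordinate has two minima, one near $-2.9035$ (global) and one near $2.7468$ (local), so the two-variable function has one global and several local minima.

```python
import numpy as np


def styblinski_tang(x):
    """Styblinski-Tang function f(x) = 0.5 * sum_i (x_i^4 - 16 x_i^2 + 5 x_i)."""
    x = np.asarray(x, dtype=float)
    return 0.5 * np.sum(x**4 - 16 * x**2 + 5 * x)


# The function is a sum of identical one-dimensional terms g(t) = 0.5 * (t^4 - 16 t^2 + 5 t),
# so its critical points are combinations of the roots of g'(t) = 2 t^3 - 16 t + 2.5.
crit = np.sort(np.roots([2.0, 0.0, -16.0, 2.5]).real)

# g''(t) = 6 t^2 - 16 > 0 identifies the roots that are minima of g.
minima = [t for t in crit if 6 * t**2 - 16 > 0]

for a in minima:
    for b in minima:
        print(f"({a:+.4f}, {b:+.4f}): f = {styblinski_tang([a, b]):.4f}")
```

The printed table lists the four minima of the two-variable function; only the combination with both coordinates near $-2.9035$ attains the global value of about $-78.33$, and a gradient method started on the positive side tends to be attracted to one of the others.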

Figure 8 shows the results of each method. Only the proposed method finds the global minimum; the other methods cannot escape a local minimum.

Figure 8.

Result of the Styblinski–Tang function with initial point . The learning rate is 0.1, the , , and used in the proposed method are 0.95, 0.9999, and 0.2, respectively, and the learning rate of GD was set to .

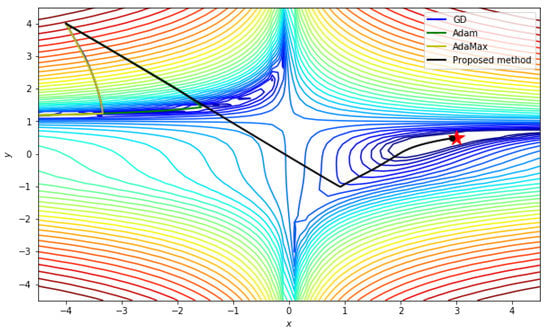

5.2.3. Function with a Saddle Point

The cost function is shown in Figure 9. The Hessian matrix of this cost function is , which is indefinite (it has one positive and one negative eigenvalue), so this cost function has a saddle point at (0, 0).

Figure 9.

Cost function .

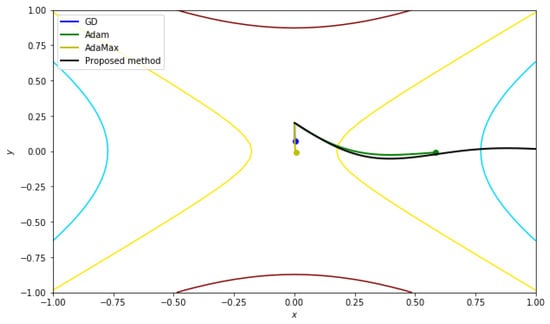

In this experiment, we present results based on two different starting points. The first starting point is .

Figure 10 and Table 2 show the results of each method. In this experiment, the proposed method also changes the parameters more rapidly than the other three methods near the saddle point.

Figure 10.

The results near the saddle point with an initial point of (0.001, 2). The iteration number is 50, the learning rate is , and the , , and used in the proposed method are 0.95, 0.9999, and , respectively.

Table 2.

Change values of each method.

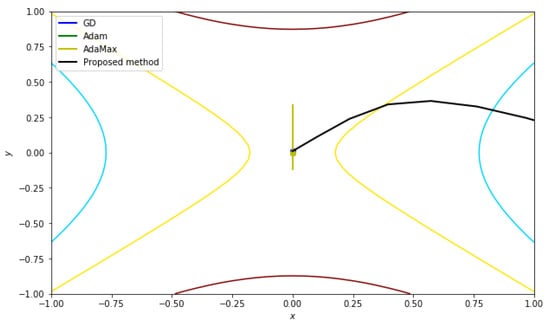

We then performed an experiment with the same cost function, changing the starting point to (0, 0.01) and the number of iterations to 100. The hyperparameters are the same as above.

Figure 11 shows the result of this experiment with an initial point of (0, 0.01). Because the first coordinate of the initial point is 0, the other methods fail to escape the saddle point.

Figure 11.

The result of the saddle point with an initial point of (0, 0.01).
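The mechanism behind this failure can be illustrated on a stand-in function. The sketch below uses the hypothetical saddle $C(x, y) = y^2 - x^2$, our own choice and not necessarily the cost function of Figure 9, together with plain GD and an assumed learning rate of 0.1. Its Hessian has eigenvalues $-2$ and $2$, so $(0, 0)$ is a saddle point. When the initial $x$ is exactly 0, the $x$-component of the gradient is exactly 0 at every step, so the iterates slide down the $y$-direction into the saddle point and stay there.

```python
import numpy as np


def grad(p):
    """Gradient of the hypothetical saddle C(x, y) = y^2 - x^2."""
    x, y = p
    return np.array([-2.0 * x, 2.0 * y])


# Constant Hessian of C: eigenvalues of opposite sign mean (0, 0) is a saddle point.
hessian = np.array([[-2.0, 0.0], [0.0, 2.0]])
eigs = np.linalg.eigvalsh(hessian)


def gd(p0, lr=0.1, iters=100):
    """Plain gradient descent on C; returns the final point."""
    p = np.array(p0, dtype=float)
    for _ in range(iters):
        p = p - lr * grad(p)
    return p


stuck = gd((0.0, 0.01))                # dC/dx = -2x stays exactly 0 when x starts at 0
escaped = gd((0.001, 2.0), iters=50)   # any nonzero x grows by a factor 1.2 per step
print("Hessian eigenvalues:", eigs)
print("start (0, 0.01)  ->", stuck)
print("start (0.001, 2) ->", escaped)
```

With a learning rate of 0.1, each GD step multiplies $x$ by 1.2 and $y$ by 0.8, so a tiny nonzero $x$ eventually escapes, while $x = 0$ never does. An update that also depends on the nonzero cost value, as in the proposed method, is not subject to this exact cancellation of the gradient.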

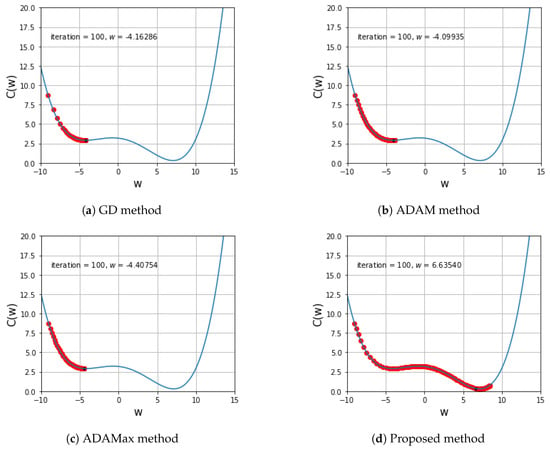

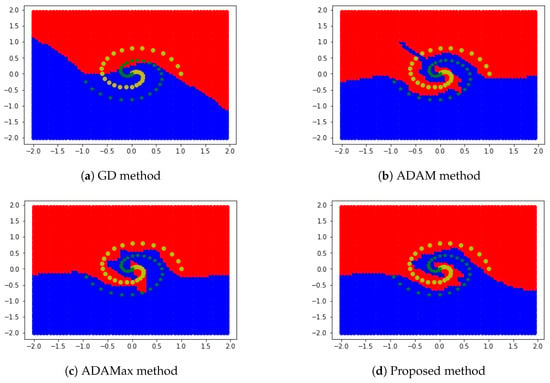

5.3. Two-Dimensional Region Segmentation Test

To examine region separation in a more complicated setting, we consider a region separation problem shaped like a whirlwind (spiral). Labels 0 and 1 are assigned along the arms of the whirlwind, and each method trains a network to separate the regions. Table 3b gives the pseudocode for generating the training dataset for this experiment, and Table 3a visualizes the dataset.

Table 3.

Learning dataset.

To solve this problem, we use a neural network with two layers of 25 neurons each. First, the neural network is trained with each method. After that, the domain is divided into 60 equal sections along each axis, and each of the resulting 3600 (60 × 60) grid points is classified to determine the region to which it belongs.
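The following sketch shows one way to build a two-class spiral dataset and the 60 × 60 evaluation grid described above. The spiral parametrization, noise level, number of points, and the domain $[-1, 1]^2$ are our assumptions and need not match Table 3b; `make_spirals` and `evaluation_grid` are hypothetical helper names. The grid uses one point at the centre of each cell, so dividing each axis into 60 equal sections yields exactly 3600 query points.

```python
import numpy as np


def make_spirals(n_per_class=200, turns=1.5, noise=0.02, seed=0):
    """Two interleaved spiral arms labelled 0 and 1 (hypothetical generator)."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.05, 1.0, n_per_class)    # position along each arm; radius grows with t
    angle = 2.0 * np.pi * turns * t
    arm0 = np.stack([t * np.cos(angle), t * np.sin(angle)], axis=1)
    arm1 = -arm0                               # second arm: arm0 rotated by 180 degrees
    X = np.concatenate([arm0, arm1]) + noise * rng.standard_normal((2 * n_per_class, 2))
    y = np.concatenate([np.zeros(n_per_class), np.ones(n_per_class)])
    return X, y


def evaluation_grid(lo=-1.0, hi=1.0, n=60):
    """Centres of an n x n partition of [lo, hi]^2, i.e. n * n query points."""
    centres = lo + (np.arange(n) + 0.5) * (hi - lo) / n
    gx, gy = np.meshgrid(centres, centres)
    return np.stack([gx.ravel(), gy.ravel()], axis=1)


X, y = make_spirals()
grid = evaluation_grid()
print(X.shape, y.shape, grid.shape)
# After training, each grid point would be assigned the class predicted by the network,
# e.g. labels = model_output(grid) > 0.5, where model_output is a hypothetical trained model.
```

Plotting the predicted label of every grid point produces region maps of the kind compared in Figure 12.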

The results are presented in Figure 12. Compared with the other schemes, the proposed method separates the regions more faithfully along the direction of the whirlwind.

Figure 12.

Comparing proposed scheme with other schemes.

Although the network structure is identical, each method produces a different result, which reflects how accurately each method learns the location of the regions.

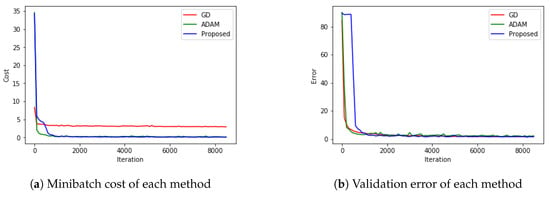

5.4. MNIST with CNN

In the previous sections, we considered simple classification problems using ANNs. Here, we perform a more practical experiment using a CNN (convolutional neural network). To solve more difficult and complex problems, the number of layers in an ANN is usually increased. However, as the number of layers increases, the number of parameters also increases, and so do the memory and running time required. For this reason, CNNs were introduced, and their performance was demonstrated in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [11]. In this experiment, we examine how our method works with a CNN. We used TensorFlow and, for a fair comparison, the CNN-based MNIST example, one of the basic examples provided with TensorFlow (see https://github.com/tensorflow/models/tree/master/tutorials/image/mnist). We ran 8600 iterations and recorded the minibatch cost and validation error of each method. The results are shown in Figure 13; all methods reduce both the cost and the error.

Figure 13.

Comparing the proposed scheme with other schemes.

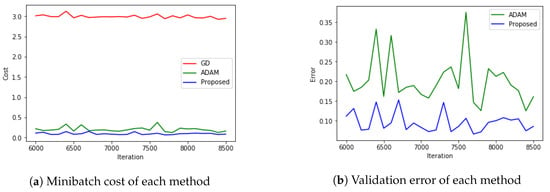

For further investigation, Figure 14 magnifies the data of Figure 13 from the 6000th iteration onward.

Figure 14.

Comparing the proposed scheme with other schemes with an iteration number over 6000.

Figure 14a shows that GD converges the slowest and that the proposed method is more accurate than ADAM. Figure 14b shows the accuracies of the proposed method and ADAM measured on the test data. These results indicate that, in this experiment, the proposed method classifies the images better than ADAM.

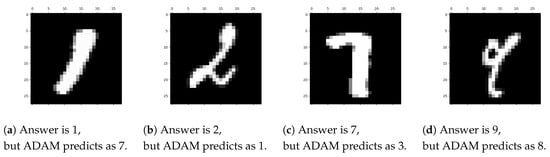

Figure 15 shows four sample digits from the MNIST test data that the proposed method classifies effectively but that ADAM failed to classify in this experiment.

Figure 15.

Examples that the proposed method predicted correctly, while the ADAM method does not.

5.5. CIFAR-10 with RESNET

In Section 5.4, we showed that the proposed method is more effective than ADAM for a CNN on the MNIST dataset. Each MNIST image is a 28 × 28 grayscale image. In this section, we show that the proposed method is also more effective than ADAM on a dataset larger than MNIST, namely CIFAR10 (https://www.cs.toronto.edu/~kriz/cifar.html). CIFAR10 is a popular dataset of 32 × 32 RGB images in 10 categories (airplane, automobile, bird, cat, deer, dog, frog, horse, ship, and truck). It consists of 50,000 training images and 10,000 test images. As in Section 5.4, we used the example code provided by TensorFlow, with the same model and the same hyperparameter settings (see https://github.com/tensorflow/models/tree/master/tutorials/image/cifar10_estimator). Whereas Section 5.4 used a simple CNN, this subsection uses RESNET (residual network), a more complex and higher-performing CNN-based network. RESNET is a well-known network that performed well in the ILSVRC, and we used RESNET 44 in this experiment.

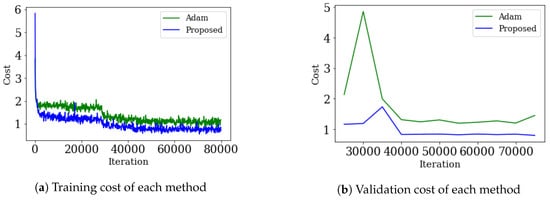

Figure 16 compares the results of training the RESNET model on the CIFAR10 dataset with the proposed method and with ADAM. In Figure 16a, the training cost of each method was computed and plotted every 100 iterations. In Figure 16b, the validation cost of each method was computed and plotted every 5000 iterations. These results show that the proposed method works effectively for complex networks as well as simple ones.

Figure 16.

Result of the CIFAR10 dataset using the RESNET 44 model. The following parameters are default values: iterations: 80,000; batch size: 128; weight decay: ; learning rate: 0.1; batch norm decay: 0.997.

6. Conclusions

In this paper, we proposed a new optimization method based on ADAM. Many existing optimization methods (including ADAM) may not work properly depending on the initial point. In our experiments, the proposed method found the global minimum more reliably than the other methods, even when there was a local minimum near the starting point, and it showed better overall performance. We tested our method only on models for image datasets such as MNIST and CIFAR10. In future work, we will test our method on other models, such as RNN models for time-series prediction and models for natural language processing.

Author Contributions

Conceptualization, D.Y.; Data curation, S.J.; Formal analysis, D.Y. and J.A.; Funding acquisition, D.Y.; Investigation, D.Y.; Methodology, D.Y. and J.A.; Project administration, D.Y.; Resources, S.J.; Software, S.J.; Supervision, D.Y. and J.A.; Validation, J.A. and S.J.; Visualization, S.J.; Writing—original draft, D.Y.; Writing—review & editing, S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the basic science research program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology (grant number NRF-2017R1E1A1A03070311). In addition, the second author, Ahn, was supported by the research fund of Chungnam National University.

Acknowledgments

We sincerely thank anonymous reviewers whose suggestions helped improve and clarify this manuscript greatly.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Deng, L.; Li, J.; Huang, J.; Yao, K.; Yu, D.; Seide, F.; Seltzer, M.L.; Zweig, G.; He, X.; Williams, J.; et al. Recent advances in deep learning for speech research at Microsoft. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2013), Vancouver, BC, Canada, 26–31 May 2013.
2. Graves, A. Generating sequences with recurrent neural networks. arXiv 2013, arXiv:1308.0850.
3. Graves, A.; Mohamed, A.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 International Conference on Acoustics, Speech and Signal Processing (ICASSP 2013), Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.
4. Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.
5. Tieleman, T.; Hinton, G.E. Lecture 6.5-RMSProp, COURSERA: Neural Networks for Machine Learning; Technical Report; COURSERA: Mountain View, CA, USA, 2012.
6. Dean, J.; Corrado, G.; Monga, R.; Chen, K.; Devin, M.; Le, Q.; Mao, M.; Ranzato, M.; Senior, A.; Tucker, P.; et al. Large scale distributed deep networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS 2012), Lake Tahoe, NV, USA, 3 December 2012; pp. 1223–1231.
7. Jaitly, N.; Nguyen, P.; Senior, A.; Vanhoucke, V. Application of pretrained deep neural networks to large vocabulary speech recognition. In Proceedings of INTERSPEECH 2012, 13th Annual Conference of the International Speech Communication Association (ISCA 2012), Portland, OR, USA, 9–13 September 2012; pp. 2578–2581.
8. Amari, S. Natural gradient works efficiently in learning. Neural Comput. 1998, 10, 251–276.
9. Duchi, J.; Hazan, E.; Singer, Y. Adaptive subgradient methods for online learning and stochastic optimization. J. Mach. Learn. Res. 2011, 12, 2121–2159.
10. Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. Signal Process. Mag. 2012, 29, 82–97.
11. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of Neural Information Processing Systems (NIPS 2012), Lake Tahoe, NV, USA, 3 December 2012; pp. 1097–1105.
12. Pascanu, R.; Bengio, Y. Revisiting natural gradient for deep networks. arXiv 2013, arXiv:1301.3584.
13. Sutskever, I.; Martens, J.; Dahl, G.; Hinton, G.E. On the importance of initialization and momentum in deep learning. In Proceedings of the 30th International Conference on Machine Learning (ICML 2013), Atlanta, GA, USA, 16–21 June 2013; PMLR 28(3), pp. 1139–1147.
14. Kochenderfer, M.; Wheeler, T. Algorithms for Optimization; The MIT Press: Cambridge, MA, USA; London, UK, 2019.
15. Kingma, D.P.; Ba, J. ADAM: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015.
16. Bottou, L.; Curtis, F.E.; Nocedal, J. Optimization Methods for Large-Scale Machine Learning. SIAM Rev. 2018, 60, 223–311.
17. Roux, N.L.; Fitzgibbon, A.W. A fast natural Newton method. In Proceedings of the 27th International Conference on Machine Learning (ICML 2010), Haifa, Israel, 21–24 June 2010; pp. 623–630.
18. Sohl-Dickstein, J.; Poole, B.; Ganguli, S. Fast large-scale optimization by unifying stochastic gradient and quasi-Newton methods. In Proceedings of the 31st International Conference on Machine Learning (ICML 2014), Beijing, China, 21–24 June 2014; pp. 604–612.
19. Zeiler, M.D. ADADELTA: An adaptive learning rate method. arXiv 2012, arXiv:1212.5701.
20. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.
21. Becker, S.; LeCun, Y. Improving the Convergence of Back-Propagation Learning with Second Order Methods; Technical Report; Department of Computer Science, University of Toronto: Toronto, ON, Canada, 1988.
22. Kelley, C.T. Iterative methods for linear and nonlinear equations. In Frontiers in Applied Mathematics; SIAM: Philadelphia, PA, USA, 1995; Volume 16.
23. Reddi, S.J.; Kale, S.; Kumar, S. On the Convergence of ADAM and Beyond. arXiv 2019, arXiv:1904.09237.
24. Ruppert, D. Efficient Estimations from a Slowly Convergent Robbins-Monro Process; Technical Report; Cornell University Operations Research and Industrial Engineering: Ithaca, NY, USA, 1988.
25. Zinkevich, M. Online Convex Programming and Generalized Infinitesimal Gradient Ascent. In Proceedings of the Twentieth International Conference on Machine Learning (ICML 2003), Washington, DC, USA, 21–24 August 2003; pp. 928–935.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).