3.1. A Classical Limit State Function

The two approaches described in the previous section are applied here to the simplest classical LSF

where

R and

L can be considered, in a broad sense, as random variables for the capacity and demand of a system element. For the sake of simplicity and illustration purposes, units are skipped and both random variables are assumed independent and characterized with lognormal distributions, with mean values and standard deviations

mR = 10,

mL = 5.6,

σR = 1, and

σL = 0.75 for the capacity and demand, respectively. These values are arbitrary, except by the fact that they lead to a reliability index equal to practically 3.5, which is a common reference for code calibration and that can be computed with the following expression [

21,

22]

where

νR and

νL are the coefficients of variation of

R and

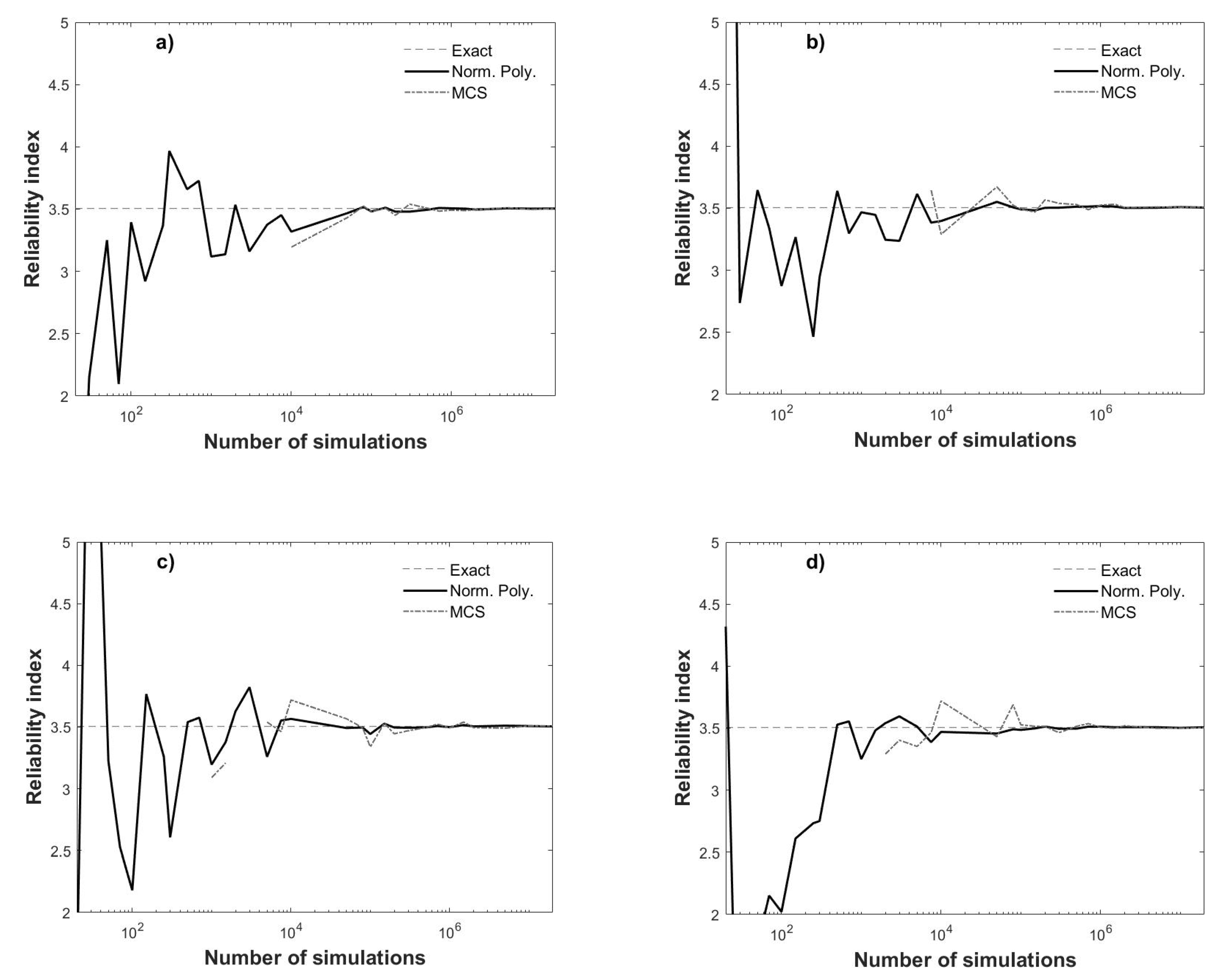

L, respectively. This reliability index is shown in

Figure 1a (dashed line), as a reference to inspect how close are the

βs obtained by crude Monte Carlo simulations as a function of the number of simulations (shown in logarithmic scale in the horizontal axis from 2 × 10

1 to 2 × 10

7) to the exact value. The reliability index for Equation (15) using Monte Carlo simulations (dashed-dotted line in

Figure 1a) is obtained by plugging into Equation (7) the following probability of failure

which is simply the ratio of number of failures,

nfail, to the total number of simulations. The latter (i.e.,

nsim) is also the number of fractile constraints when the reliability index is computed with the normality polynomial approach, also depicted in

Figure 1a (solid line).
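As an illustration of the two reference calculations described above, the following Python sketch evaluates the closed-form reliability index for independent lognormal R and L and a crude Monte Carlo estimate. It is not the authors' MATLAB implementation. Two assumptions are made: failure is taken as R ≤ L (capacity not exceeding demand), and Equation (7), which is not reproduced in this section, is assumed to be the usual relation β = −Φ⁻¹(Pf). The function names are hypothetical.

```python
import math
import random
from statistics import NormalDist


def lognormal_params(mean, std):
    """Return (lambda, zeta): mean and standard deviation of ln(X) for a lognormal X."""
    zeta2 = math.log(1.0 + (std / mean) ** 2)
    return math.log(mean) - 0.5 * zeta2, math.sqrt(zeta2)


def beta_exact(m_r, s_r, m_l, s_l):
    """Closed-form reliability index for independent lognormal R and L."""
    lam_r, zeta_r = lognormal_params(m_r, s_r)
    lam_l, zeta_l = lognormal_params(m_l, s_l)
    return (lam_r - lam_l) / math.hypot(zeta_r, zeta_l)


def beta_mcs(m_r, s_r, m_l, s_l, n_sim, seed=0):
    """Crude Monte Carlo estimate; returns None when no failure is sampled."""
    rng = random.Random(seed)
    lam_r, zeta_r = lognormal_params(m_r, s_r)
    lam_l, zeta_l = lognormal_params(m_l, s_l)
    # Assumption: a failure is counted whenever the sampled capacity R <= demand L.
    n_fail = sum(
        rng.lognormvariate(lam_r, zeta_r) <= rng.lognormvariate(lam_l, zeta_l)
        for _ in range(n_sim)
    )
    if n_fail == 0:
        return None  # the index cannot be estimated without at least one failure
    # Assumption: Equation (7) is beta = -Phi^{-1}(P_f), with P_f = n_fail / n_sim.
    return -NormalDist().inv_cdf(n_fail / n_sim)


b_exact = beta_exact(10.0, 1.0, 5.6, 0.75)
b_mcs = beta_mcs(10.0, 1.0, 5.6, 0.75, n_sim=200_000)
print(f"closed form: {b_exact:.4f}, crude MCS: {b_mcs}")
```

The `None` return mirrors the situation described in the text, in which the crude Monte Carlo estimate is undefined because not a single failure is obtained.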

Additional runs are shown in Figure 1b–d, which indicate that the results differ from run to run, depending on the random numbers generated in each, but stabilize if enough simulations are performed or enough fractile constraints are used. Other observations from Figure 1 are that β cannot always be computed with the Monte Carlo simulations (MCS), because sometimes not a single failure is obtained, whereas it can always be computed with normality polynomials, although significant deviations are observed for a limited number of simulations; that the fitted normality polynomials tend to deviate less from the exact reliability index than the MCS for small nsim; and that the loss of precision may not be large for a relatively small nsim (e.g., 1 × 10³).

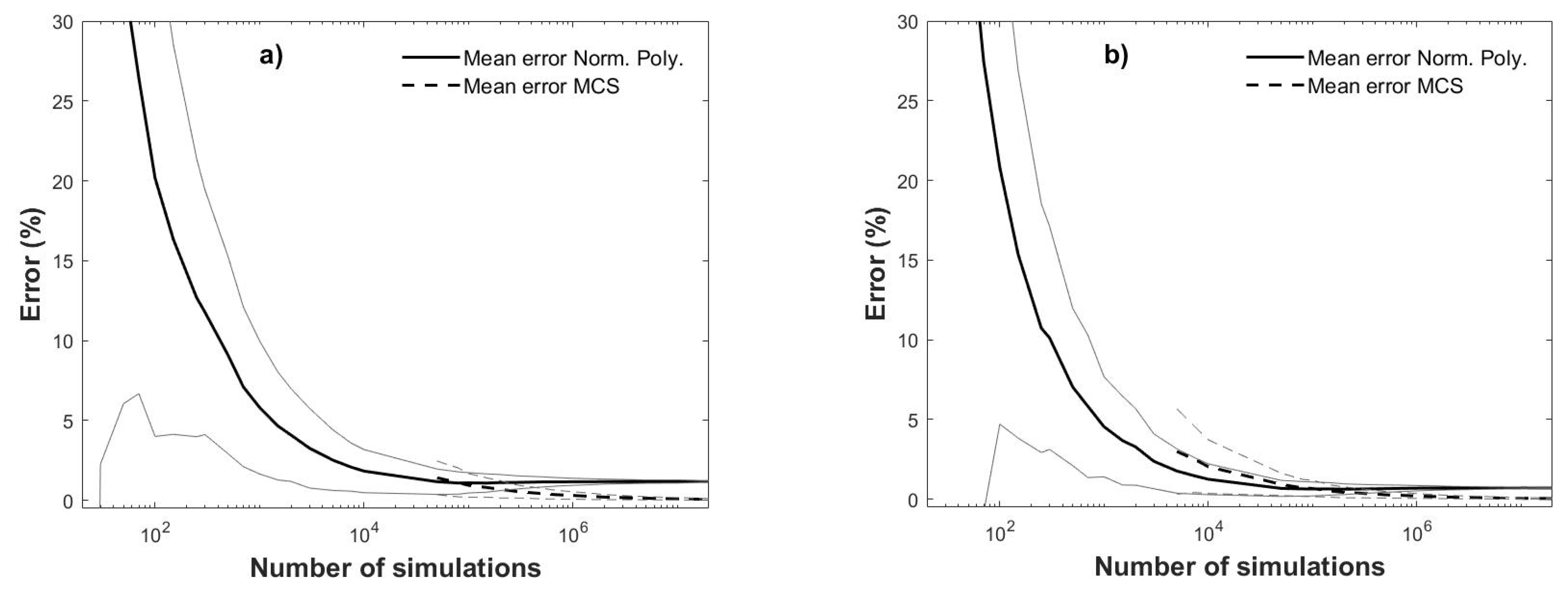

To quantitatively inspect these deviations, βRE is used as the benchmark to assess the exactness of the methods employed (i.e., normality polynomials and MCS) in terms of the relative percentage error given by

$$\varepsilon = \left|\frac{\beta_{bench}-\beta_{oth}}{\beta_{bench}}\right| \times 100 \tag{18}$$

where βbench denotes the reliability index considered as benchmark and βoth is the reliability index computed with any other method. A total of 1000 runs are performed for each nsim, and ε is computed for each run. Then, the mean error and its uncertainty (the unbiased standard deviation) are computed and plotted over the whole range of nsim in Figure 2 (solid and dashed lines for the normality polynomial and MCS mean errors, respectively), where the mean errors ± one standard deviation are also depicted with grey lines.
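The error statistics described above can be sketched in a few lines of Python. The sketch assumes that the relative percentage error is taken in absolute value, and the three reliability indices in the usage line are made-up numbers for illustration only, not results from the study.

```python
from statistics import mean, stdev


def relative_error_percent(beta_bench, beta_other):
    """Relative percentage error of beta_other with respect to beta_bench."""
    # Assumption: the error is taken in absolute value.
    return abs(beta_bench - beta_other) / beta_bench * 100.0


def error_statistics(beta_bench, betas):
    """Mean and unbiased (n - 1) standard deviation of the error over several runs."""
    errors = [relative_error_percent(beta_bench, b) for b in betas]
    return mean(errors), stdev(errors)


# Hypothetical reliability indices from three runs with the same n_sim.
mu_eps, sigma_eps = error_statistics(3.5, [3.4, 3.6, 3.5])
print(f"mean error = {mu_eps:.3f}%, standard deviation = {sigma_eps:.3f}%")
```

`statistics.stdev` divides by n − 1, which corresponds to the unbiased standard deviation mentioned in the text.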

Figure 2 shows that it is not always possible to compute the statistics for the MCS; this happens when not a single failure is reported in one or more of the 1000 runs for a given nsim (at least 5 × 10⁴ simulations are necessary). This is not a problem when using the fitted polynomials. Additionally, the MCS approach always tends to larger mean errors and standard deviations as the number of simulations decreases. This makes the normality polynomial approach more adequate for estimating the reliability indices; however, for few simulations the errors are too large. Nevertheless, the designer could decide which precision level (quantitatively) he or she is willing to accept, using information like that in Figure 2 as an aid (and reduce the number of required simulations as a function of the accepted error).
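The threshold of about 5 × 10⁴ simulations can be rationalized with a short calculation. Assuming independent samples and a failure probability Pf = Φ(−β) with β ≈ 3.5, the sketch below computes the probability that at least one of 1000 runs records no failure at all. This is an illustrative back-of-the-envelope check under those assumptions, not part of the original study.

```python
from statistics import NormalDist


def prob_some_run_without_failure(beta, n_sim, n_runs):
    """Probability that at least one of n_runs MCS runs records zero failures."""
    p_f = NormalDist().cdf(-beta)       # failure probability of a single sample
    p_zero = (1.0 - p_f) ** n_sim       # one run of n_sim samples with no failure
    return 1.0 - (1.0 - p_zero) ** n_runs


for n_sim in (20_000, 50_000, 100_000):
    p = prob_some_run_without_failure(3.5, n_sim, 1000)
    print(f"n_sim = {n_sim:>7}: P(some run has no failure) = {p:.4f}")
```

Under these assumptions, a run without failures is almost certain among 1000 runs of 2 × 10⁴ simulations but unlikely for 5 × 10⁴, which is in line with the threshold reported in the text.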

If desired, the curves in Figure 2 could be cast as mathematical expressions. For instance, the following power equations fit very well the mean (με) and standard deviation (σε) of the error in Figure 2 (fitted from 2.5 × 10² simulations onward)

If the power in Equations (19) and (20) is assumed to be −0.5 in both expressions, the coefficient of variation of the error is νε ≈ 150/250 ≈ 0.6, i.e., it is constant and independent of the number of simulations; the actual νε obtained from the 1000 runs does exhibit such a roughly constant behavior for this case, except that it is ≈0.7 (the difference is related to the different actual powers in Equations (19) and (20)). Power equations like Equations (19) and (20) can be linearized by taking logarithms of both sides. Therefore, if the variables involved are transformed into the logarithmic space, a linear fit can be performed. In this study, a built-in function of the commercial software was simply used for the fitting.
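The linearization mentioned above can be sketched as follows: taking logarithms of y = a·n^b gives ln y = ln a + b·ln n, so ordinary least squares on the logarithms recovers a and b. The data below are synthetic and noise-free, generated with the rounded values quoted in the text (a coefficient of 250 and a power of −0.5); they are not the fitted coefficients of Equations (19) and (20), and the function name is hypothetical.

```python
import math


def fit_power_law(n_values, y_values):
    """Least-squares fit of y = a * n**b performed on logarithms; returns (a, b)."""
    xs = [math.log(n) for n in n_values]
    ys = [math.log(y) for y in y_values]
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx                      # slope in log-log space is the power
    a = math.exp(y_bar - b * x_bar)    # intercept in log-log space is ln(a)
    return a, b


n_sim = [250, 1_000, 10_000, 100_000, 1_000_000]
mean_error = [250.0 * n ** -0.5 for n in n_sim]   # synthetic, noise-free data
a, b = fit_power_law(n_sim, mean_error)
print(f"a = {a:.3f}, b = {b:.3f}")
```

With real, noisy errors the fit in logarithmic space weights relative rather than absolute deviations, which may or may not match the built-in routine used in the study.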

Coefficients for the fitted normality polynomial in Figure 1a are shown in Table 1 (upper set of values) for selected values of nsim. These coefficients are computed by minimizing the error in Equation (4), which was implemented in the coded program with a built-in function of the programming language employed (MATLAB). As mentioned before, the coefficient a0 can be linked to the generalized reliability index. Coefficients for the results in Figure 1b–d (or those associated with Figure 2) were computed but are not shown for brevity.

The reliability index, design point, and sensitivity factors derived from the simulations (i.e., those obtained with Equations (11), (12), and (14)) are compared with those obtained by applying the FORM to Equation (15). They are summarized in Table 2 for selected values of nsim.

Results listed in Table 2 indicate that when the points obtained by applying the criterion in Equation (8) were enough to successfully perform a multi-linear regression (also with a built-in function, as for the normality polynomial fitting), β deviated only marginally from the exact value, just like the reliability index obtained with FORM. However, a relatively large nsim was required, usually at least 1 × 10⁵ simulations (as found by inspecting all the 1000 × nsim cases used to derive Figure 2); this depends on each run (implicitly, on the random numbers generated in each simulation), and sometimes fewer than 1 × 10⁵ simulations are required. For the considered runs, 2 × 10⁵ simulations seem to guarantee that the values reported in Table 2 are obtained. In any case, when the multi-linear regression is successfully performed, the results are quite adequate and invariant for an increasing number of simulations; this is also the case for the coefficients of the regression (i.e., they remain independent of nsim), which are c = 0.5837, b1 = 0.0998, and b2 = −0.1333.

If the tolerance e in Equation (8) is increased, the minimum number of required simulations can be decreased. For instance, if e = 0.25 were used (instead of the e = 0.05 actually used), 5 × 10⁴ simulations would be enough for a successful multi-linear regression. Moreover, the design point, sensitivity factors, and reliability index would be the same as those reported in Table 2. The opposite would occur if e = 0.005 were used, i.e., a much larger number of simulations would be required to determine the reliability parameters from the regression.

Therefore, this approach based on multi-linear regression can be quite an adequate alternative by itself to compute β, provided that enough simulations can be performed (a number that can be decreased by using a larger e), with the additional advantage that the design point and sensitivity factors can also be determined.

In the following section a more realistic LSF for a structural application is used to further investigate the revisited methods.

3.2. Reliability of Reinforced Concrete Beam under Flexure Moment

In this section, the approaches described before are applied to a reinforced concrete beam (RCB) subjected to flexure moment. The example is the same as that investigated in a previous study [23], but focused on only one design code [24] and three ratios of the mean live to the mean dead load effect for the beam. The rectangular beam section information, LSF, and statistics are succinctly reproduced below. The LSF is

where B is the modeling error, f'c is the concrete compressive strength, As is the reinforcement steel area, fy is the yield stress of the reinforcement steel, b is the section width, h is the effective depth, and D and V are the dead load and live load effects, respectively (flexure moments). The information on all the independent variables in Equation (21) is summarized in Table 3. The steel area As is assumed deterministic and equal to 3000 mm². The PDFs of the random variables in Table 3 are based on previous literature, which in turn reflects results from experimental projects, field information, observed phenomena, and even engineers' experience in characterizing these variables properly, since such PDFs have a direct impact on the computed reliabilities, code calibration tasks, and ultimately the safety of real structures. More details can be found in [23] and the references therein.

Mean values of D and V are not defined in Table 3; they are derived by considering given ratios of the mean live load effect (mV) to the mean dead load effect (mD) (rV/D = 0.4, 1.0, and 2.0 are used in this study) and the assumption that the RCB just meets the code requirement. Thus, the following expression is used to determine the mean values.

where m denotes the mean values of the variables in the corresponding subscripts, and Φ = 0.9.

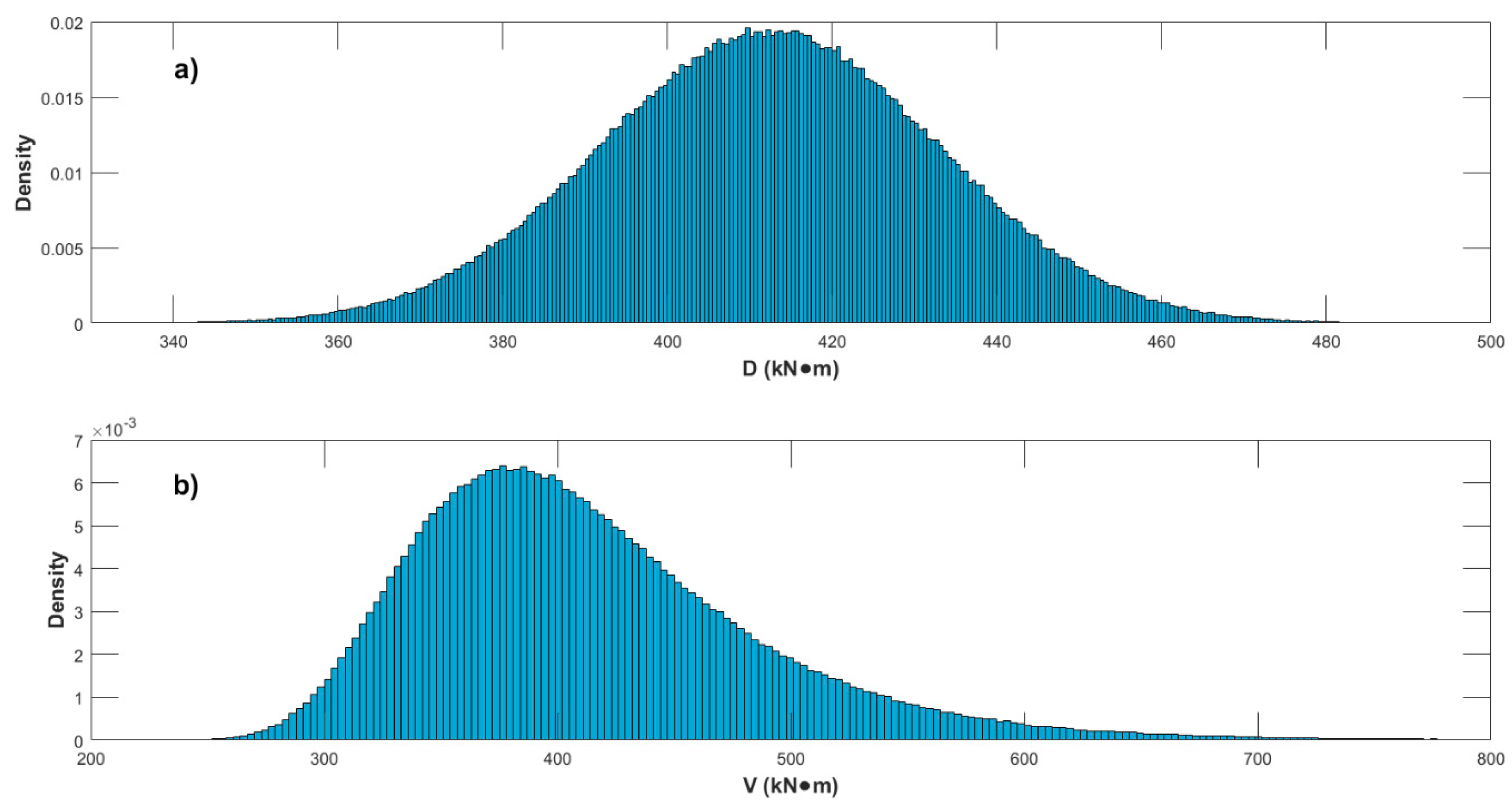

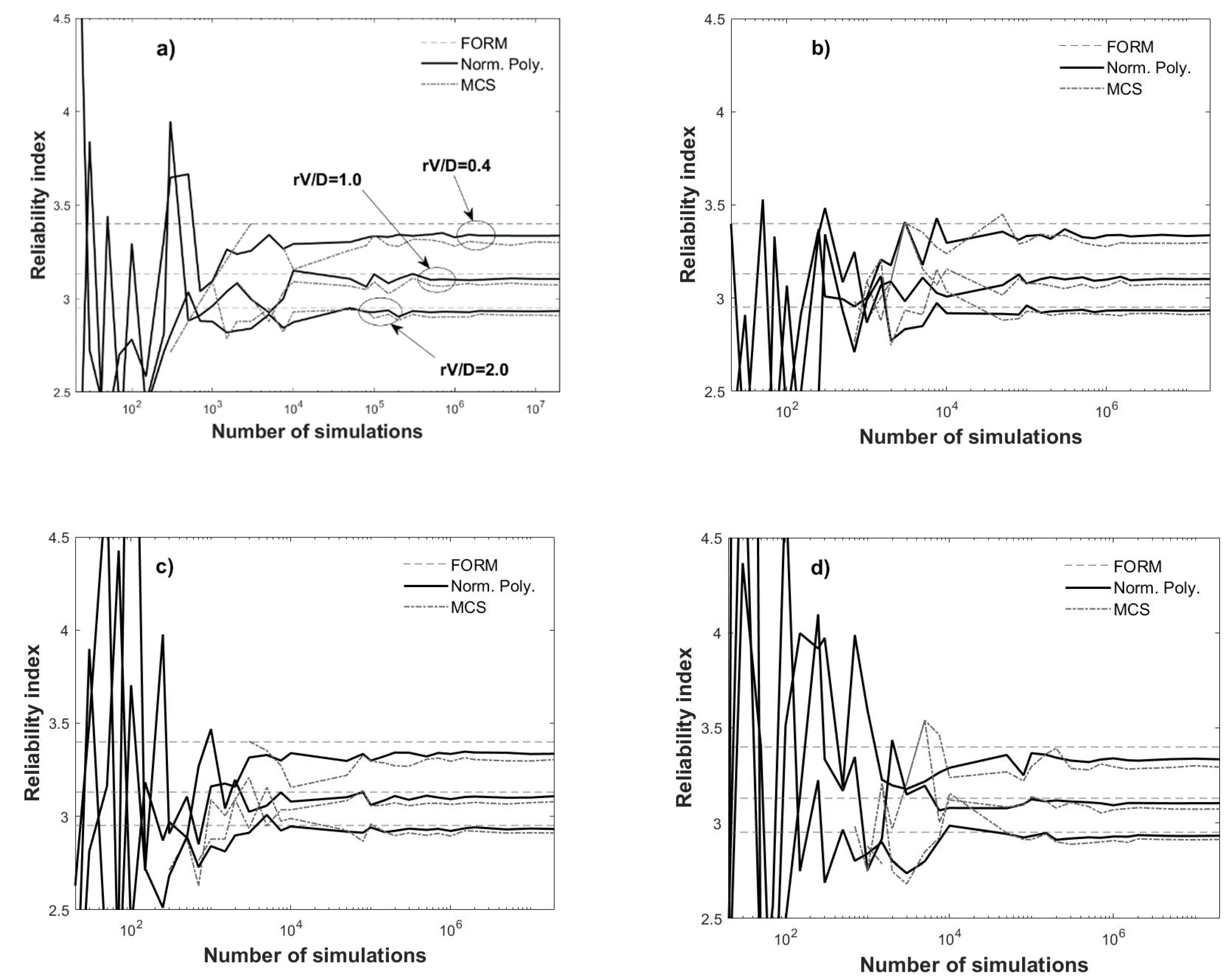

Using the previous information, the normality polynomial approach is applied to Equation (21), and the results are shown in Figure 3 and Figure 4. These figures are analogous to Figure 1 and Figure 2, except that (i) the reference reliability indices (dashed lines) correspond to the values computed using FORM; (ii) three cases of the ratio rV/D = mV/mD are depicted (the largest βs correspond to rV/D = 0.4 and the smallest to rV/D = 2.0, as shown in Figure 3a); and (iii) the error in Figure 4 is shown for rV/D = 0.4 (Figure 4a) and for rV/D = 2.0 (Figure 4b), and is computed by taking βbench in Equation (18) as the average of the 1000 runs for nsim = 2 × 10⁷ (assumed as the exact value). MCS results are depicted with dashed-dotted lines. To perform the MCS, mD and mV are defined using Equation (22) and rV/D as mentioned before. Once they are determined, MCS can be performed to obtain samples of D and V (and all other random variables in Table 3), and the probability of failure can be computed as per Equation (17). As an example of the simulated bending moments, histograms of D and V are shown in Appendix A (Figure A1) for 1 × 10⁶ MCS and rV/D = 1.0; the values are comparable on average (because rV/D = 1.0 is considered), and the histograms for D and V clearly resemble normal and Gumbel distributions, respectively, which is expected given that these variables were sampled from such PDFs.

From Figure 3 and Figure 4, conclusions similar to those drawn from Figure 1 and Figure 2 can be extracted. Some additional observations worth mentioning are that nsim = 1 × 10⁴ seems a reasonable number of simulations for the normality polynomial approach, if a compromise between nsim and error (in terms of mean and standard deviation) is sought; that the fitted polynomials lead to better results than the FORM for increasing nsim; and that larger errors are obtained for smaller rV/D. This latter aspect could be attributed to a better approximation of the failure surface for larger rV/D, since the first-order approximation to the failure surface by the other method shown in Figure 3 (i.e., the FORM) also deviates more from the exact value for decreasing rV/D.

By observing Figure 4, it is pointed out once more that, for this case too, the error and its uncertainty for decreasing nsim are smaller for the normality polynomial than for the MCS and, as previously mentioned, that it is not always possible to estimate the error for the MCS when the number of simulations decreases. The error for β obtained with the normality polynomial approach exhibits an asymptotic behavior towards approximately με = 1% for large nsim. As before, power laws fit adequately the error and its uncertainty and are defined as

$$\mu_\varepsilon = \delta\, n_{sim}^{-\gamma} + 1 \tag{23}$$

$$\sigma_\varepsilon = \kappa\, n_{sim}^{-\tau} \tag{24}$$

where δ = 656, 1103, and 1349; γ = 0.7401, 0.8493, and 0.9062; κ = 129.8, 201, and 179.1; and τ = 0.4937, 0.5609, and 0.5703 for rV/D = 0.4, 1.0, and 2.0, respectively. In Equation (23), the constant unity is included to shift the curve upwards and reproduce the asymptotic behavior mentioned; nonetheless, it could be omitted, and the equation would still describe the mean error fairly adequately. The fitting for με was performed for the whole range of nsim, while for σε, nsim from 250 onward was employed. Note that although the range for the fitting could be established on practical grounds and to improve the fit, in any case the errors and their uncertainties follow a power law; this holds for the three case studies carried out in this study.

It is noteworthy that the normality polynomial approach leads to comparable με and σε in Figure 2 and Figure 4, considering that the LSF for the RCB is a more complex (non-linear) function and that it involves many more random variables and several PDFs.

The fitted coefficients of the polynomials for Figure 3 (corresponding to rV/D = 0.4) and selected nsim are listed in Table 1 (middle set of values). If normality polynomials of order higher than 3 are used, no further accuracy is gained (or even larger inaccuracies could be obtained [11]). This is confirmed by carrying out a single case for rV/D = 0.4 using a 4th-order polynomial, since the results are comparable to those of the 3rd-order polynomial case (Table 1, lower set of values). Note that the order of magnitude of the coefficients of the normality polynomials for the RCB problem can be very small (compared to the classical LSF problem and to a coastal engineering application shown later); this could be due to the units employed and should not be understood as if the order of the polynomial could be decreased while obtaining comparable precision, because the use of at least third-order normality polynomials was illustrated and found adequate in [11].

To end this section, the results of using the multi-linear regression approach for the LSF defined in Equation (21) are listed in Table 4 for rV/D = 1.0. The subscripts in Table 4 (and the units of the design point) are associated with the random variables in Table 3. The reported values correspond to the last of the 1000 runs used to develop Figure 4. As an example of the coefficients obtained by multi-linear regression, those from that run for rV/D = 1.0 and 1 × 10⁶ simulations are c = 3.3635 × 10⁶, b1 = 4.0861 × 10⁵, b2 = 2.7767 × 10⁵, b3 = 1.1215 × 10⁵, b4 = −1.1888 × 10⁵, b5 = −9.1330 × 10⁵, b6 = 3.1471 × 10⁴, and b7 = 2.8878 × 10⁵.

The previous information indicates that a conclusion similar to that of the previous example (i.e., for the case of Equation (15)) can be drawn, i.e., a sufficiently large number of simulations is required for a successful multi-linear regression. However, unlike in the previous example, the design point and sensitivity factors are not invariant with nsim. The differences are not very significant, though; therefore, once a minimum number of simulations is ensured (around 8 × 10⁴ simulations), a very precise β is obtained. It is also observed that the number of simulations required for adequately carrying out the multi-linear regression decreases with increasing rV/D (this could be attributed to the same reason argued before for the larger errors obtained for smaller rV/D). If the tolerance e is increased, the number of simulations can be reduced, but not as significantly as for the classical LSF case (i.e., Equation (15)). For instance, an increase to e = 0.35 reduces nsim to around 5 × 10⁴; this also changes the values of the design point and sensitivity factors, but not substantially. From Table 4, it is also observed that the values are in very good agreement with the FORM results, with even higher precision from the regression approach for the reliability index. Therefore, it is concluded that the multi-linear regression by itself can be a very attractive alternative to compute β, if a minimum nsim (similar to those mentioned above) is feasible; it is emphasized once more that an additional advantage is that the design point and sensitivity factors are also determined.

A final application of the revisited methods is presented for a coastal structure in the following section.

3.3. Overtopping Reliability of a Breakwater

In this section, the coastal engineering application is the example reported in [10], where certain conditions are assumed and to which the reader is referred for further details and references. It is a breakwater with deterministic slope, tan τ = 1/1.5, and freeboard, Fc = 10 m. When the water runs up the breakwater, overtopping can occur (i.e., the water surpasses the freeboard), which is considered a failure. This is defined by the LSF given by

where Au and Bu are coefficients characterized as independent normally distributed random variables, with mean values equal to 1.05 and −0.67, respectively, and coefficients of variation both equal to 0.2 [10]; H denotes the wave height and T represents the wave period. H and T are random variables probabilistically characterized by the joint Longuet-Higgins distribution [20] with parameter ν = 0.25. The joint PDF of the Longuet-Higgins distribution is given by

where Hn = H/Hs and Tn = T/Tz are the normalized wave height and period, obtained by considering Hs = 5 m and Tz = 10 s, which are the significant wave height and the zero up-crossing mean period, respectively, and define the sea state [10]; L(ν) is a normalization factor that accounts for only positive values of Tn being considered and is defined by

First, the FORM is applied to Equation (25). Salient points of applying the FORM to this overtopping LSF are briefly described in the following. It is noted that since Hn and Tn are not independent, the Rosenblatt transformation is performed for the joint distribution to map the equivalent distribution parameters into the normal space by using [17]

where

denotes the inverse of the CDF of a standard normal variable,

and

are the marginal distribution of Hn and the conditional distribution of Tn given Hn for Equation (26), respectively, defined by

where the error function is given by

In Equation (29), the equivalent version reported in [25], rather than the original version in [10], is considered, simply because the error function used in [25] is more readily available in current software. Then, to derive the conditional probability distribution, Equation (26) was divided by Equation (29), yielding Equation (30) given above. Since the CDFs of Equation (29) and Equation (30) are also required to obtain the equivalent parameters mapped into the standardized normal space, another point to highlight is that they were obtained numerically at the design point, unlike for the normally distributed random variables, for which simple analytical expressions can be used (which is also possible for other common PDFs).

Additionally, it is noted that, as part of the procedure to obtain the reliability index in each iteration of the FORM, a vector obtained by multiplying each partial derivative of the LSF (i.e., Equation (25)), evaluated at the design point, by the equivalent second moment in the normal space (for the corresponding random variable) is usually enough. However, this approach is not possible for the jointly distributed random variables in this example. Therefore, the Jacobian (and its inverse) is required [17]; once the inverse of the Jacobian is computed, it is multiplied by the vector of partial derivatives evaluated at the design point mentioned above, and the reliability index can then be obtained in each iteration in the regular way for the FORM (i.e., as when the variables are independent). This approach is followed in the present study. Note that for a set of jointly distributed random variables xi (zi in the normalized space), the inverse of the Jacobian is a lower-triangular matrix determined (often numerically) as [17]

where φ(zi) is the PDF of a standard normal random variable, with the argument zi obtained in a way analogous to Equation (28); fi and Fi refer to the PDF and CDF for the variable with subscript i, respectively. It was noticed that, for the present example, disregarding the elements outside the Jacobian diagonal does not affect the computed reliability indices very significantly.

A few final aspects of the FORM are worth mentioning. The order of the variables in defining Equation (28) does matter, although similar results may be expected [17]. For instance, in [10] the marginal distribution of Tn and the conditional distribution of Hn given Tn are used to define Equation (28) (i.e., the order of the variables is inverted compared with this study), which results in a reliability index, β, equal to 2.01 for the problem in question, whereas β = 2.10 is obtained in this study with the FORM formulation described earlier and adopted in the following; β = 2.10 is also closer to the exact value discussed later. Another slight difference between [10] and this study when applying the FORM is that, in the present work, when assuming the initial design point, one of its values is determined by setting gbkw = 0, to ensure that the design point is on the failure boundary (e.g., [26]).

To inspect the variation of β for different Fc values, the FORM is performed by varying the freeboard between 9 m and 12 m, and the resulting reliability index is shown in Figure 5a with a black dashed line. As expected, β increases for increasing freeboard; if the slope of the breakwater is changed to tan τ = 1/2 and the FORM is carried out for the same range of Fc, the reliability levels increase further, as shown by the dashed grey line in Figure 5a. These results are used as a reference and for comparison with the results from the normality polynomial and multi-linear regression approaches revisited in this study.

The simulations for this coastal engineering application, used as the basis of the revisited methods, are much more computationally intensive than for the classical and structural examples, because of the dependency between the wave height and period and the use of the Longuet-Higgins distribution, which requires numerical computation of the probability levels (e.g., values from CDFs) and a different sampling method. This latter aspect, i.e., the generation of jointly distributed random numbers when a set of variables xi are dependent, is based on expressing the joint PDF as [22]

with the corresponding CDF given by

Using the previous concepts, and considering a set of values U generated from n independent standard uniformly distributed random variables, the set of dependent random variables can be determined as

where F−1(•) denotes the inverse of the CDF. Obtaining this inverse can be relatively straightforward for some common probability distributions, for which an analytical expression can be used in Equation (35). This is not the case for the Longuet-Higgins distribution, for which the jointly distributed random wave height and period must be determined numerically.
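The idea of generating dependent samples by inverting the marginal and conditional CDFs numerically can be sketched as below. The joint model used here is a hypothetical stand-in chosen only because its CDFs are easy to write (a Rayleigh-type marginal and a normal conditional whose spread narrows as the first variable grows); it is NOT the Longuet-Higgins distribution, and bisection is just one possible root-finding choice, not necessarily the one used in the study.

```python
import math
import random
from statistics import NormalDist


def invert_cdf(cdf, u, lo, hi, tol=1e-10):
    """Solve cdf(x) = u on [lo, hi] by bisection (cdf must be non-decreasing)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical dependent pair, used only to illustrate the procedure.
def cdf_x1(x1):
    """Marginal CDF of x1 (Rayleigh type)."""
    return 1.0 - math.exp(-x1 * x1)


def cdf_x2_given_x1(x2, x1, nu=0.25):
    """Conditional CDF of x2 given x1: normal, narrower for larger x1."""
    return NormalDist(mu=1.0, sigma=nu / (1.0 + x1)).cdf(x2)


def sample_pair(u1, u2):
    """Map two independent uniform numbers to one dependent pair (x1, x2)."""
    x1 = invert_cdf(cdf_x1, u1, 0.0, 10.0)
    x2 = invert_cdf(lambda t: cdf_x2_given_x1(t, x1), u2, -5.0, 7.0)
    return x1, x2


rng = random.Random(1)
pairs = [sample_pair(rng.random(), rng.random()) for _ in range(1000)]
x1_check, x2_check = sample_pair(0.3, 0.8)
```

Each sample requires repeated CDF evaluations, which hints at why sampling becomes costly when, as for the Longuet-Higgins distribution, the CDFs themselves must be integrated numerically.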

Figure 6 shows samples of jointly generated random values of wave height and period in the normalized space (for nsim = 1 × 10³, 5 × 10³, 1 × 10⁴, and 5 × 10⁴). A few contours of the theoretical Longuet-Higgins distribution (i.e., Equation (26)) are also shown in Figure 6; the samples and the contours are in good agreement. The values in the non-normalized space can be obtained simply by recalling that Hn = H/Hs and Tn = T/Tz.

As mentioned before, the sampling procedure is significantly more time-consuming than for the LSFs in the previous sections. Therefore, MCS are carried out only up to 1 × 10⁶ simulations for all the variables of the LSF represented by Equation (25), and the reliability index is determined by employing Equation (17) and Equation (7) only for the case reported in [10] (i.e., Fc = 10 m and tan τ = 1/1.5). Nonetheless, the results depicted in Figure 5b indicate that the reliability index stabilizes, from approximately nsim = 1 × 10⁵ onward, at a value practically equal to 2.2 (grey solid line). Therefore, β = 2.2 is adopted as the exact value of the reliability index for the breakwater under overtopping. This value is used to assess the error in estimating the reliability index with the normality polynomial approach and to compare against the results obtained with the multi-linear regression approach. In fact, the results from these two approaches are also shown in Figure 5b (black solid line for the normality polynomial; grey dashed line for the multi-linear regression approach), where it is observed that the normality polynomial approach converges to a stable value (approximately β = 2.12) from about 2 × 10³ simulations on, leading on average to a slightly smaller reliability index (i.e., on the conservative side) but one closer to the exact value than that obtained with the FORM. The multi-linear regression approach (like the MCS and unlike the normality polynomial) requires a minimum number of simulations, which is 1 × 10³ for Figure 5b, although sometimes more simulations are required; nevertheless, when a sufficiently large number of simulations is performed (e.g., about 3 × 10⁴ or more in Figure 5b), the multi-linear regression leads to practically the exact β, and the design point and sensitivity factors can also be determined.

For brevity, the coefficients of the polynomials and multi-linear regression, design points, and sensitivity factors are not extensively listed in this section; as an example, values are given for a single case of 2 × 10⁴ simulations. This case led to coefficients for the normality polynomial of a0 = −2.164, a1 = 0.3175, a2 = −0.0110, and a3 = 0.0028, and for the multi-linear regression of c = 1.4760, b1 = −0.3382, b2 = 0.1235, b3 = −0.5550, and b4 = −0.0633, as well as to sensitivity factors equal to αAu = 0.5089, αBu = −0.1859, αH = 0.8351, and αT = 0.0953 and design points equal to xAu = 1.2874, xBu = −0.7263, xH = 9.3045 m, and xT = 10.2051 s. These compare very well with the corresponding sensitivity factors computed with the FORM, which are equal to 0.4959, −0.1712, 0.8466, and 0.0900, respectively, and with the design points from the FORM, equal to 1.2689, −0.7182, 9.0796 m, and 10.1933 s, respectively. These values of sensitivity factors and design points are also very similar to those reported in [10].
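The link between the regression coefficients and the reliability parameters quoted above can be checked with a short Python sketch. It assumes that the regression is a linear LSF in the standard normal space, g(z) ≈ c + Σ bi·zi, for which the standard relations are β = c/‖b‖, αi = −bi/‖b‖, and a design point zi = αi·β. These relations are assumed to correspond to Equations (11), (12), and (14), which are not reproduced in this section; the sign convention for α was chosen because it reproduces the reported values. The function name is hypothetical.

```python
import math


def reliability_from_regression(c, b):
    """Reliability index, sensitivity factors, and design point in normal space
    for a linear limit state function g(z) = c + sum(b_i * z_i)."""
    norm = math.sqrt(sum(bi * bi for bi in b))
    beta = c / norm
    alpha = [-bi / norm for bi in b]       # sign chosen to match the reported factors
    z_star = [ai * beta for ai in alpha]   # point of the plane g = 0 closest to the origin
    return beta, alpha, z_star


# Regression coefficients quoted in the text (order: Au, Bu, H, T).
c = 1.4760
b = [-0.3382, 0.1235, -0.5550, -0.0633]
beta, alpha, z_star = reliability_from_regression(c, b)

# Au is normal with mean 1.05 and coefficient of variation 0.2,
# so its design point maps back linearly from the normal space.
x_au = 1.05 + z_star[0] * 0.2 * 1.05

print(f"beta = {beta:.3f}")
print("alpha =", [round(a, 4) for a in alpha])
print(f"x_Au = {x_au:.4f}")
```

Under these assumptions the sketch returns β ≈ 2.22, close to the value of 2.2 adopted as exact, and sensitivity factors that agree with the reported ones to within rounding.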

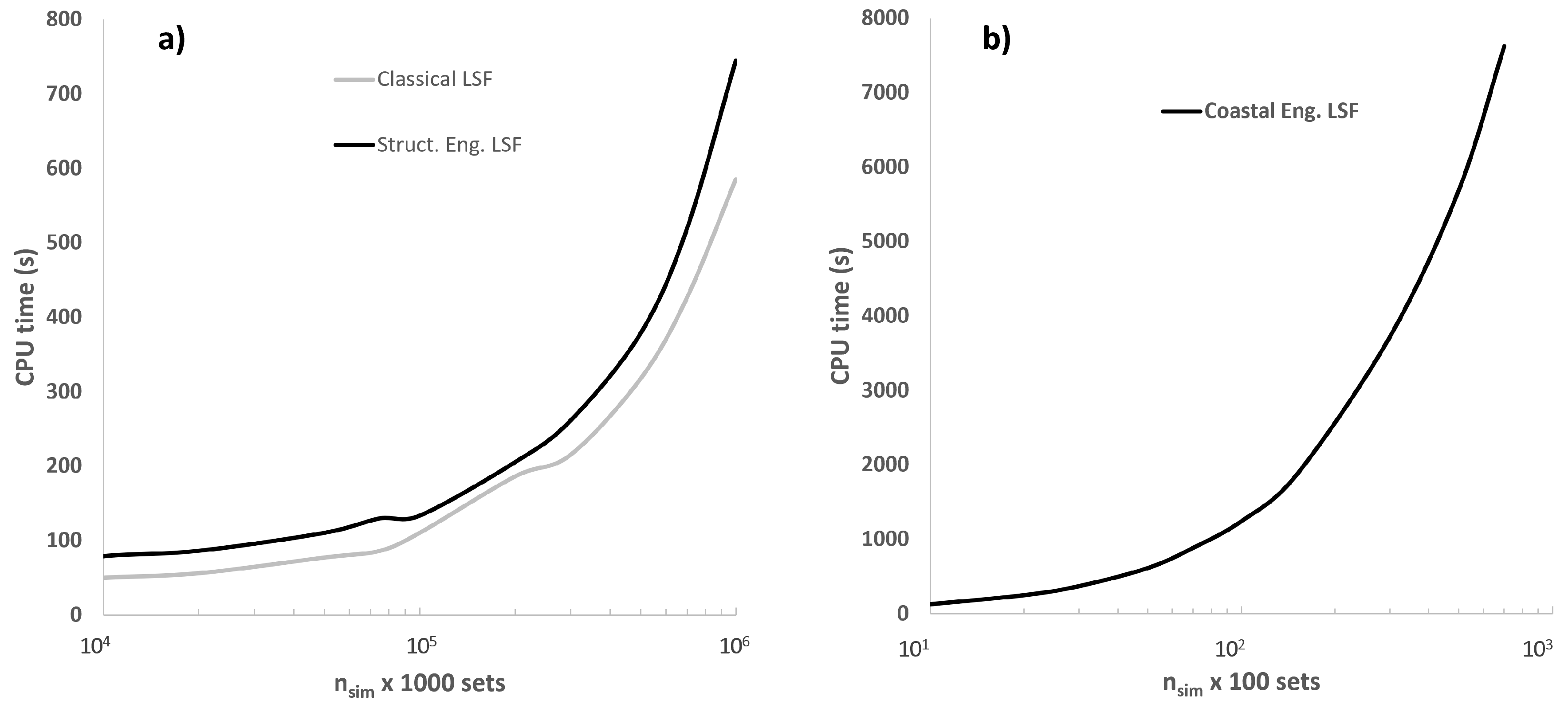

It is noted that Figure 5b corresponds to only one set of simulations for every nsim, and the results may vary for different sets of generated random numbers (as shown in Figure 1 and Figure 3), implying an uncertainty in the deviation from the exact value for different numbers of simulations. This uncertainty is assessed as for the classical and structural LSFs in the previous sections, i.e., by computing the errors in the reliability index as per Equation (18) and fitting them to power laws with the functional form of Equations (19) and (20) (or Equations (23) and (24)), but with different parameter values. To do so, and unlike the cases of the classical LSF and the reinforced concrete beam under flexure moment, not 1000 but only 100 sets of simulations are computed for each nsim, owing to the longer time and greater computational resources required, as referred to earlier (a comparison in terms of computing (CPU) time, together with a description and a discussion, is given in Appendix B and Figure A2 of the appendix). This leads to the mean errors shown in Figure 5c with a black solid line for the normality polynomial case (with mean values ± one standard deviation indicated by black dashed lines) and with a grey dashed line for the MCS case (with mean values ± one standard deviation indicated by grey dotted lines).

Even though the errors reported in Figure 5c do not behave as smoothly as those observed in Figure 2 and Figure 4 (obtained in an analogous way but for 1000 sets of nsim), the qualitative trend is fairly similar, especially for the mean values and for nsim that is not too small. Indeed, power laws can be adequately fitted to με and σε, as shown in Figure 5d, by fitting the computed errors from 1 × 10² simulations onward; the functional form is analogous to that of Equations (23) and (24), except that the constant 1 in Equation (23) is omitted. The fitted parameters are δ = 23.62, γ = 0.2342, κ = 47.94, and τ = 0.4254. As observed in Figure 5d, the fitting is very adequate for σε and adequate for με, even though only 100 sets of nsim were employed for the statistics.

From Figure 5c,d, conclusions similar to those found before can be drawn, namely, that for decreasing nsim the MCS tends to deviate more from the exact value than the normality polynomials (in terms of ε), that for a decreasing number of simulations the error for the MCS can be unknown, and that power laws are adequate to mathematically define με and σε for the normality polynomial approach. Therefore, a designer could, for instance, use the normality polynomial method to compute the reliability index with a reduced number of simulations, while accepting an error in the estimation. Such an error could, however, be estimated if expressions for με and σε (like the power laws determined in this study) are known.

As an example, in Figure 5a nsim = 7 × 10² is used for the normality polynomial approach (black solid line) and, as shown, this leads to reasonably adequate results (using a fairly small number of simulations) when compared with the FORM and the MCS (also included in Figure 5a with a black dotted line). Moreover, the fitted equations shown in Figure 5d can be used to quantitatively compute the associated error and its standard deviation with respect to the exact reliability index, that is, με = 5.09% and σε = 2.95%. This is strictly applicable only to Fc = 10 m; however, by inspecting Figure 5a, comparable errors may be expected for a range of freeboard values. Naturally, the contents of this paper could be extended to investigate how the error changes by varying one or more parameters of the LSFs. In such a case, one would expect that functional forms like those reported in this study can be used to assess ε, but possibly with higher mean errors (and/or standard deviations) for higher reliability levels, because more simulations are usually required for lower probabilities of failure. This could be inferred from Figure 5a, where a last set of calculations is shown for a breakwater slope of 1/2 (dashed and dotted grey lines for the normality polynomial and MCS techniques, respectively) and where larger deviations of the normality polynomial from the FORM are observed; these higher reliability levels also decrease the ability of the MCS to capture the probability of failure, as also observed in Figure 5a for a wide range of Fc. Additionally, although not shown in Figure 5a, it was observed that the minimum number of simulations required to adequately perform the multi-linear regression increases for higher reliability levels (e.g., for the breakwater slope of 1/2).
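The error estimates quoted above for nsim = 7 × 10² can be reproduced with the fitted parameters reported for the breakwater (δ = 23.62, γ = 0.2342, κ = 47.94, τ = 0.4254), assuming the power-law forms με = δ·nsim^(−γ) and σε = κ·nsim^(−τ), i.e., without the additive constant, as stated for this case. The helper that inverts the mean-error law to obtain the number of simulations for a target error is an illustrative addition, not something reported in the study.

```python
def power_law(n_sim, coeff, exponent, offset=0.0):
    """Evaluate coeff * n_sim**(-exponent) + offset."""
    return coeff * n_sim ** (-exponent) + offset


def n_sim_for_mean_error(target, coeff, exponent):
    """Invert the offset-free power law: n_sim that gives a target mean error (%)."""
    return (coeff / target) ** (1.0 / exponent)


n_sim = 700
mu_eps = power_law(n_sim, 23.62, 0.2342)      # mean error, in percent
sigma_eps = power_law(n_sim, 47.94, 0.4254)   # standard deviation, in percent
print(f"mean error = {mu_eps:.2f}%, standard deviation = {sigma_eps:.2f}%")

# Number of simulations for which the fitted mean error would drop to 3%.
print(f"n_sim for a 3% mean error: {n_sim_for_mean_error(3.0, 23.62, 0.2342):.0f}")
```

Because the exponent of the mean-error law is small for this case, the number of simulations needed grows quickly as the target error decreases, so extrapolating the fit far beyond the fitted range should be done with care.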

Overall, results in this section indicate that the revisited simulation-based methods can be also effective for coastal engineering applications.