Abstract

As users are increasingly included in the design process, designers need not only to understand users but also to cooperate with them. Engineering design education should therefore follow this trend and strengthen students' ability to communicate and cooperate with users in design practice. However, time and budget constraints make it difficult to bring users to teaching sites to work with students. With recent developments in artificial intelligence (AI), chatbots may offer a way to bring specific users into teaching. This study used Dialogflow and Google Assistant to build a system architecture, and applied personas and semi-structured interviews to develop AI virtual product users ("userbots"). The system uses a compound dialog mode that combines intent- and flow-based dialog, which allows multiple chatbots to cooperate with students through spoken dialog. After four college students interacted with the AI userbots, the results suggested that the system can effectively take part in student design activities in the early stage of design. In the future, more AI userbots could be developed on this system for different engineering design projects in engineering design teaching.

1. Introduction

With the rise of user-centered design, users have become the focus of the design process, and designers must make an effort to analyze the real needs of product users [1]. Therefore, in engineering design education, students must learn how to define target users and understand their needs; otherwise, they may produce inappropriate design solutions by misunderstanding users [2].

In response to this problem in engineering design teaching, several studies have emphasized the importance of having students cooperate with product users in design education [3]. Doing so helps students understand product users better and produce creative design solutions that meet users' needs.

Participatory design is a design method which allows users to actively participate in the design process, and treats users as collaborators [4]. Applying participatory design in design education can overcome students’ unfamiliarity with users, and allow students and users to jointly define problems and generate design ideas. However, it is quite difficult to invite users to a teaching site to participate in the design process because of time, budget, location, and other restrictions [5].

The development of artificial intelligence (AI) technology may help solve these problems in participatory design education. Communication and cooperation between designers and product users are the main elements of participatory design; therefore, a natural language processing system with speech recognition capabilities can assist the participatory design process and provide designers with user behavior patterns and product preferences [6].

Consequently, to assist students in participatory design with the aid of AI technology, this study proposes an "AI userbot system" that gives students a platform for interacting with virtual users, through which they can learn about real users' needs and other practical information.

1.1. Engineering Participatory Design

Compared with experts, students may have less experience and may find it more difficult to process the information provided by users in order to find the best design solution. Developing the ability to work with users should be a major goal of design education [7]. In the past, users, especially those whose age or usage habits deviated from the average, were seldom considered in commercial product development and design [8]. However, from a business management perspective, the participation of diverse users can effectively improve the versatility and market acceptance of a final design, thereby reducing product development risks. Besides users' physical characteristics, knowledge, and experience, designers should also consider users' psychological characteristics. Applying these factors in the design process may enhance the long-term profitability of products [9].

Participatory design is a design method in which experienced users with product-related knowledge play an important role in the design process, which can effectively overcome the problems that arise from neglecting users [7]. Participatory design originated in Scandinavia as an action research and design approach emphasizing active cooperation between researchers and an organization's workers to help improve the workers' situation [5]. In participatory design, users are seen as collaborators with designers. Designers and product users jointly construct design problems and analyze constraints in depth, which helps designers turn their design behaviors into design practice. For instance, participatory design has been used to design chatbots that enhance emotion management in teams [10]. Participatory design has been shown to be a useful and appropriate design method because it pays more attention to the exchange of design ideas than other design methods do [11].

Based on the above literature review, the present study combines the characteristics of the intent- and flow-based dialog modes (see Section 1.2) to build an AI userbot system with a dialog process mechanism. These userbots are intended as virtual representations of real product users that help students carry out participatory design.

1.2. Chatbots

Machine learning has made outstanding progress in natural language processing. One important application is the dialog system, also known as a chatbot. The first chatbot system, ELIZA, appeared in 1966, and since then chatbots have continued to improve at imitating human conversation and have become more capable of meeting interlocutors' expectations [12,13,14].

Chatbots can be divided into two categories according to their conversation mechanism: open-domain and closed-domain [14]. Open-domain chatbots can conduct free-style conversations without specific goals. In contrast, closed-domain chatbots are designed to help users achieve specific goals, so they usually limit the topic of conversation to a certain range [14,15]. Chatbots can also be classified as intent- or flow-based according to how they process conversations [16]. In the intent-based mode, the user enters a message and the chatbot analyzes keywords in the message to infer the user's intent and provide a reply. In the flow-based mode, the chatbot guides users step by step toward a correct answer according to a preset dialog process. Both modes have their own advantages and disadvantages. With advances in machine learning, their advantages can be combined so that users can talk to chatbots in the way they prefer while still following a standard dialog process.

Engineering design has increasingly been studied in computer-aided settings, for example with a digital sketching tool [17], TRIZ and biomimetics [18], or a mixed-reality (MR) interaction metaphor [19] for generating engineering solutions. With growing interest in AI chatbot technology, chatbots may also be used in the engineering industry and in engineering design education. In education, chatbots can provide an interactive learning platform for students [20], and educational chatbots are therefore increasingly studied [21] to help students learn different academic subjects, such as language [22], accounting [23], ethnography [24], and data science [25]. However, to our knowledge, no study has yet incorporated the pedagogical use of a chatbot in the engineering field. By developing a new AI platform with a chatbot, this study examines the feasibility of the proposed technology for learning engineering design skills, especially collaboration with product users.

2. Method

2.1. Architecture and Chatbots

2.1.1. Proposed Architecture System

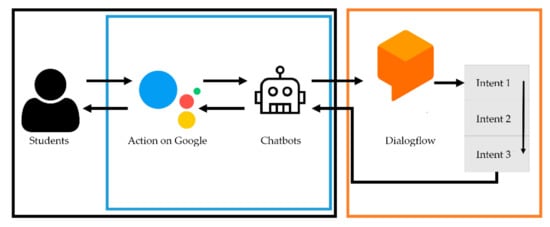

The proposed approach aims to develop a system in which AI userbots are built for different design projects (Figure 1). We use the Dialogflow platform to realize the semantic recognition function, and Google Assistant as the platform interface. The system, which is presented in the form of multiple chatbots, enables students to have real-time oral conversations with multiple virtual users and obtain the product preferences and needs of these different product users.

Figure 1.

Architecture of the proposed system.

Dialogflow is a natural language understanding (NLU) platform used to design and integrate a conversational user interface into mobile apps, web applications, devices, bots, interactive voice response systems, and so on [26]. Google Assistant is an AI-powered virtual assistant that can engage in two-way conversations [27]. The Dialogflow development tool provides NLU-based human–computer interaction technology, which can be used to build a userbot with machine learning capabilities. NLU focuses on the recognition and understanding of spoken language [28] and consists of four main processes: speech recognition, syntax analysis, semantic analysis, and pragmatic analysis [29].

NLU in Dialogflow is performed by identifying and matching intents and entities. An intent is the purpose behind a user's expression. When Dialogflow receives a user's expression, it computes an intent detection confidence (confidence score) for each intent from the similarity between the expression and that intent's sample question sentences, on a scale from 0 (completely uncertain) to 1 (completely certain). If the highest-scoring intent also scores above the trigger threshold of 0.6, the system pairs the expression with that intent and triggers a response to the user. For example, when a user says "Have you ever used self-defense products?", the user's aim is to learn about real users' experiences of using self-defense products. The system pairs the expression with the preset intent "user experience"; the detection confidence reaches 0.8, above the 0.6 threshold, so the "user experience" intent is triggered.
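The matching rule described above can be sketched in Python: score every intent between 0 and 1, take the highest, and trigger it only if it clears the 0.6 threshold, otherwise fall back. This is a minimal illustration under stated assumptions, not Dialogflow's classifier: Dialogflow scores intents with its own machine-learning model, whereas the sketch substitutes a simple word-overlap (Jaccard) similarity, and the sample sentences are hypothetical.

```python
import re

TRIGGER_THRESHOLD = 0.6  # threshold reported in the text
FALLBACK = "Default Fallback Intent"

# Hypothetical sample question sentences for two intents named in Table A1.
INTENTS = {
    "User_Experience.Experience": [
        "have you ever used self-defense products",
        "what self-defense products have you used",
    ],
    "Agent.Name": ["what is your name", "who are you"],
}


def words(text: str) -> set[str]:
    """Lower-case word set; hyphenated words such as 'self-defense' stay whole."""
    return set(re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard overlap in [0, 1]; an assumed stand-in for Dialogflow's scoring."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def match_intent(utterance: str) -> tuple[str, float]:
    """Return (intent, confidence); expressions below the threshold go to the fallback."""
    scores = {
        intent: max(similarity(utterance, s) for s in samples)
        for intent, samples in INTENTS.items()
    }
    best = max(scores, key=scores.get)
    if scores[best] >= TRIGGER_THRESHOLD:
        return best, scores[best]
    return FALLBACK, scores[best]


print(match_intent("Have you ever used self-defense products?"))
# ('User_Experience.Experience', 1.0)
```

With this toy similarity, a paraphrase that shares few words with every sample sentence falls below 0.6 and reaches the fallback intent, which is one reason for enumerating many wordings per intent.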

Entities are keywords that help the system judge the confidence level of intent detection. The system extracts entities from users' sentences to identify their intent. As the system is used more, the Dialogflow platform begins to automatically expand the intents and entities and adjust the trigger priority of different intents. The Google Assistant interface uses speech recognition and synthesized speech to let users hold a two-way dialog with the system in natural language. Users can also enter text with a virtual keyboard.
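Entity extraction can be illustrated with a dictionary lookup in which each entity type maps reference values to synonyms, loosely following the way Dialogflow entity types pair a reference value with its synonyms. The entity type names come from Table A1, but the values and synonyms below are hypothetical, and Dialogflow's own matching is more flexible than this exact whole-word search.

```python
import re

# Hypothetical entity types modelled on Table A1: each maps a reference
# value to the synonyms that should resolve to it.
ENTITY_TYPES = {
    "Experience": {"experience": ["experience", "experiences", "used", "use"]},
    "SelfDefenseSupplies": {
        "pepper spray": ["pepper spray", "pepper sprayer"],
        "alarm": ["alarm", "personal alarm", "whistle"],
    },
}


def extract_entities(utterance: str) -> dict[str, list[str]]:
    """Return {entity type: [reference values]} found in the utterance."""
    text = utterance.lower()
    found: dict[str, list[str]] = {}
    for entity_type, values in ENTITY_TYPES.items():
        for reference, synonyms in values.items():
            # Whole-word match, so "use" does not fire inside "useful".
            if any(re.search(rf"\b{re.escape(s)}\b", text) for s in synonyms):
                found.setdefault(entity_type, []).append(reference)
    return found


print(extract_entities("Have you ever used a pepper spray or a whistle?"))
```

The extracted entities would then serve as extra evidence when scoring intents, as the paragraph above describes.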

2.1.2. AI Userbot

Based on the architecture and process mechanism described above, this study built the first group of AI userbots for students' participatory design, using a female self-defense product as the design project. Information on the use of self-defense products was collected from real product users through semi-structured interviews, and the userbots' characters were created from the interview results. Intents were also collected and entered into the Dialogflow database.

Convenience sampling [30] was used to recruit product users from Facebook. Six women who had used self-defense products in real-world situations were invited to semi-structured interviews. All interview questions concerned product ownership, user experience, and product preference for self-defense products; these question categories cover the basic content that professional designers would put to users. After each user answered the questions, the researchers discussed further details based on the answers or asked additional questions to obtain more information. The six users' replies were transcribed into draft verbatim records, averaging about 10,000 words per user. Based on the questions and the users' responses, two researchers jointly reviewed the full verbatim drafts and together determined the possible intents and entities. The researchers also found that the six real users' responses could be grouped into three virtual product users with different personalities, product experience, and preferences: users preferring defensive, deterring, and attacking products, respectively. Consequently, three AI userbots for self-defense products were built in four steps:

- Determining every intent of each sample question sentence based on product ownership, user experience, and product preference of users.

- Enumerating as many alternative wordings as possible for each question with the same intent, to help the system recognize the same intent across differently worded sentences.

- Defining entities in the sample question sentence to help the system recognize intent more accurately.

- Establishing a unified response to each sample question sentence based on the userbot's persona. Besides text, the system can respond to users with pictures, audio files, videos, and buttons.
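The four steps above produce, for each intent, a name, a set of alternative wordings, a list of entity keywords, and persona-specific responses. A minimal sketch of such a record follows; the schema, the helper `unannotated_entities`, and the example values are hypothetical and are not Dialogflow's export format.

```python
from dataclasses import dataclass, field


@dataclass
class Intent:
    """One intent as assembled in the four steps (hypothetical schema)."""

    name: str                    # step 1: intent assigned to the sample question
    category: str                # name prefix, e.g. Product_Ownership or User_Experience
    training_phrases: list[str]  # step 2: alternative wordings of the same question
    entities: list[str] = field(default_factory=list)        # step 3: keywords marked as entities
    responses: dict[str, str] = field(default_factory=dict)  # step 4: one unified reply per persona

    def unannotated_entities(self) -> list[str]:
        """Entities that occur in no training phrase, a likely data-entry slip."""
        phrases = " ".join(self.training_phrases).lower()
        return [e for e in self.entities if e.lower() not in phrases]


experience = Intent(
    name="User_Experience.Experience",
    category="User_Experience",
    training_phrases=[
        "Have you ever used self-defense products?",
        "What self-defense products have you used?",
    ],
    entities=["self-defense", "used"],
    responses={"Ring": "<reply written from Ring's persona>"},
)
print(experience.unannotated_entities())  # []
```

A consistency check like `unannotated_entities` is optional, but it catches keywords that were listed as entities yet never appear in any sample sentence.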

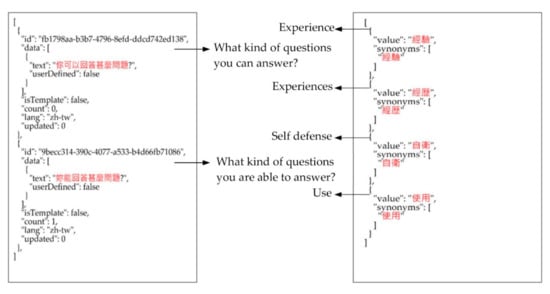

Figure 2 shows an example of how an intent and its entities were established from the interview questions and the users' responses: (1) In the interview, we asked users "What kind of questions can you answer?", and the two researchers agreed that this question belongs to the user experience category. (2) Sentences with the same meaning as "What kind of questions can you answer?", such as "What kind of questions are you able to answer?", were added to the Dialogflow database. (3) Across these similar sentences and the users' responses to the question, the words experience, experiences, self-defense, and use appeared frequently and were set as entities.

Figure 2.

The syntax interfaces show how an intent and entities related to a specific userbot were built in the database. The left-hand interface shows different wordings of the same question built into the system; the right-hand interface shows the entities of a sample question sentence.
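Step (3) relied on the two researchers noticing which words recurred. A word-frequency count can help shortlist candidates for that judgment. The sketch below is only an aid under stated assumptions: the sample sentences and the stop-word list are hypothetical, and the final choice of entities remains manual (here the top two candidates, "questions" and "answer", would not be useful entities).

```python
import re
from collections import Counter

# Small illustrative stop-word list; a real analysis would need a fuller one.
STOP_WORDS = {
    "what", "kind", "of", "can", "you", "are", "able", "to", "i", "a", "the",
    "and", "my", "is", "do", "have", "about", "with", "me", "them", "how",
    "often", "ask",
}

# Hypothetical sentences in the spirit of Figure 2.
SAMPLE = [
    "What kind of questions can you answer?",
    "What kind of questions are you able to answer?",
    "I can answer questions about my experience with self-defense products.",
    "Ask me about my self-defense experience and how often I use them.",
]


def candidate_entities(sentences: list[str], top_k: int = 5) -> list[tuple[str, int]]:
    """Rank non-stop-words by total occurrences across all sentences."""
    counts = Counter(
        word
        for sentence in sentences
        for word in re.findall(r"[a-z]+(?:-[a-z]+)*", sentence.lower())
        if word not in STOP_WORDS
    )
    return counts.most_common(top_k)


print(candidate_entities(SAMPLE, top_k=4))
```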

In total, 137 intents were established: 45, 48, and 44 for the three AI userbots, respectively. In the entities section, 58 keyword categories were established: 20, 22, and 16, respectively. Table A1 lists the intents and entities of the three AI userbots.
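These totals can be cross-checked against Table A1, which shows that the three userbots share a common core. In the sketch below the counts are read from the table, while the grouping of intents by name prefix is our own. It also makes explicit that 137 and 58 are sums over userbots: items shared by several userbots are counted once per userbot, so the three userbots use only 22 distinct entity types.

```python
from collections import Counter

# Intent counts per name prefix for AI userbot 3, read from Table A1.
USERBOT3_INTENTS = Counter({
    "Dialog": 6, "Agent": 18, "Greetings": 2,
    "Product_Ownership": 7, "User_Experience": 5, "Product_Preference": 6,
})

intent_counts = {
    # Userbot 1 adds Product_Preference.Price.
    "AI userbot 1": USERBOT3_INTENTS + Counter({"Product_Preference": 1}),
    # Userbot 2 adds Price, Evidence_Function, Alarm_Type, Alarm_Type.Reason.
    "AI userbot 2": USERBOT3_INTENTS + Counter({"Product_Preference": 4}),
    "AI userbot 3": USERBOT3_INTENTS,
}

USERBOT1_ENTITIES = {
    "Benefit", "Consider", "Experience", "EyeCatching", "GotitOrNot", "Holiday",
    "How", "Ideal", "Interest", "Not", "Prefer", "Prize", "Reason",
    "SearchInformation", "SelfDefenseSupplies", "SelfIntroduction", "Size",
    "Types", "When", "Where",
}
entities = {
    "AI userbot 1": USERBOT1_ENTITIES,
    "AI userbot 2": USERBOT1_ENTITIES | {"Avoid", "Recording"},
    "AI userbot 3": USERBOT1_ENTITIES - {"Consider", "EyeCatching", "Holiday", "Types"},
}

intent_totals = {bot: sum(c.values()) for bot, c in intent_counts.items()}
entity_totals = {bot: len(e) for bot, e in entities.items()}
print(intent_totals, sum(intent_totals.values()))  # per userbot, then 137
print(entity_totals, sum(entity_totals.values()))  # per userbot, then 58
print(len(set().union(*entities.values())))        # distinct entity types: 22
```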

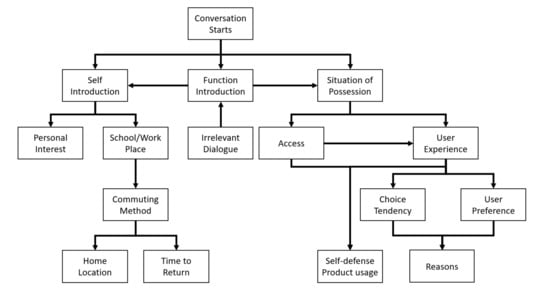

The system also has a flow-based dialog process in the form of suggestion chips. A suggestion chip is a small visual element that appears at the bottom of the Google Assistant user interface; users can tap it instead of speaking a phrase to continue the conversation. When the system cannot match a user's question to an intent in the intent-based process, the user can tap a suggestion chip to trigger the next intent in the flow-based process. In the flow-based mode, users are guided to stay on the topic of designing self-defense products and discussing user needs throughout the conversation, and they can return to the intent-based process at any time by entering other questions. Figure 3 shows the map of the flow-based dialog process, that is, the issues the userbots guided students to discuss.

Figure 3.

Dialog process map of the userbot system.
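The compound dialog mode can be sketched as a small state machine: free text goes through intent matching first, and when nothing matches, the suggestion chips for the current position in the flow map are offered instead. The flow edges below are hypothetical placeholders (Figure 3 defines the real map), and the matcher is passed in as a function so that any intent matcher can be plugged in.

```python
from typing import Callable, Optional

FALLBACK = "Default Fallback Intent"

# Hypothetical flow map: each intent lists the suggestion chips shown after it.
FLOW = {
    "Dialog.Greetings": ["Product_Ownership.Access"],
    "Product_Ownership.Access": ["User_Experience.Experience"],
    "User_Experience.Experience": ["Product_Preference.Ideal"],
    "Product_Preference.Ideal": [],
}


class CompoundDialog:
    """Intent-based matching first; suggestion chips (flow-based) as the fallback path."""

    def __init__(self, matcher: Callable[[str], Optional[str]]) -> None:
        self.matcher = matcher  # returns an intent name, or None if nothing matched
        self.current = "Dialog.Greetings"

    def chips(self) -> list[str]:
        # Intents outside the flow map offer no chips.
        return FLOW.get(self.current, [])

    def on_text(self, utterance: str) -> tuple[str, list[str]]:
        """Free text: try the intent-based mode; on failure keep the flow position."""
        intent = self.matcher(utterance)
        if intent is None:
            return FALLBACK, self.chips()
        self.current = intent
        return intent, self.chips()

    def on_chip(self, intent: str) -> tuple[str, list[str]]:
        """Chip tap: advance along the preset flow."""
        if intent not in self.chips():
            raise ValueError(f"{intent!r} is not offered from {self.current!r}")
        self.current = intent
        return intent, self.chips()
```

Keeping the flow position unchanged after a fallback means the same chips are offered again, so the preset flow is always one tap away while free-text questions still go through intent matching.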

After the intents for each userbot are established, the userbots need to be trained on the Dialogflow platform. During training, all question sentences that have been used, together with their matched intents, are displayed, and the researchers manually mark each pairing as correct or incorrect. If a pairing is wrong, the researchers can specify the correct intent or create a new intent for the question. Training thus strengthens and optimizes intent matching: every corrected pair of question sentence and intent is added to the system as a new sample, so the next time the userbot encounters a similar question, it is more likely to match it to the right intent.
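The bookkeeping side of this review can be sketched as follows. This is a minimal illustration, not Dialogflow's training tool or API: it only shows how reviewed (question, intent) pairs could be folded back into a training set as new sample sentences, including the creation of a new intent. Dialogflow itself retrains its model once new training phrases are added.

```python
FALLBACK = "Default Fallback Intent"


def review(
    training: dict[str, list[str]],
    log: list[tuple[str, str]],
    corrections: dict[str, str],
) -> dict[str, list[str]]:
    """Fold reviewed (question sentence, matched intent) pairs back into the training set.

    `corrections` maps a logged question to the intent it should have matched
    (possibly a new intent). Pairs without a correction are taken as correct.
    Each accepted pair adds the question as a new sample sentence.
    """
    for question, matched in log:
        intent = corrections.get(question, matched)
        if intent == FALLBACK:
            continue  # unmatched and not reassigned: nothing to learn from it
        samples = training.setdefault(intent, [])  # creates a new intent if needed
        if question not in samples:
            samples.append(question)
    return training
```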

2.2. The Proposed App

After the three userbots representing different users of female self-defense products were set up and trained, they could be called through the Google Assistant platform on various computing devices.

2.2.1. Call a Specific AI Userbot

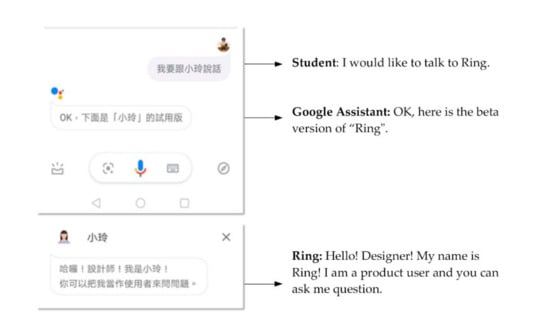

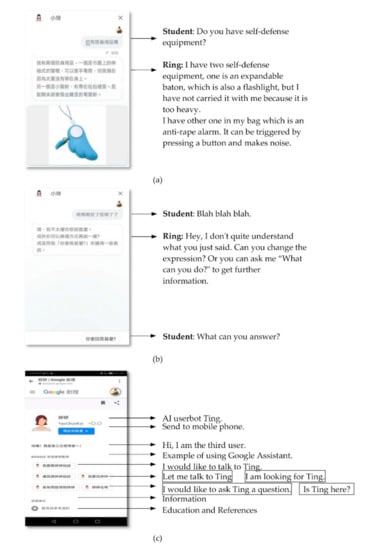

Users install and start the Google Assistant app. Through the app interface, they can use voice or text input to request a dialog with a specific userbot, and Google Assistant opens a new conversation page in which the user talks with the designated userbot. When a userbot is successfully activated, a greeting intent is automatically triggered (Figure 4), so the user can confirm that the dialog with the userbot has started.

Figure 4.

The interface shows that a userbot named “Ring” is activated, and a new conversation dialog page has opened to lead the user in a conversation with userbot Ring.

2.2.2. Start Dialogue

After entering the dialog page, users can ask questions freely, and the system responds according to the matched intent (Figure 5a). Because the three userbots were built on different personas, they did not necessarily give the same answer to the same question. When a user's question did not match any intent in the system, the system triggered the default "fallback" intent: the userbot said that it did not understand the question and asked the user to rephrase it (Figure 5b).

Figure 5.

Dialog interfaces of the user and userbot. (a) The user asked a question to the userbot Ring about its experience in using self-defense products, and the userbot Ring told the user what related products it had used before; (b) userbot Ring did not understand the user’s question and asked the user to ask the same question with a different expression; (c) the user switched the conversation from userbot Ring to userbot Ting, and a sentence with the greeting intent is shown on the interface.
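Persona-specific replies and the fallback behavior described above can be sketched as a response table keyed by intent and userbot. The userbot names come from Figures 4 and 5; the reply texts and the rephrase prompt are hypothetical placeholders rather than the system's actual wording.

```python
FALLBACK = "Default Fallback Intent"
REPHRASE = "Sorry, I didn't understand that. Could you ask in another way?"

# Hypothetical response table: the same intent maps to persona-specific replies.
RESPONSES = {
    "User_Experience.Experience": {
        "Ring": "<Ring's answer, written from its persona>",
        "Ting": "<Ting's answer, written from its persona>",
    },
}


def reply(userbot: str, intent: str) -> str:
    """Pick the active userbot's reply; unmatched or unscripted intents get the rephrase prompt."""
    if intent == FALLBACK:
        return REPHRASE
    return RESPONSES.get(intent, {}).get(userbot, REPHRASE)
```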

During the conversation, a recommended question button (a suggestion chip) appeared at the bottom of the screen, giving users the option of flow-based dialog. Users could either keep asking their own questions or tap a suggested question to ask the userbot.

2.2.3. Switch Userbots

To switch to a different userbot, users give the current userbot an instruction that triggers the "Leaving" intent, which ends the conversation. Google Assistant then returns to the initial page and asks which userbot the user would like to talk to (Figure 5c).
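The switching procedure amounts to tracking one active userbot per session. In the sketch below, "Leaving" is the intent named in the text, while the greeting intent name `Dialog.Greetings` (taken from Table A1) is an assumption about which intent fires on activation; the chooser prompt is a hypothetical placeholder.

```python
from typing import Optional

USERBOTS = {"Ring", "Ting"}  # names shown in Figures 4 and 5; the third userbot is omitted
CHOOSER = "Which userbot would you like to talk to?"


class Session:
    """Tracks the active userbot, following the switching steps described above."""

    def __init__(self) -> None:
        self.active: Optional[str] = None

    def activate(self, name: str) -> str:
        """Start a conversation with a userbot; its greeting intent fires automatically."""
        if self.active is not None:
            raise RuntimeError("trigger the Leaving intent before switching userbots")
        if name not in USERBOTS:
            raise KeyError(name)
        self.active = name
        return "Dialog.Greetings"  # assumed greeting intent name

    def handle(self, intent: str) -> str:
        """Route a matched intent to the active userbot, or end the conversation."""
        if self.active is None:
            return CHOOSER
        if intent == "Leaving":
            self.active = None
            return CHOOSER
        return f"{self.active} answers {intent}"
```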

3. Evaluation

To assess the feasibility of using the AI userbot system in the design process, an exploratory empirical evaluation was conducted in which a group of four college students used the system to discuss concepts for female self-defense products with the userbots.

3.1. Participants and Procedure

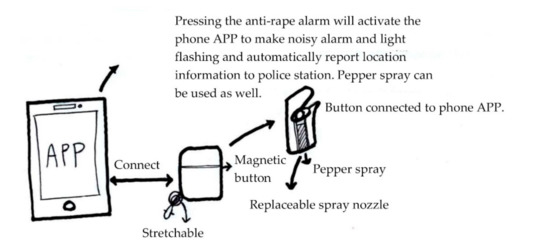

In an ergonomics course, 12 third-year college students with engineering backgrounds took part in a group design workshop and were divided into three groups. One group, consisting entirely of male students, was invited to collaborate with the AI userbots to design female self-defense products. The group worked with the userbots to develop as many design ideas as possible throughout the design process and drew these ideas on A4 paper. The discussions between the students and the chatbots lasted one and a half hours in total. Figure 6 shows one of the solutions generated by the group.

Figure 6.

One of the solutions generated from the student group for a female self-defense product.

3.2. Results

According to data extracted from the system, the students triggered a total of 40 intents, only two of which failed to be matched to a dialog. Both failures occurred because the system did not receive the student's voice clearly, so Google Assistant could not convert the speech to text. No operational errors occurred when students switched between userbots.
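These figures correspond to a match rate of 38 out of 40, or 95%. Because both failures came from speech-to-text conversion rather than intent matching, and the data come from a single group's session, the number is best read as descriptive rather than as an accuracy estimate. The helper name below is illustrative.

```python
def match_rate(triggered: int, unmatched: int) -> float:
    """Fraction of triggered intents that were matched to a dialog."""
    if triggered <= 0:
        raise ValueError("triggered must be positive")
    return (triggered - unmatched) / triggered


print(f"{match_rate(40, 2):.0%}")  # 95%
```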

Based on their discussions with the userbots, the students produced a design concept for a female self-defense product. In the discussions, one userbot expressed concern that attackers might seize the self-defense product from the female user, while the other two expressed the expectation that self-defense products would have warning and attack functions. Considering all of these concerns, the students designed a self-defense product with an attached pepper sprayer. Besides spraying an irritating powder, the product has a button that activates a mobile phone application to emit a high-decibel sound and send the user's current location to an emergency contact. To prevent the equipment from being taken, the students also designed a retractable pull ring for the product.

4. Discussion and Conclusions

Given the importance of user-centered design, students must learn how to identify user needs and turn them into catalysts for creative solutions [1]. Participatory design can produce products that meet user needs by allowing students and users to work together [2,3,4,5,7]. However, it is difficult for users to take part in design education at a teaching site [5]. The present study developed a userbot system based on real user information and AI technology. Students talked with AI userbots in real-time spoken dialogs to explore real female users' product preferences, use experience, and needs for self-defense products, and then collaborated with the userbots to generate design solutions. Based on the development and evaluation of the system, the conclusions of the present study can be summarized as follows:

1. Using AI userbots to represent real-world users.

The userbots' knowledge is based on real users' experiences. The information collected through interviews and persona design is all stored in the database, and Dialogflow's algorithm, together with the training described in Section 2.1.2, lets the system accumulate new information from conversations and correct its responses, making the userbots' responses more complete [26].

2. Combining intent- and flow-based modes to increase the fluency of dialog [14,15,16].

For a chatbot, dialog fluency is an important indicator of user satisfaction. Dialogflow's intent-based algorithm can match students' speech to a high-confidence intent so that the userbot gives a correct response [26]. When no intent matched, the flow-based dialog process allowed the dialog to continue without interruption.

3. Using persona design and interviews to construct the userbots' initial database.

Interviewing real users and building personas keeps the userbots' responses about products consistent with those of real users. When real users hold different views of the same product, multiple userbots can be included in the system to give students multiple perspectives grounded in real user information.

4. Extending the system to other design projects.

The present study used a female self-defense product design project to develop the AI userbots. Userbots can also be built for other design projects through the same process. When the system contains userbots with knowledge of various product uses, their shared characteristics, dialogs, and responses could help other userbots, through AI technology, to increase the accuracy and completeness of their dialogs with students. We plan to build further AI userbots and product design projects for user groups that students are unfamiliar with, need to pay more attention to, and have to collaborate with, such as pregnant women, older adults, and people with disabilities.

5. Future Study

There are two aspects which were not covered in this study, and can be considered in future studies:

- Communication is a two-way interaction. The userbots in this system do not initiate dialog; having them ask students questions in return may encourage students to collaborate with the userbots more actively in the design process.

- In this study, four college students used the system for 1.5 h to collaborate with userbots on a female self-defense product design, and this collaboration produced product solutions, suggesting that the system is effective in this setting. However, the design process includes other activities, such as robust design, modelling, and feasibility testing, so it is important to understand how userbots could interact with students in different design activities over a long-term design process.

In this paper, we described an AI userbot system and discussed the pros and cons of collaboration between students and userbots. To our knowledge, this system is the only educational chatbot developed for engineering design education. The AI userbot system is currently a beta version under review by Google; once it is successfully deployed, it will be released to all community members who use Google Assistant in design education. Various userbots can be constructed for different engineering design projects, and all members can share the outcomes in this system. We hope this study provides a reference for developing educational chatbot systems and for teaching engineering participatory design with AI technology.

Author Contributions

Conceptualization, design, methodology, interpretation, critical revision of the manuscript, and final approval of the manuscript, Y.-H.C.; data collection, formal analysis, and Writing—Original draft preparation C.-K.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Ministry of Science and Technology, Taiwan, grant number MOST 107-2511-H-003-047-MY3, and Ministry of Education, Taiwan, grant number PHA1080053.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

List of intents and entities built with Dialogflow for the three AI userbots.

| AI Userbot | Intents | Entities |
|---|---|---|
| AI userbot 1 | 45 intents: Dialog.Conversation_Starts; Dialog.Function Introduction; Dialog.Irrelevant_Dialogue; Dialog.End_Dialog; Dialog.Greetings; Dialog.Apologize; Agent.Self_Introduction; Agent.Name; Agent.Age; Agent.Home_Location; Agent.Commuting_Method; Agent.School_Place; Agent.Hobby; Agent.Birth_date; Agent.Bad; Agent.Answer_my_question; Agent.Can_you_help; Agent.Chatbot; Agent.Clever; Agent.Crazy; Agent.Funny; Agent.Good; Agent.Happy; Agent.Annoying; Greetings.nice_to_meet_you; Greetings.nice_to_see_you; Product_Ownership.Access; Product_Ownership.Reasons_not_bringing; Product_Ownership.Situation_of_Pessession; Product_Ownership.Details; Product_Ownership.Approaches; Product_Ownership.Differences; Product_Ownership.Convenience; User_Experience.Useful_or_not; User_Experience.Occasion; User_Experience.Experience; User_Experience.placement; User_Experience.Harassment; Product_Preference.Conspicuous; Product_Preference.Choice_Tendency; Product_Preference.Shape; Product_Preference.Type; Product_Preference.Ideal; Product_Preference.Ideal.Reasons; Product_Preference.Price. | 20 entities: Benefit; Consider; Experience; EyeCatching; GotitOrNot; Holiday; How; Ideal; Interest; Not; Prefer; Prize; Reason; SearchInformation; SelfDefenseSupplies; SelfIntroduction; Size; Types; When; Where. |
| AI userbot 2 | 48 intents: Dialog.Conversation_Starts; Dialog.Function Introduction; Dialog.Irrelevant_Dialogue; Dialog.End_Dialog; Dialog.Greetings; Dialog.Apologize; Agent.Self_Introduction; Agent.Name; Agent.Age; Agent.Home_Location; Agent.Commuting_Method; Agent.School_Place; Agent.Hobby; Agent.Birth_date; Agent.Bad; Agent.Answer_my_question; Agent.Can_you_help; Agent.Chatbot; Agent.Clever; Agent.Crazy; Agent.Funny; Agent.Good; Agent.Happy; Agent.Annoying; Greetings.nice_to_meet_you; Greetings.nice_to_see_you; Product_Ownership.Access; Product_Ownership.Reasons_not_bringing; Product_Ownership.Situation_of_Pessession; Product_Ownership.Details; Product_Ownership.Approaches; Product_Ownership.Differences; Product_Ownership.Convenience; User_Experience.Useful_or_not; User_Experience.Occasion; User_Experience.Experience; User_Experience.placement; User_Experience.Harassment; Product_Preference.Conspicuous; Product_Preference.Choice_Tendency; Product_Preference.Shape; Product_Preference.Type; Product_Preference.Ideal; Product_Preference.Ideal.Reasons; Product_Preference.Price; Product_Preference.Evidence_Function; Product_Preference.Alarm_Type; Product_Preference.Alarm_Type.Reason. | 22 entities: Benefit; Consider; Experience; EyeCatching; GotitOrNot; Holiday; How; Ideal; Interest; Not; Prefer; Prize; Reason; SearchInformation; SelfDefenseSupplies; SelfIntroduction; Size; Types; When; Where; Avoid; Recording. |
| AI userbot 3 | 44 intents: Dialog.Conversation_Starts; Dialog.Function Introduction; Dialog.Irrelevant_Dialogue; Dialog.End_Dialog; Dialog.Greetings; Dialog.Apologize; Agent.Self_Introduction; Agent.Name; Agent.Age; Agent.Home_Location; Agent.Commuting_Method; Agent.School_Place; Agent.Hobby; Agent.Birth_date; Agent.Bad; Agent.Answer_my_question; Agent.Can_you_help; Agent.Chatbot; Agent.Clever; Agent.Crazy; Agent.Funny; Agent.Good; Agent.Happy; Agent.Annoying; Greetings.nice_to_meet_you; Greetings.nice_to_see_you; Product_Ownership.Access; Product_Ownership.Reasons_not_bringing; Product_Ownership.Situation_of_Pessession; Product_Ownership.Details; Product_Ownership.Approaches; Product_Ownership.Differences; Product_Ownership.Convenience; User_Experience.Useful_or_not; User_Experience.Occasion; User_Experience.Experience; User_Experience.placement; User_Experience.Harassment; Product_Preference.Conspicuous; Product_Preference.Choice_Tendency; Product_Preference.Shape; Product_Preference.Type; Product_Preference.Ideal; Product_Preference.Ideal.Reasons. | 16 entities: Benefit; Experience; GotitOrNot; How; Ideal; Interest; Not; Prefer; Prize; Reason; SearchInformation; SelfDefenseSupplies; SelfIntroduction; Size; When; Where. |

References

- Endsley, M.R. Designing for Situation Awareness: An Approach to User-Centered Design; CRC Press: Florida, FL, USA, 2016. [Google Scholar]

- Williams, D.J.; Groen, M. User-centred design as a risk management tool. In Risk and Cognition; Mercantini, J.M., Faucher, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; Chapter 5; pp. 107–127.

- Björgvinsson, E.; Ehn, P.; Hillgren, P.A. Design things and design thinking: Contemporary participatory design challenges. Des. Issues 2012, 28, 101–116.

- Sanders, E.B.N. From user-centered to participatory design approaches. In Design and the Social Sciences; Frascara, J., Ed.; CRC Press: London, UK, 2002; Chapter 1; pp. 18–25.

- Wilkinson, C.R.; De Angeli, A. Applying user centred and participatory design approaches to commercial product development. Des. Stud. 2014, 35, 614–631.

- Wu, S.; Ghenniwa, H.; Shen, W. Personal assistant for collaborative design environments. Comput. Ind. 2006, 57, 732–739.

- Lahti, H.; Seitamaa-Hakkarainen, P. Towards participatory design in craft and design education. CoDesign 2005, 1, 103–117.

- Hanson, J.; Percival, J.; Aldred, H.; Brownsell, S.; Hawley, M. Attitudes to telecare among older people, professional care workers and informal carers: A preventative strategy or crisis management? Univers. Access. Inf. Soc. 2007, 6, 193–205.

- Mayhew, D.J. The usability engineering lifecycle. In CHI ’99 Extended Abstracts on Human Factors in Computing Systems; ACM: New York, NY, USA, 1999.

- Björgvinsson, E.; Ehn, P.; Hillgren, P.A. Agonistic participatory design: Working with marginalised social movements. CoDesign 2012, 8, 127–144.

- Benke, I.; Knierim, M.T.; Maedche, A. Chatbot-based emotion management for distributed teams: A participatory design study. Proc. ACM Hum. Comput. Interact. 2020, 4, 118.

- Dokukina, I.; Gumanova, J. The rise of chatbots–new personal assistants in foreign language learning. Procedia Comput. Sci. 2020, 169, 542–546.

- Tsai, M.H.; Chan, H.Y.; Liu, L.Y. Conversation-based school building inspection support system. Appl. Sci. 2020, 10, 3739.

- Kapočiūtė-Dzikienė, J. A domain-specific generative chatbot trained from little data. Appl. Sci. 2020, 10, 2221.

- Quarteroni, S.; Manandhar, S. Designing an interactive open-domain question answering system. Nat. Lang. Eng. 2009, 15, 73–95.

- Singh, A.; Ramasubramanian, K.; Shivam, S. Building an Enterprise Chatbot; Apress: Berkeley, CA, USA, 2019.

- Self, J.; Evans, M.; Kim, E.J. A comparison of digital and conventional sketching: Implications for conceptual design ideation. J. Des. Res. 2016, 14, 171–202.

- Mohan, M.; Shah, J.J.; Narsale, S.; Khorshidi, M. Capturing ideation paths for discovery of design exploration strategies in conceptual engineering design. In Design Computing and Cognition’12; Springer: Berlin/Heidelberg, Germany, 2014; pp. 589–604.

- Huo, K.; Vinayak, K.R.; Ramani, K. Window-shaping: 3D design ideation by creating on, borrowing from, and looking at the physical world. In Proceedings of the Eleventh International Conference on Tangible, Embedded, and Embodied Interaction, Yokohama, Japan, 20–23 March 2017; pp. 37–45.

- Clarizia, F.; Colace, F.; Lombardi, M.; Pascale, F.; Santaniello, D. Chatbot: An education support system for student. In Proceedings of the 10th International Symposium on Cyberspace Safety and Security, Amalfi, Italy, 29–31 October 2018; pp. 291–302.

- D’Aniello, G.; Gaeta, A.; Gaeta, M.; Tomasiello, S. Self-regulated learning with approximate reasoning and situation awareness. J. Ambient Intell. Humaniz. Comput. 2018, 9, 151–164.

- Coniam, D. The linguistic accuracy of chatbots: Usability from an ESL perspective. Text Talk 2014, 34, 545–567.

- Schmulian, A.; Coetzee, S.A. The development of Messenger bots for teaching and learning and accounting students’ experience of the use thereof. Br. J. Educ. Technol. 2019, 50, 2751–2777.

- Tallyn, E.; Fried, H.; Gianni, R.; Isard, A.; Speed, C. The Ethnobot: Gathering ethnographies in the age of IoT. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–13.

- Carlander-Reuterfelt, D.; Carrera, Á.; Iglesias, C.A.; Araque, Ó.; Sánchez-Rada, J.F.; Muñoz, S. JAICOB: A data science chatbot. IEEE Access 2020, 8, 180672–180680.

- Singh, A.; Ramasubramanian, K.; Shivam, S. Introduction to Microsoft Bot, RASA, and Google Dialogflow. In Building an Enterprise Chatbot; Apress: Berkeley, CA, USA, 2019; pp. 281–302.

- Google: Google Assistant. Available online: https://assistant.google.com/ (accessed on 22 October 2020).

- Allen, J. Natural Language Understanding; Pearson: London, UK, 1995.

- Lee, R.S. Natural language processing. In Artificial Intelligence in Daily Life; Springer: Singapore; pp. 157–192.

- Etikan, I.; Musa, S.A.; Alkassim, R.S. Comparison of convenience sampling and purposive sampling. Am. J. Theoret. Appl. Stat. 2016, 5, 1–4.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).