1. Introduction

Many real-world applications in a wide range of scientific domains, such as engineering design, medical treatment, supply chain management, finance, and manufacturing, can be formulated as continuous optimization problems [1,2,3,4,5,6,7,8,9]. Many of these formulations involve some form of uncertainty, and their objective functions contain noise [10,11,12,13]. Moreover, it is sometimes necessary to deal with complex problems with high nonlinearity and/or dimensionality, and occasionally there is no analytical form for the objective function [14]. Even when the objective functions of such problems can be expressed mathematically, in most cases they are not differentiable. Therefore, their gradients cannot be computed, and classical gradient-based optimization methods cannot be applied to them. The situation is much worse when these functions contain high noise levels.

The combination of simulation and optimization has attracted much interest recently, since evaluating the output response of such real-world problems requires simulation techniques. Moreover, optimization problems in stochastic environments are solved by combining simulation-based estimation with an optimization process. Therefore, the term “simulation-based optimization” is commonly used instead of “stochastic optimization” [15,16].

Simulation-based optimization is used to optimize real-world problems under certain types of uncertainty. Four types of uncertainty are discussed in [14]: noise in objective function evaluations; approximation of computationally expensive objective functions with surrogate models; changes or disturbances of design parameters after the optimal solution has been determined; and problems with time-varying objective functions. We consider the first type of uncertainty, where the problem is defined mathematically as follows [17]:

where f is a real-valued function of the objective variables defined on the search space, and the noise enters through a random variable with its own probability density function. Problem (1) is also referred to as the stochastic programming problem, in which random variables appear in the formulation of the objective functions.
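To make the first type of uncertainty concrete, the following minimal sketch estimates a noisy objective by averaging repeated evaluations. The sphere function, the additive Gaussian noise, and the names `noisy_sphere` and `sample_average` are illustrative assumptions and are not taken from the cited formulation.

```python
import random


def noisy_sphere(x, rng, sigma=0.5):
    """Hypothetical noisy objective: sphere function plus additive Gaussian noise."""
    return sum(v * v for v in x) + rng.gauss(0.0, sigma)


def sample_average(func, x, n_samples, rng):
    """Estimate the expected objective at x by averaging n_samples noisy evaluations."""
    return sum(func(x, rng) for _ in range(n_samples)) / n_samples


rng = random.Random(0)
x = [1.0, 2.0]  # the noise-free sphere value at this point is 5.0

# A small sample gives a rough estimate; a large sample gives a tighter one,
# at the price of many more (possibly expensive) function evaluations.
small_estimate = sample_average(noisy_sphere, x, 5, rng)
large_estimate = sample_average(noisy_sphere, x, 5000, rng)
print(small_estimate, large_estimate)
```

The trade-off visible here, accuracy against the number of evaluations, is what makes small sample sizes problematic when each evaluation requires a costly simulation.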

Although choosing optimal simulation parameters is important for improving operation, configuring them well remains a challenge. Because the simulation process is complicated, the objective function is subject to different noise levels and is computationally expensive to evaluate. These problems are characterized by the following difficulties:

The complexity and time necessary to compute the objective function values;

The difficulty of computing the exact gradient of the objective function, as well as its numerical approximation being very expensive;

The noise values in the objective function.

To deal with these difficulties, global search methods should be used instead of classical nonlinear programming methods, which fail on such problems because of their multiple local optima.

Recently, the use of artificial intelligence methods in optimization has attracted great interest. Metaheuristics play a significant role in both real-life simulations and invoking smart methods [18,19,20,21,22,23,24]. Metaheuristics have proven effective across a wide variety of applications. These methods, however, suffer from slow convergence, especially in complex applications, which leads to high computational costs. This slow convergence may result from the exploration structures of such methods, since exploring the search space depends on random structures. In addition, metaheuristics cannot utilize local information to deduce promising search directions. Estimation of Distribution Algorithms (EDAs) comprise a class of evolutionary computation methods [25] and have been widely studied in the global optimization field [26,27,28,29]. Compared with traditional Evolutionary Algorithms (EAs), such as Genetic Algorithms (GAs), this type of algorithm has neither crossover nor mutation operators. Instead, an EDA explicitly builds a probabilistic model by learning the probability distribution of promising solutions in each generation and then samples new solutions from it. Because the probabilistic model captures statistical information about the search space, it is used to guide reproduction toward better solutions.

On the other hand, several search techniques have been designed to tackle the stochastic programming problem. Some of these techniques are known as variable-sample methods [30]. The key aspect of the variable-sample approach is to reformulate the stochastic optimization problem as a deterministic one. A differential evolution variant is proposed in [12], equipped with three new algorithmic components: a central tendency-based mutation, an adopted blending crossover, and a new distance-based selection mechanism. To deal with the noise, that algorithm uses non-conventional mutation strategies. In [31], an extension of multi-objective optimization based on a differential evolution algorithm is proposed to manage the effect of noise in objective functions. That method applies an adaptive range of the sample size for estimating the fitness values. In [32], instead of using averages, the search policy considers the distribution of noisy samples during the fitness evaluation process. A number of different approaches to dealing with noise are presented in [33]. Most sampling methods are based on the use of averages, which motivates us to use different sampling techniques. One possible sampling alternative is the use of fuzzy logic, which is an important pillar of computational intelligence. The idea of the fuzzy set was first introduced in [34]; it allows a member to belong to a set partially, as opposed to definitely, as in classical set theory. In other words, membership can be assigned a value within the interval [0, 1] instead of the set {0, 1}. Over the past four decades, the theory of fuzzy random variables [35] has been developed via a large number of studies in the area of fuzzy stochastic optimization [36,37]. The noisy part of our problem can be considered to be randomness or fuzziness, so it can be understood as a fuzzy stochastic problem of the kind found in the literature [38,39,40,41,42].
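The contrast between classical and fuzzy membership can be illustrated with a short sketch. The triangular shape used below is only one common choice of membership function and is an assumption made for illustration; it is not the fuzzy sampling technique proposed in this paper, and the function names are hypothetical.

```python
def crisp_membership(x, low, high):
    """Classical set: x either belongs to [low, high] (1) or does not (0)."""
    return 1 if low <= x <= high else 0


def triangular_membership(x, left, peak, right):
    """Fuzzy set: degree of membership in [0, 1], assuming left < peak < right."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)   # rising edge
    return (right - x) / (right - peak)     # falling edge


# Points near the peak belong strongly; points near the edges belong weakly.
for value in (0.0, 2.5, 5.0, 7.5, 10.0):
    print(value, crisp_membership(value, 0.0, 10.0),
          triangular_membership(value, 0.0, 5.0, 10.0))
```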

In this paper, EDAs are used to solve nonlinear optimization problems that contain noise. The proposed EDA-based methods follow the class of EDAs proposed in [43]. The designed EDA model is first combined with variable-sample methods (SPRS) [30]. Sampling techniques have a serious problem when the sample size is small, since estimates of noisy objective function values are inaccurate in these cases. Therefore, we propose a new sampling technique based on fuzzy systems to deal with small sample sizes, and a second EDA-based method uses this fuzzy sampling technique. Moreover, additive versions of the proposed methods are developed to optimize functions without noise in order to evaluate different efficiency levels of the proposed methods. To test the performance of the proposed methods, numerical experiments were carried out using several benchmark test functions. In addition, three real-world applications are considered to assess the performance of the proposed methods.

The rest of the paper is structured as follows. In Section 2, we highlight the main structure and techniques for EDAs. The design elements and proposed methods are stated in Section 3. In Section 4, algorithmic implementations of the proposed methods and numerical experiments are discussed. The results for three stochastic programming applications are presented in Section 5. Finally, the paper is concluded in Section 6.

2. Estimation of Distribution Algorithms

EDAs were first introduced in [44] as a new population-based method and have been extensively studied in the field of global optimization [26,44]. Although EDAs were first proposed for combinatorial optimization, many studies have applied them to continuous optimization. The primary difference between EDAs lies in how the probabilistic model is built. Generally, in continuous optimization there are two main branches: one is based on the Gaussian distribution model [25,26,45,46,47,48,49,50,51], and the other on the histogram model [47,52,53,54,55,56,57,58]. The first is the most widely used and has been studied extensively. The main steps of general EDAs are stated in Algorithm 1.

| Algorithm 1 Pseudo-code for EDA approach |
| 1: |
| 2: Generate and evaluate M random individuals (the initial population). |
| 3: repeat |
| 4: Select individuals from the current population according to a selection method. |
| 5: Estimate the joint probability distribution of the selected individuals. |
| 6: Generate M individuals using the estimated distribution, and evaluate them. |
| 7: |
| 8: until a stopping criterion is met. |
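The loop in Algorithm 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method proposed in this paper: it uses truncation selection, an independent (univariate) Gaussian model per variable, a fixed generation budget as the stopping criterion, and a noise-free sphere test function. The names `gaussian_eda`, `n_select`, and `bounds` are hypothetical.

```python
import random
import statistics


def sphere(x):
    """Simple noise-free test function with its minimum at the origin."""
    return sum(v * v for v in x)


def gaussian_eda(func, dim, pop_size=100, n_select=30, generations=50,
                 bounds=(-5.0, 5.0), seed=0):
    rng = random.Random(seed)
    # Generate and evaluate the initial population of pop_size individuals.
    population = [[rng.uniform(*bounds) for _ in range(dim)]
                  for _ in range(pop_size)]
    best = min(population, key=func)
    for _ in range(generations):  # stopping criterion: fixed generation budget
        # Select the n_select best individuals (truncation selection).
        selected = sorted(population, key=func)[:n_select]
        # Estimate an independent Gaussian for each variable.
        means = [statistics.fmean(ind[i] for ind in selected)
                 for i in range(dim)]
        stds = [statistics.pstdev([ind[i] for ind in selected]) + 1e-12
                for i in range(dim)]
        # Sample a new population from the estimated distribution.
        population = [[rng.gauss(means[i], stds[i]) for i in range(dim)]
                      for _ in range(pop_size)]
        candidate = min(population, key=func)
        if func(candidate) < func(best):
            best = candidate
    return best, func(best)


best_point, best_value = gaussian_eda(sphere, dim=3)
print(best_point, best_value)
```

Replacing the per-variable estimates with a full mean vector and covariance matrix would give a multivariate Gaussian variant of the same loop.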

When a Gaussian distribution model is adopted in Algorithm 1, the joint distribution takes the form of a normal density with a mean vector and a covariance matrix. The earliest proposed EDAs were based on simple univariate Gaussian distributions, such as the Marginal Distribution Algorithm for continuous domains ($\mathrm{UMDA}_c$) and Population-Based Incremental Learning for continuous domains ($\mathrm{PBIL}_c$) [26,45]. In these, all variables are taken to be completely independent of each other, so the joint density function factorizes into a product of univariate densities, each with its own set of local parameters. Such models are simple and easy to implement with a low computational cost, but they fail on problems with highly dependent variables. To address this, many EDAs based on multivariate Gaussian models have been proposed, which adopt the conventional maximum likelihood-estimated multivariate Gaussian distribution, such as Normal IDEA [46,47], EMNA [26], and EGNA [25,26]. These methods perform similarly, since they are based on the same multivariate Gaussian distribution, and there is no significant difference between them [26]. However, these methods take the dependence between variables into account, so they have a poor exploitative ability, and the computational cost increases exponentially with the problem size [59]. To address this problem, various extensions of these methods have been introduced, which scale the covariance matrix after its maximum likelihood estimation according to certain criteria to improve the exploration quality. This has been done in methods such as EEDA [48], SDR-AVS-IDEA [50], and CT-AVS-IDEA [49].
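For reference, the two Gaussian model families discussed above can be written in their standard textbook forms. The notation is assumed here for illustration and may differ from that of the cited works: $n$ is the number of variables, $\mu_i$ and $\sigma_i$ are the per-variable mean and standard deviation, and $\boldsymbol{\mu}$ and $\Sigma$ are the mean vector and covariance matrix.

```latex
% Univariate (fully independent) Gaussian model
p(\mathbf{x}) = \prod_{i=1}^{n} \frac{1}{\sigma_i \sqrt{2\pi}}
  \exp\!\left( -\frac{(x_i - \mu_i)^2}{2\sigma_i^2} \right)

% Multivariate Gaussian model
p(\mathbf{x}) = \frac{1}{\sqrt{(2\pi)^n \det \Sigma}}
  \exp\!\left( -\tfrac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^{\top}
  \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right)
```

The first form is the special case of the second in which $\Sigma$ is diagonal with entries $\sigma_i^2$.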

The EDA with Model Complexity Control (EDA-MCC) method was introduced to control the high complexity of the multivariate Gaussian model without losing the dependence between variables [43]. Although the univariate Gaussian model has a simple structure and limited computational cost, it has difficulty solving nonseparable problems. On the other hand, the multivariate Gaussian model can solve nonseparable problems, but it usually has difficulty as a result of its complexity and cost. In the EDA-MCC method, the advantages of the univariate and multivariate Gaussian models are combined according to certain criteria and by applying two main strategies:

5. Stochastic Programming Applications

In this section, we investigate the strength of the proposed methods in solving real-world problems. To this end, the FSEDA and ASEDA methods were applied to three real stochastic programming applications:

The product mix (PROD-MIX) problem [76,77];

The modified production planning Kall and Wallace (KANDW3) problem [77,78];

The two-stage optimal capacity investment Louveaux and Smeers (LANDS) problem [77,79].

These applications are constrained stochastic programming problems. Therefore, the penalty methodology [80] was used to transform these constrained problems into a series of unconstrained ones. The solutions of these unconstrained problems are assumed to converge to the solution of the corresponding constrained problem.
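The idea of turning a constrained problem into an unconstrained one can be sketched as follows. The quadratic exterior penalty used here is one common variant and is an assumption; the source does not state which penalty form from [80] was used. The function `penalized` and the toy problem are hypothetical.

```python
def penalized(objective, constraints, penalty):
    """Build an unconstrained function from an objective and inequality constraints.

    Each constraint g is treated as satisfied when g(x) <= 0. Violations are
    squared, summed, and weighted by the penalty parameter.
    """
    def wrapped(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + penalty * violation
    return wrapped


# Hypothetical toy problem: minimize x0 + x1 subject to x0 >= 1 and x1 >= 2.
def toy_objective(x):
    return x[0] + x[1]


toy_constraints = [
    lambda x: 1.0 - x[0],  # x0 >= 1 rewritten as 1 - x0 <= 0
    lambda x: 2.0 - x[1],  # x1 >= 2 rewritten as 2 - x1 <= 0
]

f = penalized(toy_objective, toy_constraints, penalty=1000.0)
print(f([1.0, 2.0]))  # feasible point: no penalty is added
print(f([0.0, 2.0]))  # infeasible point: the violation is penalized
```

Any unconstrained optimizer, including an EDA, can then be applied to the wrapped function, with larger penalty values pushing the search toward feasible solutions.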

To solve these problems, the proposed EDA-based methods were used with the parameters in Table 1, except the population size, which was adjusted to

. The penalty parameter was set to

. The algorithms were terminated when they reached

function evaluations.

5.1. PROD-MIX Problem

This problem assumes that a furniture shop has two workstations: the first workstation is for carpentry and the other for finishing. The furniture shop has four products. Each product i consumes a certain number of man-hours at workstation j, with man-hours being limited at each workstation j. If the required man-hours exceed the limit at workstation j, the shop must purchase additional man-hours from outside. Each product earns a certain profit. The objective is to maximize the total profit of the shop while minimizing the cost of purchased man-hours.
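The structure described above, profit from products minus the cost of man-hours purchased when a workstation is overloaded, can be sketched as a sample-average estimate. All numbers, distributions, and names below are hypothetical placeholders chosen for illustration; they are not the PROD-MIX data from [76,77], and the sign convention (cost minus profit, to be minimized) is an assumption.

```python
import random


def prod_mix_cost(x, profit, hour_cost, sample_hours, sample_capacity,
                  n_samples, rng):
    """Sample-average estimate of (purchased man-hour cost - profit) for quantities x."""
    total_recourse = 0.0
    for _ in range(n_samples):
        for j in range(len(hour_cost)):
            # Man-hours needed at workstation j for this random scenario.
            needed = sum(sample_hours(i, j, rng) * x[i] for i in range(len(x)))
            # Hours beyond the random capacity must be purchased from outside.
            shortfall = max(0.0, needed - sample_capacity(j, rng))
            total_recourse += hour_cost[j] * shortfall
    revenue = sum(p * q for p, q in zip(profit, x))
    return total_recourse / n_samples - revenue


# Hypothetical data: four products, two workstations.
profit = [10.0, 12.0, 8.0, 15.0]
hour_cost = [5.0, 10.0]


def sample_hours(i, j, rng):
    """Random man-hours per unit of product i at workstation j (placeholder)."""
    return rng.uniform(0.8, 1.2)


def sample_capacity(j, rng):
    """Random available man-hours at workstation j (placeholder)."""
    return rng.gauss(100.0, 10.0)


rng = random.Random(0)
estimate = prod_mix_cost([20.0, 20.0, 20.0, 20.0], profit, hour_cost,
                         sample_hours, sample_capacity, 1000, rng)
print(estimate)
```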

5.1.1. The Mathematical Formulation of the PROD-MIX Problem

The formal description of the PROD-MIX Problem can be defined as follows [76,77]. The required values for parameters and constants are also expressed.

| i | The product class. |
| j | The workstation. |
| | The quantities of product (decision variables). |
| | The outside purchased man-hours for workstation j. |
| | The profit per product unit at class i. |
| | The man-hour cost for workstation j. |
| | Random man-hours at workstation j per unit of product class i. |
| | Random available man-hours at workstation j. |

Therefore, the objective function for the PROD-MIX Problem can be expressed as

5.1.2. Results of the PROD-MIX Problem

The FSEDA method found a new solution with value

and the decision variable values

The best known value for this problem is

[76,77].

Figure 3 compares the performance of the ASEDA and FSEDA methods. The figure shows that the FSEDA method performed better in terms of reaching the optimal solution.

5.2. The KANDW3 Problem

In the KANDW3 Problem [77,78], a refinery makes J different products by blending I raw materials. In each period t, the refinery processes a quantity of each raw material i, at a given cost, to meet the product demands. Each product j requires a certain amount of raw material i, and the raw materials are held in an inventory of limited capacity. If the refinery does not satisfy the demands in period t, it must outsource a quantity y of the product at cost h. The main objective is to satisfy the demand completely at minimum cost.

5.2.1. The Mathematical Formulation of the KANDW3 Problem

The formal description of the KANDW3 Problem can be defined as follows [77,78]. The required values for parameters and constants are also expressed.

| i | The materials. |
| j | The products. |
| t | The time periods. |
| | The quantity of material i in period t (decision variables). |
| | The quantity of outsourced product j in period t. |
| | The cost of raw material i. |
| | The amount of raw material i required per unit of product j. |
| | The cost of outsourced product j in period t. |
| b | The capacity of the inventory. |
| | Random demands of product j in period t. |

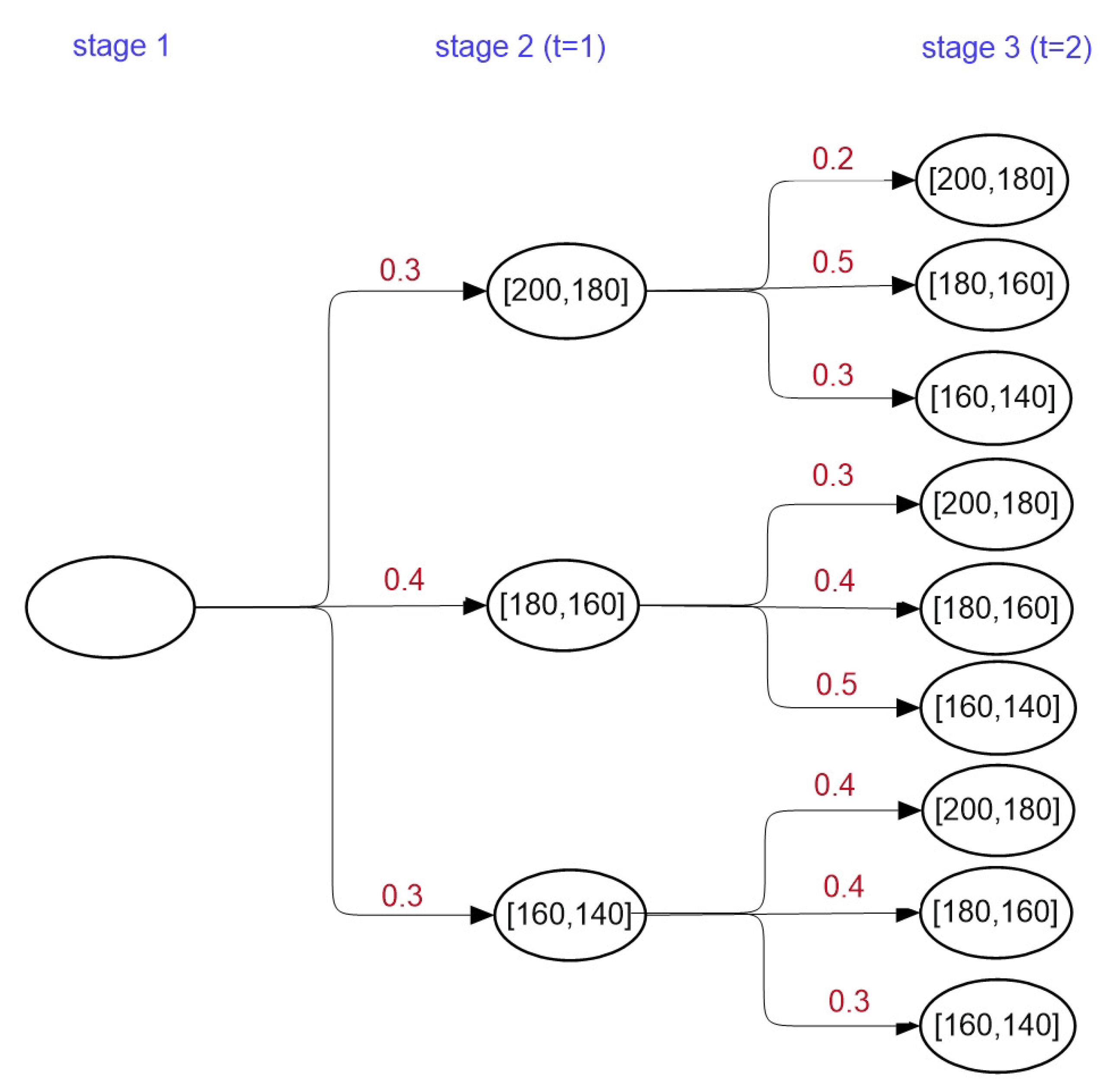

The values of the demands can be obtained from Figure 4. The objective function for the KANDW3 Problem [77,78] can be expressed as

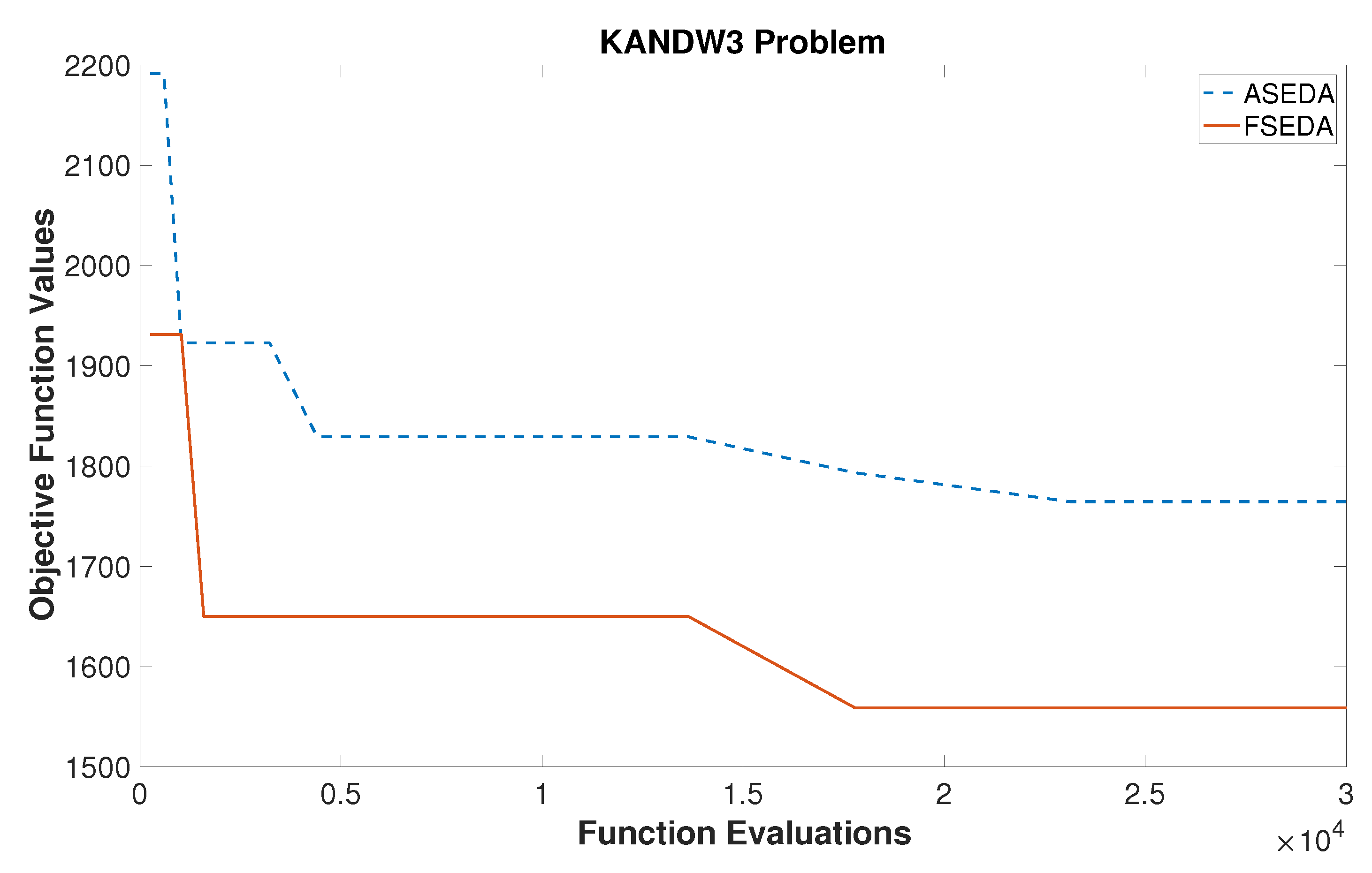

5.2.2. Results of KANDW3 Problem

The FSEDA method found the objective function value

with the decision variable values

The best known value for the KANDW3 Problem is

, as mentioned in [78]. Therefore, the proposed method found a new minimal value for the KANDW3 Problem. The comparison between the ASEDA and FSEDA methods is shown in Figure 5. In this figure, the FSEDA method provided better solutions than the ASEDA method.

5.3. The LANDS Problem

Power plants are the key issue in the LANDS Problem [77,79]. Assume that there are four types of power plants, which can be operated in three different modes to meet the electricity demands. Each power plant i runs at some operating level in mode j, at a given cost, to satisfy the demands. The budget b is a constraint that limits the total cost. The main objective is to determine the optimal capacity investment in each power plant i.

5.3.1. The Mathematical Formulation of the LANDS Problem

The formal description of the LANDS Problem can be defined as follows [77,79]. The required values for parameters and constants are also expressed.

| i | The power plant type. |
| j | The operating mode. |
| | The capacity of power plant i (decision variable). |
| | The operating level of power plant i in mode j. |
| | The unit cost of capacity installed for plant type i. |
| | The unit cost of operating level of power plant i in mode j. |
| m | The minimum total installed capacity. |
| b | The available budget for capacity installation. |
| | Random power demands in mode j, which depend on a random quantity that takes the values 3.0, 5.0, or 7.0 with probabilities 0.3, 0.4, and 0.3, respectively. |

Therefore, the objective function for the LANDS Problem [77,79] can be expressed as

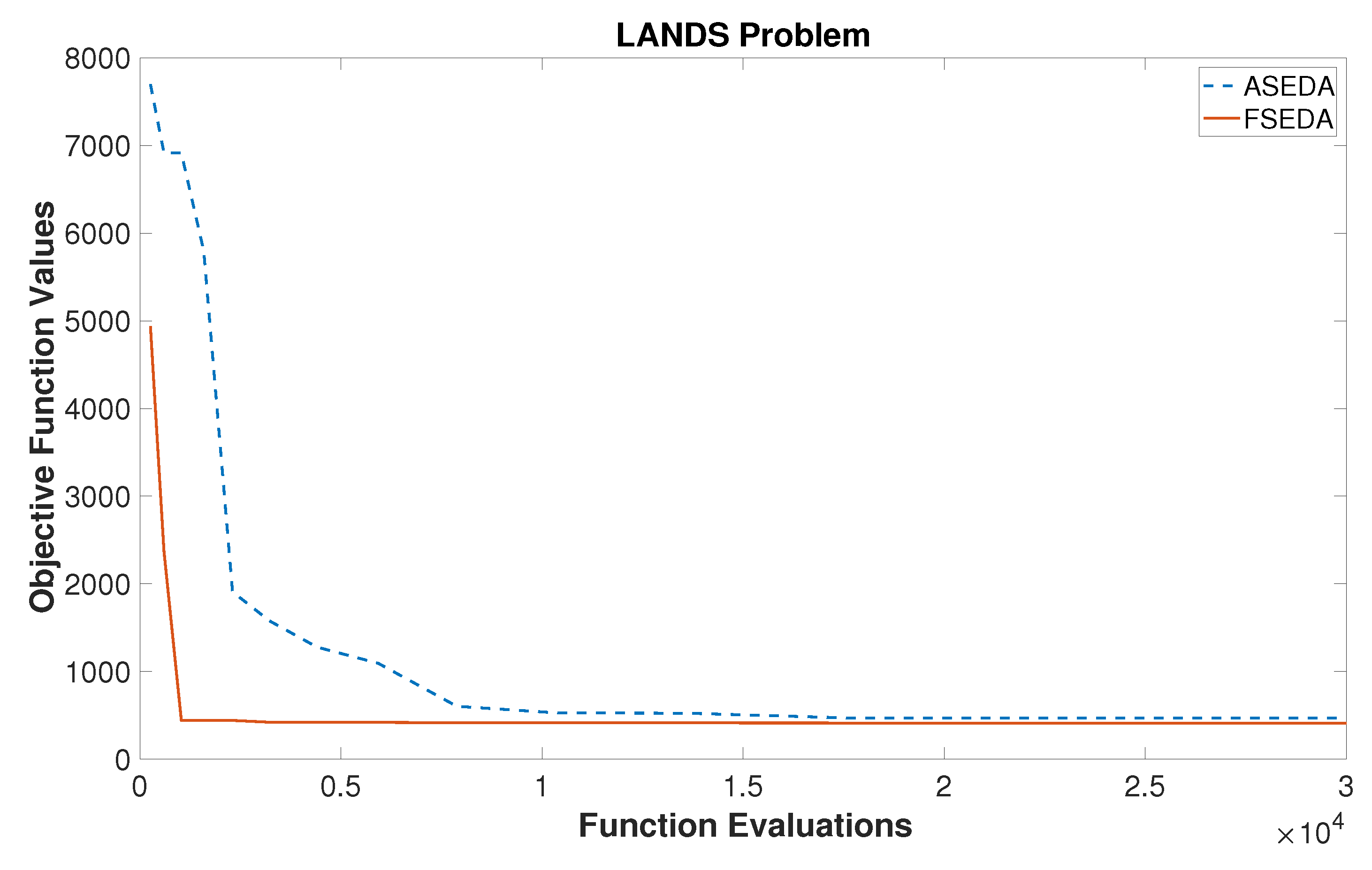

5.3.2. Results of the LANDS Problem

The objective function value

was obtained by the FSEDA method with the decision variables

The best known function value for this problem is

, which is presented in [79].

Figure 6 presents the comparison between the FSEDA and ASEDA performance for the LANDS Problem. In Figure 6, the FSEDA method reached the best solution faster than the ASEDA method.