Degradation State Recognition of Piston Pump Based on ICEEMDAN and XGBoost

Abstract

1. Introduction

2. Theoretical Background

2.1. Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (ICEEMDAN) Model

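- a. Add white noise to the original signal $x$ to obtain the noisy realizations $x^{(i)} = x + \beta_0 E_1(w^{(i)})$, $i = 1, \dots, I$, where $w^{(i)}$ represents the $i$-th white noise realization to be added, $E_k(\cdot)$ is the operator that returns the $k$-th mode obtained by EMD, and $\beta_0$ controls the noise amplitude.

- b. Use EMD to calculate the local mean of each $x^{(i)}$ and average these local means to obtain the first residue $r_1 = \langle M(x^{(i)}) \rangle$, where $M(\cdot)$ is the operator that returns the local mean of a signal and $\langle \cdot \rangle$ denotes averaging over the $I$ realizations; the first IMF is then $\mathrm{IMF}_1 = x - r_1$.

- c. The second mode component is $\mathrm{IMF}_2 = r_1 - r_2$, where $r_2 = \langle M(r_1 + \beta_1 E_2(w^{(i)})) \rangle$.

- d. Similarly, the $k$-th IMF is $\mathrm{IMF}_k = r_{k-1} - r_k$, where $r_k = \langle M(r_{k-1} + \beta_{k-1} E_k(w^{(i)})) \rangle$, for $k = 3, 4, \dots$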
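The recursion in steps a–d can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: to stay self-contained it assumes a simplified local-mean operator $M(\cdot)$ (the mean of linearly interpolated upper and lower envelopes from a single sifting pass, rather than cubic-spline envelopes with full sifting), approximates $E_k(\cdot)$ by applying that operator repeatedly to the noise, and uses the assumed noise-scaling rule $\beta_0 = \varepsilon\,\sigma(x)/\sigma(E_1(w^{(i)}))$ and $\beta_{k-1} = \varepsilon\,\sigma(r_{k-1})$ with $\varepsilon = 0.2$. The function names `local_mean`, `noise_mode`, and `iceemdan` are hypothetical.

```python
import numpy as np


def local_mean(y):
    """Simplified M(.): mean of linearly interpolated upper/lower envelopes.

    Assumption: linear (not cubic-spline) envelopes and a single sifting pass,
    with the two end points treated as both maxima and minima.
    """
    y = np.asarray(y, dtype=float)
    n = y.size
    t = np.arange(n)
    inner = y[1:-1]
    maxima = np.flatnonzero((inner > y[:-2]) & (inner >= y[2:])) + 1
    minima = np.flatnonzero((inner < y[:-2]) & (inner <= y[2:])) + 1
    if maxima.size < 2 or minima.size < 2:
        return y.copy()  # too few extrema: treat the signal as a trend
    maxima = np.concatenate(([0], maxima, [n - 1]))
    minima = np.concatenate(([0], minima, [n - 1]))
    upper = np.interp(t, maxima, y[maxima])
    lower = np.interp(t, minima, y[minima])
    return 0.5 * (upper + lower)


def noise_mode(w, k):
    """Simplified E_k(.): k-th mode of w built from repeated local means."""
    r = np.asarray(w, dtype=float)
    for _ in range(k - 1):
        r = local_mean(r)  # strip the first k-1 modes
    return r - local_mean(r)


def iceemdan(x, n_modes=4, n_realizations=50, eps=0.2, seed=0):
    """Return (imfs, residue) following steps a-d; eps and beta rule are assumptions."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    noises = rng.standard_normal((n_realizations, x.size))  # w^(i)
    r_prev = x  # r_0 = x, so step k=1 reproduces steps a and b
    imfs = []
    for k in range(1, n_modes + 1):
        local_means = []
        for w in noises:
            e_k = noise_mode(w, k)
            if k == 1:
                beta = eps * x.std() / (e_k.std() + 1e-12)  # beta_0
            else:
                beta = eps * r_prev.std()  # beta_{k-1}
            local_means.append(local_mean(r_prev + beta * e_k))
        r_k = np.mean(local_means, axis=0)  # r_k = <M(r_{k-1} + beta E_k(w))>
        imfs.append(r_prev - r_k)  # IMF_k = r_{k-1} - r_k
        r_prev = r_k
    return np.array(imfs), r_prev
```

Because each $\mathrm{IMF}_k = r_{k-1} - r_k$, the modes telescope: for a test signal such as `x = np.sin(2*np.pi*50*t) + 0.5*np.sin(2*np.pi*5*t)`, `imfs.sum(axis=0) + residue` reproduces `x` up to floating-point error, which is a quick sanity check on any ICEEMDAN implementation.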

2.2. eXtreme Gradient Boosting (XGBoost) Classifier Design

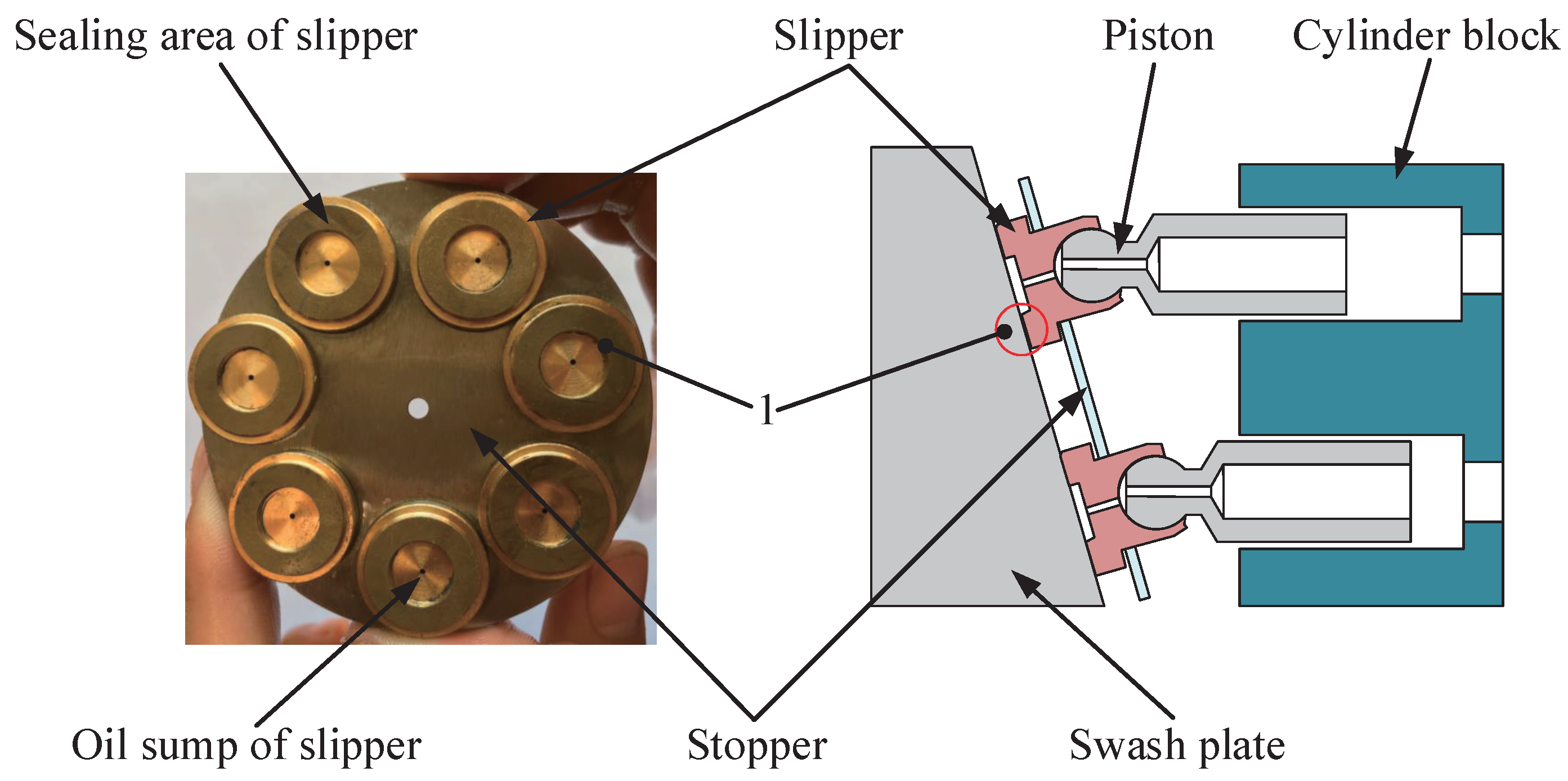

3. Performance Degradation Test of Piston Pump

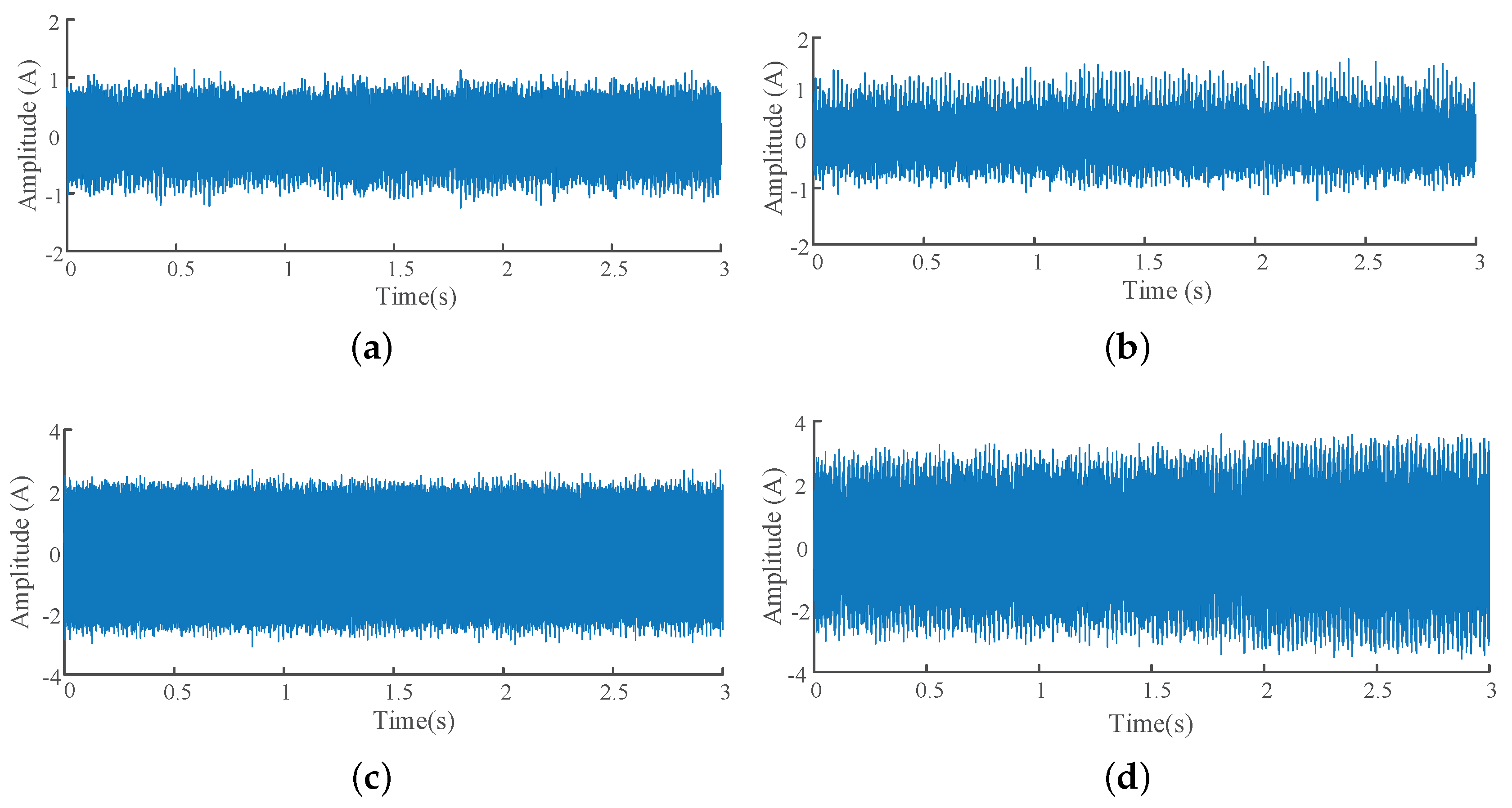

4. Test Data Processing of the Vibration Signal

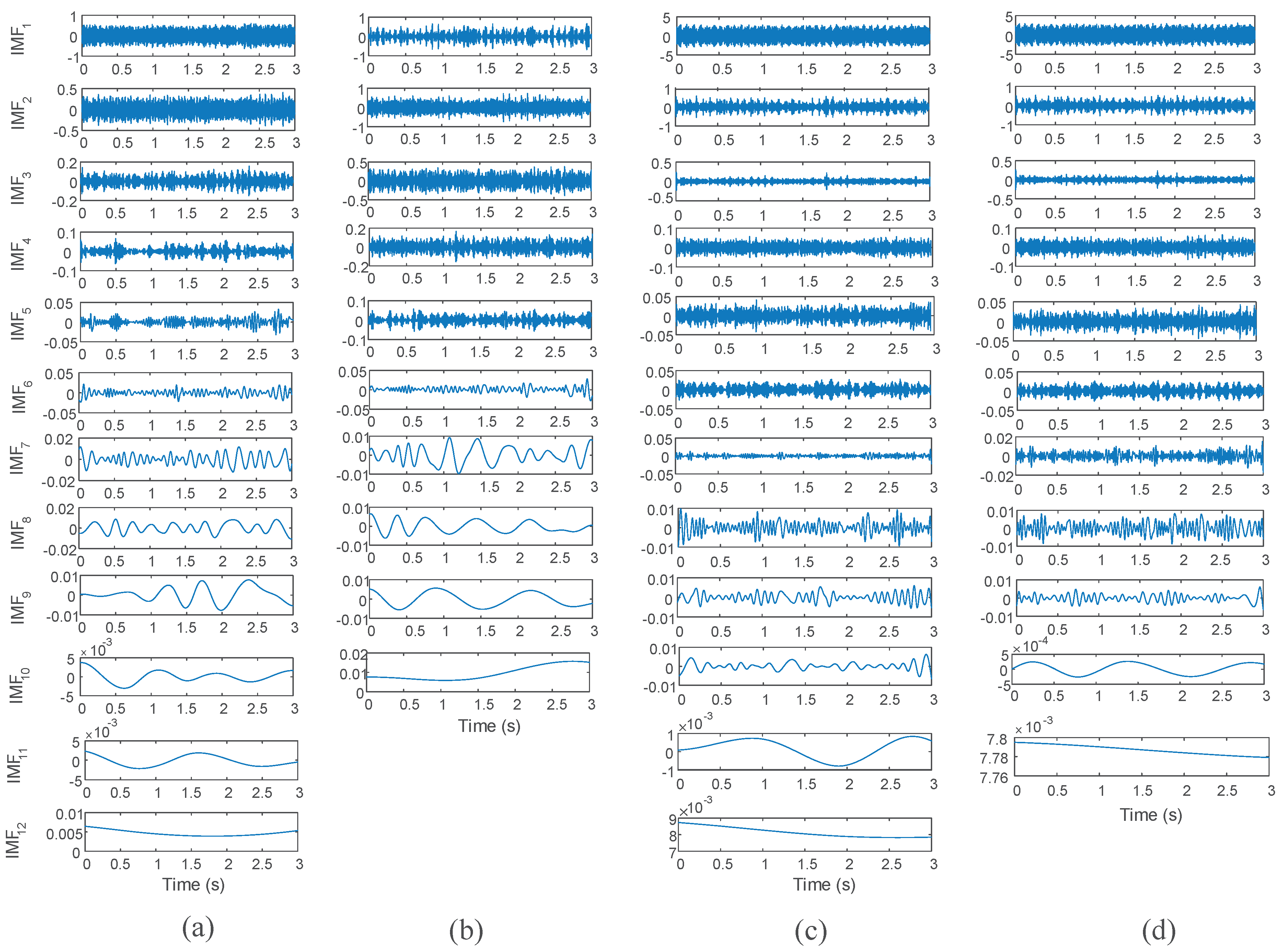

4.1. Pre-Processing of the Vibration Signal by ICEEMDAN

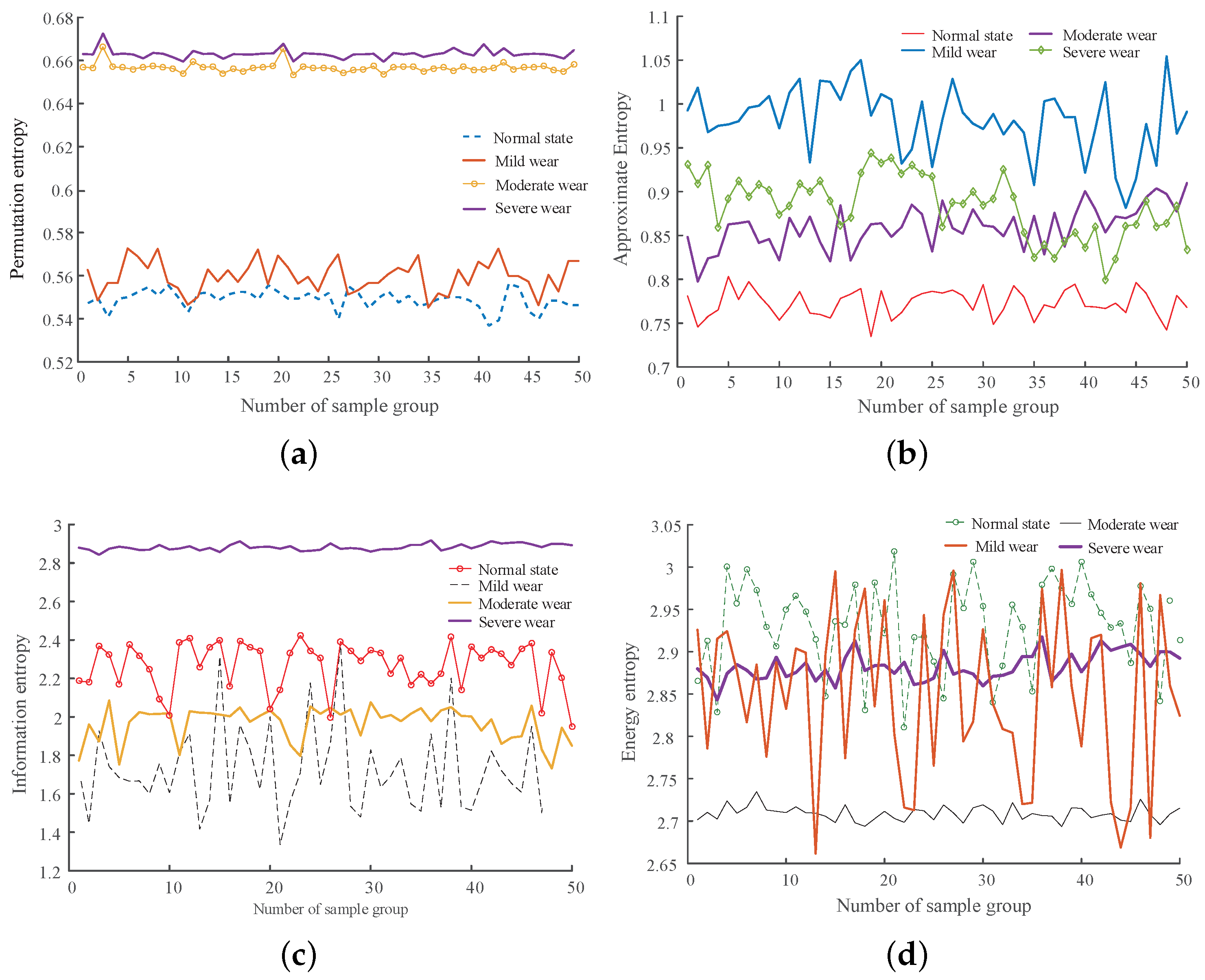

4.2. Multi-Domain Feature Selection

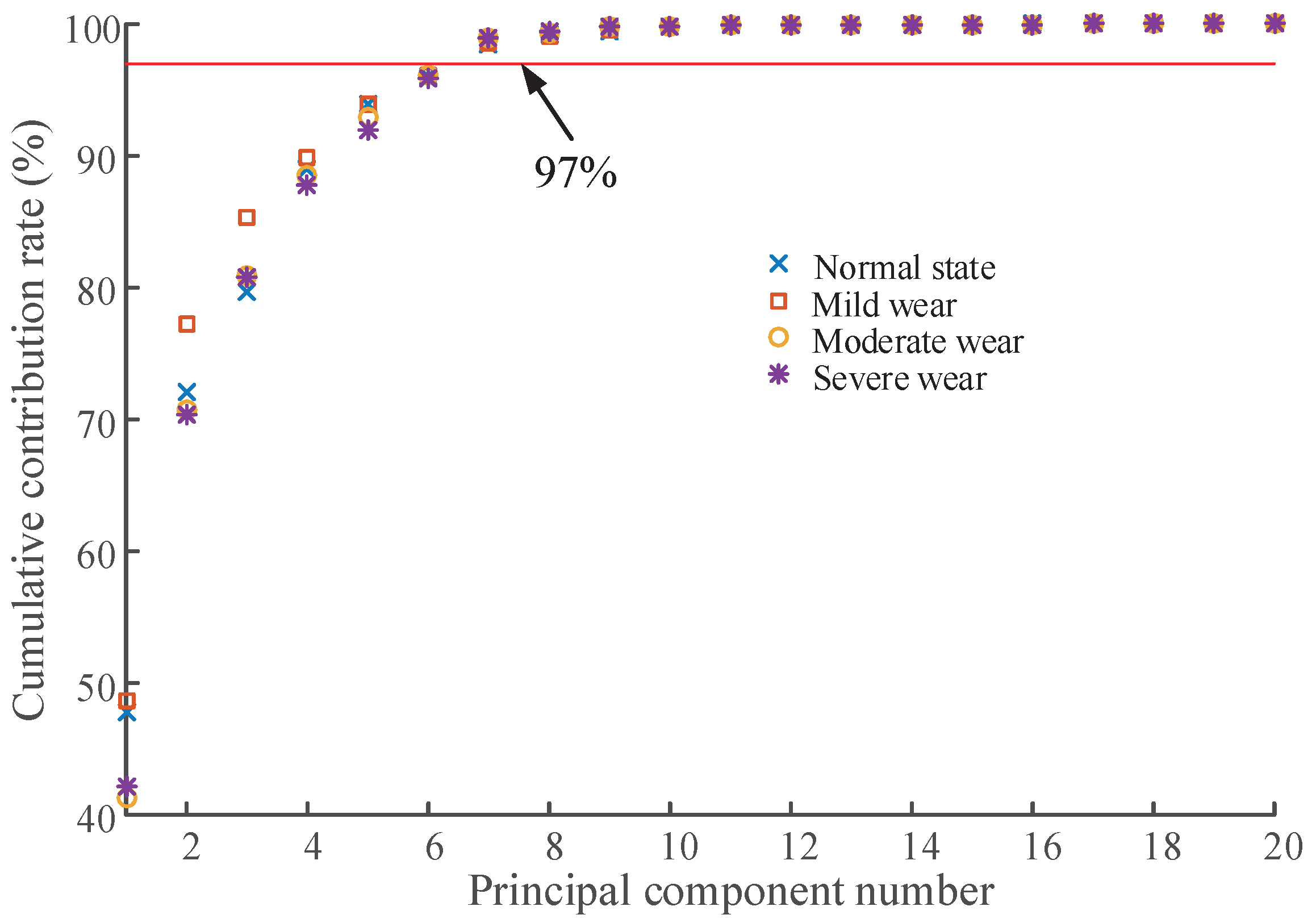

4.3. Feature Filtering by PCA

5. Degradation State Recognition with XGBoost

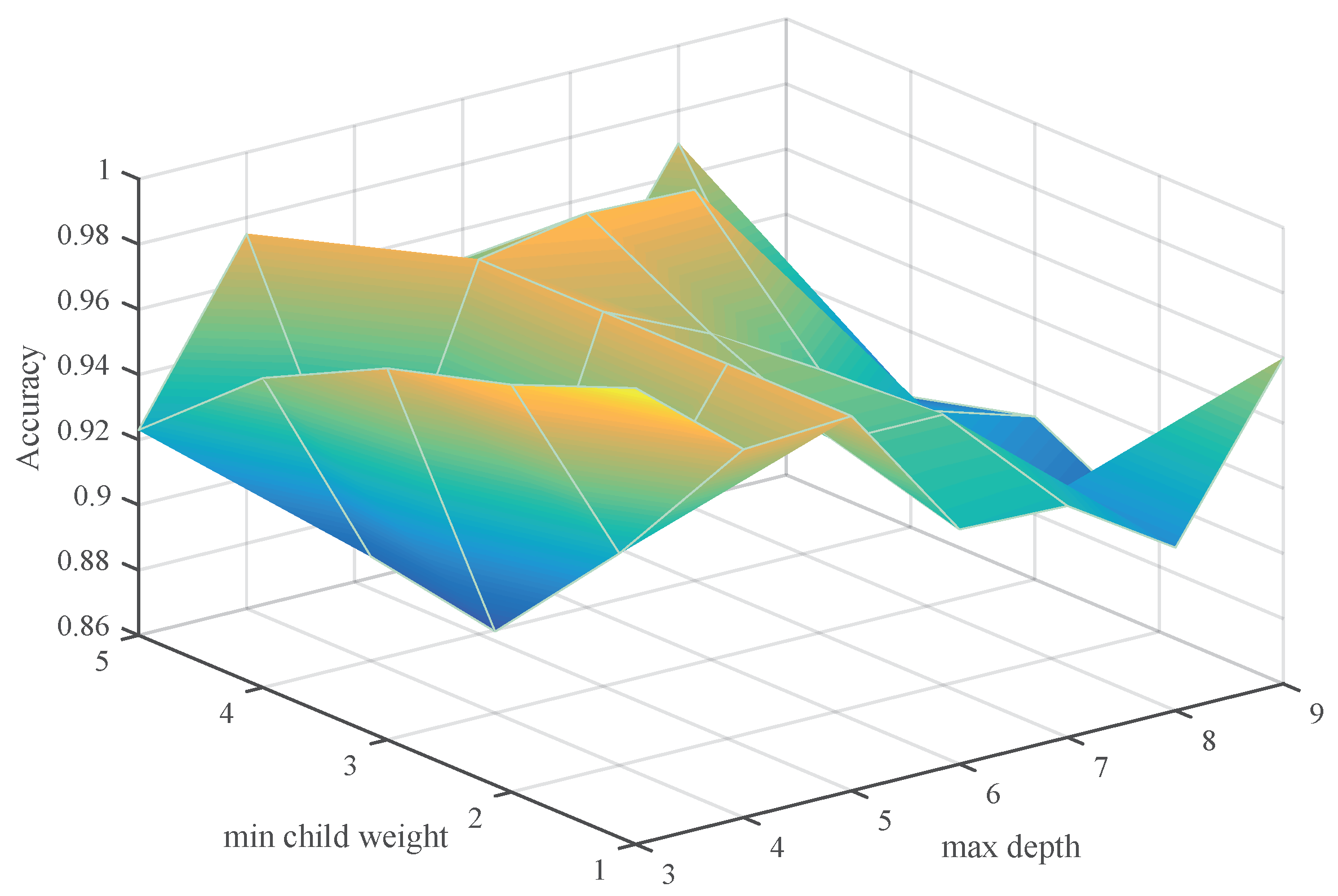

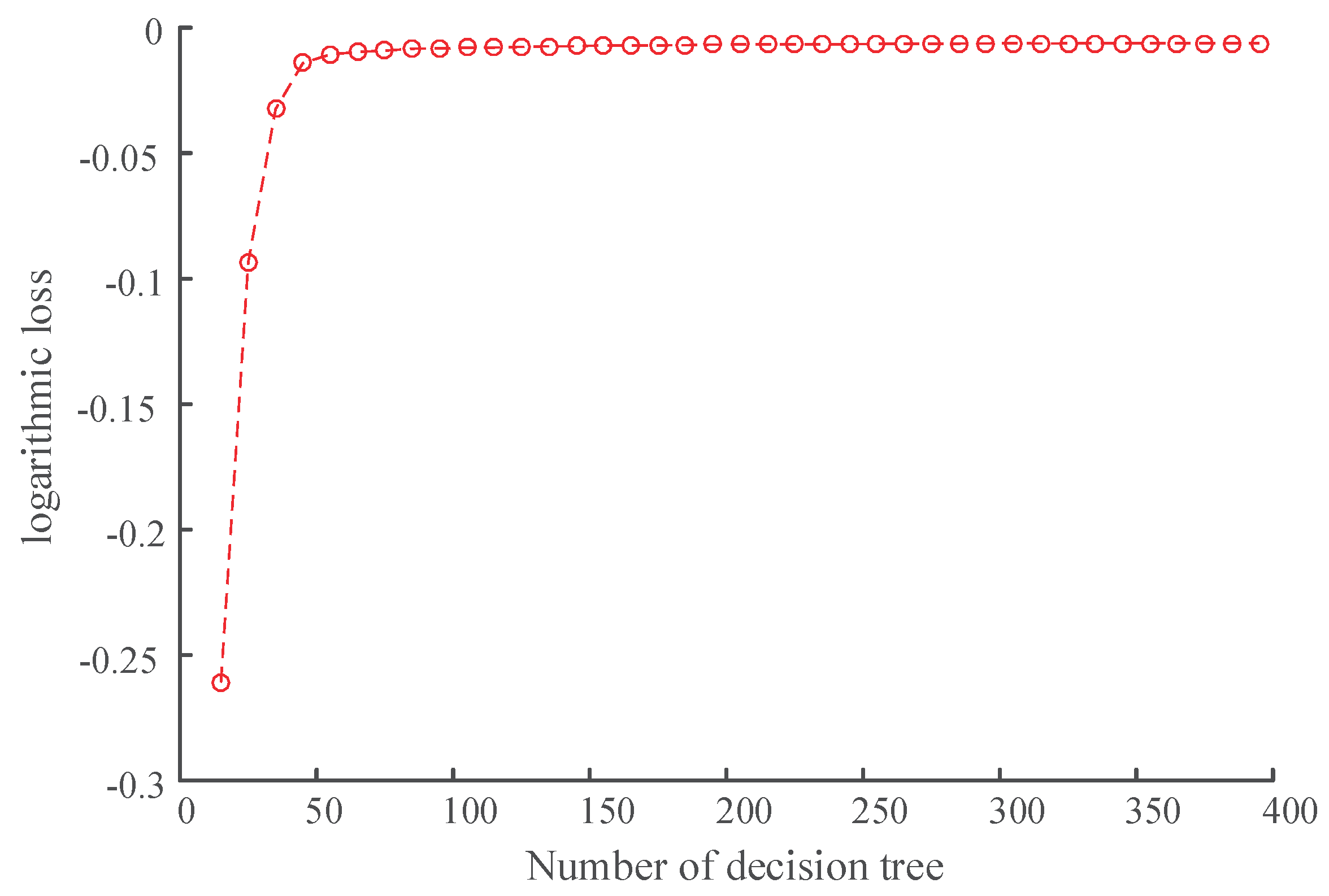

5.1. Parameter Optimization for the XGBoost Model

5.2. Experimental Results and Analysis

6. Conclusions

- a. ICEEMDAN decomposes the piston pump vibration signal adaptively, improves decomposition efficiency, and suppresses mode mixing. Effective IMF components can be selected using the correlation coefficient.

- b. Combining time-domain, frequency-domain, and entropy features allows the degradation process of the piston pump to be tracked and identified comprehensively. PCA reduces the data dimension and computational cost, which improves the accuracy and efficiency of state identification.

- c. The average recognition accuracy for the slipper wear states of the piston pump based on ICEEMDAN and XGBoost is 99.7%. Compared with ANN, GBDT, and SVM, XGBoost achieves the highest average recognition accuracy for the four wear states (tied with SVM at 0.997) with the shortest average decision time (0.003 s), which highlights the advantages of the parameter-optimized XGBoost in pattern recognition.
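Conclusion a refers to selecting effective IMFs with the correlation coefficient. A minimal sketch of that criterion, assuming the absolute Pearson coefficient between each IMF and the original signal and a hypothetical fixed threshold of 0.3 (the threshold used in the paper is not given here), is:

```python
import numpy as np


def select_imfs_by_correlation(x, imfs, threshold=0.3):
    """Return indices of IMFs whose |Pearson r| with x exceeds threshold, plus all |r|.

    threshold=0.3 is a hypothetical value chosen for illustration only.
    """
    x = np.asarray(x, dtype=float)
    coeffs = np.array(
        [abs(np.corrcoef(x, np.asarray(imf, dtype=float))[0, 1]) for imf in imfs]
    )
    coeffs = np.nan_to_num(coeffs)  # a constant IMF has undefined r; treat it as 0
    keep = np.flatnonzero(coeffs > threshold)
    return keep, coeffs
```

Components whose coefficient falls below the threshold are treated as noise or spurious modes and discarded before feature extraction.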
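For the PCA step in conclusion b, the following NumPy sketch standardizes a feature matrix and keeps the smallest number of leading principal components that together explain an assumed 95% of the variance. The cutoff and the name `pca_reduce` are illustrative, not taken from the paper.

```python
import numpy as np


def pca_reduce(features, variance_to_keep=0.95):
    """Standardize features and project them onto the leading principal components.

    features: array of shape (n_samples, n_features).
    variance_to_keep: assumed cumulative explained-variance cutoff (not from the paper).
    Returns (scores, explained-variance ratios of the kept components).
    """
    X = np.asarray(features, dtype=float)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled instead of dividing by zero
    Z = (X - X.mean(axis=0)) / std
    # SVD of the standardized data: rows of vt are the principal directions,
    # and s**2 is proportional to the variance along each direction.
    _, s, vt = np.linalg.svd(Z, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    # Smallest number of components whose cumulative ratio reaches the cutoff.
    n_keep = int(np.searchsorted(np.cumsum(explained), variance_to_keep)) + 1
    n_keep = min(n_keep, vt.shape[0])
    return Z @ vt[:n_keep].T, explained[:n_keep]
```

Standardizing first matters here because time-domain, frequency-domain, and entropy features have very different scales; without it, PCA would be dominated by whichever features happen to have the largest magnitudes.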

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Status of the Slippers | No Wear | Mild Wear | Moderate Wear | Severe Wear |
|---|---|---|---|---|
|  | 0.944 | 0.891 | 0.593 | 0.505 |
|  | 0.762 | 0.787 | 0.904 | 0.994 |
|  | 0.423 | 0.186 | 0.415 | 0.523 |
|  | 0.196 | 0.119 | 0.113 | 0.109 |
|  | 0.135 | 0.500 | 0.034 | 0.011 |
|  | 0.329 | 0.093 | 0.008 | 0.003 |
|  | 0.156 | 0.028 | 0.006 | 0.002 |
|  | 0.068 | 0.008 | 0.004 | 0.000 |
|  | 0.007 | 0.004 | 0.007 | 0.000 |
|  | 0.004 | 0.008 | 0.008 | 0.008 |
|  | 0.003 | – | 0.008 | 0.000 |
|  | 0.000 | – | 0.004 | – |

| Time Domain Characteristic | Expression |
|---|---|
| Root mean square | |
| Peak index | |
| Peak-peak index | |
| Skewness index | |
| Variance | |
| Tolerance index | |
| Waveform index | |
| Kurtosis index | |
| Impulsion index | |
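The table above lists the time-domain indicators by name. A minimal sketch computing them with common textbook definitions follows: crest, clearance, shape, and impulse factors for the peak, tolerance, waveform, and impulsion indices. These definitions, in particular the peak-to-peak reading of "peak-peak index" and the mean-removed skewness and kurtosis, are assumptions and may differ from the formulas used in the paper; `time_domain_features` is a hypothetical name.

```python
import numpy as np


def time_domain_features(x):
    """Common textbook time-domain indicators (assumed definitions, see comments)."""
    x = np.asarray(x, dtype=float)
    abs_x = np.abs(x)
    peak = abs_x.max()
    rms = np.sqrt(np.mean(x**2))
    mu = x.mean()
    sigma = x.std()
    return {
        "root_mean_square": rms,
        "peak_index": peak / rms,  # crest factor
        "peak_peak_index": x.max() - x.min(),  # assumed: peak-to-peak value
        "skewness_index": np.mean((x - mu) ** 3) / sigma**3,
        "variance": sigma**2,
        "tolerance_index": peak / np.mean(np.sqrt(abs_x)) ** 2,  # clearance factor
        "waveform_index": rms / abs_x.mean(),  # shape factor
        "kurtosis_index": np.mean((x - mu) ** 4) / sigma**4,
        "impulsion_index": peak / abs_x.mean(),  # impulse factor
    }
```

For a pure sine these definitions give a peak index of $\sqrt{2}$ and a kurtosis index of 1.5, so growing impulsive content from slipper wear should show up as increases in the peak, impulsion, and kurtosis indices.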

| Frequency Domain Characteristic | Expression | Frequency Domain Characteristic | Expression |
|---|---|---|---|

| Parameter | Numerical Value |
|---|---|
| Max depth | 3 |
| Min child weight | 1 |
| N estimators | 167 |
| Objective | multi:softmax |
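The tabulated settings correspond to keyword arguments of `XGBClassifier`, the scikit-learn wrapper in the `xgboost` Python package. The sketch below assumes that wrapper and leaves all other hyperparameters at library defaults, since the table does not specify them; `XGB_PARAMS` and `build_classifier` are hypothetical names.

```python
# Hyperparameters from the parameter table; all others are left at library
# defaults (an assumption, since the table lists only these four).
XGB_PARAMS = {
    "max_depth": 3,
    "min_child_weight": 1,
    "n_estimators": 167,
    "objective": "multi:softmax",
}


def build_classifier():
    """Create an XGBoost classifier with the tabulated settings (requires xgboost)."""
    # Imported lazily so the configuration can be inspected without xgboost installed.
    from xgboost import XGBClassifier

    return XGBClassifier(**XGB_PARAMS)
```

Typical use would be `clf = build_classifier().fit(X_train, y_train)` followed by `clf.predict(X_test)`, where `y_train` encodes the four wear states as integers 0 to 3 (an assumed encoding; recent XGBoost versions expect class labels 0, 1, ..., n−1). With the `multi:softmax` objective, the model outputs class labels directly rather than class probabilities.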

| Classification Method | Average Recognition Accuracy | Average Decision Time (s) |
|---|---|---|
| ANN | 0.991 | 0.094 |
| SVM | 0.997 | 0.012 |
| GBDT | 0.994 | 0.006 |
| XGBoost | 0.997 | 0.003 |

| Number | Feature Extraction Method | Feature Reduction Method | Classification Technique | Recognition Rate (%) |
|---|---|---|---|---|
| 1 | EEMD, Multi-domain features (time domain, frequency domain, entropy) | PCA | GBDT | 98.2 |
| 2 | EEMD, Multi-domain features | PCA | SVM | 98.6 |
| 3 | EEMD, Multi-domain features | – | XGBoost | 92.4 |
| 4 | EEMD, Multi-domain features | PCA | XGBoost | 97.1–100 |
| 5 | Multi-domain features | PCA | XGBoost | 69.5 |
| 6 | ICEEMDAN, Multi-domain features | – | XGBoost | 94.5–99.2 |
| Present work | ICEEMDAN, Multi-domain features | PCA | XGBoost | 98.3–100 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Guo, R.; Zhao, Z.; Wang, T.; Liu, G.; Zhao, J.; Gao, D. Degradation State Recognition of Piston Pump Based on ICEEMDAN and XGBoost. Appl. Sci. 2020, 10, 6593. https://doi.org/10.3390/app10186593
