Computer-Assisted Translation Tools: An Evaluation of Their Usability among Arab Translators

Abstract

1. Introduction

2. Related Work

2.1. Computer-Assisted Translation

- Translation Memory (TM): A TM is a database that stores texts and their translations in the form of bilingual segments. It operates by automatically searching for similar segments and offering them to the translator as suggestions. This allows translators to recycle or “leverage” their previous translations, especially for similar or repetitive texts, without the need to re-translate [1,2].

- Terminology Management: This component resembles the translation memory in that it supports reuse; however, it works at the term level. It allows translators to create a database to store and retrieve terms related to a specific field, client, or project. Once the term base is created, the translator can manually search for a specific term or let the system automatically search for and display potential matches. The main advantage of these tools, in addition to saving time and effort, is improved consistency when translating terminology, especially in group projects where more than one translator is working on the same task [1,6].

- Project Management (PM): The increased demand for translation and the growth of translation projects required the development of systems to support the management and automation of the translation process [7]. These tools include collaboration functions to increase team efficiency and consistency. These features allow collaborators to be invited to edit and/or review translations within the system interface and to work on translation tasks in real time. Other collaborative features include segment edit history and tracking. Most PM software also includes a budgeting feature for calculating expenditure and revenue, as well as reporting and analytics tools that help track translation progress, create and assign tasks to translators, and evaluate task deadlines.

- Supporting Tools: Depending on their degree of sophistication, most CAT packages come with supporting tools and functions, such as (a) a translation editor that supports bilingual file formats (the editor has several features similar to word processing programs, such as spell checkers and editing tools); (b) an optical character recognition (OCR) tool used to convert an image of machine-printed or handwritten text into an editable text format; (c) a segment analyzer, which analyzes the translation files to help the translator understand how much work a translation will require by identifying texts that have already been translated and texts that still require translation and by calculating similarity rates; (d) an alignment tool that automatically divides and links the source text segments and their translations to help create a translation memory more quickly; (e) a machine translation engine; and (f) quality assurance features.
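The translation memory lookup and the segment analyzer described above can be illustrated with a minimal sketch. It is not how any particular CAT tool works: the memory contents, the function names (`similarity`, `best_match`, `analyze`), the 70% fuzzy-match threshold, and the use of Python's `difflib.SequenceMatcher` as the similarity measure are all assumptions made for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical translation memory: English source segment -> stored Arabic translation.
TRANSLATION_MEMORY = {
    "Press the power button to start the device.": "اضغط على زر التشغيل لبدء تشغيل الجهاز.",
    "Save the file before closing the program.": "احفظ الملف قبل إغلاق البرنامج.",
}


def similarity(a: str, b: str) -> float:
    """Return a 0-100 similarity score between two segments (case-insensitive)."""
    return 100.0 * SequenceMatcher(None, a.lower(), b.lower()).ratio()


def best_match(segment, memory, threshold=70.0):
    """Return (stored_source, translation, score) for the closest entry,
    or None when no entry reaches the (assumed) fuzzy-match threshold."""
    best = None
    for source, target in memory.items():
        score = similarity(segment, source)
        if best is None or score > best[2]:
            best = (source, target, score)
    if best is not None and best[2] >= threshold:
        return best
    return None


def analyze(segments, memory, threshold=70.0):
    """Count exact, fuzzy, and new segments, roughly as a segment analyzer might."""
    counts = {"exact": 0, "fuzzy": 0, "new": 0}
    for segment in segments:
        match = best_match(segment, memory, threshold)
        if match is None:
            counts["new"] += 1
        elif match[2] == 100.0:
            counts["exact"] += 1
        else:
            counts["fuzzy"] += 1
    return counts


if __name__ == "__main__":
    job = [
        "Press the power button to start the device.",  # already in the memory
        "Press the power button to stop the device.",   # close to a stored segment
        "The weather is nice today.",                   # unrelated to the memory
    ]
    print(analyze(job, TRANSLATION_MEMORY))
```

A character-based ratio is only one possible similarity measure; commercial tools typically use their own, often word-based, matching algorithms, so the scores here should not be read as comparable to theirs.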

2.2. CAT Tools Among Arab Translators

2.3. Usability of CAT

3. Materials and Methods

3.1. SUMI

- Affect: indicates whether the translator feels mentally stimulated and pleased or, on the contrary, stressed and frustrated as a result of interacting with the tool.

- Helpfulness: refers to the translator’s perception that the software communicates in a helpful way and assists in resolving any technical problems.

- Control: refers to the translator’s feeling that the tool responds in an expected and consistent way to various inputs and commands.

- Learnability: refers to the translator’s feeling of becoming familiar with the software, and indicates whether its tutorial interface, handbooks, etc., are readable and instructive. It also covers re-learning how to use the software after a period during which it has not been used.

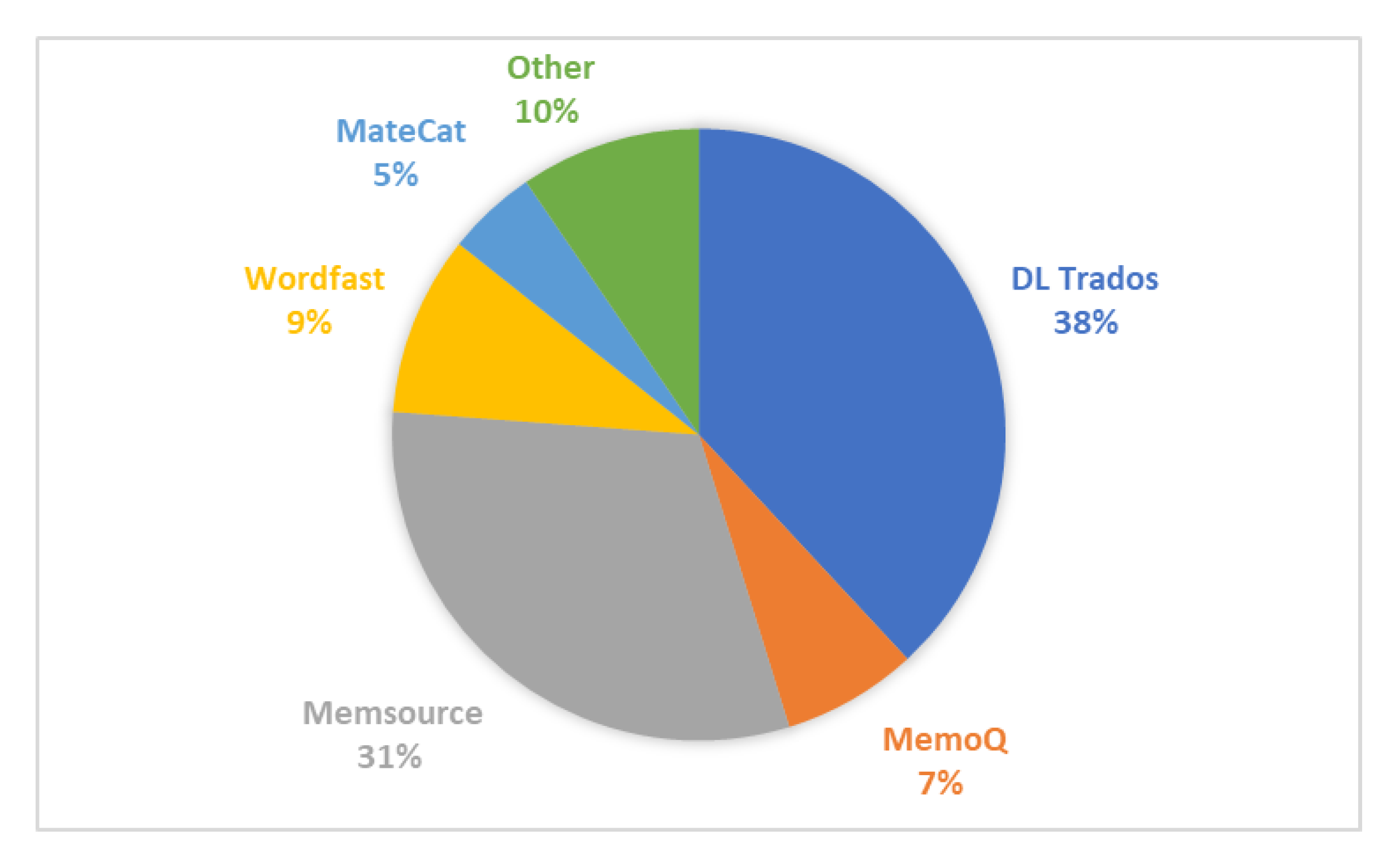

3.2. Participants

4. Results

4.1. Descriptive Analysis for SUMI

4.2. Analysis of Strengths and Weaknesses

4.3. Analysis of Open-Ended Questions

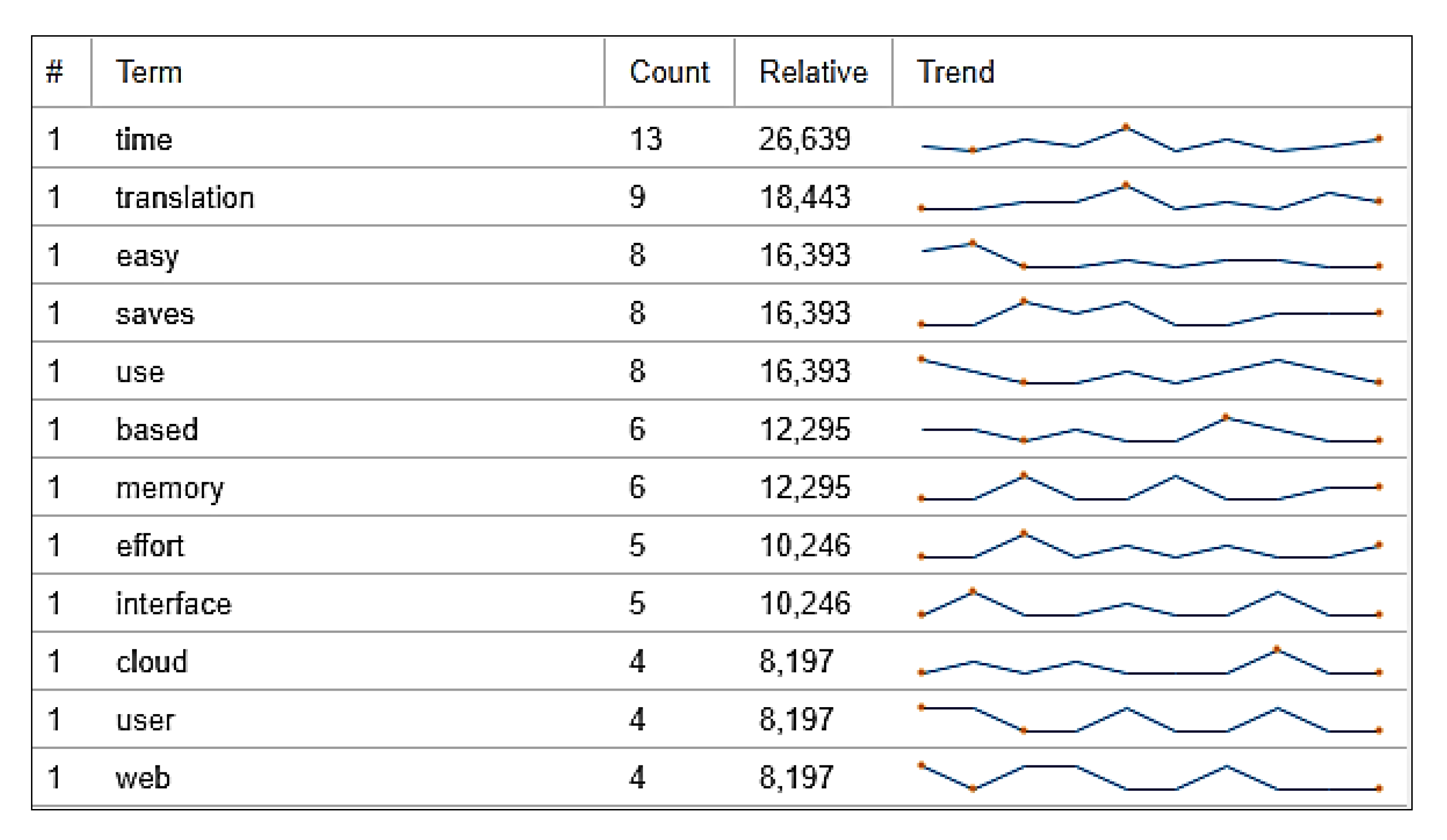

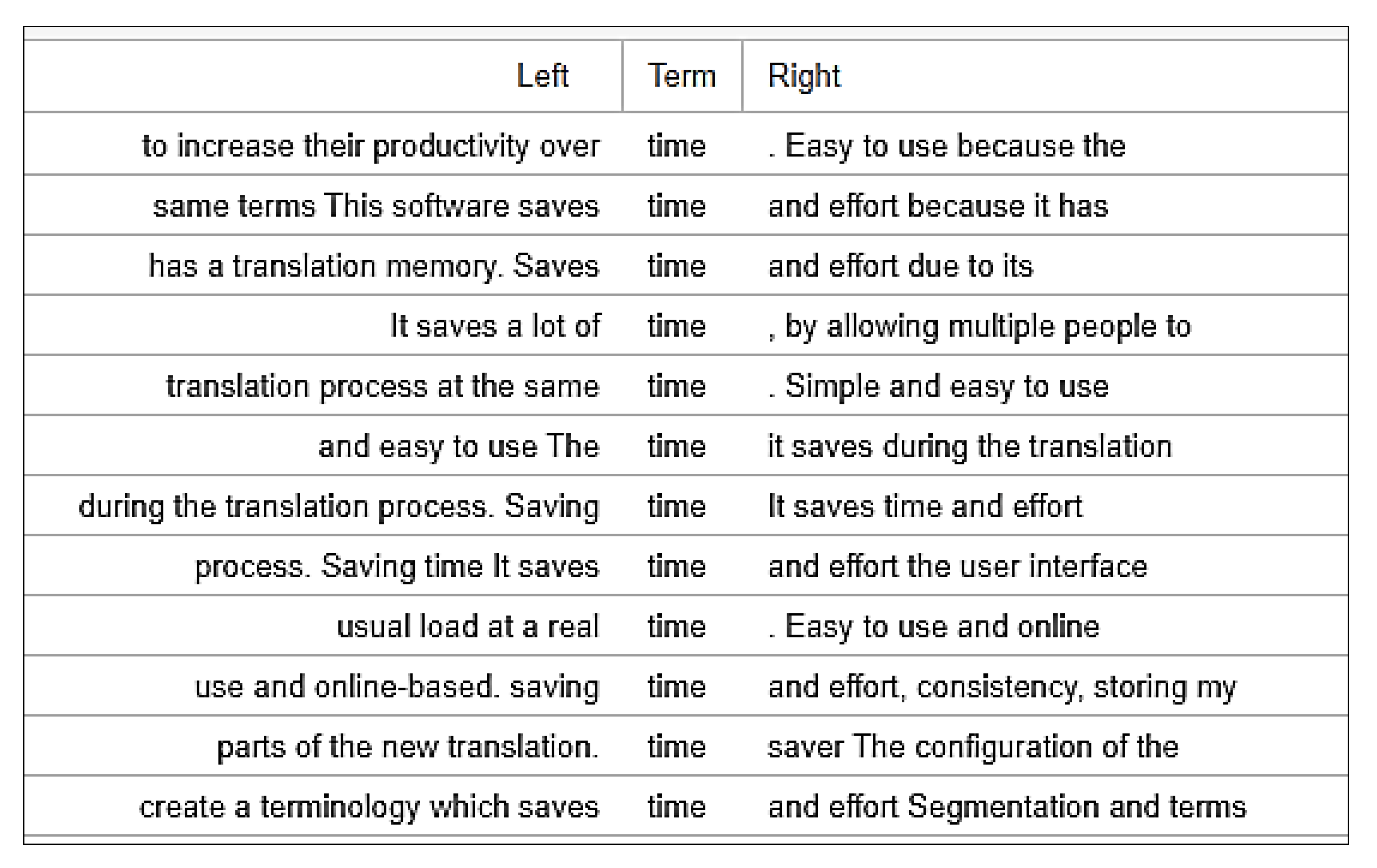

- What do you think is the best aspect of this software and why?

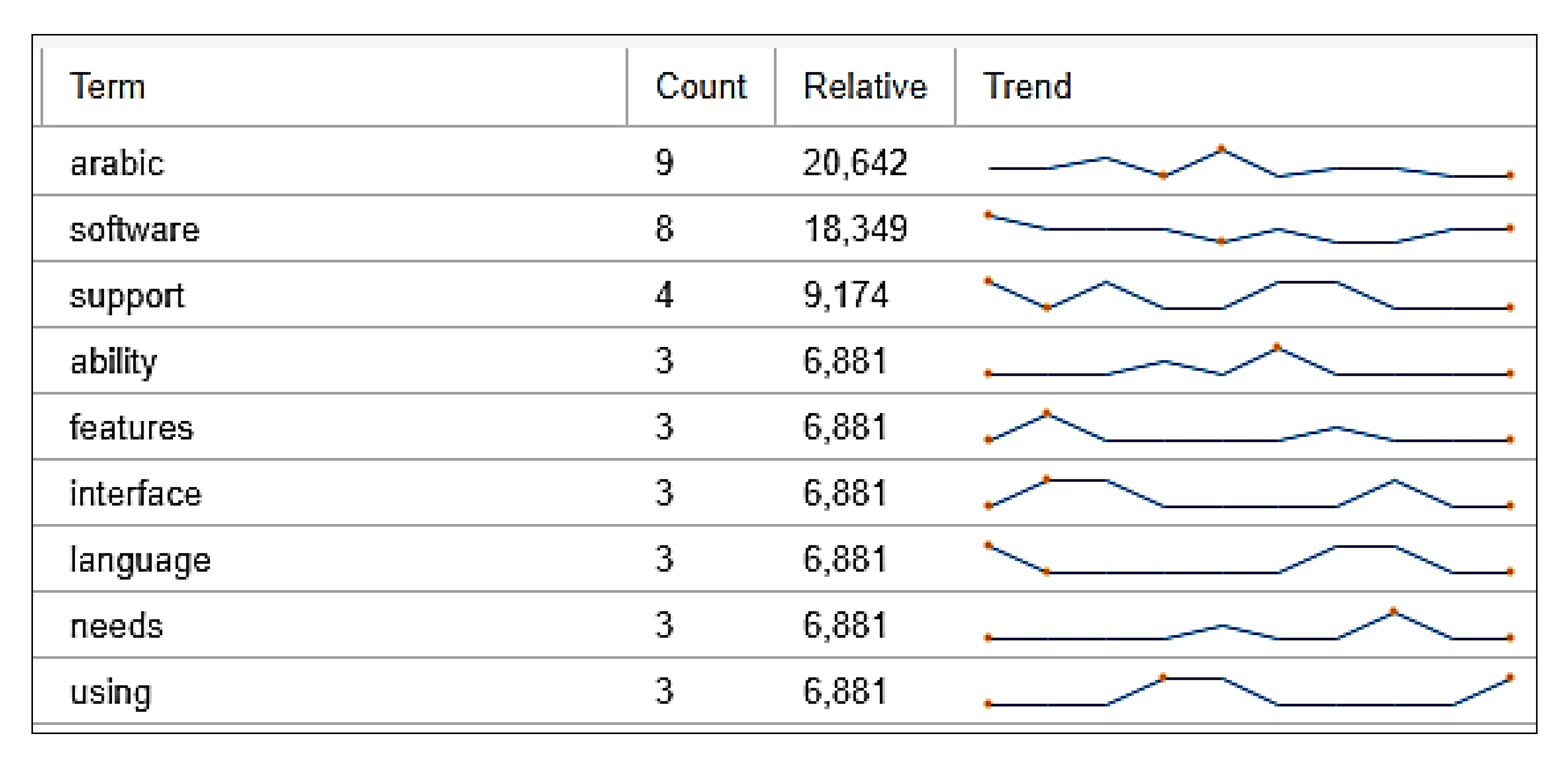

- What do you think needs most improvement and why?

5. Discussion

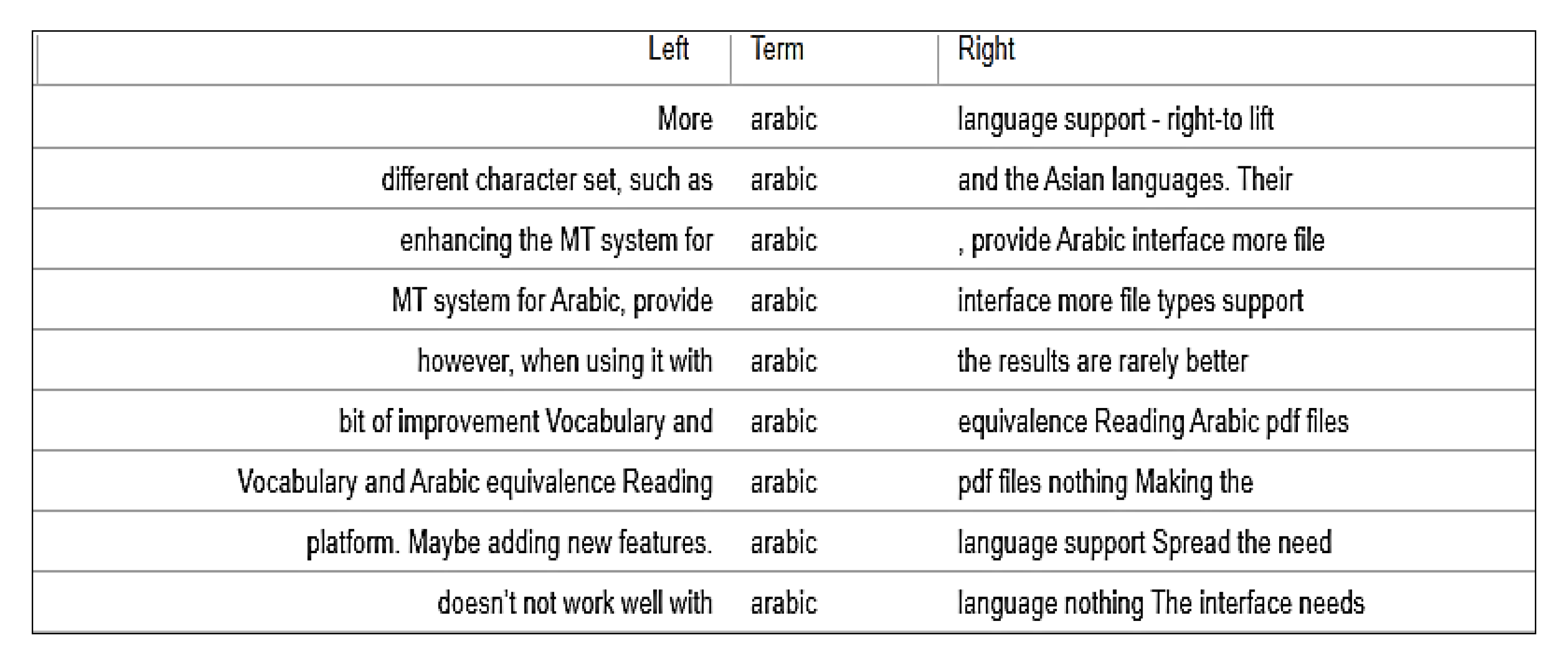

“Unfortunately, many documents I receive are PDFs or images. These require a software tool that can use its OCR features to recreate the text. The OCR is quite accurate when it is used on English texts, however, when using it with Arabic, the results are seldom better than 40%. This means that it is unreliable and thus cannot be used.”

“Mostly the languages with different character set, such as Arabic and the Asian languages. Their character sets are still not processed well by most of the CAT software.”

6. Conclusions

Supplementary Materials

Funding

Acknowledgments

Conflicts of Interest

References

- Bowker, L. Computer-Aided Translation Technology: A Practical Introduction; University of Ottawa Press: Ottawa, ON, Canada, 2002.

- Hutchins, J. The development and use of machine translation systems and computer-based translation tools. Int. J. Transl. 2003, 15. Available online: http://ourworld.compuserve.com/homepages/WJHutchins (accessed on 23 April 2020).

- Lagoudaki, P.M. Expanding the Possibilities of Translation Memory Systems: From the Translators Wishlist to the Developers Design. Ph.D. Thesis, Imperial College London, London, UK, 2009. Available online: http://hdl.handle.net/10044/1/7879 (accessed on 10 August 2020).

- Krüger, R. Contextualising computer-assisted translation tools and modelling their usability. Trans.-Kom-J. Transl. Tech. Commun. Res. 2016, 9, 114–148.

- Vargas-Sierra, C. Usability evaluation of a translation memory system. Quad. Filol. Estud. Lingüístics 2019, 24, 119–146.

- Garcia, I. Computer-aided translation: Systems. In Routledge Encyclopedia of Translation Technology; Routledge: London, UK; New York, NY, USA, 2014; pp. 106–125.

- Shuttleworth, M. Translation management systems. In Routledge Encyclopedia of Translation Technology; Routledge: London, UK; New York, NY, USA, 2014; pp. 716–729.

- Quaranta, B. Arabic and computer-aided translation: An integrated approach. Transl. Comput. 2011, 33, 17–18.

- Thawabteh, M. Apropos translator training aggro: A case study of the Centre for continuing education. J. Spec. Transl. 2009, 12, 165–179.

- Alotaibi, H.M. Arabic–English parallel corpus: A new resource for translation training and language teaching. Arab. World Engl. J. 2017, 8, 319–337.

- Al-jarf, P.R. Technology Integration in Translator Training in Saudi Arabia. Int. J. Res. Eng. Soc. Sci. 2017, 7, 1–7.

- Breikaa, Y. The Major Problems That Face English–Arabic Translators While Using CAT Tools. 2016. Available online: https://www.academia.edu (accessed on 5 May 2020).

- Alanazi, M.S. The Use of Computer-Assisted Translation Tools for Arabic Translation: User Evaluation, Issues, and Improvements. Ph.D. Thesis, Kent State University, Kent, OH, USA, 2019. Available online: http://rave.ohiolink.edu/etdc/view?acc_num=kent1570489735521918 (accessed on 20 May 2020).

- ISO IEC 9126-1:2001. Software Engineering-Product Quality Part 1-Quality Model; ISO: Geneva, Switzerland, 2001.

- ISO. International Standard ISO/IEC 25022. In Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Measurement of Quality in Use; ISO: Geneva, Switzerland, 2016.

- Frøkjær, E.; Hertzum, M.; Hornbæk, K. Measuring usability: Are effectiveness, efficiency, and satisfaction really correlated? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; pp. 345–352.

- Asare, E.K. An Ethnographic Study of the Use of Translation Tools in a Translation Agency: Implications for Translation Tool Design. Ph.D. Thesis, Kent State University, Kent, OH, USA, 2011. Available online: https://www.semanticscholar.org/paper/An-Ethnographic-Study-of-the-Use-of-Translation-inAsare/d72ddfa0d590921ec485121e4561988dd3d4cec7 (accessed on 10 August 2020).

- LeBlanc, M. Translators on translation memory (TM). Results of an ethnographic study in three translation services and agencies. Transl. Interpret. 2013, 5, 1–13.

- Vela, M.; Pal, S.; Zampieri, M.; Naskar, S.K.; van Genabith, J. Improving CAT tools in the translation workflow: New approaches and evaluation. In Proceedings of the Machine Translation Summit XVII Volume 2: Translator, Project and User Tracks, Dublin, Ireland, 19–23 August 2019; pp. 8–15.

- Kirakowski, J.; Corbett, M. SUMI—The software usability measurement inventory. Br. J. Educ. Technol. 1993, 24, 210–212.

- Kirakowski, J. The Software Usability Measurement Inventory: Background and Usage. In Usability Evaluation in Industry; Jordan, P., Thomas, B., Weedmeester, E.B., Eds.; Taylor & Francis: London, UK, 1996; pp. 169–178.

- Deraniyagala, R.; Amdur, R.J.; Boyer, A.L.; Kaylor, S. Usability study of the EduMod eLearning program for contouring nodal stations of the head and neck. Pract. Radiat. Oncol. 2015, 5, 169–175.

- Khalid, M.S.; Hossan, M.I. Usability evaluation of a video conferencing system in a university’s classroom. In Proceedings of the 2016 19th International Conference on Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 18–20 December 2016; pp. 184–190.

- Weheba, G.; Attar, M.; Salha, M. A Usability Assessment of a Statistical Analysis Software Package. J. Manag. Eng. Integr. 2017, 10, 81–89.

- Alghanem, H. Assessing the usability of the Saudi Digital Library from the perspective of Saudi scholarship students. In Proceedings of the 2019 3rd International Conference on Computer Science and Artificial Intelligence, Beijing, China, 6–8 December 2019; pp. 299–306.

- Jeddi, F.R.; Nabovati, E.; Bigham, R.; Khajouei, R. Usability evaluation of a comprehensive national health information system: Relationship of quality components to users’ characteristics. Int. J. Med. Inform. 2020, 133, 104026.

- Mahfouz, I. Attitudes to CAT Tools: Application on Egyptian Translation Students and Professionals. AWEJ 2018, 4, 69–83.

- Van der Lek-Ciudin, I.; Vanallemeersch, T.; De Wachter, K. Contextual Inquiries at Translators’ Workplaces. In Proceedings of the TAO-CAT, Angers, France, 18–20 June 2015.

- Moorkens, J.; O’Brien, S. User attitudes to the post-editing interface. In Proceedings of the Machine Translation Summit XIV: Second Workshop on PostEditing Technology and Practice, Nice, France, 2–6 September 2013; pp. 19–25.

| Characteristic | Number of participants (category) | | | |
|---|---|---|---|---|
| Gender | 15 (Male) | 27 (Female) | | |
| Age | 10 (20–25 years) | 15 (26–30 years) | 10 (31–40 years) | 7 (above 40 years) |
| Occupation | 10 (full-time translator) | 26 (part-time translator) | 6 (translation students) | |
| Translation experience | 2 (less than a year) | 9 (1–5 years) | 16 (6–10 years) | 15 (more than 10 years) |
| Language Pair | Arabic < > English | Arabic < > English | | |
| Software skills and knowledge | 7 (very experienced and technical) | 19 (experienced but not technical) | 16 (can cope with most software) | |

| Scale | Mean | Standard Deviation | Median | Interquartile Range (IQR) | Minimum | Maximum |
|---|---|---|---|---|---|---|
| Global | 52.38 | 11.10 | 51.0 | 18.0 | 27 | 74 |
| Efficiency | 53.00 | 9.76 | 54.0 | 15.0 | 30 | 67 |
| Affect | 54.69 | 10.97 | 58.5 | 18.0 | 31 | 69 |
| Helpfulness | 50.55 | 11.74 | 51.0 | 17.0 | 26 | 74 |
| Controllability | 52.88 | 10.97 | 53.5 | 16.0 | 27 | 74 |
| Learnability | 50.52 | 9.93 | 48.5 | 10.0 | 32 | 72 |
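As a rough illustration of how the descriptive statistics in the table above are obtained and read, the sketch below computes the same measures for a list of scores and compares the reported scale means with a reference value. The names `describe` and `above_reference` and the sample scores are hypothetical, and the reference mean of 50 assumes the usual SUMI convention of standardizing scale scores to a mean of 50 and a standard deviation of 10. The quartile method used here (the default of Python's `statistics.quantiles`) may differ from the one used in the study, so IQR values need not match other software exactly.

```python
import statistics

# Scale means as reported in the descriptive table.
REPORTED_MEANS = {
    "Global": 52.38,
    "Efficiency": 53.00,
    "Affect": 54.69,
    "Helpfulness": 50.55,
    "Controllability": 52.88,
    "Learnability": 50.52,
}

# Assumed reference point: SUMI scale scores are conventionally standardized to a mean of 50.
SUMI_REFERENCE_MEAN = 50.0


def describe(scores):
    """Return mean, sample SD, median, IQR, minimum, and maximum for a list of scores."""
    q1, _, q3 = statistics.quantiles(scores, n=4)  # default "exclusive" quartile method
    return {
        "mean": round(statistics.mean(scores), 2),
        "sd": round(statistics.stdev(scores), 2),
        "median": statistics.median(scores),
        "iqr": q3 - q1,
        "min": min(scores),
        "max": max(scores),
    }


def above_reference(means, reference=SUMI_REFERENCE_MEAN):
    """Return how far each scale mean lies above (+) or below (-) the reference mean."""
    return {scale: round(mean - reference, 2) for scale, mean in means.items()}


if __name__ == "__main__":
    # Hypothetical raw scores, used only to show the calculation.
    print(describe([40, 45, 50, 55, 60]))
    for scale, diff in above_reference(REPORTED_MEANS).items():
        print(f"{scale}: {diff:+.2f}")
```

Read this way, every reported scale mean lies above the assumed reference of 50, with Affect furthest above it and Learnability and Helpfulness closest to it.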

| Item | Scale | Statement | Difference | Verdict |
|---|---|---|---|---|
| 23 | H | I can understand and act on the information provided by this software. | +0.73 | Major agreement |
| 2 | A | I would recommend this software to my colleagues. | +0.67 | Major agreement |
| 26 | E | Tasks can be performed in a straightforward manner using this software. | +0.65 | Major agreement |
| 7 | A | I enjoy the time I spend with this software. | +0.62 | Major agreement |
| 12 | A | Working with this software is satisfying. | +0.61 | Major agreement |
| 44 | C | It is relatively easy to move from one part of a task to another. | +0.60 | Major agreement |
| 33 | E | The organization of the menus seems quite logical. | +0.59 | Major agreement |
| 13 | H | The way that system information is presented is clear and understandable. | +0.58 | Major agreement |
| 29 | C | The speed of this software is adequate. | +0.55 | Major agreement |
| 31 | E | It is obvious that user needs have been fully taken into consideration. | +0.54 | Major agreement |
| 3 | H | The instructions and prompts are helpful. | +0.48 | Minor agreement |
| 48 | H | It is easy to see at a glance what the options are at each stage. | +0.44 | Minor agreement |
| 42 | A | The software presents itself in a very attractive way. | +0.43 | Minor agreement |
| 19 | C | I feel in command of this software when I am using it. | +0.43 | Minor agreement |
| 17 | A | Working with this software is mentally stimulating. | +0.26 | Minor agreement |
| 4 | C | This software has at some time stopped unexpectedly. | +0.20 | Minor agreement |
| 34 | C | The software allows the user to be economic of keystrokes. | +0.13 | Minor agreement |
| 1 | E | This software responds too slowly to inputs. | +0.13 | Minor agreement |
| 15 | H | The software documentation is very informative. | +0.08 | Minor agreement |
| 39 | C | It is easy to make the software do exactly what you want. | −0.27 | Minor disagreement |
| 28 | H | The software has helped me overcome any problems I have had in using it. | −0.02 | Minor disagreement |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Alotaibi, H.M. Computer-Assisted Translation Tools: An Evaluation of Their Usability among Arab Translators. Appl. Sci. 2020, 10, 6295. https://doi.org/10.3390/app10186295
