An Attention-Based Network for Textured Surface Anomaly Detection

Abstract

1. Introduction

2. Materials and Methods

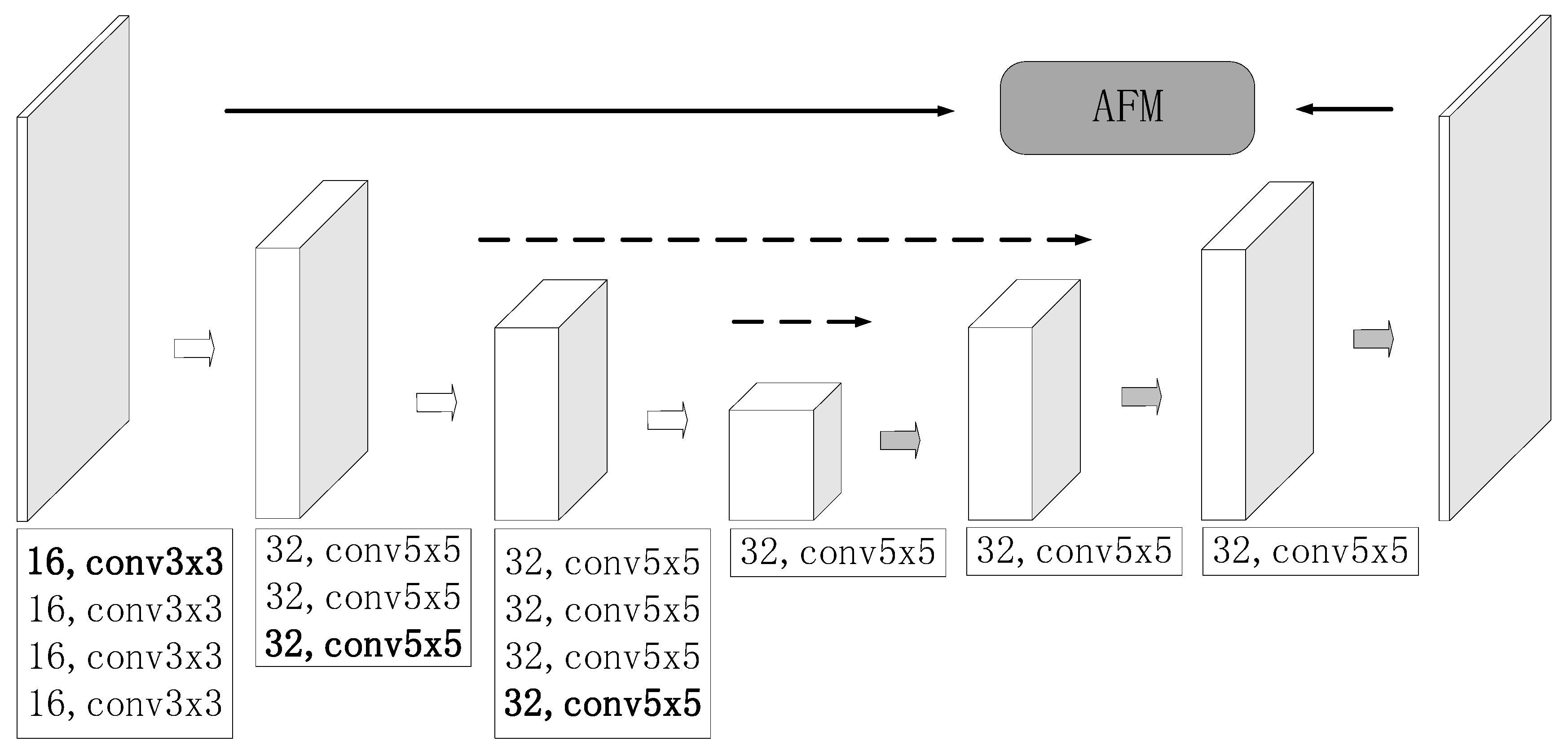

2.1. Segmentation Model

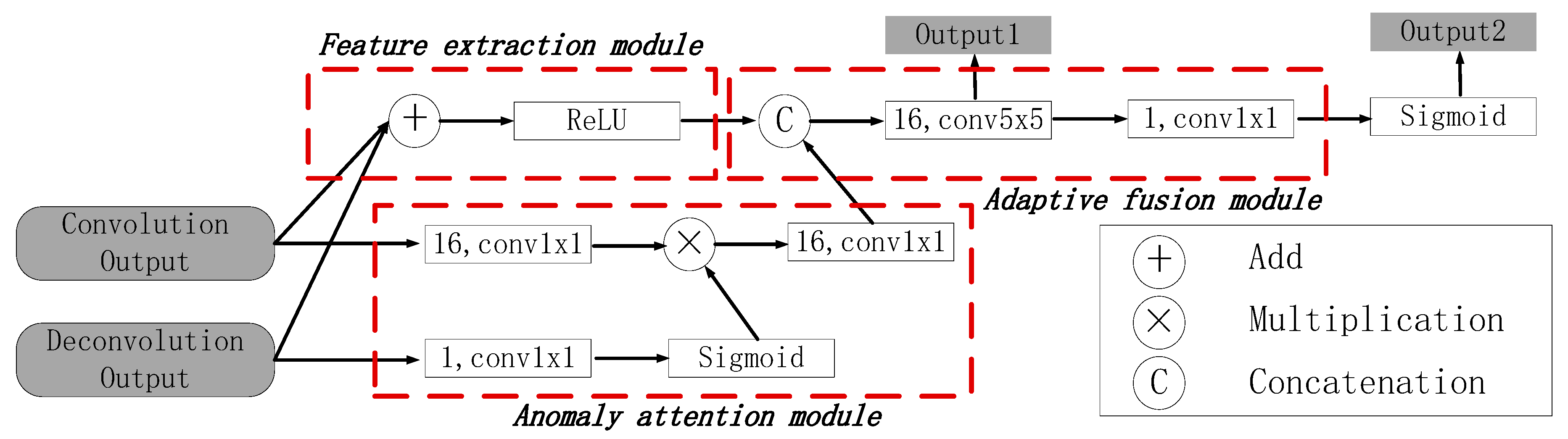

2.2. Attention-Based Fusion Module

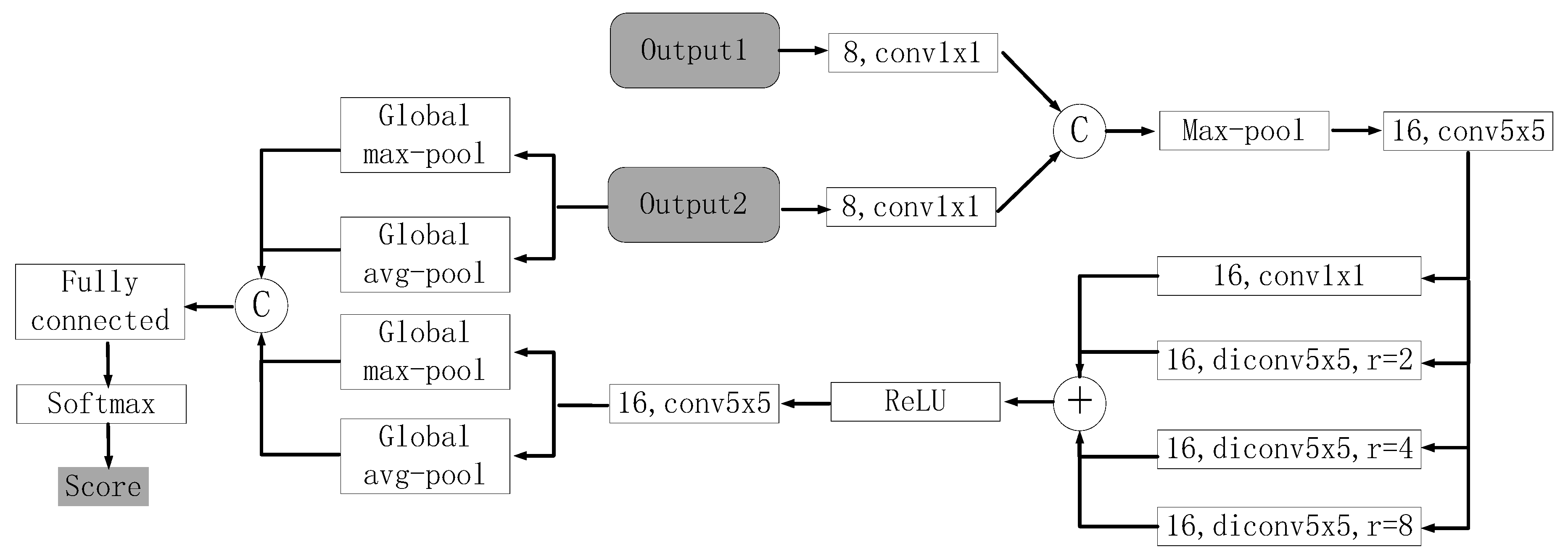

2.3. Classification Network

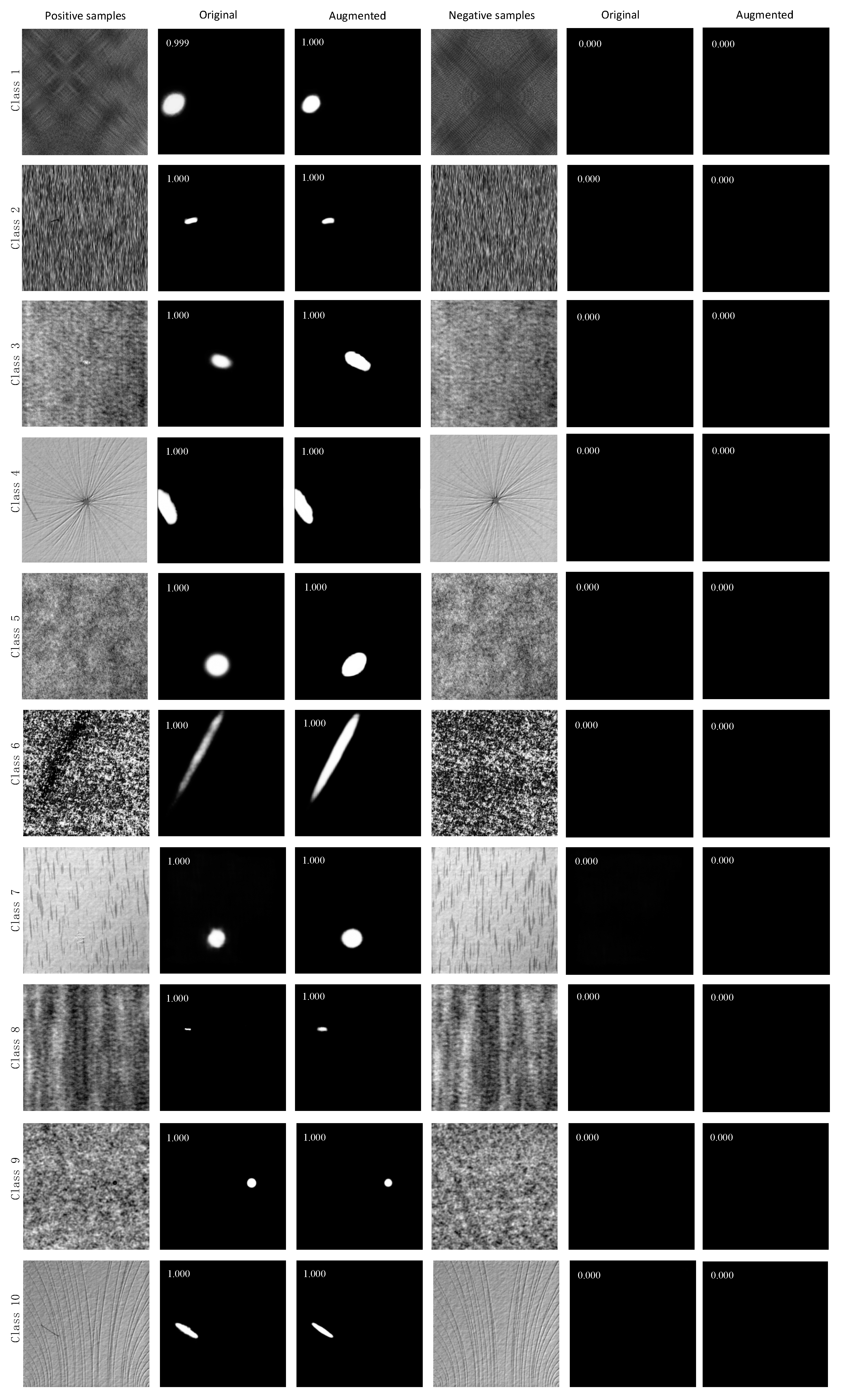

2.4. DAGM Textured Dataset

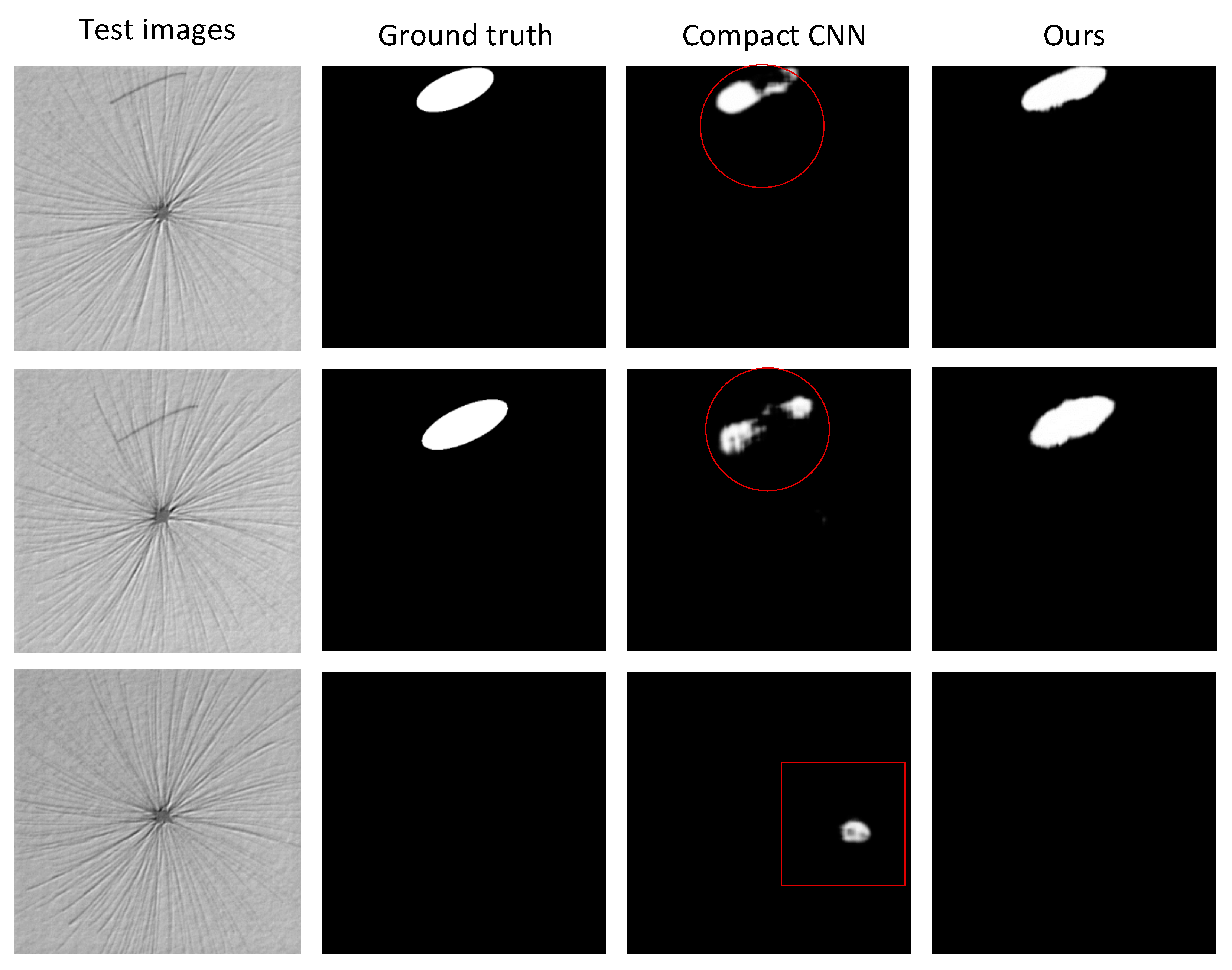

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Masci, J.; Meier, U.; Ciresan, D.; Schmidhuber, J.; Fricout, G. Steel Defect Classification with Max-Pooling Convolutional Neural Networks. In Proceedings of the IEEE International Joint Conference on Neural Networks (IJCNN), Brisbane, Australia, 10–15 June 2012; pp. 1–6.

2. Ghorai, S.; Mukherjee, A.; Gangadaran, M.; Dutta, P.K. Automatic defect detection on hot-rolled flat steel products. IEEE Trans. Instrum. Meas. 2013, 62, 612–621.

3. Napoletano, P.; Piccoli, F.; Schettini, R. Anomaly detection in nanofibrous materials by CNN-based self-similarity. Sensors 2018, 18, 209.

4. Liu, G.K.; Yang, N.; Guo, L.; Guo, S.P.; Chen, Z. A One-Stage Approach for Surface Anomaly Detection with Background Suppression Strategies. Sensors 2020, 20, 1829.

5. Sanchez-Brea, L.M.; Siegmann, P.; Rebollo, M.A.; Bernabeu, E. Optical technique for the automatic detection and measurement of surface defects on thin metallic wires. Appl. Opt. 2000, 39, 539–545.

6. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

7. Dumoulin, V.; Visin, F. A guide to convolution arithmetic for deep learning. arXiv 2016, arXiv:1603.07285.

8. Song, K.; Yan, Y.H. Micro surface defect detection method for silicon steel strip based on saliency convex active contour model. Math. Probl. Eng. 2013, 2013, 1–13.

9. Shanmugamani, R.; Sadique, M.; Ramamoorthy, B. Detection and classification of surface defects of gun barrels using computer vision and machine learning. Measurement 2015, 60, 222–230.

10. Gong, R.; Chu, M.; Wang, A.; Yang, Y. A fast detection method for region of defect on strip steel surface. ISIJ Int. 2015, 55, 207–212.

11. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.M.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

12. He, K.M.; Zhang, X.Y.; Ren, S.Q.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

13. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

14. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

15. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.

16. Zhou, T.; Fu, H.; Chen, G.; Shen, J.; Shao, L. Hi-Net: Hybrid-fusion network for multi-modal MR image synthesis. IEEE Trans. Med. Imaging 2020.

17. Liu, Y.; Cheng, M.M.; Hu, X.; Wang, K.; Bai, X. Richer convolutional features for edge detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3000–3009.

18. Weimer, D.; Scholz-Reiter, B.; Shpitalni, M. Design of deep convolutional neural network architectures for automated feature extraction in industrial inspection. CIRP Ann. Manuf. Technol. 2016, 65, 417–420.

19. Racki, D.; Tomazevic, D.; Skocaj, D. A compact convolutional neural network for textured surface anomaly detection. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, CA, USA, 12–15 March 2018; pp. 1331–1339.

20. Mei, S.; Wang, Y.; Wen, G. Automatic fabric defect detection with a multi-scale convolutional denoising autoencoder network model. Sensors 2018, 18, 1064.

21. Tabernik, D.; Šela, S.; Skvarč, J.; Skočaj, D. Segmentation-based deep-learning approach for surface-defect detection. J. Intell. Manuf. 2019, 31, 759–776.

22. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

23. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

24. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the Symposium on Operating Systems Design and Implementation (OSDI), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

25. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

26. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

27. Scholz-Reiter, B.; Weimer, D.; Thamer, H. Automated surface inspection of cold-formed micro-parts. CIRP Ann. Manuf. Technol. 2012, 61, 531–534.

28. Timm, F.; Barth, E. Non-parametric texture defect detection using Weibull features. In Proceedings of the SPIE—The International Society for Optical Engineering, San Francisco, CA, USA, 25–27 January 2011; p. 78770J.

| Class | Train Positive | Train Negative | Test Positive | Test Negative |
|---|---|---|---|---|
| 1 | 79 | 496 | 71 | 504 |
| 2 | 66 | 509 | 84 | 491 |
| 3 | 66 | 509 | 84 | 491 |
| 4 | 82 | 493 | 68 | 507 |
| 5 | 70 | 505 | 80 | 495 |
| 6 | 83 | 492 | 67 | 508 |
| 7 | 150 | 1000 | 150 | 1000 |
| 8 | 150 | 1000 | 150 | 1000 |
| 9 | 150 | 1000 | 150 | 1000 |
| 10 | 150 | 1000 | 150 | 1000 |
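
The split above is easier to reason about once the rows are totalled. The following Python sketch copies the counts from the table and reports, per class, the total number of positive and negative images and the share of positives in each split. The names `SPLIT`, `positive_fraction`, and `summarize` are illustrative assumptions, not identifiers from the article.

```python
# Per-class sample counts copied from the split table:
# class -> (train_pos, train_neg, test_pos, test_neg)
SPLIT = {
    1: (79, 496, 71, 504),
    2: (66, 509, 84, 491),
    3: (66, 509, 84, 491),
    4: (82, 493, 68, 507),
    5: (70, 505, 80, 495),
    6: (83, 492, 67, 508),
    7: (150, 1000, 150, 1000),
    8: (150, 1000, 150, 1000),
    9: (150, 1000, 150, 1000),
    10: (150, 1000, 150, 1000),
}


def positive_fraction(pos: int, neg: int) -> float:
    """Share of positive (defective) images in one split."""
    return pos / (pos + neg)


def summarize(split: dict) -> dict:
    """Total the train and test counts and compute positive shares per class."""
    rows = {}
    for cls, (tr_p, tr_n, te_p, te_n) in split.items():
        rows[cls] = {
            "total_pos": tr_p + te_p,
            "total_neg": tr_n + te_n,
            "train_pos_frac": positive_fraction(tr_p, tr_n),
            "test_pos_frac": positive_fraction(te_p, te_n),
        }
    return rows


if __name__ == "__main__":
    for cls, r in summarize(SPLIT).items():
        print(
            f"class {cls:2d}: {r['total_pos']} pos / {r['total_neg']} neg, "
            f"train pos share {r['train_pos_frac']:.3f}, "
            f"test pos share {r['test_pos_frac']:.3f}"
        )
```

Running the script shows that classes 1 to 6 each total 150 positive and 1000 negative images, classes 7 to 10 each total 300 and 2000, and positives make up roughly 11% to 15% of every split, so the data are imbalanced toward defect-free samples.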

| Class | Original TPR (%) | Original TNR (%) | Augmented TPR (%) | Augmented TNR (%) |
|---|---|---|---|---|
| 1 | 100[100] | 96.4[100] | 100[100] | 98.8[100] |
| 2 | 98.8[100] | 99.6[100] | 100[100] | 99.8[100] |
| 3 | 100[100] | 97.1[100] | 100[100] | 96.3[100] |
| 4 | 77.9[100] | 95.7[100] | 98.5[100] | 99.8[100] |
| 5 | 100[100] | 99.6[100] | 100[100] | 100[100] |
| 6 | 100[100] | 100[100] | 100[100] | 100[100] |
| 7 | 100[100] | 98.9[100] | 100[100] | 100[100] |
| 8 | 100[100] | 99.9[100] | 100[100] | 100[100] |
| 9 | 100[100] | 100[100] | 100[100] | 99.9[100] |
| 10 | 100[100] | 100[100] | 100[100] | 100[100] |
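
TPR (true positive rate) and TNR (true negative rate) follow the standard definitions TP / (TP + FN) and TN / (TN + FP). The Python sketch below applies them as percentages. The function names and the example counts are assumptions: the source does not give the underlying counts, and the ones used here were chosen only because they would reproduce the class 4 "Original" figures (77.9 and 95.7) if those were measured on the 68 positive and 507 negative test images listed for that class.

```python
def tpr(tp: int, fn: int) -> float:
    """True positive rate in percent: detected defects over all defective samples."""
    if tp + fn == 0:
        raise ValueError("no positive samples")
    return 100.0 * tp / (tp + fn)


def tnr(tn: int, fp: int) -> float:
    """True negative rate in percent: accepted good samples over all good samples."""
    if tn + fp == 0:
        raise ValueError("no negative samples")
    return 100.0 * tn / (tn + fp)


if __name__ == "__main__":
    # Hypothetical counts (assumption, not from the source):
    # 53 of 68 defective images detected, 485 of 507 defect-free images accepted.
    print(round(tpr(tp=53, fn=15), 1))
    print(round(tnr(tn=485, fp=22), 1))
```

With test sets this small, one missed defect in class 4 shifts TPR by about 1.5 percentage points, which helps when comparing differences between columns.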

Entries are TPR(TNR), in %.

| Class | Proposed | Compact CNN [19] | FC-CNN [18] | SIF [27] | Weibull [28] |
|---|---|---|---|---|---|
| 1 | 100(100) | 100(98.8) | 100(100) | 98.9(100) | 87.0(98.0) |
| 2 | 100(100) | 100(99.8) | 100(97.3) | 95.7(91.3) | - |
| 3 | 100(100) | 100(96.3) | 95.5(100) | 98.5(100) | 99.8(100) |
| 4 | 100(100) | 98.5(100) | 100(98.7) | - | - |
| 5 | 100(100) | 100(100) | 98.8(100) | 98.2(100) | 97.2(100) |
| 6 | 100(100) | 100(100) | 100(99.5) | 99.8(100) | 94.9(100) |
| 7 | 100(100) | 100(100) | - | - | - |
| 8 | 100(100) | 100(100) | - | - | - |
| 9 | 100(100) | 100(100) | - | - | - |
| 10 | 100(100) | 100(100) | - | - | - |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, G.; Yang, N.; Guo, L. An Attention-Based Network for Textured Surface Anomaly Detection. Appl. Sci. 2020, 10, 6215. https://doi.org/10.3390/app10186215
