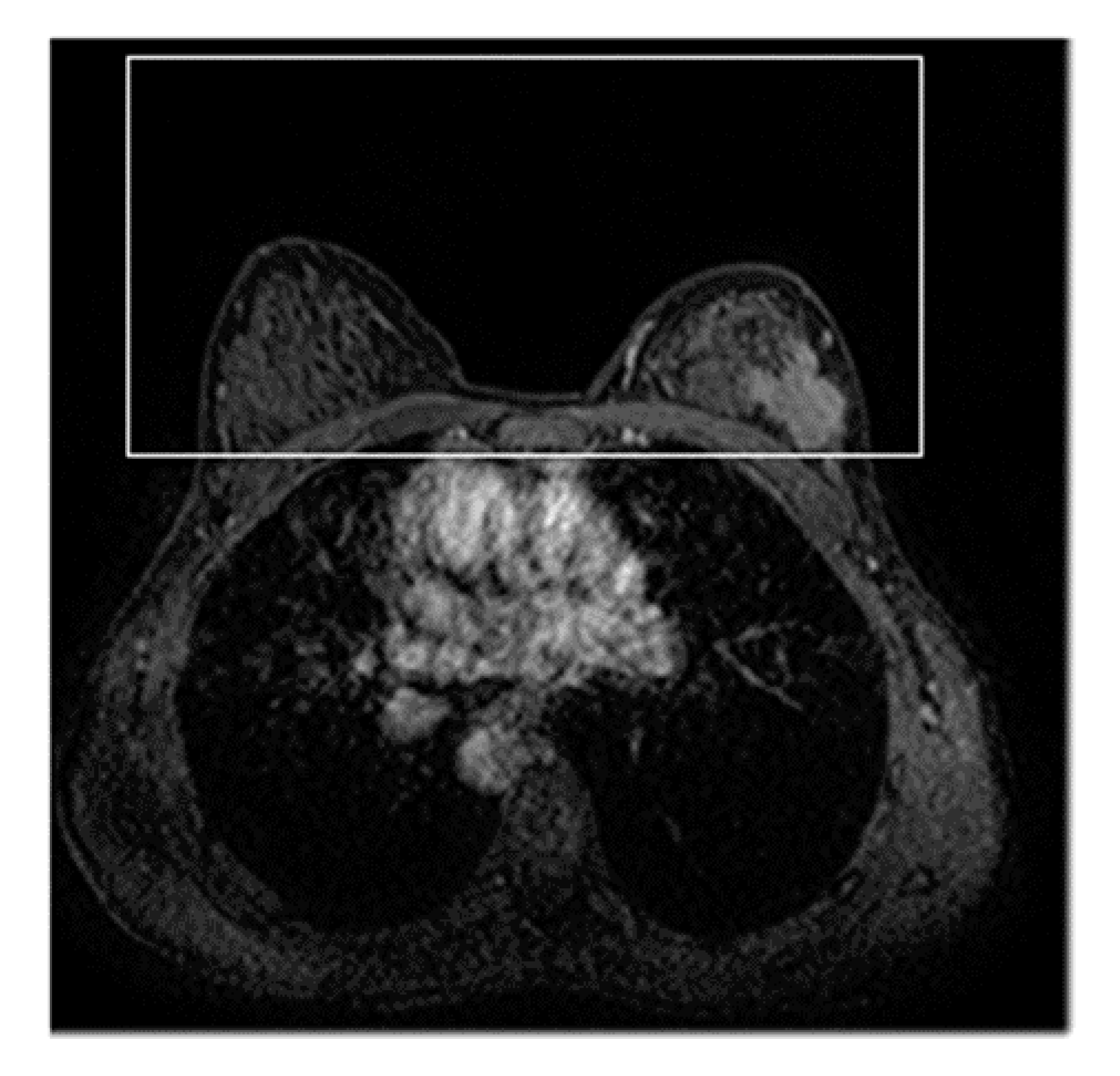

Breast Cancer Mass Detection in DCE–MRI Using Deep-Learning Features Followed by Discrimination of Infiltrative vs. In Situ Carcinoma through a Machine-Learning Approach

Abstract

Featured Application

1. Introduction

2. Materials and Methods

2.1. ROI Hunter Procedure

2.2. Deep-Learning Feature Extraction

2.3. FP ROI Rejection through Binary Classification

2.4. Tumor Characterization by Radiomics Signature

2.5. Classification to Discriminate In Situ vs. Invasive BC

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Conte, L.; Tafuri, B.; Portaluri, M.; Galiano, A.; Maggiulli, E.; De Nunzio, G. Breast Cancer Mass Detection in DCE–MRI Using Deep-Learning Features Followed by Discrimination of Infiltrative vs. In Situ Carcinoma through a Machine-Learning Approach. Appl. Sci. 2020, 10, 6109. https://doi.org/10.3390/app10176109
