Deep Instance Segmentation of Laboratory Animals in Thermal Images

Abstract

1. Introduction

1.1. Two-Stage Methods

- creating bottom-up path augmentation,

- adaptive feature pooling,

- augmenting mask prediction with fully connected layers.
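These three items are the modifications introduced by the Path Aggregation Network (PANet) of Liu et al. The second one works as follows. Instead of pooling a region of interest (RoI) from a single pyramid level chosen by its size, adaptive feature pooling pools the RoI from every level and fuses the pooled grids element-wise (max or sum). The sketch below is a toy, pure-Python illustration with deliberate simplifications: single-channel feature maps, a plain max-based RoI pooling standing in for RoIAlign, and no learned layers before fusion. The function names are hypothetical, and this is not PANet's implementation.

```python
from typing import List, Sequence, Tuple

Grid = List[List[float]]  # single-channel feature map (toy assumption)
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in image pixels


def roi_max_pool(fmap: Grid, box: Box, stride: int, out_size: int = 2) -> Grid:
    """Max-pool `box` from a feature map whose cells cover stride x stride
    pixels into an out_size x out_size grid (simplified stand-in for RoIAlign)."""
    h, w = len(fmap), len(fmap[0])
    # Project the box onto this level's feature-map coordinates.
    x0, y0, x1, y1 = (c / stride for c in box)
    pooled: Grid = []
    for by in range(out_size):
        row = []
        for bx in range(out_size):
            # Bin limits: each bin covers at least one cell and stays inside the map.
            ys = min(int(y0 + (y1 - y0) * by / out_size), h - 1)
            ye = max(ys + 1, int(y0 + (y1 - y0) * (by + 1) / out_size))
            xs = min(int(x0 + (x1 - x0) * bx / out_size), w - 1)
            xe = max(xs + 1, int(x0 + (x1 - x0) * (bx + 1) / out_size))
            row.append(max(fmap[y][x]
                           for y in range(ys, min(ye, h))
                           for x in range(xs, min(xe, w))))
        pooled.append(row)
    return pooled


def adaptive_feature_pooling(pyramid: Sequence[Grid], strides: Sequence[int],
                             box: Box, out_size: int = 2) -> Grid:
    """Pool the same RoI from every pyramid level and fuse by element-wise max."""
    per_level = [roi_max_pool(f, box, s, out_size) for f, s in zip(pyramid, strides)]
    return [[max(p[y][x] for p in per_level) for x in range(out_size)]
            for y in range(out_size)]


if __name__ == "__main__":
    p2 = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]  # stride 4
    p3 = [[20, 0], [0, 0]]                                               # stride 8
    print(adaptive_feature_pooling([p2, p3], [4, 8], (0, 0, 16, 16)))
    # [[20, 8], [14, 16]]
```

With a 4x4 stride-4 level and a 2x2 stride-8 level, the fused grid takes the coarse level's strong activation in the top-left bin and the fine level's values elsewhere. Letting every level contribute to each region is the point of the technique.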

1.2. Single-Stage Methods

2. Methods

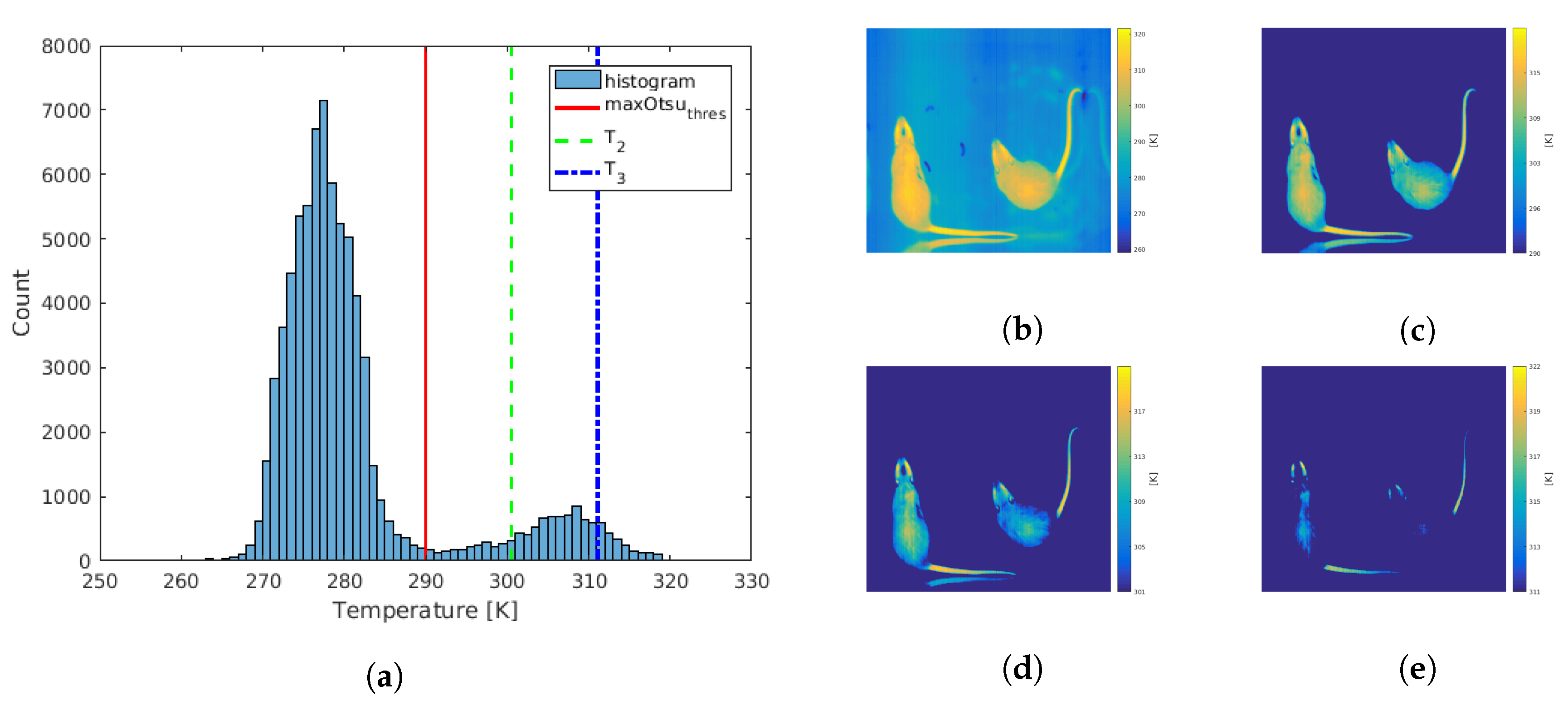

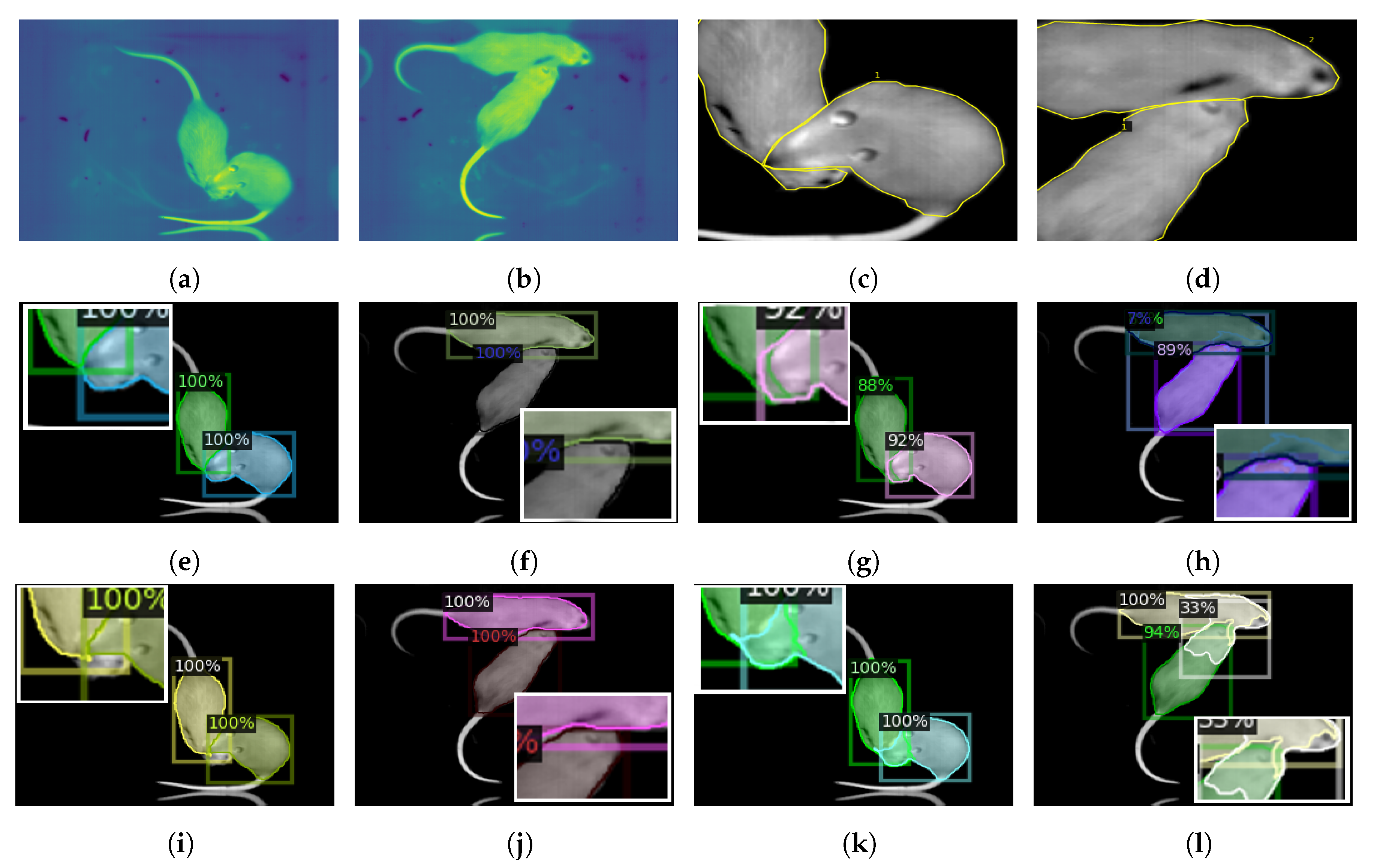

2.1. Data Preprocessing
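The result tables compare several input representations (orig, ch1, ch2, ch3 and 16-bit). The Conclusions also report that converting the data from a narrower raw thermal range did not improve segmentation. This section does not describe the exact conversion. The sketch below is therefore only an assumption: it shows one common approach, linear windowing of raw 16-bit thermal values into 8-bit grey levels. The function name and the raw values are hypothetical.

```python
from typing import List


def window_to_8bit(raw: List[int], low: int, high: int) -> List[int]:
    """Map raw thermal values linearly from [low, high] to 0..255.

    Values outside the window are clipped, so a narrower window spends more
    grey levels on the chosen band but saturates everything outside it.
    Integer arithmetic (round half up) keeps the output deterministic.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    span = high - low
    out = []
    for v in raw:
        v = min(max(v, low), high)                         # clip to the window
        out.append(((v - low) * 255 + span // 2) // span)  # scale and round
    return out


if __name__ == "__main__":
    frame = [7000, 7500, 8000, 9000]          # hypothetical raw 16-bit readings
    print(window_to_8bit(frame, 7000, 9000))  # [0, 64, 128, 255]
    print(window_to_8bit(frame, 7400, 8000))  # [0, 43, 255, 255]
```

The second call shows the trade-off: the narrower window stretches contrast inside 7400 to 8000 but maps everything hotter than the window to the same value 255.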

2.2. Training Models

2.3. Learning Configurations

2.4. Evaluation Metrics
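As far as can be inferred from the result tables, the bbox and segm columns are COCO-style metrics as reported by Detectron2. Under that convention, a predicted instance matches a ground-truth instance when their intersection over union (IoU) reaches a threshold: 0.5 for AP at IoU 0.50 and 0.75 for AP at IoU 0.75. The minimal pure-Python sketch below shows the two IoU variants: box IoU for bbox and pixel-mask IoU for segm. It is illustrative and is not the evaluation code used by the authors.

```python
from typing import List, Sequence

Box = Sequence[float]  # (x0, y0, x1, y1), continuous pixel coordinates


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (used for bbox AP)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def mask_iou(a: List[List[int]], b: List[List[int]]) -> float:
    """Intersection over union of two equally sized binary masks (used for segm AP)."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += bool(pa and pb)  # pixel belongs to both instances
            union += bool(pa or pb)   # pixel belongs to at least one instance
    return inter / union if union else 0.0


if __name__ == "__main__":
    print(box_iou((0, 0, 4, 4), (0, 0, 4, 2)))  # 0.5, a match at IoU 0.50 only
    print(mask_iou([[1, 1, 0], [1, 1, 0]], [[0, 1, 1], [0, 1, 1]]))  # 2 of 6 pixels
```

Two rodents whose bounding boxes overlap heavily can still have low mask IoU. This is one reason bbox and segm scores are reported separately.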

3. Results

4. Discussion

5. Conclusions

- the adopted deep instance segmentation algorithms have been experimentally verified for detecting laboratory rodents in thermal images,

- it was shown that laboratory rodents can be accurately detected in thermal images (and separated from each other) using the Mask R-CNN and TensorMask models,

- the obtained results demonstrated that the adopted TensorMask model, pre-trained on visible-light images and then trained on thermal sequences, gave the best results, with a mean average precision (mAP) greater than 90,

- it was verified that converting the thermal data from a narrower range of raw thermal values does not improve segmentation quality,

- pre-trained single-stage networks achieve better results than pre-trained two-stage models; however, two-stage methods seem to work better than single-stage ones when trained from scratch,

- pre-training the network on visible-light images improves the segmentation results for thermal images.

Author Contributions

Funding

Conflicts of Interest

References

1. Lezak, K.; Missig, G.; Carlezon, W.A., Jr. Behavioral methods to study anxiety in rodents. Dialogues Clin. Neurosci. 2017, 19, 181–191.

2. Franco, N.H.; Gerós, A.; Oliveira, L.; Olsson, I.A.S.; Aguiar, P. ThermoLabAnimal—A high-throughput analysis software for non-invasive thermal assessment of laboratory mice. Physiol. Behav. 2019, 207, 113–121.

3. Junior, C.F.C.; Pederiva, C.N.; Bose, R.C.; Garcia, V.A.; Lino-de-Oliveira, C.; Marino-Neto, J. ETHOWATCHER: Validation of a tool for behavioral and video-tracking analysis in laboratory animals. Comput. Biol. Med. 2012, 42, 257–264.

4. Grant, E.; Mackintosh, J. A comparison of the social postures of some common laboratory rodents. Behaviour 1963, 21, 246–259.

5. Kask, A.; Nguyen, H.P.; Pabst, R.; von Hoorsten, S. Factors influencing behavior of group-housed male rats in the social interaction test—Focus on cohort removal. Physiol. Behav. 2001, 74, 277–282.

6. Aslani, S.; Harb, M.; Costa, P.; Almeida, O.; Sousa, N.; Palha, J. Day and night: Diurnal phase influences the response to chronic mild stress. Front. Behav. Neurosci. 2014, 8, 82.

7. Roedel, A.; Storch, C.; Holsboer, F.; Ohl, F. Effects of light or dark phase testing on behavioural and cognitive performance in DBA mice. Lab. Anim. 2006, 40, 371–381.

8. Manzano-Szalai, K.; Pali-Schöll, I.; Krishnamurthy, D.; Stremnitzer, C.; Flaschberger, I.; Jensen-Jarolim, E. Anaphylaxis Imaging: Non-Invasive Measurement of Surface Body Temperature and Physical Activity in Small Animals. PLoS ONE 2016, 11, e0150819.

9. Etehadtavakol, M.; Emrani, Z.; Ng, E.Y.K. Rapid extraction of the hottest or coldest regions of medical thermographic images. Med. Biol. Eng. Comput. 2019, 57, 379–388.

10. Jang, E.; Park, B.; Park, M.; Kim, S.; Sohn, J. Analysis of physiological signals for recognition of boredom, pain, and surprise emotions. J. Phys. Anthropol. 2015, 34, 1–12.

11. Tan, C.; Knight, Z. Regulation of Body Temperature by the Nervous System. Neuron 2018, 98, 31–48.

12. Sona, D.; Zanotto, M.; Papaleo, F.; Murino, V. Automated Discovery of Behavioural Patterns in Rodents. In Proceedings of the 9th International Conference on Methods and Techniques in Behavioral Research, Wageningen, The Netherlands, 27–29 August 2014.

13. Koniar, D.; Hargaš, L.; Loncová, Z.; Simonová, A.; Duchoň, F.; Beňo, P. Visual system-based object tracking using image segmentation for biomedical applications. Electr. Eng. 2017, 99, 1349–1366.

14. Fleuret, J.; Ouellet, V.; Moura-Rocha, L.; Charbonneau, É.; Saucier, L.; Faucitano, L.; Maldague, X. A Real Time Animal Detection And Segmentation Algorithm For IRT Images In Indoor Environments. Quant. InfraRed Thermogr. 2016, 265–274.

15. Kim, W.; Cho, Y.B.; Lee, S. Thermal Sensor-Based Multiple Object Tracking for Intelligent Livestock Breeding. IEEE Access 2017, 5, 27453–27463.

16. Mazur-Milecka, M.; Ruminski, J. Deep learning based thermal image segmentation for laboratory animals tracking. Quant. InfraRed Thermogr. J. 2020, 1–18.

17. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241.

18. Milletari, F.; Navab, N.; Ahmadi, S. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the IEEE 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

19. Hariharan, B.; Arbelaez, P.; Girshick, R.B.; Malik, J. Simultaneous Detection and Segmentation. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 297–312.

20. Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.

21. Dai, J.; He, K.; Sun, J. Instance-aware Semantic Segmentation via Multi-task Network Cascades. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3150–3158.

22. Chen, L.; Hermans, A.; Papandreou, G.; Schroff, F.; Wang, P.; Adam, H. MaskLab: Instance Segmentation by Refining Object Detection with Semantic and Direction Features. arXiv 2017, arXiv:1712.04837.

23. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

24. Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask Scoring R-CNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6409–6418.

25. Girshick, R.B. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

26. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2015, arXiv:1506.01497.

27. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

28. Chen, K.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Shi, J.; Ouyang, W.; et al. Hybrid Task Cascade for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4974–4983.

29. Yao, J.; Yu, Z.; Yu, J.; Tao, D. Single Pixel Reconstruction for One-stage Instance Segmentation. arXiv 2019, arXiv:1904.07426.

30. Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT: Real-time Instance Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9157–9166.

31. Xiang, C.; Tian, S.; Zou, W.; Xu, C. SAIS: Single-stage Anchor-free Instance Segmentation. arXiv 2019, arXiv:cs.CV/1912.01176.

32. Ying, H.; Huang, Z.; Liu, S.; Shao, T.; Zhou, K. EmbedMask: Embedding Coupling for One-stage Instance Segmentation. arXiv 2019, arXiv:cs.CV/1912.01954.

33. Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully Convolutional One-Stage Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9627–9636.

34. Chen, X.; Girshick, R.B.; He, K.; Dollár, P. TensorMask: A Foundation for Dense Object Segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2061–2069.

35. Mazur-Milecka, M.; Ruminski, J. Automatic analysis of the aggressive behavior of laboratory animals using thermal video processing. In Proceedings of the IEEE Conference of the Engineering in Medicine and Biology Society, EMBC, Seogwipo, Korea, 11–15 July 2017; pp. 3827–3830.

36. Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and TensorFlow. 2017. Available online: https://github.com/matterport/Mask_RCNN (accessed on 1 February 2020).

37. Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 1 May 2020).

38. He, K.; Girshick, R.; Dollár, P. Rethinking ImageNet Pre-Training. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27–28 October 2019; pp. 4917–4926.

39. Wu, Y.; He, K. Group Normalization. Int. J. Comput. Vis. 2019.

40. Dutta, A.; Zisserman, A. The VIA Annotation Software for Images, Audio and Video. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; ACM: New York, NY, USA, 2019.

41. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

42. Gupta, A.; Dollár, P.; Girshick, R. LVIS: A Dataset for Large Vocabulary Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5356–5364.

43. Lu, Y.; Lu, C.; Tang, C. Online Video Object Detection Using Association LSTM. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2363–2371.

44. Oksuz, K.; Cam, B.C.; Akbas, E.; Kalkan, S. Localization Recall Precision (LRP): A New Performance Metric for Object Detection. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 521–537.

| Mask R-CNN (transfer learning) | | TensorMask (transfer learning) | | Mask R-CNN (training from scratch) | | TensorMask (training from scratch) | |
|---|---|---|---|---|---|---|---|
| Batch | Epochs | Batch | Epochs | Batch | Epochs | Batch | Epochs |
| 2 | 1000 | 2 | 2000 | 4 | 16,000 | 2 | 100,000 |
| 4 | 1000 | 4 | 1000 | 4 | 24,000 | 4 | 100,000 |
| 4 | 2000 | 4 | 2000 | 8 | 16,000 | | |
| | | 8 | 2000 | 8 | 24,000 | | |
| | | | | 2 | 100,000 | | |

| Input / configuration | bbox mAP | bbox AP50 | bbox AP75 | segm mAP | segm AP50 | segm AP75 |
|---|---|---|---|---|---|---|
| orig | | | | | | |
| 2 batch, 1000 epochs | 88.641 | 100 | 98.99 | 89.007 | 100 | 98.99 |
| 4 batch, 1000 epochs | 89.025 | 100 | 100 | 89.444 | 100 | 100 |
| 4 batch, 2000 epochs | 88.781 | 100 | 99 | 89.068 | 100 | 99 |
| ch1 | | | | | | |
| 2 batch, 1000 epochs | 87.332 | 100 | 100 | 89.289 | 100 | 100 |
| 4 batch, 1000 epochs | 86.942 | 100 | 100 | 89.715 | 100 | 100 |
| 4 batch, 2000 epochs | 90.205 | 100 | 100 | 89.655 | 100 | 100 |
| ch2 | | | | | | |
| 2 batch, 1000 epochs | 85.252 | 100 | 99 | 87.323 | 100 | 100 |
| 4 batch, 1000 epochs | 85.847 | 100 | 98.98 | 88.256 | 100 | 100 |
| 4 batch, 2000 epochs | 87.915 | 100 | 98.98 | 88.893 | 100 | 100 |
| ch3 | | | | | | |
| 2 batch, 1000 epochs | 63.719 | 99.114 | 73.409 | 52.901 | 93.649 | 59.722 |
| 4 batch, 1000 epochs | 69.028 | 99.417 | 80.98 | 55.181 | 97.284 | 60.683 |
| 4 batch, 2000 epochs | 73.908 | 99.952 | 87.824 | 65.687 | 98.902 | 83.376 |
| 16-bit | | | | | | |
| 2 batch, 1000 epochs | 88.128 | 100 | 99.01 | 89.604 | 100 | 100 |
| 4 batch, 1000 epochs | 89.965 | 100 | 100 | 89.194 | 100 | 100 |
| 4 batch, 2000 epochs | 90.549 | 100 | 100 | 89.417 | 100 | 100 |
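In each results table, the bbox and segm groups contain an mAP column followed by two AP columns. Consistent with the COCO convention, these appear to be AP at IoU 0.50 and 0.75. Under the same convention, mAP averages AP over IoU thresholds 0.50 to 0.95 in steps of 0.05, and all values are reported on a 0 to 100 scale. The sketch below re-implements that procedure for a single image and a single class (rodent) in plain Python. Detections are matched greedily in order of descending score, precision is made monotone, and it is then sampled at 101 recall levels. The sketch assumes a precomputed detection-by-ground-truth IoU matrix. It omits COCO details such as crowd regions, area ranges and the per-image detection limit, so it is an illustration rather than the pycocotools implementation. The function names are hypothetical.

```python
from typing import List, Sequence


def ap_at_iou(scores: Sequence[float], ious: Sequence[Sequence[float]],
              num_gt: int, thr: float) -> float:
    """Single-class AP at one IoU threshold with 101-point interpolation.

    ious[d][g] is the IoU between detection d and ground-truth instance g
    (box IoU for bbox, mask IoU for segm).
    """
    if num_gt <= 0:
        raise ValueError("at least one ground-truth instance is required")
    order = sorted(range(len(scores)), key=lambda d: -scores[d])
    matched = set()
    precision: List[float] = []
    recall: List[float] = []
    tp = 0
    for k, d in enumerate(order, start=1):
        # Match to the unmatched ground truth with the highest IoU >= thr.
        best, best_iou = -1, thr
        for g in range(num_gt):
            if g not in matched and ious[d][g] >= best_iou:
                best, best_iou = g, ious[d][g]
        if best >= 0:
            matched.add(best)
            tp += 1
        precision.append(tp / k)
        recall.append(tp / num_gt)
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(precision) - 2, -1, -1):
        precision[k] = max(precision[k], precision[k + 1])
    # Sample precision at recall levels 0.00, 0.01, ..., 1.00 (0 if unreachable).
    total = 0.0
    for i in range(101):
        r = i / 100
        total += next((p for p, rc in zip(precision, recall) if rc >= r), 0.0)
    return total / 101


def coco_map(scores: Sequence[float], ious: Sequence[Sequence[float]],
             num_gt: int) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]
    return sum(ap_at_iou(scores, ious, num_gt, t) for t in thresholds) / len(thresholds)


if __name__ == "__main__":
    # Hypothetical frame: two rodents, three detections.
    scores = [0.9, 0.8, 0.3]
    ious = [[0.92, 0.0], [0.0, 0.70], [0.1, 0.0]]
    print(100 * ap_at_iou(scores, ious, 2, 0.5))  # 100.0
    print(round(100 * coco_map(scores, ious, 2), 3))  # 70.198
```

In this toy frame, both rodents are found at IoU 0.50, so AP at 0.50 is 100. The second detection falls below 0.75, however, and pulls mAP down to about 70. This mirrors how the tables show AP at IoU 0.50 near 100 while mAP stays lower.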

| Input / configuration | bbox mAP | bbox AP50 | bbox AP75 | segm mAP | segm AP50 | segm AP75 |
|---|---|---|---|---|---|---|
| orig | | | | | | |
| 4 batch, 16,000 epochs | 81.811 | 100 | 98.307 | 85.586 | 100 | 100 |
| 4 batch, 24,000 epochs | 82.567 | 100 | 100 | 86.864 | 100 | 100 |
| 8 batch, 16,000 epochs | 81.658 | 100 | 100 | 84.728 | 100 | 98.584 |
| 8 batch, 24,000 epochs | 84.465 | 100 | 100 | 86.224 | 100 | 100 |
| 2 batch, 100,000 epochs | 86.695 | 100 | 100 | 89.569 | 100 | 100 |
| ch1 | | | | | | |
| 4 batch, 16,000 epochs | 82.085 | 100 | 100 | 83.666 | 100 | 100 |
| 4 batch, 24,000 epochs | 84.368 | 100 | 100 | 85.821 | 100 | 100 |
| 8 batch, 16,000 epochs | 81.426 | 100 | 100 | 84.038 | 100 | 100 |
| 8 batch, 24,000 epochs | 85.153 | 100 | 98.772 | 86.389 | 100 | 100 |
| 2 batch, 100,000 epochs | 86.564 | 100 | 100 | 87.208 | 100 | 100 |
| ch2 | | | | | | |
| 4 batch, 16,000 epochs | 80.629 | 100 | 98.95 | 80.896 | 100 | 100 |
| 4 batch, 24,000 epochs | 82.979 | 100 | 100 | 84.834 | 100 | 100 |
| 8 batch, 16,000 epochs | 80.983 | 100 | 100 | 79.931 | 100 | 100 |
| 8 batch, 24,000 epochs | 83.091 | 100 | 100 | 84.428 | 100 | 100 |
| 2 batch, 100,000 epochs | 82.55 | 100 | 97.55 | 82.397 | 100 | 100 |
| ch3 | | | | | | |
| 4 batch, 16,000 epochs | 60.947 | 97.766 | 73.004 | 48.183 | 93.325 | 47.4 |
| 4 batch, 24,000 epochs | 67.739 | 98.113 | 79.816 | 58.782 | 96.882 | 69.92 |
| 8 batch, 16,000 epochs | 65.355 | 97.874 | 74.09 | 54.855 | 96.873 | 64.968 |
| 8 batch, 24,000 epochs | 66.014 | 98.4 | 78.156 | 57.452 | 95.887 | 63.699 |
| 2 batch, 100,000 epochs | 66.369 | 98.504 | 84.891 | 56.116 | 94.891 | 67.155 |
| 16-bit | | | | | | |
| 4 batch, 16,000 epochs | 82.826 | 100 | 98.842 | 84.561 | 100 | 100 |
| 4 batch, 24,000 epochs | 84.29 | 100 | 100 | 86.111 | 100 | 100 |
| 8 batch, 16,000 epochs | 83.493 | 100 | 97.82 | 85.313 | 100 | 100 |
| 8 batch, 24,000 epochs | 85.596 | 100 | 100 | 86.607 | 100 | 100 |
| 2 batch, 100,000 epochs | 84.862 | 100 | 100 | 85.877 | 100 | 100 |

| Input / configuration | bbox mAP | bbox AP50 | bbox AP75 | segm mAP | segm AP50 | segm AP75 |
|---|---|---|---|---|---|---|
| orig | | | | | | |
| 2 batch, 2000 epochs | 90.646 | 100 | 98.01 | 89.82 | 100 | 100 |
| 4 batch, 1000 epochs | 91.264 | 100 | 99.01 | 89.877 | 100 | 99.01 |
| 4 batch, 2000 epochs | 90.916 | 100 | 99.01 | 90.158 | 100 | 100 |
| 8 batch, 2000 epochs | 90.672 | 100 | 98.96 | 89.824 | 100 | 100 |
| ch1 | | | | | | |
| 2 batch, 2000 epochs | 89.091 | 100 | 100 | 89.808 | 100 | 100 |
| 4 batch, 1000 epochs | 89.624 | 100 | 100 | 89.547 | 100 | 99.01 |
| 4 batch, 2000 epochs | 90.299 | 100 | 100 | 90.171 | 100 | 99.01 |
| 8 batch, 2000 epochs | 90.052 | 100 | 100 | 90.087 | 100 | 99.01 |
| ch2 | | | | | | |
| 2 batch, 2000 epochs | 88.319 | 100 | 100 | 89.496 | 100 | 100 |
| 4 batch, 1000 epochs | 88.17 | 100 | 100 | 87.949 | 100 | 100 |
| 4 batch, 2000 epochs | 87.912 | 100 | 98.931 | 89.335 | 100 | 100 |
| 8 batch, 2000 epochs | 88.828 | 100 | 100 | 89.264 | 100 | 100 |
| ch3 | | | | | | |
| 2 batch, 2000 epochs | 74.37 | 99.933 | 88.433 | 65.062 | 99.933 | 80.743 |
| 4 batch, 1000 epochs | 71.325 | 99.834 | 84.895 | 60.251 | 99.602 | 69.757 |
| 4 batch, 2000 epochs | 75.031 | 99.961 | 91.558 | 64.849 | 99.423 | 79.397 |
| 8 batch, 2000 epochs | 76.159 | 100 | 91.406 | 65.307 | 99 | 83.105 |
| 16-bit | | | | | | |
| 2 batch, 2000 epochs | 91.248 | 100 | 100 | 90.249 | 100 | 100 |
| 4 batch, 1000 epochs | 90.443 | 100 | 98.951 | 89.857 | 100 | 98.951 |
| 4 batch, 2000 epochs | 91.609 | 100 | 100 | 90.199 | 100 | 100 |
| 8 batch, 2000 epochs | 90.939 | 100 | 100 | 90.171 | 100 | 98.99 |

| Input / configuration | bbox mAP | bbox AP50 | bbox AP75 | segm mAP | segm AP50 | segm AP75 |
|---|---|---|---|---|---|---|
| orig | | | | | | |
| 2 batch, 100,000 epochs | 78.235 | 100 | 92.41 | 79.615 | 100 | 95.535 |
| 4 batch, 100,000 epochs | 76.413 | 99.99 | 95.435 | 80.371 | 99.99 | 91.678 |
| ch1 | | | | | | |
| 2 batch, 100,000 epochs | 78.862 | 100 | 98.307 | 77.724 | 100 | 90.687 |
| 4 batch, 100,000 epochs | 77.725 | 100 | 94.535 | 81.259 | 100 | 95.188 |
| ch2 | | | | | | |
| 2 batch, 100,000 epochs | 64.42 | 99.18 | 69.516 | 62.307 | 96.206 | 71.867 |
| 4 batch, 100,000 epochs | 75.275 | 99.833 | 90.342 | 74.431 | 99.833 | 89.674 |
| ch3 | | | | | | |
| 2 batch, 100,000 epochs | 44.557 | 84.367 | 40.402 | 13.097 | 60.133 | 0.012 |
| 4 batch, 100,000 epochs | 55.982 | 95.072 | 57.009 | 32.752 | 89.025 | 16.88 |
| 16-bit | | | | | | |
| 2 batch, 100,000 epochs | 78.112 | 99.99 | 97.29 | 80.198 | 99.99 | 94.435 |
| 4 batch, 100,000 epochs | 76.296 | 100 | 90.662 | 81.267 | 100 | 94.887 |

| | bbox mAP | bbox AP50 | bbox AP75 | segm mAP | segm AP50 | segm AP75 |
|---|---|---|---|---|---|---|
| Mask R-CNN | | | | | | |
| MS COCO (implementation [37]) | 4.14 | 13.39 | 1.4 | 5.36 | 14.44 | 1.05 |
| MS COCO (implementation [36]) | 7.62 | 13.5 | 7.62 | - | - | - |
| Cityscapes | 0.06 | 0.3 | 0 | 0.01 | 0.09 | 0 |
| LVIS | 0.71 | 3.45 | 0.04 | 0 | 0 | 0 |
| TensorMask | | | | | | |
| MS COCO (implementation [37]) | 1.69 | 3.41 | 1.29 | 3.06 | 6.31 | 2.6 |

| mAP | Mask R-CNN pre-trained | | Mask R-CNN from scratch | | TensorMask pre-trained | | TensorMask from scratch | |
|---|---|---|---|---|---|---|---|---|
| | bbox | segm | bbox | segm | bbox | segm | bbox | segm |
| orig | | | | | | | | |
| Fold 1 | 88.8 | 89.1 | 83.6 | 85.9 | 90.9 | 90.2 | 78.2 | 79.6 |
| Fold 2 | 92.0 | 89.5 | 84.7 | 87.6 | 91.9 | 90.5 | 73.9 | 70.6 |
| Fold 3 | 89.7 | 89.2 | 87.7 | 88.0 | 90.5 | 90.2 | 70.6 | 76.6 |
| Average | 90.2 | 89.3 | 85.3 | 87.2 | 91.1 | 90.3 | 74.2 | 75.6 |
| ch1 | | | | | | | | |
| Fold 1 | 90.2 | 89.7 | 84.6 | 87.2 | 90.3 | 90.2 | 78.9 | 77.7 |
| Fold 2 | 88.4 | 89.1 | 88.3 | 88.9 | 91.3 | 90.4 | 78.7 | 77.8 |
| Fold 3 | 89.6 | 89.4 | 88.3 | 89.0 | 90.2 | 89.8 | 75 | 74.4 |
| Average | 89.4 | 89.4 | 87.1 | 88.4 | 90.6 | 90.1 | 77.5 | 76.6 |
| ch2 | | | | | | | | |
| Fold 1 | 87.9 | 88.9 | 82.6 | 82.4 | 87.9 | 89.3 | 64.4 | 62.3 |
| Fold 2 | 84.7 | 86.4 | 86.6 | 88.2 | 89.1 | 89.0 | 66 | 57.2 |
| Fold 3 | 89.5 | 88.1 | 85.0 | 87.7 | 88.3 | 88.9 | 65.7 | 54.3 |
| Average | 87.4 | 87.8 | 84.7 | 86.1 | 88.4 | 89.1 | 65.4 | 57.9 |
| ch3 | | | | | | | | |
| Fold 1 | 73.9 | 65.7 | 66.4 | 56.1 | 75.0 | 64.8 | 44.6 | 13.1 |
| Fold 2 | 63.0 | 51.9 | 65.6 | 57 | 70.9 | 61.9 | 40.2 | 11.2 |
| Fold 3 | 72.6 | 62.9 | 66.6 | 62.4 | 71.3 | 62.5 | 36.6 | 11.2 |
| Average | 69.8 | 60.2 | 66.2 | 58.5 | 72.4 | 63.1 | 40.5 | 11.8 |
| 16-bit | | | | | | | | |
| Fold 1 | 90.5 | 89.4 | 84.9 | 85.9 | 91.6 | 90.2 | 78.1 | 80.2 |
| Fold 2 | 89.2 | 89.4 | 85.8 | 88.5 | 92.5 | 90.8 | 76.8 | 77.6 |
| Fold 3 | 90.0 | 89.1 | 86.6 | 87.8 | 90.6 | 89.9 | 71.5 | 73.9 |
| Average | 89.9 | 89.3 | 85.8 | 87.4 | 91.6 | 90.3 | 75.5 | 77.2 |
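The average rows of the cross-validation table are the arithmetic means of the three per-fold values, rounded to one decimal place. The short check below covers the bbox mAP of the orig input, with the values copied from the table. It also quantifies the pre-training effect noted in the Conclusions. The gain is computed from unrounded means, so it can differ by 0.1 from a difference of the rounded averages. The dictionary and function names are illustrative.

```python
from statistics import mean
from typing import Dict, List

# bbox mAP per fold (folds 1-3) for the "orig" input, copied from the table.
ORIG_BBOX_MAP: Dict[str, List[float]] = {
    "Mask R-CNN pre-trained": [88.8, 92.0, 89.7],
    "Mask R-CNN from scratch": [83.6, 84.7, 87.7],
    "TensorMask pre-trained": [90.9, 91.9, 90.5],
    "TensorMask from scratch": [78.2, 73.9, 70.6],
}


def fold_averages(per_fold: Dict[str, List[float]]) -> Dict[str, float]:
    """Mean over folds, rounded to one decimal as in the 'average' rows."""
    return {name: round(mean(vals), 1) for name, vals in per_fold.items()}


def pretraining_gain(per_fold: Dict[str, List[float]],
                     pre_key: str, scratch_key: str) -> float:
    """Difference of unrounded fold means (pre-trained minus from scratch)."""
    return round(mean(per_fold[pre_key]) - mean(per_fold[scratch_key]), 1)


if __name__ == "__main__":
    print(fold_averages(ORIG_BBOX_MAP))
    print(pretraining_gain(ORIG_BBOX_MAP, "Mask R-CNN pre-trained",
                           "Mask R-CNN from scratch"))  # 4.8
    print(pretraining_gain(ORIG_BBOX_MAP, "TensorMask pre-trained",
                           "TensorMask from scratch"))  # 16.9
```

The single-stage TensorMask gains about 16.9 mAP points from pre-training, against roughly 4.8 for Mask R-CNN. This is consistent with the conclusion that single-stage models lead when pre-trained but trail two-stage models when trained from scratch.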

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mazur-Milecka, M.; Kocejko, T.; Ruminski, J. Deep Instance Segmentation of Laboratory Animals in Thermal Images. Appl. Sci. 2020, 10, 5979. https://doi.org/10.3390/app10175979
