On Modeling and Simulation of Resource Allocation Policies in Cloud Computing Using Colored Petri Nets

Abstract

1. Introduction

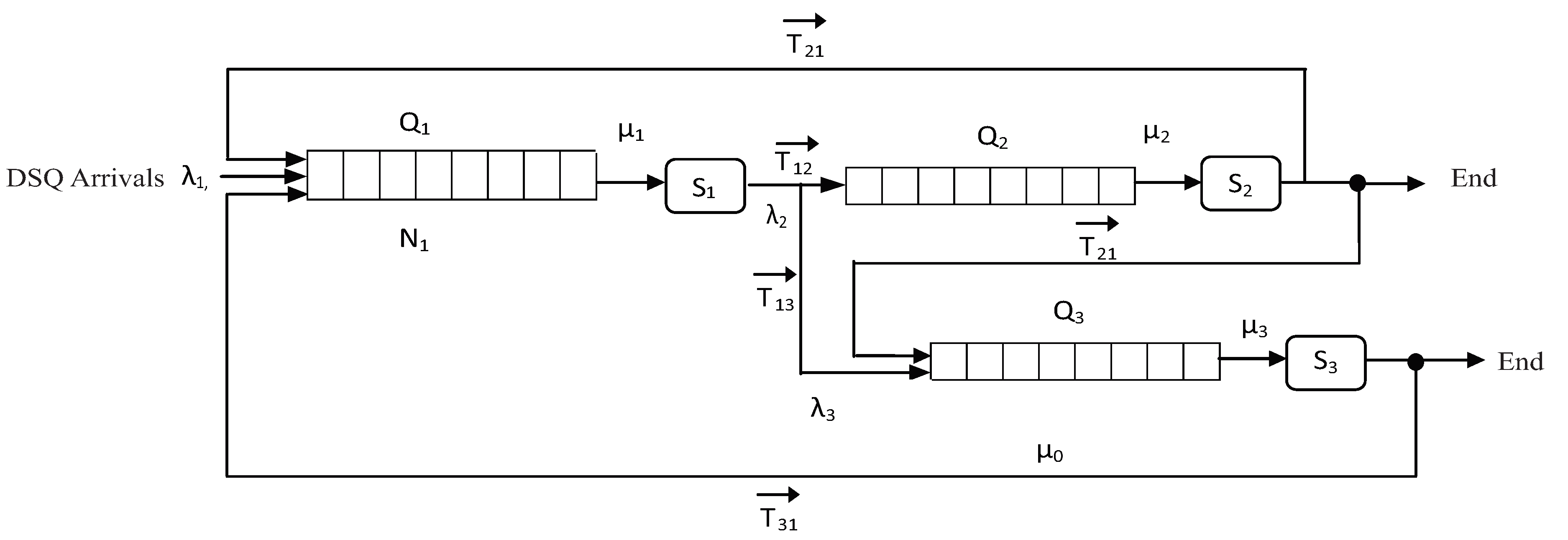

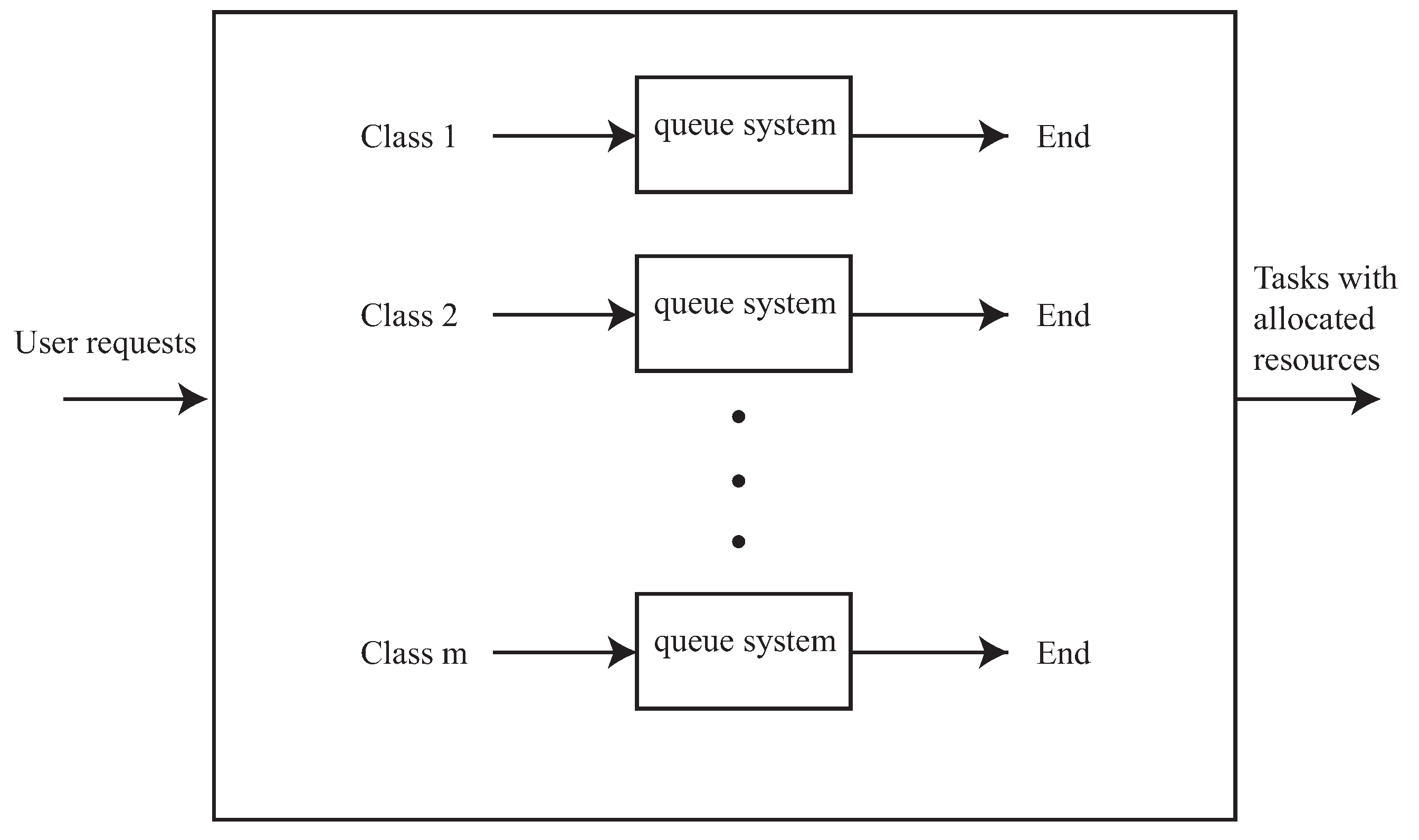

2. Resource Allocation Model

3. The CPN Model

3.1. The CPN Formalism

3.1.1. Data Extensions

1. A set of places P

2. A set of transitions T

3. An input function I

4. An output function O
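The four components listed above can be sketched as a small data structure. This is a minimal illustration of a plain (uncolored, untimed) Petri net, not the authors' implementation; the class name `PetriNet`, the `marking` field, and the place and transition names `p1`, `p2`, `t1` are hypothetical and chosen only for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PetriNet:
    """A basic Petri net as the 4-tuple (P, T, I, O), plus a marking."""
    places: set[str]                      # P
    transitions: set[str]                 # T
    inputs: dict[str, dict[str, int]]     # I: transition -> {input place: tokens needed}
    outputs: dict[str, dict[str, int]]    # O: transition -> {output place: tokens produced}
    marking: dict[str, int] = field(default_factory=dict)  # place -> token count (assumed)

    def enabled(self, t: str) -> bool:
        """A transition is enabled when every input place holds enough tokens."""
        return all(self.marking.get(p, 0) >= n for p, n in self.inputs[t].items())

    def fire(self, t: str) -> None:
        """Consume tokens from the input places and produce tokens in the output places."""
        if not self.enabled(t):
            raise ValueError(f"transition {t!r} is not enabled")
        for p, n in self.inputs[t].items():
            self.marking[p] -= n
        for p, n in self.outputs[t].items():
            self.marking[p] = self.marking.get(p, 0) + n


# Hypothetical two-place net: firing t1 moves one token from p1 to p2.
net = PetriNet(
    places={"p1", "p2"},
    transitions={"t1"},
    inputs={"t1": {"p1": 1}},
    outputs={"t1": {"p2": 1}},
    marking={"p1": 1, "p2": 0},
)
net.fire("t1")
print(net.marking)  # {'p1': 0, 'p2': 1}
```

Representing I and O as dictionaries keyed by transition keeps the enabling check and the firing rule to one loop each. Colored tokens, time stamps, and guards would extend this structure.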

3.1.2. Timing Extensions

3.1.3. Guarding Expressions

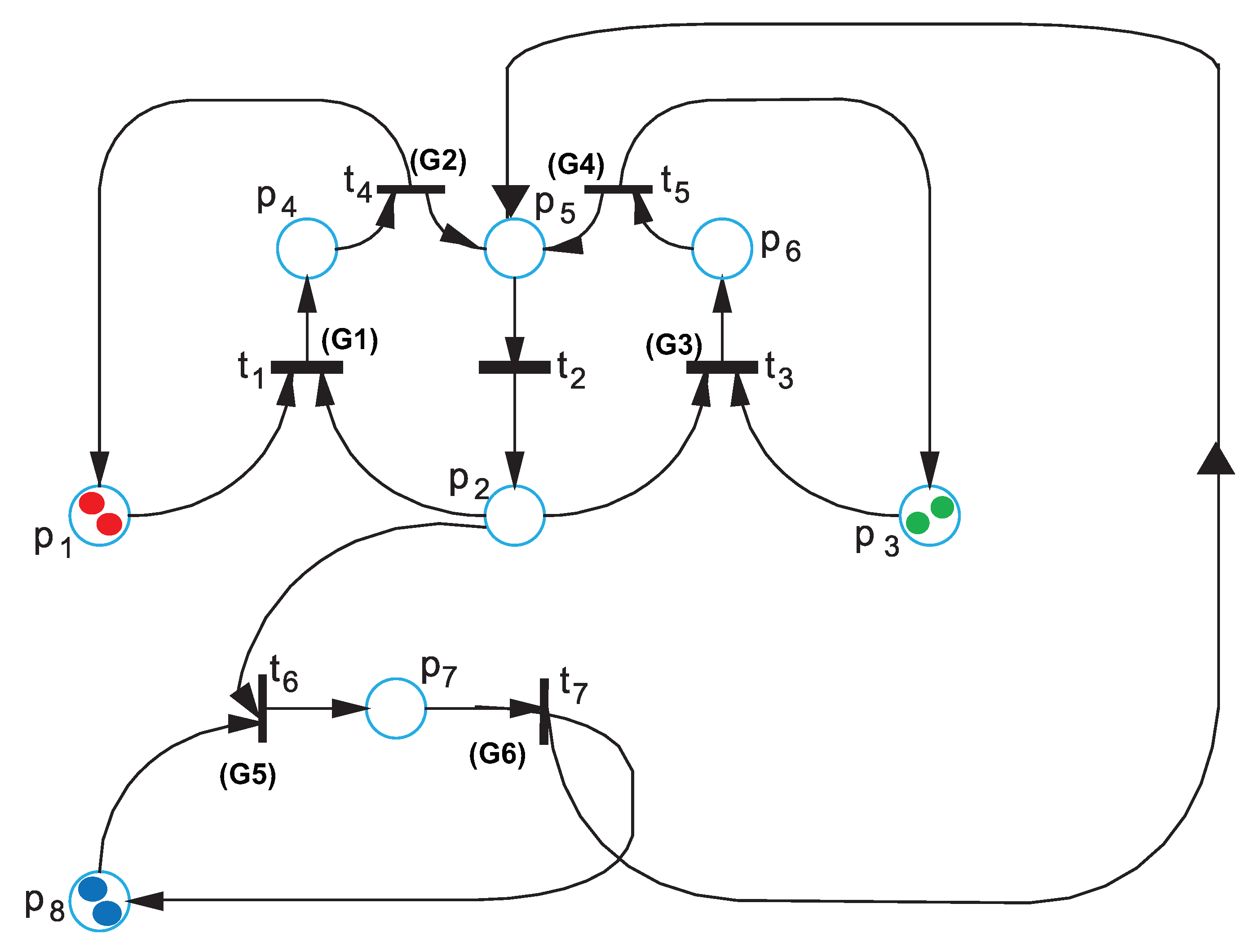

3.2. The CPN Model

3.2.1. The Core of Queue Members of a Queue System

- (1) : The transition from the DSQ to , in other words, the dominant resources have been allocated to a job, which then applies for resources from server and thus enters its queue.

- (2) : The transition from the DSQ to , in other words, the dominant resources have been allocated to a job, which then applies for resources from server and thus enters its queue.

- (3) : The transition from the to the DSQ; the requested resources have been allocated from to a job, which returns to request extra resources from the DSQ and thus re-enters its queue.

- (4) : The transition from the to the DSQ; the requested resources have been allocated from to a job, which returns to request extra resources from the DSQ and thus re-enters its queue.

- Places

- : Jobs requesting DSQ resources

- : Processing of next job request

- : Jobs requesting resources from server

- : Fair allocation policy over DSQ

- : All ToRRs (Type of Resources Requested; see Section 3.2.2, where the token structure is described) updated; next job selection

- : Fair allocation policy over

- : Fair allocation policy over

- : Jobs requesting resources from server

- Transitions

- : Compute DSQ resources to be allocated for the job in a fair way

- : Process the next job’s allocation request

- : Compute resources to be allocated for the job in a fair way

- : Allocate DSQ resources, update ToRRs accordingly

- : Allocate resources

- : Compute resources to be allocated for the job in a fair way

- : Allocate resources

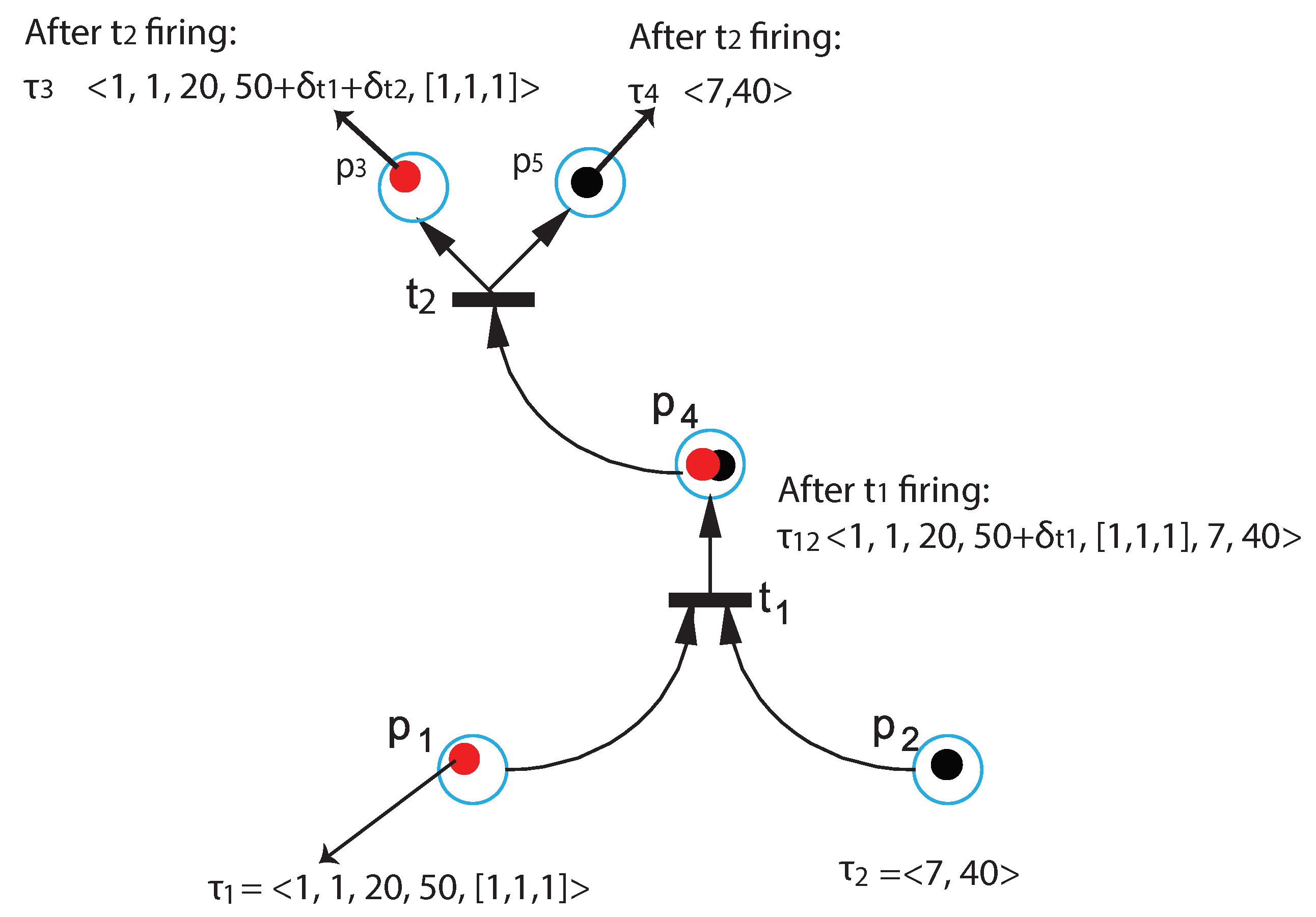

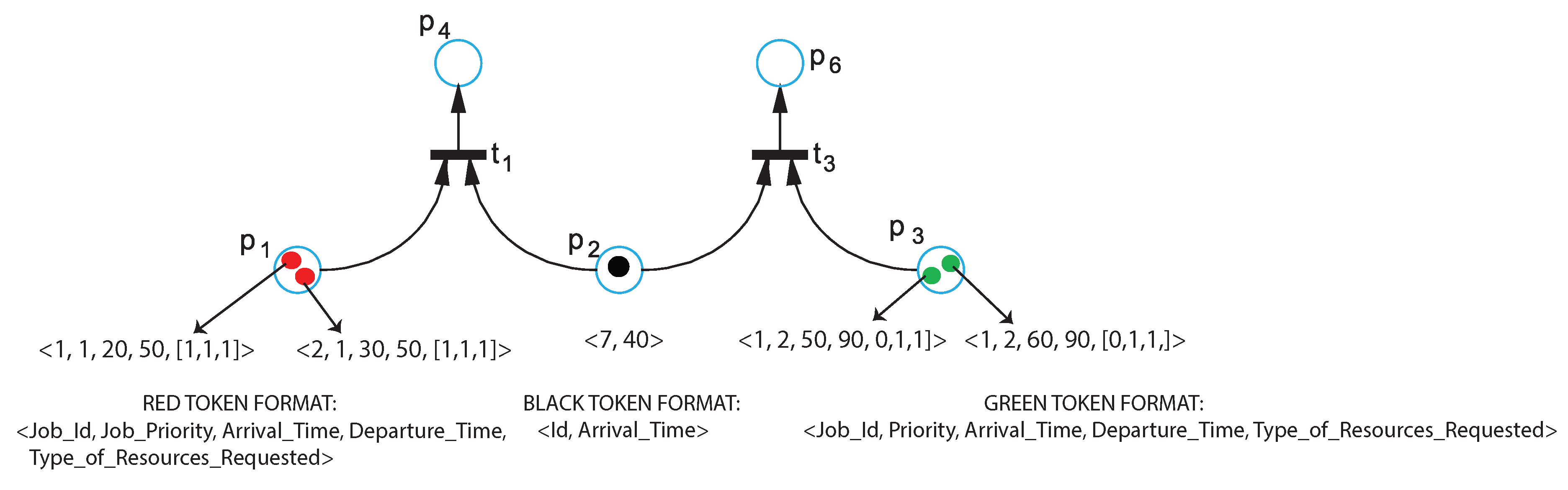

3.2.2. Token Structure

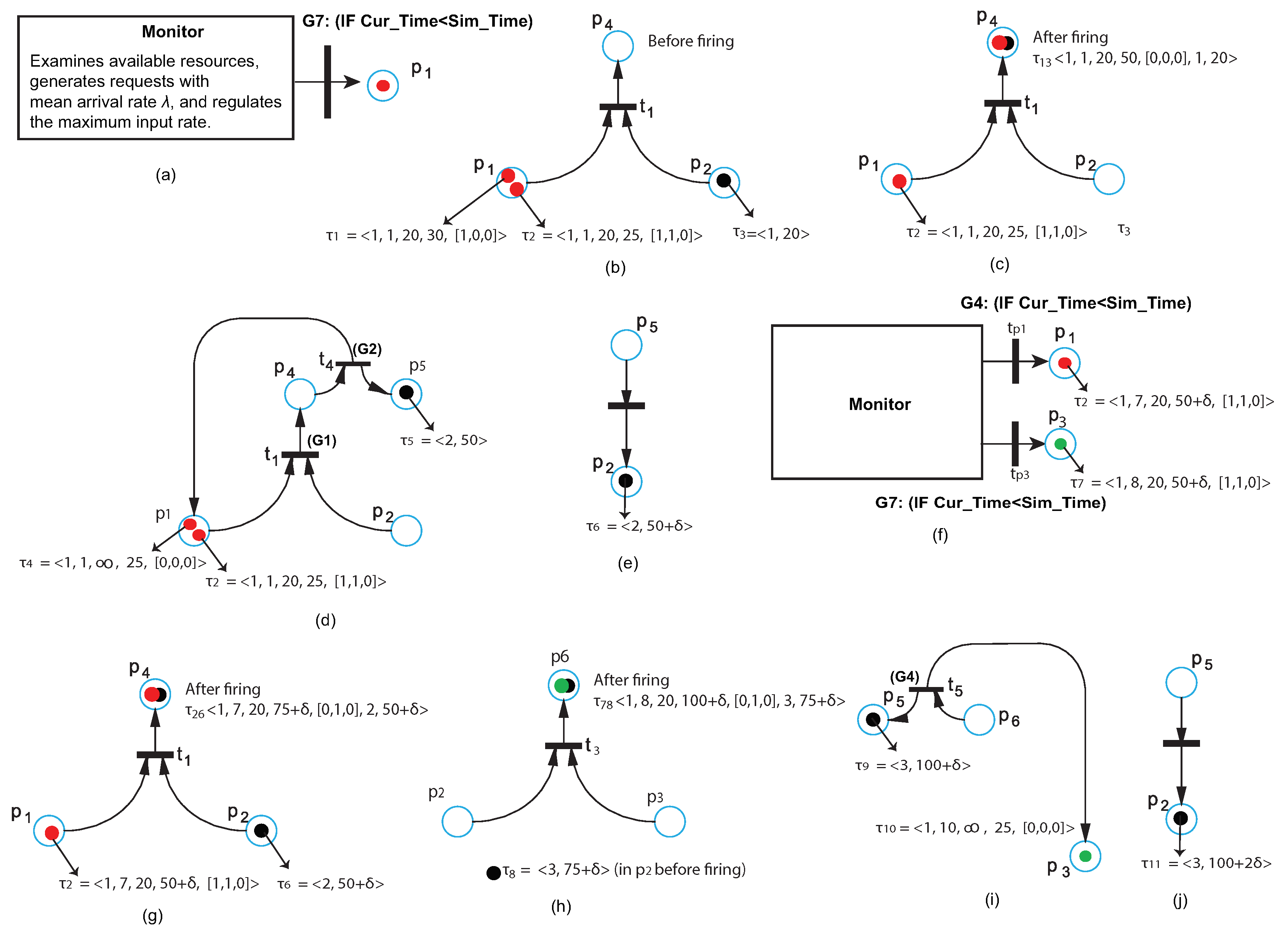

3.2.3. The Guard Expressions

3.2.4. Transition Firing Time

- Step 1: For the places that share a transition, use the Arrival_Time values as time stamps and compare the arrival times of all the tokens in each place. Then, obtain the token with the minimum time stamp in each place.

- Step 2: Find the maximum of the time stamps of the tokens found in Step 1. Symbolically, we denote these values as , where i is the transition number. This maximum gives the transition to fire next and the time of this firing.

- Step 3: If more than one transition is active at a time, we find their firing times using the two steps above and then choose the one with the minimum firing time.
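The three steps above can be sketched in a few lines. This is an illustrative reading of the rule, not the CPNRA simulator's code; the function names `firing_time` and `next_transition`, the transition labels `t1` and `t2`, and the sample arrival times are assumptions made for the example.

```python
def firing_time(input_places):
    """Firing time of one transition.

    input_places: one list of token Arrival_Time values per input place.
    Returns None when some input place is empty (transition not enabled).
    """
    if not input_places or any(len(tokens) == 0 for tokens in input_places):
        return None
    # Step 1: the token with the minimum time stamp in each place.
    earliest = [min(tokens) for tokens in input_places]
    # Step 2: the transition can fire only once all of these tokens have arrived.
    return max(earliest)


def next_transition(transitions):
    """Step 3: among the enabled transitions, pick the minimum firing time.

    transitions: dict mapping a transition name to its list of input places.
    Returns (name, firing_time), or None when nothing is enabled.
    """
    times = {name: firing_time(places) for name, places in transitions.items()}
    times = {name: t for name, t in times.items() if t is not None}
    if not times:
        return None
    name = min(times, key=times.get)
    return name, times[name]


transitions = {
    "t1": [[20, 35], [25]],   # per-place minima 20 and 25, fires at 25
    "t2": [[22], [30, 40]],   # per-place minima 22 and 30, fires at 30
}
print(next_transition(transitions))  # ('t1', 25)
```

Taking the maximum in Step 2 reflects that a transition needs a token from every input place, so it cannot fire before the latest of the earliest tokens is available.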

3.2.5. Deadlock Analysis

3.3. Complexity Analysis: Execution Timing of the CPN Model

4. Simulation Results and Discussion

4.1. CPNRA Simulator Execution

1. The monitor will place a red, a green, and a blue token to , and respectively, with initial ToRR = [1,1,1].

2. Transition will have priority over and until the DSQ request is fulfilled. Then, a token with infinite time stamp will be placed to .

3. Transition will have priority over until the request is fulfilled. Then, a token with infinite time stamp will be placed to .

4. Transition will have the lowest priority. When the request is fulfilled, a token with infinite time stamp will be placed to .

4.2. Experiments with CPNRA

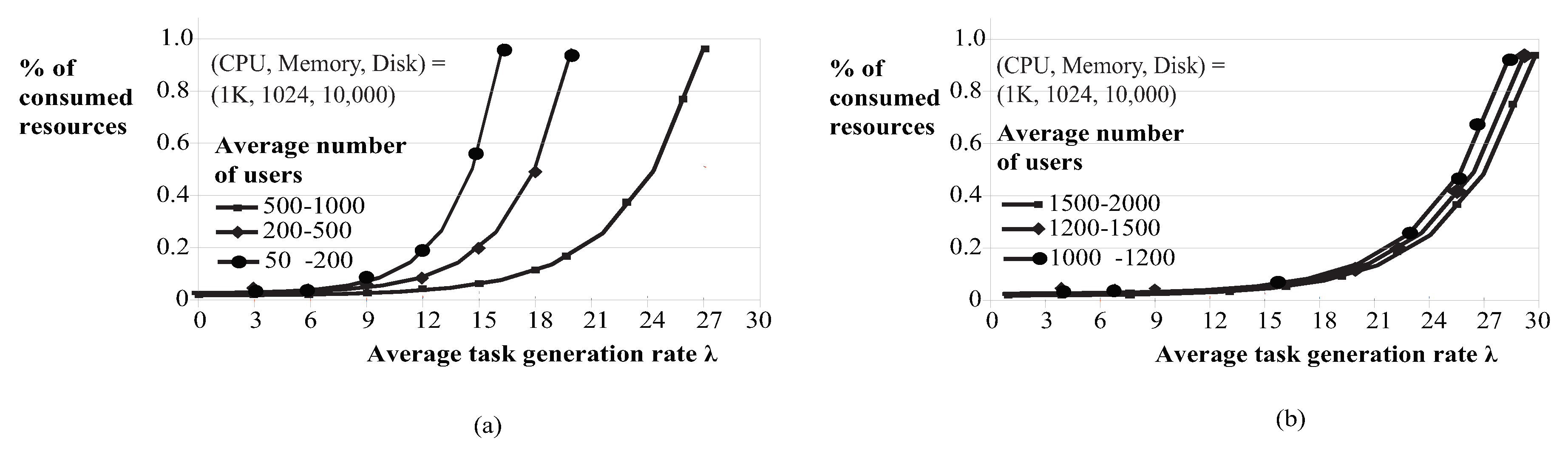

4.2.1. Job Input Rate Control

1. We generated a random number of users, from 50 to 2000, and a set of requests for each user. We ran two sets of simulations. In every experiment, we used different total amounts of each available resource (CPU, Memory, Disk), so that in some cases the available resources were enough to satisfy all user requests, while in other cases they were not. For example, in one experiment, the total amount of resources was (1 K, 1024, 10,000), that is, (1024 CPUs, 1 TB memory, 100 TB disk), while in the next experiment these amounts could be doubled or halved, and so forth.

2. We set the value of equal to 30 jobs per second; thus, the system’s input rate was at most jobs per second.

3. We traced the system’s state at regular time intervals h and recorded the percentage of resources consumed between consecutive time intervals; thus, we computed the values. Every time a job i leaves a queue, the system’s state changes. For example, if a job leaves the DSQ, it means that it has consumed F units of the dominant resource, changing the system’s state from to . On the other hand, when multiple jobs enter a queue, the acceptable job input may be regulated accordingly, based on the model presented in Section 2.
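The bookkeeping in the last step can be illustrated with a short sketch. It assumes that the consumed percentage is measured against the total capacity of one resource and that the state is sampled once per interval h; the function name `consumed_percentages` and the sample values are hypothetical and do not come from the experiments.

```python
def consumed_percentages(available, total):
    """Percentage of `total` consumed in each interval between consecutive samples.

    available: available units of one resource, sampled every h time units.
    total: total capacity of that resource (assumed reference for the percentage).
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return [
        100.0 * (prev - curr) / total
        for prev, curr in zip(available, available[1:])
    ]


# Hypothetical trace of available CPUs at times 0, h, 2h, 3h.
samples = [1024, 896, 768, 768]
print(consumed_percentages(samples, total=1024))  # [12.5, 12.5, 0.0]
```

A zero entry means that no job left a queue during that interval, so the state did not change.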

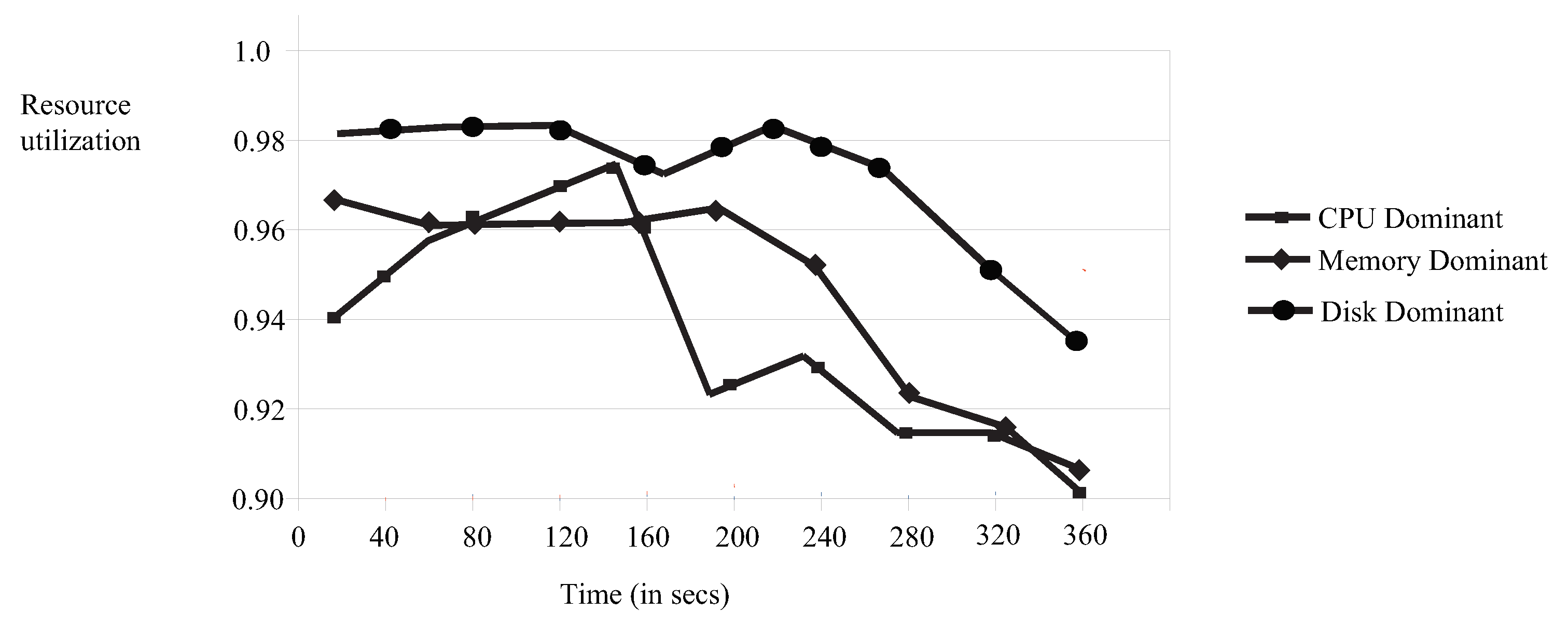

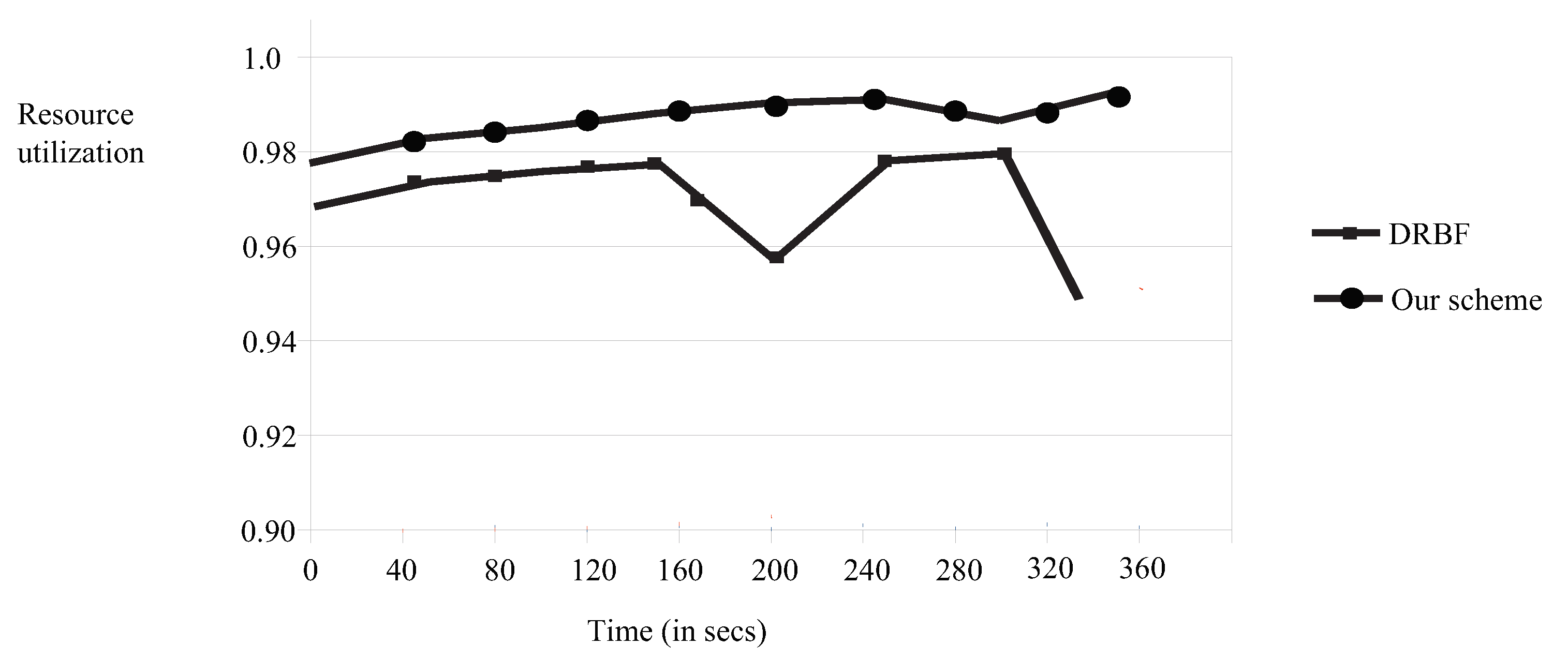

4.2.2. Resource Utilization

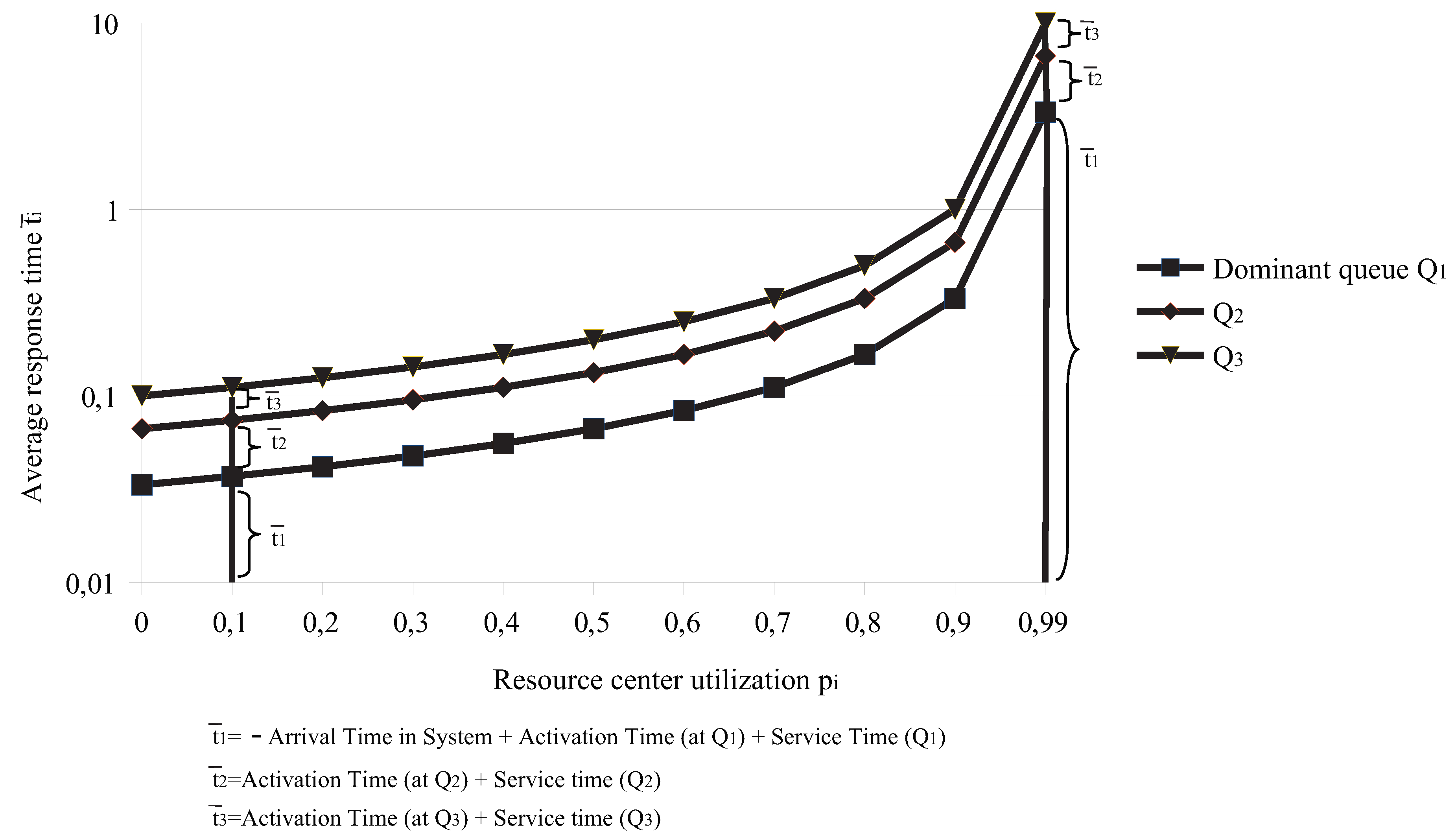

4.2.3. Average Response Time

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| PN | Petri Nets |
| CPN | Colored Petri Nets |
| CPNRA | Colored Petri Net-based Resource Allocation |
| ToRR | Type_of_Resources_Requested |

References

- Souravlas, S.; Katsavounis, S. Scheduling Fair Resource Allocation Policies for Cloud Computing through Flow Control. Electronics 2019, 8, 1348.

- Lu, Z.; Takashige, S.; Sugita, Y.; Morimura, T.; Kudo, Y. An analysis and comparison of cloud data center energy-efficient resource management technology. Int. J. Serv. Comput. 2014, 23, 32–51.

- Jennings, B.; Stadler, R. Resource management in clouds: Survey and research challenges. J. Netw. Syst. Manag. 2015, 2, 567–619.

- Tantalaki, N.; Souravlas, S.; Roumeliotis, M.; Katsavounis, S. Pipeline-Based Linear Scheduling of Big Data Streams in the Cloud. IEEE Access 2020, 8, 117182–117202.

- Tantalaki, N.; Souravlas, S.; Roumeliotis, M.; Katsavounis, S. Linear Scheduling of Big Data Streams on Multiprocessor Sets in the Cloud. In Proceedings of the 2019 IEEE/WIC/ACM International Conference on Web Intelligence (WI), Thessaloniki, Greece, 14–17 October 2019; pp. 107–115.

- Alam, A.B.; Zulkernine, M.; Haque, A. A reliability-based resource allocation approach for cloud computing. In Proceedings of the 2017 7th IEEE International Symposium on Cloud and Service Computing, Kanazawa, Japan, 22–25 November 2017; pp. 249–252.

- Kumar, N.; Saxena, S. A preference-based resource allocation in cloud computing systems. In Proceedings of the 3rd International Conference on Recent Trends in Computing 2015 (ICRTC-2015), Delhi, India, 12–13 March 2015; pp. 104–111.

- Lin, W.; Wang, J.Z.; Liang, C.; Qi, D. A threshold-based dynamic resource allocation scheme for cloud computing. Procedia Eng. 2011, 23, 695–703.

- Tran, T.T.; Padmanabhan, M.; Zhang, P.Y.; Li, H.; Down, D.G.; Beck, J.C. Multi-stage resource-aware scheduling for data centers with heterogeneous servers. J. Sched. 2015, 21, 251–267.

- Hu, Y.; Wong, J.; Iszlai, G.; Litoiu, M. Resource provisioning for cloud computing. In Proceedings of the 2009 Conference of the Center for Advanced Studies on Collaborative Research, Toronto, ON, Canada, 2–5 November 2009; pp. 101–111.

- Khanna, A. RAS: A novel approach for dynamic resource allocation. In Proceedings of the 1st International Conference on Next Generation Computing Technologies (NGCT-2015), Dehradun, India, 4–5 September 2015; pp. 25–29.

- Saraswathia, A.T.; Kalaashrib, Y.R.A.; Padmavathi, S. Dynamic resource allocation scheme in cloud computing. Procedia Comput. Sci. 2015, 47, 30–36.

- Xiao, Z.; Song, W.; Chen, Q. Dynamic resource allocation using virtual machines for cloud computing environment. IEEE Trans. Parallel Distrib. Syst. 2013, 24, 1107–1117.

- Mansouri, N.; Ghafari, R.; Zade, B.M.H. Cloud computing simulators: A comprehensive review. Simul. Model. Pract. Theory 2020.

- Calheiros, R.N.; Ranjan, R.; Beloglazov, A.; De Rose, C.A.; Buyya, R. CloudSim: A toolkit for modeling and simulation of cloud computing environments and evaluation of resource provisioning algorithms. Softw. Pract. Exp. 2011, 41, 23–50.

- Garg, S.K.; Buyya, R. Networkcloudsim: Modelling parallel applications in cloud simulations. In Proceedings of the 4th IEEE International Conference on Utility and Cloud Computing, Victoria, NSW, Australia, 5–8 December 2011; pp. 105–113.

- Wickremasinghe, B.; Calheiros, R.N.; Buyya, R. CloudAnalyst: A CloudSim-based visual modeller for analysing cloud computing environments and applications. In Proceedings of the 24th IEEE International Conference on Advanced Information Networking, Perth, WA, Australia, 20–23 April 2010; pp. 446–452.

- Calheiros, R.N.; Netto, M.A.; De Rose, C.A.; Buyya, R. EMUSIM: An integrated emulation and simulation environment for modeling, evaluation, and validation of performance of cloud computing applications. Softw. Pract. Exp. 2013, 43, 595–612.

- Fittkau, F.; Frey, S.; Hasselbring, W. CDOSim: Simulating cloud deployment options for software migration support. In Proceedings of the IEEE 6th International Workshop on the Maintenance and Evolution of Service-Oriented and Cloud-Based Systems, Trento, Italy, 24 September 2012; pp. 37–46.

- Li, X.; Jiang, X.; Huang, P.; Ye, K. DartCSim: An enhanced user-friendly cloud simulation system based on CloudSim with better performance. In Proceedings of the IEEE 2nd International Conference on Cloud Computing and Intelligence Systems, Hangzhou, China, 30 October–1 November 2012; pp. 392–396.

- Sqalli, M.H.; Al-Saeedi, M.; Binbeshr, F.; Siddiqui, M. UCloud: A simulated Hybrid Cloud for a university environment. In Proceedings of the IEEE 1st International Conference on Cloud Networking, Paris, France, 28–30 November 2012; pp. 170–172.

- Kecskemeti, G. DISSECT-CF: A simulator to foster energy-aware scheduling in infrastructure clouds. Simul. Model. Pract. Theory 2015, 58, 188–218.

- Tian, W.; Zhao, Y.; Xu, M.; Zhong, Y.; Sun, X. A toolkit for modeling and simulation of real-time virtual machine allocation in a cloud data center. IEEE Trans. Autom. Sci. Eng. 2015, 12, 153–161.

- Fernández-Cerero, D.; Fernández-Montes, A.; Jakobik, A.; Kołodziej, J.; Toro, M. FSCORE: Simulator for cloud optimization of resources and energy consumption. Simul. Model. Pract. Theory 2018, 82, 160–173.

- Gupta, S.K.; Gilbert, R.R.; Banerjee, A.; Abbasi, Z.; Mukherjee, T.; Varsamopoulos, G. GDCSim: A tool for analyzing green data center design and resource management techniques. In Proceedings of the International Green Computing Conference and Workshops, Orlando, FL, USA, 25–28 July 2011; pp. 1–8.

- Kristensen, L.M.; Jørgensen, J.B.; Jensen, K. Application of Coloured Petri Nets in System Development. In Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003; pp. 626–685.

- Peterson, J.L. Petri nets. ACM Comput. Surv. 1977, 9, 223–252.

- Souravlas, S.I.; Roumeliotis, M. Petri Net Based Modeling and Simulation of Pipelined Block-Cyclic Broadcasts. In Proceedings of the 15th IASTED International Conference on Applied Simulation and Modelling-ASM, Rhodos, Greece, 26–28 June 2006; pp. 157–162.

- Shojafar, M.; Pooranian, Z.; Abawajy, J.H.; Meybodi, M.R. An Efficient Scheduling Method for Grid Systems Based on a Hierarchical Stochastic Petri Net. J. Comput. Sci. Eng. 2013, 7, 44–52.

- Shojafar, M.; Pooranian, Z.; Meybodi, M.R.; Singhal, M. ALATO: An Efficient Intelligent Algorithm for Time Optimization in an Economic Grid Based on Adaptive Stochastic Petri Net. J. Intell. Manuf. 2013, 26, 641–658.

- Barzegar, S.; Davoudpour, M.; Meybodi, M.R.; Sadeghian, A.; Tirandazian, M. Traffic Signal Control with Adaptive Fuzzy Coloured Petri Net Based on Learning Automata. In Proceedings of the 2010 Annual Meeting of the North American Fuzzy Information Processing Society, Toronto, ON, Canada, 12–14 July 2010; pp. 1–8.

- Ghodsi, A.; Zaharia, M.; Hindman, B.; Konwinski, A.; Shenker, S.; Stoica, I. Dominant resource fairness: Fair allocation of multiple resource types. NSDI 2011, 11, 323–336.

- Zhao, L.; Du, M.; Chen, L. A new multi-resource allocation mechanism: A tradeoff between fairness and efficiency in cloud computing. China Commun. 2018, 24, 57–77.

- Souravlas, S. ProMo: A Probabilistic Model for Dynamic Load-Balanced Scheduling of Data Flows in Cloud Systems. Electronics 2019, 8, 990.

- Souravlas, S.I.; Roumeliotis, M. Petri net modeling and simulation of pipelined redistributions for a deadlock-free system. Cogent Eng. 2015, 2, 1057427.

- Little, J.D.C. A Proof for the Queuing Formula L = λW. Oper. Res. 1961, 9, 383–387.

- Souravlas, S.; Roumeliotis, M. A pipeline technique for dynamic data transfer on a multiprocessor grid. Int. J. Parallel Programm. 2004, 32, 361–388.

- Souravlas, S.; Sifaleras, A.; Katsavounis, S. Hybrid CPU-GPU Community Detection in Weighted Networks. IEEE Access 2020, 8, 57527–57551.

| Paper Reference | Cost | Execution Time | Utilization |
|---|---|---|---|
| [6] | Yes | No | No |
| [7] | Yes | No | Yes |
| [8] | Yes | Yes | No |
| [10] | Yes | No | No |
| [11] | No | No | Yes |
| [9] | Yes | Yes | No |
| [12] | No | Yes | Yes |
| [13] | No | Yes | Yes |
| Our work | No | No | Yes |

| Job_Id | Priority | Arrival_Time | Service_Time | Type_of_Resources_Requested |
|---|---|---|---|---|
| 1 | 1 | 20 | 30 | [1,0,0] |
| 2 | 1 | 20 | 25 | [1,1,0] |
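The token fields in the table above map naturally onto a small record type. The sketch below is only an illustration: the class name `Token`, the method `requested`, and in particular the mapping of the three ToRR positions to (CPU, Memory, Disk) are assumptions, since the table itself does not name the positions.

```python
from dataclasses import dataclass

# Assumed meaning of the three ToRR positions; the table does not state it.
RESOURCES = ("CPU", "Memory", "Disk")


@dataclass(frozen=True)
class Token:
    """One colored token, with the fields shown in the token table."""
    job_id: int
    priority: int
    arrival_time: int
    service_time: int
    torr: tuple  # Type_of_Resources_Requested: one 0/1 flag per resource

    def requested(self):
        """Names of the resources whose ToRR flag is set."""
        return [name for name, flag in zip(RESOURCES, self.torr) if flag]


# The two example rows from the table.
tokens = [
    Token(job_id=1, priority=1, arrival_time=20, service_time=30, torr=(1, 0, 0)),
    Token(job_id=2, priority=1, arrival_time=20, service_time=25, torr=(1, 1, 0)),
]
for token in tokens:
    print(token.job_id, token.requested())
```

Making the record immutable (`frozen=True`) mirrors the idea that a transition consumes a token and produces a new one with an updated ToRR, rather than modifying a token in place.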

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Souravlas, S.; Katsavounis, S.; Anastasiadou, S. On Modeling and Simulation of Resource Allocation Policies in Cloud Computing Using Colored Petri Nets. Appl. Sci. 2020, 10, 5644. https://doi.org/10.3390/app10165644
