Bearing Fault Classification of Induction Motors Using Discrete Wavelet Transform and Ensemble Machine Learning Algorithms

Abstract

1. Introduction

- Evaluate the performance of various wavelets for motor current signal analysis.

- Observe the effect of denoising the current signal on classification accuracy.

- Evaluate the performance of two ensemble classifiers for bearing fault identification of an IM.

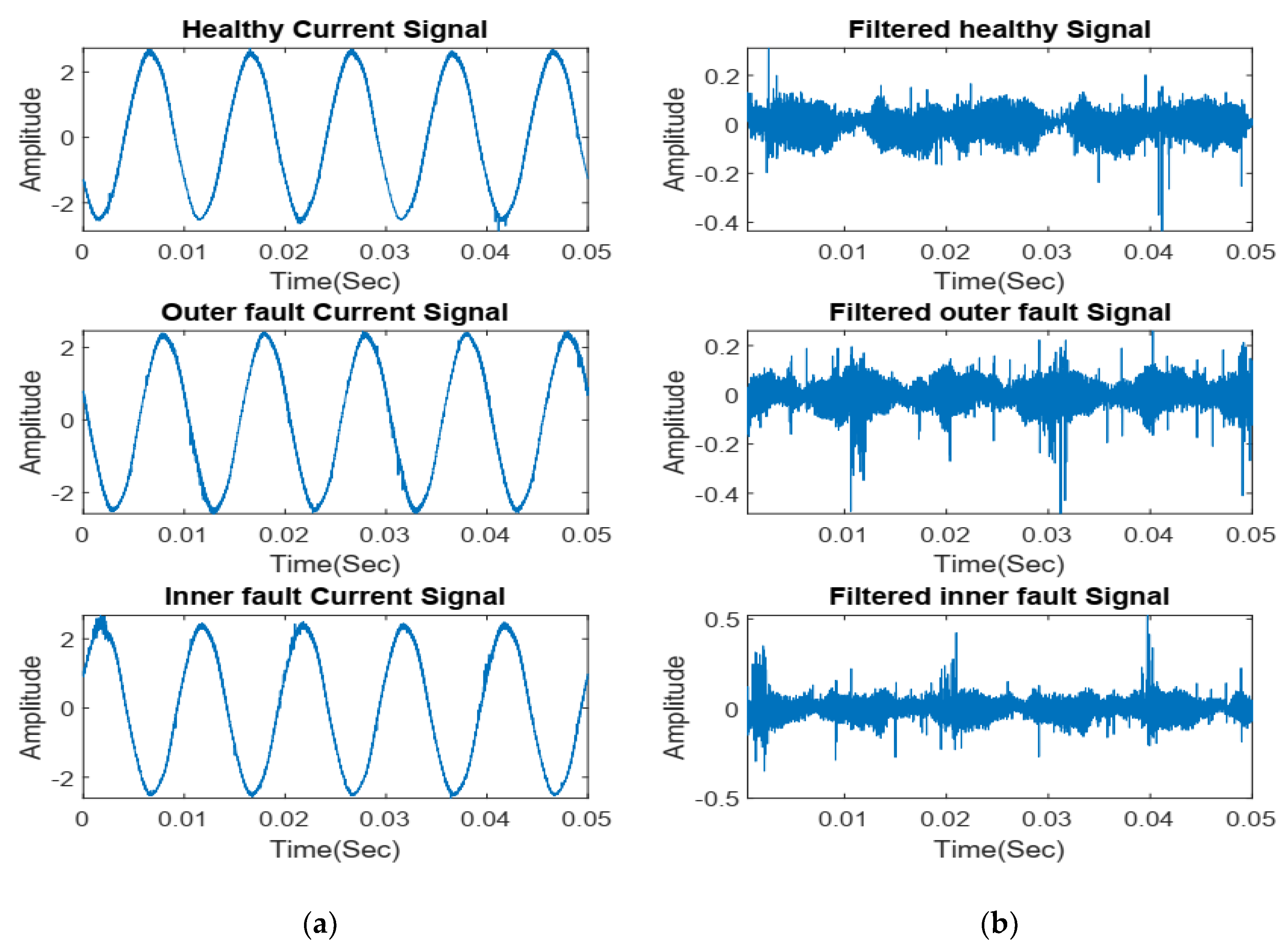

2. Experimental Test Rig and Data Description

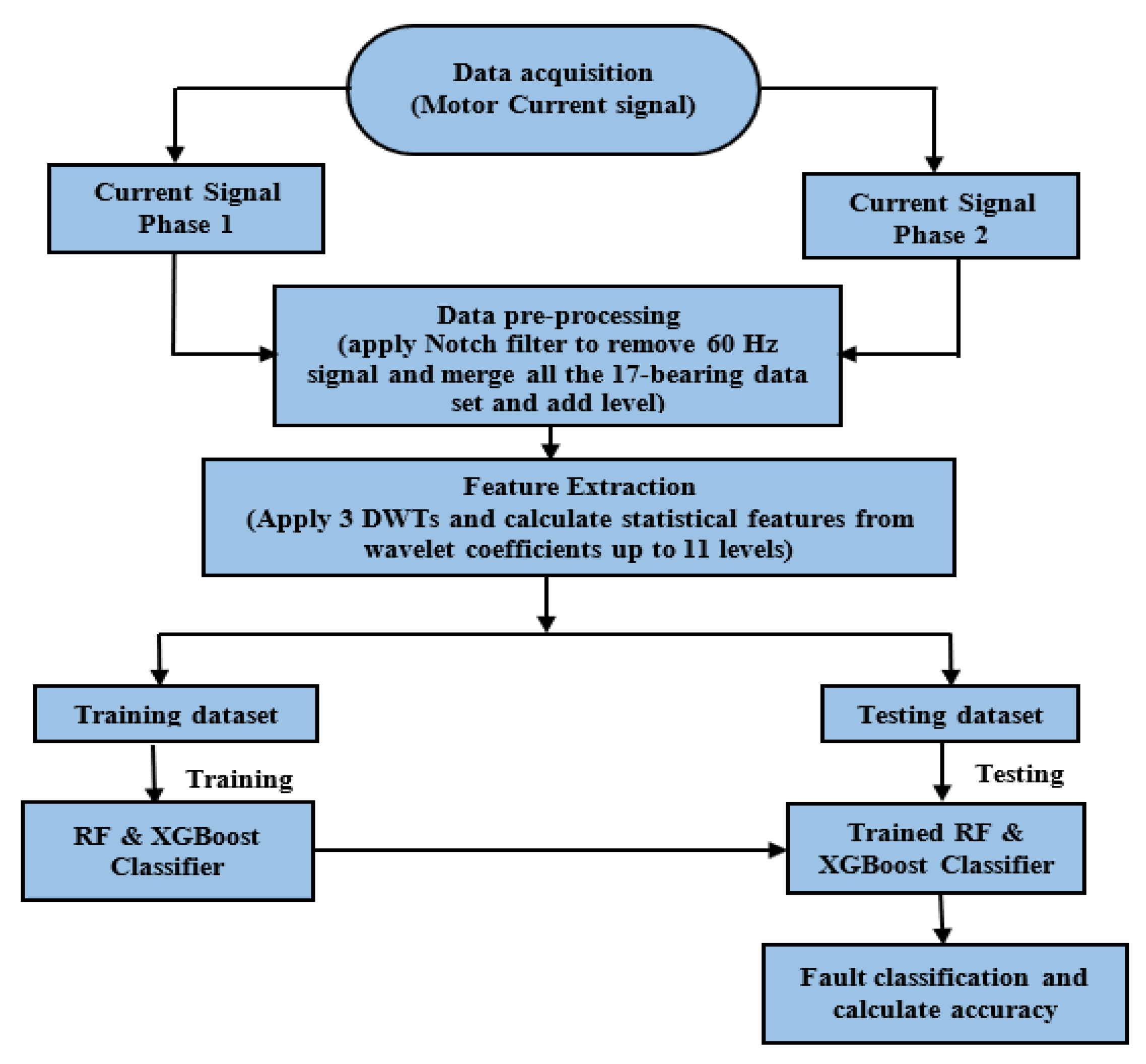

3. Methodology

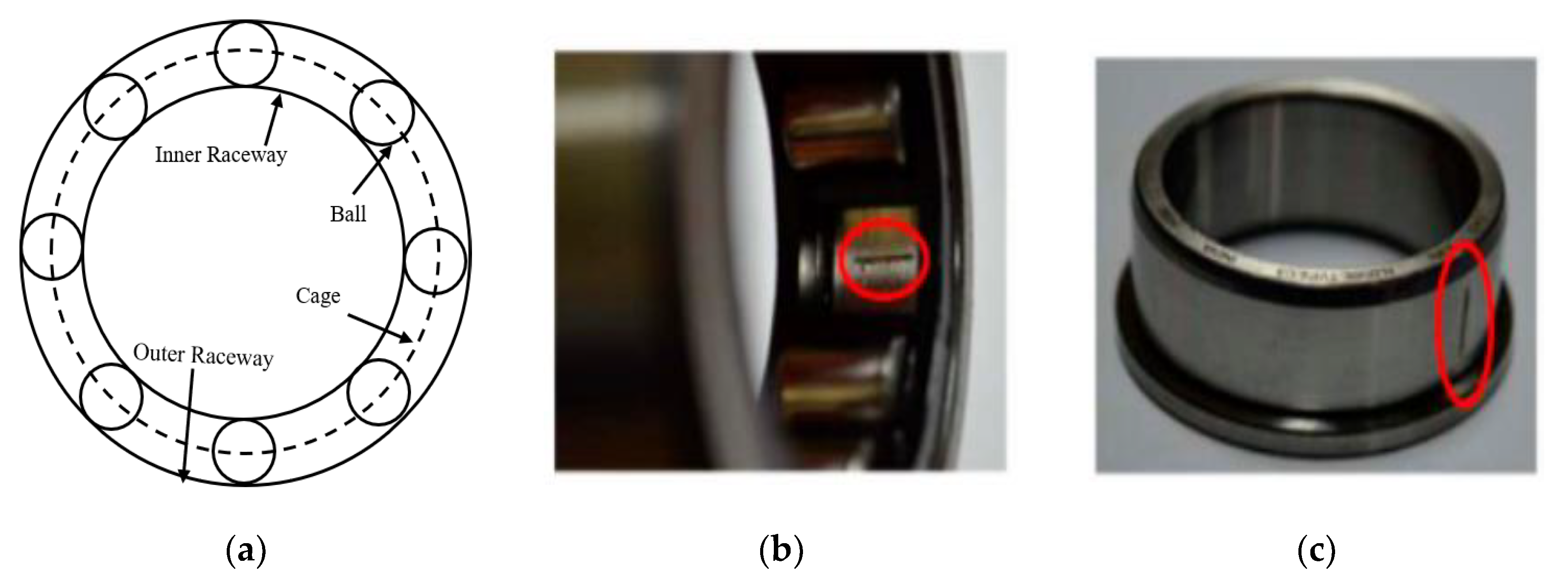

3.1. Bearing Fault Signatures

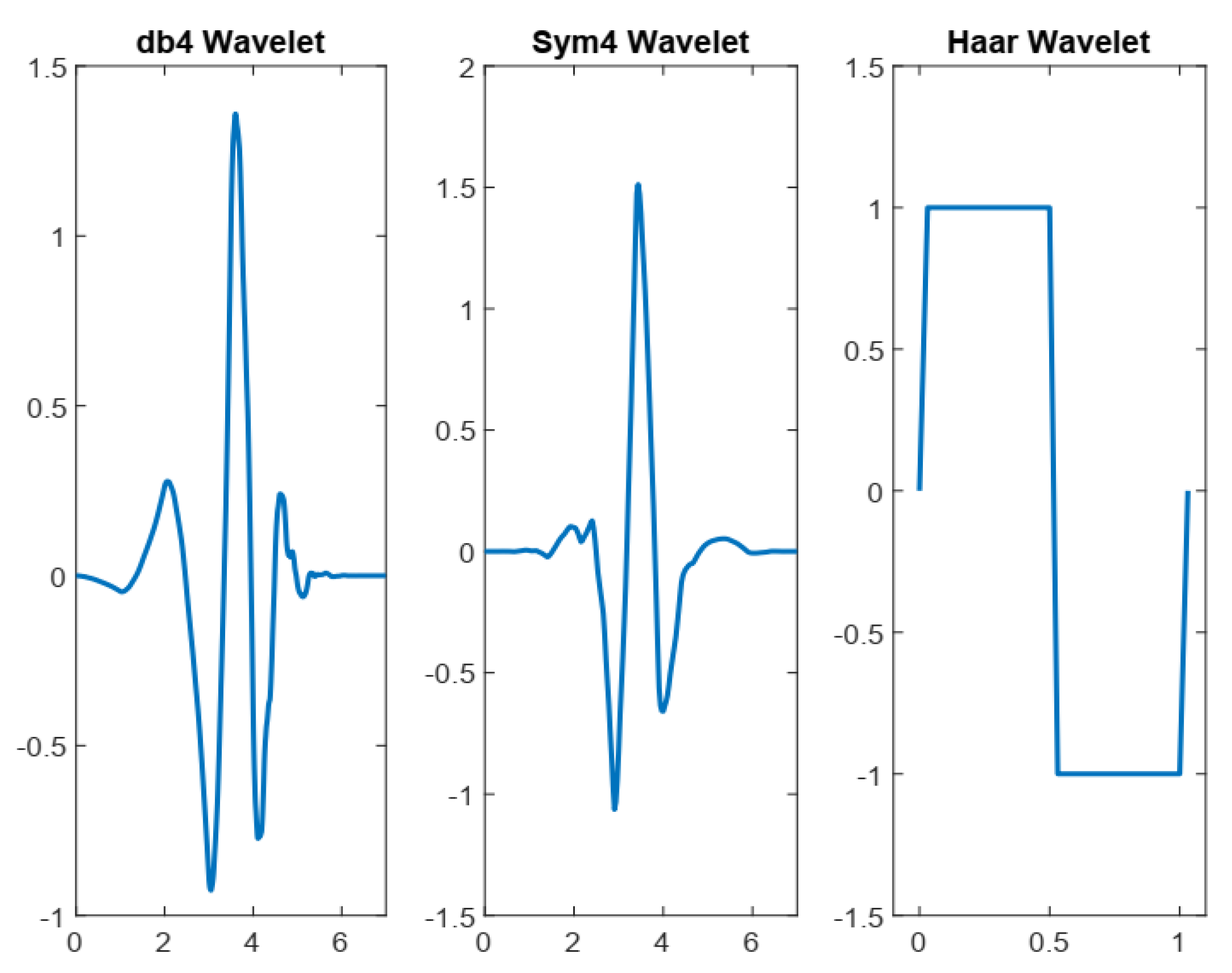

3.2. Wavelet Transform

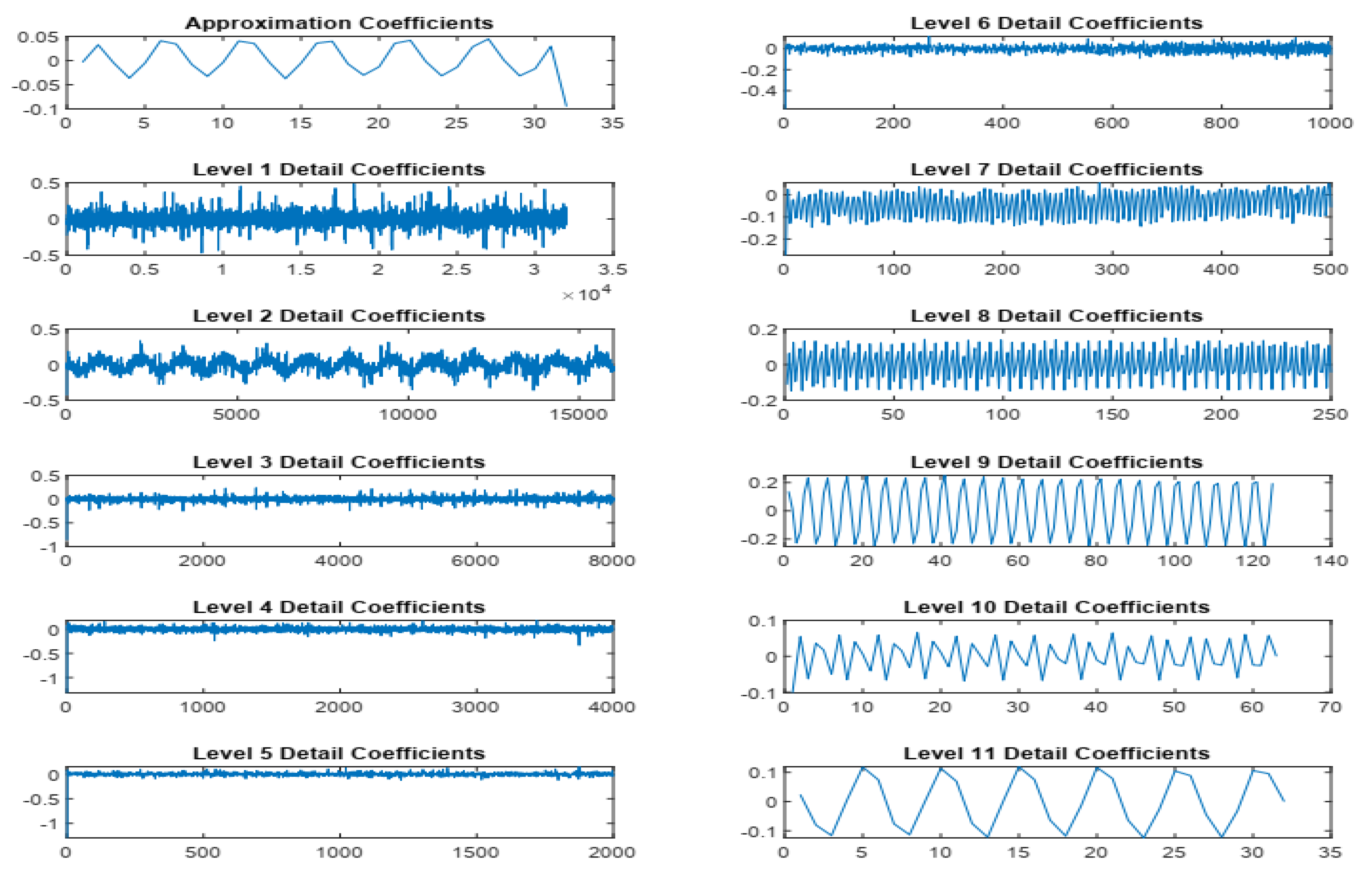

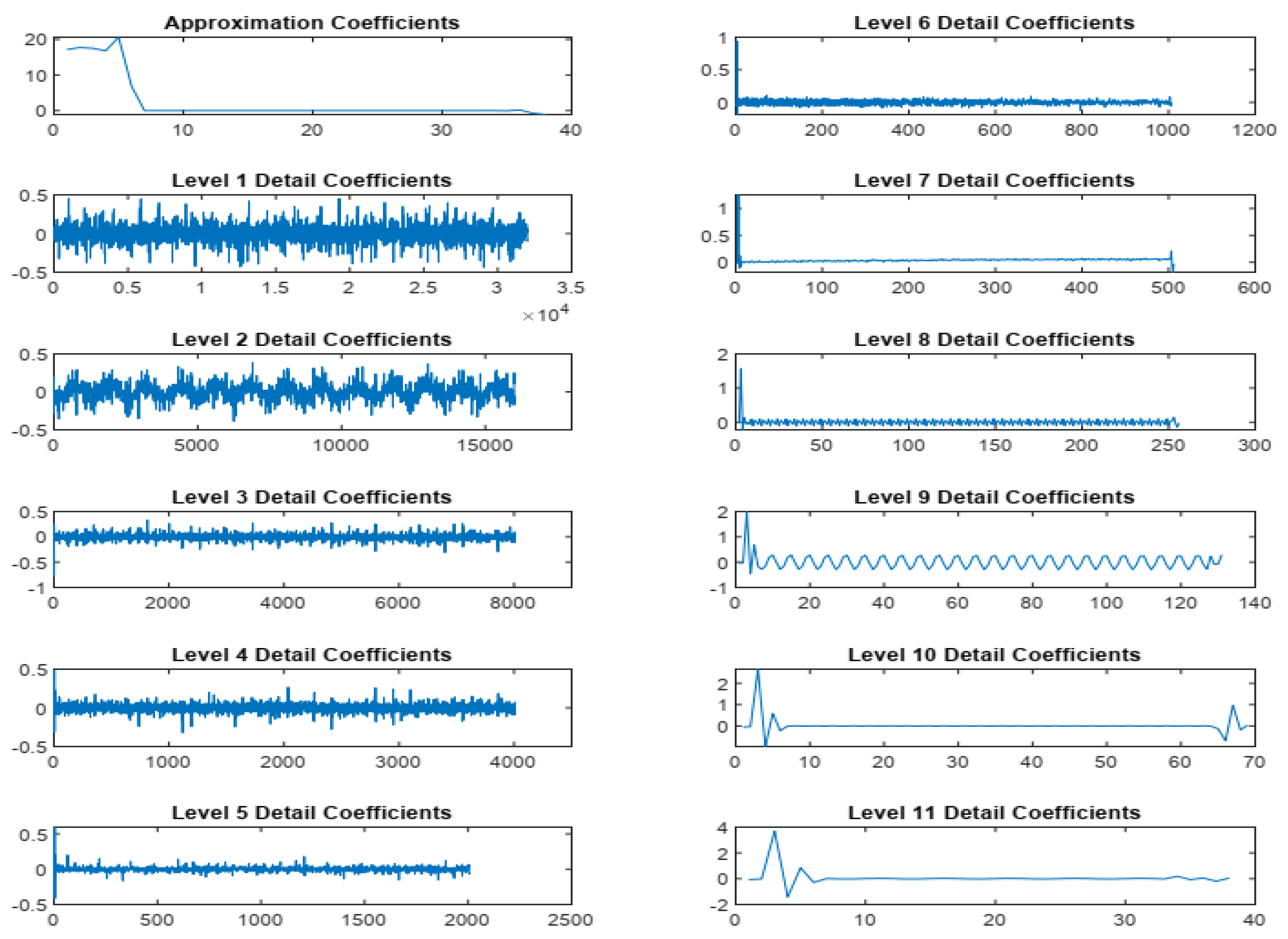

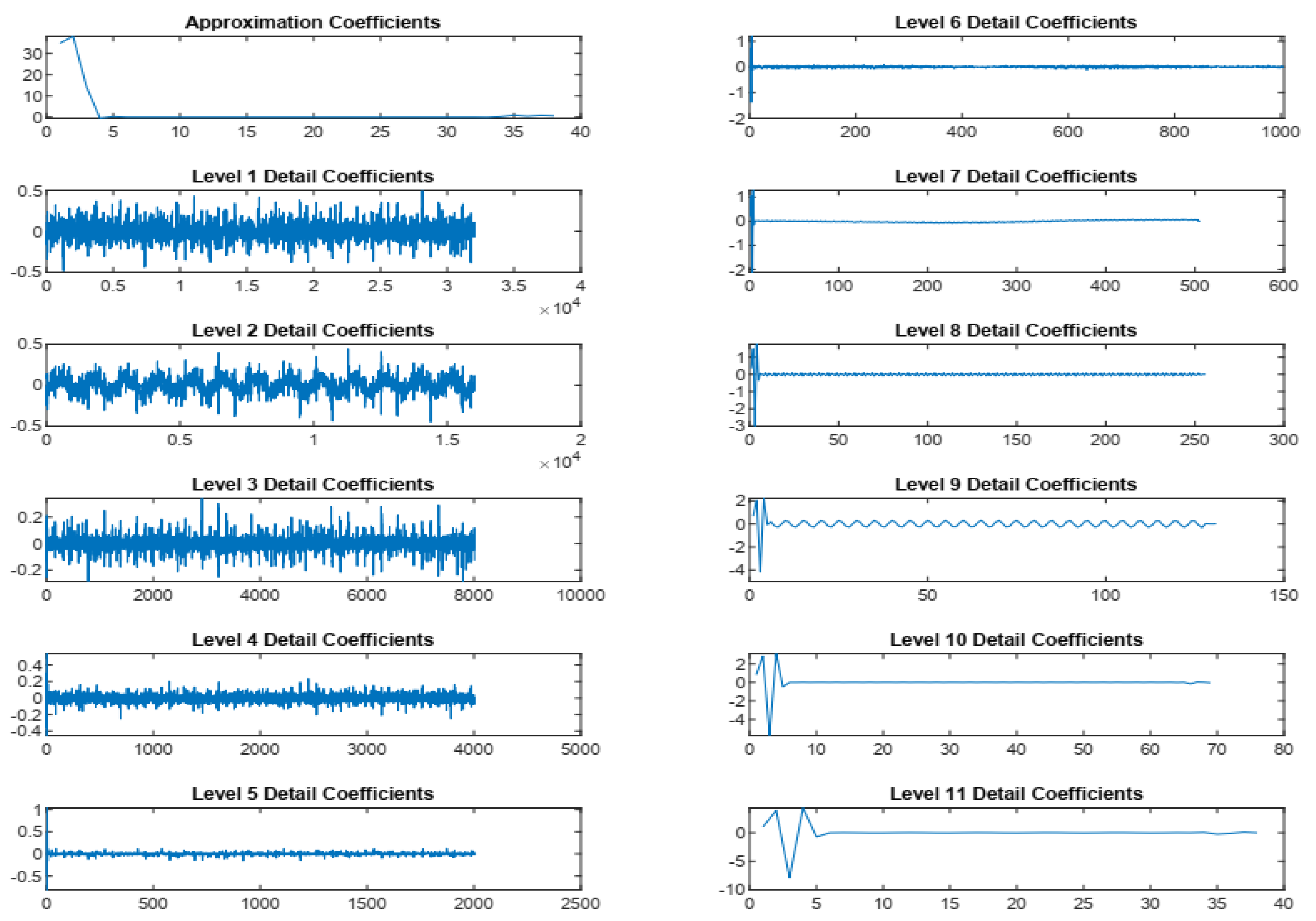
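The multilevel decomposition behind the discrete wavelet transform can be illustrated with the Haar wavelet, one of the three wavelets compared in the results. The following is a minimal sketch rather than the authors' implementation: the function names `haar_step` and `haar_wavedec` are hypothetical, the signal length is assumed to be divisible by 2^level, and no boundary extension is applied.

```python
import math


def haar_step(x):
    """One Haar analysis step: split x into approximation and detail halves."""
    s = 1.0 / math.sqrt(2.0)
    # Pairwise scaled sums give the low-pass (approximation) coefficients,
    # pairwise scaled differences give the high-pass (detail) coefficients.
    approx = [(x[i] + x[i + 1]) * s for i in range(0, len(x) - 1, 2)]
    detail = [(x[i] - x[i + 1]) * s for i in range(0, len(x) - 1, 2)]
    return approx, detail


def haar_wavedec(x, level):
    """Multilevel Haar DWT, returned as [cA_level, cD_level, ..., cD_1]."""
    details = []
    approx = list(x)
    for _ in range(level):
        # Only the approximation branch is decomposed again at each level.
        approx, detail = haar_step(approx)
        details.append(detail)
    return [approx] + details[::-1]


signal = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]
coeffs = haar_wavedec(signal, level=2)
```

Because the Haar transform is orthonormal, the summed squared coefficients equal the energy of the input signal, which is a convenient sanity check before computing sub-band features.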

3.3. Feature Extraction

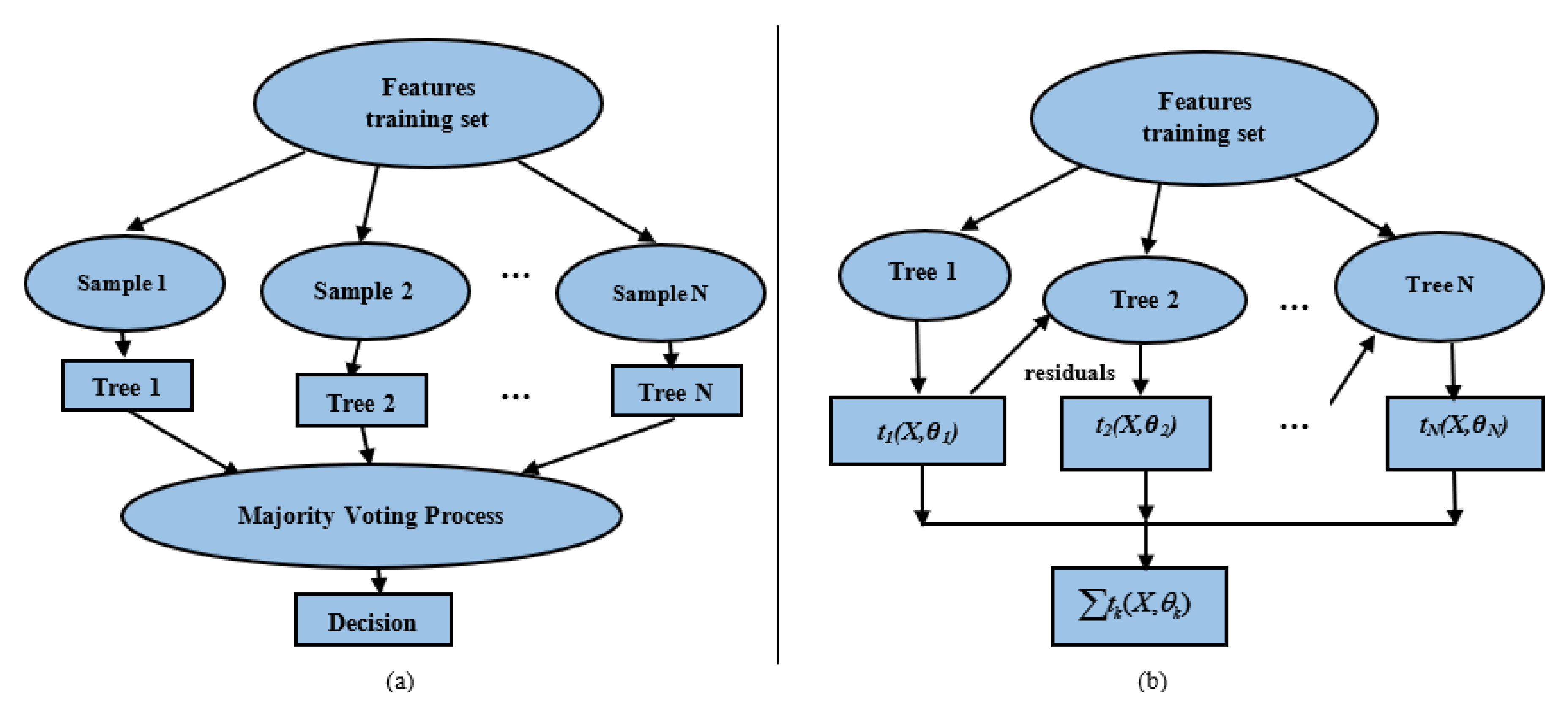

3.4. Ensemble Learning

3.4.1. Random Forest

3.4.2. Extreme Gradient Boosting (XGBoost)

3.5. Model Evaluation

3.6. Hyperparameter Selection

4. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| No. | Rotational Speed (S) [rpm] | Radial Force (F) [N] | Load Torque (M) [Nm] |
|---|---|---|---|
| 1 | 1500 | 1000 | 0.7 |
| 2 | 1500 | 1000 | 0.1 |
| 3 | 900 | 1000 | 0.7 |
| 4 | 1500 | 400 | 0.7 |

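| Type of Bearing | Damage Location | Bearing Code | Damage (Main Mode & Symptoms) | Label |
|---|---|---|---|---|
| Healthy Bearing Data | - | K001 | - | 0 |
| Healthy Bearing Data | - | K002 | - | 0 |
| Healthy Bearing Data | - | K003 | - | 0 |
| Healthy Bearing Data | - | K004 | - | 0 |
| Healthy Bearing Data | - | K005 | - | 0 |
| Healthy Bearing Data | - | K006 | - | 0 |
| Naturally Damaged Bearing Data | Outer Ring | KA04 | Fatigue: pitting | 1 |
| Naturally Damaged Bearing Data | Outer Ring | KA15 | Plastic deformity: Indentations | 1 |
| Naturally Damaged Bearing Data | Outer Ring | KA16 | Fatigue: pitting | 1 |
| Naturally Damaged Bearing Data | Outer Ring | KA22 | Fatigue: pitting | 1 |
| Naturally Damaged Bearing Data | Outer Ring | KA30 | Plastic deformity: Indentations | 1 |
| Naturally Damaged Bearing Data | Inner Ring | KI04 | Fatigue: pitting | 2 |
| Naturally Damaged Bearing Data | Inner Ring | KI14 | Fatigue: pitting | 2 |
| Naturally Damaged Bearing Data | Inner Ring | KI16 | Fatigue: pitting | 2 |
| Naturally Damaged Bearing Data | Inner Ring | KI17 | Fatigue: pitting | 2 |
| Naturally Damaged Bearing Data | Inner Ring | KI18 | Fatigue: pitting | 2 |
| Naturally Damaged Bearing Data | Inner Ring | KI21 | Fatigue: pitting | 2 |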
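The class labels in the table above follow directly from the bearing code prefix. As a small sketch (the helper name `bearing_label` and the prefix dictionary are illustrative assumptions, not part of the original work), the mapping could be written as:

```python
# Prefix-to-label mapping read off the bearing table:
# K0xx = healthy (0), KAxx = outer ring damage (1), KIxx = inner ring damage (2).
PREFIX_TO_LABEL = {"K0": 0, "KA": 1, "KI": 2}


def bearing_label(code: str) -> int:
    """Return the class label for a bearing code such as 'KA04'."""
    prefix = code.strip().upper()[:2]
    if prefix not in PREFIX_TO_LABEL:
        raise ValueError(f"unknown bearing code: {code!r}")
    return PREFIX_TO_LABEL[prefix]


codes = ["K001", "KA15", "KI21"]
labels = [bearing_label(c) for c in codes]
```

Raising on an unknown prefix, instead of returning a default, keeps mislabeled recordings from silently entering the training set.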

| Techniques for Extracted Feature | Feature Number (k) | Equations of Detailed & Approximation Coefficients |
|---|---|---|
| 1. Mean | k = 1, …, 12 | (10) |
| 2. Standard deviation | k = 13, …, 24 | (11) |
| 3. Skewness | k = 25, …, 36 | (12) |
| 4. Kurtosis | k = 37, …, 48 | (13) |
| 5. RMS | k = 49, …, 60 | (14) |
| 6. Form factor | k = 61, …, 72 | (15) |
| 7. Crest factor | k = 73, …, 84 | (16) |
| 8. Energy | k = 85, …, 96 | (17) |
| 9. Shannon entropy | k = 97, …, 108 | (18) |
| 10. Log-energy entropy | k = 109, …, 120 | (19) |
| 11. Interquartile range | k = 121, …, 132 | (20) |
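The feature table above implies 11 statistics computed over 12 coefficient vectors, giving 132 features ordered statistic by statistic. The sketch below shows one way to assemble such a vector. It is an illustration under assumptions: Equations (10) to (20) are not reproduced in this text, so common textbook definitions are used (population standard deviation, non-normalized Shannon entropy and log-energy entropy of the squared coefficients), and the names `band_features` and `feature_vector` are hypothetical.

```python
import math
import statistics


def band_features(c):
    """Return the 11 statistics, in table order, for one coefficient vector."""
    n = len(c)
    mean = sum(c) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in c) / n)  # population std
    rms = math.sqrt(sum(x * x for x in c) / n)
    abs_mean = sum(abs(x) for x in c) / n
    squared = [x * x for x in c]
    q1, _, q3 = statistics.quantiles(c, n=4, method="inclusive")
    return [
        mean,
        std,
        sum((x - mean) ** 3 for x in c) / (n * std ** 3) if std else 0.0,  # skewness
        sum((x - mean) ** 4 for x in c) / (n * std ** 4) if std else 0.0,  # kurtosis
        rms,
        rms / abs_mean if abs_mean else 0.0,          # form factor
        max(abs(x) for x in c) / rms if rms else 0.0,  # crest factor
        sum(squared),                                  # energy
        -sum(s * math.log(s) for s in squared if s > 0),  # Shannon entropy
        sum(math.log(s) for s in squared if s > 0),       # log-energy entropy
        q3 - q1,                                       # interquartile range
    ]


def feature_vector(bands):
    """Flatten per-band statistics so each statistic occupies a contiguous block."""
    per_band = [band_features(b) for b in bands]
    n_stats = len(per_band[0])
    return [per_band[j][i] for i in range(n_stats) for j in range(len(bands))]


# Twelve placeholder coefficient vectors stand in for real DWT sub-bands.
bands = [[1.0, 2.0, 3.0, 4.0] for _ in range(12)]
fv = feature_vector(bands)
```

With 12 bands, indices 0 to 11 hold the means, 12 to 23 the standard deviations, and so on, which matches the k ranges listed in the table.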

| Random Forest Parameter | Value | Extreme Gradient Boosting (XGBoost) Parameter | Value |
|---|---|---|---|
| max_depth | 90 | max_depth | 20 |
| max_features | 3 | learning_rate | 0.1 |
| min_samples_leaf | 3 | n_estimators | 500 |
| min_samples_split | 8 | min_child_weight | 1 |
| n_estimators | 200 | gamma | 1 |
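The hyperparameters in the table above can be captured as plain configuration dictionaries. This is a sketch under assumptions: the text does not state which libraries were used, so the keyword names follow the scikit-learn `RandomForestClassifier` and the XGBoost scikit-learn wrapper `XGBClassifier` (`learning_rate`, `n_estimators`), and the helper `build_models` is hypothetical.

```python
# Values copied from the hyperparameter table.
RF_PARAMS = {
    "max_depth": 90,
    "max_features": 3,
    "min_samples_leaf": 3,
    "min_samples_split": 8,
    "n_estimators": 200,
}

XGB_PARAMS = {
    "max_depth": 20,
    "learning_rate": 0.1,
    "n_estimators": 500,
    "min_child_weight": 1,
    "gamma": 1,
}


def build_models():
    """Instantiate both classifiers; requires scikit-learn and xgboost."""
    # Imported lazily so the configuration can be inspected without the libraries.
    from sklearn.ensemble import RandomForestClassifier
    from xgboost import XGBClassifier

    return RandomForestClassifier(**RF_PARAMS), XGBClassifier(**XGB_PARAMS)
```

Keeping the values in dictionaries makes it straightforward to pass the same settings to a grid search or to log them alongside the results.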

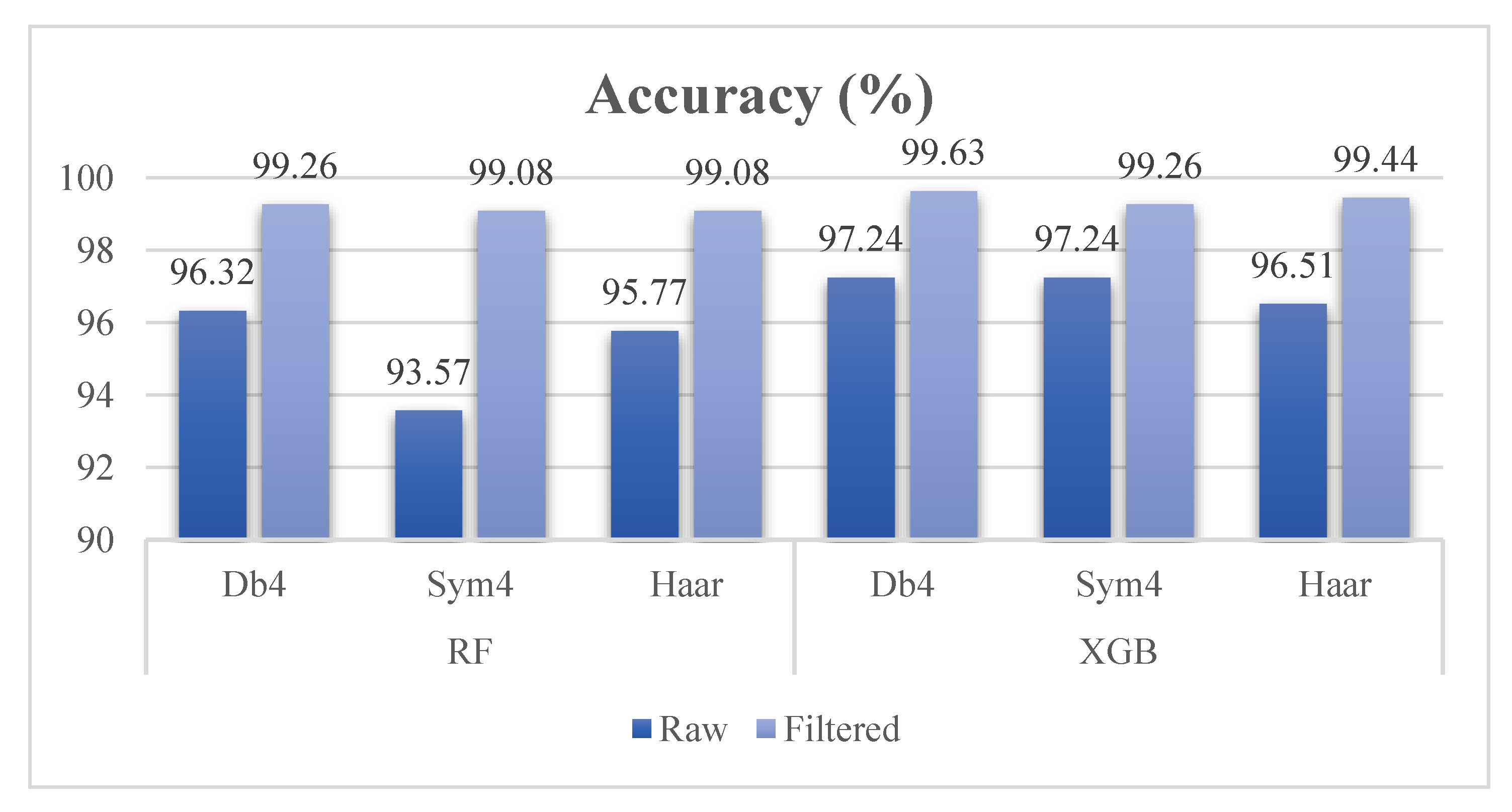

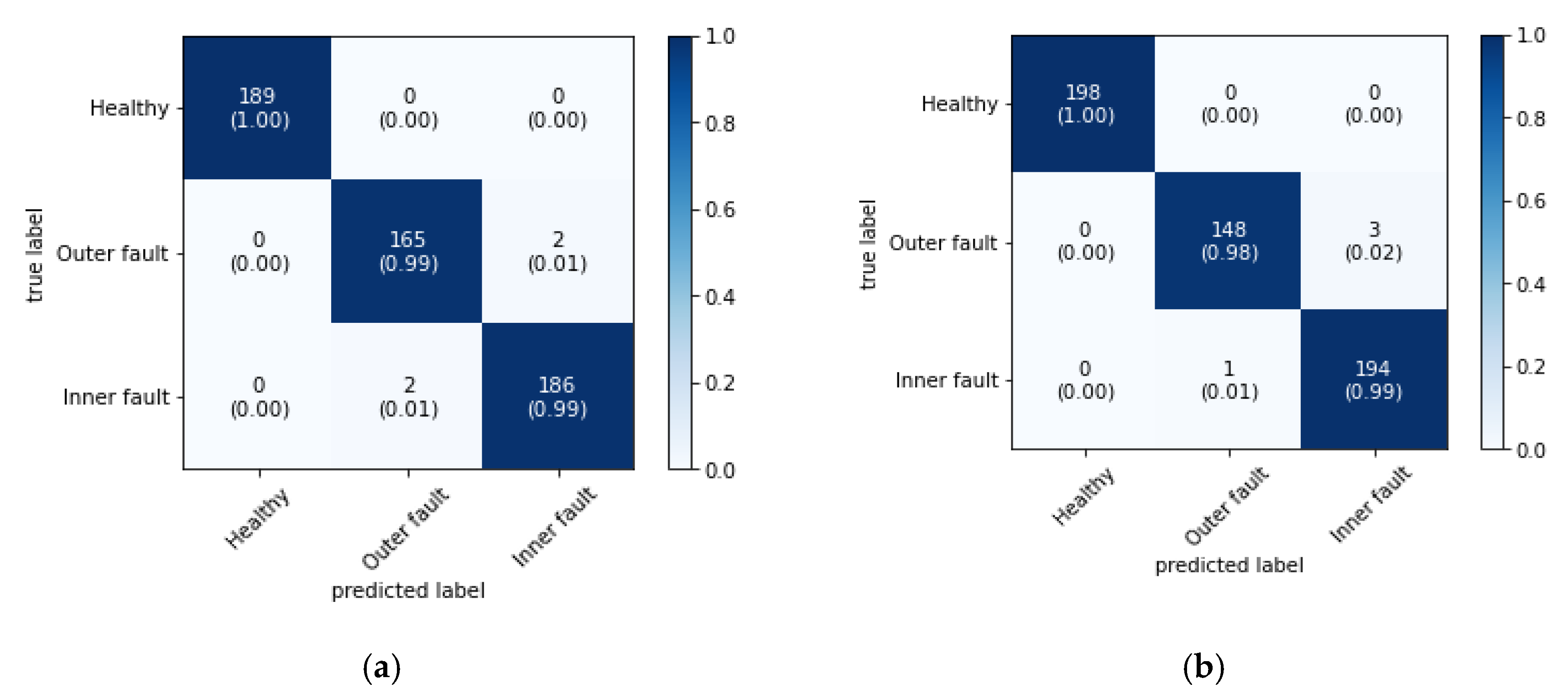

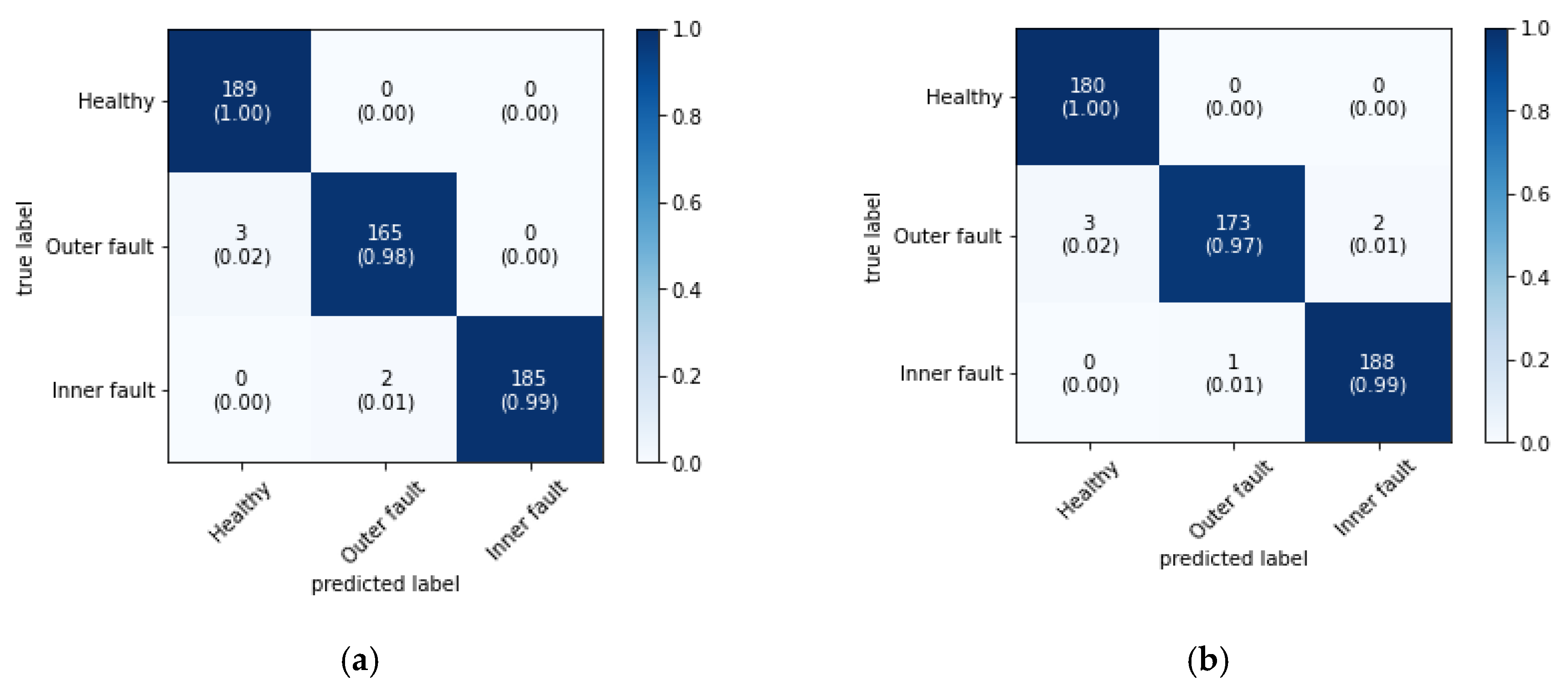

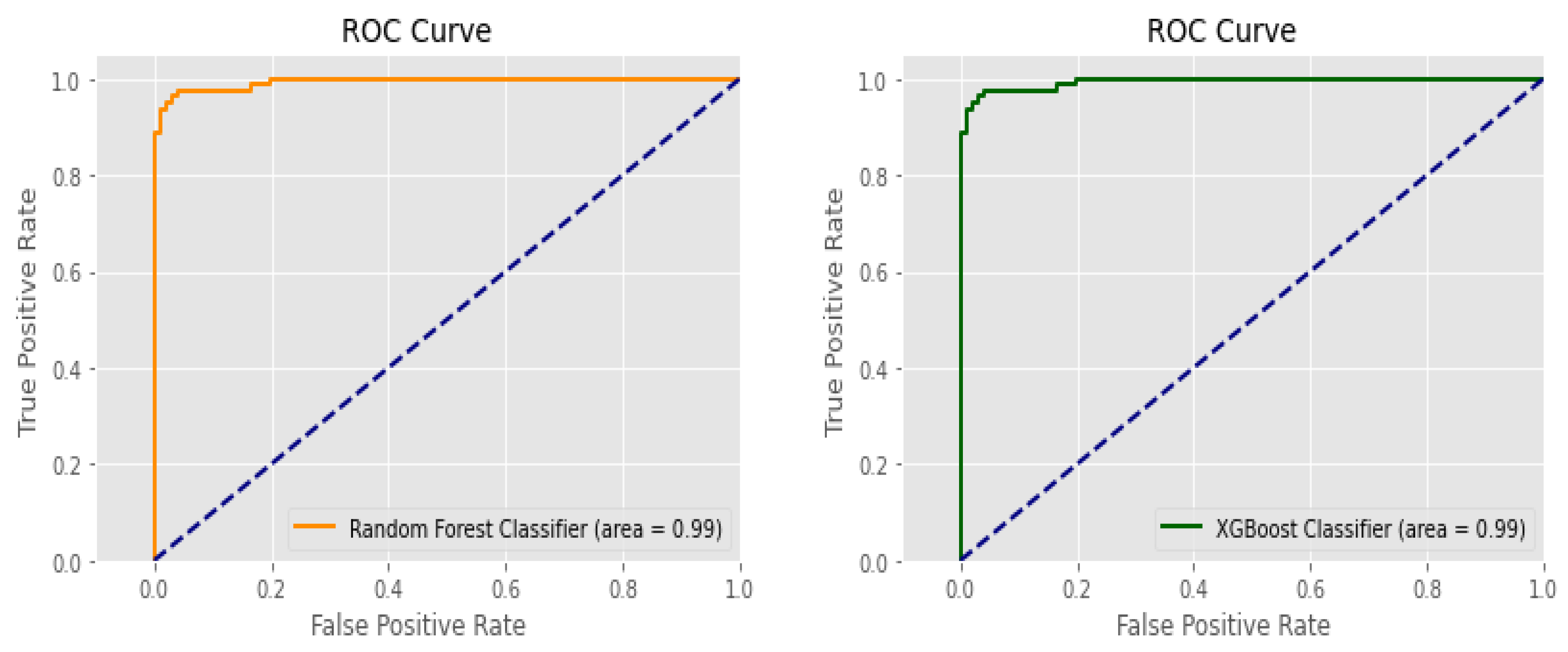

| Metric | RF (db4) | RF (Sym4) | RF (Haar) | XGB (db4) | XGB (Sym4) | XGB (Haar) |
|---|---|---|---|---|---|---|
| Precision | 0.96 | 0.94 | 0.96 | 0.97 | 0.97 | 0.97 |
| Sensitivity | 0.96 | 0.94 | 0.96 | 0.97 | 0.97 | 0.97 |
| F1_score | 0.96 | 0.94 | 0.96 | 0.97 | 0.97 | 0.97 |
| Specificity | 0.96 | 0.94 | 0.96 | 0.97 | 0.97 | 0.97 |
| Accuracy | 0.96 | 0.93 | 0.95 | 0.97 | 0.97 | 0.96 |

| Metric | RF (db4) | RF (Sym4) | RF (Haar) | XGB (db4) | XGB (Sym4) | XGB (Haar) |
|---|---|---|---|---|---|---|
| Precision | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| Sensitivity | 0.99 | 0.99 | 0.99 | 1.0 | 0.99 | 0.99 |
| F1_score | 0.99 | 0.99 | 0.99 | 1.0 | 0.99 | 1.0 |
| Specificity | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
| Accuracy | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 |
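The five metrics reported in the result tables can all be derived from a multiclass confusion matrix. The following sketch assumes macro-averaging over the three classes, which the text does not confirm, and uses a made-up confusion matrix purely for illustration; `macro_metrics` is a hypothetical helper name.

```python
def macro_metrics(cm):
    """Macro-averaged metrics from a square confusion matrix.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    precision = sensitivity = specificity = f1 = 0.0
    for i in range(k):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp
        fp = sum(cm[r][i] for r in range(k)) - tp
        tn = total - tp - fn - fp
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        precision += p
        sensitivity += r
        specificity += tn / (tn + fp) if tn + fp else 0.0
        f1 += 2 * p * r / (p + r) if p + r else 0.0
    return {
        "precision": precision / k,
        "sensitivity": sensitivity / k,
        "f1_score": f1 / k,
        "specificity": specificity / k,
        "accuracy": sum(cm[i][i] for i in range(k)) / total,
    }


# Illustrative counts only; rows are true classes 0, 1, 2.
example_cm = [[9, 1, 0], [0, 10, 0], [1, 0, 9]]
metrics = macro_metrics(example_cm)
```

Each class is treated one-versus-rest, so specificity counts every sample of the other two classes that was not assigned to the class in question.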

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nishat Toma, R.; Kim, J.-M. Bearing Fault Classification of Induction Motors Using Discrete Wavelet Transform and Ensemble Machine Learning Algorithms. Appl. Sci. 2020, 10, 5251. https://doi.org/10.3390/app10155251