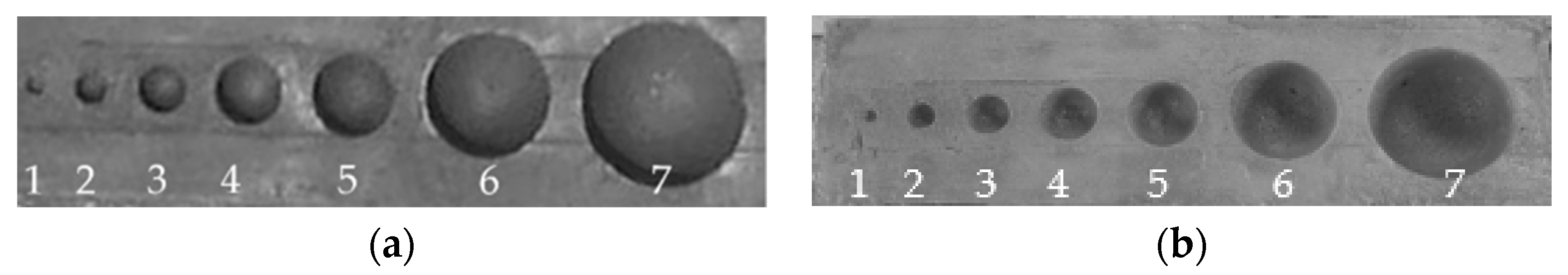

In this section, the results are presented in four parts: (1) different flight plans to assess the influence of the camera angle on the variation of the error; (2) different flight plans to assess the influence of overlap and height on the variation of the error; (3) the selection of optimal parameters; and (4) the case study to validate the recommendations and results. The results were analyzed using the relative error of each pothole measurement with respect to its actual dimensions.
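As a minimal sketch of how such a relative error could be computed, the following Python snippet assumes that the error is the absolute difference between the measured and the actual value, divided by the actual value, and that a parameter that cannot be measured in the model counts as a 100% error, consistent with the interpretation of 100% errors used in this section. The function names and the sample values are hypothetical and only illustrate the calculation.

```python
from typing import Iterable, Optional, Tuple


def relative_error_pct(measured: Optional[float], actual: float) -> float:
    """Relative error in percent with respect to the actual value.

    Assumption: a parameter that could not be measured in the 3D model
    (measured is None) is counted as a 100% error.
    """
    if actual <= 0:
        raise ValueError("actual must be positive")
    if measured is None:
        return 100.0
    return abs(measured - actual) / actual * 100.0


def mean_error_pct(pairs: Iterable[Tuple[Optional[float], float]]) -> float:
    """Average relative error over (measured, actual) pairs."""
    errors = [relative_error_pct(m, a) for m, a in pairs]
    return sum(errors) / len(errors)


# Hypothetical example: two potholes with an actual depth of 5.0 cm,
# one measured at 4.5 cm and one not reconstructed at all.
sample = [(4.5, 5.0), (None, 5.0)]
print(mean_error_pct(sample))
```

Averaging in this way means that a single pothole that is not reconstructed pulls the mean strongly toward 100%, which is one possible reading of why the curves converge at greater heights.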

3.1. Angle Variation

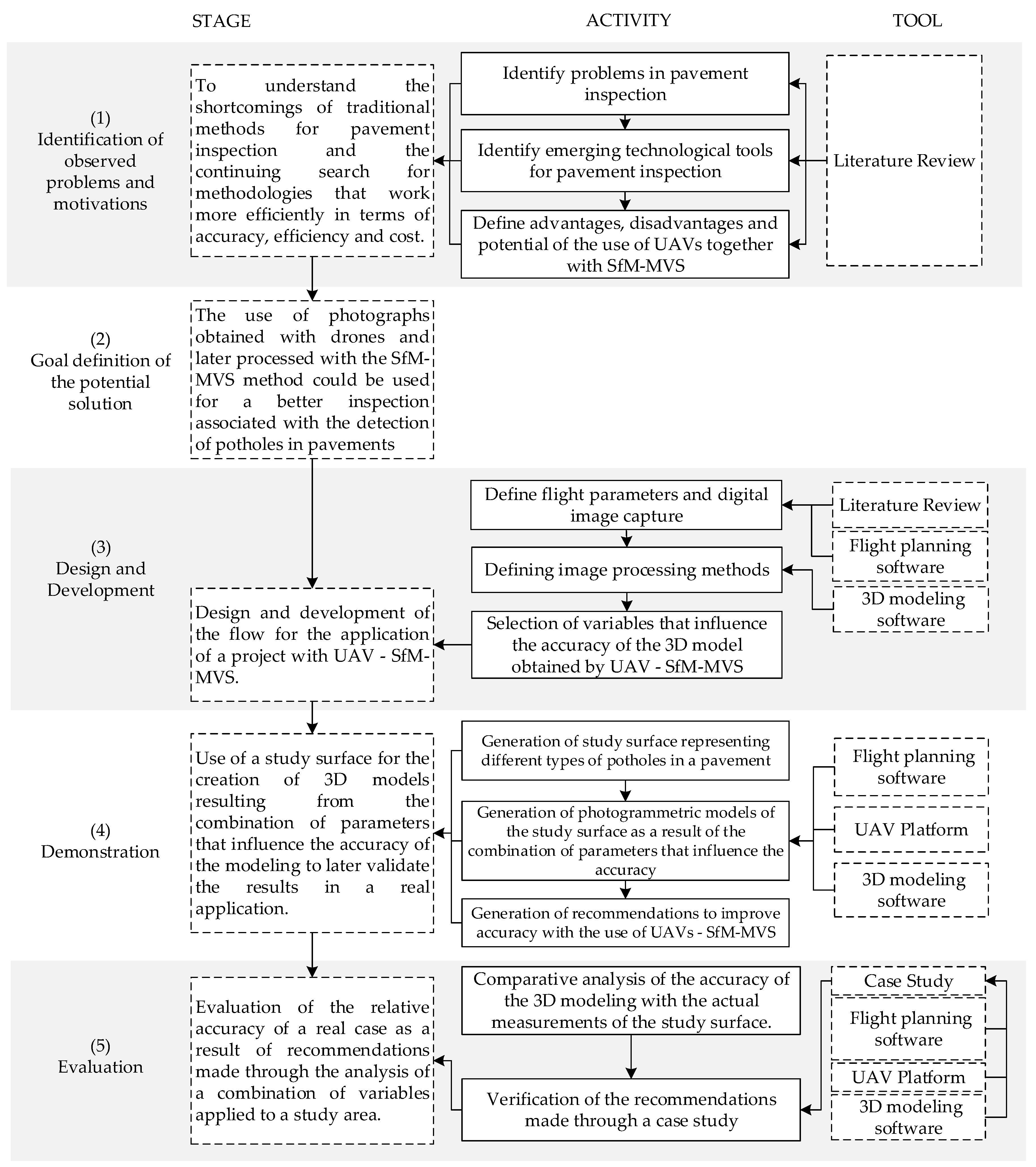

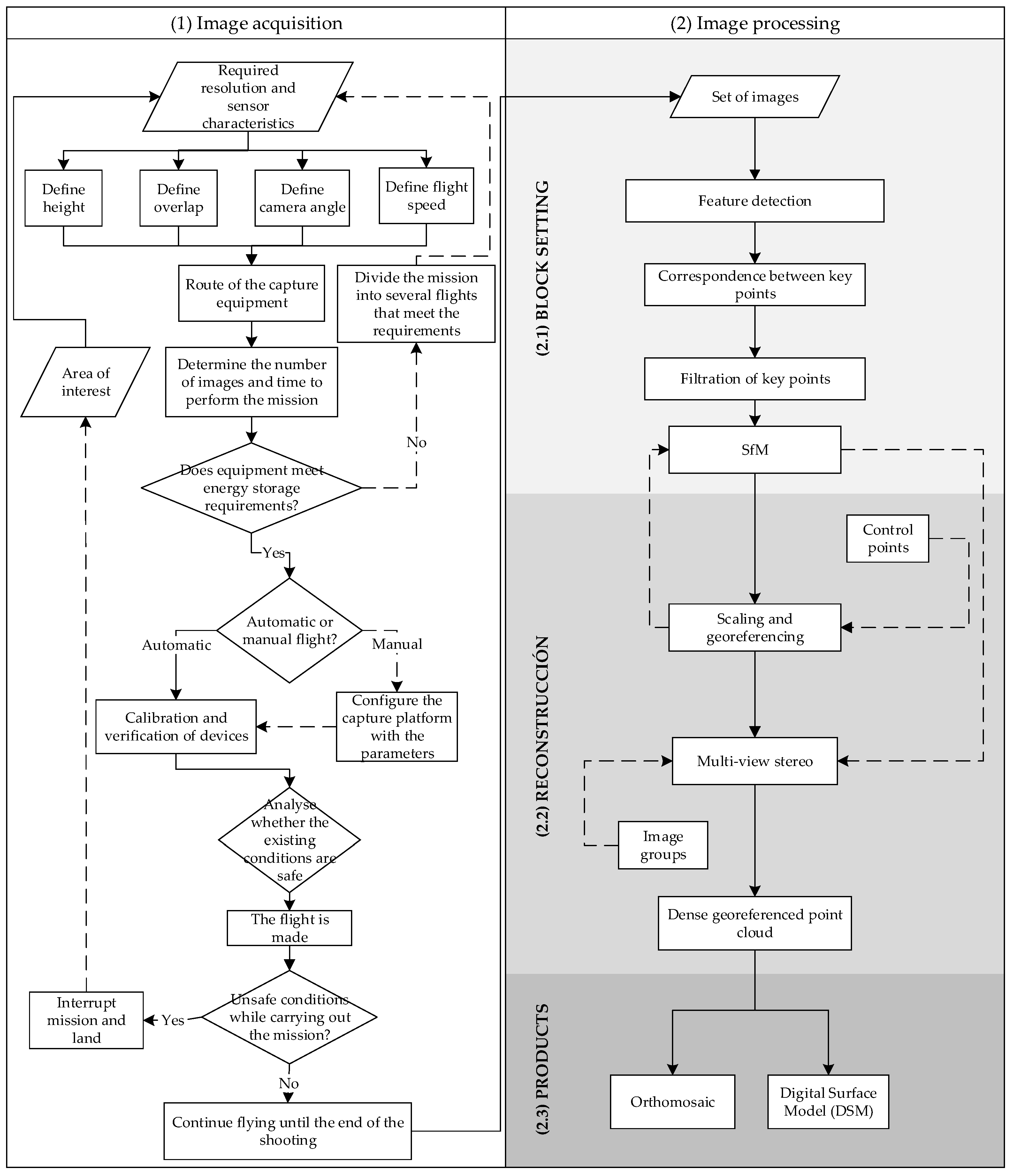

Table 3 presents the series (named C1-C36 and associated with Figure 5, Figure 6 and Figure 7), each corresponding to a different combination of height and overlap. Each series includes the five angle combinations.

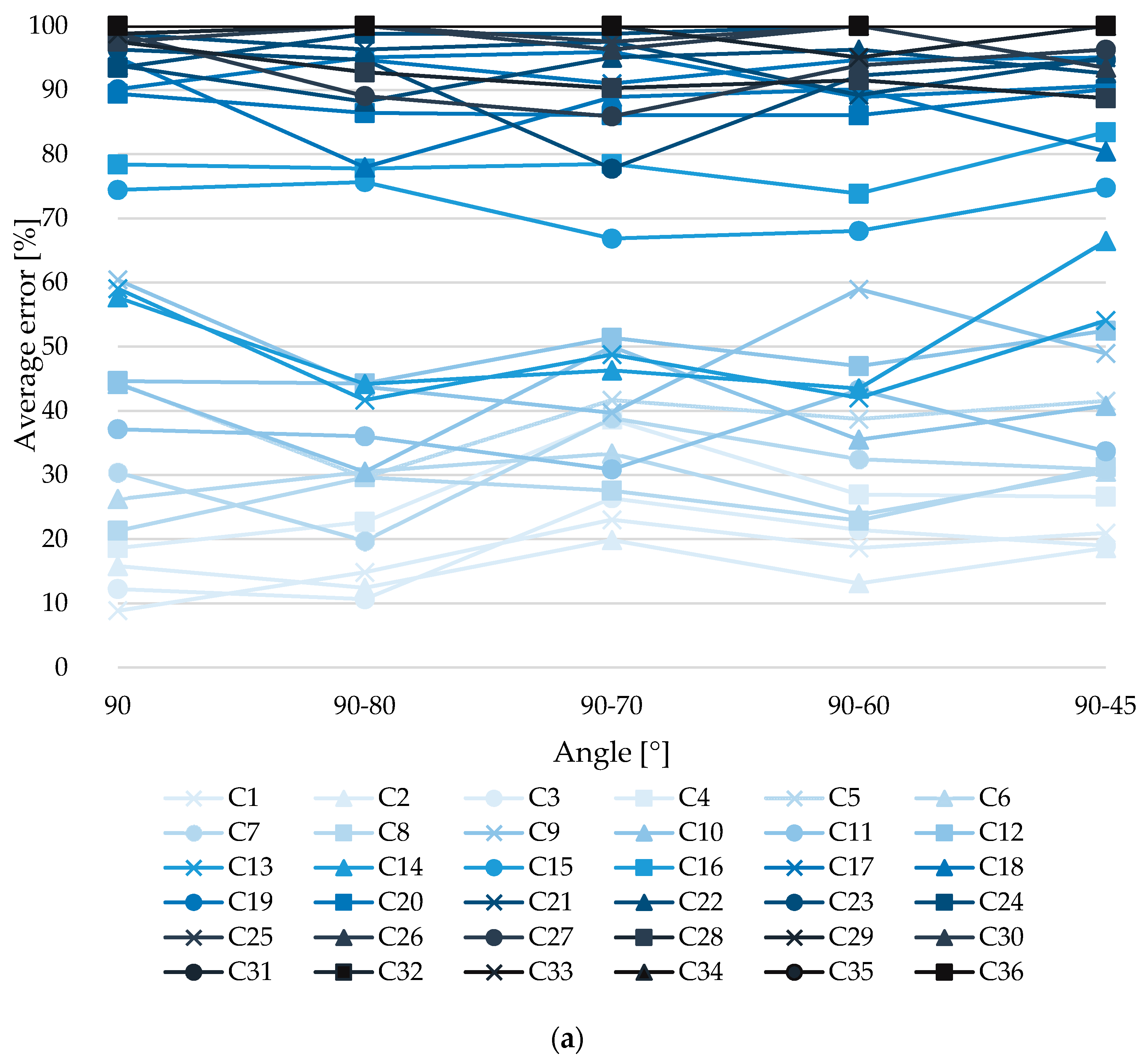

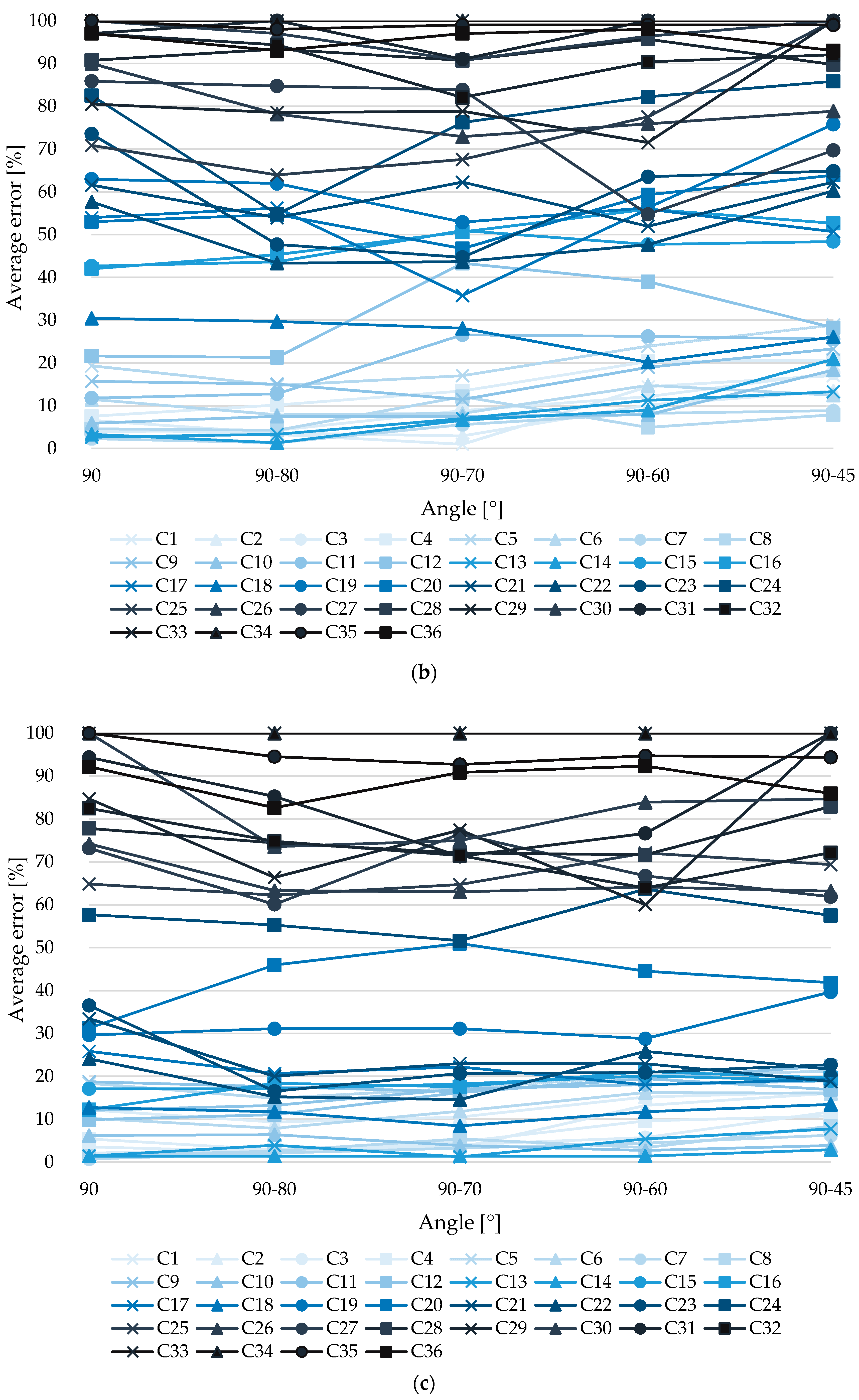

Figure 5, Figure 6 and Figure 7 show the results of the experiment. The horizontal axis corresponds to the camera angle, or combination of angles, used for photo acquisition, while the vertical axis is the average pothole measurement error for depth (Figure 5), width (Figure 6), and volume (Figure 7). The sub-figures correspond to the severity levels of each of these parameters: (a) low, (b) medium, and (c) high. The curves correspond to the combinations in Table 3, from C1 (lighter color, lowest capture height) to C36 (darker color, highest capture height). Thus, in each graph, a curve located in the lower area indicates that the combination Cn has a lower percentage error in the measurement with respect to the real value. Curves located in the upper part have errors close to or equal to 100%; in the latter case, the pothole could not be completely reconstructed digitally and the parameter of interest could not be measured.

Figure 5 displays the depth error values for each of the series, each including the variation and combination of camera angles for data acquisition. In Figure 5a, it is observed that incorporating an oblique angle in the acquisition of photographs produces lower error levels than models produced only with vertical photographs; more precisely, the reduction of the error is 20%. In Figure 5b,c the same behavior holds, i.e., the inclusion of an oblique angle decreases the error; however, for these severity levels (medium and high), the reduction of the error is much greater, on the order of 30%.

Figure 6 displays the width error values obtained from measuring the models of each series with different combinations and angle variations. In Figure 6, it is observed that the inclusion of an oblique angle has a minimal impact (less than 2%) for the cases with the least error, which are the relevant ones, since they manage to reliably measure the geometric characteristics of the potholes. For the cases with greater heights, the reduction of the error when incorporating oblique angles is up to 60%, with errors around 50–60%.

Figure 7 displays the volume error values obtained from measuring the models of each series with combinations and angle variations. The horizontal axis is the camera angle, or combination of angles, used for photo acquisition, while the vertical axis in Figure 7a–c is the average error in measuring pothole volume by severity level: low in Figure 7a, medium in Figure 7b and high in Figure 7c. In Figure 7, for the best case, the reduction of the error with respect to considering only the vertical angle (90°) is 39%.

The results indicate that the inclusion of angles other than 90° has a positive impact on accuracy (error) when measuring the depth, width, and volume of potholes. However, this impact is low compared to the additional time and effort needed to acquire the extra images, and the processing time is almost double. Likewise, there is no specific oblique angle or clear trend that ensures a reduction of the error. Therefore, working with vertical photographs remains the most efficient option for acquiring images to measure potholes on pavements, and it keeps the method simple and fast compared to more accurate technologies. From now on, only combinations with a 90° angle, without any other variation or combination, are discussed.

3.2. Variation in Overlap and Height

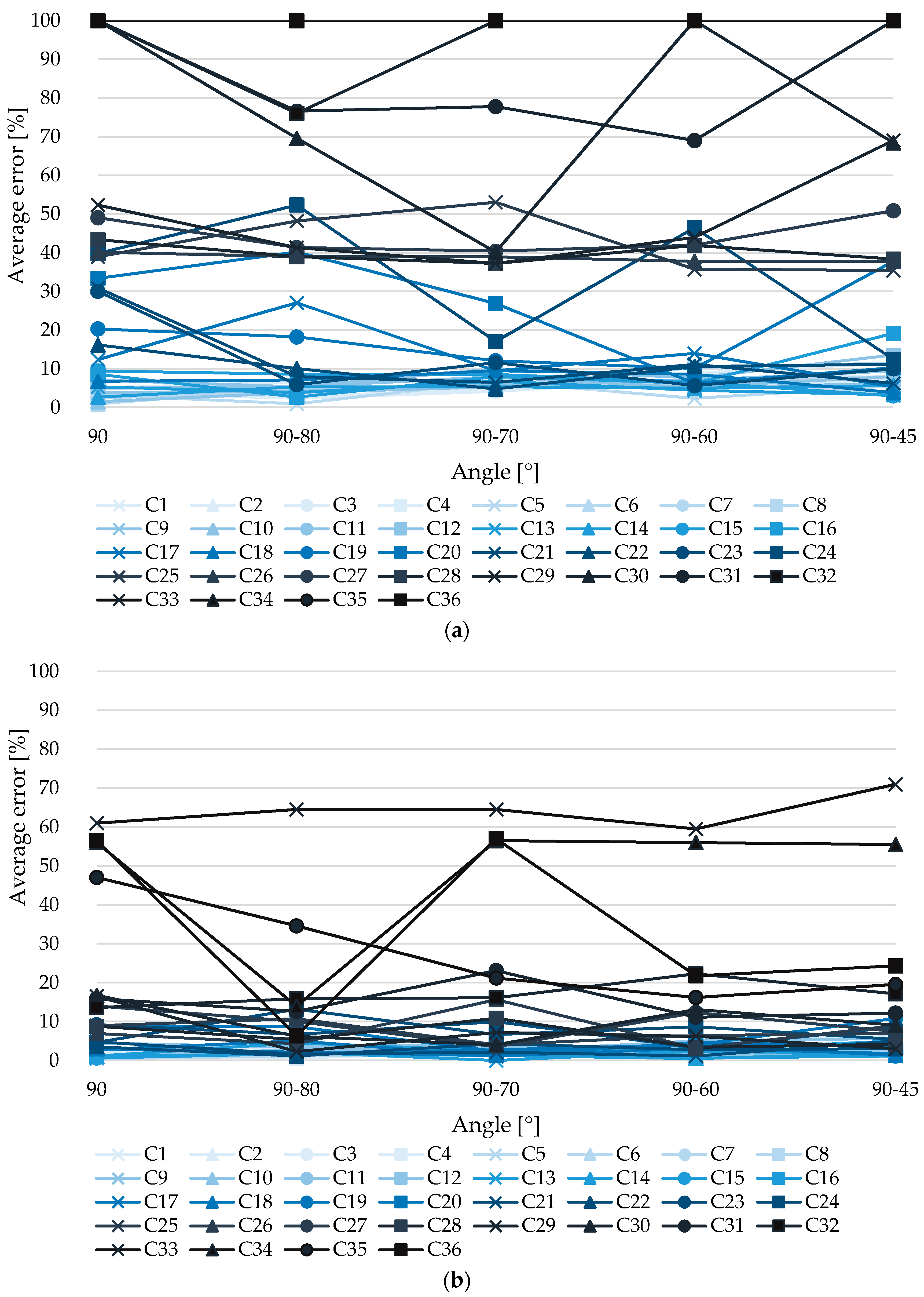

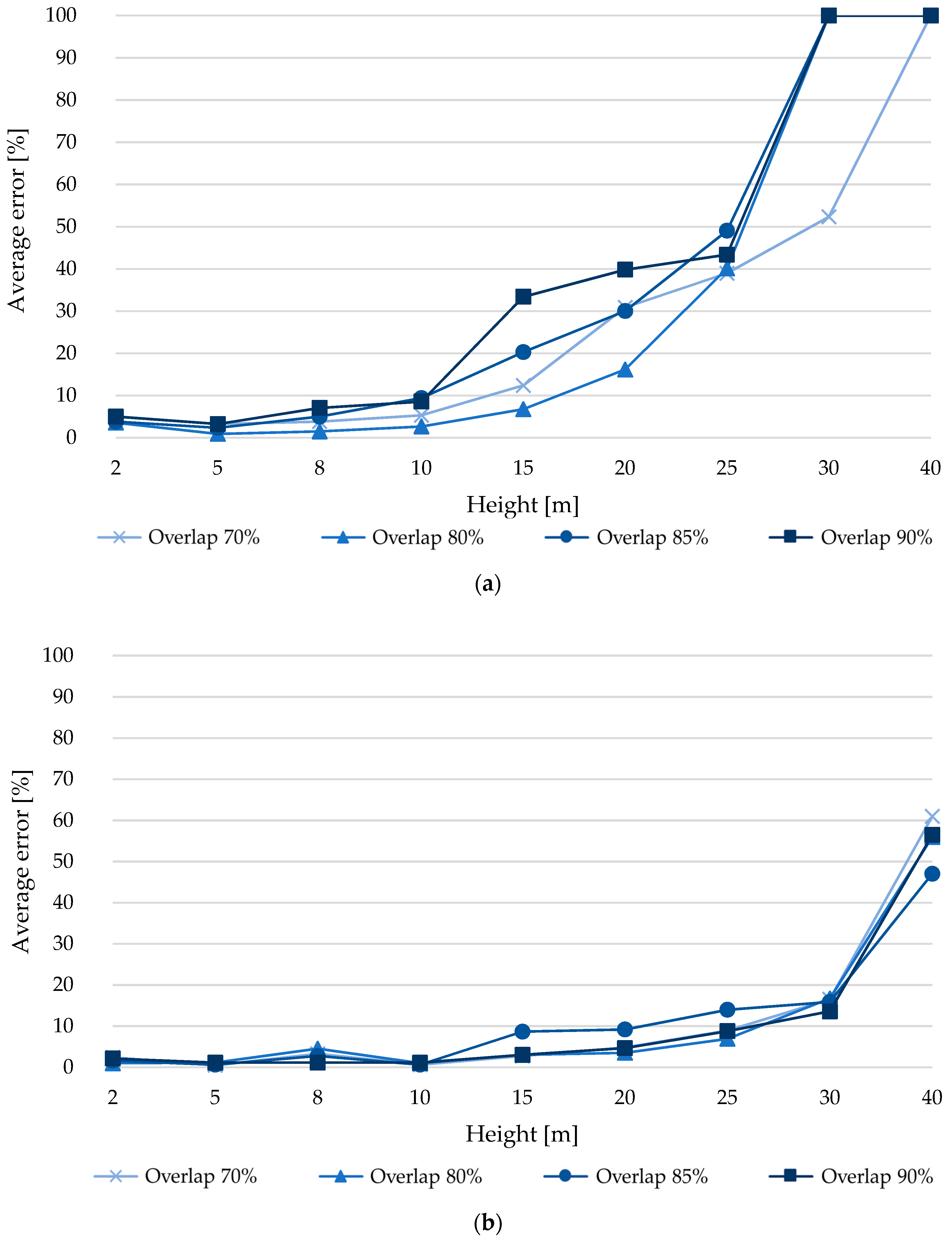

Figure 8 shows the values and behavior of the depth measurement error when varying height and overlap in vertical photo acquisition flights (90-degree camera angle) for potholes of different severity (low severity in Figure 8a, medium severity in Figure 8b and high severity in Figure 8c).

According to Figure 8a, the error for all overlaps tends to increase as the measurement height grows, which makes sense because the resolution of the photographs decreases (coarser GSD). In addition, the variation of the error between overlaps decreases as the measurement height increases: the errors converge toward 100%, the value that indicates that the variable to be measured is not identified in the model. On the other hand, there is no optimal overlap that applies to all heights. For 2 m, the best result was obtained with an overlap of 75%, with an error of 8.8%. For 5 m, the lowest error was 21.3%, for a 90% overlap. For 8 m, the 85% overlap achieved the best result, with an error of 37.1%. At 10 m, the minimum error was 57.7%, associated with an overlap of 80%; this error is 1.3% lower than with an overlap of 75% and more than 15% lower than with overlaps of 85 and 90%. At 15 m, the 90% overlap has the lowest error (89.4%), which is 0.5% less than with an overlap of 85% and more than 5% less than with overlaps of 75 and 80%; however, the error is high (above 89%). At 20 m, the lowest error (93.5%) was obtained with an overlap of 90%; the other overlaps had errors between 93.8 and 98.8%. At 25 m, the 80% overlap has a 100% error, indicating that no depth could be measured for any of the potholes analyzed, while the error is 87.5% for both the 75 and 90% overlaps and 98.8% for the 85% overlap. At 30 m, the overlaps of 80, 85 and 90% produced a 100% error, which, as stated above, indicates that no depth was measured. With the overlap of 75%, a 98.8% error was obtained; a depth was measured only because the reconstruction of the 3D model created a slope in a pothole. At 40 m, all overlaps have an error of 100%, that is, no elevation difference could be appreciated in the model; the potholes appear in the model as circular, horizontal dark spots.

From 15 m upward, the error is high (about 90%) for all overlaps, indicating that the analysis at greater heights is no longer representative for this type of measurement. However, it should be noted that the simulated potholes analyzed in the experiment do not present the same conditions as in practice. Since the experiment takes the width to be twice the depth, due to the semi-spherical shape of the simulated potholes, the analysis is conservative: the width of a real pothole is much greater than its depth.
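The per-height comparison above amounts to picking, for each height, the overlap with the lowest error and flagging overlaps for which nothing could be measured. The following Python sketch illustrates this with the 25 m depth errors for low severity potholes quoted above for Figure 8a; the function names are hypothetical, and the treatment of ties (reporting every overlap that reaches the minimum) is an assumption rather than the procedure used in the study.

```python
from typing import Dict, List, Tuple


def best_overlaps(errors_by_overlap: Dict[int, float]) -> Tuple[List[int], float]:
    """Return all overlaps (%) that reach the lowest error, and that error."""
    lowest = min(errors_by_overlap.values())
    winners = sorted(o for o, e in errors_by_overlap.items() if e == lowest)
    return winners, lowest


def not_measured(errors_by_overlap: Dict[int, float]) -> List[int]:
    """Overlaps (%) with a 100% error, i.e., no measurement in the model."""
    return sorted(o for o, e in errors_by_overlap.items() if e >= 100.0)


# Depth error (%) at 25 m for low severity potholes, as reported in the text.
depth_low_25m = {75: 87.5, 80: 100.0, 85: 98.8, 90: 87.5}

print(best_overlaps(depth_low_25m))  # ([75, 90], 87.5)
print(not_measured(depth_low_25m))   # [80]
```

Reporting ties explicitly makes it visible when two overlaps perform equally, in which case a lower overlap may be preferable simply because it requires fewer photographs.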

From Figure 8b, an optimal overlap is clearly observed for the heights of 2, 5, 8, 15, 20, 25 and 30 m, while for the heights of 10 and 40 m the optimum is not apparent at first sight. At 2 m, the lowest error (4%) was obtained with an overlap of 85%; the other overlaps (75, 85 and 90%) have an error difference that exceeds 0.6% compared to the overlap of 75%. At 5 m, the optimal overlap is 85%, which gives the lowest error for this height (2.3%); this error is 2.3% lower than with an overlap of 90%. At 8 m and 80% overlap, a 6% error was obtained, which is the lowest and is 5.9% lower than with the 75% overlap. At 10 m, the optimal overlap is 75%, which gives the lowest error (2.6%); this error is 0.6% lower than with an overlap of 80% and more than 39% lower than with the overlaps of 85 and 90%. At 15 m, the lowest error (30.2%) was obtained with an overlap of 80%; the other overlaps (75, 85 and 90%) have an error difference that exceeds 22% compared to the overlap of 80%. At 20 m and 80% overlap, a 57% error was obtained, which is the lowest; it is 4% lower than with the 75% overlap and more than 15.8% lower than with the 85 and 90% overlaps. At 25 m, the lowest error (70.2%) was obtained with an overlap of 75%; the other overlaps (80, 85 and 90%) have an error difference that exceeds 14.7% compared to the overlap of 75%. At 30 m, the lowest error (79.6%) was obtained with an overlap of 75%; the other overlaps (80, 85 and 90%) have an error difference greater than 16.1% compared to the overlap of 75%. At 40 m, the error of all overlaps exceeds 95%, so the analysis is no longer representative.

As mentioned in the previous analysis, the potholes analyzed do not have the same conditions as in practice (width >> depth), so the analysis remains conservative. Additionally, the same figure shows that the error for medium severity potholes is lower than for low severity potholes, because their dimensions are larger.

From Figure 8c, an optimal overlap is observed for the heights of 8, 15, 20, 25 and 40 m, while for the heights of 2, 5, 10 and 30 m the optimum is not apparent at first sight. At 2 m, the lowest error (2.1%) was obtained with an overlap of 75%; the other overlaps (80, 85 and 90%) have an error difference greater than 1.5% compared to the overlap of 85%. For 5 m, the lowest error (0.7%) was obtained with an overlap of 85%, which is 0.7% less than with a 90% overlap. At 8 m, the lowest error (6.2%) was obtained with an overlap of 80%, which is 3.7% less than with an overlap of 90%; at the same time, the error is reduced by more than 5.9% compared to overlaps of 85 and 90%. At 10 m, the lowest error (1.4%) corresponds to overlaps of 75 and 80%; the other overlaps (85 and 90%) have an error difference greater than 10.8% compared to overlaps of 75 and 80%. At 15 m, the lowest error (12.7%) was obtained with an overlap of 80%; the other overlaps (75, 85 and 90%) have an error difference greater than 13.1% compared to the overlap of 80%. At 20 m, the lowest error (24.1%) was obtained with an overlap of 80%, which is between 9.4 and 12.4% less than with overlaps of 75 and 85% and more than 36.6% less than with the 90% overlap. At 25 m, the lowest error (64.8%) was obtained with an overlap of 75%; the other overlaps (80, 85 and 90%) have an error difference greater than 8.4% compared to the overlap of 75%. At 30 m, the lowest error (82.5%) was obtained with an overlap of 90%, which is 2.2% less than with an overlap of 75%, more than 11.8% less than with the overlap of 85% and 17.5% less than with the overlap of 80%. At 40 m, the lowest error (92.2%) was obtained with an overlap of 90%, while the other overlaps (75, 80 and 85%) produced 100% errors, indicating that these measurements are no longer representative for this analysis.

The high severity potholes analyzed meet the conditions to be characterized as potholes in terms of their depth and width. However, it is important to remember that in practice the width is much larger than the depth, so the analysis remains conservative. Additionally, the error for high severity potholes is lower than for medium severity potholes, because their dimensions are larger.

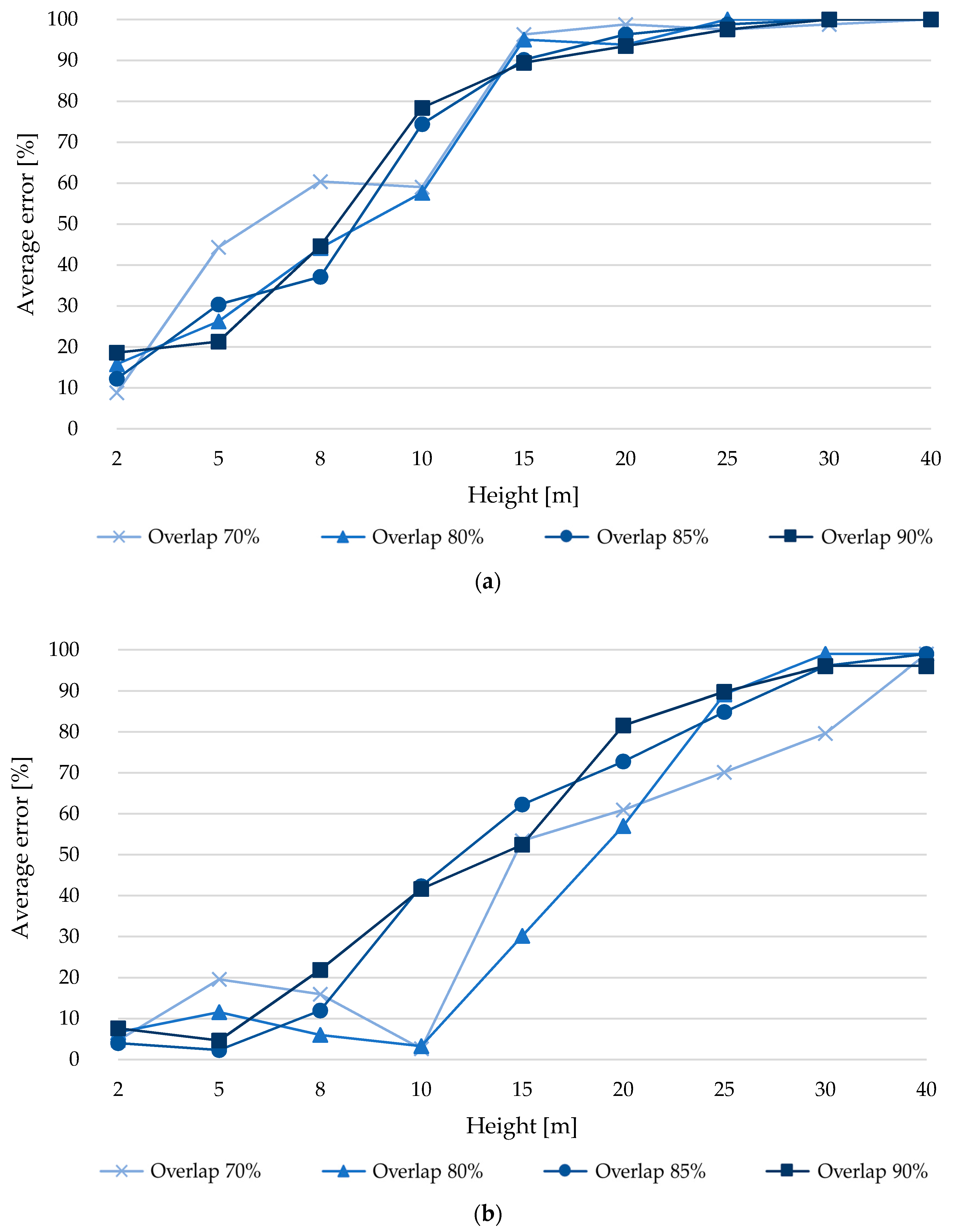

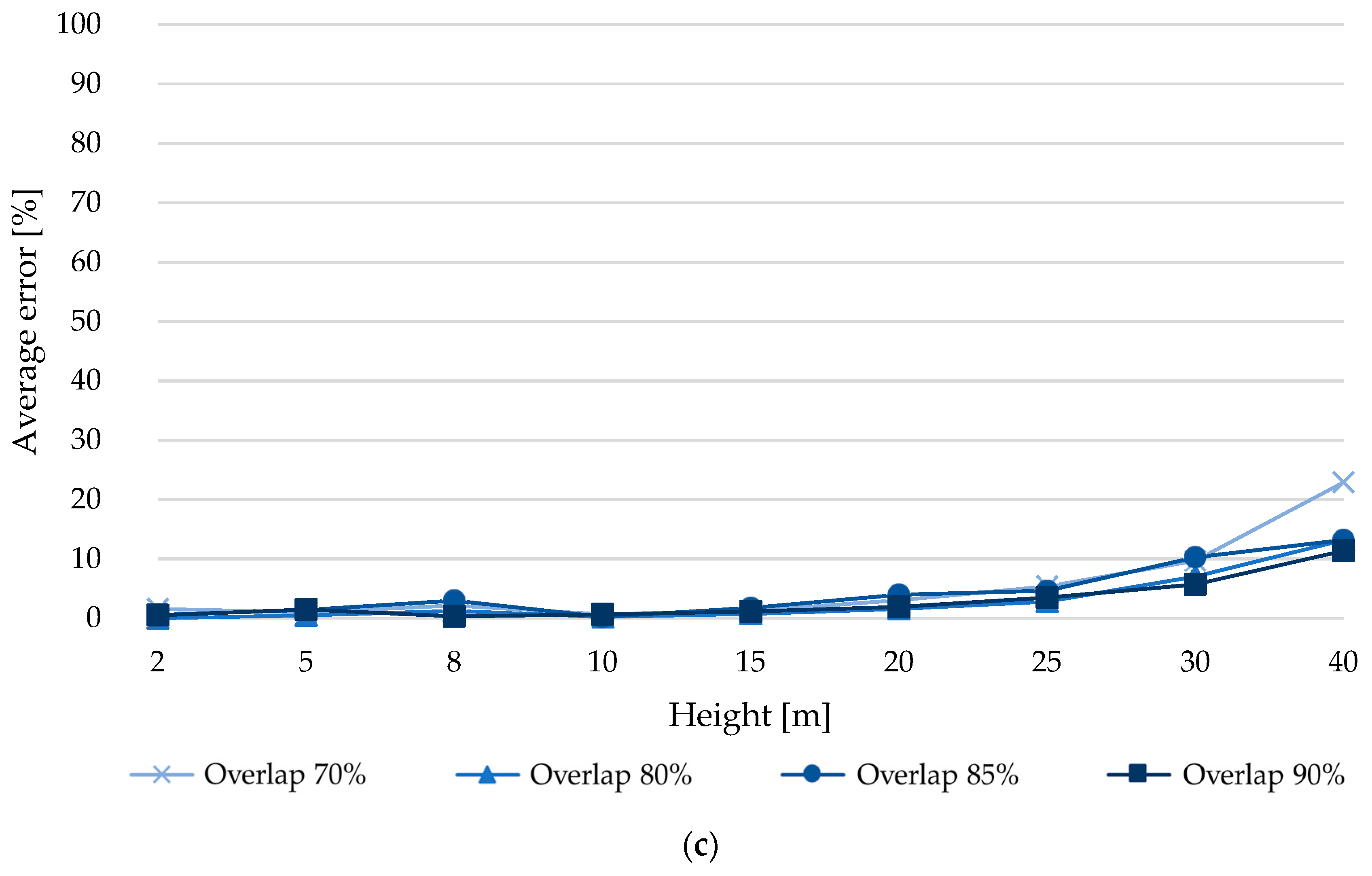

Figure 9 shows the values and behavior of the width measurement error when varying height and overlap in vertical photo acquisition flights (90-degree camera angle) for potholes of different severity (low severity in Figure 9a, medium severity in Figure 9b, high severity in Figure 9c).

According to Figure 9a, and compared to the previous figure, the width measurement has a lower error than the pothole depth measurement. At 2 m, the lowest error (3.6%) was obtained with an 80% overlap, which is 0.2% less than with the 85% overlap. At 5 m, the minimum error (0.9%) was achieved with an overlap of 80%, which is between 1.4 and 2.3% less than with the other overlaps (75, 85 and 90%). For 8 m and an overlap of 80%, an error of 1.5% was found, which is the lowest for this height; it is 2.3 and 3.5% less than with overlaps of 75 and 85%, respectively, and 5.6% less than with an overlap of 90%. For 10 m, the lowest error (2.7%) was obtained with an overlap of 80%, which is 2.6% less than with an overlap of 75% and more than 5.9% less than with overlaps of 85 and 90%. At 15 m, the lowest error (6.7%) was obtained with an overlap of 80%, which is 5.7% less than with an overlap of 75% and more than 13.6% less than with overlaps of 85 and 90%. At 20 m, the variability of the error decreases compared to that obtained at 15 m. For this height, the minimum error (16.2%) is obtained with an overlap of 80%; the other overlaps (75, 85 and 90%) have an error difference greater than 13.8% compared to the overlap of 80%. At 25 m, the variation of the error between overlaps decreases further compared to 20 m. The lowest error (39.0%) was achieved with an overlap of 75%, which is 1.1% less than with an overlap of 80%, 4.4% less than with the 90% overlap and 8.9% less than with an overlap of 85%. At 30 m, the lowest error (52.3%) was obtained with an overlap of 75%; the other overlaps (80, 85 and 90%) produced 100% errors, because the model did not reconstruct the potholes. At 40 m, all overlaps produced 100% errors, which, as mentioned earlier, is due to problems in modeling small potholes.

From Figure 9b, it is observed that the width measurement has less error than the pothole depth measurement. In addition, the error is lower than in the analysis for low severity potholes. Up to a measuring height of 30 m, the error does not exceed 20% for any overlap, while at 40 m error values greater than 45% are reached. At 2 m, the lowest error (1%) was obtained with an overlap of 80%, which is 0.6% less than with the overlap of 85% and between 1.1 and 1.4% less than with overlaps of 75 and 90%. At 5 m, the minimum error (0.5%) was obtained with an overlap of 75%, which is between 0.1 and 0.6% less than with the other overlaps (80, 85 and 90%). For 8 m and an overlap of 90%, an error of 1.1% was obtained. At 10 m, the lowest error (0.6%) was obtained with overlaps of 75 and 85%; the other overlaps (80 and 90%) have an error difference of 0.5% compared to overlaps of 75 and 85%. For 15 m, the lowest error (2.8%) was obtained with an overlap of 75%, which is 0.2% less than with overlaps of 80 and 90% and more than 5.9% less than with the overlap of 85%. At 20 m, the lowest error (3.5%) is obtained with an overlap of 80%, which is 1.2 and 1.3% less than with the 90 and 75% overlaps, respectively, and up to 5.7% less than with the 85% overlap. For 25 m, the lowest error (6.9%) was obtained with an overlap of 80%, which is 1.9 and 2% less than with overlaps of 75 and 90%, respectively, and 7.1% less than with the overlap of 85%. For 30 m, the lowest error (13.6%) was obtained with an overlap of 90%, which is between 2.3 and 3.1% less than with the other overlaps (75, 80 and 90%). At 40 m, the lowest error (47.0%) was obtained with an overlap of 85%, which is between 9 and 14% less than with the other overlaps (75, 80 and 90%).

From Figure 9c, it is observed that the width measurement has less error than the pothole depth measurement. The error is also lower than in the analysis for medium severity potholes. Up to a measuring height of 30 m, the error does not exceed 10.5% for any overlap, and at 40 m the overlap of 75% is the only one that exceeds 20% error, while the other overlaps do not exceed 14% error. At 2 m, the lowest error (0%) was obtained with an overlap of 80%, while for the other overlaps the error was less than 1.6%. At 5 m, the minimum error (0.5%) was obtained with an overlap of 80%, which is between 0.5 and 1% less than with the other overlaps (75, 85 and 90%). For 8 m and an overlap of 90%, an error of 0.3% was obtained, which is the lowest for this height; it is 1.9 and 0.9% less than with overlaps of 75 and 80%, respectively, and 2.7% less than with an overlap of 85%. At 10 m, the lowest error (0.3%) was obtained with overlaps of 80 and 85%; the other overlaps (70 and 90%) have an error difference of 0.4% compared to overlaps of 75 and 85%. At 15 m, the lowest error (0.8%) was obtained with an overlap of 80%, which is 0.4 and 0.5% less than with overlaps of 90 and 75%, respectively, and 0.9% less than with the overlap of 85%. At 20 m, the lowest error (1.6%) is obtained with an overlap of 80%, which is 0.3% lower than with the overlap of 90% and 1.5 to 2.4% lower than with overlaps of 75 and 85%. At 25 m, the lowest error (2.8%) was obtained with an overlap of 80%, which is between 0.7 and 2.6% less than with the other overlaps (75, 85 and 90%). At 30 m, the lowest error (5.7%) was obtained with an overlap of 90%, which is 1.4% less than with an overlap of 80% and 4 to 4.3% less than with overlaps of 75 and 85%. At 40 m, the lowest error (11.4%) was obtained with an overlap of 90%, which is 1.8% less than with overlaps of 80 and 85% and 11.5% less than with an overlap of 75%.

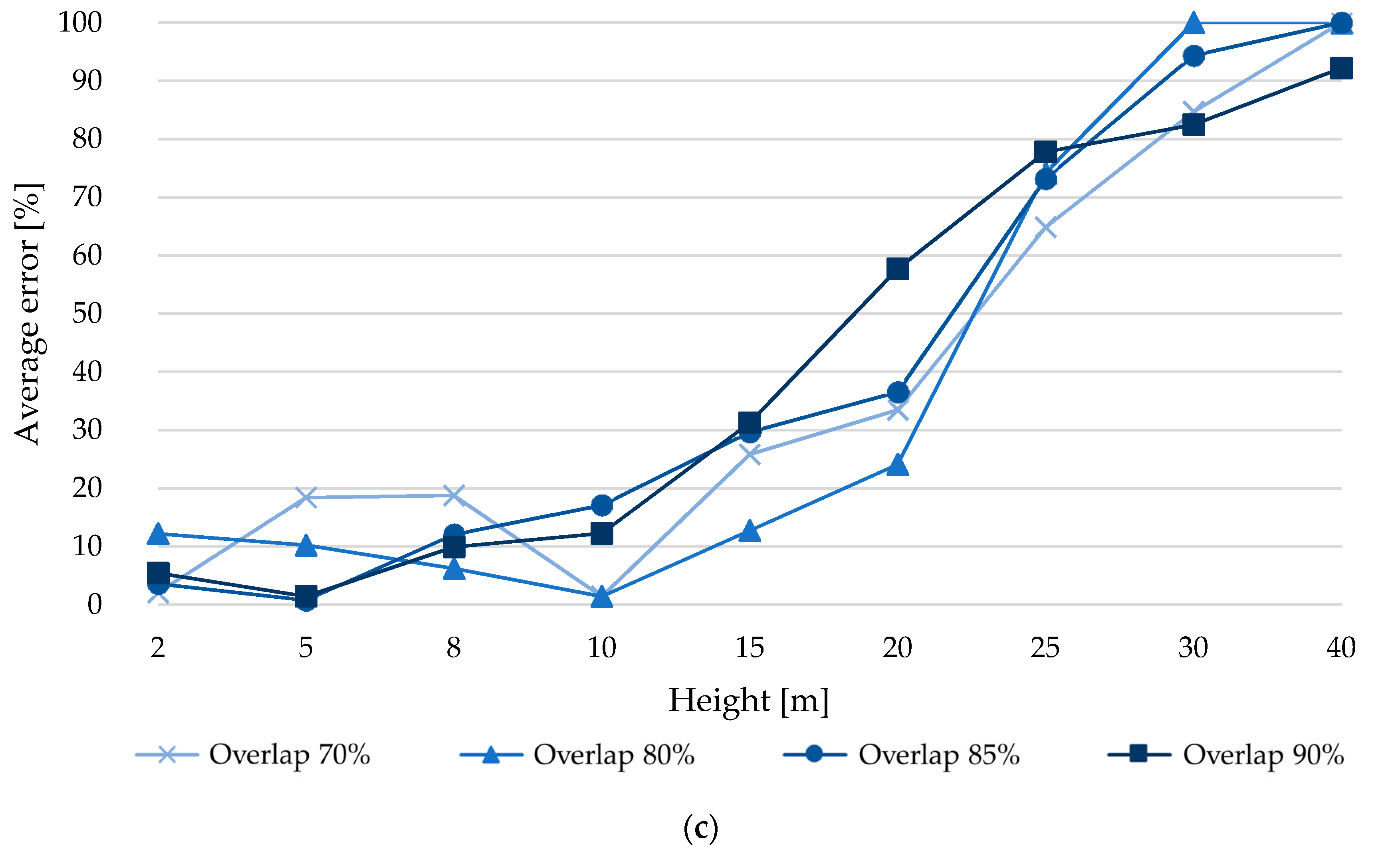

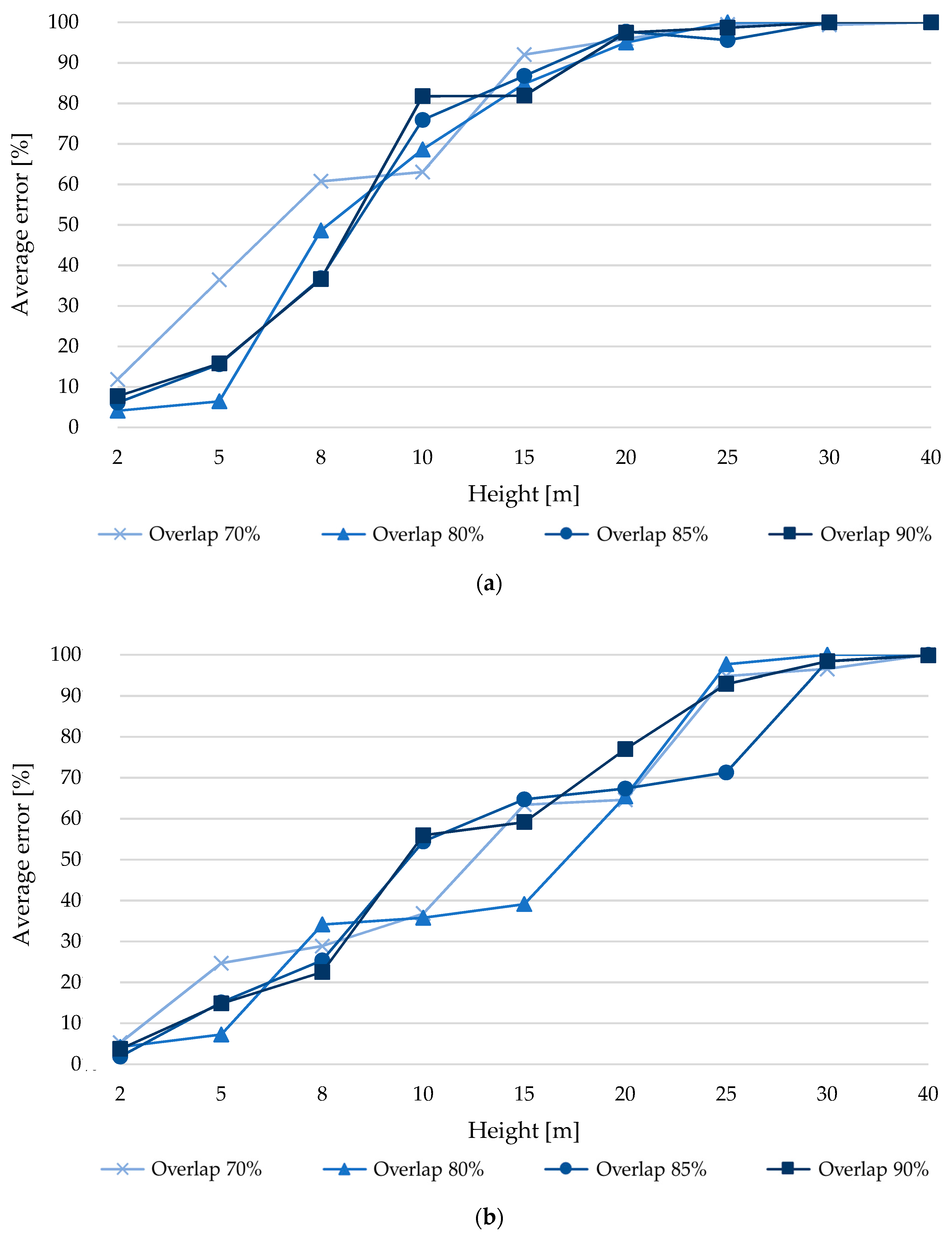

Figure 10 shows the values and behavior of the volume measurement error when varying height and overlap in vertical photo acquisition flights (90-degree camera angle) for potholes of different severity (low severity in Figure 10a, medium severity in Figure 10b, high severity in Figure 10c).

In Figure 10a, the error for all overlaps tends to increase as the measurement height grows. The error at 10 m is greater than 60% for all overlaps, indicating that the experiment does not work well for this type of analysis. At 2 m, the minimum error was 4.1%, associated with an overlap of 80%; this error is 2% lower than with an overlap of 85% and more than 3.6% lower than with overlaps of 75 and 90%. At 5 m, the 80% overlap has the lowest error (6.4%), which is 9.2% less than with an overlap of 85% and more than 9.4% less than with the 75 and 90% overlaps. At 8 m, the lowest error (36.6%) was obtained with a 90% overlap. For 10 m and an overlap of 75%, an error of 63.1% was obtained, which is the lowest for this height; it is 5.5% less than with the overlap of 80% and 12.8 to 18.7% less than with the overlaps of 85 and 90%. At 15 m, the lowest error (81.9%) was obtained with an overlap of 90%, which is between 2.9 and 10.1% less than with the other overlaps (75, 80 and 85%). At 20 m, the error is 95% for an 80% overlap, which is too high for further analysis of this type of measurement. Thus, from 20 m upward, a volume analysis for low severity potholes loses practical value, since the reconstructed volume fails to describe a semi-sphere and instead resembles a kind of cone built from the position at which the maximum depth was obtained. The behavior is similar to that observed in the pothole depth analyses, as the measured volume depends directly on how well the depth and area have been measured.
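The effect of reconstructing a cone instead of a semi-sphere can be illustrated with elementary geometry. The sketch below is only an idealization and not the volume computation performed by the photogrammetry software: it assumes that the actual pothole is a perfect semi-sphere of radius r (so its depth equals r), that the model keeps the full surface radius, and that the reconstructed shape is a right circular cone whose height is the measured depth. The function names and the dimensions are hypothetical.

```python
import math


def hemisphere_volume(radius: float) -> float:
    """Volume of a semi-sphere: (2/3) * pi * r^3."""
    return 2.0 / 3.0 * math.pi * radius ** 3


def cone_volume(radius: float, depth: float) -> float:
    """Volume of a right circular cone: (1/3) * pi * r^2 * h."""
    return math.pi * radius ** 2 * depth / 3.0


def cone_vs_hemisphere_error_pct(radius: float, measured_depth: float) -> float:
    """Volume error (%) when a semi-sphere is reconstructed as a cone."""
    actual = hemisphere_volume(radius)
    reconstructed = cone_volume(radius, measured_depth)
    return abs(reconstructed - actual) / actual * 100.0


# Hypothetical pothole of 5 cm radius (and therefore 5 cm depth).
print(cone_vs_hemisphere_error_pct(5.0, 5.0))  # depth fully recovered
print(cone_vs_hemisphere_error_pct(5.0, 1.0))  # only 1 cm of depth recovered
```

Under these assumptions the error simplifies to |1 - d / (2r)|, so a cone has half the volume of the semi-sphere even when the depth is measured exactly, and any underestimation of the depth pushes the volume error further toward 100%.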

From Figure 10b, the error for all overlaps tends to increase as the capture height grows. The error at 10 m is greater than 30% for overlaps of 75 and 80% and more than 50% for overlaps of 85 and 90%, indicating that the experiment does not work properly for this type of analysis. However, the error is lower than that observed in the previous analysis. At 2 m, the minimum error was 1.9%, associated with an overlap of 85%; this error is 1.8% lower than with an overlap of 90% and more than 2.3% lower than with overlaps of 75 and 80%. At 5 m, the 80% overlap has the lowest error (7.3%), which is 7.5% less than with a 90% overlap and more than 7.8% less than with the 75 and 85% overlaps. At 8 m, the lowest error (22.5%) was obtained with a 90% overlap. At 10 m, the lowest error (35.8%) was obtained with an overlap of 80%, which is 1% less than with the overlap of 75% and 18.6 to 20.1% less than with overlaps of 85 and 90%. At 15 m, the minimum error (39.1%) was obtained with an overlap of 80%, which is between 20.0 and 25.6% less than with the other overlaps (75, 85 and 90%). For 20 m and an overlap of 75%, an error of 64.6% was obtained, which is the lowest for this height; it is 0.8 and 2.8% less than with overlaps of 80 and 85%, respectively, and 11.6% less than with an overlap of 90%. At 25 m, the lowest error (71.3%) was obtained for an 85% overlap, which is between 21.6 and 26.4% less than with the other overlaps (75, 80 and 90%). At 30 m, the error obtained was 96.5% for an overlap of 75%, which is the lowest for this height but still so high that it loses practical value. At 40 m, the error is higher than that obtained at a height of 30 m. Thus, from 30 m upward the volume analysis loses practical value.

From Figure 10c, the error for all overlaps tends to increase as the capture height grows. However, at 15 m with an overlap of 80%, the volume error obtained was 1.8% lower than that obtained at 10 m with the same overlap. This is probably explained by problems in the reconstruction with the software. The error is similar to that of the previous analysis, since at 10 m the error is greater than 30% for overlaps of 75 and 80% and more than 40% for overlaps of 85 and 90%, indicating that the experiment does not work properly for this type of analysis. At 2 m, the minimum error was 0.9%, associated with a 90% overlap; this error is 1.2% lower than with an overlap of 85% and more than 1.9% lower than with overlaps of 75 and 80%. At 5 m, the 80% overlap has the lowest error (5.5%), which is 7.5% less than with a 90% overlap and more than 8.8% less than with the 75 and 85% overlaps. At 8 m, the lowest error (24.6%) was obtained with an overlap of 85%. At 10 m, the lowest error (35.7%) was obtained with an overlap of 80%, which is 0.9% less than with the overlap of 75% and 5.6 to 7.8% less than with overlaps of 85 and 90%. At 15 m and with an overlap of 80%, an error of 33.9% was obtained, which is the lowest for that height; it is 11.9% lower than with the overlap of 75% and 25.2 to 27.7% lower than with overlaps of 85 and 90%. For 20 m, the lowest error is 41.2%, associated with an 80% overlap; it is 10% less than with an overlap of 75% and 23.8 to 34.6% less than with overlaps of 85 and 90%. At 25 m, the lowest error (75.9%) was obtained with an 85% overlap, which is between 9.7 and 18.8% less than with the other overlaps (75, 80 and 90%). At 30 m, the minimum error (90.2%) was obtained for an overlap of 90%, which has no practical value. At 40 m, the error is higher than the value obtained at a height of 30 m. For the overlaps of 75, 80 and 85%, the volume could not be measured because no depth was recorded.

3.3. Selecting Optimal Parameters

From the selection by type of geometric characteristic (pothole width, depth, and volume), the overall combination that gives the most reliable results must be selected, since in a real application the characteristics of the potholes will be determined from a single model. Accordingly, the optimal recommendations for depth, width and volume measurements were analyzed together, always seeking to minimize the error of these measurements.

Table 4 was based on the discussion presented in Section 3.2. The recommended overlap was chosen by severity level, since the use of these recommendations depends on the minimum level of severity to be measured. For heights of 2, 5 and 8 m, the experiments were excessively laborious: it is difficult to ensure proper positioning and correct overlap between images, because handling the UAV at such low heights becomes imprecise due to the sensitivity of the controls. In addition, the GPS does not deliver a correct position of the aircraft, which in some cases made it necessary to repeat the image capture. Although capturing photographs at low altitude allows for more accurate 3D models, in practical terms, and in order to optimize the flight and the inspection (balancing time, quality, area observed, etc.), it is not recommended to plan flights at heights of less than 10 m. At 40 m, the error levels are at their maximum, so there is no practical justification for a recommendation at this height. For the case study, the height recommendations of 10 and 15 m were used, which present an acceptable level of error in practice.

Table 5 shows the longitudinal (B) and transverse (A) distances between photographs captured with the UAV that are required to comply with the longitudinal (p) and transverse (q) overlaps for each of the heights.

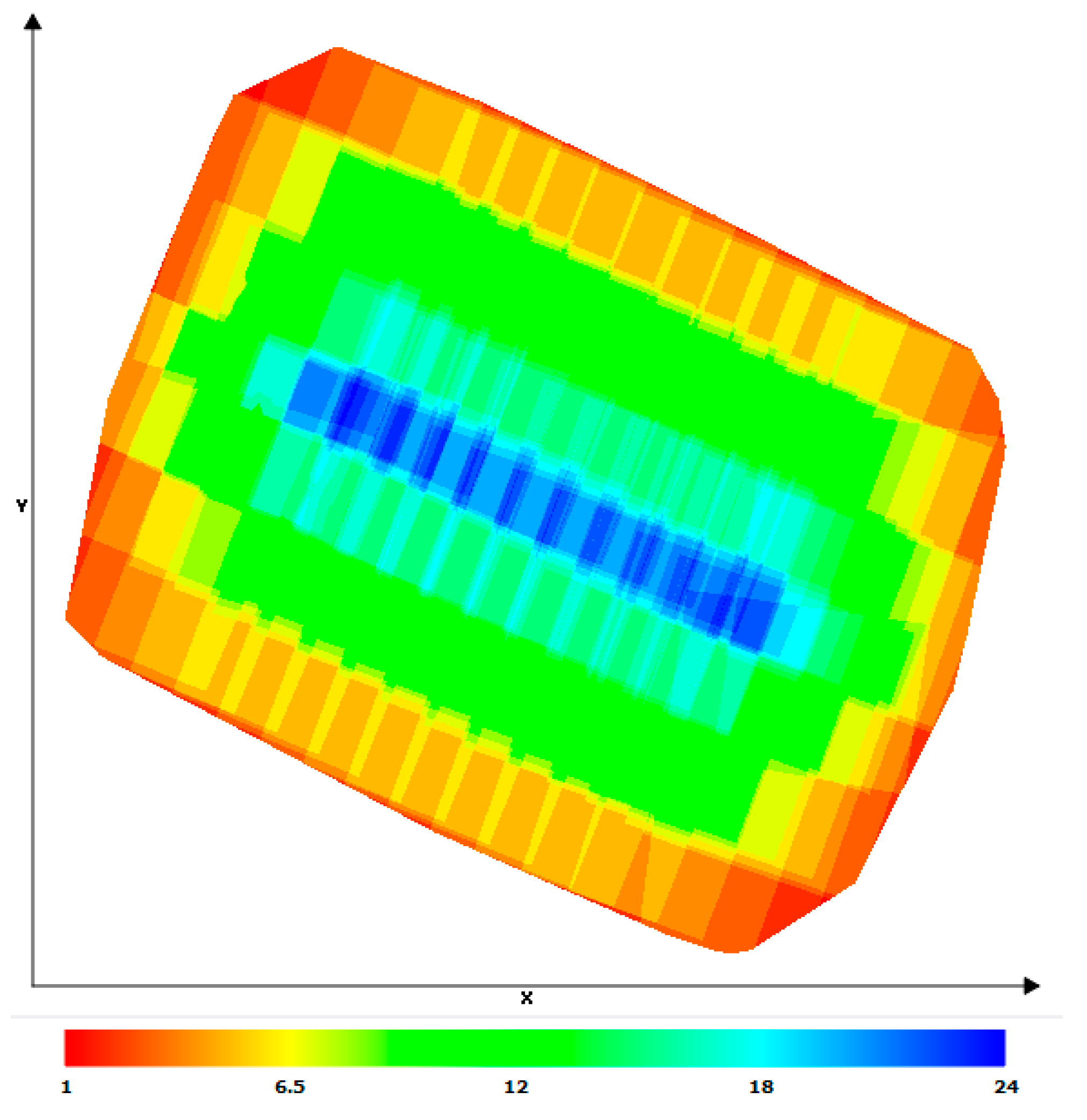

These distances are used to calculate the flight lines needed to fully cover the pavement unit to be inspected. The number of flight lines for a given road width is obtained with Equation (1); an additional line is added to ensure full width coverage. As a reference for the number of photographs to be taken per flight line, Equation (2) is recommended; four additional photos are added, two at the start and two at the end of the flight line, so that the ends are properly overlapped. Naturally, the corners will be covered by fewer photographs than the center, as can be seen in the example in Figure 11. These additional photographs are necessary to guarantee a minimum number of photographs at the corners of the pavement, so that all areas are covered on the map.
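Equations (1) and (2) are not reproduced in this text, so the following Python sketch is only one plausible reading of the description above, not the authors' exact formulas. It assumes that the number of flight lines is the road width divided by the transverse distance A, rounded up, plus one extra line, and that the number of photographs per line is the line length divided by the longitudinal distance B, rounded up, plus four extra photographs. The function names and the numeric values are hypothetical; the actual A and B values would come from Table 5.

```python
import math


def flight_lines(road_width_m: float, a_transverse_m: float) -> int:
    """Assumed form of Equation (1): lines to cover the width, plus one."""
    if road_width_m <= 0 or a_transverse_m <= 0:
        raise ValueError("distances must be positive")
    return math.ceil(road_width_m / a_transverse_m) + 1


def photos_per_line(line_length_m: float, b_longitudinal_m: float) -> int:
    """Assumed form of Equation (2): photos along the line, plus four
    (two at the start and two at the end of the flight line)."""
    if line_length_m <= 0 or b_longitudinal_m <= 0:
        raise ValueError("distances must be positive")
    return math.ceil(line_length_m / b_longitudinal_m) + 4


# Hypothetical pavement unit: 7 m wide, 50 m long, with A = 3 m and B = 2 m.
lines = flight_lines(7.0, 3.0)
photos = photos_per_line(50.0, 2.0)
print(lines, photos, lines * photos)  # 4 29 116
```

Rounding up before adding the extra line and photographs keeps the estimate on the safe side, at the cost of a few more images to process.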

3.4. Case Study

A real case study was carried out to validate whether the recommendations can be extrapolated to the measurement of potholes in deteriorated asphalt pavements. The actual pavement section has 6 potholes with different dimensions (indicated in Table 6).

Table 6. Flexible pavement potholes used for recommendation validation.

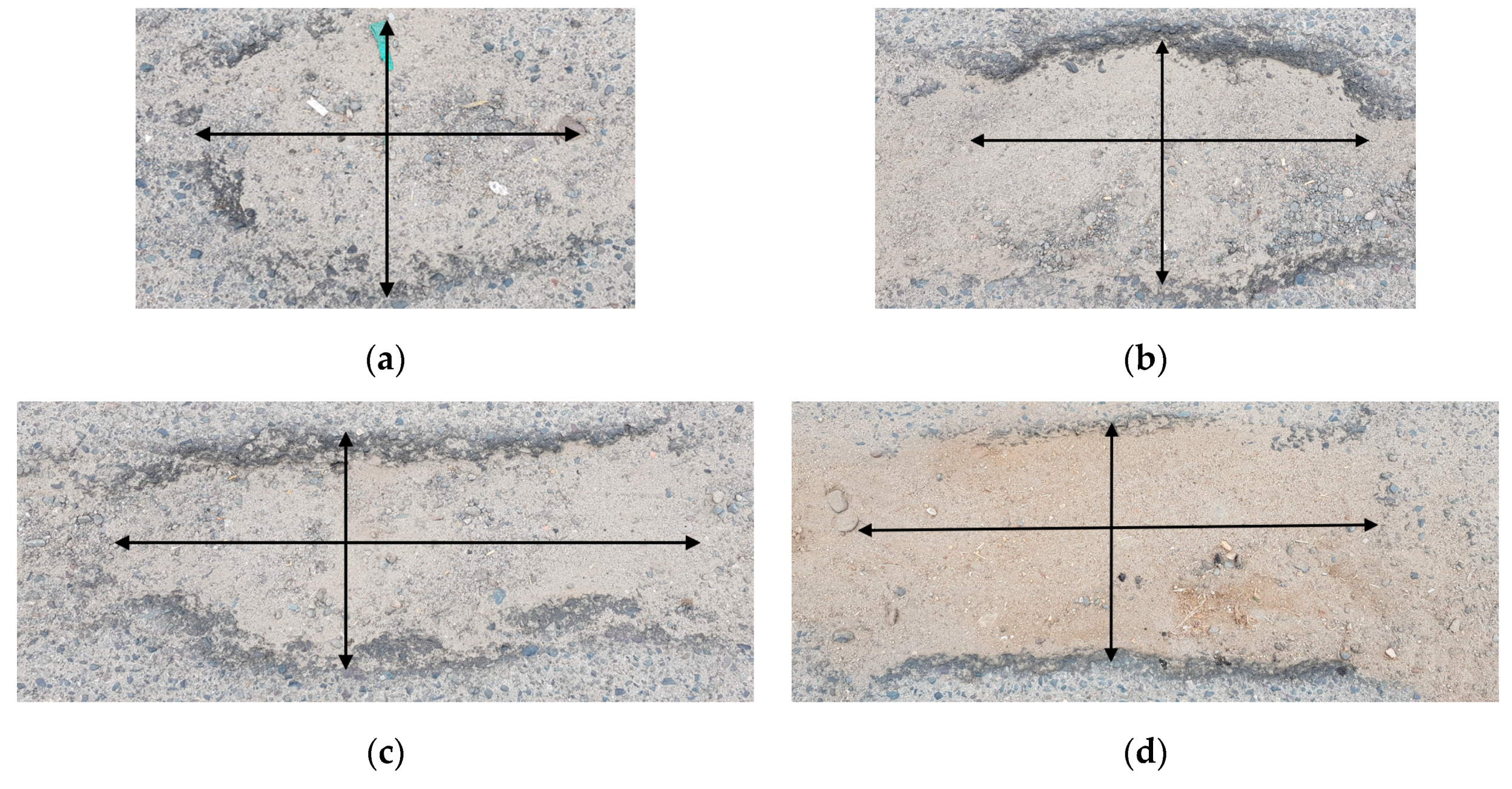

Figure 12 shows the potholes, with a horizontal arrow indicating the largest width and a vertical arrow indicating the smallest width.

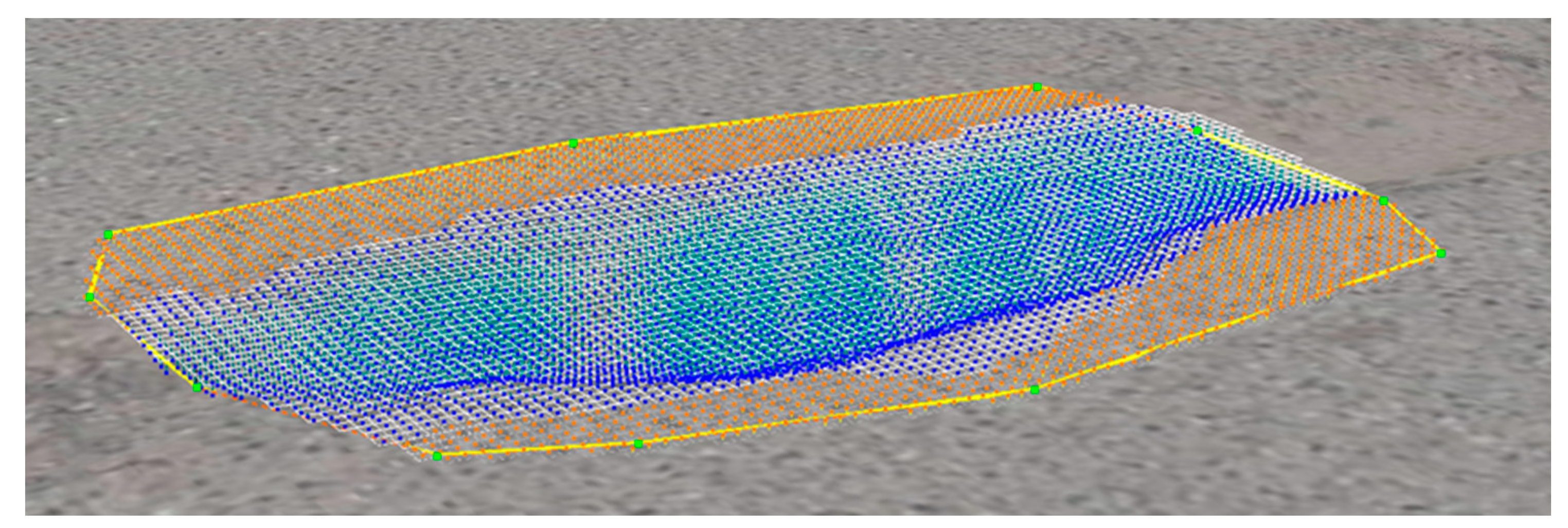

The first analysis was carried out at a height of 10 m, applying the recommendations detailed above. The results are shown in Table 7 and in Figure 13, where the reconstruction of a pothole from the case study can be observed.

The second analysis was carried out at a height of 15 m; the results are shown in Table 8.

It is important to note that the potholes were marked during case study planning so that the measured points could be clearly identified in the field, minimizing the measurement error of the evaluator.

For the analysis at 10 m, the measurements of the height and width were obtained with high accuracy compared to the other measurement variables, since the marks helped to determine these characteristics better. For the depth measurement, the error was greater, with the worst case being 9.9% higher than the value of the recommendation; this can be explained by the irregular shape of the pothole surface, i.e., the surface is not completely horizontal. Even so, in absolute terms the depth was obtained with high accuracy (error of less than 1 cm). The volume analysis, in turn, presented an error similar to the value of the recommendation; however, this error remains too high for volume to be considered a variable that can be measured with this method.

For the analysis at 15 m, the width measurement error was greater than the values obtained at 10 m, although for pothole (c) a difference of 0.4% was observed. This is probably explained by the variability of measurements in the field and the sensitivity of the study variable, for which an error of a millimeter in the measurement causes the percentage error to behave atypically or unexpectedly. In the measurement of depth and volume, the error behaved better than expected (according to the results of the study surface), which can be explained by the fact that these potholes have a large surface with respect to their depth; this helps to reconstruct the volume and, consequently, the depth.

As the above results demonstrate, the recommendations allow for a good measurement of the geometric characteristics of pavement potholes. The level of error is about one centimeter, which makes this a promising methodology for regular engineering practice.