A Hierarchical Control System for Autonomous Driving towards Urban Challenges

Abstract

1. Introduction

- (i)

- We successfully develop and implement a hierarchical control system for an autonomous vehicle platform. The vehicle can manipulate the whole mission autonomously within a proving ground.

- (ii)

- The motion planning with the decision-making mechanism using two-stage FSM for the SDCs is proposed. Besides, we solve an efficient LPP by using a nonlinear optimization technique and a real-time Hybrid A* algorithm.

- (iii)

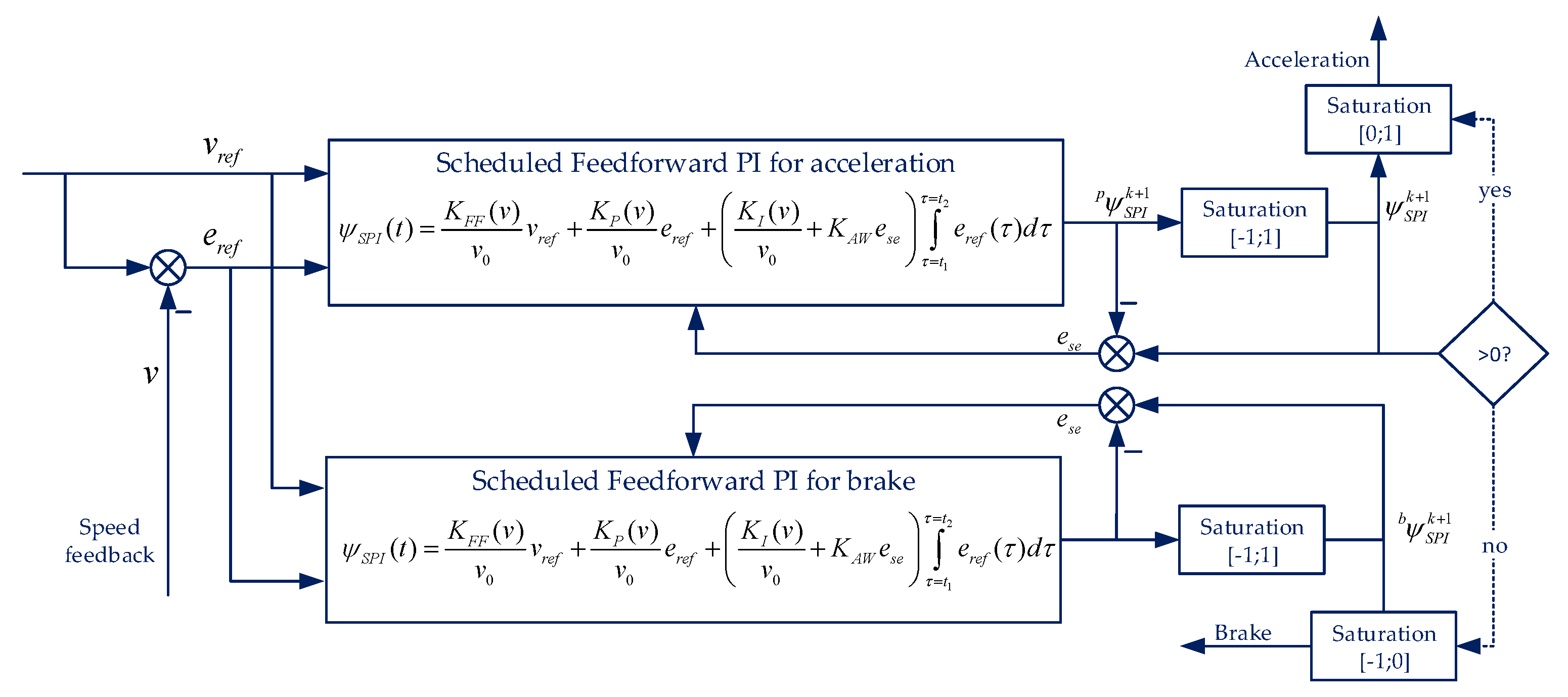

- The adaptive-pure pursuit algorithm for path tracking and a new SFF-PI architecture for longitudinal control are implemented successfully in urban environments.

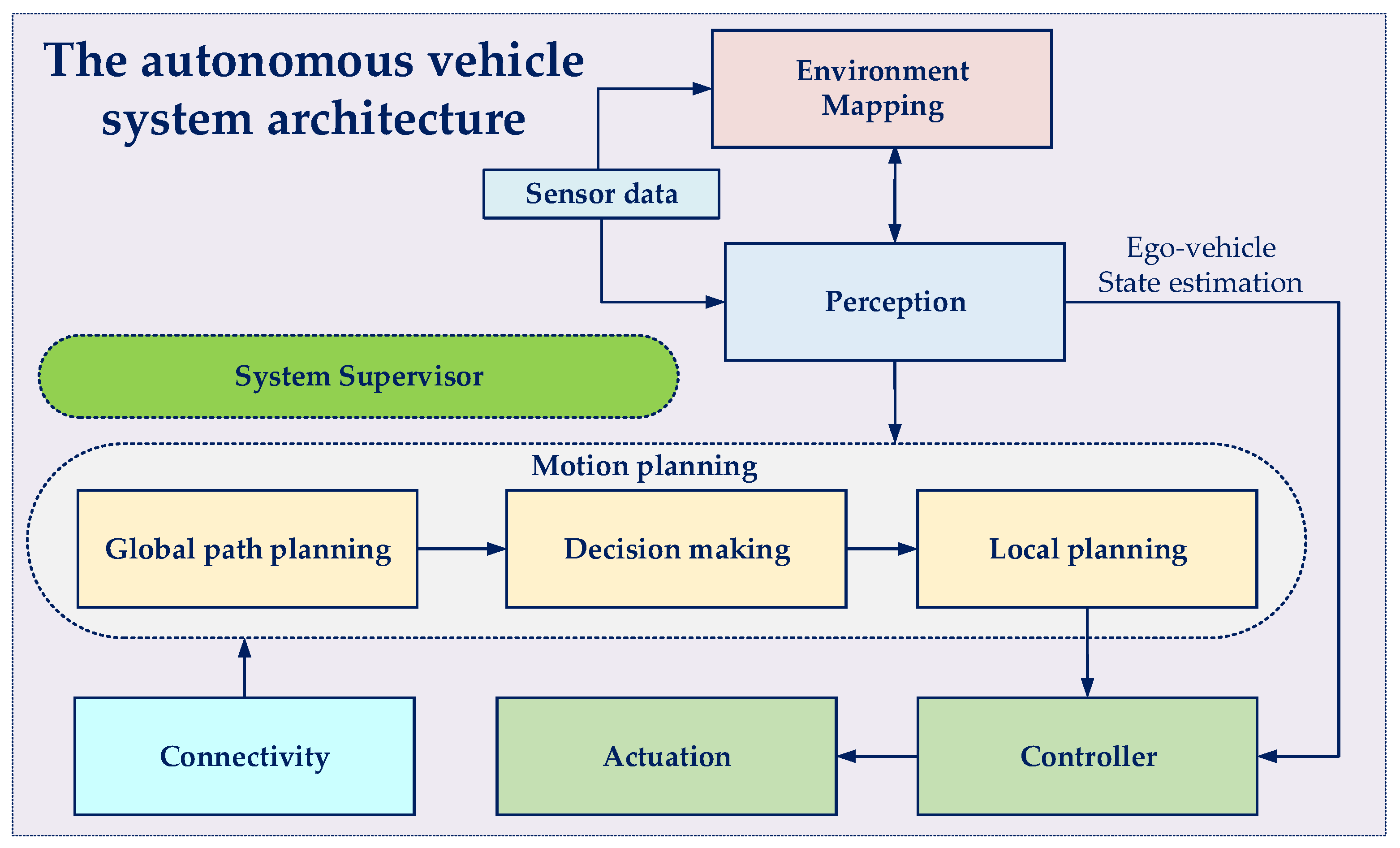

2. Hierarchical Control System

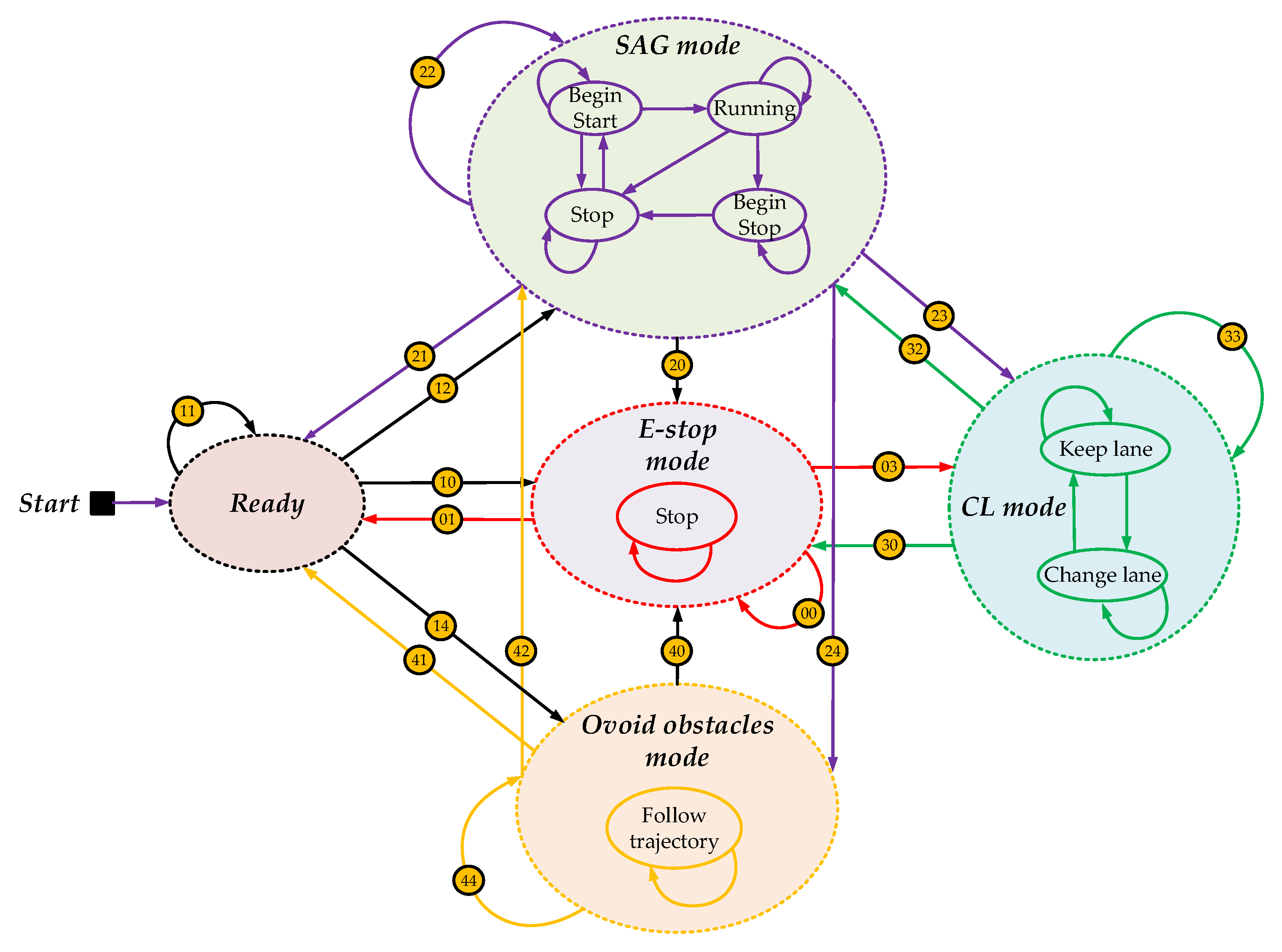

2.1. The Decision-Making Mechanism Based on Two-Stage FSM

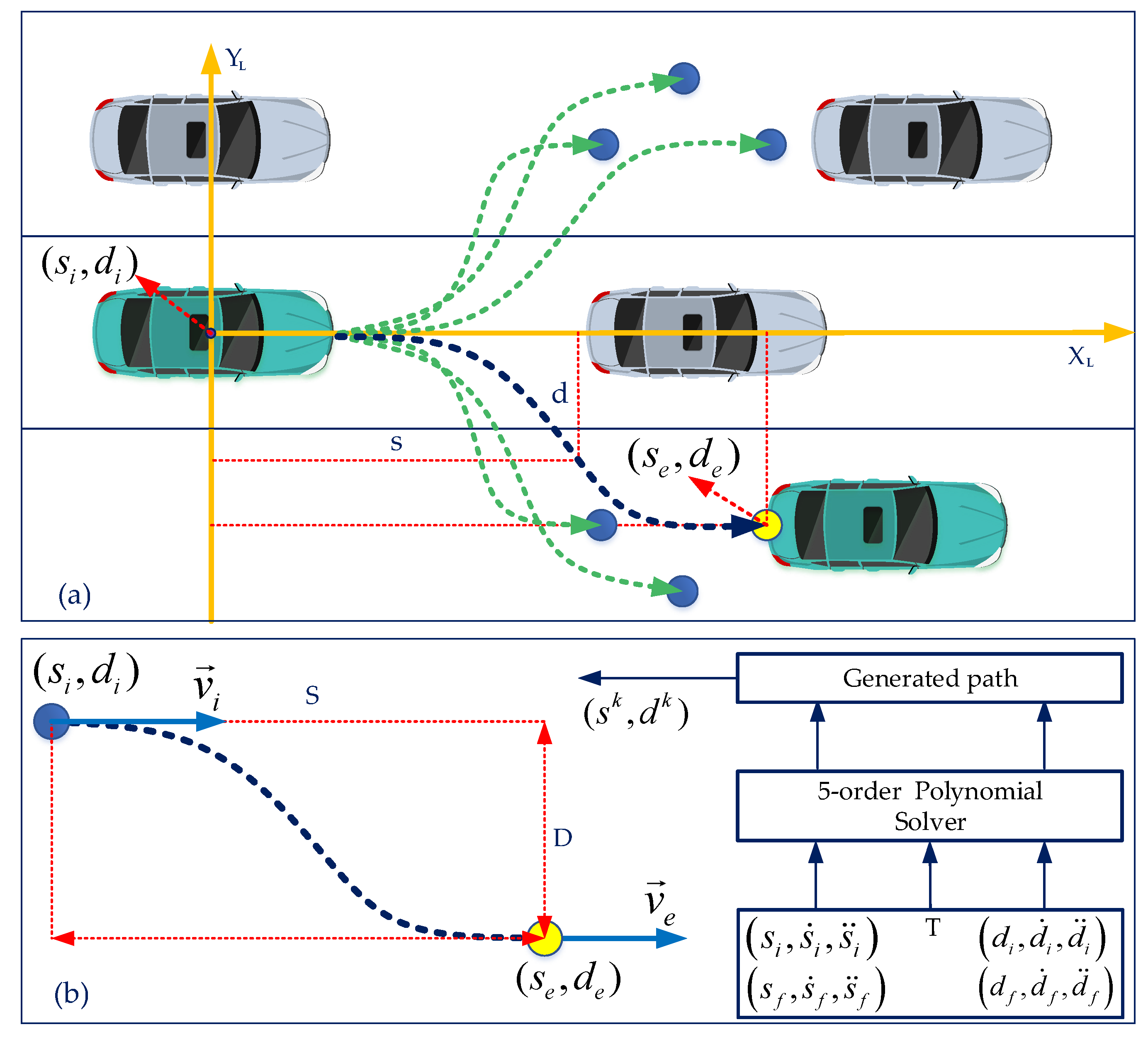

2.2. Local Path Planning

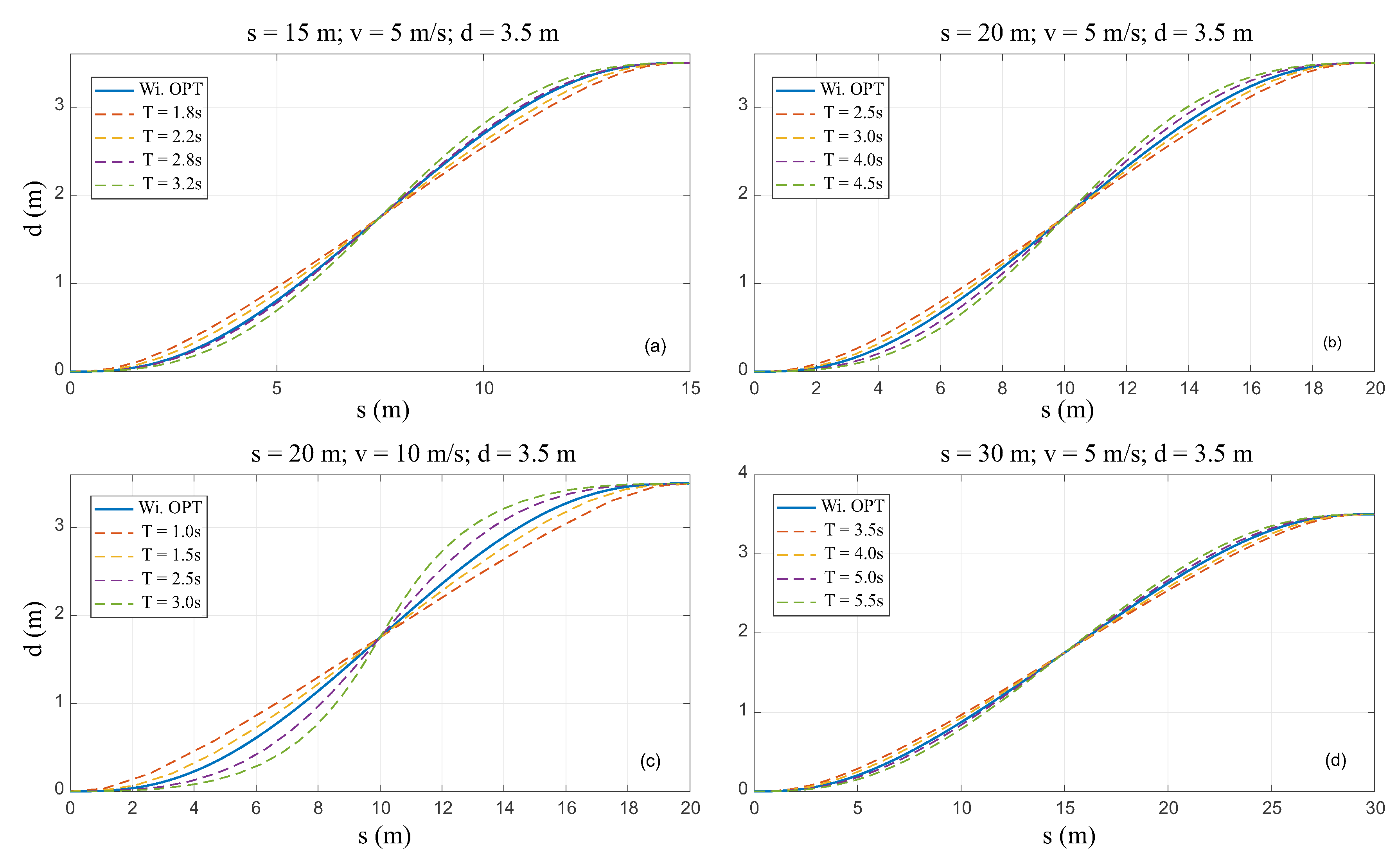

2.2.1. Optimization-Based LPP on the Local Frenet Coordinate

2.2.2. Obstacle Avoidance Based on Hybrid A* Algorithm

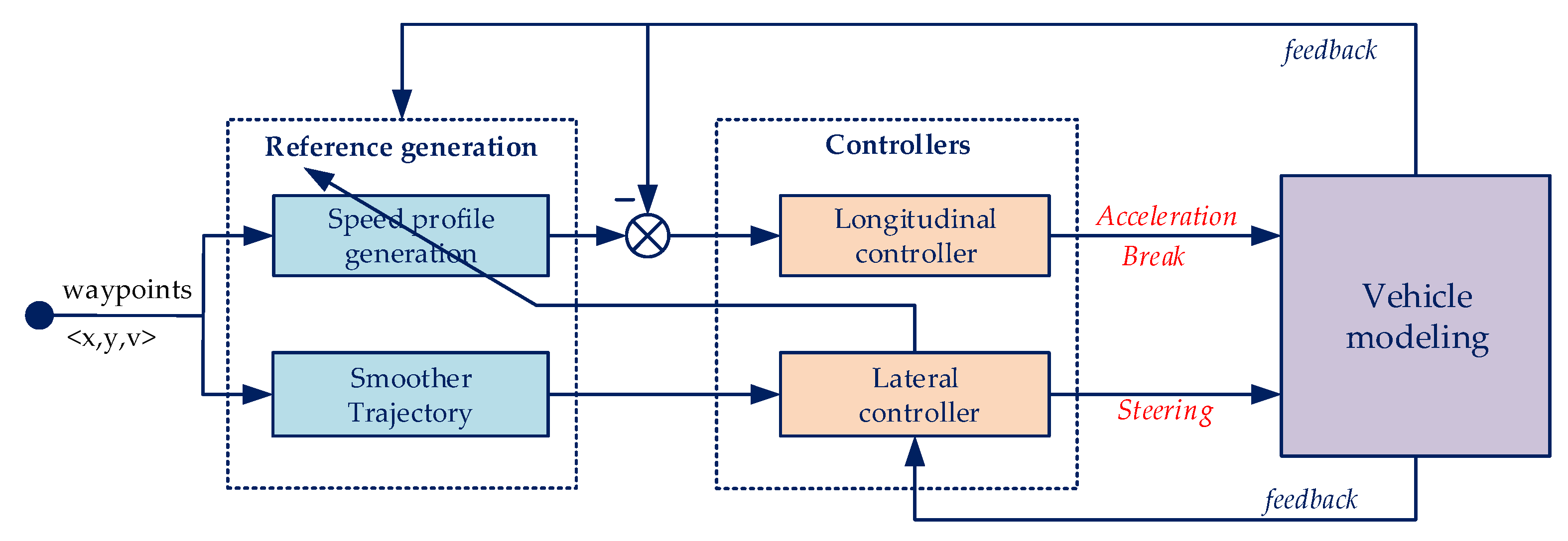

2.3. Vehicle Control Strategy

2.3.1. The Longitudinal Controller

Algorithm 1: Longitudinal control algorithm based on SFF-PI.
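The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of a generic feedforward-plus-PI speed loop, the family of controllers that the SFF-PI name points to. The class name `FeedforwardPI`, the gains, the normalized command range of [-1, 1], and the simple anti-windup rule are all assumptions made for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class FeedforwardPI:
    """Generic feedforward + PI speed controller (all gains are assumed values)."""
    kp: float = 0.5      # proportional gain (assumed)
    ki: float = 0.1      # integral gain (assumed)
    kff: float = 0.02    # feedforward gain on the target speed (assumed)
    u_min: float = -1.0  # normalized full brake (assumed convention)
    u_max: float = 1.0   # normalized full throttle (assumed convention)
    integral: float = 0.0

    def step(self, v_ref: float, v: float, dt: float) -> float:
        """Return a normalized throttle/brake command for one control period."""
        error = v_ref - v
        candidate = self.integral + error * dt
        u = self.kff * v_ref + self.kp * error + self.ki * candidate
        if self.u_min <= u <= self.u_max:
            # Commit the integral only while unsaturated (simple anti-windup).
            self.integral = candidate
            return u
        return max(self.u_min, min(self.u_max, u))


controller = FeedforwardPI()
command = controller.step(v_ref=10.0, v=10.0, dt=0.1)  # zero error: feedforward term only
```

With zero tracking error the command reduces to the feedforward term, which is the usual motivation for adding feedforward: the PI part only has to correct the residual error.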

2.3.2. Adaptive Pure Pursuit-Based Lateral Controller

Algorithm 2: The lateral controller and velocity planning algorithm based on PPC.
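As the steps of Algorithm 2 are not available in this text, the sketch below shows only the standard pure pursuit steering law, δ = atan(2L sin α / l_d), for a bicycle model referenced at the rear axle. The speed-dependent look-ahead in `lookahead_distance` is one common way of making pure pursuit adaptive; its gain and bounds are assumed values, and the paper's own adaptation rule may differ.

```python
import math


def lookahead_distance(speed, gain=0.5, minimum=3.0, maximum=15.0):
    """Speed-scaled look-ahead distance in metres (gain and bounds are assumed)."""
    return max(minimum, min(maximum, gain * speed))


def pure_pursuit_steering(pose, target, wheelbase):
    """Steering angle (rad) that drives the rear axle along an arc through `target`.

    pose      -- (x, y, yaw) of the rear axle in the map frame
    target    -- (x, y) of the look-ahead waypoint in the map frame
    wheelbase -- distance between the axles in metres
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    distance = math.hypot(dx, dy)
    if distance < 1e-6:
        return 0.0  # target coincides with the vehicle: no steering demand
    alpha = math.atan2(dy, dx) - yaw  # bearing of the target relative to the heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), distance)


steering = pure_pursuit_steering((0.0, 0.0, 0.0), (5.0, 1.0), wheelbase=2.7)
```

A longer look-ahead at higher speed smooths the steering command at the cost of cutting corners, which is why the distance is bounded on both sides in this sketch.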

Algorithm 3: Find the closest waypoint to the ego-vehicle.
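A closest-waypoint search of the kind named in Algorithm 3 can be sketched as a linear scan over the reference trajectory. The function name, the `start` and `window` parameters (used to restrict the scan to waypoints at or after the previous match), and the use of planar Euclidean distance are assumptions for illustration rather than the paper's exact procedure.

```python
import math


def closest_waypoint_index(waypoints, position, start=0, window=None):
    """Index of the waypoint nearest to `position`, scanning from `start`.

    waypoints -- sequence of (x, y) points along the reference trajectory
    position  -- (x, y) of the ego-vehicle
    window    -- optional number of waypoints to scan (None scans to the end)
    """
    if not waypoints:
        raise ValueError("reference trajectory is empty")
    end = len(waypoints) if window is None else min(len(waypoints), start + window)
    best_index, best_distance = start, float("inf")
    for i in range(start, end):
        d = math.hypot(waypoints[i][0] - position[0], waypoints[i][1] - position[1])
        if d < best_distance:
            best_index, best_distance = i, d
    return best_index


path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
nearest = closest_waypoint_index(path, (1.9, 0.5))
```

Starting the scan at the previously matched index keeps the search from jumping backwards where the trajectory passes close to itself.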

Algorithm 4: Find the ahead waypoint in the reference trajectory.
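One plausible reading of Algorithm 4 is to walk forward from the closest waypoint until the accumulated path length reaches the look-ahead distance. The sketch below follows that reading; the function name, and the choice of arc length rather than straight-line distance, are assumptions and not details confirmed by the paper.

```python
import math


def ahead_waypoint_index(waypoints, closest_index, lookahead):
    """Index of the first waypoint at least `lookahead` metres along the path.

    Distance is accumulated segment by segment from `closest_index`; the last
    waypoint is returned when the remaining path is shorter than `lookahead`.
    """
    travelled = 0.0
    for i in range(closest_index, len(waypoints) - 1):
        if travelled >= lookahead:
            return i
        travelled += math.hypot(
            waypoints[i + 1][0] - waypoints[i][0],
            waypoints[i + 1][1] - waypoints[i][1],
        )
    return len(waypoints) - 1


path = [(float(k), 0.0) for k in range(6)]  # waypoints spaced 1 m apart
target_index = ahead_waypoint_index(path, closest_index=1, lookahead=2.5)
```

Falling back to the final waypoint near the end of the trajectory keeps the tracker well defined until the vehicle stops.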

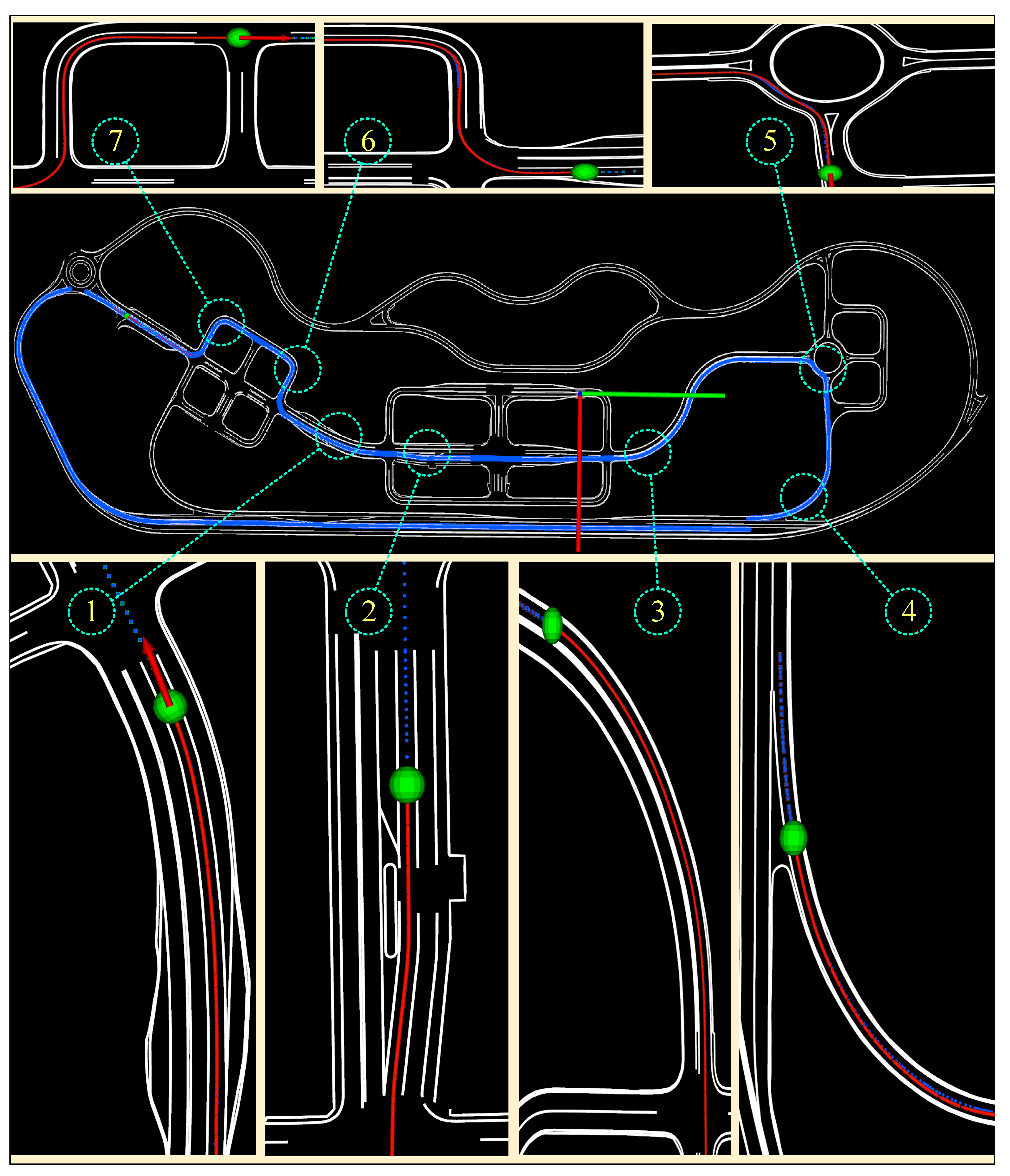

3. Experimental Results

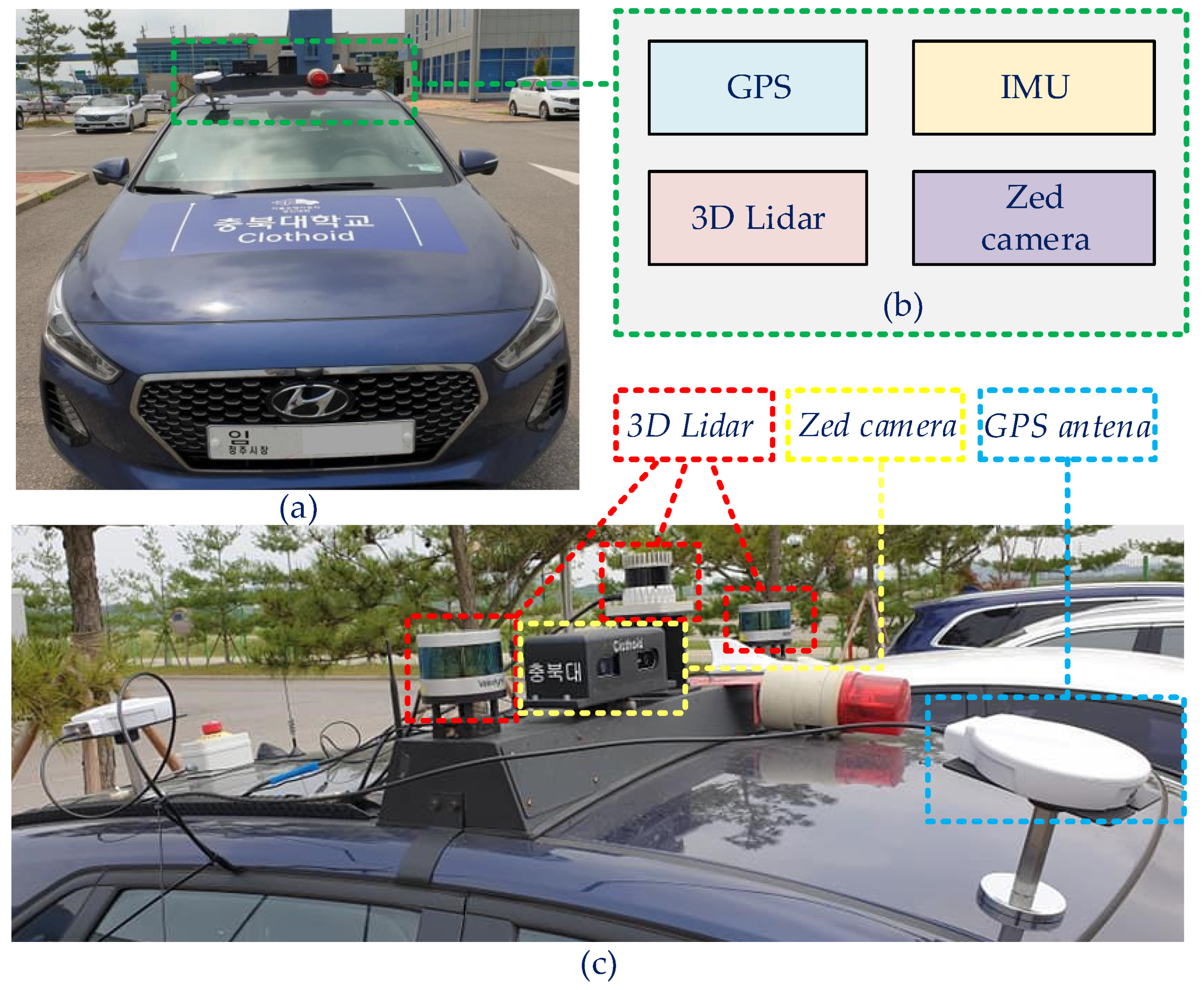

3.1. The System Integration of Autonomous Vehicle

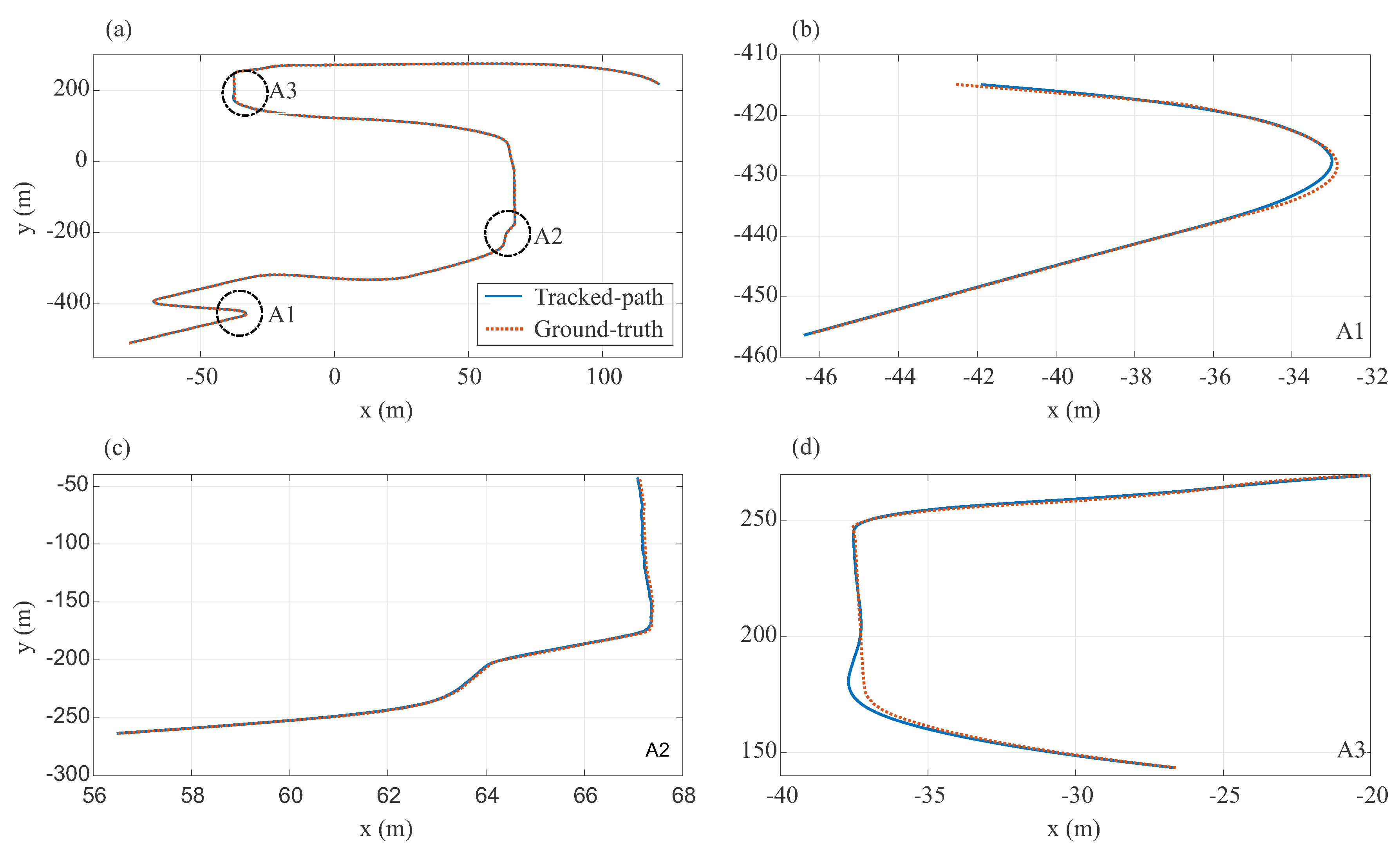

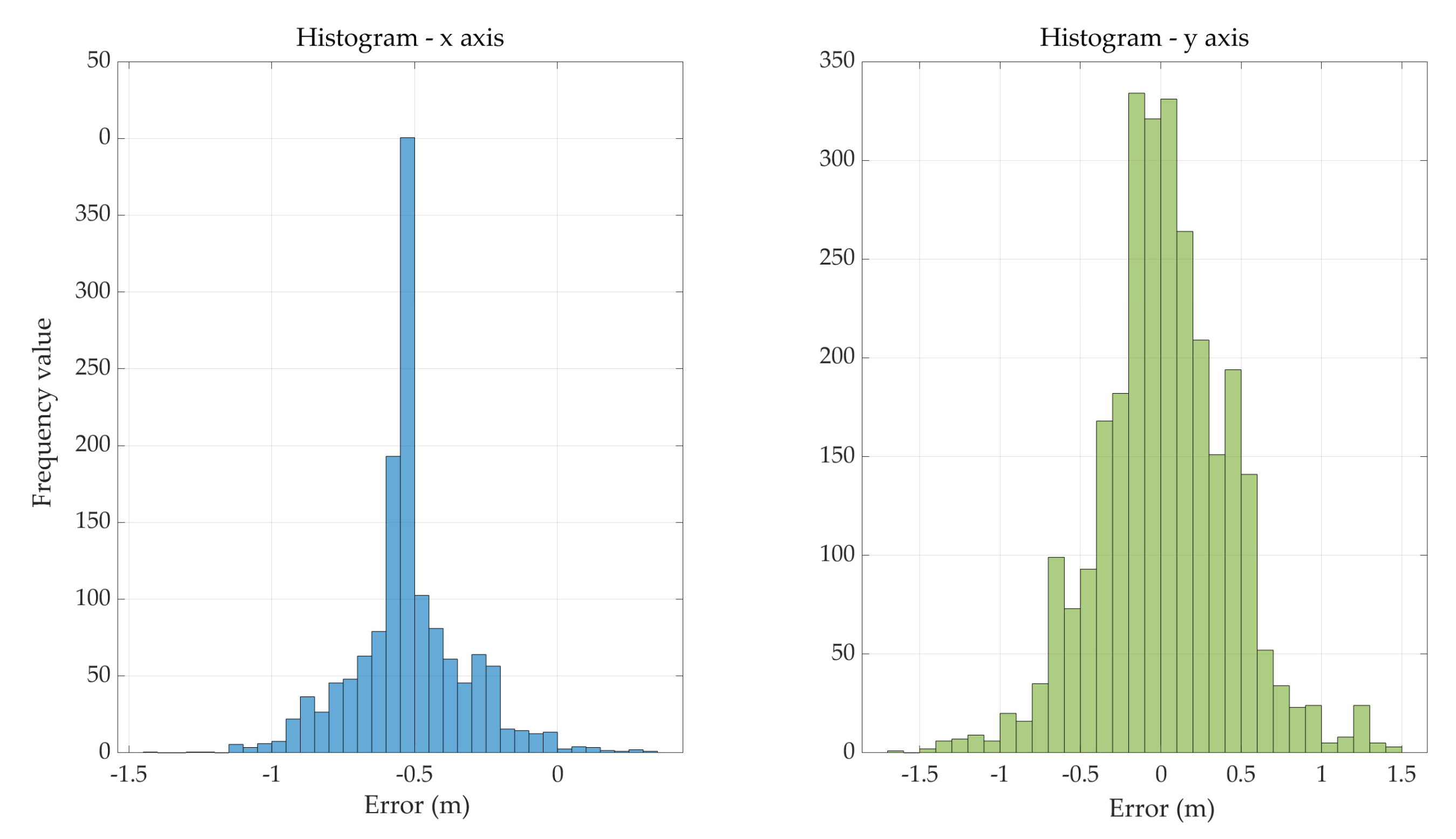

3.2. The Robustness of the Path Tracking Controller

3.3. Velocity Tracking Based on SFF-PI Controller

3.4. The Local Path Planning

3.4.1. The Local Path Generation

3.4.2. The Local Path with Hybrid A*

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A.

Appendix A.1. Coordinate Transformation

Appendix A.2. Smoother Trajectory

| Condition | Description |
|---|---|
| 10, 20, 30, 40 | Perception reports an emergency |
| 00 | Remains in emergency mode |
| 11 | All ROS nodes are healthy, and the vehicle is ready to go |
| 01 | No danger remains; wake up to the ready state |
| 41 | The obstacle-avoidance mission is incomplete, and its time limit has expired |
| 12, 22 | Standard scenario |
| 32, 42 | The lane-changing and obstacle-avoidance missions, respectively, are completed |
| 33 | Operating in lane-changing mode |
| 23 | A path change is requested |
| 03 | Wake up to lane-changing mode once the emergency has cleared |
| 44 | Carrying out the obstacle-avoidance mission |
| 14, 24 | An obstacle must be avoided |
| 21 | SAG mode is finished; waiting for a new mission |
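The condition codes in the table above read naturally as a source digit followed by a destination digit, which suggests a simple lookup of permitted transitions. The sketch below encodes that reading. The mode names are inferred from the row descriptions (for example, `21` is described as leaving SAG mode for the ready state), and the flat lookup does not attempt to reproduce the two-stage structure of the FSM, so it should be taken as an interpretation rather than the authors' implementation.

```python
from enum import IntEnum


class Mode(IntEnum):
    """Vehicle modes; the names are inferred from the table descriptions."""
    EMERGENCY = 0
    READY = 1
    SAG = 2
    LANE_CHANGE = 3
    AVOID_OBSTACLE = 4


# Every condition code listed in the table, read as "<source><destination>".
ALLOWED_CODES = {
    "10", "20", "30", "40", "00", "11", "01", "41", "12", "22",
    "32", "42", "33", "23", "03", "44", "14", "24", "21",
}


def decode(code):
    """Split a two-digit condition code into (source, destination) modes."""
    return Mode(int(code[0])), Mode(int(code[1]))


def is_allowed(source, destination):
    """True if the table lists a condition for this transition."""
    return f"{int(source)}{int(destination)}" in ALLOWED_CODES


source, destination = decode("41")  # obstacle avoidance timed out
can_switch = is_allowed(Mode.SAG, Mode.LANE_CHANGE)
```

Under this reading, the table lists no direct transition between lane changing and obstacle avoidance (codes `34` and `43` are absent), so `is_allowed` returns `False` for both.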

| Whole Trajectory (m) | A1 Area (m) | A2 Area (m) | A3 Area (m) |
|---|---|---|---|
| 0.0037 | 0.0128 | 0.0029 | 0.0201 |
| 0.0078 | 0.0162 | 0.0232 | 0.0227 |
| 0.0057 | 0.0145 | 0.0130 | 0.0214 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Van, N.D.; Sualeh, M.; Kim, D.; Kim, G.-W. A Hierarchical Control System for Autonomous Driving towards Urban Challenges. Appl. Sci. 2020, 10, 3543. https://doi.org/10.3390/app10103543
