1. Introduction

Consumers have shifted from simply buying products to consuming experiences of improved cultural quality and value [1,2,3]. At the same time, companies have adapted to reach consumers with diverse cultural tastes, creating large complex spaces that combine commercial and cultural areas, such as libraries and music halls. However, as these spaces have expanded in content and scale, the distance and the complexity of visitor circulation within them have increased. Consequently, even in a complex cultural space, visitors must recognize spatial information from signs and maps as they traverse paths. Although designers have developed and provided various forms of signs to help visitors recognize spatial information, our understanding of how visitors perceive this information and use it for wayfinding remains limited.

Data on spatial cognitive processes may be collected through questionnaires and interviews. However, collecting sensory physiological signal data on the direct visual perception process requires supplementary scientific experimental methods. Questionnaires and interviews are limited because they capture subjective opinions about the spatial experience, and participants can consciously change their answers. Measurement based on physiological signals can compensate for these limitations by directly capturing sensory signals that are generated unconsciously [4,5].

Human visual information can be measured using eye-tracking equipment [6,7]. To collect information about the direct visual perception process, we conducted experiments with a virtual reality (VR)-based head-mounted display (HMD) system. VR-based eye-tracking equipment allows participants to experience spaces without being physically present in them [8]. Experiencing a virtual space as if it were real through an HMD increases immersion in the space and makes it possible to quantify the user’s gaze data. A real environment, by contrast, imposes various constraints: it cannot be held constant because of crowding and other external environmental factors, which introduces many uncontrolled variables into data extraction. In a laboratory using a VR-based HMD, these variables can be controlled while immersion in the space is preserved.

Although VR is expected to keep spreading and its market to keep expanding, designing and evaluating spaces through VR remains at an early stage. This study contributes to the field by proposing an experimental research method that applies the VR environment to spatial design through visual perception. VR equipment was used to capture how human visual information is converted into sensory cognition, and visual search was monitored through eye-tracking experiments with the VR equipment. In addition, we propose a data analysis that tracks unconscious spatial search according to the time required for cognitive processing to occur. This is significant as a method for applying developing VR technology, grounded in human sensory information, to architectural space planning.

3. Materials and Methods

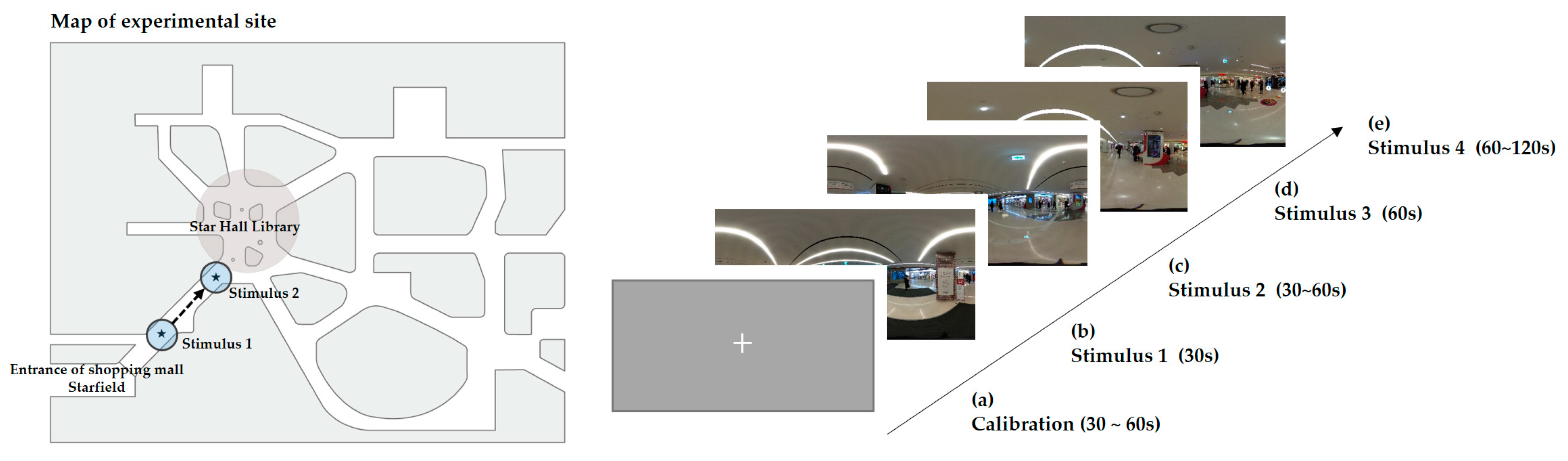

3.1. Visual Stimuli

A complex space connected to a subway station was selected as the experimental setting. The visual stimuli were taken in COEX Mall, Seoul, along paths starting at the entrance of Starfield and passing through the Star Hall Library to the COEX Exhibition Hall. The stimuli were presented to participants in an immersive VR space as a way of recognizing the paths. The experimental stages were as follows. First, to create the visual stimuli, a 360-degree camera was installed on the path from the entrance to the crossroads at the Star Hall Library. Several images were recorded, and the visual stimuli were selected with consideration of congestion, sign location, and visibility. The preliminary investigation found that the sign, the path, and the surrounding spaces were used to identify the destination at the crossroads of the experimental space, and that the destination name on the sign and the direction of its arrow were confusing factors when selecting a path.

Second, a pretest with two participants confirmed the field of view and its visibility when the captured 360-degree images were displayed on the VR-based HMD device (SMI-HTC Vive). Third, based on an analysis of the issues the pretest participants encountered at each experimental step, a pre-explanation was added to improve participants’ understanding of the experiment. Fourth, in the main experiment (23 participants in total: ten males and 13 females), each participant wore an HMD headset and observed the visual stimuli while responding to an interview conducted by the experimenter.

A total of four visual stimuli were presented in a fixed order, each under a time limit. The perception processes of clarity and cognition for the areas of interest in this study were analyzed using one of these stimuli. In the visual stimulus shown in Figure 1a, the participant observed the environment for 30 s at the entrance of the experimental space. The experimenter explained the purpose of the experiment and the VR environment and asked the participant to recognize the visual information on the sign first. The next stimulus, shown in Figure 1b,c, allowed the first sign to be observed closely, as it would be seen while moving toward the crossroads. Here, participants could observe the guide signs ahead for 30 to 60 s, allowing them to understand and adapt before the next stimulus. The next stimulus, in Figure 1d, was taken at the first crossroads toward the exhibition hall; participants observed the spatial information on the signboard for one minute, recognized the space, and then selected the next route to the exhibition hall. Finally, while observing the last visual stimulus in Figure 1e, participants chose an appropriate path among the numbered crossroads and answered the spatial perception items in the questionnaire interview. The data extracted from this last visual stimulus were analyzed by areas of interest (AOIs) for clarity and recognition.

3.2. Experiment Procedure and Participants

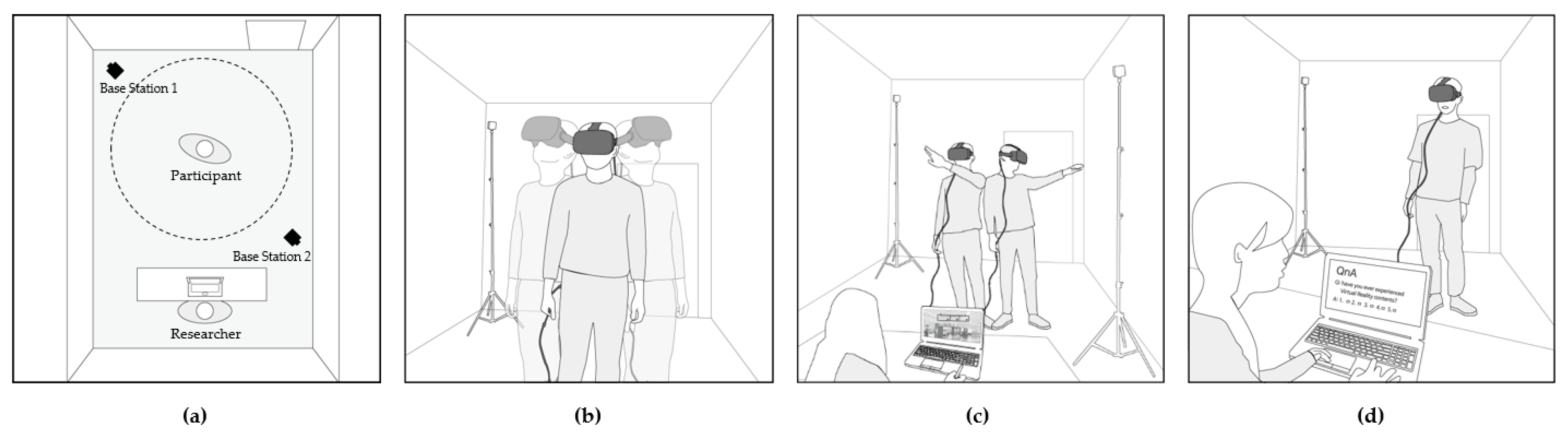

The VR experiment was conducted over two days from 10 January 2019 to 11 January 2019, and the participants were 24 undergraduate or graduate students. All participants had a visual acuity or corrected visual acuity score of 0.5 or higher, with an average age of 24.8 years (standard deviation: ±1.89).

A preliminary experiment was conducted to verify the experimental setting. In it, participants followed simple instructions after focus adjustment, which allowed them to adapt to the immersive VR environment before the main experiment (see Figure 2). The participants observed the visual stimuli freely for 60 s and, as in the preliminary experiment, explored the virtual space by turning their heads and bodies within the range allowed by the HMD equipment. The experimenter ended the preliminary experiment after monitoring each participant’s behavior in real time. Participants then entered the main experiment, which took each participant a different amount of time: a minimum of four minutes and a maximum of five minutes and 40 s. Based on the validity rate of data collection, the gaze data of 11 participants were finally selected and analyzed.

The initial data for the visual stimulus were reviewed, and participants whose validity rate was below 85% were excluded. Checking the validity rate of the gaze data recorded in real time in this way increased the accuracy and the reliability of the experimental data. Data from 11 of the 24 participants (45.83%) met this criterion and were selected. The validity rate of the initial data was 69.2%, and restricting the sample to the 11 participants with validity rates above 85% raised it to 92.9%. The extracted data were then analyzed on this validated basis.
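The exclusion rule above can be sketched as a simple threshold filter. This is a minimal illustration only: the participant IDs and per-participant validity rates below are hypothetical (the study does not report individual rates), and the helper names `select_valid_participants` and `mean_rate` are invented for the example.

```python
# Minimal sketch of the 85% validity filter; all IDs and rates are hypothetical.
VALIDITY_THRESHOLD = 0.85  # participants below this validity rate are excluded


def select_valid_participants(validity_by_id, threshold=VALIDITY_THRESHOLD):
    """Keep participants whose validity rate is at least `threshold`."""
    return {pid: rate for pid, rate in validity_by_id.items() if rate >= threshold}


def mean_rate(rates):
    """Arithmetic mean of a collection of validity rates."""
    rates = list(rates)
    return sum(rates) / len(rates)


rates = {"P01": 0.91, "P02": 0.62, "P03": 0.88, "P04": 0.47}
kept = select_valid_participants(rates)
print(sorted(kept))                         # ['P01', 'P03']
print(round(mean_rate(rates.values()), 3))  # 0.72
print(round(mean_rate(kept.values()), 3))   # 0.895
print(round(11 / 24 * 100, 2))              # 45.83 (share of retained participants)
```

As in the study, filtering raises the mean validity of the retained sample at the cost of a smaller sample.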

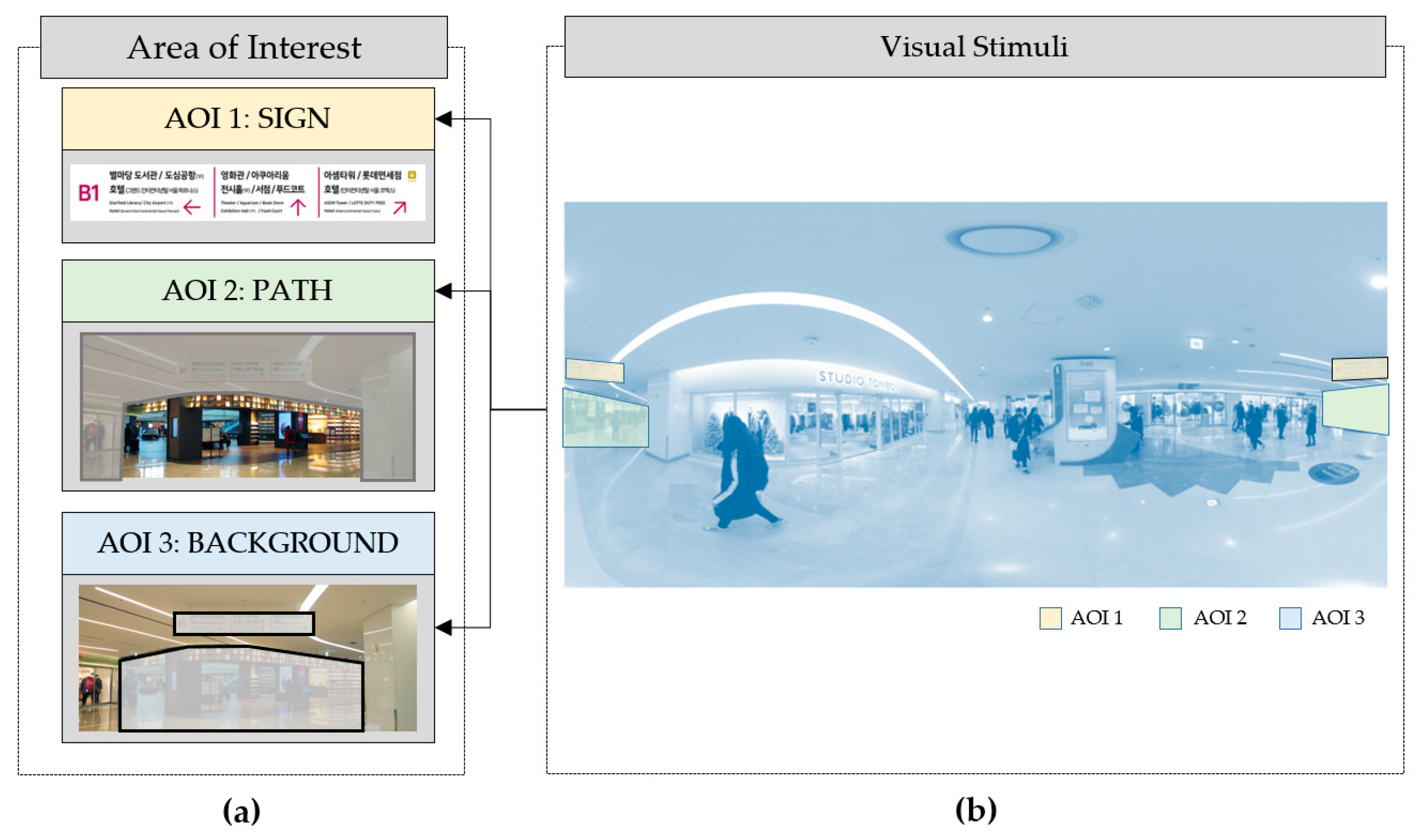

The visual stimuli used in the experiment were 360-degree photographic images, and participants took part by observing a fixed virtual space image. AOI analysis examines the gaze fixations that fall within designated areas toward which participants’ gazes are directed. The SMI BeGaze 3.6 program was used to set the AOIs and extract the data. With it, the experimenter can designate a specific region of interest within the entire spatial image and extract the gaze movements generated within that region. Changes in gaze can then be analyzed using quantitative gaze-movement data, which yields information on wayfinding by mapping participants’ cognitive processes of visual perception.
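The core of AOI analysis is assigning each gaze sample to the region that contains it. The sketch below assumes, hypothetically, rectangular AOIs in normalized image coordinates (0 to 1) and treats any sample outside "sign" and "path" as "background"; the actual AOIs were drawn in SMI BeGaze and their shapes and coordinates are not reproduced here. `RectAOI` and `classify_sample` are illustrative names, not BeGaze functions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RectAOI:
    """Axis-aligned rectangular AOI in normalized image coordinates (hypothetical)."""
    name: str
    u_min: float
    u_max: float
    v_min: float
    v_max: float

    def contains(self, u: float, v: float) -> bool:
        return self.u_min <= u <= self.u_max and self.v_min <= v <= self.v_max


# Hypothetical AOI rectangles; the study's real AOIs are not given in the text.
AOIS = [
    RectAOI("sign", 0.40, 0.60, 0.20, 0.35),
    RectAOI("path", 0.30, 0.70, 0.55, 0.95),
]


def classify_sample(u: float, v: float, aois=AOIS) -> str:
    """Return the first AOI containing the gaze sample, else 'background'."""
    for aoi in aois:
        if aoi.contains(u, v):
            return aoi.name
    return "background"


print(classify_sample(0.50, 0.25))  # sign
print(classify_sample(0.50, 0.80))  # path
print(classify_sample(0.10, 0.10))  # background
```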

The AOIs were defined as “path”, “sign”, and “background”. The “sign” served as a guide for obtaining spatial information (see Figure 3), from which participants could learn the directions within the space. The AOIs used in the analysis were identified from the data on participants looking for visual information and fixing their gazes accordingly in the VR images, in line with the experiment’s purpose statement. This allowed the researchers to characterize spatial perception according to the intelligibility and the recognition of the “sign” at the research site.

4. Results

4.1. Average Gaze Data for Understanding Spatial Information

A time-series analysis was performed to examine how spatial information was recognized. To analyze the visual information process, the viewing time was divided into six sequences of ten seconds each. To extract gaze information for the AOI set (“path”, “sign”, and “background”) of the visual stimuli, the number of gaze fixations in each sequence was counted, identifying which AOIs participants observed and what information they acquired over time.
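The binning step can be sketched as follows: each fixation is assigned to one of the six ten-second sequences and counted per AOI. This assumes, as a simplification, that a fixation is binned by its onset time; the fixation list and the helper names are hypothetical.

```python
from collections import Counter

SEQUENCE_LENGTH_S = 10.0
N_SEQUENCES = 6  # 60 s of viewing split into six 10-s time sequences


def sequence_index(onset_s: float) -> int:
    """Map a fixation onset time (s) to Time Sequence 1..6."""
    if not 0 <= onset_s < SEQUENCE_LENGTH_S * N_SEQUENCES:
        raise ValueError(f"onset {onset_s} s is outside the 60 s window")
    return int(onset_s // SEQUENCE_LENGTH_S) + 1


def count_by_sequence(fixations):
    """fixations: iterable of (onset_s, aoi). Returns {sequence: Counter(aoi -> count)}."""
    counts = {seq: Counter() for seq in range(1, N_SEQUENCES + 1)}
    for onset_s, aoi in fixations:
        counts[sequence_index(onset_s)][aoi] += 1
    return counts


# Hypothetical fixations for one participant.
fixations = [(1.2, "sign"), (4.8, "sign"), (9.9, "path"), (12.0, "background"), (25.5, "path")]
counts = count_by_sequence(fixations)
print(counts[1])  # Counter({'sign': 2, 'path': 1})
print(counts[3])  # Counter({'path': 1})
```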

In the interview at the final stage of the experiment, participants rated the clarity and the recognition of the crossroads selection process on a five-point scale (“very difficult”, “difficult”, “normal”, “easy”, and “very easy”) and were divided into two groups according to their answers. Participants who selected “very difficult”, “difficult”, or “normal” were grouped as “difficult”, and those who selected “easy” or “very easy” were grouped as “easy”. Dividing participants by their level of spatial information recognition allowed the researchers to relate each group’s cognitive understanding of spatial information to its time-series pattern of gaze movement.
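The grouping rule is a fixed mapping from the five scale points to two groups, with "normal" counted as "difficult". A minimal sketch, with hypothetical participant responses and an illustrative `assign_group` helper:

```python
# Grouping of the five-point responses as described above ("normal" -> "difficult").
DIFFICULT_RESPONSES = {"very difficult", "difficult", "normal"}
EASY_RESPONSES = {"easy", "very easy"}


def assign_group(response: str) -> str:
    """Map one interview response to the 'easy' or 'difficult' group."""
    answer = response.strip().lower()
    if answer in EASY_RESPONSES:
        return "easy"
    if answer in DIFFICULT_RESPONSES:
        return "difficult"
    raise ValueError(f"unexpected response: {response!r}")


# Hypothetical responses keyed by participant ID.
responses = {"P01": "Easy", "P03": "normal", "P07": "very easy"}
groups = {pid: assign_group(r) for pid, r in responses.items()}
print(groups)  # {'P01': 'easy', 'P03': 'difficult', 'P07': 'easy'}
```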

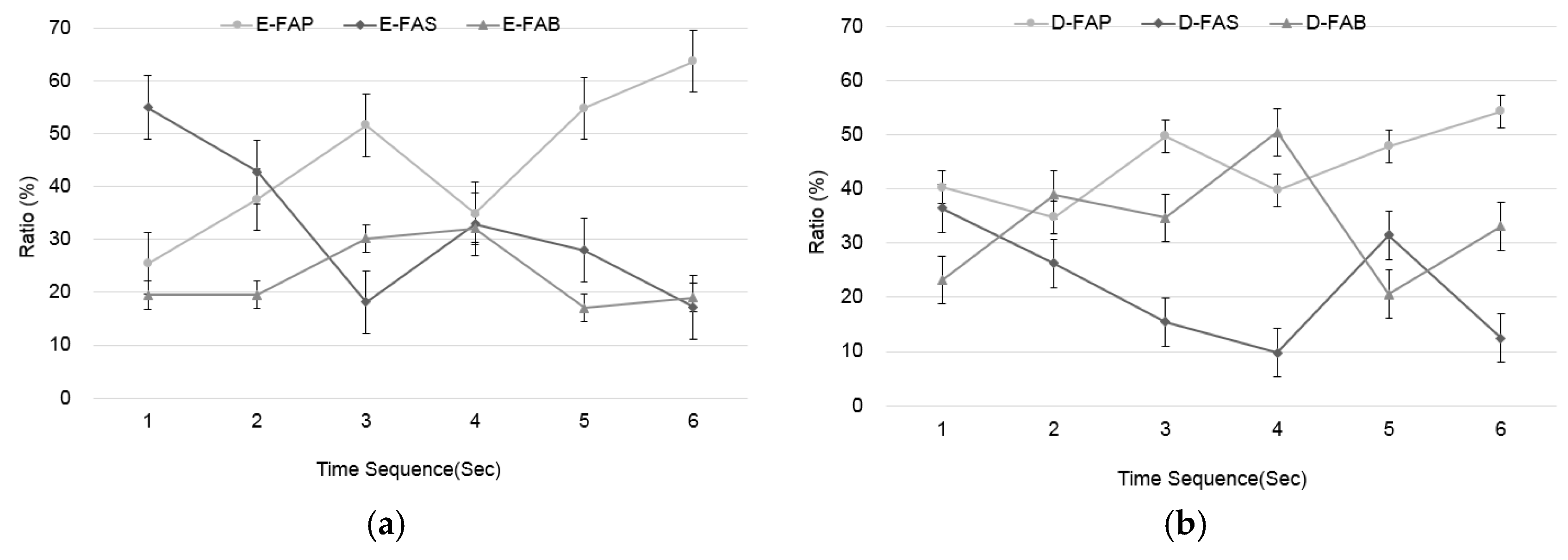

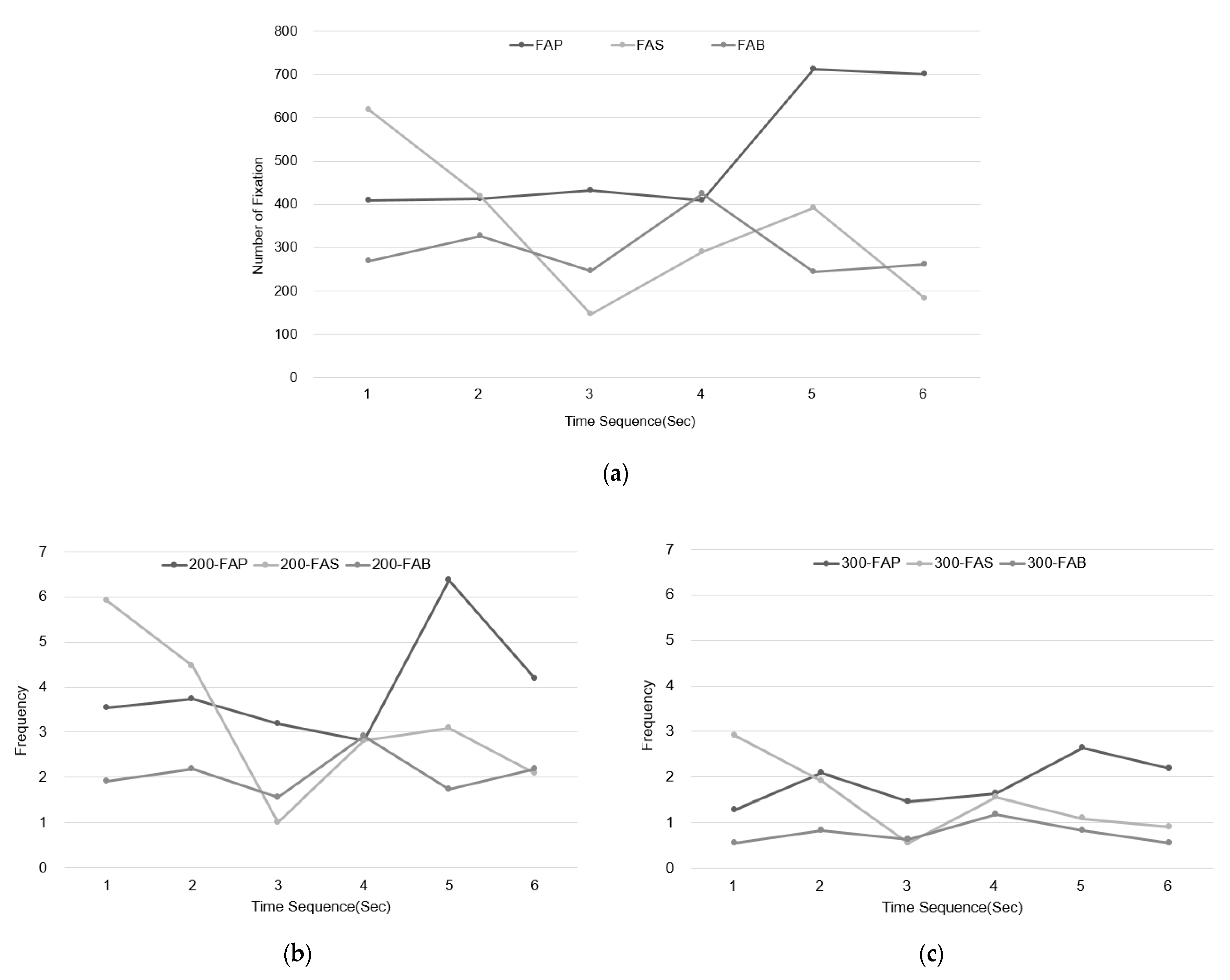

To analyze the spatial information, gaze movement was studied over time to determine the average fixation number (see Figure 4) and the ratio of each AOI (see Figure 5). Gaze data rose and fell over time differently for the two groups (“easy” and “difficult”), which were divided according to their reported spatial understanding. Using the averages and ratios extracted from the AOIs, these temporal increases and decreases in gaze could be related to the groups’ understanding of the space and their cognitive processes.

Figure 4 shows the gaze fixation data in the AOIs for the two groups according to their reported understanding of the space. We abbreviate the “easy” group’s fixations on the “sign” AOI as E-FAS, the “difficult” group’s fixations on the “sign” AOI as D-FAS, the “easy” group’s fixations on the “path” AOI as E-FAP, and the “difficult” group’s fixations on the “path” AOI as D-FAP. In Time Sequence 1 (the first ten seconds), D-FAP was 3.5 times higher than E-FAP. From that point, D-FAP gradually decreased throughout the experimental period, whereas E-FAP gradually increased (see Figure 4a). For the “sign” AOI, both E-FAS and D-FAS initially showed a high average fixation number, which then gradually decreased (see Figure 4b).

Figure 5 shows the gaze fixation ratio for each AOI over the experimental period for the “easy” and the “difficult” groups. In the “easy” group, the gaze concentration ratio for the “sign” (55%) in the first section of the experiment (0 ~ 10 s) was more than twice that for the “path” (25%) and nearly three times that for the “background” (19%). In the third section (20 ~ 30 s), the middle of the experiment, the “easy” group focused on the “path” rather than the “sign”, in the order of “path” (52%), “background” (30%), and “sign” (18%), unlike at the start of the experiment. Thereafter, their attention remained on the “path” rather than the other AOIs, indicating that their gaze was fixed in accordance with the purpose of the experiment (see Figure 5a). In the first section (0 ~ 10 s), the “difficult” group’s gaze fixation was in the order of “path” (40%), “sign” (36%), and “background” (23%), with smaller differences among the AOIs. In the second section (10 ~ 20 s), gaze fixation was higher for “path” (35%) and “background” (39%) than for “sign” (26%). In the middle of the experiment, the ratio for “path” was high (50%). In the final section, a substantial share of gaze fixation (45% in total) still fell on AOIs other than the wayfinding direction: “path” (54%), “sign” (33%), and “background” (12%) (see Figure 5b).
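The ratios reported for Figure 5 are shares of fixations per AOI within a time section. The sketch below shows that conversion on hypothetical pooled counts chosen for round numbers (they are not the study's data, so the background share comes out as 20% rather than the reported 19%). Whether the study pooled counts across participants or averaged per-participant shares is not stated; pooling is an assumption here.

```python
def aoi_shares(counts: dict) -> dict:
    """Convert AOI fixation counts for one time section into percentage shares."""
    total = sum(counts.values())
    if total == 0:
        return {aoi: 0.0 for aoi in counts}
    return {aoi: 100.0 * n / total for aoi, n in counts.items()}


# Hypothetical pooled counts for one group in one 10-s section.
section1_counts = {"sign": 110, "path": 50, "background": 40}
shares = aoi_shares(section1_counts)
print({aoi: round(p) for aoi, p in shares.items()})  # {'sign': 55, 'path': 25, 'background': 20}
```

Expressing counts as shares is what lets the two groups be compared even though, as Figure 4 shows, their absolute fixation numbers differ.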

In the interview at the final stage of the experiment, participants were grouped by the difficulty they experienced in perceiving the space, and their extracted gaze fixation data were analyzed. The two groups differed in their gaze fixation ratios, reflecting differences in their understanding of the spatial information, and their cognitive processing could be traced across the time intervals of the experiment. The “easy” group understood the spatial information by perceiving the “sign”, after which their gaze settled on wayfinding through the space, in keeping with the purpose of the experiment. In the “difficult” group, by contrast, gaze fixations were scattered across areas beyond the “sign” and the wayfinding direction.

4.2. Analysis of Conscious Gaze and the Visual Understanding of Data

To analyze the data of the perceptual process, the time span of a single recorded gaze sample was considered. The VR eye-tracking equipment recorded participants’ gaze at 250 Hz, so each sample spans 0.004 s (4 ms); that is, one gaze sample was recorded and extracted every 0.004 s.
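The sample period and the number of consecutive samples needed to reach the 200 ms and 300 ms fixation thresholds used in this section follow directly from the 250 Hz rate. A small worked sketch (the function name is illustrative):

```python
import math

SAMPLING_RATE_HZ = 250
SAMPLE_PERIOD_MS = 1000 / SAMPLING_RATE_HZ  # 4.0 ms per sample


def min_consecutive_samples(duration_ms: float, rate_hz: int = SAMPLING_RATE_HZ) -> int:
    """Smallest number of consecutive samples whose total span reaches duration_ms."""
    return math.ceil(duration_ms * rate_hz / 1000)


print(SAMPLE_PERIOD_MS)              # 4.0
print(min_consecutive_samples(200))  # 50
print(min_consecutive_samples(300))  # 75
```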

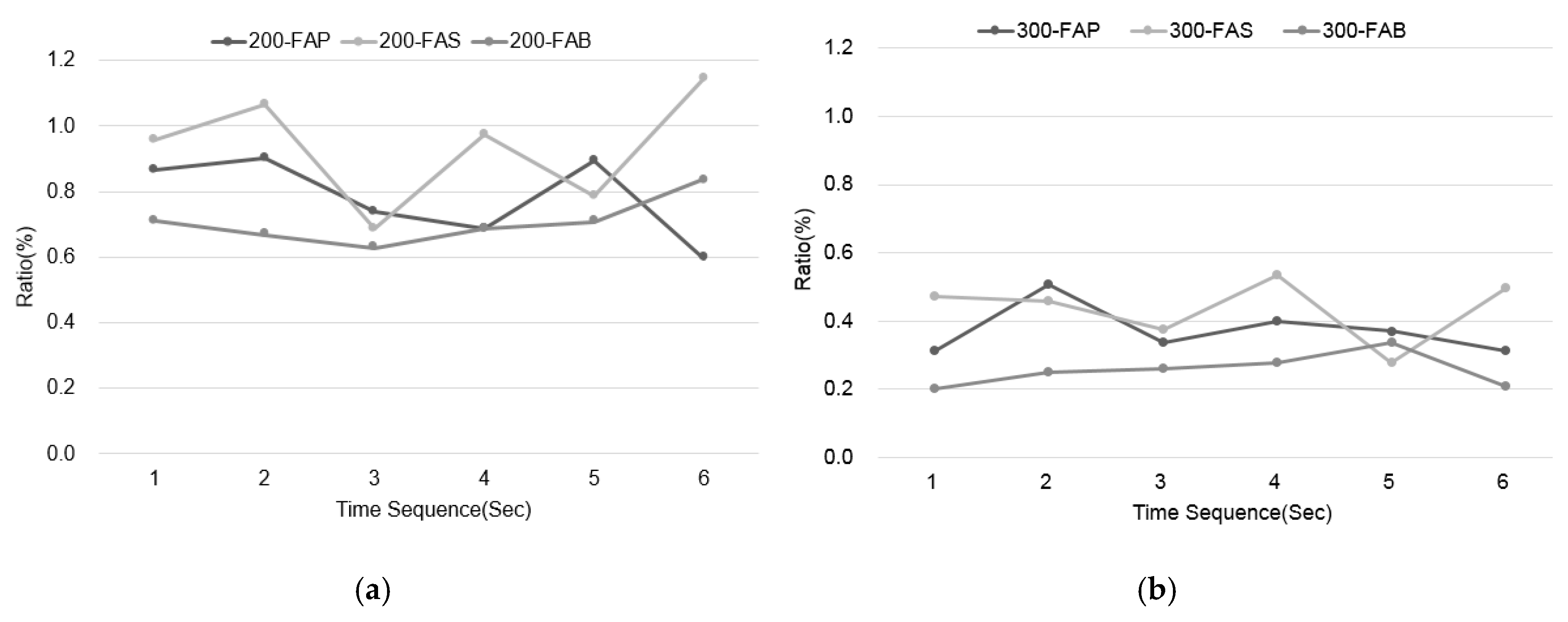

Previous studies indicate that the minimum time needed to consciously grasp an object in front of the eyes is 200 ms; that is, the eyes perceive and consciously observe an object when the gaze stays fixed in one place for at least that long. Therefore, a fixed gaze of 0.2 s or more required at least 50 consecutive gaze samples in this experiment. However, the gaze data recorded at 250 Hz also included non-contiguous fixation runs of fewer than 50 samples. On this basis, the raw gaze fixation data from the AOIs were analyzed by dividing the total viewing time (60 s) into six ten-second sections (see Figure 6), with all fixation data recorded regardless of dwell time. The visual perception process was then analyzed by extracting runs of at least 50 consecutive samples (200 ms) as “conscious attention” and runs of at least 75 consecutive samples (300 ms) as “visual understanding” (see Figure 7).
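The extraction of 200 ms and 300 ms fixations can be sketched as run-length detection over a per-sample AOI label stream. This is a simplified sketch, not BeGaze's fixation-detection algorithm: it assumes every 4 ms sample has already been labeled with an AOI and ignores invalid samples (e.g., blinks), which in practice would interrupt runs. The labeled stream and helper names are hypothetical.

```python
import math
from itertools import groupby

SAMPLE_MS = 4  # 250 Hz sampling: one gaze sample every 4 ms


def fixation_runs(aoi_samples):
    """Collapse a per-sample AOI label sequence into (aoi, n_samples) runs."""
    return [(aoi, sum(1 for _ in group)) for aoi, group in groupby(aoi_samples)]


def runs_at_least(aoi_samples, min_ms):
    """Keep runs lasting at least min_ms, i.e. ceil(min_ms / 4) consecutive samples."""
    min_samples = math.ceil(min_ms / SAMPLE_MS)
    return [(aoi, n) for aoi, n in fixation_runs(aoi_samples) if n >= min_samples]


# Hypothetical stream: 240 ms on the sign, 120 ms on the background, 320 ms on the path.
samples = ["sign"] * 60 + ["background"] * 30 + ["path"] * 80
conscious = runs_at_least(samples, 200)      # "conscious attention" (>= 50 samples)
understanding = runs_at_least(samples, 300)  # "visual understanding" (>= 75 samples)
print(conscious)      # [('sign', 60), ('path', 80)]
print(understanding)  # [('path', 80)]
```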

The gaze data of participants who passed the validity test give the values of gaze fixation in the AOIs, classified as FAP (path), FAS (sign), and FAB (background). FAS data were extracted to examine understanding of the spatial information, and FAP data to examine understanding of the wayfinding direction within the target space. FAB covered the remaining areas outside FAP and FAS and was interpreted as searching gaze. In Time Sequence 1 (0 ~ 10 s) in Figure 6, the FAS value was the highest, indicating conscious gaze fixation directed at recognizing spatial information. In Time Sequence 3 (20 ~ 30 s), the FAS value was the lowest, suggesting that the cognitive search for the “sign” had largely ended early in the experiment. The FAP value increased in Time Sequence 3, and this increase continued until the end of the experiment. These results suggest that participants used their gaze to search for spatial information during the first 10 to 20 s and then, after about 30 s and with a greater understanding of the space, proceeded to observe the direction of the destination. The gaze movement analysis thus showed differences in the AOI fixation data across time sequences (see Figure 6a).

Runs in which 50 or more consecutive samples (200 ms) were fixed on the same area were extracted from the data and analyzed as “conscious gaze” data (see Figure 6b). Figure 6c shows runs of 75 or more consecutive samples (300 ms), analyzed as “cognitive gaze” beyond consciousness. The 200 ms FAS (200-FAS) had a higher frequency than the other AOIs in Time Sequence 1 (0 ~ 10 s). In Time Sequence 2 (10 ~ 20 s), the raw data decreased relative to the start of the experiment, but the “sign” area still appears to have been perceived more consciously than the other AOIs. The 300 ms FAP (300-FAP) increased in frequency from Time Sequence 2 (10 ~ 20 s) to Time Sequence 6 (50 ~ 60 s). We conjecture that the spatial information was recognized from the “sign” during the initial Time Sequence 1.

4.3. Relative Proportions of the Conscious Gaze and Visual Understanding

In the relative ratio analysis, changes in participants’ gaze movement were traced through the perception and cognitive processing of the virtual space. The raw data, the 200 ms conscious-gaze data, and the 300 ms visual-understanding data were each expressed as relative ratios across the AOIs for each time step (see Figure 7). These relative ratios were used to examine, for each time sequence, which stimulus and which position the gaze was directed to at each stage of perception and cognition. Comparing the rates recorded at each time step shows that the conscious gaze for 200-FAS was relatively high and increasing, in contrast to 200-FAP and 200-FAB. In the detailed time sequence, 200-FAS had a lower rate than 200-FAP in Steps 3 and 5, and 300-FAS also had a low rate in Steps 2 and 5. Together with the gaze fixation data analysis (see Figure 6), this indicated that visual attention to the stimuli increased with FAP. Ratio analysis thus makes it possible to interpret visual attention in terms of where the gaze dwelled.

4.4. Analysis of the Perception and Understanding of Spatial Information According to Cognitive Differences

The data from the visual exploration of the “easy” and the “difficult” groups were divided, through AOI analysis, into the perception and the understanding of spatial information (see Figure 8). The stages of visual perception and spatial exploration were investigated by analyzing the “easy” group’s perception (see Figure 8a) and understanding (see Figure 8c) as well as the “difficult” group’s perception (see Figure 8b) and understanding (see Figure 8d). In the “easy” group, perception-level gaze on the “sign” (E-200-FAS) was high, and the sign was searched consistently relative to the other AOIs. In the “difficult” group, by contrast, the perception-level gaze rate for the “sign” (D-200-FAS) rose and fell relative to the other AOIs depending on the time sequence. At the 300 ms understanding level, the “easy” group continued to seek to comprehend the space, as their gaze remained on the “sign” area (E-FAS). The “difficult” group, however, stayed focused on the “path”, apart from viewing the “sign” in the initial time step. Consistent with the difficulty they reported, the visual scan-path patterns of the “easy” group’s sign fixations (E-FAS) and the “difficult” group’s path fixations (D-FAP) differed throughout the experimental stages.

5. Discussion and Conclusion

Visual perception during spatial experience has been measured with various research methods. The visual perception process can be divided into conscious and unconscious perception, and gaze movement can be classified as “what you see”, following James [14], or “where you see”, following von Helmholtz [16]. This research examined “how” and “what” is seen as the gaze changes over time, focusing on the levels of perception and cognition throughout the VR experiment.

- Extracting the meaning of gaze fixation data

The processes of perception and cognition in understanding information were defined by the duration of gaze fixation and analyzed accordingly. In eye-tracking studies that rely on statistical analysis of fixation counts and frequency differences, the fact that a person looked at an object does not establish that they actually perceived it. This study sought to determine the fixation duration at which a gaze can be regarded as actually seeing an object, and to identify the data that are meaningful for the process of visual perception. In eye-tracking research, a fixation of 0.1 s is interpreted as a temporary fixation at a low level of spatial perception, whereas data above 0.3 s are interpreted as visual understanding at the cognition stage. Rather than interpreting all gaze fixation data as “seeing”, the meaning should be interpreted according to how long the conscious and the unconscious gaze dwells.
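The duration levels discussed above can be summarized as a simple mapping from fixation duration to interpretive level. This is a sketch under stated assumptions: the boundary handling (inclusive lower bounds) and the label "unclassified" for fixations under 0.1 s are choices made for illustration, not definitions from the study.

```python
def perception_level(duration_s: float) -> str:
    """Map a fixation duration (s) to the interpretive levels discussed above.

    Inclusive thresholds and the 'unclassified' label are illustrative assumptions.
    """
    if duration_s >= 0.3:
        return "visual understanding"
    if duration_s >= 0.2:
        return "conscious attention"
    if duration_s >= 0.1:
        return "temporary fixation"
    return "unclassified"


for d in (0.05, 0.15, 0.24, 0.32):
    print(d, perception_level(d))
```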

When comparing the average frequency of gaze fixation across all AOIs, the “difficult” group showed more gaze fixations than the “easy” group, and this trend continued over time (see Figure 4). However, in terms of the ratio among the AOIs, the “easy” group focused on the “sign” at the beginning of the experiment, and the ratio of gaze fixation on other areas increased after the middle of the experiment (see Figure 5). That is, for groups with different degrees of perception, ratio analysis of the gaze data reveals the process of directional gazing and the comprehension of spatial information, which remains hidden in the raw fixation frequencies. This underscores the need to choose data extraction and interpretation methods suited to the research purpose, since counts of scan paths and fixation points alone can conceal such information.

- Processing eye-tracking data according to time series

A time-series analysis, or a data-processing method based on frequency and ratio, is required to understand the stages at which levels of consciousness and unconsciousness change during visual perception. In the study of spatial information in particular, it is important to understand the flow of visual attention and information acquisition over time to identify the mechanisms of perception and cognition. When a cognitive process for wayfinding occurs in response to information about a large and complex space, it is necessary to extract data on when, what, and how people understand and make decisions. In this study, gaze movement was tracked as a time series, revealing the processes of perception and cognition through eye tracking in the VR environment.

This study sought to identify when and where people look and understand for the purpose of wayfinding in response to spatial information. Participants were classified by whether they reported understanding the spatial information, and the time course of their gaze data differed markedly between the resulting groups. The study indicated that signs affect the processes of perception and cognition, with participants’ thoughts and conscious focus appearing as gaze movements.

At 200 ms, defined as the recognition level of the gaze, the AOI ratios were evenly distributed over time for the “easy” group, whereas the “difficult” group showed large fluctuations in the ratio for the “sign” (see Figure 8). We interpret this to mean that the “easy” group determined “where to look” by establishing a hierarchy of gaze recognition areas, whereas the “difficult” group could not establish this hierarchy. At 300 ms, defined as the understanding level of the gaze, the “easy” group maintained intentional attention aimed at understanding, directing their gaze toward the “sign” throughout the experiment. From the middle of the experiment onward, however, the gaze of the “difficult” group appeared to search the surrounding area rather than to understand the “sign”. Accordingly, eye-movement tracking offers a way to interpret and verify otherwise invisible psychological mechanisms of spatial information processing.

- Enhancing immersion using a VR eye-tracking experiment

Experiments on consumers’ reactions to real spatial environments face many limitations. In this study, however, VR technology made it possible to create an immersive experimental setting and to record participants’ reactions to the spatial environment quickly. These strengths of VR experiments, such as immersion and control, will not apply to all studies. Preliminary work on controlling the VR experiment, conducted in advance, found that participants’ immersion in the VR experiment was limited over time. Therefore, to sustain participants’ immersion for the purposes of the experiment, visual stimuli were presented for exploring the space, and cognitive psychological phenomena were examined through interviews. In the analysis, participants were divided into groups according to their cognitive responses, and the data were classified and analyzed accordingly.

In conclusion, this study found that visual information correlates with human perception. Gaze data can be interpreted as indicating the cognitive stages of exploring and understanding through visual perception, and they may be regarded as a useful indicator of psychological decision making. Because of the nature of gaze data, researcher interpretation is essential for extracting meaning and determining “what”, “where”, and “how much” a person looks at and comprehends. The gaze data obtained, and their extraction and analysis, may vary with the purpose statement given during the VR experiment. Even in an experimental environment implemented with an efficient VR technique, quantitative data extraction and interpretation must be combined with a qualitative research method. Most participants in this study were in their twenties (mean age 24.8 years), since the VR experiments were conducted to verify gaze perception and cognition. A further experiment with a mixed-age group would be desirable, as age-related data may reveal interesting results concerning sensory perception and cognition.