1. Introduction

One of the most challenging problems within security management is ensuring desirable behavior from the various agents in an organization. Traditional probabilistic security risk assessment methods have focused on evaluating the technological aspects of information systems [1] and often express risk as a function of probability and consequence. These methods disregard the risks posed by incentive systems. Such risks are complex: an incentive system designed to promote a desired type of behavior instead leads to adverse actions. This problem is known as perverse incentives in information security [2]. The Conflicting Incentives Risk Analysis (CIRA) is an alternative risk assessment method that focuses on the stakeholders and their incentives for taking action.

Driven by the opportunities the Internet offers, the peer review process (PRP) and the refereeing of scientific manuscripts are developing rapidly. In recent years, the growing number of scientific disciplines, researchers, and publication channels has increased the complexity of the peer review economy and decreased its transparency [3]. This development is bound to introduce several new vulnerabilities and opportunities for exploiting the system, since growth makes sources of error less visible and allows risk to accumulate [4]. Incidents such as actors gaming the system to become reviewers of their own work (the Peer Review Ring) [5], and bogus papers being published in renowned publication channels (SCIgen - An automatic CS paper generator, http://pdos.csail.mit.edu/scigen/), are likely examples of this development.

Peer review is a trust-based process: for it to work as intended, stakeholders must act according to certain ethical standards. It therefore depends on the integrity of the process participants, and since the PRP is at the core of scientific knowledge production, its integrity is essential. CIRA has been developed as a method but remains largely untested across different scenarios [6]. As a trust-based process practiced fairly uniformly across academia, the normative PRP is a fitting target for a CIRA. The advantage of CIRA is that it provides insight into what motivates actors to contribute to the process and into the circumstances that can lead to adverse actions. Such knowledge can support better governance of, and decision making for, the process. The main idea of this paper is therefore to apply CIRA to identify and assess risks from incentives in the PRP. This paper differs from previous work on trust-based processes and the PRP in that we consider risks from incentives throughout the process.

This paper addresses the following research questions (RQ): (i) Can the root cause of the “Peer Review Ring” incident satisfactorily be explained in terms of conflicting incentives? (ii) What additional risks are present in the incentives of the normative PRP? (iii) What risk treatment options are available to address risks from incentives in the normative PRP?

NIST SP 800-39 [7] defines three organizational tiers for performing risk assessments: Tier 1 is the top organizational level, Tier 2 is the business process level, and Tier 3 is the low-level information system level. The scope of this assessment covers the stakeholders and incentives involved in a normative PRP at Tier 2 (as defined in NIST SP 800-39 and Business Process Management (BPM) [8]). This article introduces a novel approach to Root Cause Analysis (RCA) of incentive risks, exploring incentives as a probable root cause of a known incident. A root cause is an underlying cause of a problem that must be identified in order to address the problem [9]. The starting point for the CIRA is a normative description of a best-practice PRP, primarily considering blind PRP practices. The assessment only considers participants and participant groups that are directly involved in the PRP, as determined by the stakeholder analysis, and does not consider threats posed by external actors. The main contributions of this work are: (i) insight into incentives as root causes, obtained by applying CIRA; (ii) insight into the incentives of the normative PRP and their associated risks; (iii) a discussion of, and suggestions for, risk treatments that make the PRP more robust; and (iv) a subjective discussion of the performance of CIRA and the knowledge gained from analyzing incentives.

The remainder of this article is structured as follows. First, we review related work on the PRP and its associated risks, incentives, and CIRA. The methodology section then describes our extensions to the method. The results are presented in a two-part case study: (i) the root cause of the “Peer Review Ring” incident and (ii) an assessment of the incentives of the complete process. We then discuss possible treatments for incentive risks and our experiences with CIRA. Lastly, we discuss the limitations and assumptions of this research and possible future work before concluding.

2. Background Knowledge & Related Work

Assessing the incentives in the PRP requires insight into state-of-the-art PRP practices and into research on human incentives. This section therefore provides the necessary background on CIRA and summarizes known risks in the PRP.

2.1. The Peer Review Process

One of the main purposes of the PRP is quality assurance of research, achieved by subjecting manuscripts to the critical assessment and scrutiny of peers [10]. In addition, peer review is designed to prevent the dissemination of irrelevant findings, unwarranted claims, unacceptable interpretations, and personal views. Ideally, peers should review each other’s work in an unbiased fashion [11]. Peer review is also supposed to protect the public from potential pseudo-science and prevent readers from wasting their time on inferior research.

There are several different PRP designs, one of the core distinctions being between an open and a closed (double-blind) process. In a fully open PRP, every actor has access to the names of all participants in the process. In a fully closed PRP, authors and reviewers are anonymous to each other. Intermediate variants also exist, e.g., where the reviewers remain anonymous while knowing the names of the authors, and vice versa.

2.2. Incentives

An incentive is something that motivates an actor to act on a capability; it describes why an actor chooses to do something. E.g., a criminal who chooses to rob a bank has a financial incentive. In this article, an incentive structure encourages a certain behavior by applying incentives. Examples are juridical systems, where laws are enforced through punishments that the system’s actors find undesirable (disincentives), and reward systems such as receiving money for working overtime. We define a set of such incentive structures as an incentive system. Top-level classifications of human incentives are provided by Dalkir [12] (p. 309); a summary is presented in Table 1.

Remunerative and financial incentives describe situations where an actor is motivated to act a certain way by a financial or other material reward, or deterred by some perceived material loss.

Moral incentives describe situations where a particular choice is widely regarded as the right thing to do, or as particularly admirable. Conversely, failure to act in a certain way may be condemned as indecent, leading to a loss of admiration. The community enforces moral incentives.

Coercive incentives are situations where an actor can expect certain reprisals if he/she fails to act in a particular way. The reprisal can take the form of physical punishment, imprisonment, confiscation of possessions, or a fine. These are the incentives applied to uphold laws and regulations. A fourth class of incentives is Natural/personal incentives [13] (p. 136). These incentives are driven by human nature and take shape as, e.g., curiosity, mental or physical exercise, admiration, fear, anger, pain, joy, the pursuit of truth, or the desire for control over things in the world, over other people, or over oneself.

Table 1. Summary of top-level classification of incentives.

| Incentive Class | Enforced by | Incentive | Disincentive |
|---|---|---|---|
| Moral | Community | Gain in admiration | Loss in admiration |
| Financial | Employer or similar | Monetary/Material gains | Monetary/Material losses |
| Coercive | Juridical system or similar authority | - | Reprisals |
| Natural | Self | Human nature | - |

Chulef et al. [14] provide an in-depth and detailed study of human goals, identifying several sub-classes of motivators, although these are not grounded in the above classification. In addition, there are incentives that motivate malicious actions. Pipkin [15] provides an overview of such possible motivators: military, political, business, financial, advertising, terrorism, grudging, self-assertion, and fun.

Maslow’s [16] human motivation theory and hierarchy of needs provide a theoretical foundation for deducing the strength of incentives. For example, a person who lacks a basic need, such as food, is more likely to act in a way that secures this need than to satisfy social needs higher up in the hierarchy.

2.3. Summary of CIRA

CIRA is a risk assessment method developed primarily by Rajbhandari [

6] and Snekkenes [

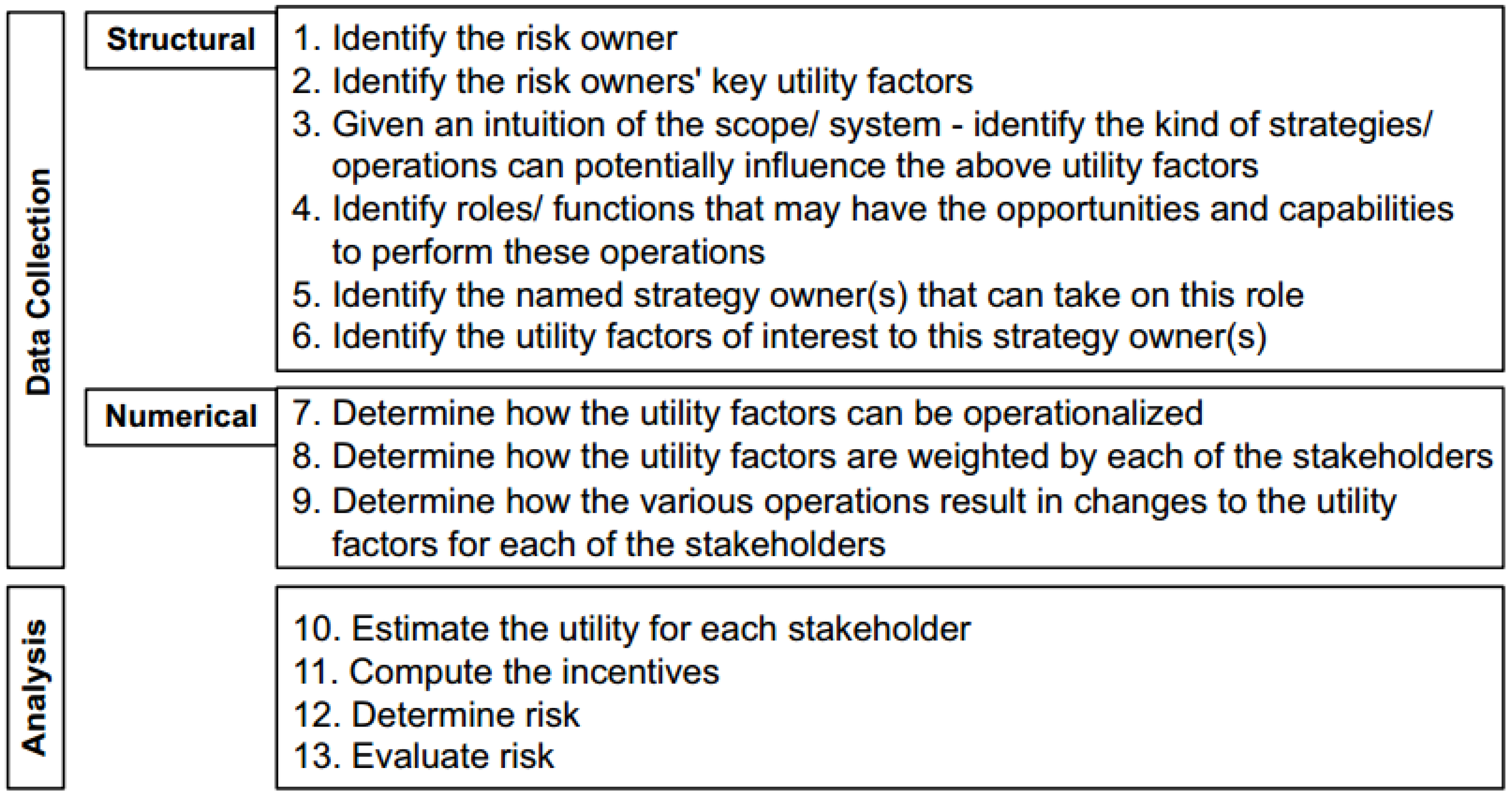

17]. CIRA frames risk in terms of conflicting incentives between stakeholders, focusing on the stakeholders, their actions and perceived outcomes of these actions. The risk owner and the strategy owner are the two classes of stakeholders in CIRA. The perspective for CIRA analysis is that of the risk owner. A strategy owner is capable of triggering an action to increase his perceived benefit. CIRA applies terms from economics where the corresponding stakeholder defines “utility” as the perceived benefit from triggering an action. According to the method, utility factors (UF) are weighted (Wts) by how much the stakeholder values it while the initial value (IV) represents the status of the UF. E.g., a person can weight money as extremely important while having a low IV by initially having a lot of money. Risk treatments in CIRA are controls that disincentivizes unwanted behavior or strengthens the incentives for desirable outcomes. The CIRA procedure consists of 13 steps; whereas 9 steps are for data collection and context establishment, and remaining steps for risk analysis, see

Figure 1. A summary of the steps is as follows [

6] (p. 63).

First, identify the stakeholders, including the risk owner and the other stakeholders within the scope of the assessment. For each stakeholder, identify utility factors, determine the scale, measurement procedure, and explain the underlying assumptions for the weights assigned to each stakeholder. Strategies considered as a part of the assessment influence the risk owner’s utility factors, which potentially includes both malicious and beneficial strategies.

Figure 1.

CIRA Procedure. Source: Rajbhandari [

6] (p. 28 and 63–82).

Figure 1.

CIRA Procedure. Source: Rajbhandari [

6] (p. 28 and 63–82).

Each scenario is then modeled as a theoretical one-shot game, in which the analyst calculates the final values and utilities of each strategy for each player. Lastly, risk is determined by examining each strategy with respect to the sign and magnitude of the resulting changes.
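The utility and incentive calculation in the final steps can be illustrated with a small sketch. It assumes a simple weighted-sum utility, U = Σ Wts · v(IV + Δ), with an optional value function v; this form, the names `UtilityFactor`, `utility`, `incentive` and `classify`, and all numbers are hypothetical illustrations rather than Rajbhandari's exact formulation. The incentive for a strategy is taken as the change in utility it causes, and the sign test flags a threat risk when the strategy owner gains while the risk owner loses, and an opportunity risk in the reverse case.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class UtilityFactor:
    name: str
    weight: float         # Wts: how much the stakeholder values this factor
    initial_value: float  # IV: status of the factor before any strategy is executed

def identity(x: float) -> float:
    return x

def utility(factors: List[UtilityFactor],
            changes: Optional[Dict[str, float]] = None,
            value_fn: Callable[[float], float] = identity) -> float:
    """Weighted sum of (optionally transformed) factor values after applying `changes`."""
    changes = changes or {}
    return sum(f.weight * value_fn(f.initial_value + changes.get(f.name, 0.0))
               for f in factors)

def incentive(factors: List[UtilityFactor],
              changes: Dict[str, float],
              value_fn: Callable[[float], float] = identity) -> float:
    """Incentive of a strategy: utility after executing it minus utility before."""
    return utility(factors, changes, value_fn) - utility(factors, None, value_fn)

def classify(strategy_owner_incentive: float, risk_owner_incentive: float) -> str:
    """Sign test: a conflict exists when the two incentives point in opposite directions."""
    if strategy_owner_incentive > 0 and risk_owner_incentive < 0:
        return "threat risk"       # strategy owner gains by acting, risk owner loses
    if strategy_owner_incentive < 0 and risk_owner_incentive > 0:
        return "opportunity risk"  # risk owner would gain, but the strategy owner has no incentive to act
    return "no conflict"

# Hypothetical values on a 0..1 scale, for illustration only.
author = [UtilityFactor("career", weight=0.8, initial_value=0.2)]
editor = [UtilityFactor("reputation", weight=0.9, initial_value=0.9)]
a = incentive(author, {"career": 0.3})
e = incentive(editor, {"reputation": -0.4})
print(round(a, 2), round(e, 2), classify(a, e))
```

With the default linear value function the IV cancels out of the incentive. Passing a concave function such as `math.sqrt` (for non-negative values) would make the same gain worth more to a stakeholder starting from a low IV, which is one possible way to encode the Maslow-style argument about incentive strength; the magnitude of the incentives can then be used to rank the flagged risks.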

2.4. Known Risks in the Peer Review Process

This research is not the first study of the PRP and its associated risks; this section presents a short review of previous work on the topic and positions this paper in relation to it.

The effects of the competitive “publish or perish” dogma have previously been studied and discussed [18]. If this pressure becomes too strong, it can lead to adverse effects, especially in combination with factors such as limited time and resources. Jealousy is also a factor in the review process [19]. Confirmation bias is the human tendency to search for, interpret, and/or prioritize information that is consistent with and confirms one’s perceptions and hypotheses, and to discredit ideas that do not. Mahoney [20] has researched this phenomenon in science and found that scientists, too, look for evidence supporting their perceptions while quickly dismissing contrary evidence. This bias also affects judgment; Nickerson [21] writes that humans can be remarkably objective judges in disputes in which they have no personal stake. However, this changes rapidly when the dispute becomes their own, for example, when their own work is contradicted.

The Principal-Agent problem describes the relationship between two parties in business, where the agent represents the principal in a transaction with a third party. Agency Theory offers insight into information systems, outcome uncertainty, incentives, and risk [22]. Agency problems may occur when there is an information asymmetry between the agent and the principal in favor of the agent. Garcia et al. [23] consider the well-known economic Principal-Agent problem in the PRP, where the journal Editor represents the principal and the Reviewer the agent. The problem centers on the difficulty of motivating the Reviewer to act in the best interest of the Editor rather than in his own interest. Garcia et al. employ Agency Theory to investigate this problem by applying mathematical models of the Reviewer’s performance and Expected Utility. Taleb and Soudis [4] describe the lack of “skin in the game” as a persistent risk in systems where stakeholders perceive themselves to have little to lose and more to gain (the Principal-Agent problem). Compared to CIRA, Agency Theory looks for ways to incentivize the agent to act in the best interest of the principal, whereas CIRA looks for situations in which there are conflicting incentives between the risk owner and other stakeholders regarding the execution of actions [6].

3. Methodology

So far, CIRA has primarily been applied to less complex cases with few stakeholders (see [6]). To adapt the method to a more complex case and answer the research questions, this research builds on design science research, combining aspects of several existing methods to understand incentives in information systems. We addressed research question one by adapting CIRA for an RCA of the incentives that triggered the Peer Review Ring incident. Research question two was addressed by adapting and modifying CIRA to assess key elements of the PRP and determine risk in the case study. Lastly, research question three was addressed through a discussion of risk treatment strategies and measures to address incentive risks, based on the results from research questions 1 and 2.

To address research question one, we chose a normative approach to the PRP, with the assessment centering on incentives for participating in the process. Normative approaches are appropriate for behavioral sciences and decision-making research [24], which relates to risk assessments, whose core function is improving decisions. CIRA contains aspects of both Expected Utility Theory and Game Theory, which are both primarily normative approaches. One of the strengths of a normative approach is that it reduces the effects of the If-Ought fallacy [24]. Its primary weakness is that a normative description of how the process ought to work may not reflect daily practice.

In the following sections, we outline the details of our study and show where we differ from previous CIRA work. The CIRA steps apply to both the process analysis and the Root Cause Analysis; however, the steps taken specifically for the RCA are described after the main assessment. The research methodology of the main assessment follows the steps outlined in Figure 1. The following description outlines each step of the original methodology, with the differences in this study highlighted and explained:

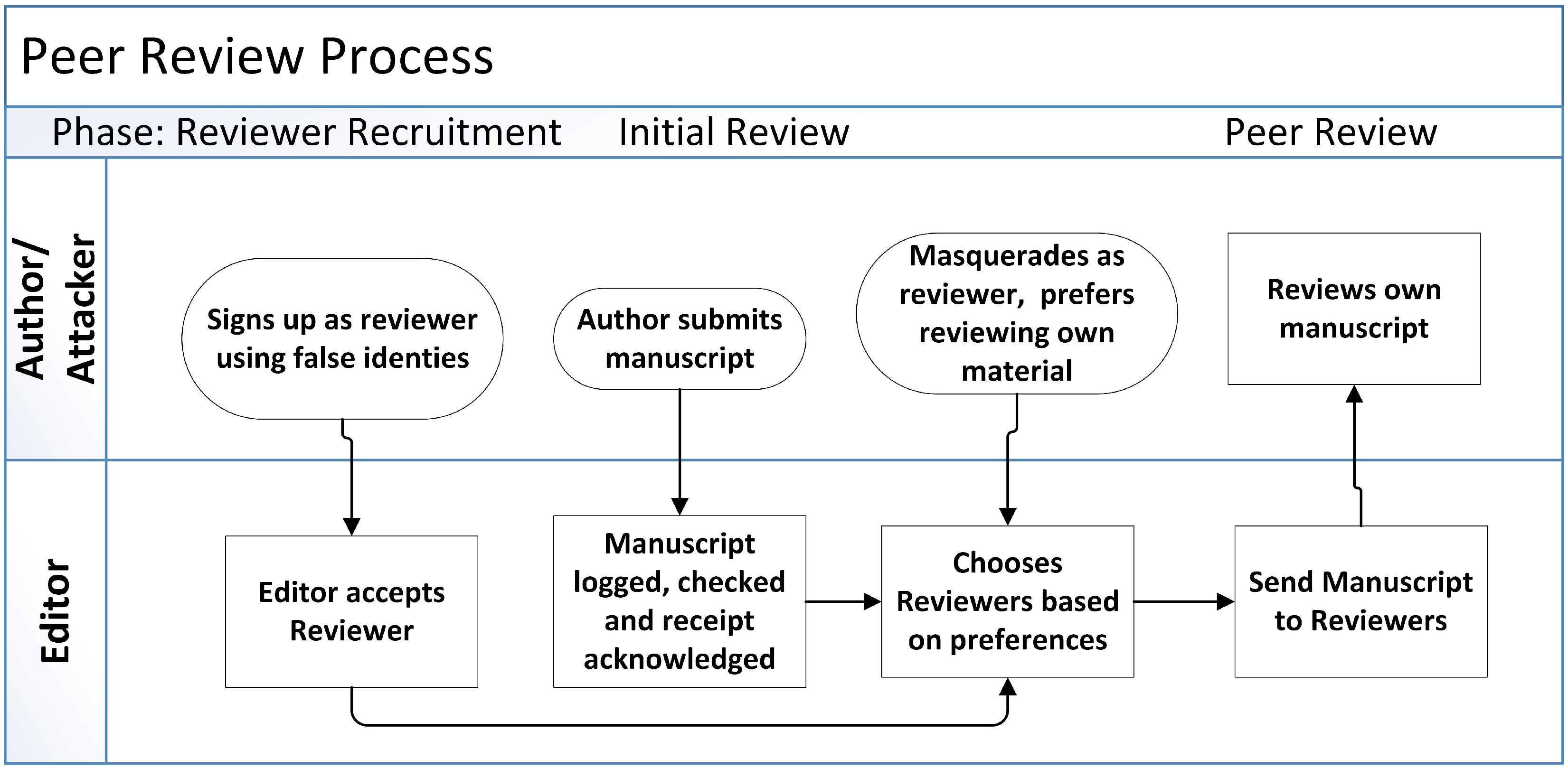

Step 1 - Identify the risk owner, i.e., the perspective that the risk analyst adopts for the assessment. Previous CIRA studies [6, 17, 25] have operated with a single risk owner. A single risk owner only reveals risks posed to his/her UFs, which is insufficient when considering the process as a whole. This research therefore treats multiple stakeholders as risk owners to capture different perspectives on risk. This highlighted a need for prioritization and scoping to determine from the outset which perspectives to consider. To address these issues, this study applied stakeholder analysis, an important addition to CIRA that enables the risk analyst to prioritize his efforts efficiently by ruling out unimportant stakeholders [8]. The stakeholder analysis in this paper followed the prioritization scheme described in Mitchell et al. [26]. The scheme uses three subjective salience attributes: (i) Power, (ii) Legitimacy, and (iii) Urgency, illustrated in Figure 2. These attributes define “the degree to which managers give priority to competing stakeholder claims” [26]. Power is a stakeholder’s ability to impose his will upon another stakeholder and make that stakeholder do something he otherwise would not have done. Legitimacy concerns the stakeholder’s relationships with other stakeholders within the organization and with the organization itself. Urgency represents how pressing the stakeholder’s claim is in and for the organization. This subjective vetting process helps avoid spending time assessing stakeholders that could potentially carry out a strategy but lack sufficient penetration to pose any threat.

Figure 2. Stakeholder classification scheme. Based on Mitchell et al. [26].
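The salience screening in Step 1 can be sketched as a lookup from the three attributes to the seven stakeholder classes named by Mitchell et al. [26], combined with their proposition that salience rises with the number of attributes a stakeholder holds. The function names and the reduction of each attribute to a yes/no judgement are simplifying assumptions, and the sketch returns class names only, not the numbering used in Figure 2.

```python
from typing import Dict, List, Tuple

# Class names for each (power, legitimacy, urgency) combination, after Mitchell et al.
CLASSES = {
    (True,  False, False): "Dormant",
    (False, True,  False): "Discretionary",
    (False, False, True):  "Demanding",
    (True,  True,  False): "Dominant",
    (True,  False, True):  "Dangerous",
    (False, True,  True):  "Dependent",
    (True,  True,  True):  "Definitive",
}

def classify_stakeholder(power: bool, legitimacy: bool, urgency: bool) -> Tuple[str, int]:
    """Return the stakeholder class and a salience score (number of attributes held)."""
    key = (power, legitimacy, urgency)
    salience = sum(key)  # each True counts as 1
    return CLASSES.get(key, "Non-stakeholder"), salience

def prioritize(assessments: Dict[str, Tuple[bool, bool, bool]]) -> List[Tuple[str, str, int]]:
    """Rank stakeholders by salience, most salient first; drop those holding no attribute."""
    ranked = [(name, *classify_stakeholder(*attrs)) for name, attrs in assessments.items()]
    return sorted([r for r in ranked if r[2] > 0], key=lambda r: r[2], reverse=True)

# Hypothetical yes/no judgements, chosen to match the class names in Table 2.
example = {
    "Editor":   (True, True, True),
    "Reviewer": (True, True, False),
    "Outsider": (False, False, False),
}
for name, cls, salience in prioritize(example):
    print(name, cls, salience)
```

Stakeholders holding none of the attributes are dropped, which corresponds to ruling out non-important stakeholders before any utility factors are collected.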

Step 2 - Identify and collect the risk owners’ key UFs and their opinions on the process, instead of focusing on assets as is common in traditional risk assessments. While CIRA suggests using interviews to obtain UFs for the stakeholders involved in the process, this research’s normative approach meant that the UFs for each stakeholder were determined from facilitated workshops with previous/present participants of the PRP, normative literature [10], and human motivation theory as described in Section 2.2.

Step 3 - Given an intuition of the scope/system, identify the kinds/classes of operations/strategies that can potentially influence the above utility factors. CIRA considers strategies that are potentially either a threat or an opportunity for the risk owner. However, to limit the assessment scope, this research only considers threat strategies, i.e., those with a negative impact on the risk owner. In addition, CIRA primarily addresses risk analysis and does not provide tools for context establishment beyond mapping risk and strategy owners in a “static” environment. This research applied BPM tools to enable a broader scope that allowed strategies to be considered throughout the process, and to add context and an additional level of process functionality to the assessment. Our model depicted the PRP with one round of peer review, based on the basic process depicted in Hames [10] (pp. 9–10) and modified according to acquired information. However, the incentive analysis was still limited to a one-shot game.

Step 4, 5 & 6 - Identify the roles/functions that may have the opportunities and capabilities to perform these operations, identify named strategy owners, and identify the UFs of interest. Step 4 identifies the potential roles that can trigger the previously identified operations and are therefore key to the analysis. This assessment considered the roles/functions and operations of each stakeholder within the scope. In Step 5, this research diverges from CIRA, since the assessment operated with personae rather than named actors. In Step 6, this study followed CIRA by identifying UFs for all stakeholders within the scope.

Step 7, 8 & 9 - Determine how the utility factors can be operationalized, how the UFs are weighted by each stakeholder, and how the various operations result in changes to the UFs for each stakeholder. Regular CIRA requires the scale, measurement procedure, and semantics of values to be explained for each identified utility factor, together with the underlying assumptions. Obtaining this requires an individual to assume the role and quantify his preferences for each UF. This approach would represent the views of an individual rather than those of the ideal participant, and is therefore unsuited to a normative assessment. Instead, the UFs were operationalized by applying qualitative values to indicate preferences, focusing on how a certain strategy influenced a UF rather than by how much. In addition to considering changes to UFs from various operations, this research proposed ideal values for each UF as a starting point and experimented with these as part of the assessment to determine risk in the various identified scenarios. This contrasts with regular CIRA, where the UF evaluations represent one individual’s view and therefore remain static.

Step 10, 11, 12 & 13 - Estimate the utility, compute the incentives, determine risk, and evaluate risk. These steps are conducted according to CIRA. One drawback of the approach described in this paper is that using qualitative values and several risk owners prevents the use of the Incentive Graph to visualize risk. Instead, this research used areas of concern within the process for risk communication.

3.1. Root Cause Analysis

An RCA is conducted after an incident has occurred to identify the factors that must be in place for the incident to occur [27], as well as the cause(s) of the problem and possible ways of eliminating them [9]. The RCA conducted in this study examined the underlying incentives as causal factors for triggering the incident, rather than the technical vulnerability that enabled it. Its purpose is to determine whether the system is in a state with unbalanced incentives, in the sense that the upside of taking a risk far outweighs the perceived downside. This approach provides insight into the motivation behind triggering the incident and enables different types of treatments.

The research applied process flow maps to visualize the reported flow of events and the involved stakeholders. Following the steps of CIRA, interviews with the participants would be ideal for assigning UFs, but since we did not know the identities of the involved stakeholders, this was not feasible. As an alternative, each stakeholder was assigned probable UFs for participating in the process based on reported information about the incident. Further, to determine risk, we experimented with the values of both the Wts and the IV, together with the changes in utilities from triggering the action. The perceived risk of triggering the threat lies in the difference between the outcomes of being caught and not being caught.

The RCA considers incentive-based risk treatments, where the discussion centers on how to incentivize desirable behavior through UFs. Incentive theory provides the basis for the discussion of treatments.

4. Case Study: CIRA of the Normative Peer Review Process

According to Hames [10], the PRP is “the critical assessment of manuscripts submitted to journals by experts who are not part of the editorial staff”. The main goal of the PRP in this case study is to ensure high-quality research; it is thus primarily a high-integrity quality control and improvement process (see Section 2.1). In this case study, relevant risks are those that threaten the interests of the risk owner in question or the goals of peer review. The main normative incentive for publishing in peer-reviewed channels is to gain external respect for one’s work, in terms of being a credible source for future citations by others. In addition, research institutions within the scope of this study are incentivized to publish in approved channels because they receive funding and prestige based on the number of academic publications.

The PRP involves several stakeholders, e.g., there can be several Editor roles within an editorial office, and likewise several interacting reviewers and authors. To stay true to the process-level assessment scope, these were grouped into five main stakeholder groups. Our assessment consists of three active stakeholder groups, (i) Authors, (ii) Reviewers, and (iii) Editor(s), while (iv) the Publisher and (v) Readers/audience have a secondary influence on the process, meaning the ability to influence the process post-enactment. Internal risks within the different groups fell outside the scope and should be addressed at a lower abstraction level.

Table 2 shows the summarized results from the stakeholder analysis, where top level incentives for process participation are ranked hierarchically.

The PRP assessment concerns a closed process with known authors and anonymous reviewers. The existing risk-mitigating factors and controls considered in this assessment are the appeal system, the rebuttal process, readers, other reviewers and editors, and the requirement that authors highlight personal conflicts upon manuscript submission.

Table 2. Results of Stakeholder Analysis.

| Role | Process Contribution | Responsibilities for process integrity | Incentive for participation | Normative Process Concern | Stakeholder Class |
|---|---|---|---|---|---|
| Editor | Decision Maker | Fair and transparent decisions on whether or not to publish. | 1. Moral; 2. Financial | Expects honest recommendations from reviewers. | 7 - Definitive |
| Author | Manuscript Owner | Submitted work being original and honestly carried out, evaluated and reported. | 1. Moral; 2. Financial; 3. Coercive | Expects fair and honest judgments, and serious comments that improve the quality of the paper. | 6 - Dependent |
| Reviewer | Adviser | Assess submitted papers to the best of their ability in a courteous and expeditious manner; advise editors on the quality, suitability, flaws and problems of the reviewed material. | 1. Moral; 2. Financial; 3. Natural | Quality control and improvement. Expects respect for opinions and the review work/comments to be taken seriously by Authors/Editors. | 4 - Dominant |
| Publisher | Publishes material | Minor; responsible for hiring/firing the Editor (2nd-order influence). | 1. Financial | Sales | 1 - Dormant |
| Reader | Audience | Minor; will report fraud/cheating (2nd-order influence on the process). | 1. Natural | Knowledge | 3 - Dangerous |

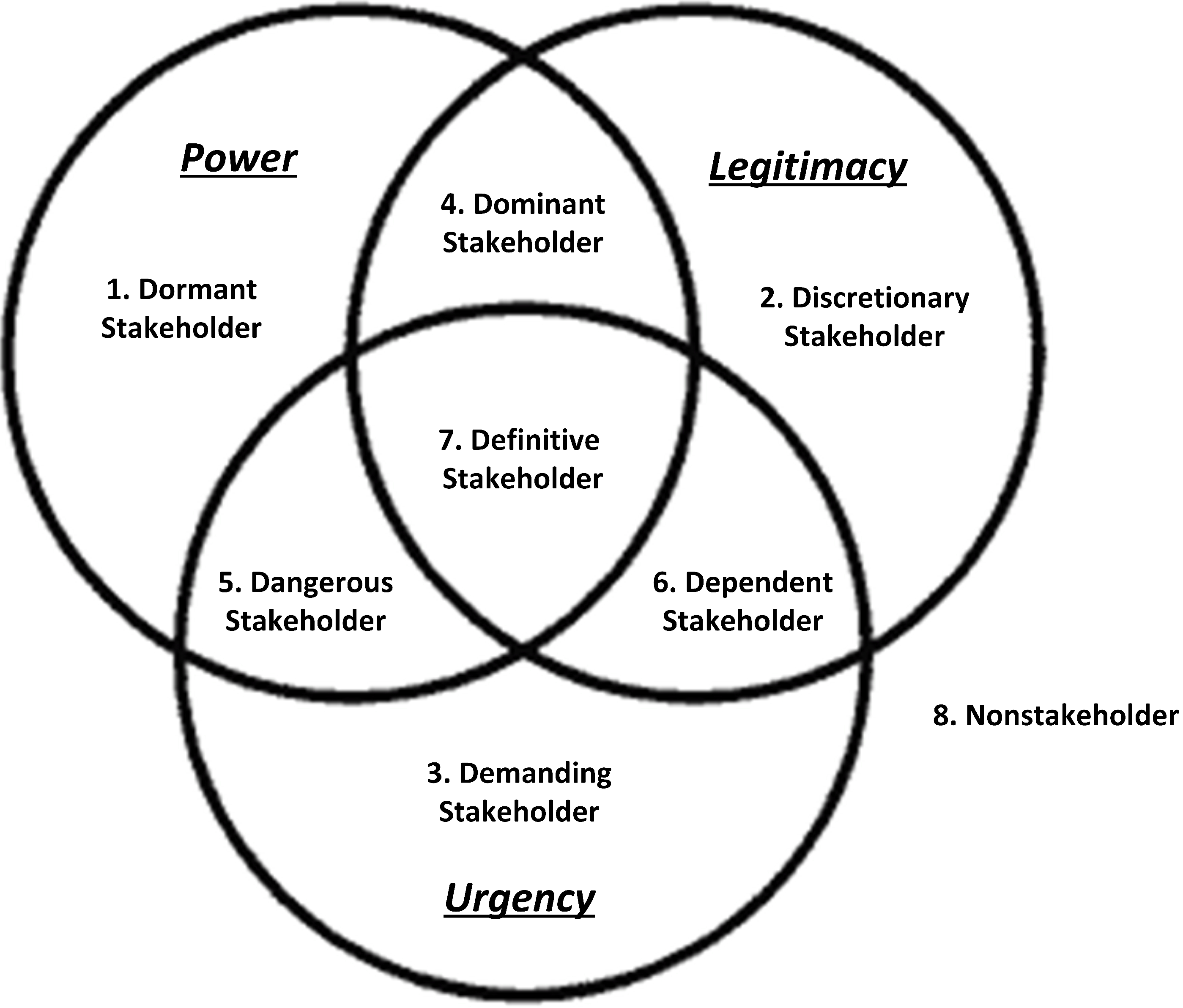

4.1. CIRA Root Cause Analysis—“Peer Review Ring” Incident

The first case study analyzes the previously mentioned incident in which an Author managed to become a Reviewer of his own work. We present our analysis of this incident in terms of CIRA, and propose an explanation of the incident and risk treatment controls. For the RCA, the Editor is the risk and process owner.

The Author successfully carried out a masquerading attack by creating several fake e-mail addresses with corresponding fabricated identities, in order to trick the Editor of the journal into allowing the Author to review his own work.

Figure 3 contains a flowchart description of the compromise. The perpetrator was caught, which led to several papers being retracted and the resignation of an Editor [5].

Figure 3. The Author compromises the PRP integrity by masquerading as Reviewer.

4.1.1. Analysis

The two main stakeholders in this process are the Author (perpetrator) and the Editor (risk owner). Clearly, the Author broke the trust of the peer review system. Our analysis diverges somewhat from the CIRA procedure (Figure 1), as we already know that the Author triggered several actions that affected the Editor negatively (the Editor resigned his position). The Author obtained and exploited the Reviewer’s “influence” strategy to game the process. In addition, we have applied qualitative values (high, medium, low) to indicate probable preferences.

To determine the Author’s incentives, we note that one of the main keys to an academic career and funding is publication quantity. We therefore assume that the Author had a high Wts on the UF “Quantity of publications” and perceived his IV as low. For example, the previously mentioned “publish or perish” dogma is a strong incentive for academics to publish their work. If this incentive becomes too strong, it poses a risk to both the Editor and process integrity.

Table 3 shows the peer review incentive system in a state of risk, in which the “Gaming the system” incident is likely to occur and the Author’s risk perception is key. The analysis indicates how UFs are affected using increase (positive), neutral, and decrease (negative). Two potential outcomes are considered: not being caught and being caught.

Table 3. Incentive system in a state that can trigger undesired actions.

| Stakeholders | Utility factors | Wts | IV | Change in utility: GameSystem (Not Caught) | Change in utility: GameSystem (Caught) |
|---|---|---|---|---|---|
| Editor | Reputation | High | High | Neutral | Decrease |
| | Career | Medium | High | Neutral | Decrease |
| | Process Integrity | High | High | Decrease | Increase |
| | Process Efficiency | High | High | Neutral | Decrease |
| Author | Career | High | Low | Increase | Neutral |
| | Money/Funding | High | Low | Increase | Neutral |
| | Reputation | Medium | Medium | Increase | Decrease |
| | Academic Quality | High | Low | Decrease | Neutral/Increase |
| | Academic Quantity | High | Low | Increase | Decrease |
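To make the caught/not-caught trade-off in Table 3 concrete, the sketch below encodes the Author’s rows with an assumed ordinal scale (High = 3, Medium = 2, Low = 1; Increase = +1, Neutral = 0, Decrease = -1) and combines the two outcomes as an expected value over a perceived probability of being caught. The numeric encoding, the treatment of “Neutral/Increase” as Neutral, the detection probability, and the function names are all assumptions for illustration; the analysis itself works with qualitative values, and the IV column is not used in this linear encoding.

```python
# Assumed ordinal encoding of Table 3's qualitative labels (illustrative, not from the paper).
WEIGHT = {"High": 3, "Medium": 2, "Low": 1}
CHANGE = {"Increase": 1, "Neutral": 0, "Decrease": -1}

# Author rows of Table 3: (utility factor, Wts, change if not caught, change if caught).
# "Neutral/Increase" for Academic Quality (caught) is conservatively encoded as "Neutral".
AUTHOR = [
    ("Career",            "High",   "Increase", "Neutral"),
    ("Money/Funding",     "High",   "Increase", "Neutral"),
    ("Reputation",        "Medium", "Increase", "Decrease"),
    ("Academic Quality",  "High",   "Decrease", "Neutral"),
    ("Academic Quantity", "High",   "Increase", "Decrease"),
]
NOT_CAUGHT, CAUGHT = 2, 3  # column indices within each row

def outcome_incentive(rows, column):
    """Weighted sum of the changes in utility for a single outcome."""
    return sum(WEIGHT[row[1]] * CHANGE[row[column]] for row in rows)

def expected_incentive(rows, p_caught):
    """Expected incentive given the strategy owner's perceived probability of being caught."""
    return ((1 - p_caught) * outcome_incentive(rows, NOT_CAUGHT)
            + p_caught * outcome_incentive(rows, CAUGHT))

gain = outcome_incentive(AUTHOR, NOT_CAUGHT)  # 8 under this encoding
loss = outcome_incentive(AUTHOR, CAUGHT)      # -5 under this encoding
break_even = gain / (gain - loss)             # p at which the expected incentive is zero
print(gain, loss, round(break_even, 2))
```

Under this particular encoding, the Author’s expected incentive stays positive unless he believes the probability of being caught exceeds 8/13 (about 0.62). This is one way to read the root-cause finding that the perceived deterrents were too weak relative to the reward; a different encoding would shift the threshold.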

4.1.2. Root Cause

The exploited vulnerability in this process was that the Editor was too trusting and complacent in his screening of Reviewers, leaving the PRP vulnerable. Our analysis shows that some of the key UFs assigned to the Author for participating in the process are strongly related to Pipkin’s motives for malicious actions. Recall that Pipkin [15] lists business and financial motivations; the UFs “Career” and “Money/Funding” are strongly related to them. In our opinion, a general indicator of risk in a trust-based system is external factors (e.g., publish or perish) increasing the weights of UFs related to possible malicious motivations.

In addition, the sanctions available to the risk owner are very limited; we identified few actions besides declining the work that could harm the Author’s UFs. There are few official coercive/juridical options (that we are aware of) available to the risk owner. One strategy available to the Editor is to make the fraud public, disclosing the Author’s full name and the cheating. Another possible strategy is for the Editor to make it clear to all authors that the management of their organization will receive a report if rules are broken, and that the organization may be ’blacklisted’ if no action is taken against the author. The first strategy relies on the capacity to “scorn” the Author from the research community. However, this strategy weakens as the scientific community grows in size and complexity, since a larger community offers fewer ways to prevent the Author from publishing elsewhere and blending in. The Editor does not easily undertake such a strategy, as admitting the breach can also harm his own UFs, e.g., his reputation. As we see in Table 3, making the incident public knowledge is likely to harm the risk owner. With the second strategy, the Editor only needs to act a few times for word to spread, which may reduce the problem.

There is a potential imbalance in the incentive system here: the Author's risk exposure from cheating has more upside than downside. By analyzing this incident in terms of incentives, we find that the Author's incentive for having work published is likely too strong, while the perceived disincentives (deterrents) are too weak, because the Author perceives the Editor as having very limited capacity to "hurt" him. In other words, the reward outweighed the perceived risk, and the Author could act in his self-interest.
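The imbalance can be made concrete with a generic expected-value framing. This is not part of CIRA as published, and the function name, payoffs, and detection probability below are hypothetical numbers chosen only to show how a weak sanction leaves cheating attractive while a heavier sanction, at the same detection rate, reverses the sign.

```python
# Illustrative expected-value model; NOT part of CIRA as published.
# All payoffs and probabilities below are hypothetical.

def expected_net_incentive(gain_if_undetected: float,
                           loss_if_detected: float,
                           p_detect: float) -> float:
    """Expected utility change for an actor considering a cheating strategy.

    gain_if_undetected: utility gained if the strategy goes unnoticed (>= 0)
    loss_if_detected:   utility lost if the strategy is detected (>= 0)
    p_detect:           the actor's perceived probability of detection
    """
    if not 0.0 <= p_detect <= 1.0:
        raise ValueError("p_detect must lie in [0, 1]")
    return (1.0 - p_detect) * gain_if_undetected - p_detect * loss_if_detected


# Strong incentive to publish, weak personal sanctions.
weak_deterrence = expected_net_incentive(5.0, 2.0, p_detect=0.2)

# Same incentive and detection rate, but much heavier sanctions when caught.
strong_deterrence = expected_net_incentive(5.0, 30.0, p_detect=0.2)

print(f"weak deterrence:   {weak_deterrence:+.1f}")   # +3.6
print(f"strong deterrence: {strong_deterrence:+.1f}")  # -2.0
```

The point of the sketch is qualitative: when detection is unlikely, only the size of the downside can restore the balance, which matches the treatments discussed in Section 5.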

4.2. Identified Risks in the Peer Review Process

In this section, we present our findings from assessing the process, starting with identified risks. These were identified by considering possible malicious strategies at each step of the modeled process, considering the capabilities of the main persona. The main goal was to identify threats to process integrity from conflicting incentives. Experience from the root cause analysis also dictated that we consider cooperation between stakeholders. In total, we identified 19 risk scenarios, out of which we analyzed nine.

Table 4 illustrates the risk identification scheme with corresponding analyzed risks.

Table 4.

Risk Identification and screening table, containing nine risks chosen for further analysis.


| No. | Risk Owner | Strategy Owner, Co-op | Harmful Operation (Vulnerability) | Process Phase | Harm to Additional Stakeholders | Harms Process | Existing Control | Consequence Stakeholders | Consequence Process Output |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Author | Editor | Reject manuscript for personal/political reasons | Initial review, peer review | Reader, publisher | Yes | Appeal system; personal conflict | Suppression of dissent | Quality reduction |
| 2 | Author | Editor & Reviewer (co-op) | Influence reviewers to favor/reject manuscripts based on relations/political views | Peer review | Reader | Yes | Appeal system | Nepotism/suppression of dissent | Quality reduction; reduction in integrity |
| 3 | Editor | Author | Exploit review process for comments, and withdraw submission | Peer review | Reviewers | Yes | - | Waste of resources | Exploiting process by stealing resources |
| 4 | Reviewer | Author & Editor (co-op) | Influence Editor to favor manuscript | Initial review, peer review | Reader | Yes | Rebuttal; appeal system | Suppression of Reviewer influence | Quality reduction; reduction in integrity |
| 5 | Author | Reviewer | Exploit influence on manuscript to promote personal interests (give dishonest opinion) | Peer review | Editor, reader | Yes | Rebuttal; appeal system; personal conflict | Degradation of content | Reduction in process integrity |
| 6 | Author | Reviewer | Give scarce feedback | Peer review | Editor | Yes | Editor | Reduced quality improvement of submitted work | Quality reduction |
| 7 | Author | Reviewer | Give rich feedback without proper expertise | Peer review | Reader | Yes | Other reviewers; rebuttal | If followed, quality reduction | Quality reduction |
| 8 | Editor | Author & Reviewer (co-op) | Author suggests, or cooperates with, reviewers for his own manuscript | Initial review, peer review | Reader, other authors | Yes | - | Cheaters gain advantages over other authors | Limited improvement of submitted work; less suited work accepted |
| 9 | Editor | Reviewer | Spend too long reviewing | Peer review | Authors, publisher | Yes | - | Delay in review and publication | Delay in publication |
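If the screening table is maintained alongside the analysis, keeping its rows as structured records makes groupings like those used in the discussion easy to derive. The sketch below encodes only the Risk Owner and Strategy Owner columns of Table 4; the `Scenario` type and the helper functions are hypothetical names of our own, not part of CIRA.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    number: int
    risk_owner: str
    strategy_owners: tuple[str, ...]  # more than one owner means cooperation


# Risk Owner and Strategy Owner columns of Table 4.
SCENARIOS = [
    Scenario(1, "Author", ("Editor",)),
    Scenario(2, "Author", ("Editor", "Reviewer")),
    Scenario(3, "Editor", ("Author",)),
    Scenario(4, "Reviewer", ("Author", "Editor")),
    Scenario(5, "Author", ("Reviewer",)),
    Scenario(6, "Author", ("Reviewer",)),
    Scenario(7, "Author", ("Reviewer",)),
    Scenario(8, "Editor", ("Author", "Reviewer")),
    Scenario(9, "Editor", ("Reviewer",)),
]


def by_sole_strategy_owner(scenarios):
    """Group scenario numbers by strategy owner, skipping cooperative ones."""
    groups = defaultdict(list)
    for s in scenarios:
        if len(s.strategy_owners) == 1:
            groups[s.strategy_owners[0]].append(s.number)
    return dict(groups)


def cooperation_scenarios(scenarios):
    """Scenario numbers that require two or more stakeholders to cooperate."""
    return [s.number for s in scenarios if len(s.strategy_owners) > 1]


print(by_sole_strategy_owner(SCENARIOS))
print(cooperation_scenarios(SCENARIOS))
```

Under this encoding, scenarios 5, 6, 7, and 9 are the ones owned solely by the Reviewer, and scenarios 2, 4, and 8 involve cooperation between stakeholders.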

4.3. PRP Areas of Concern

We applied CIRA to analyze the risks in the nine scenarios identified in Table 4, considering the five stakeholder groups in the process. The results of running CIRA on the scenarios are summarized as areas of concern and presented below. In some of the scenarios we had to split the stakeholder groups; e.g., in scenario 8, the cheating Author and the competing authors were counted as separate significant stakeholders. Below we provide a summary of each analysis; we provide the full analysis only for scenario 1, as an illustration of the method (see Table 5). This table also illustrates the difference in risk perception between being caught and not being caught while taking action. The risk mitigation strategies discussed in the following section build on disincentivizing by widening this gap.

Table 5.

CIRA of Scenario 1 with two outcomes, illustrating the difference in incentives between getting detected and not getting detected.


| Stakeholder | Utility Factor | Wts | IV | Change in Utility, Editor's Strategy 1. Reject Manuscript (Not Detected) | Change in Utility, Editor's Strategy 1. Reject Manuscript (Detected) |
|---|---|---|---|---|---|
| Editor | Reputation | High | High | Neutral | Decrease |
| | Money/Funding | Low | High | Neutral | Decrease |
| | Career | Medium | High | Neutral | Decrease |
| | Process Efficiency | Medium | High | Neutral | Decrease |
| | Political Motivations | Medium | Medium | Increase | Decrease |
| Author | Career | High | Low | Decrease | Neutral |
| | Money/Funding | High | Low | Decrease | Neutral |
| | Reputation | Medium | Medium | Neutral | Increase |
| | Academic Quality | Medium | Low | Neutral | Increase |
| | Academic Quantity | High | Low | Decrease | Increase |
| Publisher | Process Integrity | Medium | High | Neutral | Decrease |
| | Time to market | High | High | Neutral | Decrease |
| | Publication Reputation | High | High | Neutral | Decrease |
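CIRA itself works with the qualitative scales shown in Table 5. Purely as an illustration of how the two outcomes can be compared side by side, the sketch below maps those scales to hypothetical numbers (High = 3, Medium = 2, Low = 1; Increase = +1, Neutral = 0, Decrease = -1), ignores the IV column, and sums weight times direction per stakeholder. The numeric mapping and the helper names are our assumptions, not part of the method.

```python
# Hypothetical numeric mapping of CIRA's qualitative scales (our assumption).
WEIGHT = {"High": 3, "Medium": 2, "Low": 1}
DIRECTION = {"Increase": 1, "Neutral": 0, "Decrease": -1}

# Table 5: (stakeholder, utility factor, Wts, change if not detected, change if detected).
# The IV column is deliberately not used in this sketch.
TABLE_5 = [
    ("Editor", "Reputation", "High", "Neutral", "Decrease"),
    ("Editor", "Money/Funding", "Low", "Neutral", "Decrease"),
    ("Editor", "Career", "Medium", "Neutral", "Decrease"),
    ("Editor", "Process Efficiency", "Medium", "Neutral", "Decrease"),
    ("Editor", "Political Motivations", "Medium", "Increase", "Decrease"),
    ("Author", "Career", "High", "Decrease", "Neutral"),
    ("Author", "Money/Funding", "High", "Decrease", "Neutral"),
    ("Author", "Reputation", "Medium", "Neutral", "Increase"),
    ("Author", "Academic Quality", "Medium", "Neutral", "Increase"),
    ("Author", "Academic Quantity", "High", "Decrease", "Increase"),
    ("Publisher", "Process Integrity", "Medium", "Neutral", "Decrease"),
    ("Publisher", "Time to market", "High", "Neutral", "Decrease"),
    ("Publisher", "Publication Reputation", "High", "Neutral", "Decrease"),
]


def net_change(rows, stakeholder, detected):
    """Sum of weight * direction over one stakeholder's UFs for one outcome."""
    col = 4 if detected else 3
    return sum(WEIGHT[r[2]] * DIRECTION[r[col]] for r in rows if r[0] == stakeholder)


for who in ("Editor", "Author", "Publisher"):
    undetected = net_change(TABLE_5, who, detected=False)
    detected = net_change(TABLE_5, who, detected=True)
    print(f"{who}: not detected {undetected:+d}, detected {detected:+d}")
```

Under this mapping, the Editor's gain from an undetected rejection is small (+2), while detection costs -10; it is the size of this gap that the treatments discussed in Section 5 aim to increase.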

Process influence from political and personal reasons

Analysis of scenarios 1 & 2 shows that the PRP is vulnerable to exploitation by the parties that assess quality. In these scenarios, the assessing parties reject the manuscript based on its views rather than its research quality. In scenario 2, which involves multiple decision makers, an editor may influence several of the reviewers to provide negative reviews of a certain manuscript to compromise the process. Dissidence, theft of ideas, and nepotism are examples of potential motivations for triggering this scenario. Our results show that at least one of two factors must be in place to trigger these scenarios for political reasons or theft of ideas: (i) the political/personal incentive must be strong, e.g., in cases where the manuscript carries dissent perceived as threatening to the editor's key UFs; or (ii) the perceived threat from executing the action must be low or negligible. Two of the primary UFs at stake when this risk is triggered are personal reputation and the reputation of the publication channel. The latter means that the Publisher's UFs are also at risk.

In scenario 5, the Reviewer exploits his position to give dishonest feedback and promote personal interests. Given an anonymous review process, our analysis shows almost no downside for the Reviewer in exercising this capability. The rebuttal and appeal system only threatens to void the review comments and poses little threat to the Reviewer's UFs. Thus, there are few disincentives to acting selfishly. The consequences of this action mainly harm the Author's UFs; we also identified secondary effects on the Editor and the Reader, as well as a threat to process integrity.

Submitting only for comments

In scenario 3, the Author exploits the PRP by submitting only for comments, with no intention to publish at the venue, and then withdraws his manuscript. A typical pattern is to submit to a less prestigious venue for comments, withdraw, improve the manuscript, and resubmit to a higher-level venue. This vulnerability is inherent in the design of the PRP, as the option to withdraw papers must be present for a variety of reasons. Researchers who frequently do this could be banned, and their reputation within the community is at risk. However, this relies on being able to detect the fraud, which is a challenge if the perpetrator does not repeatedly exploit the same PRP. The disincentives are further weakened by the possibility for an author to commit this kind of fraud across a variety of conferences. This practice wastes time and reduces process efficiency by taking review time away from more serious authors. Our analysis shows that the Author perceives little downside from this action, which therefore makes it a likely scenario.

Manuscript acceptance through friendship and acquaintances

In scenario 4, the Author influences the Editor, either directly or through acquaintances, to favor his manuscript over other submissions. Familiarity between stakeholders is quite common in many research disciplines, which enables this risk. The primary UFs at stake are an increase in publications, funding, and career, while the disincentives are risks to academic reputation. The consequence is that inferior research may be published at the expense of better manuscripts and their authors.

Risks of reviewing

In scenario 6, the Reviewer chooses to give shallow and superficial reviews. Our analysis shows that this strategy threatens process integrity by limiting the quality assurance function, especially if multiple reviewers in one process choose this strategy. It also threatens the reputation of the venue and its participants. In the normative PRP, reviewing is voluntary work, in which the incentives for reviewing properly may not be strong enough. The Editor is the main quality control of the reviews themselves and decides whether or not to send them out. However, the Editor has few strategies for incentivizing reviewers. This situation affects the UFs of the Author, who expects to receive reasonable feedback on his work. More importantly, a weakened quality assurance function will enable inferior research to be published and defeat the purpose of peer review.

In scenario 7, the Reviewer chooses to give rich feedback without having the proper expertise. This action threatens the integrity of the submitted manuscript, as reviews are not easy to ignore, especially when the manuscript is accepted. Other reviewers and the rebuttal mitigate this risk, but the Reviewer's seniority also affects the risk.

In scenario 9, Reviewers take too long to review because they lack incentives to finish by the deadline. Our analysis shows that this affects process efficiency by causing delays, and is primarily the Editor's concern.

Proposing Reviewers for Own Work

Depending on the PRP design, some practices allow the Author to explicitly propose a list of reviewers. Similar to the "Peer Review Ring", in scenario 8 this option can be exploited to game the process, either through cooperation between Author and Reviewer based on a prior acquaintance or through prior knowledge of the reviewers and their preferences. Exploiting this option creates an unfavorable situation for competing authors and reduces the integrity of the process.

4.3.1. Developments with Potentially Adverse Effects on Incentives

Changes in the academic community, such as increased competition for funding and changes in the academic economy, can influence incentives. More competition will increase the pressure on financial incentives, such as competing for funding. A warning sign is when the financial incentive becomes the strongest reason for doing science. Related to this is the industrialization of science: where industry funds the research, scientists will be reluctant to criticize the funding companies. Science is about the exchange of knowledge and the sharing of ideas; an increase in financial incentives may instead lead to secrecy with the intent of making money. There is also uncertainty related to the future growth of the academic community. One example of unforeseen consequences is seemingly academic journals that are "predatory", in the sense that they exploit the open-access publishing business model by charging publication fees to authors without offering the editorial and publishing services associated with legitimate journals. These changes may have an adverse impact on the existing incentive system and strain the trust on which the process relies to function.

5. Results and Discussion

The previous section highlighted risks inherent in the PRP; in this section, we discuss how these risks can be treated to make the process more robust. The discussion is structured into the following categories: overall risk treatment approach, suggested treatments for the Peer Review Ring incident, treatments for the risks identified with CIRA, performance of the approach, and, lastly, assumptions and limitations of this research.

5.1. Treatment Strategy: Increasing Robustness

The balance between incentives and disincentives is key in managing human factor risks. To determine the treatment strategy, we apply risk management concepts defined by Taleb [28], who classifies systems as (i) fragile: dislikes randomness and sees only downside from shocks; (ii) robust: can absorb shocks without changing state; and (iii) antifragile: likes randomness and improves from shocks (up to a certain size). Trust and integrity are inherently fragile, as they take a long time to build and sustain but can be destroyed in an instant. Publication channels depend on a good reputation, which makes them fragile in a competitive environment. Incidents that damage the reputation of a publication channel can prove very harmful, as the trust of potential submitters will decrease and they may consider other publication venues. Combined with the findings of this study, this means that publication venues face unknown risks with large uncertainty and limited influence on externalities. The logical option is to make the process more robust. Another risk management strategy from Taleb [28] (p. 278) is to limit the size and complexity of the system where possible, as growth in either introduces more hidden risk and increases the fragility of the system. A problem with incentive systems is that they are inherently complex and unpredictable, and humans do not always act as intended. Therefore, all treatments suggested in the following subsections aim to address these factors by increasing transparency and accountability.

5.1.1. Suggested Treatment for Peer Review Ring Incident

Mitigation of this particular incident/risk can be achieved by implementing stronger authentication procedures for reviewers. However, this does not address the underlying incentives. A potential treatment for the unbalanced incentives in this case would be a regulatory one, in which we introduce additional risk to the attacker's utility factors, widening the difference between the utility of getting away with it and that of being caught. An example is to increase the sanction opportunities of the Editor and/or Publisher by introducing the possibility of banning the attacker's institution from publishing in that channel for a period. This measure would effectively raise the stakes for the attacker, as he would no longer be taking only a personal risk (see Table 6). Other regulatory options, such as introducing laws and regulations, could also be considered as a long-term solution.

Table 6.

Example of risk treatment by introducing additional UFs for stakeholder.


| Stakeholder | Utility Factor | Wts | IV | Final Value: Game System (Caught) |
|---|---|---|---|---|
| Author | Relationship to Colleagues | High | High | Decrease |
| | Job safety | High | High | Decrease |
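Under a hypothetical numeric mapping of the qualitative scales (High = 3, Medium = 2, Low = 1; Increase = +1, Neutral = 0, Decrease = -1; our assumption, not part of CIRA), the effect of Table 6 can be expressed as a widening of the gap between the two outcomes. The pre-treatment scores in the sketch are invented placeholders, not values derived from Table 3, and the function names are hypothetical.

```python
# Hypothetical numeric mapping of CIRA's qualitative scales (our assumption).
WEIGHT = {"Low": 1, "Medium": 2, "High": 3}
DIRECTION = {"Increase": 1, "Neutral": 0, "Decrease": -1}

# UFs added to the Author by the treatment (Table 6): (UF, Wts, change if caught).
TABLE_6_ADDITIONS = [
    ("Relationship to Colleagues", "High", "Decrease"),
    ("Job safety", "High", "Decrease"),
]


def added_penalty(additions):
    """Extra (negative) score the new UFs contribute when the Author is caught."""
    return sum(WEIGHT[w] * DIRECTION[change] for _, w, change in additions)


def gap(score_not_caught, score_caught):
    """How much worse being caught is than getting away with it."""
    return score_not_caught - score_caught


# Hypothetical pre-treatment scores for the Author (placeholders, not from Table 3).
not_caught, caught = 4, -2
print("gap before treatment:", gap(not_caught, caught))
# The new UFs are only affected when the Author is caught, so only that side moves.
print("gap after treatment: ", gap(not_caught, caught + added_penalty(TABLE_6_ADDITIONS)))
```

Because the institutional sanction only bites when the Author is caught, the upside of cheating is unchanged and the whole effect of the treatment shows up as a larger gap.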

5.1.2. Suggested Treatments of Risks Identified with CIRA

The risk assessment results show that several risks arise from cooperation between participants. Cooperation risks are familiar within the security community, where separation of duties may mitigate them, although it has the drawback of imposing additional overhead on the process.
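As one illustration of a control in the spirit of separation of duties, the sketch below screens author-suggested reviewers for visible conflicts of interest before the Editor assigns them. The `Person` record, the three conflict rules (same contact address, shared affiliation, prior co-authorship), and all example data are hypothetical; they are not a procedure described in this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    """Hypothetical record of what a venue knows about a researcher."""
    name: str
    email: str
    affiliation: str
    coauthors: frozenset[str] = frozenset()


def conflicts(author: Person, reviewer: Person) -> list[str]:
    """Reasons why `reviewer` should not review a manuscript by `author`."""
    reasons = []
    if reviewer.email.lower() == author.email.lower():
        reasons.append("same contact address as the author")
    if reviewer.affiliation == author.affiliation:
        reasons.append("shared affiliation")
    if reviewer.name in author.coauthors or author.name in reviewer.coauthors:
        reasons.append("prior co-authorship")
    return reasons


def screen_suggestions(author: Person, suggested: list[Person]):
    """Split suggested reviewers into accepted names and rejected ones with reasons."""
    accepted, rejected = [], {}
    for reviewer in suggested:
        reasons = conflicts(author, reviewer)
        if reasons:
            rejected[reviewer.name] = reasons
        else:
            accepted.append(reviewer.name)
    return accepted, rejected


# Hypothetical example data.
author = Person("A. Author", "a.author@uni-x.example", "Uni X",
                coauthors=frozenset({"C. Colleague"}))
suggested = [
    Person("B. Peer", "b.peer@uni-y.example", "Uni Y"),
    Person("C. Colleague", "c.colleague@uni-z.example", "Uni Z"),
    Person("D. Deskmate", "d.deskmate@uni-x.example", "Uni X"),
]
accepted, rejected = screen_suggestions(author, suggested)
print(accepted)   # ['B. Peer']
print(rejected)
```

Such a check only catches conflicts that are visible in the venue's own records; it adds some overhead and, as noted for Scenario 8, cannot detect arrangements made through back-channels.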

From our analysis, we found that several risks in which actors abuse their capabilities by cooperating or otherwise acting unethically (scenarios 1 & 2) are mitigated by having a robust rebuttal process and appeal system. We can consider the rebuttal process the first line of problem-solving; if the dispute remains unsolved, it escalates to the appeal system. A robust appeal system should consist of an independent third party that can handle academic disputes objectively. Having an appeal system and a rebuttal process is common in larger publication channels, but it requires more resources and may not be suitable for smaller channels.
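The two-tier escalation described above can be sketched as a small routine: every dispute starts with a rebuttal, and only unsolved disputes reach an appeal, which must not be handled by one of the disputing parties. The function name, the stage labels, and the "Appeals Committee" are hypothetical; real venues define their own procedures.

```python
def dispute_path(parties: set[str], appeal_body: str, solved_by_rebuttal: bool) -> list[str]:
    """Stages a dispute passes through under a two-tier escalation scheme.

    The rebuttal is the first line of problem-solving; unsolved disputes
    escalate to an appeal handled by an independent third party.
    """
    path = ["rebuttal"]
    if solved_by_rebuttal:
        return path + ["closed"]
    # Independence requirement: the appeal body must not be a party to the dispute.
    if appeal_body in parties:
        raise ValueError("the appeal must be handled by an independent third party")
    return path + [f"appeal: {appeal_body}", "closed"]


parties = {"Author", "Editor"}
print(dispute_path(parties, "Appeals Committee", solved_by_rebuttal=True))
print(dispute_path(parties, "Appeals Committee", solved_by_rebuttal=False))
```

Encoding the independence requirement as a hard check reflects the point that an appeal handled by the Editor who made the contested decision would offer little protection in scenarios 1 & 2.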

We believe that the exploitation of the PRP for comments by authors (Scenario 3) is quite common and not easily avoided. One possible approach is to establish a form of "commitment to publish if accepted" contract between the Author and the publication channel when a manuscript is submitted.

One possible way of mitigating Scenario 4, regarding back-channel communication between Editor and Author, is to establish ethical guidelines concerning such communication. Other possible disincentives are to consistently expose authors who attempt this, thereby putting their reputation at risk, or to refuse their manuscripts entry into the PRP altogether.

In the blind review practice, the Reviewer remains unknown to the Author. The community enforces moral incentives (Table 1), while the anonymous review practice removes the opportunity for the Reviewer to gain admiration, or suffer a loss of standing, from his review work. This practice also decreases accountability in the process. Being anonymous, the Reviewer is invisible to the community as a whole and is mainly held accountable to the Editor. In other words, the Reviewer has little upside from acting in a morally sound way, as few will know about it, while there is little perceived downside to acting immorally and selfishly. Anonymity may change the Reviewer's risk perception by removing his "skin in the game" (see [4]), allowing him to benefit and take risks while being shielded from major consequences; this relates directly to risk scenarios 5 & 6. This outcome is somewhat mitigated in the normative PRP, as there are usually three reviews, and the reviewers get to see each other's reviews once they have completed their own. Following the review, there is usually a discussion phase between the reviewers, during which the Editor/Program Committee Chair may intervene. Thus, the Reviewer's reputation is at stake, since he is visible to the other reviewers and the Editor.

Treatment of risks from blind review has been discussed by other authors; Groves [29] argues: "Open review at the BMJ currently means that all reviewers sign their reports, declare their competing interests, and desist from making additional covert comments to the editors". In addition, we suggest that, as a common courtesy, authors acknowledge by name the reviewers who make significant contributions, so that reviewers get recognition for their work. This measure is not without consequences, as junior reviewers may, for fear of repercussions, be reluctant to criticize senior academics. However, from a security perspective, opening up the process increases transparency and accountability for the participants and makes cheating more visible. We also perceive the additional overhead cost of such a risk treatment to be minor.

Identified risk scenarios 5–7 & 9 are all concerned with Reviewer behavior and performance. Garcia et al. [23] suggest monitoring and incentive contracts as a possible treatment of the agency problem in the Editor-Reviewer relationship. In addition, they suggest incentivizing reviewers through promises of future promotion opportunities, praise from peers, and more recognition. Praise and recognition from peers can be encouraged through low-cost measures such as "Best Reviewer Award"-type competitions.

Cooperating with suggested reviewers (Scenario 8) is hard to detect, because human networks are complex and communication through back-channels is impossible to monitor. The problem may be mitigated by anonymizing the author, but if the communication is already in place beforehand, the effect of this measure will be voided. In terms of incentives, a strong preventive moral disincentive is a possibility.

5.2. Experiences and Knowledge Gained on CIRA Performance

This research has provided experience with CIRA and with the steps necessary to adapt the method to a more complex case. BPM simplified the process of mapping basic strategies by providing a fixed scope, tasks, and decision points that the analyst can prioritize. Working on processes also provides a scoped context that, together with stakeholder analysis, helps deal with complexity. However, we also found that grouping too much could make the analyst miss important risks, such as when several actors in a stakeholder group can trigger actions that affect each other. We have also identified the need for a subjective vetting process for risks, as calculating incentives for all identified risks easily becomes too much work (see our approach in Table 4).

We found that CIRA worked well for RCA as a means to identify an incentive system state that incentivizes unwanted actions. CIRA also makes visible aspects that may not be obvious; consider Table 3, where the incentives for the Editor to report this incident are very weak. Were it not for the size of the fraud, the Editor would have had a strong incentive to handle this incident quietly, to avoid embarrassment and avoid putting his and the publication channel's reputation at risk.

The process output is one of the most important aspects of a business process, and quality reduction is an important risk when taking the BPM perspective; this perspective also provides a starting point for identifying undesirable events and undesirable incentive system states. However, BPM sequences also add another layer of complexity to the analysis, as actions could cause harm to process participants without risking the process output. We also found it difficult to express harm to process integrity and output, as this depends on the scale of cheating (e.g., whether one cheater among a hundred participants poses a threat to integrity). A weakness of the BPM perspective is that it only considers internal actors and not external threats [8]. The flowcharts are helpful in visualizing the process, but the model will never be a replica of reality, and relying solely on the flowchart model risks overlooking important risks.

An important aspect we discovered was that the analyst must consider two outcomes to obtain a complete risk perspective: committing a malicious strategy and being caught (downside), and getting away with it (upside); see Table 5.

CIRA is about stakeholder motivation arising from beliefs and perceptions. However, when considering the effect of strategies on the incentive system, counter-strategies enter the picture. Causal chains are inherently difficult to predict; a strategy may influence an opponent's UF directly, but may have a secondary impact on another UF. For example, will having a manuscript rejected influence the Author's funding and career? There are also instances where a harmful action may go unnoticed by its victims. Several conditional strategies are only available under specific circumstances, which makes them hard to capture in the initial identification of strategies. Examples are counter-strategies to malicious actions, such as whistleblowing. However, this moves beyond the limitations of the one-shot game approach and is an aspect that requires more research.

A weakness of CIRA is the volatility of the UFs, as the choice of UFs directly affects the risk profile. We found that some UFs for participating in peer review were obvious, while others were not, and individual UFs may change conditionally. Perhaps one of our most useful findings was the information obtained by manipulating UFs and the corresponding Wts and IVs during the main CIRA. This experimentation allows for the identification of risk situations and provides insight into risk indicators.
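The kind of experimentation described above resembles a sensitivity analysis. The sketch below assigns hypothetical numbers to the qualitative scales (High = 3, Medium = 2, Low = 1; Increase = +1, Neutral = 0, Decrease = -1), takes the Editor's rows from Table 5, varies only the weight of "Political Motivations", and reports the perceived detection probability at which rejecting a manuscript for political reasons stops paying off in expectation. Both the numeric mapping and the use of a detection probability are our assumptions rather than part of CIRA.

```python
# Hypothetical numeric mapping of CIRA's qualitative scales (our assumption).
WEIGHT = {"Low": 1, "Medium": 2, "High": 3}
DIRECTION = {"Increase": 1, "Neutral": 0, "Decrease": -1}

# Editor rows of Table 5: (utility factor, Wts, change if not detected, change if detected)
EDITOR_ROWS = [
    ("Reputation", "High", "Neutral", "Decrease"),
    ("Money/Funding", "Low", "Neutral", "Decrease"),
    ("Career", "Medium", "Neutral", "Decrease"),
    ("Process Efficiency", "Medium", "Neutral", "Decrease"),
    ("Political Motivations", "Medium", "Increase", "Decrease"),
]


def outcome_scores(rows, weight_overrides):
    """Net (not detected, detected) scores after overriding some UF weights."""
    undetected = detected = 0
    for uf, weight, if_undetected, if_detected in rows:
        w = WEIGHT[weight_overrides.get(uf, weight)]
        undetected += w * DIRECTION[if_undetected]
        detected += w * DIRECTION[if_detected]
    return undetected, detected


def break_even_detection(rows, weight_overrides):
    """Detection probability p at which (1 - p) * up + p * down == 0.

    Above this p the strategy no longer pays off in expectation; a result
    >= 1 means it pays off at any detection probability.
    """
    up, down = outcome_scores(rows, weight_overrides)
    if down >= up:
        raise ValueError("model assumes detection is worse than non-detection")
    if up <= 0:
        return 0.0  # not attractive even when undetected
    return up / (up - down)


for level in ("Low", "Medium", "High"):
    p = break_even_detection(EDITOR_ROWS, {"Political Motivations": level})
    print(f"Political Motivations weight {level}: break-even p = {p:.3f}")
```

In this toy model, raising the weight of the political motive raises the detection probability needed to deter the Editor (from 0.100 to 0.214), which is the sort of risk indicator that manipulating Wts can reveal.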

We also found that Maslow's hierarchy of needs provides a rule of thumb for determining general incentive strength and identifying risk situations. In addition, knowledge about basic incentives and enforcers provides additional insight into human factor risk. While researching these aspects, we identified the following risk indicators that can be applied when working with risks in incentive systems:

- Participants without skin in the game: they bear little personal downside and mainly see the upside of risk.

- Participants who are not visible to an incentive enforcer.

- Participants who perceive threats to their basic needs (Maslow).

- Participants who are incentivized by one or more of Pipkin's motivators [15].
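The four indicators above can be applied as a simple checklist during an assessment. In the sketch below, the `Participant` fields, the helper name, and both example profiles are hypothetical; the profile values loosely follow the discussion of blind review and publish-or-perish pressure but are assumptions, not results of the analysis.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical profile of a process participant (our own fields)."""
    role: str
    has_skin_in_the_game: bool      # bears personal downside from misconduct
    visible_to_enforcer: bool       # visible to whoever enforces the incentives
    basic_needs_threatened: bool    # e.g., job security at stake (Maslow)
    pipkin_motives: set[str] = field(default_factory=set)


def risk_indicators(p: Participant) -> list[str]:
    """Return which of the four risk indicators apply to a participant."""
    flags = []
    if not p.has_skin_in_the_game:
        flags.append("no skin in the game")
    if not p.visible_to_enforcer:
        flags.append("not visible to incentive enforcer")
    if p.basic_needs_threatened:
        flags.append("perceived threat to basic needs")
    if p.pipkin_motives:
        flags.append("Pipkin motive(s): " + ", ".join(sorted(p.pipkin_motives)))
    return flags


# Illustrative profiles; the attribute values are assumptions.
anonymous_reviewer = Participant("Reviewer", has_skin_in_the_game=False,
                                 visible_to_enforcer=False,
                                 basic_needs_threatened=False)
pressured_author = Participant("Author", has_skin_in_the_game=True,
                               visible_to_enforcer=True,
                               basic_needs_threatened=True,
                               pipkin_motives={"financial", "business"})

for person in (anonymous_reviewer, pressured_author):
    print(person.role, risk_indicators(person))
```

A flagged participant is not necessarily a cheater; the checklist only points the analyst to where a full CIRA is most likely to be worthwhile.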

5.3. Assumptions and Limitations

This research builds on several assumptions and has limitations. First, the normative PRP assessed in this article is that of a non-profit academic conference/journal, as described by Hames [10]. We recognize that this is a best-case representation of the PRP and that practice varies across disciplines and publication channels. This work provides insight into how and why certain interactions between stakeholders pose risks, but it does not cover all risks posed by stakeholders in the PRP.

There are limitations to the generalizability of our findings, for example due to cultural aspects. A model will never be an exact representation of reality, and we recognize that elements that may influence the risk profile, such as the number of people involved in the process, will vary with the size of the publication venue.

We did not consider external attackers or include positive/opportunity risk as part of this risk assessment. The focus of this research has been on identifying and analyzing human factor risks; it therefore does not conclude whether risks are acceptable. Incentives and UFs will also depend on a variety of factors, and we therefore assume that the stakeholders in our model act to optimize their self-interest. Another limitation of this work is that we cannot provide exact estimates of how taxing a suggested risk treatment would be for the process, as this will vary with both publication channel size and process design.

6. Future Work

During this work we have identified the following issues that warrant further investigation:

The CIRA method (with extensions) still needs further validation through case studies. In this article, we considered a simple system with five stakeholder groups; applying CIRA in more complex environments would provide useful input on the method's performance. Heuristics for managing human factors also require further exploration and validation.

This article outlined the basic incentive classes; however, more detailed risk response options are required for dealing with risks in incentive systems. A related topic that would be interesting to research is the fragility of incentive systems, together with the effects of risk treatments and the strength of different incentive controls. Such work could prove useful in, e.g., building security cultures and mitigating risks from incentives.

A more complex problem to pursue is exploring action trees and second-order actions with incentives, such as dynamic states and event trees, where game-theoretic strategies and counter-strategies are considered with respect to incentives and risk.

RCA of incentives is an area that has shown potential in this study. The traditional approach to incident handling is to patch the exploited security vulnerability rather than to modify the incentives behind triggering the action. Applying CIRA as a tool for RCA can provide insight into the underlying incentive systems and knowledge about how to avoid similar incidents in the future. We consider CIRA as an RCA tool for preventing future incidents an important area for future research.

7. Conclusions

This work has provided an approach to understanding incentives and shown how it provides additional insight into risks in a complex process. This research has demonstrated the usefulness of CIRA as a tool for root cause analysis of incidents. The conclusion from the RCA of the Peer Review Ring incident was that there was a potential imbalance in the incentive system, in which the Author perceives his exposure from cheating as having much more upside than downside. By analyzing this incident in terms of incentives, we found that the Author's incentive for having work published is likely too strong, while the perceived disincentives, such as loss of reputation, were too weak. The proposed treatments for this incident in terms of incentives centered on introducing additional risk to the attacker's utility factors, making the perceived risk from cheating more severe.

Our approach for the analysis of incentives in the normative PRP combined several tools, including stakeholder analysis and business process modeling. This research identified nineteen risks, of which we analyzed nine with CIRA. One of the areas of concern addressed in this research was the vulnerability of the PRP to political and personal motives, and the potential lack of disincentives. In addition, this research analyzed the risks arising from incentives of friendship and acquaintances in the PRP, as well as general incentive risks in the PRP. Based on this work, we identified external risk factors influencing the integrity of the PRP: as it moves from being small and transparent to large and complex, the scientific community must deal with additional risks arising from increased complexity, reduced transparency, and greater competitiveness. The suggested overall treatment strategy was to increase the robustness of the process, in terms of more transparency and accountability, together with incentivizing process participants through a variety of risk treatments.