Affective Congruence between Sound and Meaning of Words Facilitates Semantic Decision

Abstract

1. Introduction

2. Materials and Methods

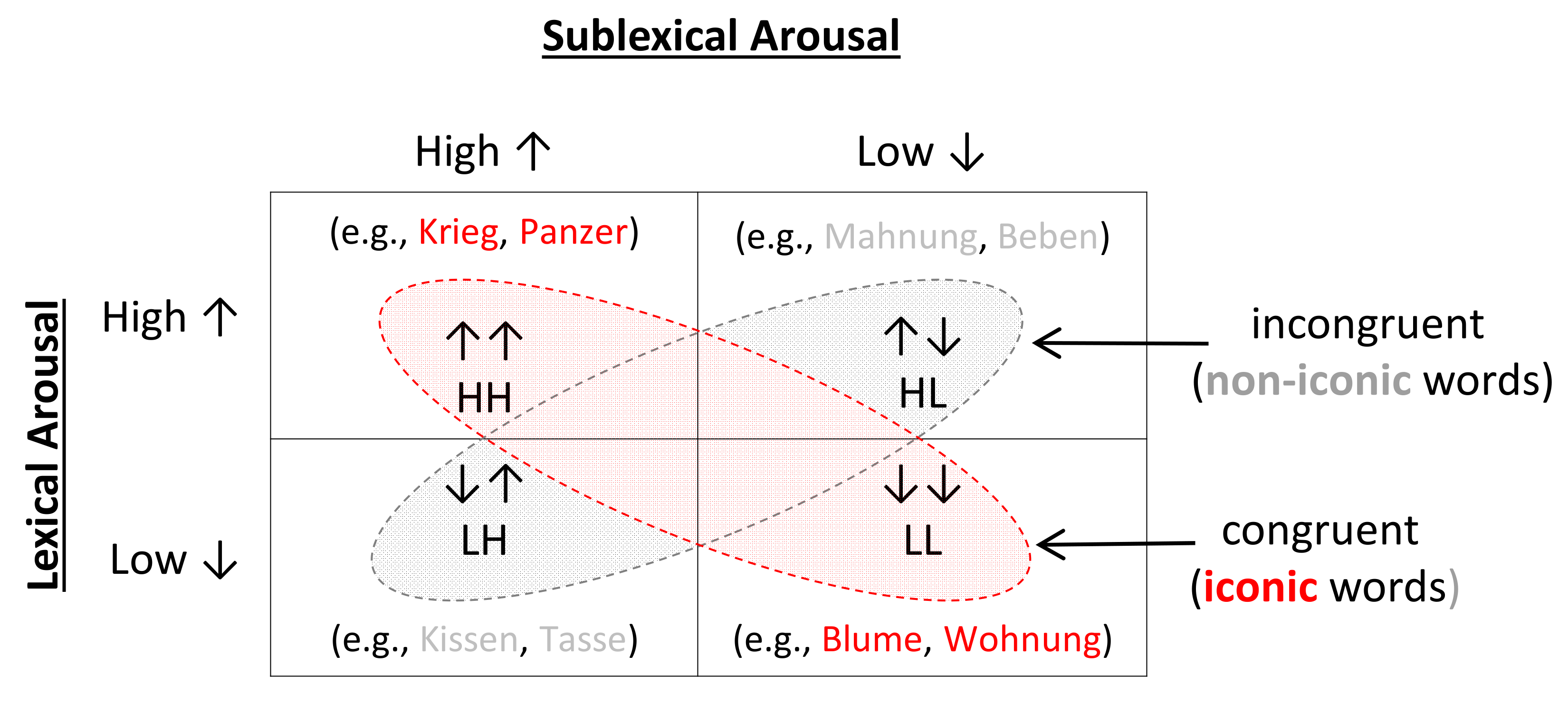

2.1. Stimuli

2.2. Participants

2.3. Procedure

2.4. Analysis

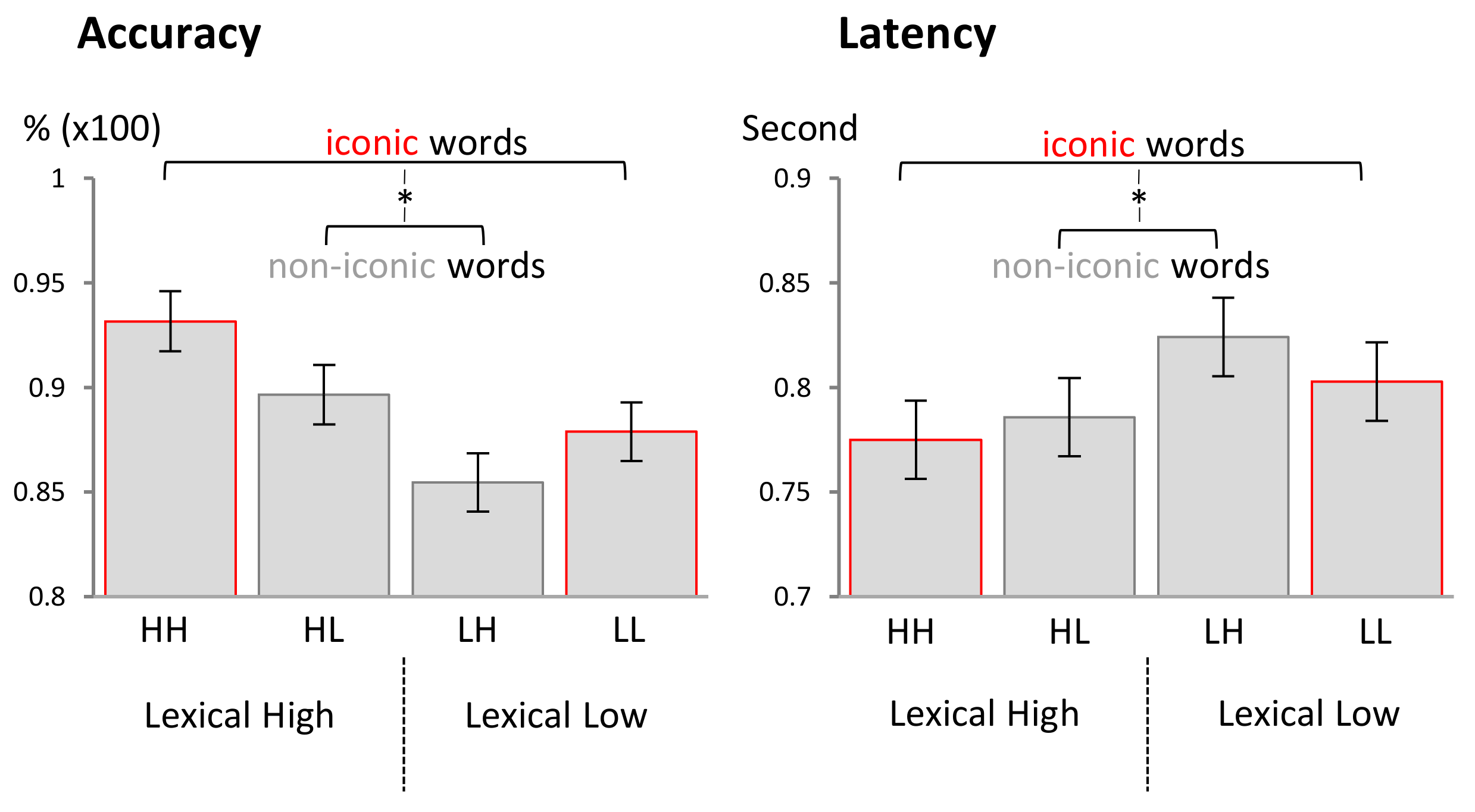

3. Results

4. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Variable | HH (M) | HH (SD) | HL (M) | HL (SD) | LH (M) | LH (SD) | LL (M) | LL (SD) | Inferential Statistics |
|---|---|---|---|---|---|---|---|---|---|
| Lexical Arousal | 3.86 | 0.43 | 3.75 | 0.35 | 2.13 | 0.28 | 2.17 | 0.32 | F(3,156) = 301, p < 0.0001 |
| Lexical Valence | −1.59 | 0.66 | −1.44 | 0.63 | 1.03 | 0.67 | 1.04 | 0.74 | F(3,156) = 190, p < 0.0001 |
| Sublexical Arousal | 3.00 | 0.25 | 2.17 | 0.15 | 2.97 | 0.22 | 2.17 | 0.14 | F(3,156) = 230, p < 0.0001 |
| Word Frequency | 0.97 | 0.67 | 0.88 | 0.75 | 0.90 | 0.74 | 0.93 | 0.66 | F(3,156) = 0.11, p = 0.95 |
| Imageability Rating | 4.44 | 1.27 | 4.31 | 1.08 | 4.32 | 1.43 | 4.27 | 1.37 | F(3,156) = 0.12, p = 0.94 |
| Number of Syllables | 2.13 | 0.56 | 2.08 | 0.41 | 2.15 | 0.57 | 2.08 | 0.47 | F(3,156) = 0.21, p = 0.88 |
| Number of Letters | 6.05 | 1.22 | 6.05 | 1.20 | 6.08 | 1.40 | 6.00 | 1.30 | F(3,156) = 0.02, p = 0.99 |
| Number of Phonemes | 5.53 | 1.20 | 5.33 | 1.02 | 5.45 | 1.22 | 5.20 | 1.07 | F(3,156) = 0.64, p = 0.59 |
| Orth-Neighbors | 1.40 | 1.69 | 1.08 | 1.79 | 1.45 | 2.00 | 1.75 | 2.06 | F(3,156) = 0.85, p = 0.46 |
| Orth-Neighbors-HF | 0.50 | 0.91 | 0.43 | 1.26 | 0.48 | 0.93 | 0.50 | 0.99 | F(3,156) = 0.04, p = 0.98 |
| Orth-Neighbors-Sum-F | 0.72 | 1.06 | 0.49 | 0.82 | 0.69 | 1.05 | 0.80 | 0.88 | F(3,156) = 0.76, p = 0.51 |
| Phon-Neighbors | 1.75 | 2.51 | 1.98 | 3.04 | 1.93 | 2.58 | 2.35 | 3.34 | F(3,156) = 0.30, p = 0.82 |
| Phon-Neighbors-HF | 0.55 | 0.88 | 0.63 | 1.76 | 0.55 | 1.08 | 0.60 | 1.24 | F(3,156) = 0.03, p = 0.99 |
| Phon-Neighbors-Sum-F | 0.79 | 1.02 | 0.69 | 1.01 | 0.67 | 0.92 | 0.88 | 0.88 | F(3,156) = 0.40, p = 0.75 |

| Term | Response Accuracy: Estimate | Response Accuracy: Std. Error | Response Accuracy: t | Response Accuracy: p | Response Latency: Estimate | Response Latency: Std. Error | Response Latency: t | Response Latency: p |
|---|---|---|---|---|---|---|---|---|
| Intercept | 0.903 | 0.010 | 90.07 | <0.0001 | 0.797 | 0.017 | 45.47 | <0.0001 |
| Lexical arousal | 0.018 | 0.005 | 3.55 | 0.0005 | −0.016 | 0.003 | −4.32 | <0.0001 |
| Sublexical arousal | 0.002 | 0.005 | 0.56 | 0.5784 | 0.002 | 0.003 | 0.69 | 0.4899 |
| Lexical × sublexical | 0.013 | 0.005 | 2.55 | 0.0120 | −0.008 | 0.003 | −2.11 | 0.0369 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aryani, A.; Jacobs, A.M. Affective Congruence between Sound and Meaning of Words Facilitates Semantic Decision. Behav. Sci. 2018, 8, 56. https://doi.org/10.3390/bs8060056
