Evaluating a Custom Chatbot in Undergraduate Medical Education: Randomised Crossover Mixed-Methods Evaluation of Performance, Utility, and Perceptions

Abstract

1. Introduction

- Consistent with the Technology Acceptance Model (TAM), participants will report significantly higher scores on the measured perception parameters (such as ease of use, satisfaction, engagement and perceived usefulness) when using the LLM chatbot than when using conventional study tools.

- Informed by Cognitive Load Theory (CLT), use of the LLM chatbot will result in higher single best answer (SBA) performance scores than use of conventional tools, reflecting the capacity of LLMs to reduce extraneous cognitive load.

- According to Dual-Process Theory, chatbot use will primarily enhance performance on questions targeting rapid factual recall and boost confidence in applying information (System 1 processing), but will not significantly enhance ratings of content depth or critical thinking (System 2 processing).

- Based on Epistemic Trust Theory, students will demonstrate high functional trust in chatbot outputs (accuracy, reliability) while also expressing reservations about transparency and alignment with curricular expectations.

5. How do medical students perceive the usefulness and usability of LLM chatbots compared to conventional study tools?

6. What are students’ experiences with LLM chatbots in supporting their learning, engagement, and information retention?

7. How do students perceive the limitations or challenges of using LLM chatbots for medical studies?

8. What changes, if any, do students report in their attitudes toward AI in medical education after using the chatbot?

9. To what extent do students feel the chatbot aligns with their curriculum and supports deeper learning and critical thinking?

2. Materials and Methods

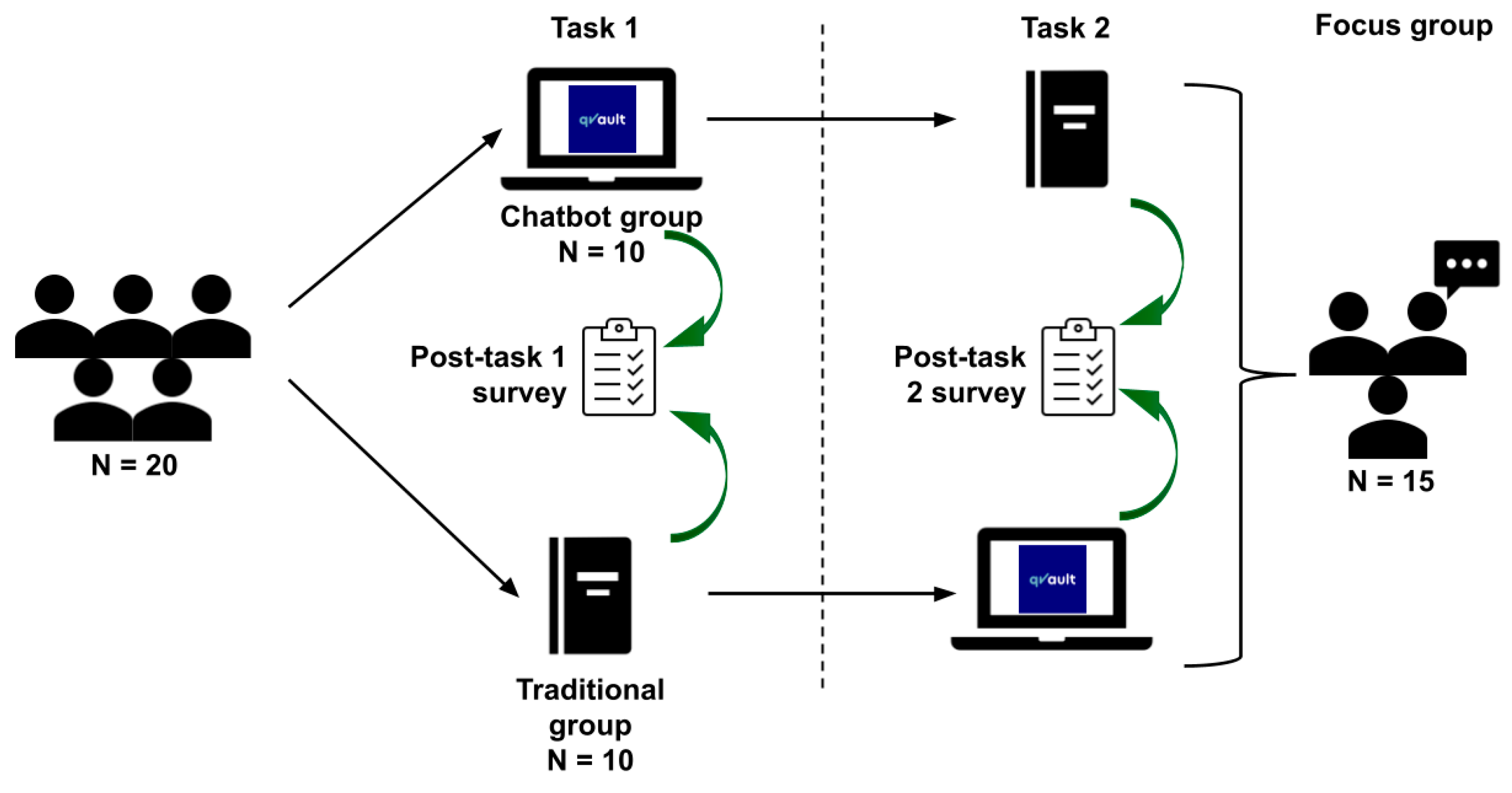

2.1. Study Design

2.2. Participants and Setting

2.3. Study Materials

2.3.1. Conventional Study Materials

- Clinical Anatomy: Applied Anatomy for Students and Junior Doctors (Ellis & Mahadevan, 2019).

- Clinically Oriented Anatomy (Moore et al., 2017).

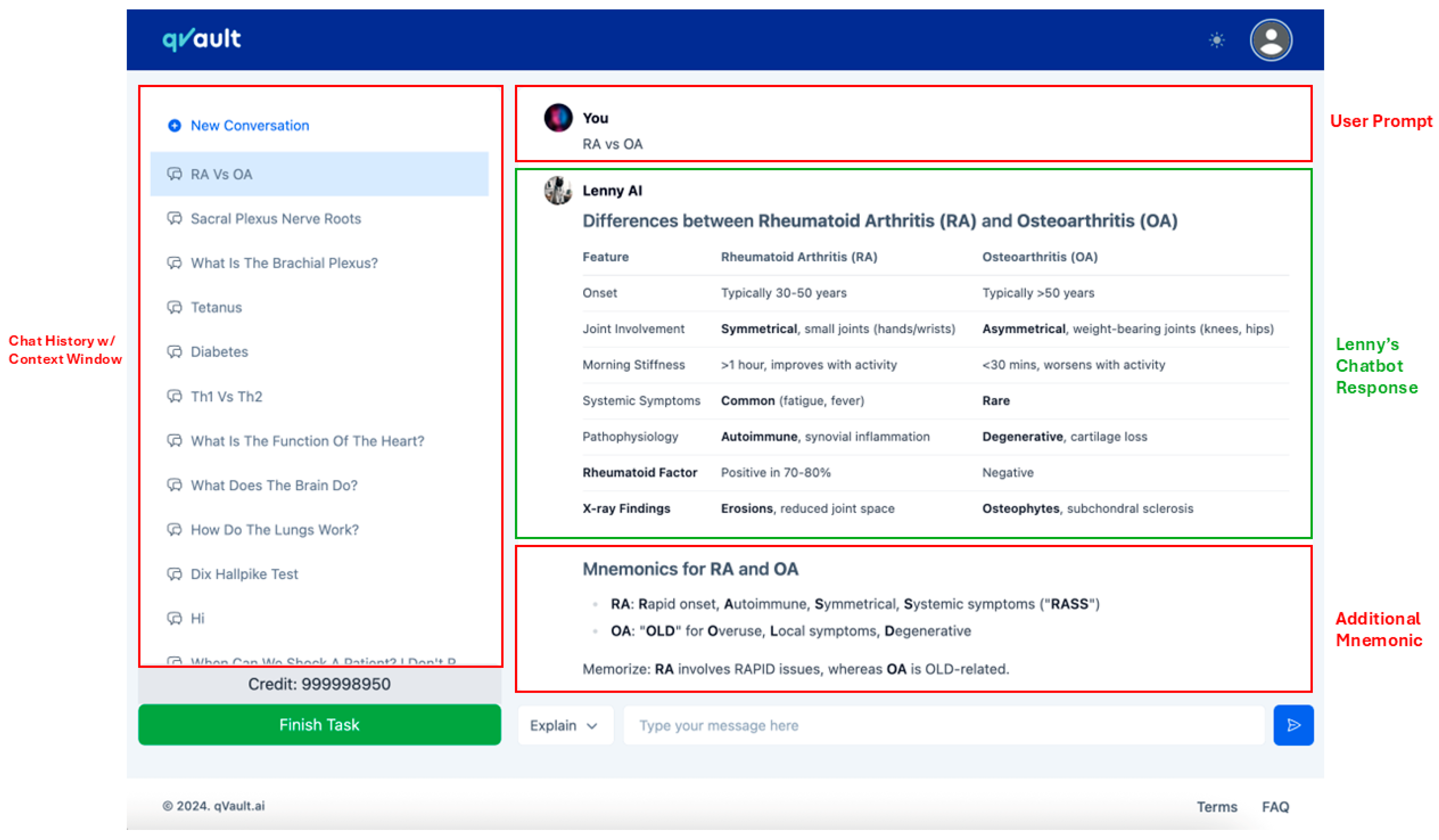

2.3.2. LLM Chatbot: Lenny AI

2.4. Study Procedures

2.4.1. Task 0: Baseline AI Perception Assessment

2.4.2. Task 1 and Task 2: Randomised Crossover Academic Tasks

2.4.3. Post-Task Questionnaire

2.4.4. Focus Group Discussion

- Experience with AI

- Changes in perceptions of AI

- Comparative effectiveness of AI tools

- Impact of AI on learning

- Usability and engagement with AI

- Challenges in using AI

- Potential future influences of AI

- Perceived role of AI in medical education

- Suggestions for improving Lenny AI

2.5. Blinding and Data Anonymisation

2.6. Data Analysis

Quantitative Analysis

- Between-arm performance in Task 1 (Lenny AI vs. conventional tools)

- Between-arm performance in Task 2 (conventional tools vs. Lenny AI)

- Within-arm performance change in Arm 1 (Lenny AI → conventional tools)

- Within-arm performance change in Arm 2 (conventional tools → Lenny AI)

2.7. Qualitative Analysis
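The abbreviations list includes Cohen’s kappa (κ), which suggests that agreement between coders was quantified at some stage of the analysis. Assuming two coders each assigned one theme label per focus-group excerpt, κ compares their observed agreement p_o with the agreement p_e expected by chance from each coder’s label frequencies: κ = (p_o − p_e)/(1 − p_e). The function below is a hypothetical illustration of that calculation, not the authors’ procedure, and the labels in the usage example are invented (they borrow theme names from Section 3.3).

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(coder_1: Sequence[Hashable], coder_2: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders assigning one nominal label per item.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected by chance from each coder's label frequencies.
    """
    if len(coder_1) != len(coder_2) or not coder_1:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(coder_1)
    p_o = sum(a == b for a, b in zip(coder_1, coder_2)) / n
    freq_1, freq_2 = Counter(coder_1), Counter(coder_2)
    # Chance agreement: sum over labels of P(coder 1 uses it) * P(coder 2 uses it).
    p_e = sum(freq_1[label] * freq_2[label] for label in freq_1) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when both coders use one identical label")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical labels for four excerpts (not study data).
    coder_1 = ["Speed and Efficiency", "Speed and Efficiency", "Curriculum Fit", "Curriculum Fit"]
    coder_2 = ["Speed and Efficiency", "Curriculum Fit", "Curriculum Fit", "Curriculum Fit"]
    print(cohens_kappa(coder_1, coder_2))  # 0.5
```

Here the coders agree on three of four excerpts (p_o = 0.75), chance agreement is 0.5, so κ = 0.5. If excerpts could carry several themes, a per-theme κ on binary present/absent codes would be a common alternative.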

3. Results

3.1. Baseline Perceptions

3.2. Quantitative Findings

- Ease of Use: Participants rated the chatbot as significantly easier to use than traditional materials (Arm 1: Mean Difference (MD) = 1.40, p = 0.040; Arm 2: MD = 1.20, p = 0.030). However, the change relative to baseline expectations did not reach statistical significance (Arm 1: p = 0.170; Arm 2: p = 0.510).

- Satisfaction: Satisfaction scores were significantly higher in the chatbot condition (Arm 1: MD = 1.40, p = 0.030; Arm 2: MD = 1.10, p = 0.037).

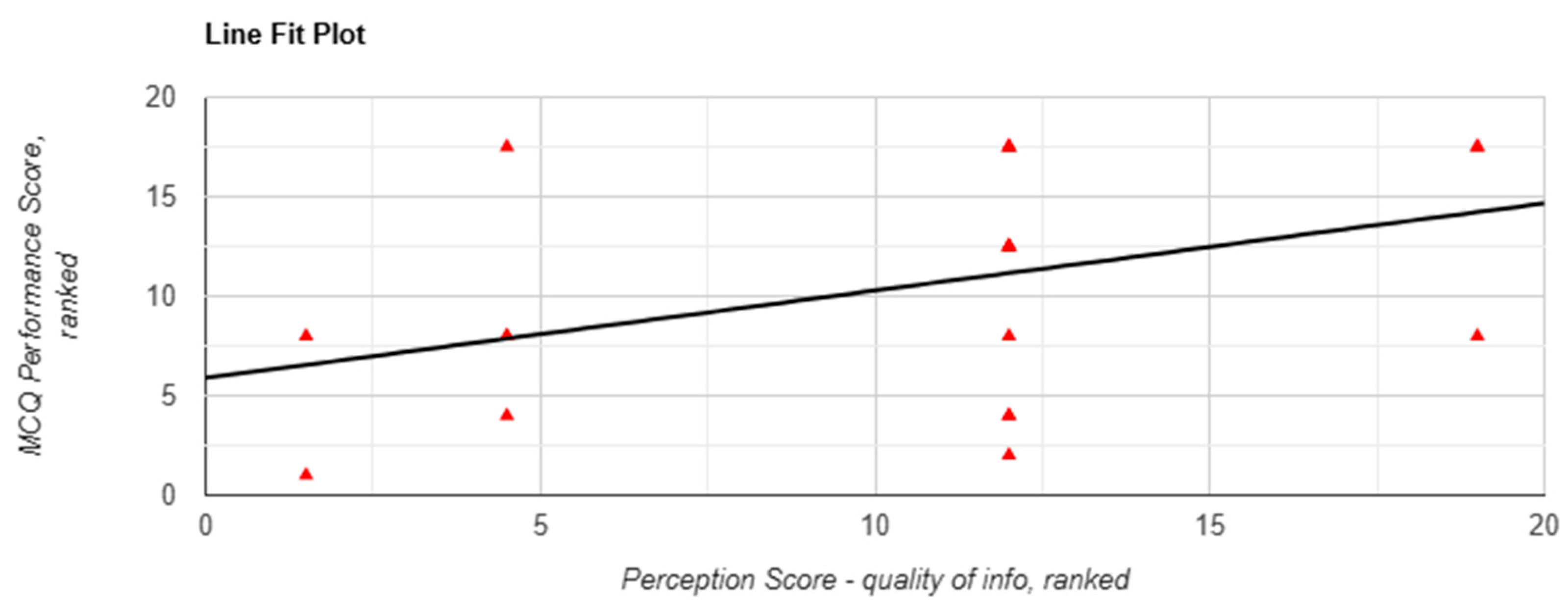

- Quality of Information: Both arms rated the chatbot more highly for information quality (Arm 1: MD = 1.20, p = 0.050; Arm 2: MD = 1.00, p = 0.050). Notably, Arm 1 participants reported a significant improvement in their perception of information quality from baseline (3.40 to 4.30; p = 0.020).

- Ease of Understanding: The chatbot condition was rated more favourably for ease of understanding; both arms gave higher scores to the question “How easy was it to understand the information provided by your given learning method?” (Arm 1: MD = 1.30; Arm 2: MD = 1.40; both p = 0.010).

- Engagement: Chatbot use was associated with significantly higher engagement scores (Arm 1: MD = 1.60, p = 0.010; Arm 2: MD = 1.50, p = 0.005).

- Efficiency:
  - Arm 1 (chatbot-first) reported significantly greater perceived efficiency whilst completing Task 1 (MD = 1.70, 4.40 vs. 2.70; p = 0.020).
  - Arm 2 (conventional-first) showed no significant change (MD = 0.60, 3.60 vs. 3.00; p = 0.220).

- Confidence in Applying Information:
  - Arm 1: Participants felt significantly more confident applying information learned from the chatbot (MD = 0.90, 3.40 vs. 2.50; p = 0.020).
  - Arm 2: The increase was smaller and did not reach statistical significance (MD = 0.80, 3.30 vs. 2.50; p = 0.060).

- Perceived Performance Compared to Usual Methods:
  - Arm 1: The difference was not statistically significant (MD = 0.80; p = 0.110).
  - Arm 2: Participants reported a significant increase in perceived performance when using the chatbot (MD = 1.00, 3.50 vs. 2.50; p = 0.040).

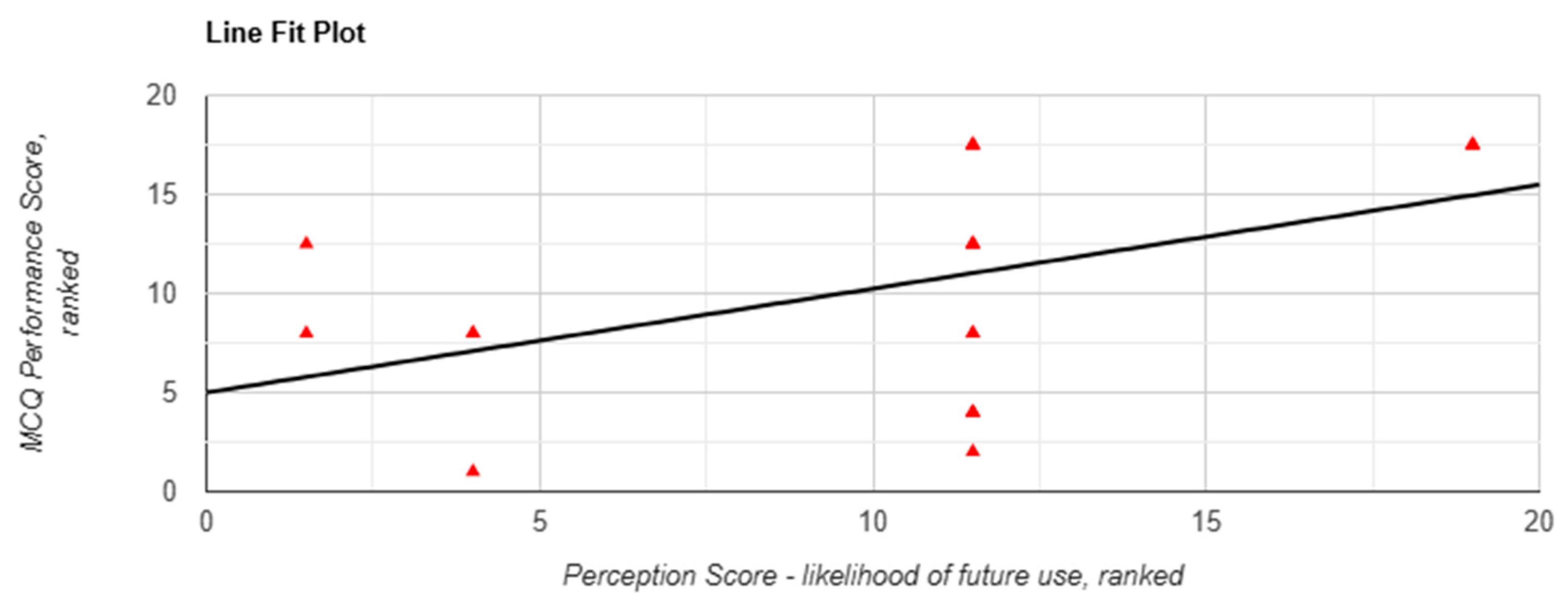

- Likelihood of Future Use:
  - Arm 1: Participants reported a significantly greater intention to use chatbots in future learning (MD = 1.20; p = 0.020).
  - Arm 2: The increase did not reach statistical significance (MD = 0.90; p = 0.060).

3.3. Thematic Analysis

3.3.1. Speed and Efficiency

3.3.2. Depth and Complexity

3.3.3. Functional Use Case and Focused Questions

3.3.4. Accuracy and Credibility

3.3.5. Openness to AI as a Learning Tool

3.3.6. Curriculum Fit

3.3.7. Further Development and Technical Limitations

4. Discussion

4.1. The Efficiency-Depth Paradox: When Speed Compromises Comprehension

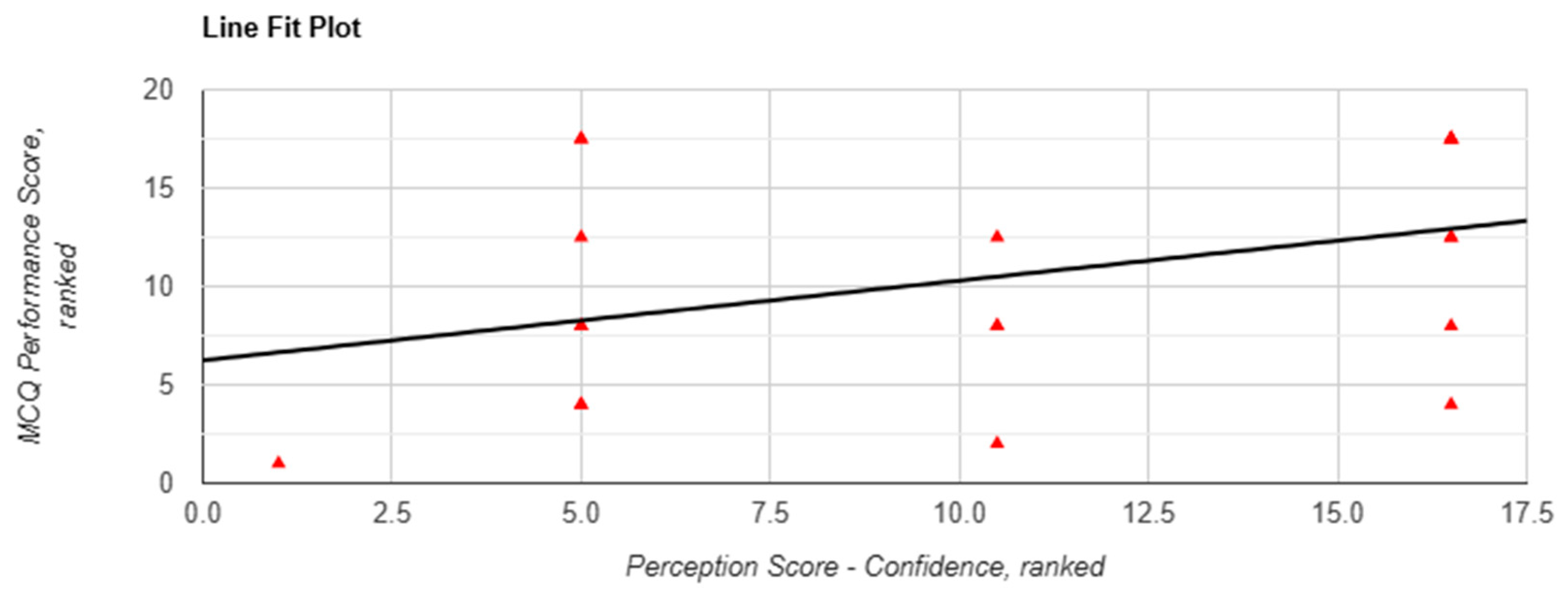

4.2. Confidence Versus Competence: The Illusion of Mastery

4.3. Transparency and Traceability: The Foundations of Trust in AI Learning Tools

- Citation Toggles: Allowing users to reveal underlying references where applicable, supporting source traceability.

- Uncertainty Indicators: Signalling lower-confidence outputs to prompt additional verification.

- Expandable Explanations: Offering tiered content depth, enabling students to shift from summary to substantiated detail on demand.

4.4. No Consistent Performance Gains from Chatbot Use

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbrev. | Full Form |
| --- | --- |
| AI | Artificial Intelligence |
| AKT | Applied Knowledge Test |
| CI | Confidence Interval |
| CLT | Cognitive Load Theory |
| DBR | Design-Based Research |
| GDPR | General Data Protection Regulation |
| KCL | King’s College London |
| LLM(s) | Large Language Model(s) |
| LMIC(s) | Low- and Middle-Income Country(ies) |
| M | Mean |
| MD | Mean Difference |
| n | Sample size |
| OSCE(s) | Objective Structured Clinical Examination(s) |
| p | p-value |
| RAG | Retrieval-Augmented Generation |
| r | Correlation coefficient (effect size) |
| REMAS | Research Ethics Management Application System |
| SAQ(s) | Short Answer Question(s) |
| SBA | Single Best Answer |
| SD | Standard Deviation |
| SPSS | Statistical Package for the Social Sciences |
| TAM | Technology Acceptance Model |
| T0, T1, T2 | Task 0 (baseline), Task 1, Task 2 timepoints |
| UI | User Interface |
| Z | Z-statistic |
| κ (kappa) | Cohen’s kappa coefficient |

Appendix A. Task 0 (Baseline) Questionnaire

- What is your KCL email address (name@kcl.ac.uk)?
- Q1. What is your year group? Options: A100 Year 1; EMDP Year 1A; EMDP Year 1B; A100 Year 2; EMDP Year 2; Other…
- Q2. Assigned ID number
- Q3. What is your age?
- Q4. What is your gender? Options: Male; Female; Prefer not to say; Other…
- Q5. Are you a local or international student? Options: Local; International
- Q6. Have you used AI LLM Chatbots to help you study? (e.g., clarifying content, coming up with points, explanations, writing content, etc.) Options: Yes; No
- Q7. How long have you used AI LLM Chatbots? Options: 1–3 months; 4–6 months; 7–9 months; 10–12 months; Under 2 years; Over 2 years
- Q8. How frequent do you use AI LLM Chatbots per week for studying? Options: 1 time per week; 2 times per week; 3 times per week; 4 times per week; 5 times per week; 6 times per week; 7 times per week (every day); Never/Rarely
- Q9. Which AI-powered chatbots have you used? (Select all that apply) Options: ChatGPT; Google Gemini; Microsoft Copilot / Bing AI; Meta’s Llama; Other…
- Q10. Which of the following best describes how you use LLM chatbots in your studies? (Select all that apply) Options: Understanding complex concepts; Generating study materials (e.g., summaries, flashcards); Assisting with problem-solving or assignments; Reviewing or editing written work; Writing essays/paragraphs; Preparing for exams; Other…
- Q11. How confident are you in your ability to use AI-powered chatbots effectively? Scale: 1 (Not confident at all) to 5 (Very Confident)
- Q12. Please indicate your level of agreement with the following statements. Scale: Strongly Disagree; Disagree; Neutral; Agree; Strongly Agree
  - (a) LLM chatbots are helpful in enhancing my learning experience.
  - (b) LLM chatbots provide accurate information relevant to my studies.
  - (c) Using LLM chatbots has improved my academic performance.
  - (d) I find LLM chatbots easy to use.
  - (e) I am concerned about the reliability of information provided by LLM chatbots.
  - (f) LLM chatbots encourage me to think critically about the information I receive.
  - (g) I prefer traditional study resources over LLM chatbots.
  - (h) LLM chatbots save me time in completing academic tasks.
  - (i) I am worried about potential academic integrity issues when using LLM chatbots.
  - (j) LLM chatbots will play a significant role in the future of medical education.
- Q13. How do you perceive the quality of responses provided by LLM chatbots? Scale: 1 (Very Poor) to 5 (Very Good)
- Q14. What limitations do you perceive in using AI-powered chatbots? (Select all that apply) Options: Inaccuracy of information; Difficulty prompting; Difficulty in understanding complex queries; Traditional Resources, including Google Search, are more than enough; Lack of creativity; Lack of depth in responses; User interface or formatting is confusing; Unclear Wordings; Other…
- Q15. To what extent do you believe LLM chatbots can replace traditional learning resources (e.g., textbooks, lectures)? Scale: 1 (Not at all) to 5 (Completely)
- Q16. After using AI LLM Chatbots, how confident do you feel in applying the information learned? Scale: 1 (Not confident at all) to 5 (Very confident)
- Q17. What potential benefits do you see in integrating LLM chatbots into medical education? (Select all that apply) Options: Personalized learning experiences; Immediate access to information; Enhanced understanding of complex topics; Improved study efficiency; Development of critical thinking skills; Assistance with problem-solving; Support for collaborative learning; Other…
- Q18. In your opinion, how important is it for medical students to be proficient in using AI tools? Scale: 1 (Not Important) to 5 (Extremely Important)
- Q19. How likely are you to continue using AI LLM Chatbots in your future studies? Scale: 1 (Not likely at all) to 5 (Very likely)

Appendix B. Task 1 Questions

Part 1: Single Best Answer (SBA) Questions

- Question 1: A 24-year-old male presents to the emergency department after a motorcycle accident. He is unable to abduct his shoulder and has significant weakness when trying to externally rotate the arm. Physical examination reveals an inability to initiate shoulder abduction and a loss of sensation over the lateral aspect of the shoulder. Which part of the brachial plexus is most likely injured?
  - A. Suprascapular and axillary nerves; B. Long thoracic nerve; C. Medial cord; D. Musculocutaneous nerve; E. Radial nerve
- Question 2: A 35-year-old construction worker reports weakness in his hand after a fall from a ladder. On examination, he exhibits wrist drop and weakened extension of the fingers and elbow. Sensory loss is noted on the posterior aspect of the forearm and dorsum of the hand. Which part of the brachial plexus is likely affected?
  - A. Lateral cord; B. Medial cord; C. Posterior cord; D. Suprascapular nerve; E. Ulnar nerve
- Question 3: A 19-year-old college athlete presents with numbness and tingling along the medial side of the forearm and hand following an incident while lifting weights. Examination reveals weakness in flexing the fourth and fifth digits and diminished hand grip strength. Which nerve of the brachial plexus is most likely compressed or damaged?
  - A. Axillary nerve; B. Long thoracic nerve; C. Median nerve; D. Musculocutaneous nerve; E. Ulnar nerve
- Question 4: A 32-year-old woman visits the clinic complaining of difficulty gripping objects and numbness along the lateral aspect of her palm and first three fingers. Upon examination, you notice she cannot make a fist, as the index and middle fingers remain extended while attempting to close her hand. What condition is she most likely suffering from?
  - A. Axillary nerve damage; B. Carpal tunnel syndrome; C. Hand of benediction; D. Radial nerve palsy; E. Thoracic outlet syndrome
- Question 5: A 40-year-old office worker presents with tingling and numbness in his thumb, index, and middle fingers, especially at night. He also reports weakness when trying to perform pinching motions, such as holding a pen or turning a key. Physical examination shows atrophy of the thenar eminence. Which of the following is the most likely diagnosis?
  - A. Carpal tunnel syndrome; B. Cubital tunnel syndrome; C. Hand of benediction; D. Klumpke’s palsy; E. Thoracic outlet syndrome
- Question 6: Which of the following signs is most consistent with cubital tunnel syndrome?
  - A. Atrophy of the hypothenar and interosseous muscles; B. Inability to extend the wrist, resulting in a wrist drop; C. Loss of sensation over the lateral three and a half digits; D. Weakness in shoulder abduction and external rotation; E. Weakness in elbow flexion and forearm supination
- Question 7: A 25-year-old athlete presents with weakness when abducting the shoulder and externally rotating the arm. On examination, there is a notable flattening of the shoulder contour, and sensation is diminished over the lateral aspect of the upper arm. Which of the following clinical conditions is most likely?
  - A. Erb-Duchenne palsy; B. Klumpke’s palsy; C. Radial nerve palsy; D. Rotator cuff tear; E. Shoulder dislocation-associated nerve injury

Part 2: Short Answer Questions

- Question 1: What are the five terminal branches of the brachial plexus?
- Question 2: Which nerve of the brachial plexus is responsible for innervating the biceps brachii muscle?
- Question 3: A 34-year-old cyclist falls off his bike and lands on his outstretched arm. He presents with weakness in shoulder abduction, elbow flexion, and loss of sensation over the lateral aspect of the forearm. Which part of the brachial plexus is most likely affected?
- Question 4: A 50-year-old patient complains of difficulty with wrist and finger extension, resulting in a characteristic wrist drop. On examination, there is also numbness over the posterior aspect of the forearm and dorsum of the hand. Which nerve of the brachial plexus is most likely involved?
- Question 5: A 27-year-old weightlifter experiences pain and weakness when trying to lift his arm above his head. On examination, you notice scapular winging when he pushes against a wall. What condition related to the brachial plexus is most likely causing these symptoms?
- Question 6: A newborn is delivered via difficult labor and presents with a “claw hand” deformity affecting the wrist and fingers. The infant also exhibits weakness in the intrinsic muscles of the hand. Which condition related to the brachial plexus is most likely the diagnosis?
- Question 7: A 45-year-old man presents with a history of numbness and tingling in the fourth and fifth fingers, along with weakness in finger abduction and adduction. He also has atrophy of the hypothenar muscles. What condition related to the brachial plexus could explain these findings?

Appendix C. Task 2 Questions

Part 1: Single Best Answer (SBA) Questions

- Question 1: Which nerve, originating from the lumbar plexus, is responsible for innervating the quadriceps muscle group?
  - A. Femoral nerve; B. Obturator nerve; C. Pudendal nerve; D. Sciatic nerve; E. Superior gluteal nerve
- Question 2 (this question is cancelled): A 50-year-old woman presents with difficulty climbing stairs and standing up from a seated position. She also reports weakness in hip extension. Which nerve is most likely affected?
  - A. Femoral nerve; B. Inferior gluteal nerve; C. Obturator nerve; D. Sciatic nerve; E. Superior gluteal nerve
  - Correct answer: B. Inferior gluteal nerve
- Question 3: A 45-year-old man reports pain radiating from his lower back to the posterior aspect of his thigh and down to his calf and foot. He also has difficulty with knee flexion and dorsiflexion of the foot. Which nerve is most likely implicated in this case?
  - A. Femoral nerve; B. Inferior gluteal nerve; C. Obturator nerve; D. Sciatic nerve; E. Superior gluteal nerve
- Question 4: A 36-year-old woman presents to the clinic with a complaint of difficulty walking, particularly when stepping out of a car. On examination, she has weakness in thigh adduction and a sensory deficit over the medial aspect of her thigh. She denies any back pain. What nerve is most likely impaired?
  - A. Common peroneal nerve; B. Femoral nerve; C. Inferior gluteal nerve; D. Obturator nerve; E. Tibial nerve
- Question 5: A 45-year-old woman presents to the emergency department with acute onset of severe pain in the left lower limb, which started while she was climbing stairs. On examination, she has a palpable, tender mass in the popliteal fossa, and the pain increases with passive dorsiflexion of the foot. The patient has a history of chronic venous insufficiency. Which of the following conditions is the most likely cause of her symptoms?
  - A. Achilles tendon rupture; B. Deep vein thrombosis; C. Femoral hernia; D. Popliteal artery aneurysm; E. Ruptured Baker’s cyst
- Question 6: Which of the following signs or symptoms is most characteristic of Charcot-Marie-Tooth disease?
  - A. High-stepping gait with foot drop; B. Claudication pain relieved by rest; C. Glove and stocking sensory loss; D. Severe pain and swelling after trauma; E. Sudden, sharp back pain radiating down the leg
- Question 7: A 72-year-old man presents with pain in the left hip that radiates down the lateral aspect of the thigh to the knee. He has difficulty walking and frequently loses his balance. On examination, there is weakness in hip abduction, and Trendelenburg’s sign is positive on the left side. Which of the following is the most likely cause of his symptoms?
  - A. Greater trochanteric pain syndrome; B. Iliotibial band syndrome; C. Lumbar spinal stenosis; D. Meralgia paresthetica; E. Osteoarthritis of the hip

Part 2: Short Answer Questions

- Question 1: Which nerve originating from the lumbosacral plexus is responsible for motor innervation of the quadriceps muscle group?
- Question 2: A 30-year-old man presents with weakness in hip flexion and knee extension after a motor vehicle accident. He also complains of numbness over the anterior thigh. Which nerve of the lumbosacral plexus is likely injured?
- Question 3: A 50-year-old woman complains of pain radiating from her lower back to the posterior thigh and lateral aspect of the leg. On examination, she has weakness in plantarflexion and absent ankle reflex. Which nerve of the lumbosacral plexus is affected?
- Question 4: A 25-year-old male athlete presents with difficulty standing on his toes and sensory loss over the sole of his foot. He denies any lower back pain. What is the most likely nerve involved, and what could be a potential cause?
- Question 5: A 65-year-old woman with a history of hip replacement surgery reports weakness in hip abduction and numbness over the lateral aspect of her thigh. Which nerve of the lumbosacral plexus may have been damaged during the surgery, and what symptoms support this diagnosis?
- Question 6: A 45-year-old man presents with severe pain in his lower back that radiates down the posterior thigh and into the lateral aspect of his foot. On physical examination, he has difficulty with ankle dorsiflexion and reduced sensation over the dorsum of the foot. Which nerve root is most likely compressed, and what condition is commonly associated with this presentation?
- Question 7: A 60-year-old woman who recently underwent pelvic surgery presents with difficulty climbing stairs and weakness in extending her knee. She also complains of numbness over the anterior and medial thigh. Which nerve is most likely injured, and what is a potential complication related to this nerve damage?

Appendix D. Post Task 1 Questionnaire

QVault AI Chatbot Study: Post-Task 1 Questionnaire

Dear Participant,

Thank you for completing Task 1 of our study. Please take a few minutes to complete this questionnaire about your experience during the task. Your responses are crucial for our research on learning methods in medical education. This questionnaire should take approximately 5 min to complete. Your responses are confidential and will be used solely for research purposes.

- Q1. Assigned ID number

Section 1: Instructions

Please answer the following questions based on the learning method you used during Task 1:
- Group A: Used the LLM chatbot along with the provided handout.
- Group B: Used traditional resources (e.g., Google search without AI features and textbook handout).

Section 2 of 5. Section A: Experience with the learning method
- Q2. How easy was it to used the learning method provided during Task 1? Scale: 1 (Very difficult) to 5 (Very easy)
- Q3. How satisfied are you with the learning method you used during Task 1? Scale: 1 (Very dissatisfied) to 5 (Very satisfied)
- Q4. To what extent did the learning method help you understand the topic (brachial plexus) during task 1? Scale: 1 (Not at all) to 5 (A great deal)
- Q5. How efficient was the learning method in helping you find the information you needed? Scale: 1 (Very Inefficient) to 5 (Very efficient)
- Q6. After using the assigned learning method, how confident do you feel in applying the information learned? Scale: 1 (Not confident at all) to 5 (Very confident)

Section 3 of 5. Section B: Perceptions of the learning method
- Q7. How would you rate the quality of the information provided by your assigned learning method? Scale: 1 (Very poor) to 5 (Excellent)
- Q8. To what extent do you believe the information obtained from your given learning method was accurate? Scale: 1 (Not accurate at all) to 5 (Completely Accurate)
- Q9. How would you rate the depth of content provided by your given learning method? Scale: 1 (Very superficial) to 5 (Very in-depth)
- Q10. How easy was it to understand the information provided by your given learning method? Scale: 1 (Very difficult) to 5 (Very easy)
- Q11. How engaging was the learning method in maintaining your interest during the task? Scale: 1 (Not engaging at all) to 5 (Extremely engaging)

Section 4 of 5. Section C: Comparison with previous learning experiences
- Q12. Compared to your usual study methods, how did the learning method you used during Task 1 perform? Scale: 1 (Much worse) to 5 (Much better)
- Q13. How do you feel the learning method affected your ability to think critically about the subject matter? Scale: 1 (Strongly hindered) to 5 (Strongly aided)
- Q14. How likely are you to use this type of learning method in your future studies? Scale: 1 (Very Unlikely) to 5 (Very likely)

Section 5 of 5. Section D: Perceptions of LLM chatbots
- Q14. What is your overall attitude toward the use of LLM chatbots in medical education? Scale: 1 (Very negative) to 5 (Very positive)
- Q15. How useful do you believe LLM chatbots are in supporting medical education? Scale: 1 (Not useful at all) to 5 (Extremely useful)
- Q16. Do you have any concerns about using LLM chatbots in your studies (Select all that apply) Options: Accuracy of information; Over reliance on technology; Ethical considerations; Privacy issues; Impact on critical thinking skills; Lack of human interaction; No concerns; Other…
- Q17. How interested are you in using LLM chatbots in your future studies? Scale: 1 (Not interested at all) to 5 (Extremely Interested)

Appendix E. Post Task 2 Questionnaire

QVault AI Chatbot Study: Post-Task 2 Questionnaire

Dear Participant,

Thank you for completing Task 2 of our study. Please take a few minutes to complete this questionnaire about your experience during the task. Your responses are crucial for our research on learning methods in medical education. This questionnaire should take approximately 5 min to complete. Your responses are confidential and will be used solely for research purposes.

- Assigned ID number

Section 1: Instructions

Please answer the following questions based on the learning method you used during Task 2:
- Group A: Used traditional resources (e.g., Google search without AI features and textbook handout).
- Group B: Used the LLM chatbot along with the provided handout.

Section 2 of 6. Section A: Experience with the learning method
- Q1. How easy was it to use the learning method provided during Task 2? Scale: 1 (Very difficult) to 5 (Very easy)
- Q2. How satisfied are you with the learning method you used during Task 2? Scale: 1 (Very dissatisfied) to 5 (Very satisfied)
- Q3. To what extent did the learning method help you understand the topic (lumbosacral plexus) during task 2? Scale: 1 (Not at all) to 5 (A great deal)
- Q4. How efficient was the learning method in helping you find the information you needed? Scale: 1 (Very Inefficient) to 5 (Very efficient)
- Q5. After using the assigned learning method, how confident do you feel in your understanding of the lumbosacral plexus? Scale: 1 (Not confident at all) to 5 (Very confident)

Section 3 of 6. Section B: Perceptions of the learning method
- Q6. How would you rate the quality of the information provided by your assigned learning method? Scale: 1 (Very poor) to 5 (Excellent)
- Q7. To what extent do you believe the information obtained from your given learning method was accurate? Scale: 1 (Not accurate at all) to 5 (Completely Accurate)
- Q8. How would you rate the depth of content provided by your given learning method? Scale: 1 (Very superficial) to 5 (Very in-depth)
- Q9. How easy was it to understand the information provided by your given learning method? Scale: 1 (Very difficult) to 5 (Very easy)
- Q10. How engaging was the learning method in maintaining your interest during the task? Scale: 1 (Not engaging at all) to 5 (Extremely engaging)

Section 4 of 6. Section C: Comparison with previous learning experiences
- Q11. Compared to your usual study methods, how did the learning method you used during Task 2 perform? Scale: 1 (Much worse) to 5 (Much better)
- Q12. How do you feel the learning method affected your ability to think critically about the subject matter? Scale: 1 (Strongly hindered) to 5 (Strongly aided)
- Q13. How likely are you to use this type of learning method in your future studies? Scale: 1 (Very Unlikely) to 5 (Very likely)

Section 5 of 6. Section D: Overall Perceptions After Using Both Learning Methods
- Q14. After using both learning methods (LLM chatbot and Traditional resources), which do you prefer? Scale: 1 (Strongly prefer traditional resources) to 5 (Strongly prefer LLM chatbot)
- Q15. Which learning method do you feel improved your understanding of the topics more effectively? Options: Traditional Resources; LLM chatbot; Both equally; Neither
- Q16. Do you anticipate changing your study habits based on your experiences with these learning methods? Scale: 1 (Not at all) to 5 (Extremely)
- Q17. How likely are you to recommend the use of LLM chatbots to your peers for studying medical topics? Scale: 1 (Very unlikely) to 5 (Very likely)

Section 6 of 6. Section E: Perceptions of LLM chatbots
- Q18. What is your overall attitude towards the use of LLM chatbots in medical education after completing both tasks? Scale: 1 (Very negative) to 5 (Very positive)
- Q19. How useful do you believe LLM chatbots are in supporting medical education after your experiences in this study? Scale: 1 (Not useful at all) to 5 (Extremely useful)
- Q20. How interested are you in using LLM chatbots in your future studies after participating in this study? Scale: 1 (Not interested at all) to 5 (Extremely interested)
- Q21. Do you have any concerns about using LLM chatbots in your studies after your experiences in this study? (Select all that apply) Options: Accuracy of information; Over-reliance on technology; Ethical considerations; Privacy issues; Impact on critical thinking skills; Lack of human interaction; No concerns; Other…

Appendix F. Focus Group Transcripts

| Day 1 Focus Group 1 (20 November) 1. Describe your experiences using chatbot and traditional experiences Took a lot longer to filter through information in traditional. Chatbot was faster Traditional gave you everything Enjoyed a mix of both Speed—depended on the type of question—conditions you didn’t know—then traditional would have been quicker. But if it was single answer then chatbot was The aim was to get the answer down, not to learn the information, he won’t remember the information at all. Main goal was Chatbot was useful because it could provide and double check the answer, to verify it was helpful. When they didn’t have the chatbot he had to use the traditional sources and notes, Chatbot took some time to respond, some of the time was spent waiting for the chatbot to respond. Critical thinking questions—googling and using notes enhanced the critical thinking instead of in the chatbot. Chatbot didn’t encourage too much critical thinking. Very med student friendly as it gave mnemonics. Were there any surprises?

(3) Overall quite positive: not generalised like ChatGPT, and it was customisable, which was good. Sometimes gives too much information that I don’t necessarily need for the lecture. Don’t know if the information is legit when using general ChatGPT, but this one seems more legit. (2) Was surprised to be able to use a chatbot for reputable information within the first few years of university, instead of using textbooks. (4) You usually have to spend a lot of time refining your prompts for ChatGPT, but Lenny gives you the relevant medical school information because the prompt is already refined.

3. Question 3

(1) Traditional: you have to go through all of the information, and the residual information is quite useful for understanding, whereas with a chatbot you can ask the specific question. Chatbot is better for specific questions but traditional (2) Not sure how you would use the chatbot to understand a whole topic; feels like the chatbot is good for filling in the gaps in lecture knowledge.

4. How did each learning method impact understanding and retention?

(2) The nature of the exercise meant he would just get the answer, with not enough time to actually understand the information. This method didn’t allow him to retain the information. (3) The nature of today’s tasks: you have time constraints, so it was a bit hard to concentrate on learning. Also, anatomy is more about memorising than understanding. (4) If the purpose had been learning rather than answering questions, we would have learned a bit more. We didn’t know if the answers we got were correct at all, and because of the time con Did you try to get the right answer or were you just trying to fill in the boxes? Both, mostly because of the time constraint but also wanted to get the questions right.

5. How would you evaluate… usability etc.

(4) Naturally, engagement with traditional methods would allow more learning. There is merit in the process of finding out the information for yourself and learning things along the way, as you are curious about the topic and engaging with the resources more. However Lenny is good at (1) Also need to find reputable sources through traditional sources, otherwise you’re not going to get the right answer. By sifting through the materials yourself and diving into other topics at the same time, you learn more. (3) Lenny is more question and answer, question and answer, so there may not be as much understanding.

6. Any specific features?

(3) Mnemonics: could see those being good for memorising; other features of qVault could be very useful as well, e.g., podcasts, Anki generation, etc.

7. Challenges or limitations?

(4) Lack of critical thinking with the chatbot; a little bit of surrounding information would be good. If she got another random question on the same topic, she wouldn’t know how to answer it. (Isaac says this is more of a resource constraint but acknowledges the point.) But a good thing is that you don’t have to refine the prompt to get a medical school-level answer. (2) Could be useful to show diagrams; the chatbot didn’t have any. He feels the delay is okay for a while, but if he were to use it for a long time it could be frustrating. One feature to improve is scrolling: it should scroll to the bottom (this didn’t happen for two people). (3) Interface: the follow-up between questions is good in ChatGPT, but maybe not in Lenny. Even when using the reply function, the responses were not specific to the follow-up question. Also, there is a delay. Would prefer instantaneous responses and

8. How did your experience with it influence your view?

(4) More inclined to use Lenny if it is more refined in the future, if it deals with the delays, and if it delves a little more into the surrounding material. But is happy about the specificity of Lenny and how it can refine answers to medical school standard. (2) In its current state, would only use it from time to time, but will look out for similar models that would be useful for exams. Would be useful to go through the missing parts of exams, just to fill in knowledge missing from lecture slides or clarify things they are unsure about. (1) Previously would not have been inclined to use ChatGPT or AI-related sources

9. Suggestions for improvement

(2) The ideal scenario is to upload the PowerPoint or transcript and have it generate Anki cards and questions, and include diagrams, so you can do spaced repetition and also test your knowledge, including over time to see progress. (4) Being able to generate a whole booklet of questions would be really useful. (3) Good-quality questions: maybe collaborate with the universities/med schools to make sure the content is more accurate.

11. Anything you want to add?

(4) The UI is very clean and easy to use, and despite the delays it was very good to use; bearable for the purposes of this task. (1) The King’s-specific marketing is also good. (2 and 3) I like Lenny.

Day 1 Focus Group 2 (20 November)

Key Themes and Insights

1. Experiences Using Chatbot and Traditional Resources

- Chatbot gives concise responses, whereas Google searching gives complicated info (3)
- Hard to search directly for the answer in Google, but the chatbot gives a direct answer (3)
- AI simplifies the process of getting info, but you need to learn how to use AI too, or else it is not helpful for knowledge retention (1)
- Traditional: all material is given, so you can see what is related. Chatbot: needs user input, and you cannot see how everything is related; more useful when a specific query is in mind than for learning an entire topic
- Chatbots particularly useful for diagnosis questions compared with Google
- Need to keep chatbot content accurate (4)
- Best to train it to be tailored to the curriculum, to ensure relevance (4)
- More useful if references are added to chatbot responses, for credibility (everyone agrees)

2. Speed and Retention

- Latency discourages users and may just sway people back to Google search (1,4)
- Waiting time, notifications, and technical issues may disrupt learning

3. AI Handouts

- Handouts have distinct details; much quicker to get details than with the chatbot (only for direct factual questions; the chatbot is better for diagnosis questions) (4)
- Liked how the handout gives only relevant info (4)
- AI-generated handout vs. textbook: handouts for basic knowledge, textbooks for extra info (1)

4. Evolution of Thoughts on Chatbots

- Little chatbot exposure before, only the AI overview in Chrome; more open to using chatbots after this (2)
- Will only use chatbots when confused by medical concepts, not for learning new concepts (3)

5. Learning and Retention

- Understanding lecture slides: use Anki (4)
- AI for understanding harder topics (4)
- Only use the chatbot when confused about something (2)
- More useful only for consolidating already-learnt basic knowledge (3)
- Useful for learning and understanding concepts, but does not necessarily lead to better exam results (4)
- Chatbots may make students lazy in learning; requires discipline to memorise content (2,3)

6. Specific Features and Challenges

- Mnemonics (only) sometimes useful (1,3)
- Interested in the AI question generation feature: better than Passmed since the questions are personalised to uploaded lectures
- Add diagrams, Anki generation, and question creation

7. Roles in Learning Methods

- Still trust question banks more than AI-generated questions (1)
- Will trust AI more if it is trained on past papers (all agree), since users cannot know whether all the content is true

8. Others

- Best to make it free so it is accessible to everyone (1) (discussed cost issues)

Day 2 Focus Group 3 (11 December)

1. Experiences with Learning Methods

Can you describe your experiences using both the LLM chatbot and traditional resources during the tasks? Probe: What stood out to you about each method? Were there any surprises? 001:

How did your perceptions of LLM chatbots evolve, if at all, from before the study to after using them during the tasks? Probe: What specific experiences influenced your opinions positively or negatively? 001:

In what ways did the LLM chatbot and traditional resources differ in helping you understand medical topics? Probe: Can you give examples of when one method worked better than the other? 001: 003:

How did each learning method impact your understanding and retention of the material? Probe: Did one method encourage deeper learning or critical thinking? Why? (Moderator’s Note: Probe carefully to distinguish between perceived and actual retention.) 001:

How would you evaluate the usability and engagement levels of the LLM chatbot compared to traditional resources? Probe: Were there any specific features that enhanced or detracted from your learning experience? (Moderator’s Note: Encourage discussion of both usability and engagement to balance the responses.) 001:

What challenges or limitations did you face when using the LLM chatbot and traditional resources? Probe: How did you navigate or overcome these challenges? Did either method create unique difficulties? 001:

How might your experiences with LLM chatbots influence your future study habits or strategies? Probe: Do you see yourself integrating AI tools into your routine, and if so, how? 001:

What potential roles do you envision for LLM chatbots in medical education? Probe: How could they complement or enhance existing learning methods? 001:

What improvements would you suggest for integrating LLM chatbots into medical education to better support learning? Probe: Are there specific features or functionalities you think would make them more effective? 001:


Appendix G. Focus Group Question Set

1. Experiences with Learning Methods


References

- Amiri, H., Peiravi, S., Rezazadeh Shojaee, S. S., Rouhparvarzamin, M., Nateghi, M. N., Etemadi, M. H., ShojaeiBaghini, M., Musaie, F., Anvari, M. H., & Asadi Anar, M. (2024). Medical, dental, and nursing students’ attitudes and knowledge towards artificial intelligence: A systematic review and meta-analysis. BMC Medical Education, 24(1), 412.

- Angoff, W. H. (1971). Educational measurement. American Council on Education.

- Araujo, S. M., & Cruz-Correia, R. (2024). Incorporating ChatGPT in medical informatics education: Mixed methods study on student perceptions and experiential integration proposals. JMIR Medical Education, 10(1), e51151.

- Arun, G., Perumal, V., Paul, F., Ler, Y. E., Wen, B., Vallabhajosyula, R., Tan, E., Ng, O., Ng, K. B., & Mogali, S. R. (2024). ChatGPT versus a customized AI chatbot (Anatbuddy) for anatomy education: A comparative pilot study. Anatomical Sciences Education, 17(7), 1396–1405.

- Attewell, S. (2024). Student perceptions of generative AI report. JISC.

- Banerjee, M., Chiew, D., Patel, K. T., Johns, I., Chappell, D., Linton, N., Cole, G. D., Francis, D. P., Szram, J., Ross, J., & Zaman, S. (2021). The impact of artificial intelligence on clinical education: Perceptions of postgraduate trainee doctors in London (UK) and recommendations for trainers. BMC Medical Education, 21(1), 429.

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological), 57(1), 289–300.

- Biggs, J., & Tang, C. (2011). Teaching for quality learning at university (4th ed.). Open University Press.

- Bloom, B. S. (1956). Taxonomy of educational objectives, handbook 1: Cognitive domain. Longman.

- Bonferroni, C. (1936). Teoria statistica delle classi e calcolo delle probabilità. Pubblicazioni del R Istituto Superiore di Scienze Economiche e Commerciali di Firenze.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

- Brown, A. L. (1992). Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. Journal of the Learning Sciences, 2(2), 141–178.

- Buabbas, A., Miskin, B., Alnaqi, A. A., Ayed, A. K., Shehab, A. A., Syed-Abdul, S., & Uddin, M. (2023). Investigating students’ perceptions towards artificial intelligence in medical education. Healthcare, 11(9), 1298.

- Civaner, M. M., Uncu, Y., Bulut, F., Chalil, E. G., & Tatli, A. (2022). Artificial intelligence in medical education: A cross-sectional needs assessment. BMC Medical Education, 22(1), 772.

- Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20(1), 37–46.

- Croskerry, P. (2009). A universal model of diagnostic reasoning. Academic Medicine, 84(8), 1022–1028.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

- Ellis, H., & Mahadevan, V. (2019). Clinical anatomy: Applied anatomy for students and junior doctors (14th ed., pp. 193–200; 264–267). John Wiley & Sons.

- European Union. (2016). General data protection regulation (GDPR). Available online: https://gdpr-info.eu/ (accessed on 15 September 2025).

- Evans, J. S., & Stanovich, K. E. (2013). Dual-process theories of higher cognition: Advancing the debate. Perspectives on Psychological Science, 8(3), 223–241.

- Gordon, M., Daniel, M., Ajiboye, A., Uraiby, H., Xu, N. Y., Bartlett, R., Hanson, J., Haas, M., Spadafore, M., Grafton-Clarke, C., & Gasiea, R. Y. (2024). A scoping review of artificial intelligence in medical education: BEME guide no. 84. Medical Teacher, 46(4), 446–470.

- Gualda-Gea, J. J., Barón-Miras, L. E., Bertran, M. J., Vilella, A., Torá-Rocamora, I., & Prat, A. (2025). Perceptions and future perspectives of medical students on the use of artificial intelligence based chatbots: An exploratory analysis. Frontiers in Medicine, 12, 1529305.

- Ho, Y.-R., Chen, B.-Y., & Li, C.-M. (2023). Thinking more wisely: Using the Socratic method to develop critical thinking skills amongst healthcare students. BMC Medical Education, 23(1), 173.

- IBM. (2025). SPSS software. IBM. Available online: https://www.ibm.com/spss (accessed on 15 September 2025).

- Jackson, P., Ponath Sukumaran, G., Babu, C., Tony, M. C., Jack, D. S., Reshma, V. R., Davis, D., Kurian, N., & John, A. (2024). Artificial intelligence in medical education: Perception among medical students. BMC Medical Education, 24(1), 804.

- Jebreen, K., Radwan, E., Kammoun-Rebai, W., Alattar, E., Radwan, A., Safi, W., Radwan, W., & Alajez, M. (2024). Perceptions of undergraduate medical students on artificial intelligence in medicine: Mixed-methods survey study from Palestine. BMC Medical Education, 24(1), 507.

- Jha, N., Shankar, P. R., Al-Betar, M. A., Mukhia, R., Hada, K., & Palaian, S. (2022). Undergraduate medical students’ and interns’ knowledge and perception of artificial intelligence in medicine. Advances in Medical Education and Practice, 13, 927.

- Kochis, M., Parsons, M. Q., Rothman, D., Petrusa, E., & Phitayakorn, R. (2024). Medical students’ perceptions and use of educational technologies and artificial intelligence chatbots as educational resources. Global Surgical Education - Journal of the Association for Surgical Education, 3(1), 94.

- Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W., Rocktäschel, T., Riedel, S., & Kiela, D. (2021). Retrieval-augmented generation for knowledge-intensive NLP tasks. arXiv, arXiv:2005.11401.

- Likert, R. (1932). A technique for the measurement of attitudes. Archives of Psychology, 22(140), 55.

- Lucas, H. C., Upperman, J. S., & Robinson, J. R. (2024). A systematic review of large language models and their implications in medical education. Medical Education, 58(11), 1276–1285.

- Luong, J., Tzang, C. C., McWatt, S., Brassett, C., Stearns, D., Sagoo, M. G., Kunzel, C., Sakurai, T., Chien, C. L., Noel, G., & Wu, A. (2025). Exploring artificial intelligence readiness in medical students: Analysis of a global survey. Medical Science Educator, 35(1), 331–341.

- Malmström, H., Stöhr, C., & Ou, W. (2023). Chatbots and other AI for learning: A survey of use and views among university students in Sweden. Chalmers University of Technology.

- Mann, H. B., & Whitney, D. R. (1947). On a test of whether one of two random variables is stochastically larger than the other. The Annals of Mathematical Statistics, 18(1), 50–60.

- Marton, F., & Säljö, R. (1976). On qualitative differences in learning: I. Outcome and process. British Journal of Educational Psychology, 46(1), 4–11.

- McCraw, B. W. (2015). The nature of epistemic trust. Social Epistemology, 29(4), 413–430.

- McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282.

- McMyler, B. (2011). Testimony, trust, and authority. Oxford University Press.

- Messick, S. (1995). Standards of validity and the validity of standards in performance assessment. Educational Measurement: Issues and Practice, 14(4), 5–8.

- Moore, K. L., Dalley, A. F., & Agur, A. M. R. (2017). Clinically oriented anatomy (8th ed., pp. 1597–1599). Lippincott Williams and Wilkins.

- OpenAI. (2024). Hello GPT-4o. Openai.com. Available online: https://openai.com/index/hello-gpt-4o/ (accessed on 10 September 2025).

- OpenAI. (2025). Introducing study mode. Available online: https://openai.com/index/chatgpt-study-mode/ (accessed on 10 September 2025).

- Origgi, G. (2004). Is trust an epistemological notion? Episteme, 1(1), 61–72.

- Oyler, D. R., & Romanelli, F. (2014). The fact of ignorance: Revisiting the Socratic method as a tool for teaching critical thinking. American Journal of Pharmaceutical Education, 78(7), 144.

- Pelaccia, T., Tardif, J., Triby, E., & Charlin, B. (2011). An analysis of clinical reasoning through a recent and comprehensive approach: The dual-process theory. Medical Education Online, 16(1), 5890.

- Pucchio, A., Rathagirishnan, R., Caton, N., Gariscsak, P. J., Del Papa, J., Nabhen, J. J., Vo, V., Lee, W., & Moraes, F. Y. (2022). Exploration of exposure to artificial intelligence in undergraduate medical education: A Canadian cross-sectional mixed-methods study. BMC Medical Education, 22(1), 815.

- qVault.ai. (2025). qVault. Available online: https://qvault.ai (accessed on 30 April 2025).

- Salih, S. M. (2024). Perceptions of faculty and students about use of artificial intelligence in medical education: A qualitative study. Cureus, 16(4), e57605.

- Shapiro, S. S., & Wilk, M. B. (1965). An analysis of variance test for normality (complete samples). Biometrika, 52(3–4), 591–611.

- Sit, C., Srinivasan, R., Amlani, A., Muthuswamy, K., Azam, A., Monzon, L., & Poon, D. S. (2020). Attitudes and perceptions of UK medical students towards artificial intelligence and radiology: A multicentre survey. Insights into Imaging, 11(1), 14.

- Spearman, C. (1904). The proof and measurement of association between two things. The American Journal of Psychology, 15(1), 72–101.

- Sweller, J. (2011). Chapter two: Cognitive load theory. In J. P. Mestre & B. H. Ross (Eds.), Psychology of learning and motivation (pp. 37–76). Academic Press.

- Van Der Vleuten, C. P. M., & Schuwirth, L. W. T. (2005). Assessing professional competence: From methods to programmes. Medical Education, 39(3), 309–317.

- Wartman, S., & Combs, C. (2017). Medical education must move from the information age to the age of artificial intelligence. Academic Medicine: Journal of the Association of American Medical Colleges, 93(8), 1107–1109.

- Whitehorn, A., Fu, L., Porritt, K., Lizarondo, L., Stephenson, M., Marin, T., Aye Gyi, A., Dell, K., Mignone, A., & Lockwood, C. (2021). Mapping clinical barriers and evidence-based implementation strategies in low-to-middle income countries (LMICs). Worldviews on Evidence-Based Nursing, 18(3), 190–200.

- Wilcoxon, F. (1945). Individual comparisons by ranking methods. Biometrics Bulletin, 1(6), 80–83.

| Outcome Measures | Questions |
|---|---|
| Ease of Use | “How easy was it to use this learning method?” |
| Satisfaction | “Overall, how satisfied are you with this method for studying?” |
| Efficiency | “How efficient was this method in gathering info?” |
| Confidence in Applying Information | “How confident do you feel in applying the information learned?” |
| Quality of Information | “Rate the quality of the information provided.” |
| Accuracy of Information | “Was the information provided accurate?” |
| Depth of Content | “Describe the depth of content provided by the learning tool.” |
| Ease of Understanding | “Was the information easy to understand?” |
| Engagement | “How engaging was the learning method in maintaining your interest during the task?” |
| Performance Compared to Usual Methods | “Compared to usual study methods, how did this one perform?” |
| Critical Thinking | “How did this learning method affect your critical thinking?” |
| Likelihood of Future Use | “How likely are you to use this learning method again?” |
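The items above were presumably rated on Likert-type scales (Likert, 1932, is cited), and the perception table that follows reports each arm’s ratings as mean (SD) and median (range). The sketch below shows one way to produce those two cells from raw responses. It assumes integer responses from 1 to 5, the sample (n − 1) standard deviation, and range taken as maximum minus minimum; `summarise_likert`, `format_cells`, and the example ratings are hypothetical and are not study data.

```python
import statistics
from typing import Dict, Sequence, Tuple


def summarise_likert(responses: Sequence[int]) -> Dict[str, float]:
    """Summarise one arm's responses to one item at one time point (hypothetical helper)."""
    if len(responses) < 2:
        # statistics.stdev needs at least two values
        raise ValueError("need at least two responses")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("responses are assumed to be integers from 1 to 5")
    return {
        "mean": statistics.mean(responses),
        "sd": statistics.stdev(responses),  # sample SD, n - 1 denominator
        "median": statistics.median(responses),
        "range": max(responses) - min(responses),  # assumed: maximum minus minimum
    }


def format_cells(summary: Dict[str, float]) -> Tuple[str, str]:
    """Render the 'Mean (SD)' and 'Median (Range)' cells in the table's number format."""
    mean_sd = f"{summary['mean']:.2f} ({summary['sd']:.2f})"
    median_range = f"{summary['median']:.1f} ({summary['range']:.1f})"
    return mean_sd, median_range


# Hypothetical ratings from ten students (not study data)
example = [5, 4, 4, 3, 5, 4, 5, 4, 3, 5]
print(format_cells(summarise_likert(example)))  # ('4.20 (0.79)', '4.0 (2.0)')
```

A range reported as a single number shows the spread of responses but not where on the scale they fell; reporting the minimum and maximum separately is an alternative.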

| Measure | Arm | Baseline (T0) Mean | Baseline (T0) SD | T1 Mean (SD) | T1 Median (Range) | T2 Mean (SD) | T2 Median (Range) | Effect Size (r), T1 vs. T2 | p Value, T1 vs. T2 |
|---|---|---|---|---|---|---|---|---|---|
| Ease of Use | Arm 1 | 3.79 | 0.79 | 4.20 (0.92) | 4.0 (3.0) | 2.80 (0.79) | 3.0 (2.0) | 0.68 | 0.040 * |
| | Arm 2 | | | 3.00 (0.82) | 3.0 (2.0) | 4.20 (0.92) | 4.5 (2.0) | 0.75 | 0.030 * |
| Satisfaction | Arm 1 | - | - | 4.00 (0.94) | 4.0 (3.0) | 2.60 (1.16) | 3.0 (3.0) | 0.69 | 0.030 * |
| | Arm 2 | | | 2.70 (0.84) | 3.0 (3.0) | 3.80 (1.03) | 4.0 (3.0) | 0.73 | 0.040 * |
| Quality of information | Arm 1 | 3.4 | 0.68 | 4.30 (0.48) | 5.0 (3.0) | 3.10 (1.20) | 2.5 (2.0) | 0.75 | 0.050 * |
| | Arm 2 | | | 3.20 (0.79) | 3.0 (2.0) | 4.20 (0.92) | 4.0 (4.0) | 0.75 | 0.050 * |
| Ease of Understanding | Arm 1 | - | - | 4.40 (0.97) | 4.0 (2.0) | 3.10 (0.88) | 3.0 (3.0) | 0.89 | 0.010 * |
| | Arm 2 | | | 3.00 (1.33) | 2.0 (3.0) | 4.40 (0.84) | 3.0 (3.0) | 0.88 | 0.010 * |
| Engagement | Arm 1 | - | - | 3.60 (0.97) | 4.0 (1.0) | 2.00 (0.82) | 3.0 (4.0) | 0.89 | 0.010 * |
| | Arm 2 | | | 2.70 (0.82) | 3.0 (2.0) | 4.20 (0.63) | 4.5 (2.0) | 0.89 | 0.005 * |
| Efficiency | Arm 1 | - | - | 4.40 (0.97) | 4.0 (1.0) | 2.70 (0.82) | 4.5 (3.0) | 0.72 | 0.020 * |
| | Arm 2 | | | 3.00 (0.82) | 4.0 (2.0) | 3.60 (1.17) | 4.0 (2.0) | 0.46 | 0.22 |
| Confidence in applying information | Arm 1 | 3.05 | 1 | 3.40 (0.84) | 4.0 (2.0) | 2.50 (0.97) | 2.5 (4.0) | 0.9 | 0.020 * |
| | Arm 2 | | | 2.50 (0.97) | 3.0 (2.0) | 3.30 (1.06) | 3.5 (3.0) | 0.72 | 0.06 |
| Performance compared to usual methods | Arm 1 | 3.3 | 0.86 | 3.40 (0.7) | 5.0 (3.0) | 2.60 (0.84) | 3.0 (2.0) | 0.56 | 0.11 |
| | Arm 2 | | | 2.50 (0.97) | 3.0 (4.0) | 3.50 (0.85) | 5.0 (2.0) | 0.73 | 0.040 * |
| Likelihood of future use | Arm 1 | 3.25 | 0.97 | 4.00 (0.82) | 3.5 (3.0) | 2.80 (0.79) | 2.0 (2.0) | 0.75 | 0.020 * |
| | Arm 2 | | | 3.60 (0.84) | 3.0 (3.0) | 4.50 (0.71) | 4.0 (2.0) | 0.72 | 0.06 |
| Accuracy of information | Arm 1 | 3.5 | 0.89 | 3.90 (0.32) | 3.5 (2.0) | 4.20 (1.03) | 3.0 (3.0) | 0.39 | 0.3 |
| | Arm 2 | | | 3.90 (0.74) | 3.0 (3.0) | 4.20 (0.63) | 3.5 (3.0) | 0.37 | 0.41 |
| Depth of content | Arm 1 | - | - | 4.20 (0.79) | 4.0 (3.0) | 2.90 (1.37) | 2.5 (2.0) | 0.59 | 0.06 |
| | Arm 2 | | | 2.90 (0.74) | 3.0 (2.0) | 3.70 (1.06) | 3.0 (3.0) | 0.57 | 0.161 |
| Critical thinking | Arm 1 | 3.4 | 0.97 | 3.70 (1.25) | 4.0 (3.0) | 2.60 (0.82) | 3.0 (3.0) | 0.55 | 0.12 |
| | Arm 2 | | | 2.90 (0.85) | 4.0 (3.0) | 3.20 (0.79) | 5.0 (2.0) | 0.23 | 0.52 |

| Comparison | First-Listed Mean Score % (SD) | Second-Listed Mean Score % (SD) | Mean Difference (%) | 95% CI | p-Value |
|---|---|---|---|---|---|
| Task 1: Arm 1 vs. Arm 2 | 71.43 (15.06) | 54.29 (23.13) | 17.14 | −1.20 to 35.48 | 0.065 |
| Task 2: Arm 2 vs. Arm 1 | 63.33 (18.92) | 68.33 (26.59) | −5 | −16.68 to 26.68 | 0.634 |
| Within Arm 1: Task 1 vs. Task 2 | 71.43 (15.06) | 68.33 (26.59) | −3.1 | −15.41 to 21.60 | 0.7139 |
| Within Arm 2: Task 1 vs. Task 2 | 54.29 (23.13) | 63.33 (18.92) | 4.09 | −23.09 to 9.04 | 0.179 |
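This section does not state which test produced the between-arm confidence intervals or how many students were in each arm. As a consistency sketch only: if the between-arm rows come from an independent-samples t-test with pooled variance and each arm had 10 students (both assumptions), the first row’s interval can be recomputed from its means and SDs alone. `pooled_difference_ci` is a hypothetical helper; the within-arm rows compare the same students across tasks and would need the raw paired scores instead.

```python
import math
from typing import Tuple

# Two-sided 95% critical value of Student's t for 18 degrees of freedom
# (10 + 10 - 2), hard-coded to avoid a SciPy dependency;
# scipy.stats.t.ppf(0.975, 18) returns the same value.
T_CRIT_95_DF18 = 2.100922


def pooled_difference_ci(mean1: float, sd1: float, n1: int,
                         mean2: float, sd2: float, n2: int,
                         t_crit: float) -> Tuple[float, float, float, float]:
    """Mean difference (group 1 minus group 2), pooled-variance CI bounds, and t.

    t_crit must be the critical value for n1 + n2 - 2 degrees of freedom.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    diff = mean1 - mean2
    return diff, diff - t_crit * se, diff + t_crit * se, diff / se


# Task 1, Arm 1 vs. Arm 2: means and SDs from the table, with an ASSUMED
# 10 students per arm (group sizes are not given in this section)
diff, low, high, t = pooled_difference_ci(71.43, 15.06, 10, 54.29, 23.13, 10, T_CRIT_95_DF18)
print(f"{diff:.2f} ({low:.2f} to {high:.2f}), t = {t:.2f}")  # 17.14 (-1.20 to 35.48), t = 1.96
```

Under these assumptions the printed interval matches the first row; a different group size, or Welch’s unequal-variance test, would give a slightly different interval.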

| Ability | Features and Functions |
|---|---|
| Accuracy | Curriculum fit |
| Complexity | Focused questions |
| Credibility | Further development |
| Depth | Functional use case |
| Efficiency | Openness to AI as a learning tool |
| Speed | Technical limitations |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ng, I.S.H.; Siu, A.; Han, C.S.J.; Ho, O.S.H.; Sun, J.; Markiv, A.; Knight, S.; Sagoo, M.G. Evaluating a Custom Chatbot in Undergraduate Medical Education: Randomised Crossover Mixed-Methods Evaluation of Performance, Utility, and Perceptions. Behav. Sci. 2025, 15, 1284. https://doi.org/10.3390/bs15091284
