Recognition of Authentic Happy and Sad Facial Expressions in Chinese Elementary School Children: Evidence from Behavioral and Eye-Movement Studies

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Research Design

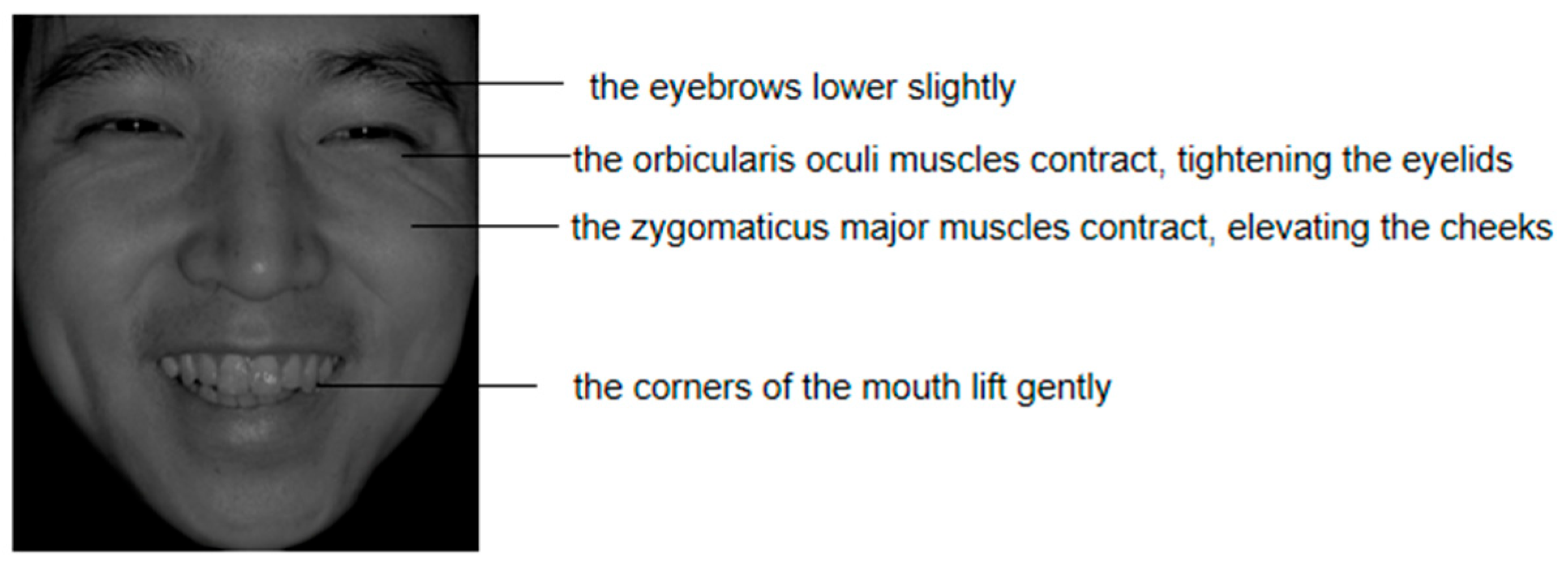

2.3. Apparatus and Materials

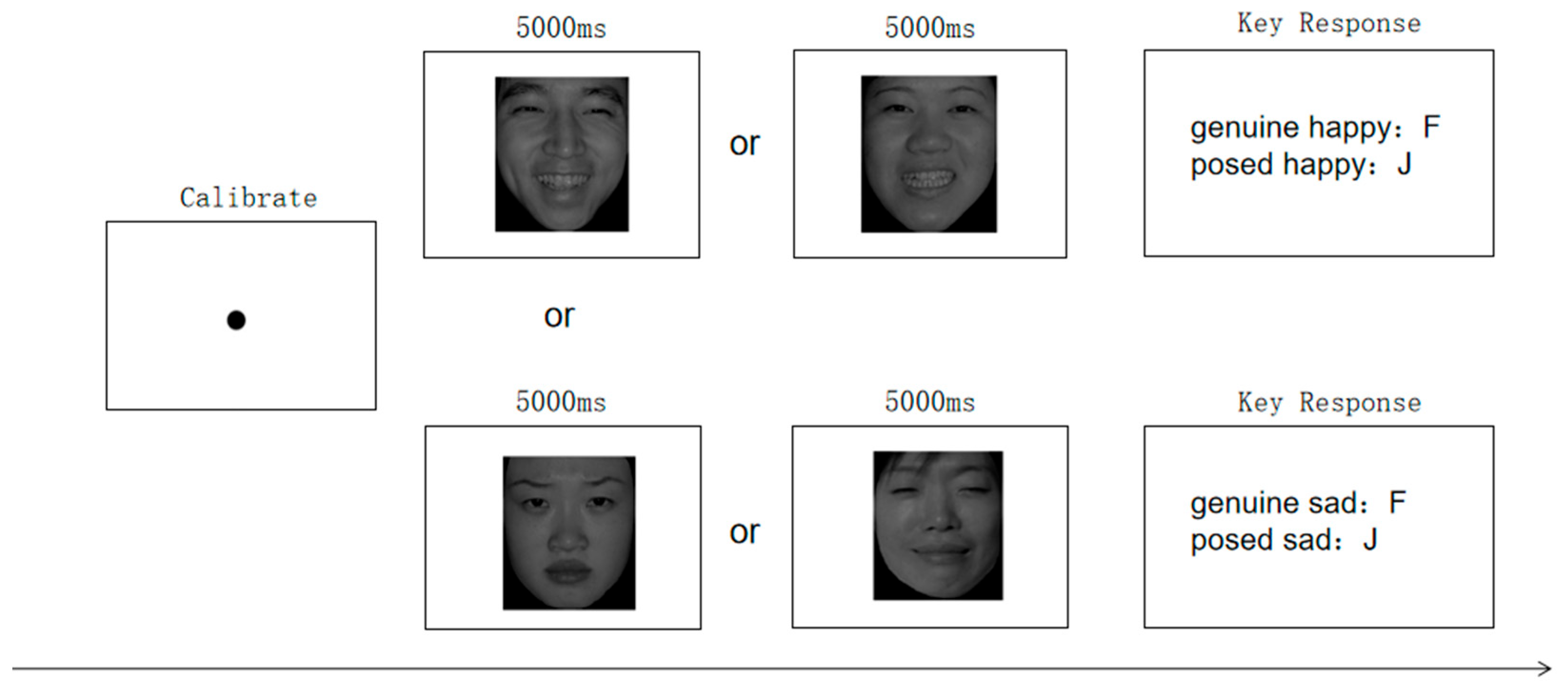

2.4. Procedure

3. Results

3.1. Behavioral Results

3.1.1. Accuracy

3.1.2. Discrimination Index

3.1.3. Reaction Time

3.2. Eye Movement Results

3.2.1. Fixation Duration (ms) Across Three Areas of Interest (Eye-Region, Midface, and Mouth)

3.2.2. Gaze Patterns in Correct vs. Incorrect Trials

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Expression | Authenticity | Lower grade | Middle grade | Upper grade |
|---|---|---|---|---|
| Happy | Genuine | 0.64 (0.48) | 0.80 (0.40) | 0.80 (0.40) |
| Happy | Posed | 0.75 (0.44) | 0.74 (0.44) | 0.82 (0.38) |
| Sad | Genuine | 0.49 (0.50) | 0.53 (0.50) | 0.55 (0.50) |
| Sad | Posed | 0.73 (0.45) | 0.76 (0.43) | 0.73 (0.45) |

| Expression | Lower grade | Middle grade | Upper grade |
|---|---|---|---|
| Happy | 1.11 (0.96) | 1.59 (0.82) | 1.94 (0.89) |
| Sad | 0.67 (0.74) | 0.80 (0.44) | 0.85 (0.60) |

| Expression | Authenticity | Lower grade | Middle grade | Upper grade |
|---|---|---|---|---|
| Happy | Genuine | 1885 (2294) | 1417 (1598) | 1119 (1027) |
| Happy | Posed | 1957 (1931) | 1653 (1671) | 1291 (1132) |
| Sad | Genuine | 1880 (1948) | 1741 (1506) | 1445 (1362) |
| Sad | Posed | 1857 (1738) | 1864 (1648) | 1386 (1147) |

| Expression | Authenticity | Area of Interest | Lower grade | Middle grade | Upper grade |
|---|---|---|---|---|---|
| Happy | Genuine | Eye-region | 1717 (848) | 1689 (878) | 1487 (883) |
| Happy | Genuine | Mouth | 1158 (811) | 1039 (772) | 1002 (866) |
| Happy | Genuine | Midface | 831 (654) | 901 (736) | 849 (681) |
| Happy | Posed | Eye-region | 1911 (1018) | 1969 (911) | 1779 (978) |
| Happy | Posed | Mouth | 874 (791) | 755 (703) | 750 (728) |
| Happy | Posed | Midface | 888 (743) | 861 (651) | 869 (730) |
| Sad | Genuine | Eye-region | 2400 (1020) | 2134 (940) | 2055 (1012) |
| Sad | Genuine | Mouth | 495 (573) | 409 (507) | 429 (563) |
| Sad | Genuine | Midface | 884 (691) | 1011 (676) | 909 (794) |
| Sad | Posed | Eye-region | 1946 (963) | 1814 (959) | 1663 (1019) |
| Sad | Posed | Mouth | 601 (620) | 527 (535) | 588 (714) |
| Sad | Posed | Midface | 986 (698) | 1127 (750) | 1024 (783) |

| Expression | Authenticity | Detection Response | Lower grade | Middle grade | Upper grade |
|---|---|---|---|---|---|
| Happy | Genuine | Correct | 1687 (865) | 1684 (904) | 1428 (875) |
| Happy | Genuine | Incorrect | 1769 (818) | 1709 (779) | 1757 (876) |
| Happy | Posed | Correct | 1858 (980) | 1987 (888) | 1734 (980) |
| Happy | Posed | Incorrect | 2074 (1115) | 1918 (977) | 2003 (945) |
| Sad | Genuine | Correct | 2484 (905) | 2141 (941) | 2160 (973) |
| Sad | Genuine | Incorrect | 2321 (1116) | 2126 (941) | 1875 (1036) |
| Sad | Posed | Correct | 1878 (943) | 1812 (907) | 1685 (999) |
| Sad | Posed | Incorrect | 2115 (995) | 1821 (1111) | 1602 (1073) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Q.; Xu, H.; Zhou, X.; Bakari, W.; Gao, H. Recognition of Authentic Happy and Sad Facial Expressions in Chinese Elementary School Children: Evidence from Behavioral and Eye-Movement Studies. Behav. Sci. 2025, 15, 1099. https://doi.org/10.3390/bs15081099
