Abstract

As extended reality (XR) technologies such as virtual and augmented reality rapidly enter mental health care, ethical considerations lag behind and require urgent attention to safeguard patient safety, uphold research integrity, and guide clinical practice. This scoping review aims to map the current understanding of the main ethical issues arising from the use of XR in clinical psychiatry. Methods: Searches were conducted in 5 databases, and 29 studies were included. Relevant excerpts discussing ethical issues were documented and then categorized. Results: The analysis identified 5 core ethical challenges: (i) balancing beneficence and non-maleficence as a question of patient safety, (ii) altering autonomy by altering reality and information, (iii) data privacy risks and confidentiality concerns, (iv) clinical liability and regulation, and (v) fostering inclusiveness and equity in XR development. Most authors stated ethical concerns primarily for the first two themes, whereas the remaining three themes were not consistently addressed across all papers. Conclusions: A substantial research gap remains on this important topic due to the limited number of empirical studies, the lack of involvement of people living with a mental health issue in the development of XR-based technologies, and the lack of clear clinical and ethical guidelines regarding their use. Identifying the broader ethical implications of such novel technology is crucial for best mental healthcare practices.

1. Introduction

Mental health systems face rising demand while resources and workforce capacity remain constrained, creating persistent gaps in access and quality. Against this backdrop, there is growing interest within mental healthcare in extended reality (XR) as a set of tools that could expand capacity and personalize care. XR is an umbrella term spanning the reality–virtuality continuum, from fully immersive virtual reality (VR) to augmented reality (AR), which overlays digital content onto the physical world (Milgram & Kishino, 1994). VR typically uses head-mounted displays to deliver a fully computer-generated environment, often leveraging multisensory cues to enhance immersion and presence (Slater, 2009; Cipresso et al., 2018; Park et al., 2019; Torous et al., 2021). AR is commonly defined, following a widely cited consensus formulation, as combining real and virtual content, being interactive in real time, and being registered in three dimensions (Azuma, 1997; Billinghurst et al., 2015). In psychiatric applications, some XR environments also include avatars. These are computer-generated representations of either humans or other characters and are commonly guided by a professional (Rudschies & Schneider, 2024). Additionally, virtual embodied conversational agents are computer systems, usually based on artificial intelligence (AI), that aim to simulate human conversation while interacting with users autonomously (Rudschies & Schneider, 2024).

Among the various areas of healthcare where XR can make a significant impact, mental health stands out, having seen a wide range of developments over the past decade (Cieślik et al., 2020; Freeman et al., 2017; Wiebe et al., 2022). Psychiatric disorders remain one of the most significant public health challenges and are among the leading causes of disability and overall disease burden worldwide (World Health Organization (WHO), 2022). XR has emerged as an effective and promising tool for assessing a wide range of mental disorders and for expanding the range of psychotherapy modalities (Carl et al., 2019; Dellazizzo et al., 2020; Freeman et al., 2017; Maples-Keller et al., 2017; Rus-Calafell et al., 2018; Valmaggia et al., 2016). Notably, a recent systematic review by Wiebe et al. (2022) included 721 studies on the use of VR in mental health and highlighted that such technology can benefit several disorders, including anxiety disorders, autism spectrum disorder, posttraumatic stress disorder, dementia, schizophrenia spectrum disorders, and addiction disorders.

To date, the most supported application is VR exposure therapy for anxiety and trauma-related disorders, with numerous meta-analyses showing its efficacy (Carl et al., 2019). XR also provides new possibilities to go beyond current treatment techniques for complex and challenging patients. For instance, a few studies have explored the usability of VR in the context of forensic mental health and suggest that it is a suitable tool for the prevention of aggression (Fromberger et al., 2018; Klein Tuente et al., 2020; Sygel & Wallinius, 2021). In the last few years, avatars have been increasingly used for treating persecutory auditory verbal hallucinations in patients with treatment-resistant schizophrenia. Findings have shown positive effects in these difficult-to-treat patients (Craig et al., 2018; Dellazizzo et al., 2021).

XR offers several advantages for training, diagnostic and therapeutic purposes, including reduced cost, interactivity, and safety (Li et al., 2017). In mental healthcare specifically, these include real-time performance and behavioral monitoring, personalised exposure and the creation of real-world situations that may otherwise be impossible (Best et al., 2022). Moreover, virtual environments have been shown to produce physiological changes consistent with emotional responses to real-world scenarios and can elicit different symptoms (e.g., paranoia, cravings, anxiety) (Costanzo et al., 2014; Freeman et al., 2003; Kuntze et al., 2001; Lee et al., 2003; Owens & Beidel, 2015). Since virtual environments are computer-generated, patients can experience these environments in a safe and controlled manner in a clinical setting (Bell et al., 2020). XR may offer users experiences involving scenarios that would be too difficult or too dangerous to practice in the real world (Baus & Bouchard, 2014) and offer the illusion of being located inside the rendered virtual environment (Baus & Bouchard, 2014; Slater, 2009). XR experiences may consequently meet patients’ needs, abilities, or preferences (Bell et al., 2020). Recent work further documents effectiveness and implementation in mental-health contexts (e.g., randomized trials of VR relaxation; implementation processes; avatar-based digital therapies) (Veling et al., 2021; M. M. T. E. Kouijzer et al., 2023; Garety et al., 2024). Beyond VR, other XR modalities have also been explored: AR as an aid to cognitive behavioral therapy for anxiety disorders and mixed reality environments for adaptive mental-health support, with growing evidence syntheses in specific conditions such as obsessive–compulsive disorder (Rajkumar, 2024; Navas-Medrano et al., 2024; Colman et al., 2024).

Despite the potential of these technologies, several factors limit the implementation of XR in clinical practice beyond research settings. These include the lack of high-quality evidence for XR programs, the absence of training and standardized evidence-based packages, fears that technology may deter patient engagement, and the lack of infrastructure to support the technology within clinical settings (Bell et al., 2020; Selaskowski et al., 2024).

Furthermore, it has been demonstrated that digital applications are often designed without any specific ethical considerations (Fiske et al., 2019; Ienca et al., 2018). The resulting challenges may go beyond the training received by professionals and directly affect patient care (for example, ensuring safe exposure, informed consent, and respect for autonomy when using immersive systems), while also shaping research integrity (transparent reporting, protection of participants, and governance of data) and clinical practice (clear protocols, competency requirements, and accountability) (Rizzo & Koenig, 2017; Chung et al., 2021). As such, clinicians may be unprepared for the legal and ethical challenges (e.g., privacy, electronic security, accountability) involved in using virtual environments (Parsons, 2021). Moreover, ethical issues may arise depending on the type of population being assessed or treated. For example, the distortion of realities with XR may have unjustified consequences on how users relate to the real world, which may be especially challenging for patients with pre-existing reality distortion (Bell et al., 2020). Using XR with patients with dementia or in forensic settings may also raise ethical intricacies, particularly regarding autonomy and vulnerability, requiring proportionate safeguards and context-specific oversight (Ligthart et al., 2022).

Ethical implications consequently require further probing to identify and anticipate pertinent concerns surrounding key principles in biomedical ethics, such as autonomy, beneficence, non-maleficence and justice (T. Beauchamp & Childress, 2019; T. L. Beauchamp, 2007). This theoretical framework describes the following ethical principles: (i) autonomy, which includes informed consent and the right of patients to choose or refuse treatment; (ii) beneficence, which means that the professional acts in the best interest of the patient; (iii) non-maleficence, which entails minimizing risks; and (iv) justice, which concerns the fair distribution of benefits, burdens, and health-care resources.

Given the rapid development of XR possibilities, several key stakeholders have raised ethical dilemmas across various types of literature, noting that each technological modality brings its own nuanced ethical challenges (Bond et al., 2023; D’Alfonso et al., 2019; Kellmeyer, 2018; Kellmeyer et al., 2019; Ligthart et al., 2022; Marloth et al., 2020; Parsons, 2021; Slater et al., 2020; Torous & Roberts, 2017; Wykes et al., 2019). A scoping review is necessary to assess the breadth of existing knowledge on the topic, to synthesize the literature, and to highlight key findings while facilitating a dialogue between these sources.

In this paper, we aim to provide an in-depth analysis of the main ethical issues arising from mental health literature on the use of XR in clinical settings. Identifying the ethical implications of such novel technologies is crucial for exploring research avenues that will advance these ethical considerations and progressively help shape ethical guidelines to regulate these practices in mental healthcare.

2. Materials and Methods

This scoping review is based on the methodological framework of the Joanna Briggs Institute (JBI) (Peters et al., 2020). This approach aims to provide an overview of the existing literature on a specific topic and identify gaps in knowledge to guide future research. This manuscript follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) checklist (see Supplementary Material S1). The review process was structured around five key steps: (a) defining the research question (what are the main ethical issues arising from mental health literature on the use of XR in clinical settings); (b) search strategy; (c) study selection; (d) data extraction; and (e) data analysis and synthesis (Peters et al., 2020). Two reviewers conducted all phases—title/abstract screening, full-text eligibility, data extraction, and analysis—and held regular consensus meetings with the research team.

2.1. Search Strategy and Eligibility Criteria

The inclusion and exclusion criteria established to meet the study objective follow the PCC framework: Population, Concept, and Context (see Table 1).

Table 1.

Population, concept and context.

The online databases of PubMed, Medline, EMBASE, PsycINFO, and Google Scholar were systematically searched by our team with the help of a librarian specialized in mental health to identify all relevant research reporting ethical issues associated with the use of XR in mental health. Searches used MeSH terms and keywords in titles and abstracts covering three concepts: ethical issues, either as defined by the biomedical ethics theoretical framework (T. Beauchamp & Childress, 2019) or as identified by the authors themselves; XR; and mental health (e.g., mental disorders, psychiatry, mental health). The search syntax was tailored for each database (see Supplementary Material S2 for an example). Searches were limited to English and French language sources. No setting, date or geographical restrictions were applied. The search was last updated in January 2025. We included all study designs, including theoretical and empirical research (qualitative, quantitative, mixed), as well as literature reviews and commentaries. Papers were considered for inclusion if they specifically addressed XR environments. Text-based agents, such as chatbots, were excluded because the goal was to include only applications involving a virtual environment. Studies focusing solely on virtual healthcare, telemedicine, or artificial intelligence without involving a virtual environment were also excluded. We also excluded articles dealing with educational purposes or the training of professionals. References were exported to EndNote version 21. Additional records were identified through cross-referencing. To ensure consensus, discussions on the inclusion of articles were held regularly with team members.

2.2. Study Selection

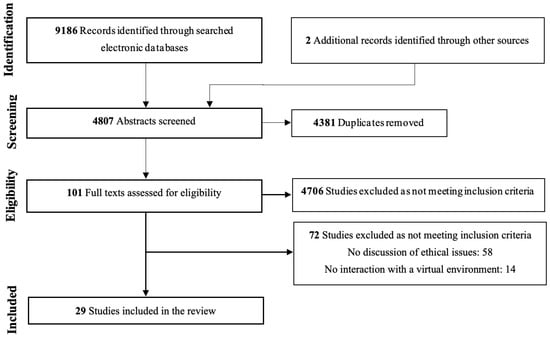

After removing duplicates, the identified articles were independently screened by LD and SG based on their titles and abstracts. A pilot test was conducted on 25 articles, as suggested by Peters et al. (2020), to compare their selection. An in-depth review of the retained full-text articles was then performed to assess their eligibility based on the inclusion and exclusion criteria. In cases of disagreement regarding article selection, a third evaluator was consulted. The reasons for article exclusion were documented, and the results of the selection process are presented in a PRISMA-ScR flow diagram (Figure 1).

Figure 1.

PRISMA-ScR flow diagram.

2.3. Data Extraction

The information was recorded in a data charting form. Its components were identified through team discussions and inspired by the extraction grid proposed by the JBI, which was adapted to align with the aim of this scoping review. Key information included authors; year of publication; country of origin; type of study; aim; theoretical framework or perspective; methodology; population; type of XR platform; healthcare setting; sample size; and identified knowledge gaps related to the topic of interest. To facilitate close, text-proximal analysis by team members who had not read the full texts, ethics-related excerpts were copied verbatim into the chart, with citation details to ensure traceability.

2.4. Data Analysis and Synthesis

We employed an inductive-deductive approach inspired by the qualitative content analysis method (Miles et al., 2018). All relevant excerpts discussing ethical issues were documented and categorized by the authors into ethical spheres aligned with the principles of biomedical ethics (T. Beauchamp & Childress, 2019; T. L. Beauchamp, 2007), while remaining open to emerging concepts. Patterns and contradictions among the studies were identified. Research synthesis was conducted based on the various subcategories grounded in this framework. Frequent meetings involving the authors of this review were held to refine the themes. A summary of the findings was then drafted and discussed with all authors to develop a thematic mapping that reached consensus.

3. Results

This section first presents the characteristics of the included articles, followed by the main themes emerging from the analysis.

3.1. Characteristics of Included Articles

The database search yielded 9186 records. Additional records were identified through other sources (n = 2). After duplicates were removed and irrelevant studies were excluded by title and abstract screening, 98 full texts were read, leading to the inclusion of 29 studies. The reviewed articles included opinion or commentary pieces (n = 11), narrative or descriptive reviews (n = 11), qualitative studies (n = 5), and quantitative studies (n = 2). These studies were retained for data analysis and research synthesis. Table 2 presents the characteristics and methods of the included articles.

Table 2.

Summary of articles included in the scoping review.

3.2. Themes Associated with Ethical Issues Arising from the Use of Extended Reality in Clinical Psychiatry

- Theme 1. Balancing beneficence and non-maleficence as a question of safety

Challenges in balancing beneficence and non-maleficence were identified in 19 articles and cluster into four subthemes: (1) physical adverse effects; (2) psychological and emotional unintended consequences; (3) population- and context-specific risks; and (4) clinical issues (Anderson et al., 2004; Bell et al., 2020; Botella et al., 2009; Chung et al., 2023; Chung et al., 2021; Cornet & Van Gelder, 2020; Fromberger et al., 2018; Georgieva & Georgiev, 2019; Kellmeyer, 2018; Klein Haneveld et al., 2023; M. T. E. Kouijzer et al., 2024; Kruk et al., 2019; Ligthart et al., 2022; Marloth et al., 2020; Ozerol & Andic, 2023; Parsons, 2021; Pellicano, 2023; Selaskowski et al., 2024; Washburn et al., 2021).

Physical adverse effects. Mental health professionals and patients should be alert to the potential physical adverse effects related to XR environments, with simulator sickness and XR aftereffects being the most discussed by authors (Anderson et al., 2004; Bell et al., 2020; Botella et al., 2009; Chung et al., 2023; Chung et al., 2021; Fromberger et al., 2018; Georgieva & Georgiev, 2019; Kellmeyer, 2018; Klein Haneveld et al., 2023; Parsons, 2021). As observed with motion sickness, several authors discuss how a minority of patients may be more sensitive to immersion in XR environments and may experience, during or after immersion, varying degrees of manifestations, including fatigue, headache, eye strain, nausea, disorientation, ataxia, vertigo, disturbed locomotion, perceptual-motor disturbances, flashbacks and lowered arousal (Anderson et al., 2004; Bell et al., 2020; Botella et al., 2009; Kellmeyer, 2018; Kruk et al., 2019; Parsons, 2021; Selaskowski et al., 2024).

Psychological and emotional unintended consequences. Other considerations discussed by authors relate to the psychological and emotional side effects of specific XR experiences, including the addictive potential of VR technology (Georgieva & Georgiev, 2019; Marloth et al., 2020), reality distortion (Bell et al., 2020; Georgieva & Georgiev, 2019; Marloth et al., 2020), depersonalization or derealization (Kruk et al., 2019; Parsons, 2021; Selaskowski et al., 2024), and the triggering of symptoms and distress (e.g., traumatization, re-traumatization, hallucinations) (M. T. E. Kouijzer et al., 2024; Marloth et al., 2020; Ozerol & Andic, 2023; Pellicano, 2023). Conversely, not all XR-related emotional effects are adverse: in a randomized crossover trial of 50 outpatients with anxiety, psychotic, depressive, or bipolar disorders, VR-based relaxation produced a significantly greater reduction in total negative affective state compared with standard relaxation (Veling et al., 2021), suggesting potential short-term emotional benefits when appropriately designed and supervised.

Population- and context-specific risks. Some have questioned whether XR would benefit those with more severe psychopathologies (e.g., psychosis) or interact with psychotropic medications (Botella et al., 2009; Chung et al., 2021; Parsons, 2021). Additionally, the use of VR technology appears to present distinct risks when applied to forensic populations (Cornet & Van Gelder, 2020; Fromberger et al., 2018; M. T. E. Kouijzer et al., 2024; Ligthart et al., 2022). For instance, for child abusers, exposure to virtual children as a treatment modality could theoretically induce sexual arousal even after the XR experience is over; virtual risk situations could also be misused as child pornography (Cornet & Van Gelder, 2020). Mental health professionals must consider whether they are acting in the best interest of patients (beneficence) and consider the risks of immersion (non-maleficence) (Parsons, 2021).

Clinical issues. Qualitative studies indicate variable clinician perceptions of risk: some see no added risk beyond routine practice, whereas others report concerns (e.g., simulation sickness, distress, dissociation, panic attacks) (Chung et al., 2023; Chung et al., 2021). Additional concerns include maintaining appropriate boundaries around the physical touch used to prevent patient injury while in an XR environment, given the risk of misinterpretation (Chung et al., 2021); ensuring fitness to perform tasks post-session (e.g., driving) given potential aftereffects (Anderson et al., 2004); managing session dosage (Botella et al., 2009; Kellmeyer, 2018); and allowing sufficient time to re-acclimate to the non-VR world (Washburn et al., 2021).

- Theme 2. Altering autonomy by altering reality and information

We distinguish three subthemes that connect simulated realities to autonomy and informed consent: (1) deception and transparency; (2) vulnerabilities and reality-testing; and (3) informed consent as the operationalization of autonomy (Anderson et al., 2004; Campaner & Costerbosa, 2023; Chivilgina et al., 2021; Georgieva & Georgiev, 2019; Kellmeyer, 2018; Kellmeyer et al., 2019; Kruk et al., 2019; Ligthart et al., 2022; Luxton & Hudlicka, 2021; Marcoux et al., 2021; Marloth et al., 2020; Selaskowski et al., 2024; Whalley, 1995). Similarly, the issues concerning informed consent and patient autonomy were raised by 9 articles (Campaner & Costerbosa, 2023; Fromberger et al., 2018; Kellmeyer et al., 2019; Klein Haneveld et al., 2023; Ligthart et al., 2022; Marcoux et al., 2021; Marloth et al., 2020; Parsons, 2021; Whalley, 1995).

Deception and transparency. Authors explained how XR may create altered and prefabricated realities that deceive patients while also altering therapeutic relationships (Anderson et al., 2004; Campaner & Costerbosa, 2023; Chivilgina et al., 2021; Georgieva & Georgiev, 2019; Kellmeyer et al., 2019; Kruk et al., 2019; Ligthart et al., 2022; Marloth et al., 2020; Selaskowski et al., 2024; Washburn et al., 2021; Whalley, 1995). Deception notably occurs when patients do not know whether they are interacting with a human controlling the environment or whether it is controlled autonomously (Luxton & Hudlicka, 2021). In such a case, the patient’s autonomy would not be respected. Some highlight that these realities might be more appealing to some patients, raising the ethical concern that they may provide an escape from reality leading to addiction and social withdrawal (Georgieva & Georgiev, 2019; Selaskowski et al., 2024; Whalley, 1995). Modern technologies, such as those using artificial agents, may likewise have human-like appearances and show empathy, which raises concerns about artificial relationships, as patients may develop feelings and attachment towards the simulated agent (Luxton & Hudlicka, 2021). These immersive realities nonetheless entail an unspecified loss of authenticity and a loss of certain aspects of social interaction, which may negatively affect patients (Campaner & Costerbosa, 2023; Kruk et al., 2019; Marloth et al., 2020). Some argue that patients should not be placed in environments with constraints that lack meaning and authenticity and that may even unconsciously manipulate them into behavioral changes, thereby affecting their autonomy (Campaner & Costerbosa, 2023; Ligthart et al., 2022; Marloth et al., 2020). Moreover, it remains questionable whether patients could be themselves when interacting in virtual environments or whether sanctions should occur, for instance, in the case of violence against virtual characters (Chivilgina et al., 2021).

Vulnerabilities and reality-testing. Some patients with reduced reality-testing capacity, such as those with cognitive impairments or psychosis, may not distinguish “real” reality from the vivid and compelling details provided by the immersive reality, which may deceive and potentially destabilize them (Chivilgina et al., 2021; Kellmeyer et al., 2019; Luxton & Hudlicka, 2021; Marloth et al., 2020; Washburn et al., 2021). Individuals with disturbed self-perceptions, such as those experiencing eating disorders, may also face impacts on their identity and self-perception, potentially leading to feelings of loss of control (Chivilgina et al., 2021; Kellmeyer, 2018). These authors state that a certain amount of deception remains in such a therapy. It is moreover important to pay attention to the content of dialogue and to set limits on pressure, solicitation and suggestions by ensuring deontological guidelines are followed (Campaner & Costerbosa, 2023).

Informed consent as the operationalization of autonomy. Authors stress that patients must be informed, in language that they understand, about the nature of the therapy (e.g., XR environment, type of exposure, duration of exposure), the limitations of the therapy, the possibility of withdrawing from virtual exposure at any moment, and any potential unpleasant effects or risks of XR immersion, which may persist over time (Fromberger et al., 2018; Klein Haneveld et al., 2023; Marcoux et al., 2021; Marloth et al., 2020; Parsons, 2021). Concerning the latter, patients should be informed that the level of anxiety may increase at the early stages of immersion, though cumulative exposure is aimed at augmenting their tolerance, thereby improving their autonomy (Parsons, 2021). When patients are in contact with virtual characters (i.e., avatars), they must clearly understand the degree of agency of these characters to reduce the risk of strong attachments and to adjust their expectations (Marcoux et al., 2021). They must also be informed about which data will be collected during the therapy (e.g., biomarkers), whether any data will be retained over time (for instance, to develop algorithms), and how the data will be stored (e.g., anonymized) (Marcoux et al., 2021). Informed consent should be a collaborative effort between clinicians and patients, which may increase treatment efficacy, cooperation and trust (Parsons, 2021). Special attention must be paid to patients who are asked to consent and who have cognitive deficits (e.g., dementia) or who are under a court order (e.g., offenders with mental disorders) (Fromberger et al., 2018; Kellmeyer et al., 2019; Klein Haneveld et al., 2023; Parsons, 2021; Whalley, 1995). Although these patients remain particularly vulnerable, Kellmeyer et al. (2019) suggest that they may actually stand to benefit the most from XR, as they are often confined to secure and sterile environments. Even so, it has been questioned whether immersion in forensic settings, for instance, infringes the right to mental integrity without valid consent, since such technologies aim to monitor and alter an offender’s mental state and behavior (Ligthart et al., 2022). It is argued that clinicians should therefore exercise greater diligence when considering the ethical risks of using technologies with such vulnerable patients (Parsons, 2021).

- Theme 3. Data privacy risks and confidentiality concerns

While clinical XR may appear to offer a higher degree of confidentiality (Botella et al., 2004), data privacy concerns with implications for non-maleficence persist and fall into two subthemes: (1) data sensitivity and security; and (2) governance, ownership, and secondary use (Botella et al., 2009; Fromberger et al., 2018; Luxton & Hudlicka, 2021; Marcoux et al., 2021; Marloth et al., 2020; Ozerol & Andic, 2023; Parsons, 2021).

Data sensitivity and security. XR environments, especially when associated with wearable sensors, gather a large amount of personal information about the patient, including eye movements, behavioral response patterns, and motor as well as emotional responses, raising concerns about how to guarantee data security (Luxton & Hudlicka, 2021; Marcoux et al., 2021; Marloth et al., 2020; Ozerol & Andic, 2023; Parsons, 2021). It remains questionable to what extent, for instance, a patient’s voice recording will be stored securely, especially given instances of security leaks caused by software (Ozerol & Andic, 2023). During sessions, a patient may reveal issues, thoughts, and emotions that could have adverse repercussions on their life if they were disclosed to others (Luxton & Hudlicka, 2021). Such leaks may therefore breach the principle of confidentiality and bring harm to patients (Ozerol & Andic, 2023). Moreover, virtual environments used in non-clinical settings, such as the case of artificial agents, may lead to login details and personal data being shared, thereby threatening personal privacy (Parsons, 2021). Hackers also present a risk, potentially exploiting sensitive data (Luxton & Hudlicka, 2021). Mental health professionals should inform patients of these limitations, as discussed in the section on consent, and ensure patient data is stored and transmitted securely (e.g., password-protected) (Parsons, 2021). Concerning storage, another important privacy-related question is whether data should be made available for data mining to improve algorithms (Marloth et al., 2020).

Governance, ownership, and secondary use. As the technology continues to be developed by private companies, collected data may be used for other purposes (Marcoux et al., 2021; Marloth et al., 2020; Ozerol & Andic, 2023). It remains an open question whether, and under what conditions, voice recordings or other data are stored securely, how long they are retained, and whether they become available for data mining to improve algorithms (Marloth et al., 2020). Such practices implicate ownership, consent for secondary use, and cross-context data flows. Regulation is needed to ensure that ethical principles are followed as these technologies become widespread in mental health settings (Marcoux et al., 2021; Ozerol & Andic, 2023). These privacy and confidentiality risks directly engage the principle of non-maleficence, as preventable data harms (e.g., breaches, misuse, unauthorized inferences) must be minimized.

- Theme 4. Clinical liability and regulation

Clinical liability concerns were raised by several authors (n = 7) and are divided into 3 subthemes: (1) autonomous systems, responsibility, and liability; (2) clinician competence and training; and (3) norms, protocols, and regulation (Anderson et al., 2004; Chung et al., 2021; Geraets et al., 2021; Luxton & Hudlicka, 2021; Marcoux et al., 2021; Marloth et al., 2020; Parsons, 2021; Rizzo & Koenig, 2017).

Autonomous systems, responsibility, and liability. The use of clinical XR without the oversight of trained professionals, such as in the case of more automated virtual agents, may bring several ethical dilemmas (Marloth et al., 2020). The decision-making capacity of autonomous systems and the allocation of responsibility/liability to ensure patient safety have been questioned (Luxton & Hudlicka, 2021; Marcoux et al., 2021). Examples include virtual agents persuading patients to adopt continuously “healthier” behavior, thereby potentially limiting autonomy (Marcoux et al., 2021), and increases in self-diagnosis/self-treatment with risks of misdiagnosis, mistreatment, and aggravation of existing conditions (Marloth et al., 2020). In scenarios where a patient reveals suicidal or violent thoughts, the responsibility of the agent versus the developer remains unresolved (Luxton & Hudlicka, 2021; Marcoux et al., 2021); many ethical requirements expected of clinicians (e.g., duty-to-warn) have not been fully examined for autonomous characters (Luxton & Hudlicka, 2021). Notably, such agents cannot accept appropriate responsibility nor bear moral consequences (Luxton & Hudlicka, 2021). These issues can arise when XR developments are implemented without sufficient validation or when they omit important patient data (Marcoux et al., 2021; Rizzo & Koenig, 2017).

Clinician competence and training. The competency of therapists has also been questioned, with authors stating that health professionals should be competent in the technical and ethical use of XR (Anderson et al., 2004; Chung et al., 2021; Rizzo & Koenig, 2017). As shown by Chung et al. (2021), clinicians identified knowledge and skills gaps that needed to be addressed before they could feel confident implementing VR. To be qualified, professionals need training in technical XR skills, in assessing patient suitability, and in managing ethical and safety risks (Anderson et al., 2004; Chung et al., 2021; Rizzo & Koenig, 2017). A lack of such knowledge could have detrimental effects on patients.

Norms, protocols, and regulation. On an ethical basis, providers cannot rely entirely on such technologies due to the lack of adequate norms ensuring safe and ethical use (Parsons, 2021). Harm can occur from inadequate guidelines or monitoring of adverse effects (Chung et al., 2021). Participants in the study by Chung et al. (2021) felt that specific protocols would need to be developed to promote safe and ethical usage. Patient misinformation may also occur in the absence of norms and guidelines (Marcoux et al., 2021).

Authors recommend an ethical use of XR grounded in principles that respect patients’ rights and protect them from harm by minimizing potential adverse effects, for example by creating algorithms that reliably detect simulator sickness during XR use and by using pre–post measures to monitor it (Bell et al., 2020; Fromberger et al., 2018; Kellmeyer, 2018; Marloth et al., 2020; Ozerol & Andic, 2023; Parsons, 2021; Selaskowski et al., 2024).

- Theme 5. Fostering inclusiveness and equity in XR development

Other considerations on inclusiveness and equity have been associated with the development of virtual scenarios or characters, grouped into two subthemes: (1) representation, normative assumptions, and bias; and (2) protections for vulnerable users and governance (Campaner & Costerbosa, 2023; Fromberger et al., 2018; Kellmeyer, 2018; Luxton & Hudlicka, 2021; Marcoux et al., 2021; Marloth et al., 2020).

Representation, normative assumptions, and bias. As Marloth et al. (2020) note, program development requires a definition of normality, which remains universally debated, in order to evaluate and track subsequent changes in impaired functions. Designers must carefully consider patients’ preferences and the context of use during the development and deployment process (Luxton & Hudlicka, 2021). This is especially important in the case of automated characters and conversational agents that are developed based on knowledge databases, which may be susceptible to bias and may not pay adequate attention to cultural nuances (Luxton & Hudlicka, 2021; Marcoux et al., 2021). In a co-design study in forensic youth care, stakeholders (experiential experts and professionals) emphasized that “reality equals diversity,” arguing that diversity must be clearly visible in content (Klein Schaarsberg et al., 2024). Such bias can introduce systematic errors leading to inequalities in diagnosis and treatment (Marcoux et al., 2021), engaging justice (fairness) and non-maleficence (avoidance of preventable harm).

Protections for vulnerable users and governance. The creation of these entities leads to ethical considerations concerning their degree of identity (Campaner & Costerbosa, 2023). When creating personalized virtual characters for patients, clinicians should support patients with vulnerabilities, such as impaired body image, to prevent adverse effects on body image (e.g., eating disorders) (Kellmeyer, 2018). For scenarios involving forensic populations (e.g., diagnosis or treatment of child abusers), developers should not design sexually explicit content nor record real-life children (Fromberger et al., 2018). Actors used for motion capture should likewise be informed of the context in which the data will be used (Fromberger et al., 2018). Finally, albeit more marginally, some authors caution against the potential political misuse of extended reality as a tool for control and normalization toward certain political ideals, highlighting the importance of promoting independent regulation. These safeguards operationalize non-maleficence (risk minimization) while promoting justice (equitable protection across groups).

4. Discussion

This review examined the ethical concerns regarding the applications of XR in mental health care. We synthesize concerns across five themes: (i) balancing beneficence and non-maleficence as a question of patient safety, (ii) altering autonomy by altering reality and information, (iii) data privacy risks and confidentiality concerns, (iv) clinical liability and regulation, and (v) fostering inclusiveness and equity in XR development.

Most of the reviewed studies emphasized the balance between beneficence and non-maleficence, with some addressing autonomy, while the principle of justice—the fourth ethical principle in medical bioethics (T. Beauchamp & Childress, 2019)—was notably underexplored. Yet, no single ethical principle should outweigh another, underscoring the need for clinical judgment and the contextualization of XR use based on individual patient needs—an area scarcely addressed in the existing literature on XR in psychiatric settings. A likely reason is that most studies prioritize immediate, patient-level safety and feasibility, whereas justice (fair access, representation, distribution of benefits/burdens) requires system-level data and equity metrics rarely collected. Moreover, the corpus is dominated by non-empirical work from early-adopter services with limited stakeholder diversity, sparse reporting of sociodemographics, and little co-design, making equity effects less visible. As XR deployment broadens, embedding equity indicators, participatory methods, and transparent reporting on inclusion will be needed to make justice analysable alongside the other principles.

Most authors stated ethical concerns about the use of XR primarily for patient safety and effects of simulating reality. There is a clear need to monitor and ensure patient safety during and after the use of XR. Furthermore, the prefabricated realities may cause patient deception and lead to negative effects (e.g., attachment to the virtual agent, addiction, manipulation, altered therapeutic relationship) as well as developmental biases. Unfortunately, long-term effects remain insufficiently documented for both XR applications and embodied virtual agents (Rudschies & Schneider, 2024). This evidentiary gap raises specific ethical concerns: when longer-term benefits and harms are unknown, patients cannot be fully informed about material risks and alternatives, which challenges the validity of informed consent and the respect for autonomy.

Although such risks exist, many studies, including meta-analyses, have shown the acceptability and benefits of XR applications in psychiatry, even in patients with severe mental disorders such as schizophrenia (e.g., Carl et al., 2019; Dellazizzo et al., 2020; Freeman et al., 2017; Maples-Keller et al., 2017; Rus-Calafell et al., 2018; Valmaggia et al., 2016). While genuine human–human interactions may appear preferable to human–machine contact and lead to better therapeutic alliance, mostly in vulnerable patients, evidence remains limited (Kellmeyer et al., 2019; Rudschies & Schneider, 2024; Selaskowski et al., 2024). XR for clinical use, for instance, may seem like a non-invasive technique in comparison to pharmacological or other medical techniques (Hardy et al., 2019). Hence, as stated by Kellmeyer et al. (2019), professionals have an ethical responsibility to ensure patients benefit from new technologies and to consider patient vulnerabilities that may compromise safety. This could, however, be hindered by professionals’ lack of competencies and knowledge in XR use (Chung et al., 2023), which may raise additional questions concerning responsibility and liability (Luxton & Hudlicka, 2021; Marcoux et al., 2021; Marloth et al., 2020). In this emerging research area, particularly for newer automated developments, it is thus crucial to promptly involve patients as well as technical and mental health professionals (Brett et al., 2014).

The use of XR and artificial agents in mental health care introduces important ethical complexities, particularly when simulated environments alter patients’ perceptions of reality and influence the development of a Digital Therapeutic Alliance (DTA). Multiple studies from this review have explored how immersive technologies may fabricate or distort reality, raising concerns about deception, loss of authenticity, and compromised autonomy. In these environments, patients may struggle to discern whether they are interacting with a human clinician or an autonomous system, which undermines informed consent and the authenticity of the therapeutic bond (Luxton & Hudlicka, 2021; Marcoux et al., 2021). When patients cannot tell who (or what) is delivering care—and the degree of autonomy, human oversight, and data practices involved—their ability to receive and understand material information about risks, alternatives, and the right to refuse is limited, thereby compromising the essential elements of valid informed consent and diminishing the authenticity of the therapeutic bond. Furthermore, patients may develop emotional attachments to avatars or agents that simulate empathy and responsiveness, leading to artificial relationships that lack mutuality and reciprocity, which are key components of a traditional therapeutic alliance (Luxton & Hudlicka, 2021; Selaskowski et al., 2024).

These dynamics challenge the integrity of the DTA, which relies on shared goals, task agreement, and a genuine therapeutic bond (Malouin-Lachance et al., 2025; Beatty et al., 2022; Goldberg et al., 2022). When patients are unaware of the artificial nature of the agent or the environment, the alliance may be built on a foundation of illusion rather than trust. Campaner and Costerbosa (2023) argue that a certain degree of deception is inherent in avatar-based therapies, where patients engage in dialogue with fictional entities. While this imaginative engagement may enhance insight and therapeutic efficacy, it also risks undermining the patient’s autonomy and capacity for informed decision-making if the artificiality is not fully disclosed. Moreover, immersive XR environments may impose prefabricated constraints or behavioral nudges that subtly manipulate patients without their awareness, further complicating the ethical landscape of the DTA (Ligthart et al., 2022; Marcoux et al., 2021). To preserve the ethical integrity of the DTA, clinicians and developers must ensure transparency regarding the agent’s autonomy, establish clear boundaries in therapeutic dialogue, and adhere to deontological guidelines that protect against undue influence or coercion (Malouin-Lachance et al., 2025; Beatty et al., 2022; Ligthart et al., 2022; Goldberg et al., 2022).

Patients, regardless of their capacity to consent, therefore have the right to be informed about the different features concerning the use of XR (e.g., nature of XR, limitations, unpleasant effects, data collection and security). Applications should be used transparently and respect patients’ autonomy as well as confidentiality. While XR technologies offer immersive and interactive therapeutic environments, the question arises as to what extent these features should be revealed to patients. Debate persists about the appropriate level of disclosure for technical/immersive features, much as certain aspects of psychotherapy are not fully disclosed in order to maintain therapeutic efficacy (Barrett & Berman, 2001). On one hand, comprehensive disclosure aligns with ethical principles of autonomy and informed consent. On the other hand, withholding some information (such as immersive elements or data processing details) could be justified if deemed beneficial to the therapeutic process. Thus, developing clear guidelines on the degree of disclosure remains crucial to balancing patient autonomy with clinical efficacy, particularly in psychiatric contexts where patients may be more vulnerable to XR-induced distortions or misunderstandings.

The involvement of various stakeholders is crucial to maintaining an ethical perspective in the development and implementation of these emerging mental healthcare technologies. Currently, these increasingly sophisticated technologies are becoming pervasive in everyday life, leaving little time to pause and reassess ethical frameworks to effectively guide their use in clinical practice. While the principles of biomedical ethics remain foundational (T. Beauchamp & Childress, 2019), complementary frameworks have emerged, such as the Montreal Declaration for a Responsible Development of Artificial Intelligence (Montréal Declaration, 2018) and guidance from the World Health Organization (WHO) (2021), which outline principles to guide digital and AI-related practices. They articulate principles of well-being, safety, public interest, autonomy, responsibility, accountability, and the patient–machine relationship. However, these remain high-level and can fall short of clinicians’ needs for actionable protocols (Chung et al., 2021). Participatory, value-based development work in forensic mental health shows how end-users and professionals can co-create design requirements and iteratively test prototypes, translating stakeholder input into concrete design and reporting decisions (Kip et al., 2019). Similarly, implementation research indicates that introducing VR into clinical services depends on early training, organizational fit, and explicit, co-designed implementation strategies that go beyond efficacy testing (M. T. E. Kouijzer et al., 2024). Together, these approaches help operationalize ethical principles into practice-ready guidance. Building on the Montreal Declaration, Laverdière and Régis (2023) recently published a report aimed at supporting the establishment of a prototype code of ethics for AI use in health and human relations. However, they emphasize that ethical practice requires more than mere adherence to a code; it necessitates critical thinking and ethical reasoning from users, as Madary and Metzinger (2016) previously argued. In this context, the focus should shift toward developing guidelines that not only regulate but also personalize practices, rather than imposing rigid norms (Laverdière & Régis, 2023). Consequently, integrating diverse stakeholders, including citizens from various backgrounds, becomes essential for reflecting on the development of these cutting-edge AI technologies and for co-constructing practical guidelines that will govern their use in clinical settings (World Health Organization (WHO), 2022).

5. Limitations

This study presents certain limitations. Scoping reviews typically do not assess the quality of included studies, potentially integrating research with varying methodological rigor (Peters et al., 2020). This can be particularly challenging in emerging fields like XR, where empirical data are limited and much of the literature consists of narrative reviews, commentaries, or opinion papers rather than robust empirical studies. This context can foster an echo chamber effect, similar to that observed in social media networks, where the same articles are repeatedly discussed, amplifying their perceived influence and reinforcing their reach. Regarding our analysis process, codes and subcodes were developed iteratively by two reviewers with documented consensus meetings, an approach that enhances reflexivity; however, we did not compute a formal inter-rater reliability coefficient, which may limit reproducibility. Furthermore, conceptual heterogeneity across included studies (e.g., varying definitions of XR/VR/AR) and our choice not to require “sense of presence” as an inclusion criterion may introduce classification variability.

6. Conclusions

To conclude, this review highlighted several ethical concerns following the use of XR technologies in the domain of mental health care. Despite the limited number of studies reviewed, many of the identified concerns—particularly those related to more automated technologies—are relevant across other healthcare domains and emerging technologies, such as AI applications. Because scoping reviews do not appraise study quality and the evidence base is largely non-empirical, the claims advanced here should be read as a map of ethical salience. There is a pressing need for more transdisciplinary research with a bioethical focus as clinical care increasingly integrates novel technologies.

For researchers, priorities include prospective and longitudinal studies, mixed-methods evaluations in real-world services, equity and inclusion metrics, and implementation reporting that makes ethical trade-offs explicit. For clinicians, a precautionary approach is warranted now: ensure competence and training, obtain and document robust informed consent, monitor and mitigate adverse effects, and apply strong data-governance safeguards while tailoring use to patient context.

Active participation from all stakeholders, including patients, researchers, clinicians, and XR developers, is crucial in shaping the ethical use of XR through its development, implementation, and evaluation. Evidence-based guidelines are essential to ensure safe and ethically sound practices; however, they cannot replace the clinical judgment necessary to tailor interventions to the specific context of each patient. These guidelines may also serve as a framework for newer technological developments, notably given the growing use of artificial intelligence and its associated algorithms in mental health. Ultimately, significant research gaps persist, particularly regarding XR and the development of a comprehensive regulatory framework that ensures responsible and patient-centered implementation.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bs15101431/s1. Reference Tricco et al. (2018) is cited in the supplementary materials.

Author Contributions

M.-H.G., L.D., S.G., S.D., A.H., K.P. and A.D.: study planning and design. L.D., S.G. and M.D.: literature search. M.-H.G., L.D., S.G. and S.D.: data extraction and analysis. L.D. and M.-H.G.: original draft preparation. A.D.: supervision. K.P.: project administration. All authors provided a critical revision of the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

MHG is holder of a Junior 1 salary award from the Fonds de recherche du Québec—Santé (FRQS) and AD is holder of a Junior 2 salary award from the FRQS. The authors would like to extend their gratitude to Jean-Simon Drouin, nursing student, for his help. The authors also acknowledge the support provided by the Fondation de l’Institut universitaire en santé mentale de Montréal.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Anderson, P., Jacobs, C., & Rothbaum, B. O. (2004). Computer-supported cognitive behavioral treatment of anxiety disorders. Journal of Clinical Psychology, 60(3), 253–267.

- Azuma, R. (1997). A survey of augmented reality. Presence: Teleoperators and Virtual Environments, 6(4), 355–385.

- Barrett, M. S., & Berman, J. S. (2001). Is psychotherapy more effective when therapists disclose information about themselves? Journal of Consulting and Clinical Psychology, 69(4), 597–603.

- Baus, O., & Bouchard, S. (2014). Moving from virtual reality exposure-based therapy to augmented reality exposure-based therapy: A review. Frontiers in Human Neuroscience, 8, 112.

- Beatty, C., Malik, T., Meheli, S., & Sinha, C. (2022). Evaluating the therapeutic alliance with a free-text CBT conversational agent (Wysa): A mixed-methods study. Frontiers in Digital Health, 4, 847991.

- Beauchamp, T., & Childress, J. (2019). Principles of biomedical ethics: Marking its fortieth anniversary. The American Journal of Bioethics, 19(11), 9–12.

- Beauchamp, T. L. (2007). The ‘four principles’ approach to health care ethics. In R. E. Ashcroft, A. Dawson, H. Draper, & J. R. McMillan (Eds.), Principles of health care ethics (pp. 3–10). Wiley.

- Bell, I. H., Nicholas, J., Alvarez-Jimenez, M., Thompson, A., & Valmaggia, L. (2020). Virtual reality as a clinical tool in mental health research and practice. Dialogues in Clinical Neuroscience, 22(2), 169–177.

- Best, P., Meireles, M., Schroeder, F., Montgomery, L., Maddock, A., Davidson, G., Galway, K., Trainor, D., Campbell, A., & Van Daele, T. (2022). Freely available virtual reality experiences as tools to support mental health therapy: A systematic scoping review and consensus based interdisciplinary analysis. Journal of Technology in Behavioral Science, 7(1), 100–114.

- Billinghurst, M., Clark, A., & Lee, G. (2015). A survey of augmented reality. Foundations and Trends in Human–Computer Interaction, 8(2–3), 73–272.

- Bond, R. R., Mulvenna, M. D., Potts, C., O’Neill, S., Ennis, E., & Torous, J. (2023). Digital transformation of mental health services. npj Mental Health Research, 2(1), 13.

- Botella, C., Garcia-Palacios, A., Banos, R. M., & Quero, S. (2009). Cybertherapy: Advantages, limitations, and ethical issues. PsychNology Journal, 7(1), 77–100. Available online: https://ovidsp.ovid.com/ovidweb.cgi?T=JS&CSC=Y&NEWS=N&PAGE=fulltext&D=psyc8&AN=2009-06809-005 (accessed on 4 April 2024).

- Botella, C., Quero, S., Baños, R. M., Perpiñá, C., García Palacios, A., & Riva, G. (2004). Virtual reality and psychotherapy. Studies in Health Technology and Informatics, 99, 37–54.

- Brett, J., Staniszewska, S., Mockford, C., Herron-Marx, S., Hughes, J., Tysall, C., & Suleman, R. (2014). A systematic review of the impact of patient and public involvement on service users, researchers and communities. The Patient, 7(4), 387–395.

- Campaner, R., & Costerbosa, M. L. (2023). Avatar therapy and clinical care in psychiatry: Underlying assumptions, epistemic challenges, and ethical issues. In M. Michałowska (Ed.), Humanity in-between and beyond (pp. 43–61). Springer International Publishing.

- Carl, E., Stein, A. T., Levihn-Coon, A., Pogue, J. R., Rothbaum, B., Emmelkamp, P., Asmundson, G. J., Carlbring, P., & Powers, M. B. (2019). Virtual reality exposure therapy for anxiety and related disorders: A meta-analysis of randomized controlled trials. Journal of Anxiety Disorders, 61, 27–36.

- Chivilgina, O., Elger, B. S., & Jotterand, F. (2021). Digital technologies for schizophrenia management: A descriptive review. Science and Engineering Ethics, 27(2), 25.

- Chung, O. S., Dowling, N. L., Brown, C., Robinson, T., Johnson, A. M., Ng, C. H., Yücel, M., & Segrave, R. A. (2023). Using the theoretical domains framework to inform the implementation of therapeutic virtual reality into mental healthcare. Administration and Policy in Mental Health, 50(2), 237–268.

- Chung, O. S., Robinson, T., Johnson, A. M., Dowling, N. L., Ng, C. H., Yücel, M., & Segrave, R. A. (2021). Implementation of therapeutic virtual reality into psychiatric care: Clinicians’ and service managers’ perspectives. Frontiers in Psychiatry, 12, 791123.

- Cieślik, B., Mazurek, J., Rutkowski, S., Kiper, P., Turolla, A., & Szczepańska-Gieracha, J. (2020). Virtual reality in psychiatric disorders: A systematic review of reviews. Complementary Therapies in Medicine, 52, 102480.

- Cipresso, P., Giglioli, I. A. C., Raya, M. A., & Riva, G. (2018). The past, present, and future of virtual and augmented reality research: A network and cluster analysis of the literature. Frontiers in Psychology, 9, 2086.

- Colman, M., Millar, J., Patil, B., Finnegan, D., Russell, A., Higson-Sweeney, N., Da Silva Aguiar, M., & Stanton Fraser, D. (2024). A systematic review and narrative synthesis of the use and effectiveness of extended reality technology in the assessment, treatment and study of obsessive compulsive disorder. Journal of Obsessive-Compulsive and Related Disorders, 42, 100893.

- Cornet, L. J., & Van Gelder, J.-L. (2020). Virtual reality: A use case for criminal justice practice. Psychology, Crime & Law, 26(7), 631–647.

- Costanzo, M. E., Leaman, S., Jovanovic, T., Norrholm, S. D., Rizzo, A. A., Taylor, P., & Roy, M. J. (2014). Psychophysiological response to virtual reality and subthreshold posttraumatic stress disorder symptoms in recently deployed military. Psychosomatic Medicine, 76(9), 670–677.

- Craig, T. K., Rus-Calafell, M., Ward, T., Leff, J. P., Huckvale, M., Howarth, E., Emsley, R., & Garety, P. A. (2018). AVATAR therapy for auditory verbal hallucinations in people with psychosis: A single-blind, randomised controlled trial. The Lancet Psychiatry, 5(1), 31–40.

- D’Alfonso, S., Phillips, J., Valentine, L., Gleeson, J., & Alvarez-Jimenez, M. (2019). Moderated online social therapy: Viewpoint on the ethics and design principles of a web-based therapy system. JMIR Mental Health, 6(12), e14866.

- Dellazizzo, L., Potvin, S., Luigi, M., & Dumais, A. (2020). Evidence on virtual reality-based therapies for psychiatric disorders: Meta-review of meta-analyses. Journal of Medical Internet Research, 22(8), e20889.

- Dellazizzo, L., Potvin, S., Phraxayavong, K., & Dumais, A. (2021). One-year randomized trial comparing virtual reality-assisted therapy to cognitive-behavioral therapy for patients with treatment-resistant schizophrenia. NPJ Schizophrenia, 7(1), 9.

- Fiske, A., Henningsen, P., & Buyx, A. (2019). Your robot therapist will see you now: Ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. Journal of Medical Internet Research, 21(5), e13216.

- Freeman, D., Reeve, S., Robinson, A., Ehlers, A., Clark, D., Spanlang, B., & Slater, M. (2017). Virtual reality in the assessment, understanding, and treatment of mental health disorders. Psychological Medicine, 47(14), 2393–2400.

- Freeman, D., Slater, M., Bebbington, P. E., Garety, P. A., Kuipers, E., Fowler, D., Met, A., Read, C. M., Jordan, J., & Vinayagamoorthy, V. (2003). Can virtual reality be used to investigate persecutory ideation? The Journal of Nervous and Mental Disease, 191(8), 509–514.

- Fromberger, P., Jordan, K., & Müller, J. L. (2018). Virtual reality applications for diagnosis, risk assessment and therapy of child abusers. Behavioral Sciences & the Law, 36(2), 235–244.

- Garety, P. A., Edwards, C. J., Jafari, H., Emsley, R., Huckvale, M., Rus-Calafell, M., Fornells-Ambrojo, M., Gumley, A., Haddock, G., Bucci, S., McLeod, H. J., McDonnell, J., Clancy, M., Fitzsimmons, M., Ball, H., Montague, A., Xanidis, N., Hardy, A., Craig, T. K. J., & Ward, T. (2024). Digital AVATAR therapy for distressing voices in psychosis: The phase 2/3 AVATAR2 trial. Nature Medicine, 30(12), 3658–3668.

- Georgieva, I., & Georgiev, G. V. (2019). Reconstructing personal stories in virtual reality as a mechanism to recover the self. International Journal of Environmental Research and Public Health, 17(1), 26.

- Geraets, C. N. W., van der Stouwe, E. C. D., Pot-Kolder, R., & Veling, W. (2021). Advances in immersive virtual reality interventions for mental disorders: A new reality? Current Opinion in Psychology, 41, 40–45.

- Goldberg, S. B., Baldwin, S. A., Riordan, K. M., Torous, J., Dahl, C. J., Davidson, R. J., & Hirshberg, M. J. (2022). Alliance with an unguided smartphone app: Validation of the digital working alliance inventory. Assessment, 29(6), 1331–1345.

- Hardy, G. E., Bishop-Edwards, L., Chambers, E., Connell, J., Dent-Brown, K., Kothari, G., O’Hara, R., & Parry, G. D. (2019). Risk factors for negative experiences during psychotherapy. Psychotherapy Research, 29(3), 403–414.

- Ienca, M., Wangmo, T., Jotterand, F., Kressig, R. W., & Elger, B. (2018). Ethical design of intelligent assistive technologies for dementia: A descriptive review. Science and Engineering Ethics, 24(4), 1035–1055.

- Kellmeyer, P. (2018). Neurophilosophical and ethical aspects of virtual reality therapy in neurology and psychiatry. Cambridge Quarterly of Healthcare Ethics, 27(4), 610–627.

- Kellmeyer, P., Biller-Andorno, N., & Meynen, G. (2019). Ethical tensions of virtual reality treatment in vulnerable patients. Nature Medicine, 25(8), 1185–1188.

- Kip, H., Bouman, Y. H. A., Kelders, S. M., & van Gemert-Pijnen, J. E. W. C. (2019). The importance of systematically reporting and reflecting on eHealth development: Participatory development process of a virtual reality application for forensic mental health care. Journal of Medical Internet Research, 21(8), e12972.

- Klein Haneveld, L., Kip, H., Bouman, Y. H. A., Weerdmeester, J., Scholten, H., & Kelders, S. M. (2023). Exploring the added value of virtual reality biofeedback game DEEP in forensic psychiatric inpatient care: A qualitative study. Frontiers in Psychology, 14, 1201485.

- Klein Schaarsberg, R. E., van Dam, L., Widdershoven, G. A. M., Lindauer, R. J. L., & Popma, A. (2024). Ethnic representation within virtual reality: A co-design study in a forensic youth care setting. BMC Digital Health, 2(1), 25.

- Klein Tuente, S., Bogaerts, S., Bulten, E., Keulen-de Vos, M., Vos, M., Bokern, H., SV, I. J., Geraets, C. N. W., & Veling, W. (2020). Virtual Reality Aggression Prevention Therapy (VRAPT) versus waiting list control for forensic psychiatric inpatients: A multicenter randomized controlled trial. Journal of Clinical Medicine, 9(7), 2258.

- Kouijzer, M. M. T. E., Kip, H., Bouman, Y. H. A., & Kelders, S. M. (2023). Implementation of virtual reality in healthcare: A scoping review on the implementation process of virtual reality in various healthcare settings. Implementation Science Communications, 4(1), 67.

- Kouijzer, M. T. E., Kip, H., Kelders, S. M., & Bouman, Y. H. A. (2024). The introduction of virtual reality in forensic mental healthcare: An interview study on the first impressions of patients and healthcare providers regarding VR in treatment. Frontiers in Psychology, 15, 1284983.

- Kruk, D., Metel, D., & Cechnicki, A. (2019). A paradigm description of virtual reality and its possible applications in psychiatry. Advances in Psychiatry and Neurology, 28(2), 116–134.

- Kuntze, M. F., Stoermer, R., Mager, R., Roessler, A., Mueller-Spahn, F., & Bullinger, A. H. (2001). Immersive virtual environments in cue exposure. Cyberpsychology & Behavior, 4(4), 497–501.

- Laverdière, M., & Régis, C. (2023). Soutenir l’encadrement des pratiques professionnelles en matière d’intelligence artificielle dans le secteur de la santé et des relations humaines. Available online: https://www.chairesante.ca/en/articles/2023/soutenir-lencadrement-des-pratiques-professionnelles-en-matiere-dintelligence-artificielle-dans-le-secteur-de-la-sante-et-des-relations-humaines/ (accessed on 24 May 2025).

- Lee, J. H., Ku, J., Kim, K., Kim, B., Kim, I. Y., Yang, B. H., Kim, S. H., Wiederhold, B. K., Wiederhold, M. D., Park, D. W., Lim, Y., & Kim, S. I. (2003). Experimental application of virtual reality for nicotine craving through cue exposure. Cyberpsychology & Behavior, 6(3), 275–280.

- Li, L., Yu, F., Shi, D., Shi, J., Tian, Z., Yang, J., Wang, X., & Jiang, Q. (2017). Application of virtual reality technology in clinical medicine. American Journal of Translational Research, 9(9), 3867–3880. Available online: https://pubmed.ncbi.nlm.nih.gov/28979666/ (accessed on 4 April 2024).

- Ligthart, S., Meynen, G., Biller-Andorno, N., Kooijmans, T., & Kellmeyer, P. (2022). Is virtually everything possible? The relevance of ethics and human rights for introducing extended reality in forensic psychiatry. AJOB Neuroscience, 13(3), 144–157.

- Luxton, D. D., & Hudlicka, E. (2021). Intelligent virtual agents in behavioral and mental healthcare: Ethics and application considerations. In F. Jotterand, & M. Ienca (Eds.), Artificial intelligence in brain and mental health: Philosophical, ethical & policy issues (pp. 41–55). Springer.

- Madary, M., & Metzinger, T. K. (2016). Real virtuality: A code of ethical conduct. Recommendations for good scientific practice and the consumers of VR-technology. Frontiers in Robotics and AI, 3, 180932.

- Malouin-Lachance, A., Capolupo, J., Laplante, C., & Hudon, A. (2025). Does the digital therapeutic alliance exist? Integrative review. JMIR Mental Health, 12, e69294.

- Maples-Keller, J. L., Bunnell, B. E., Kim, S. J., & Rothbaum, B. O. (2017). The use of virtual reality technology in the treatment of anxiety and other psychiatric disorders. Harvard Review of Psychiatry, 25(3), 103–113.

- Marcoux, A., Tessier, M. H., Grondin, F., Reduron, L., & Jackson, P. L. (2021). Basic, clinical and social perspectives on the use of virtual characters in mental health. Santé mentale au Québec, 46(1), 35–70.

- Marloth, M., Chandler, J., & Vogeley, K. (2020). Psychiatric interventions in virtual reality: Why we need an ethical framework. Cambridge Quarterly of Healthcare Ethics, 29(4), 574–584.

- Miles, M. B., Huberman, A. M., & Saldaña, J. (2018). Qualitative data analysis: A methods sourcebook (4th ed.). Sage Publications.

- Milgram, P., & Kishino, F. (1994). Taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems, E77(12), 1321–1329.

- Montréal Declaration. (2018). Montréal declaration for a responsible development of artificial intelligence. Université de Montréal. Available online: https://www.montrealdeclaration-responsibleai.com/ (accessed on 5 May 2025).

- Navas-Medrano, S., Soler-Domínguez, J. L., & Pons, P. (2024). Mixed reality for a collective and adaptive mental health metaverse. Frontiers in Psychiatry, 14, 1272783.

- Owens, M. E., & Beidel, D. C. (2015). Can virtual reality effectively elicit distress associated with social anxiety disorder? Journal of Psychopathology and Behavioral Assessment, 37(2), 296–305.

- Ozerol, Z., & Andic, S. (2023). Avatar therapy model and ethical principles in the treatment of auditory hallucinations in patients with schizophrenia. Psikiyatride Güncel Yaklaşımlar, 15(4), 665–676.

- Park, M. J., Kim, D. J., Lee, U., Na, E. J., & Jeon, H. J. (2019). A literature overview of virtual reality (VR) in treatment of psychiatric disorders: Recent advances and limitations. Frontiers in Psychiatry, 10, 505.

- Parsons, T. D. (2021). Ethical challenges of using virtual environments in the assessment and treatment of psychopathological disorders. Journal of Clinical Medicine, 10(3), 378.

- Pellicano, A. (2023). Efficacy of virtual reality exposure therapy in treating post-traumatic stress disorder. Archives of Clinical Psychiatry, 50(5), 132–138.

- Peters, M. D. J., Godfrey, C., McInerney, P., Munn, Z., Tricco, A. C., & Khalil, H. (2020). Chapter 11: Scoping reviews. In E. Aromataris, & Z. Munn (Eds.), JBI manual for evidence synthesis (pp. 407–452). JBI.

- Rajkumar, R. P. (2024). Augmented reality as an aid to behavior therapy for anxiety disorders: A narrative review. Cureus, 16(9), e69454.

- Rizzo, A. S., & Koenig, S. T. (2017). Is clinical virtual reality ready for primetime? Neuropsychology, 31(8), 877–899.

- Rudschies, C., & Schneider, I. (2024). Ethical, legal, and social implications (ELSI) of virtual agents and virtual reality in healthcare. Social Science and Medicine, 340, 116483.

- Rus-Calafell, M., Garety, P., Sason, E., Craig, T., & Valmaggia, L. (2018). Virtual reality in the assessment and treatment of psychosis: A systematic review of its utility, acceptability and effectiveness. Psychological Medicine, 48(3), 362–391.

- Selaskowski, B., Wiebe, A., Kannen, K., Asché, L., Pakos, J., Philipsen, A., & Braun, N. (2024). Clinical adoption of virtual reality in mental health is challenged by lack of high-quality research. NPJ Mental Health Research, 3(1), 24.

- Slater, M. (2009). Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philosophical Transactions of the Royal Society B: Biological Sciences, 364(1535), 3549–3557.

- Slater, M., Gonzalez-Liencres, C., Haggard, P., Vinkers, C., Gregory-Clarke, R., Jelley, S., Watson, Z., Breen, G., Schwarz, R., Steptoe, W., Szostak, D., Halan, S., Fox, D., & Silver, J. (2020). The ethics of realism in virtual and augmented reality. Frontiers in Virtual Reality, 1, 1.

- Sygel, K., & Wallinius, M. (2021). Immersive virtual reality simulation in forensic psychiatry and adjacent clinical fields: A review of current assessment and treatment methods for practitioners. Frontiers in Psychiatry, 12, 673089.

- Torous, J., Bucci, S., Bell, I. H., Kessing, L. V., Faurholt-Jepsen, M., Whelan, P., Carvalho, A. F., Keshavan, M., Linardon, J., & Firth, J. (2021). The growing field of digital psychiatry: Current evidence and the future of apps, social media, chatbots, and virtual reality. World Psychiatry, 20(3), 318–335.

- Torous, J., & Roberts, L. W. (2017). The ethical use of mobile health technology in clinical psychiatry. The Journal of Nervous and Mental Disease, 205(1), 4–8.

- Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D. J., Horsley, T., Weeks, L., Hempel, S., Akl, E. A., Chang, C., McGowan, J., Stewart, L., Hartling, L., Aldcroft, A., Wilson, M. G., Garritty, C., … Straus, S. E. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Annals of Internal Medicine, 169(7), 467–473.

- Valmaggia, L. R., Latif, L., Kempton, M. J., & Rus-Calafell, M. (2016). Virtual reality in the psychological treatment for mental health problems: An systematic review of recent evidence. Psychiatry Research, 236, 189–195.

- Veling, W., Lestestuiver, B., Jongma, M., Hoenders, H. J. R., & van Driel, C. (2021). Virtual reality relaxation for patients with a psychiatric disorder: Crossover randomized controlled trial. Journal of Medical Internet Research, 23(1), e17233.

- Washburn, M., Hagedorn, A., & Moore, S. (2021). Creating virtual reality based interventions for older adults impacted by substance misuse: Safety and design considerations. Journal of Technology in Human Services, 39(3), 275–294.

- Whalley, L. J. (1995). Ethical issues in the application of virtual reality to medicine. Computers in Biology and Medicine, 25(2), 107–114.

- Wiebe, A., Kannen, K., Selaskowski, B., Mehren, A., Thöne, A.-K., Pramme, L., Blumenthal, N., Li, M., Asché, L., Jonas, S., Bey, K., Schulze, M., Steffens, M., Pensel, M. C., Guth, M., Rohlfsen, F., Ekhlas, M., Lügering, H., Fileccia, H., … Braun, N. (2022). Virtual reality in the diagnostic and therapy for mental disorders: A systematic review. Clinical Psychology Review, 98, 102213.

- World Health Organization (WHO). (2021). Ethics and governance of artificial intelligence for health: WHO guidance. WHO.

- World Health Organization (WHO). (2022). Mental disorders. Available online: https://www.who.int/news-room/fact-sheets/detail/mental-disorders (accessed on 5 May 2025).

- Wykes, T., Lipshitz, J., & Schueller, S. M. (2019). Towards the design of ethical standards related to digital mental health and all its applications. Current Treatment Options in Psychiatry, 6(3), 232–242.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).